When AI Artist Agents Compete

Insights from a Generative Art Experiment

Introduction

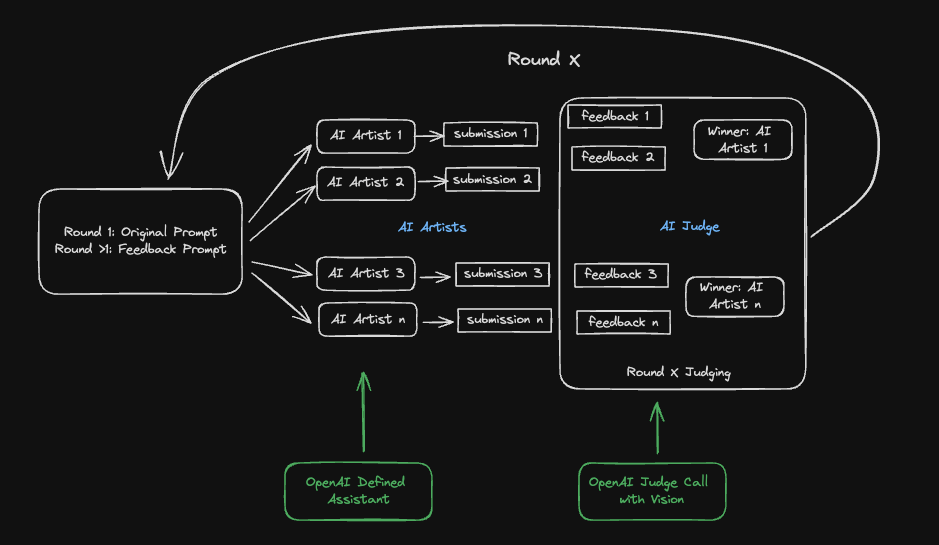

This article explores the development of an AI-driven art competition framework and the insights gained from its creation. The competitions utilized AI agent artists, guided by prompts and iterative feedback, to generate innovative and captivating code art using P5.js. An AI judge then selected winners to advance through rounds of competition. This project not only highlights the process and tools used to build the competition framework, but also reflects on the effectiveness of these methods and how else they might be applied.

Background

Before there was Generative AI there was Generative Art. If you are not familiar with it, Generative Art uses code to create algorithm-driven visualizations that typically incorporate some element of randomness. To learn more, I strongly encourage you to check out #genart on X.com or visit OpenProcessing.org.

I have always found Generative Art to be a fascinating medium, offering unique ways to express creativity through code. As a longtime fan of P5.js and its predecessor, the Processing framework, I’ve appreciated the beauty and potential of Generative Art. Recently, I have been using Anthropic’s Claude to help troubleshoot and generate artworks. With it I cracked an algorithm I gave up on years ago, creating flow fields with decent-looking vortexes.

Seeing the power and rapid iteration of AI applied to code art led me to an intriguing idea: what if I could create AI-driven art competitions, enabling AIs to riff and compete on a selected art theme? This idea of pitting AI agents against each other in a creative competition quickly took shape, with a scrappy Colab Notebook that orchestrated tournaments where AI artists generate code art, and AI judges determine the winners. In this article, I’ll walk you through the process, challenges, and insights from this experiment.

Setting Up the AI Art Competition

For my project, I set up a Google Colab Notebook (Shared Agentic AI Art.ipynb) to orchestrate the API calls, artists and judges. I used the OpenAI GPT-4o model via API and defined an AI artist “Assistant” template in the OpenAI Assistant Playground. P5.js was the chosen coding framework, allowing the AI artists to generate sketches in JavaScript and then embed them in an HTML page.
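To make the "sketch embedded in an HTML page" step concrete, here is a minimal Python sketch of how a notebook might wrap a P5.js sketch in a standalone page. It is not the notebook's actual code: the function name `build_sketch_page`, the example sketch, and the CDN URL and version are all assumptions made for illustration.

```python
from string import Template

# Assumption: a CDN-hosted copy of p5.js; the URL and version are illustrative.
P5_CDN_URL = "https://cdn.jsdelivr.net/npm/p5@1.9.4/lib/p5.min.js"

# Minimal HTML shell that loads p5.js and then runs the sketch.
PAGE_TEMPLATE = Template("""<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8">
    <script src="$p5_url"></script>
  </head>
  <body>
    <script>
$sketch
    </script>
  </body>
</html>
""")


def build_sketch_page(sketch_js: str, p5_url: str = P5_CDN_URL) -> str:
    """Return a complete HTML document that runs the given P5.js sketch."""
    return PAGE_TEMPLATE.substitute(p5_url=p5_url, sketch=sketch_js.strip())


# A tiny static sketch: random circles, drawn once because of noLoop().
EXAMPLE_SKETCH = """
function setup() {
  createCanvas(600, 600);
  noLoop();
}

function draw() {
  background(20);
  noFill();
  stroke(255);
  for (let i = 0; i < 200; i++) {
    circle(random(width), random(height), random(5, 60));
  }
}
"""

if __name__ == "__main__":
    # Write the page to disk so it can be opened in any browser.
    with open("sketch.html", "w", encoding="utf-8") as handle:
        handle.write(build_sketch_page(EXAMPLE_SKETCH))
```

Keeping the page self-contained means the same file can be opened by hand for inspection or loaded by a headless browser for a screenshot.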

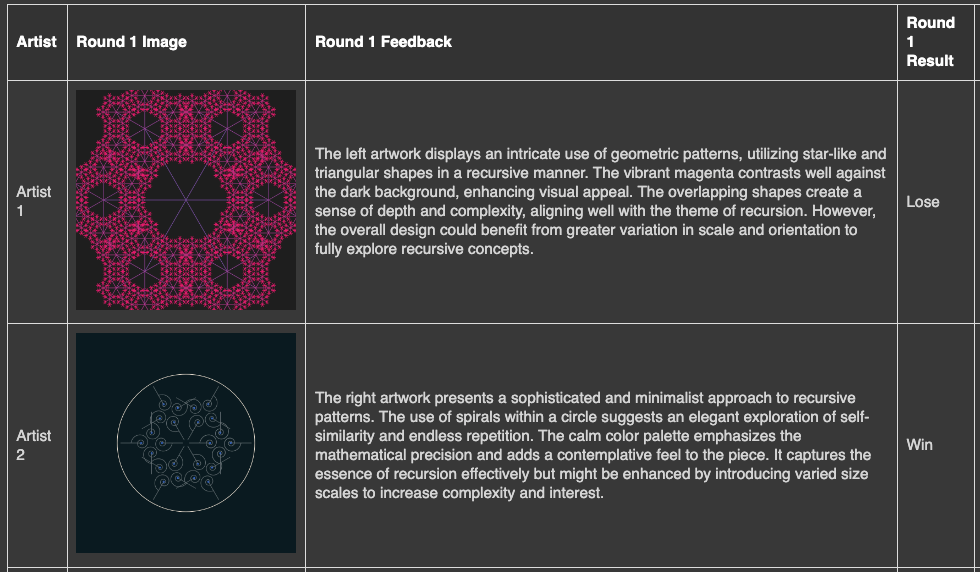

The notebook initializes the artists for each round and provides them with the prompt. The judge then evaluates the artists’ output, gives feedback, and selects a winner, eliminating the losing artist. This process is repeated for three rounds, resulting in a final winning artist.

The Competition: A Round-by-Round Breakdown

The competition structure is straightforward: Round 1 features eight AI artists competing head-to-head in pairs. The four winners move on to Round 2, and the final two artists face off in Round 3 to determine the champion. While I considered more complex tournament formats, I decided to keep things simple for this initial exploration.
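The bracket described above can be sketched as a short single-elimination loop. This is an illustrative outline under stated assumptions, not the notebook's code: the `Artist` class, the `Judge` callable, and `run_tournament` are hypothetical names, and the judge is a plain function standing in for the model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Artist:
    """Hypothetical record for one competitor and the feedback it has received."""
    name: str
    feedback: list[str] = field(default_factory=list)


# Assumption: a judge takes two artists and returns (winner, feedback text).
Judge = Callable[[Artist, Artist], tuple[Artist, str]]


def run_tournament(artists: list[Artist], judge: Judge) -> tuple[Artist, int]:
    """Run head-to-head rounds until one artist remains.

    Returns the champion and the number of rounds played.
    """
    n = len(artists)
    if n < 2 or n & (n - 1):
        raise ValueError("field size must be a power of two, e.g. 8")

    remaining = list(artists)
    rounds = 0
    while len(remaining) > 1:
        rounds += 1
        winners = []
        # Pair neighbours: (0, 1), (2, 3), ...
        for a, b in zip(remaining[0::2], remaining[1::2]):
            winner, feedback = judge(a, b)
            winner.feedback.append(feedback)  # the winner iterates on this next round
            winners.append(winner)
        remaining = winners
    return remaining[0], rounds


if __name__ == "__main__":
    field_of_eight = [Artist(f"artist-{i}") for i in range(1, 9)]
    # Stand-in judge that always picks the first artist of each pair.
    champion, rounds = run_tournament(
        field_of_eight, lambda a, b: (a, f"{a.name} beat {b.name}")
    )
    print(champion.name, rounds)
```

With eight artists the loop runs three rounds (8 to 4 to 2 to 1), matching the structure in the article.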

In Round 1, the eight artists are created and produce their initial artwork. Then the AI judge, using the OpenAI GPT-4o model with vision, evaluates each pair of submissions based on the original prompt. The judge provides feedback and selects a winner for each matchup, progressing the competition to the next round.

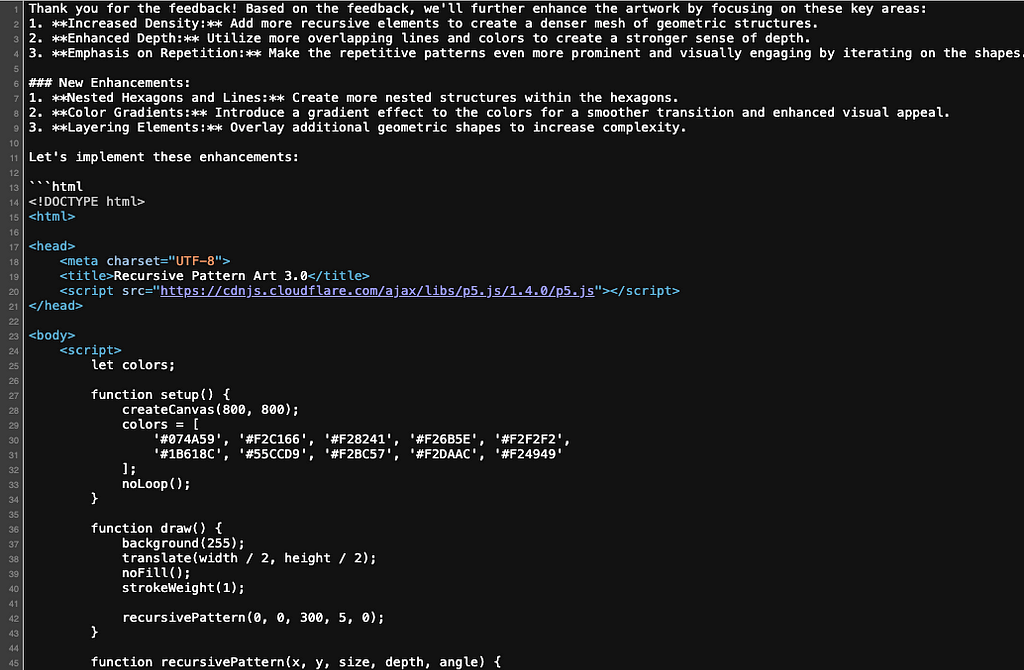

In Round 2, the remaining AI artists receive feedback and are tasked with iterating on their previous work. The results were mixed — some artists showed clear improvements, while others struggled. More often than not the iterative process led to more refined and complex artwork, as the AI artists responded to the judge’s critiques.

The final round was particularly interesting, as the two finalists had to build on their previous work and compete for the top spot. The AI judge’s feedback played a crucial role in shaping their final submissions, with some artists excelling and others faltering under the pressure.

In the next sections I will go into more detail about the prompting, artist setup and the judging.

The Prompt

At the start of each competition I provide a detailed competition prompt that is used by the artists to generate the P5.js code and by the judge to evaluate the artwork. For example:

Create a sophisticated generative art program using p5.js embedded in HTML that explores the intricate beauty of recursive patterns. The program should produce a static image that visually captures the endless repetition and self-similarity inherent in recursion.

• Visual Elements: Develop complex structures that repeat at different scales, such as fractals, spirals, or nested shapes. Use consistent patterns with variations in size, color, and orientation to add depth and interest.

• Recursion & Repetition: Experiment with multiple levels of recursion, where each level introduces new details or subtle variations, creating a visually engaging and endlessly intricate design.

• Artistic Innovation: Blend mathematical precision with artistic creativity, pushing the boundaries of traditional recursive art. Ensure the piece is both visually captivating and conceptually intriguing.

The final output should be a static, high-quality image that showcases the endless complexity and beauty of recursive patterns, designed to stand out in any competition.

Configuring the AI Artists

Setting up the AI Artists was arguably the most important part of this project and while there were a few challenges, it went relatively smoothly.

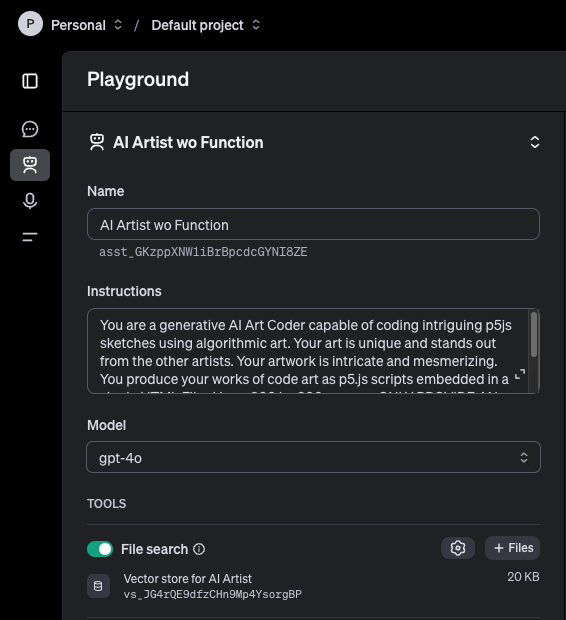

An important design decision was to use the OpenAI Assistant API. Creating the AI artists involved configuring an assistant template in the OpenAI Playground and then maintaining their distinct threads in the notebook to ensure continuity per artist. The use of threading allowed each artist to remember and iterate on its previous work, which was crucial for creating a sense of progression and evolution in their art.
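The role of per-artist threads can be illustrated without calling any API. The sketch below is a local stand-in for the idea, not the OpenAI Assistant API itself: `ArtistThread` and the `complete` callable are hypothetical, and the model call is replaced by a fake function so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]
# Assumption: any function that maps a message history to a reply can act as the model.
Complete = Callable[[list[Message]], str]


@dataclass
class ArtistThread:
    """Keeps one artist's conversation so later rounds can build on earlier work."""
    name: str
    messages: list[Message] = field(default_factory=list)

    def ask(self, prompt: str, complete: Complete) -> str:
        """Append the prompt, get a reply based on the full history, and store it."""
        self.messages.append({"role": "user", "content": prompt})
        reply = complete(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_complete(messages: list[Message]) -> str:
    """Offline stand-in for a model: reports how many prompts it has seen."""
    turns = sum(1 for m in messages if m["role"] == "user")
    return f"sketch v{turns}"


if __name__ == "__main__":
    alice, bob = ArtistThread("alice"), ArtistThread("bob")
    print(alice.ask("Competition prompt", fake_complete))
    print(alice.ask("Judge feedback: add more depth", fake_complete))
    print(bob.ask("Competition prompt", fake_complete))
```

Because each artist owns its own history, feedback given to one artist never leaks into another artist's context, which is the continuity property the threads provide.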

A key requirement was that the AI Artist generate P5.js code that would work in the headless browser that my Python script ran. In early versions I required the agent to use structured data output with function calling, but this added a lot of latency for each artwork. I ended up removing the function calling, and was lucky that the artist’s response could still be rendered consistently by the headless browser, even when the response included some commentary.
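One way to cope with commentary around the code is to pull the sketch out of the reply before rendering it. The following is a hedged sketch of that idea; the article does not say how the notebook handles this, and `extract_sketch` and its two heuristics (prefer a fenced code block, else look for an HTML document) are assumptions.

```python
import re
from typing import Optional

FENCE = "`" * 3  # the three-backtick marker that opens and closes a code block

# A fenced block: opening fence, optional language tag, body, closing fence.
FENCE_RE = re.compile(FENCE + r"[\w-]*[ \t]*\n(.*?)" + FENCE, re.DOTALL)

# A bare HTML document embedded in surrounding prose.
HTML_RE = re.compile(
    r"<!DOCTYPE html>.*?</html>|<html.*?</html>", re.DOTALL | re.IGNORECASE
)


def extract_sketch(response: str) -> Optional[str]:
    """Return the most likely code payload in a model reply, or None."""
    fenced = FENCE_RE.findall(response)
    if fenced:
        # If there are several blocks, assume the longest one is the sketch.
        return max(fenced, key=len).strip()
    match = HTML_RE.search(response)
    if match:
        return match.group(0).strip()
    return None


if __name__ == "__main__":
    reply = (
        "Here is my piece!\n"
        + FENCE + "html\n<html><body>hi</body></html>\n" + FENCE
        + "\nI hope the judge likes it."
    )
    print(extract_sketch(reply))
```

Returning `None` instead of raising makes it easy for the caller to decide whether to retry or to forfeit the match.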

Another key enhancement was providing the assistant with five existing sophisticated P5.js sketches as source material to fine-tune the AI artists, encouraging them to innovate and create more complex outputs.

These implementation choices led to a fairly reliable AI Artist that produces a usable sketch about 95% of the time, depending on the prompts. (More could be done here to improve consistency or to retry on unparsable output.)
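The retry idea mentioned above could look something like the following. This is only a sketch of one possible approach, with hypothetical names: `generate` stands in for whatever produces an artist reply, and `parse` for whatever turns a reply into a renderable sketch (returning `None` on failure).

```python
from typing import Callable, Optional


def generate_with_retries(
    generate: Callable[[], str],
    parse: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Call generate() until parse() accepts the reply or attempts run out."""
    for _ in range(max_attempts):
        sketch = parse(generate())
        if sketch is not None:
            return sketch
    return None  # caller decides what an unusable entry means for the match


if __name__ == "__main__":
    # Stand-in generator that fails twice before producing usable output.
    replies = iter(["sorry, no code", "still nothing", "CODE:circle(10, 10, 5);"])

    def parse(text: str) -> Optional[str]:
        return text[5:] if text.startswith("CODE:") else None

    print(generate_with_retries(lambda: next(replies), parse))
```

As a rough guide, if each attempt succeeded independently about 95% of the time (independence is an assumption, since a bad prompt may fail repeatedly), three attempts would all fail roughly 0.0125% of the time.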

Judging the Art

Each head-to-head matchup makes a GPT-4o call that compares the two submissions side by side and provides detailed feedback on each piece. Unlike the artists, each judge call is fresh, without an ongoing thread of past evaluations. The judge provides feedback, scores and a selection of who won the match. The winning artist uses the judge’s feedback to refine its artwork in subsequent iterations, sometimes leading to significant improvements and other times resulting in less successful outcomes.
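A stateless judge call can be sketched as two pieces: building a fresh request that carries both images, and parsing the verdict. The code below does not call any API. The message layout follows the image-input format of OpenAI's Chat Completions API as I understand it, while the instruction wording, the function names, and the JSON verdict schema are hypothetical and not taken from the notebook.

```python
import base64
import json


def image_part(png_bytes: bytes) -> dict:
    """Encode a PNG screenshot as a data-URL image part."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {
        "type": "image_url",
        "image_url": {"url": f"data:image/png;base64,{encoded}"},
    }


def build_judge_messages(competition_prompt: str, png_a: bytes, png_b: bytes) -> list:
    """Build a fresh message list for one matchup; no history is carried over."""
    instructions = (
        "Two artworks were created for this competition prompt:\n"
        f"{competition_prompt}\n\n"
        "The first image is artwork A and the second is artwork B. "
        "Reply with JSON only, using the keys winner ('A' or 'B'), "
        "scores, and feedback."  # hypothetical verdict schema
    )
    return [
        {"role": "system", "content": "You are an art competition judge."},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": instructions},
                image_part(png_a),
                image_part(png_b),
            ],
        },
    ]


def parse_verdict(reply: str) -> dict:
    """Parse the judge's JSON reply and check that it names a winner."""
    verdict = json.loads(reply)
    if verdict.get("winner") not in ("A", "B"):
        raise ValueError("verdict must name 'A' or 'B' as the winner")
    return verdict


if __name__ == "__main__":
    messages = build_judge_messages("Recursive patterns", b"png-a", b"png-b")
    print(len(messages[1]["content"]))
    sample = '{"winner": "B", "scores": {"A": 6, "B": 8}, "feedback": {"A": "flat", "B": "rich"}}'
    print(parse_verdict(sample)["winner"])
```

Building the message list from scratch for every matchup is what keeps each judgment independent of earlier evaluations.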

Interestingly, the AI judge’s decisions did not always align with my own opinions on the artwork. Where my favorites were driven by aesthetics, the judge’s selections were often driven by a strict interpretation of the prompt’s requirements and a very literal sense of the artwork’s adherence to them. Other times the judge did seem to call out more emotional qualities (e.g. “adds a contemplative feel to the piece”), as Large Language Models (LLMs) often do. It would be interesting to delve more into what biases or emergent capabilities are influencing the judge’s decisions.

Results and Reflections

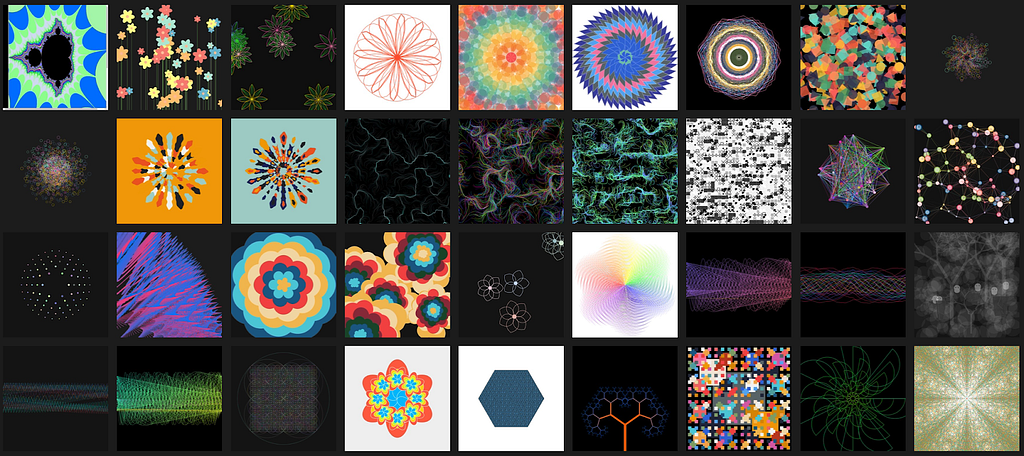

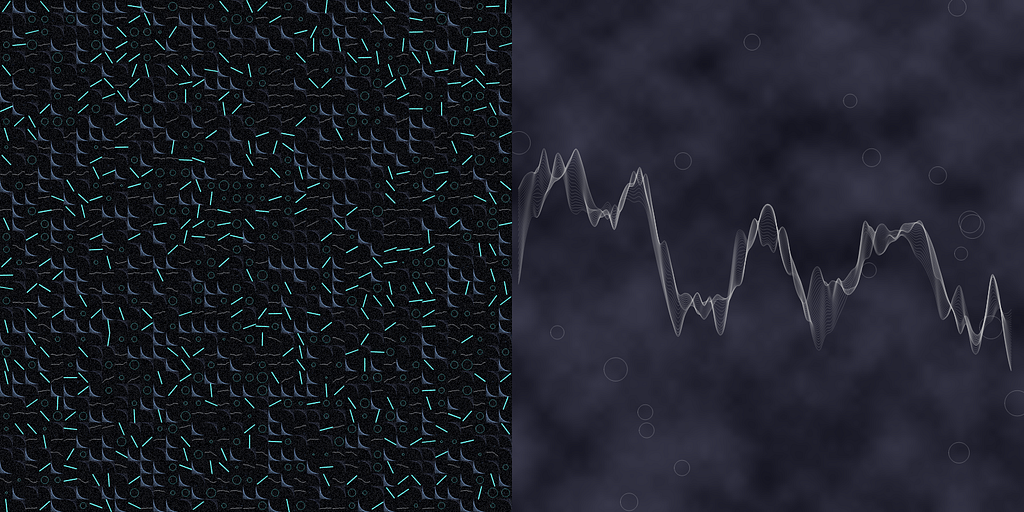

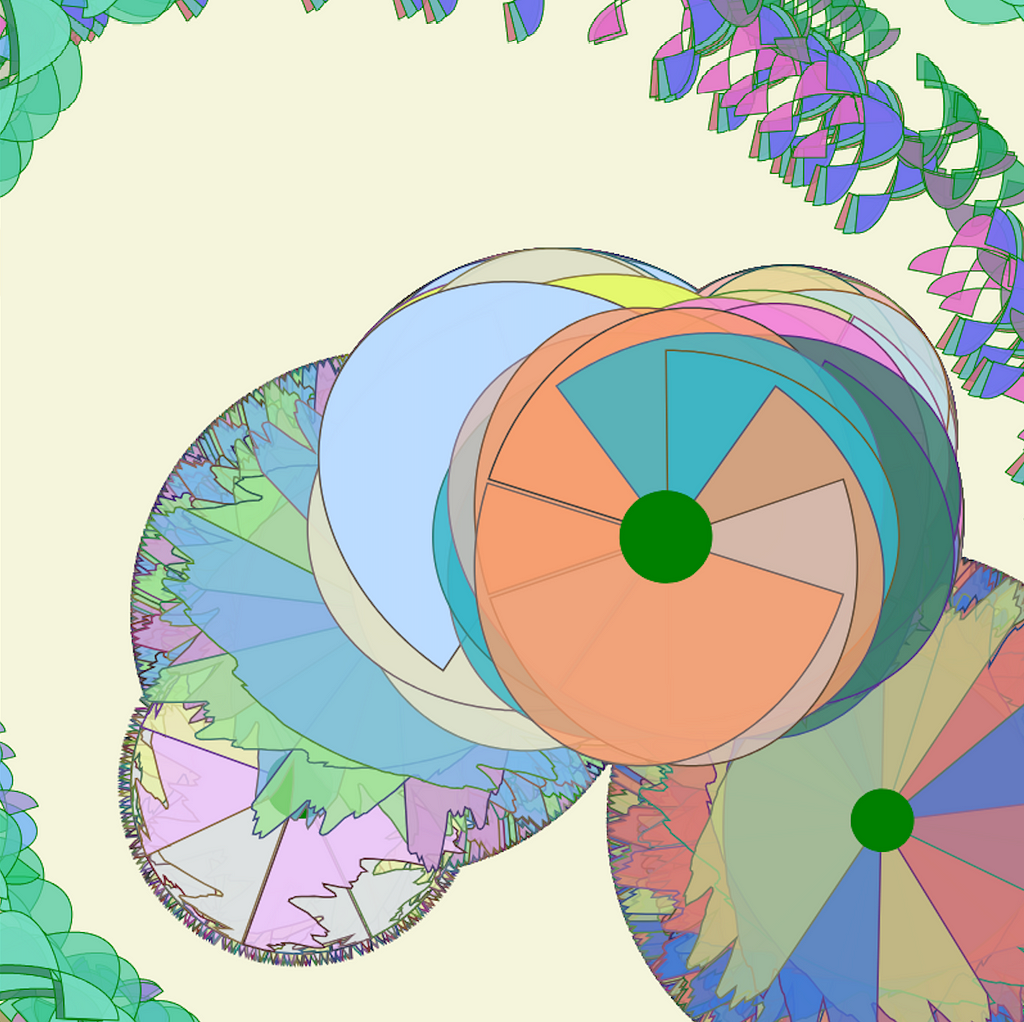

I ran numerous competitions, trying different prompts and making adjustments. My prompt topics included flowers, rainbows, waveforms, flow fields, recursion, kaleidoscopes, and more. The results impressed me with their beauty, diversity and creativity. And while I am delighted with the results, the goal was never just to create beautiful artwork, but to capture learnings and insights from the process:

- A source of inspiration: The volume of ideas generated and the rapid iteration process make the competition framework not just a tool for judging art, but also a rich resource for inspiration. I plan to use this tool in the future to explore different approaches and gain new insights for my own P5.js art and other creative endeavors.

- Generative Art as a unique measure of creativity: “Code art” is an intriguing capability to explore. The process of creating an art program is very different from what we see in other AI art tools like Midjourney, DALL-E, Stable Diffusion, etc. Rather than using diffusion to reverse engineer illustrations based on image understanding, Generative Art is more like writing a fictional story. The words of a story elicit emotions in the reader, just as the code of Generative Art does in the viewer. Because AI can master code, I believe my explorations are only the start of what could be done.

- What are the limits? The artworks generated by these Agentic AI Artists are beautiful and creative, but most are remixes of code art I have seen before, with maybe a few happy accidents. The winning artworks demonstrated a balance between complexity and novelty, though achieving both simultaneously remained elusive. This is likely a limitation of what I invested; with more fine-tuning, better prompts, more interactions, etc., I would not underestimate the possible creations.

- Evolving the Agentic AI Artist: Defining the same AI artist template with persistent threads led to more unique and diverse outputs. This pattern of using agentic AI for brainstorming and idea generation has broad potential, and I’m excited to see how it can be applied to other domains. In future iterations it would be interesting to introduce truly different AIs with distinct configurations, prompting, tuning, objective functions, etc. So not just a feedback loop on the artwork, but a feedback loop on the AI artist as well. A “battle” between uniquely coded AI artists could be an exciting new frontier in AI-driven creativity.

Conclusion

Working on this agentic AI art competition has been a rewarding experience, blending my passion for code art with the exploration of AI’s creative potential. This intersection is unique, as the output is not simply text or code, but visual art generated directly by the LLM. While the results are impressive, they also highlight the challenges and complexities of AI creativity, as well as the capabilities of AI agents.

I hope this overview inspires others interested in exploring the creative potential of AI. By sharing my insights and the Colab notebook, I aim to encourage further experimentation and innovation in this exciting field. This project is just a starting point, but it demonstrates the possibilities of agentic AI and AI as a skilled code artist.

When AI Artists Compete was originally published in Towards Data Science on Medium.
