Unpacking the six core traits of AI agents and why foundations matter more than buzzwords

The tech world is obsessed with AI agents. From sales agents to autonomous systems, companies like Salesforce and HubSpot claim to offer game-changing AI agents. Yet I have still to see a compelling, truly agentic experience built from LLMs. The market is full of botshit, and if the best Salesforce can do is say its new agent performs better than a publishing house’s previous chatbot, that’s underwhelming.

And here’s the most important question no one is asking: even if we could build fully autonomous AI agents, how often would they be the best thing for users?

Let’s look at travel planning through the lens of agents and assistants. This specific use case helps clarify what each component of agentic behavior brings to the table, and how you can ask the right questions to separate hype from reality. By the end, I hope you will be able to decide for yourself whether true AI autonomy is the right strategic investment or the decade’s most costly distraction.

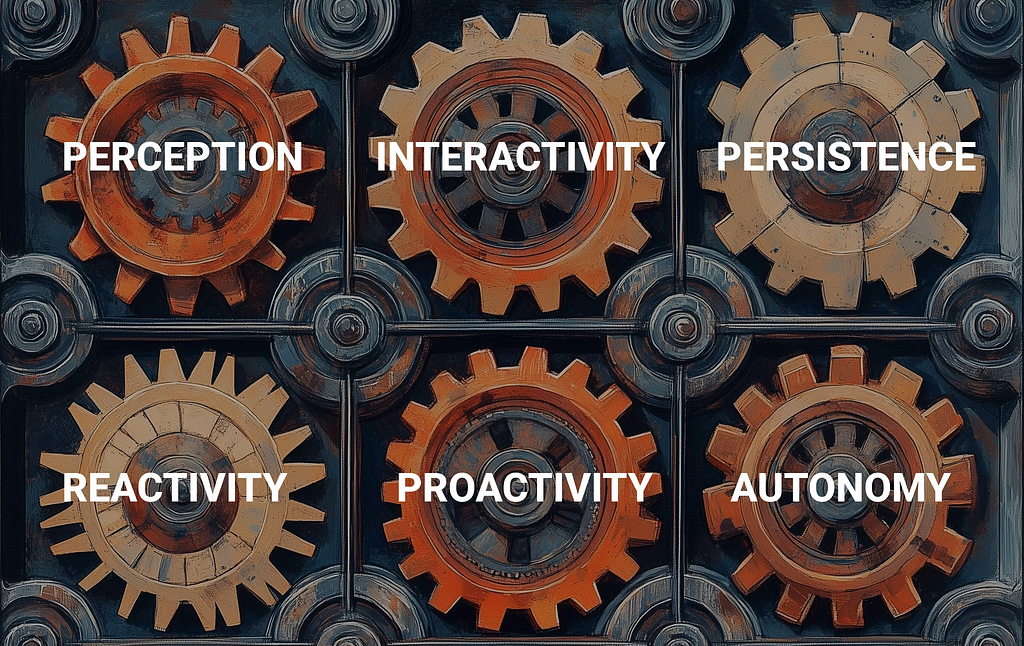

The Spectrum of Agentic Behavior: A Practical Framework

There is no consensus, in academia or in industry, about what makes a true “agent.” Instead, I advocate that businesses adopt a spectrum framework that borrows six attributes from the AI academic literature. The binary classification of “agent” or “not agent” is often unhelpful in the current AI landscape for several reasons:

- It doesn’t capture the nuanced capabilities of different systems.

- It can lead to unrealistic expectations or underestimation of a system’s potential.

- It doesn’t align with the incremental nature of AI development in real-world applications.

By adopting a spectrum-based approach, businesses can better understand, evaluate, and communicate the evolving capabilities and requirements of AI systems. This approach is particularly valuable for anyone involved in AI integration, feature development, and strategic decision-making.

Through the example of a travel “agent,” we’ll see how real-world implementations can fall at different points on the spectrum for each attribute. Most real-world applications will fall somewhere between the “basic” and “advanced” tiers of each. This understanding will help you make more informed decisions about AI integration in your projects and communicate more effectively with both technical teams and end-users. By the end, you’ll be equipped to:

- Detect the BS when someone claims they’ve built an “AI agent”.

- Understand what really matters when developing AI systems.

- Guide your organization’s AI strategy without falling for the hype.

The Building Blocks of Agentic Behavior

1. Perception

The ability to sense and interpret its environment or relevant data streams.

Basic: Understands text input about travel preferences and accesses basic travel databases.

Advanced: Integrates and interprets multiple data streams, including past travel history, real-time flight data, weather forecasts, local event schedules, social media trends, and global news feeds.

An agent with advanced perception might identify patterns in your past travel decisions, such as a preference for destinations that don’t require a car. These insights could then be used to inform future suggestions.
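To make the perception example concrete, here is a minimal Python sketch of how a system might derive one such insight from travel history. It assumes a hypothetical `Trip` record with a `rented_car` flag and an arbitrary 75% threshold; a real system would draw on much richer data and more careful statistics.

```python
from dataclasses import dataclass


@dataclass
class Trip:
    """One past trip; these fields are hypothetical, chosen for illustration."""
    destination: str
    rented_car: bool


def infer_car_free_preference(trips: list[Trip], threshold: float = 0.75) -> bool:
    """Return True if the share of car-free trips meets an assumed threshold."""
    if not trips:
        return False  # no history, so no inferred preference
    car_free = sum(1 for trip in trips if not trip.rented_car)
    return car_free / len(trips) >= threshold


history = [
    Trip("Amsterdam", rented_car=False),
    Trip("Tokyo", rented_car=False),
    Trip("Lisbon", rented_car=False),
    Trip("Denver", rented_car=True),
]
print(infer_car_free_preference(history))  # True: 3 of 4 trips were car-free
```

The inferred flag is exactly the kind of insight a persistent system would then store and reuse in later suggestions.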

2. Interactivity

The ability to engage effectively with its operational environment, including users, other AI systems, and external data sources or services.

Basic: Engages in a question-answer format about travel options, understanding and responding to user queries.

Advanced: Maintains a conversational interface, asking for clarifications, offering explanations for its suggestions, and adapting its communication style based on user preferences and context.
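One small piece of advanced interactivity, asking for clarification instead of guessing, can be sketched as slot filling: the assistant checks which details a request is missing and asks about the first one. The slot names and questions below are hypothetical, and in practice an LLM would typically extract the slots from free-form text before a check like this runs.

```python
from typing import Optional

# Hypothetical details a travel assistant needs before it can search for options.
REQUIRED_SLOTS = {
    "destination": "Where would you like to go?",
    "dates": "When are you planning to travel?",
    "budget": "Roughly what budget do you have in mind?",
}


def next_clarifying_question(request: dict[str, str]) -> Optional[str]:
    """Return a question for the first missing slot, or None if nothing is missing."""
    for slot, question in REQUIRED_SLOTS.items():
        if not request.get(slot):
            return question
    return None


print(next_clarifying_question({"destination": "Kyoto"}))
# When are you planning to travel?
```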

LLM chatbots like ChatGPT, Claude, and Gemini have set a high bar for interactivity. You’ve probably noticed that most customer support chatbots fall short here. This is because customer service chatbots need to provide accurate, company-specific information and often integrate with complex backend systems. They can’t afford to be as creative or generalized as ChatGPT, which prioritizes engaging responses over accuracy.

3. Persistence

The ability to create, maintain, and update long-term memories about users and key interactions.

Basic: Saves basic user preferences and can recall them in future sessions.

Advanced: Builds a comprehensive profile of the user’s travel habits and preferences over time, continually refining its understanding.

True persistence in AI requires both read and write capabilities for user data. It’s about writing new insights after each interaction and reading from this expanded knowledge base to inform future actions. Think of how a great human travel agent remembers your love for aisle seats or your penchant for extending business trips into mini-vacations. An AI with strong persistence would do the same, continuously building and referencing its understanding of you.

ChatGPT has introduced elements of selective persistence, but most conversations effectively start from a blank slate. To achieve a truly persistent system, you will need to build your own long-term memory and include the relevant context with each prompt.
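The read/write loop described above can be sketched in a few lines of Python. This is a minimal sketch under simplifying assumptions: memories are plain strings held in process memory, and the `MemoryStore` class and `build_prompt` helper are hypothetical. Deciding which insights are worth writing, and which memories are relevant to a given prompt, is the hard part and is left out here.

```python
from collections import defaultdict


class MemoryStore:
    """A toy long-term memory; a real system would persist this in a database."""

    def __init__(self) -> None:
        self._facts: dict[str, list[str]] = defaultdict(list)

    def write(self, user_id: str, insight: str) -> None:
        """Record a new insight after an interaction, skipping exact duplicates."""
        if insight not in self._facts[user_id]:
            self._facts[user_id].append(insight)

    def read(self, user_id: str) -> list[str]:
        """Return a copy of everything remembered about a user (empty if unknown)."""
        return list(self._facts.get(user_id, []))


def build_prompt(store: MemoryStore, user_id: str, message: str) -> str:
    """Prepend remembered context to the user's message before calling a model."""
    context = "\n".join(f"- {m}" for m in store.read(user_id)) or "- (no saved preferences)"
    return f"Known user preferences:\n{context}\n\nUser: {message}"


store = MemoryStore()
store.write("u42", "Prefers aisle seats")
store.write("u42", "Often extends business trips into short vacations")
print(build_prompt(store, "u42", "Book me a flight to Chicago next week."))
```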

4. Reactivity

The ability to respond to changes in its environment or incoming data in a timely fashion. Doing this well is heavily dependent on robust perceptive capabilities.

Basic: Updates travel cost estimates when the user manually inputs new currency exchange rates.

Advanced: Continuously monitors and analyzes multiple data streams to proactively adjust travel itineraries and cost estimates.

The best AI travel assistant would notice a sudden spike in hotel prices for your destination due to a major event. It could proactively suggest alternative dates or nearby locations to save you money.
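A minimal sketch of the hotel-price example, assuming the system already receives nightly rates from some data feed (the feed itself is out of scope). The median baseline and the 1.5x spike ratio are arbitrary illustrative choices rather than a recommended method; a production system would also need to account for seasonality.

```python
from statistics import median


def is_price_spike(recent_prices: list[float], current_price: float,
                   spike_ratio: float = 1.5) -> bool:
    """Flag a spike when the current price is at least spike_ratio times the recent median."""
    if not recent_prices:
        return False  # no baseline to compare against
    return current_price >= median(recent_prices) * spike_ratio


def cheaper_dates(prices_by_date: dict[str, float], chosen: str, limit: int = 2) -> list[str]:
    """Return up to `limit` other dates priced below the chosen date, cheapest first."""
    chosen_price = prices_by_date[chosen]
    cheaper = sorted(
        (price, day) for day, price in prices_by_date.items()
        if day != chosen and price < chosen_price
    )
    return [day for _, day in cheaper[:limit]]


recent_rates = [180.0, 175.0, 190.0, 185.0, 178.0]  # made-up nightly rates
if is_price_spike(recent_rates, current_price=320.0):
    options = {"2025-06-12": 320.0, "2025-06-19": 185.0,
               "2025-06-26": 178.0, "2025-07-03": 240.0}
    print(cheaper_dates(options, chosen="2025-06-12"))
    # ['2025-06-26', '2025-06-19']
```

The alternatives are returned as suggestions, which keeps the final choice with the user.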

A truly reactive system requires extensive real-time data streams to ensure robust perceptive capabilities. For instance, our advanced travel assistant’s ability to reroute a trip due to a political uprising isn’t just about reacting quickly. It requires:

- Access to real-time news and government advisory feeds (perception)

- The ability to understand the implications of this information for travel (interpretation)

- The capability to swiftly adjust proposed plans based on this understanding (reaction)

This interconnection between perception and reactivity underscores why developing truly reactive AI systems is complex and resource-intensive. It’s not just about quick responses, but about creating a comprehensive awareness of the environment that enables meaningful and timely responses.
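The perception, interpretation, and reaction chain can be sketched as three small steps. Everything here is a stand-in: the `Advisory` record, its 1-to-4 risk scale, the threshold of 3, and the placeholder city names are hypothetical, and the "feed" is just a list rather than a live news or government source.

```python
from dataclasses import dataclass


@dataclass
class Advisory:
    """A simplified advisory record standing in for a real news or government feed."""
    city: str
    level: int  # hypothetical scale: 1 = normal precautions ... 4 = do not travel


def is_unsafe(advisories: list[Advisory], city: str, min_level: int = 3) -> bool:
    """Interpretation: does any advisory put this city at or above the risk threshold?"""
    return any(a.city == city and a.level >= min_level for a in advisories)


def reroute(itinerary: list[str], advisories: list[Advisory],
            fallbacks: dict[str, str]) -> list[str]:
    """Reaction: swap unsafe stops for a fallback city when one is available.

    Unsafe stops without a fallback are kept as-is here; a real system would
    flag them to the user instead of silently leaving them in the plan.
    """
    return [
        fallbacks.get(city, city) if is_unsafe(advisories, city) else city
        for city in itinerary
    ]


# Perception: in a real system these records would stream in from live feeds.
feed = [Advisory("CityB", level=4), Advisory("CityC", level=2)]
print(reroute(["CityA", "CityB", "CityC"], feed, {"CityB": "CityD"}))
# ['CityA', 'CityD', 'CityC']
```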

5. Proactivity

The ability to anticipate needs or potential issues and offer relevant suggestions or information without being explicitly prompted, while still deferring final decisions to the user.

Basic: Suggests popular attractions at the chosen destination.

Advanced: Anticipates potential needs and offers unsolicited but relevant suggestions.

A truly proactive system would flag an impending passport expiration date, suggest the subway instead of a car because of anticipated road closures, or propose a calendar alert so you can book a popular restaurant the instant reservations open.

True proactivity requires full persistence, perception, and reactivity so that the system can make relevant, timely, and context-aware suggestions.
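The passport example shows how proactivity depends on persistence (a stored expiry date) and perception (the planned trip dates). Here is a minimal sketch; the function name is hypothetical, and the 183-day threshold is an assumed default loosely reflecting the fact that some destinations require several months of passport validity, not a rule to rely on.

```python
from datetime import date, timedelta
from typing import Optional


def passport_suggestion(expiry: date, return_date: date,
                        min_validity_days: int = 183) -> Optional[str]:
    """Return a renewal suggestion if the passport expires too soon after the trip.

    The function only suggests; it never books or renews anything, so the final
    decision stays with the user.
    """
    remaining = expiry - return_date
    if remaining < timedelta(days=min_validity_days):
        return (f"Your passport expires on {expiry.isoformat()}, "
                f"only {remaining.days} days after you return. Consider renewing it.")
    return None


print(passport_suggestion(date(2025, 9, 1), return_date=date(2025, 6, 20)))
# Your passport expires on 2025-09-01, only 73 days after you return. Consider renewing it.
```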

6. Autonomy

The ability to operate independently and make decisions within defined parameters.

The level of autonomy can be characterized by:

- Resource control: The value and importance of resources the AI can allocate or manage.

- Impact scope: The breadth and significance of the AI’s decisions on the overall system or organization.

- Operational boundaries: The range within which the AI can make decisions without human intervention.

Basic: Has limited control over low-value resources, makes decisions with minimal system-wide impact, and operates within narrow, predefined boundaries. Example: A smart irrigation system deciding when to water different zones in a garden based on soil moisture and weather forecasts.

Mid-tier: Controls moderate resources, makes decisions with noticeable impact on parts of the system, and has some flexibility within defined operational boundaries. Example: An AI-powered inventory management system for a retail chain, deciding stock levels and distribution across multiple stores.

Advanced: Controls high-value or critical resources, makes decisions with significant system-wide impact, and operates with broad operational boundaries. Example: An AI system for a tech company that optimizes the entire AI pipeline, including model evaluations and allocation of $100M worth of GPUs.

The most advanced systems would make significant decisions about both the “what” (e.g., which models to deploy where) and “how” (resource allocation, quality checks), making the right tradeoffs to achieve the defined objectives.

It’s important to note that the distinction between “what” and “how” decisions can become blurry, especially as the scope of tasks increases. For example, choosing to deploy a much larger model that requires significant resources touches on both. The key differentiator across the spectrum of complexity is the increasing level of resources and risk the agent is entrusted to manage autonomously.

This framing allows for a nuanced understanding of autonomy in AI systems. True autonomy is about more than just independent operation — it’s about the scope and impact of the decisions being made. The higher the stakes of an error, the more important it is to ensure the right safeguards are in place.
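One simple form of safeguard is to encode the operational boundaries as an explicit policy that every action is checked against before it runs. The sketch below is hypothetical: `AutonomyPolicy`, the spend limit, and the scope names are illustrative assumptions, and a single cost number is a large simplification of resource control. Moving up the autonomy spectrum roughly corresponds to raising `max_spend` and widening `allowed_scopes`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyPolicy:
    """Hypothetical operational boundaries for an autonomous system."""
    max_spend: float                 # resource control: largest cost it may commit alone
    allowed_scopes: frozenset[str]   # impact scope: parts of the system it may change


@dataclass(frozen=True)
class Action:
    description: str
    cost: float
    scope: str


def decide(policy: AutonomyPolicy, action: Action) -> str:
    """Execute actions inside the boundaries; escalate everything else to a human."""
    if action.cost <= policy.max_spend and action.scope in policy.allowed_scopes:
        return "execute"
    return "escalate"


irrigation = AutonomyPolicy(max_spend=5.0, allowed_scopes=frozenset({"garden_zones"}))
print(decide(irrigation, Action("Water zone 3", cost=0.40, scope="garden_zones")))  # execute
print(decide(irrigation, Action("Buy new pump", cost=250.0, scope="equipment")))     # escalate
```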

A Future Frontier: Proactive Autonomy

The ability to not only make decisions within defined parameters, but to proactively modify those parameters or goals when deemed necessary to better achieve overarching objectives.

While proactive autonomy offers the potential for truly adaptive and innovative AI systems, it also introduces greater complexity and risk. This level of autonomy is largely theoretical at present and raises important ethical considerations.

Not surprisingly, most of the examples of bad AI from science fiction are systems or agents that have crossed into proactive autonomy, including Ultron from the Avengers, the machines in “The Matrix”, HAL 9000 from “2001: A Space Odyssey”, and AUTO from “WALL-E”, to name a few.

Proactive autonomy remains a frontier in AI development, promising great benefits but requiring thoughtful, responsible implementation. In reality, most companies need years of foundational work before it will even be feasible — you can save the speculation about robot overlords for the weekends.

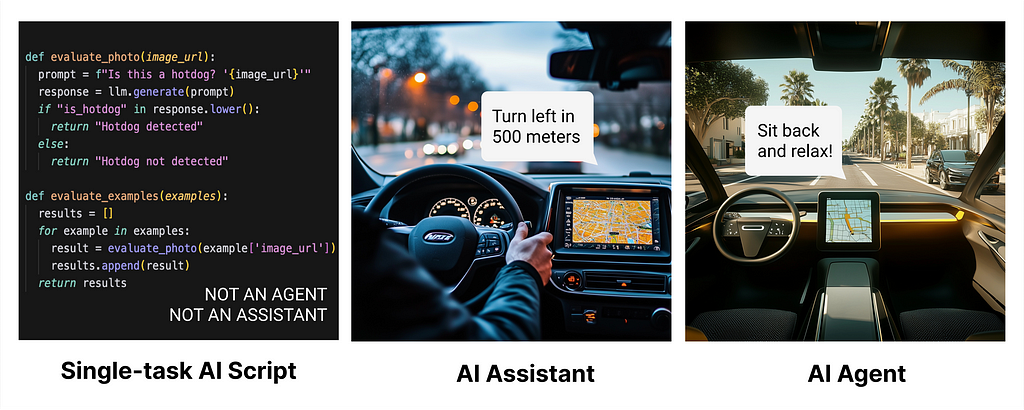

Agents vs. Assistants

As we consider these six attributes, I’d like to propose a useful distinction between what I call ‘AI assistants’ and ‘AI agents’.

An AI agent:

- Demonstrates at least five of the six attributes (Proactivity may be the one missing)

- Exhibits significant Autonomy within its defined domain, deciding which actions to carry out to complete a task without human oversight

An AI assistant:

- Excels in Perception, Interactivity, and Persistence

- May or may not have some degree of Reactivity

- Has limited or no Autonomy or Proactivity

- Primarily responds to human requests and requires human approval to carry out actions

While the industry has yet to converge on an official definition, this framing can help you think through the practical implications of these systems. Both agents and assistants need the foundations of perception, basic interactivity, and persistence to be useful.
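The two definitions can be expressed as a simple rule-based check. This sketch makes assumptions the text leaves open: each attribute gets a three-level score (none, basic, advanced), “excels” and “significant” are both read as advanced, and the scores come from human judgment rather than any measurement. The `Level` enum and `classify` function are hypothetical names.

```python
from enum import IntEnum


class Level(IntEnum):
    NONE = 0
    BASIC = 1
    ADVANCED = 2


ATTRIBUTES = ("perception", "interactivity", "persistence",
              "reactivity", "proactivity", "autonomy")


def classify(profile: dict[str, Level]) -> str:
    """Label a system 'agent', 'assistant', or 'neither' using the article's definitions."""
    level = {name: profile.get(name, Level.NONE) for name in ATTRIBUTES}
    present = {name for name, lvl in level.items() if lvl >= Level.BASIC}

    # Agent: every attribute except possibly Proactivity, plus significant Autonomy.
    if set(ATTRIBUTES) - {"proactivity"} <= present and level["autonomy"] == Level.ADVANCED:
        return "agent"

    # Assistant: excels at the three foundations, with little or no Autonomy/Proactivity.
    foundations = ("perception", "interactivity", "persistence")
    if (all(level[name] == Level.ADVANCED for name in foundations)
            and level["autonomy"] <= Level.BASIC
            and level["proactivity"] <= Level.BASIC):
        return "assistant"
    return "neither"


assistant_like = {"perception": Level.ADVANCED, "interactivity": Level.ADVANCED,
                  "persistence": Level.ADVANCED, "reactivity": Level.BASIC}
agent_like = {name: Level.BASIC for name in ATTRIBUTES if name != "proactivity"}
agent_like["autonomy"] = Level.ADVANCED
print(classify(assistant_like), classify(agent_like))  # assistant agent
```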

By this definition, a Roomba vacuum cleaner is closer to a true agent, albeit a basic one. It’s not proactive, but it does exercise autonomy within a defined space, charting its own course, reacting to obstacles and dirt levels, and returning itself to the dock without constant human input.

GitHub Copilot is a highly capable assistant. It excels at augmenting a developer’s capabilities by offering context-aware code suggestions, explaining complex code snippets, and even drafting entire functions based on comments. However, it still relies on the developer to decide where to ask for help, and a human makes the final decisions about code implementation, architecture, and functionality.

The code editor Cursor is starting to edge into agent territory with its proactive approach to flagging potential issues in real time. Its current ability to generate entire applications from a description also brings it much closer to a true agent.

While this framework helps distinguish true agents from assistants, the real-world landscape is more complex. Many companies are rushing to label their AI products as “agents,” but are they focusing on the right priorities? It’s important to understand why so many businesses are missing the mark — and why prioritizing unflashy foundation work is essential.

Foundations Before Flash: The Critical Role of Data in AI Perception

Developer tools like Cursor have seen huge success with their push towards agentic behavior, but most companies today are getting less-than-stellar results.

Coding tasks have a well-defined problem space with clear success criteria (code completion, passing tests) for evaluation. There is also extensive high quality training and evaluation data readily available in the form of open source code repositories.

Most companies trying to introduce automation don’t have anything close to the right data foundations to build on. Leadership often underestimates how much of what customer support agents or account managers do relies on unwritten knowledge, such as how to work around a particular error message or how soon new inventory is likely to be in stock. Properly evaluating a chatbot that people can ask about anything can take months. Missing perception foundations and testing shortcuts are among the main contributors to the prevalence of botshit.

Start with the Problem: Why User-Centric AI Wins

Before pouring resources into either an agent or an assistant, companies should ask what users actually need, and what their knowledge management systems can support today. Most are not ready to power anything agentic, and many have significant work to do around perception and persistence in order to power a useful assistant.

Recent examples of half-baked AI features that were rolled back include Meta’s celebrity chatbots, which nobody wanted to talk to, and LinkedIn’s failed experiment with AI-generated content suggestions.

Waymo and the Roomba solved real user problems by using AI to simplify existing activities. However, neither was built overnight: both required over a decade of R&D before reaching the market. Today’s more advanced technology may allow lower-risk domains like marketing and sales to achieve autonomy faster. Even so, creating high-quality AI systems will still demand significant time and resources.

The Path Forward: Align Data, Systems, and User Needs

Ultimately, an AI system’s value lies not in whether it’s a “true” agent, but in how effectively it solves problems for users or customers.

When deciding where to invest in AI:

- Define the specific user problem you want to solve.

- Determine the minimum pillars of agentic behavior (perception, interactivity, persistence, etc.) and level of sophistication for each you need to provide value.

- Assess what data you have today and whether it’s available to the right systems.

- Realistically evaluate how much work is required to bridge the gap between what you have today and the capabilities needed to achieve your goals.
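Steps two through four amount to a gap analysis: compare the level each pillar needs against the level you have today. The sketch below assumes a rough 0 to 2 scale (none, basic, advanced) loosely based on the tiers in this article, and the example numbers are made up; the real work lies in honestly scoring your current data and systems.

```python
# Assumed scale, loosely mirroring the article's tiers: 0 = none, 1 = basic, 2 = advanced.
def capability_gaps(required: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Return how many levels each pillar falls short of what the use case needs."""
    return {
        pillar: need - current.get(pillar, 0)
        for pillar, need in required.items()
        if need > current.get(pillar, 0)
    }


required = {"perception": 2, "interactivity": 1, "persistence": 2, "autonomy": 0}
current = {"perception": 1, "interactivity": 1, "persistence": 0}
print(capability_gaps(required, current))  # {'perception': 1, 'persistence': 2}
```

Pillars missing from `current` are treated as level 0, which errs on the side of assuming the work has not been done yet.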

With a clear understanding of your existing data, systems, and user needs, you can focus on solutions that deliver immediate value. The allure of fully autonomous AI agents is strong, but don’t get caught up in the hype. By focusing on the right foundational pillars, such as perception and persistence, even limited systems can provide meaningful improvements in efficiency and user satisfaction.

So while neither HubSpot nor Salesforce may offer fully agentic solutions, investments in foundations like perception and persistence can still solve immediate user problems.

Remember, no one marvels at their washing machine’s “autonomy,” yet it reliably solves a problem and improves daily life. Prioritizing AI features that address real problems, even if they aren’t fully autonomous or agentic, will deliver immediate value and lay the groundwork for more sophisticated capabilities in the future.

By leveraging your strengths, closing gaps, and aligning solutions to real user problems, you’ll be well-positioned to create AI systems that make a meaningful difference — whether they are agents, assistants, or indispensable tools.

What Makes a True AI Agent? Rethinking the Pursuit of Autonomy was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
