A concise explanation for the general reader

Have you ever wondered how generative AI gets its work done? How does it create images, manage text, and perform other tasks?

The crucial concept you really need to understand is latent space. Understanding what the latent space is paves the way for comprehending generative AI.

Let me walk you through a few examples to explain the essence of a latent space.

Example 1. Finding a better way to represent height and weight data.

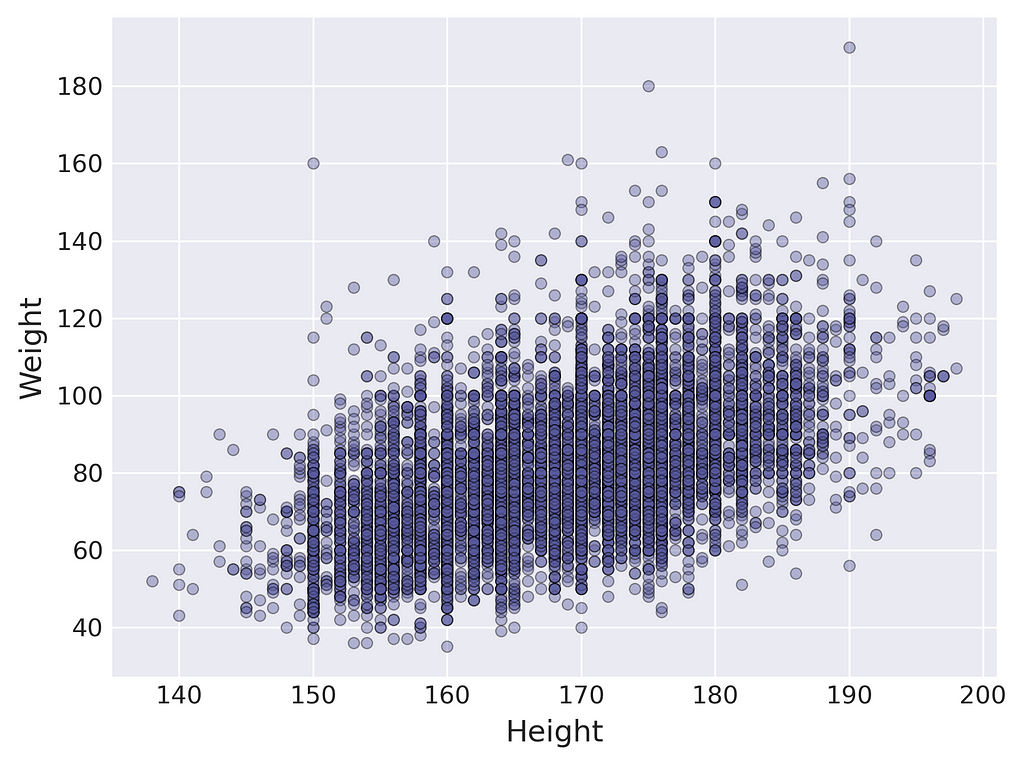

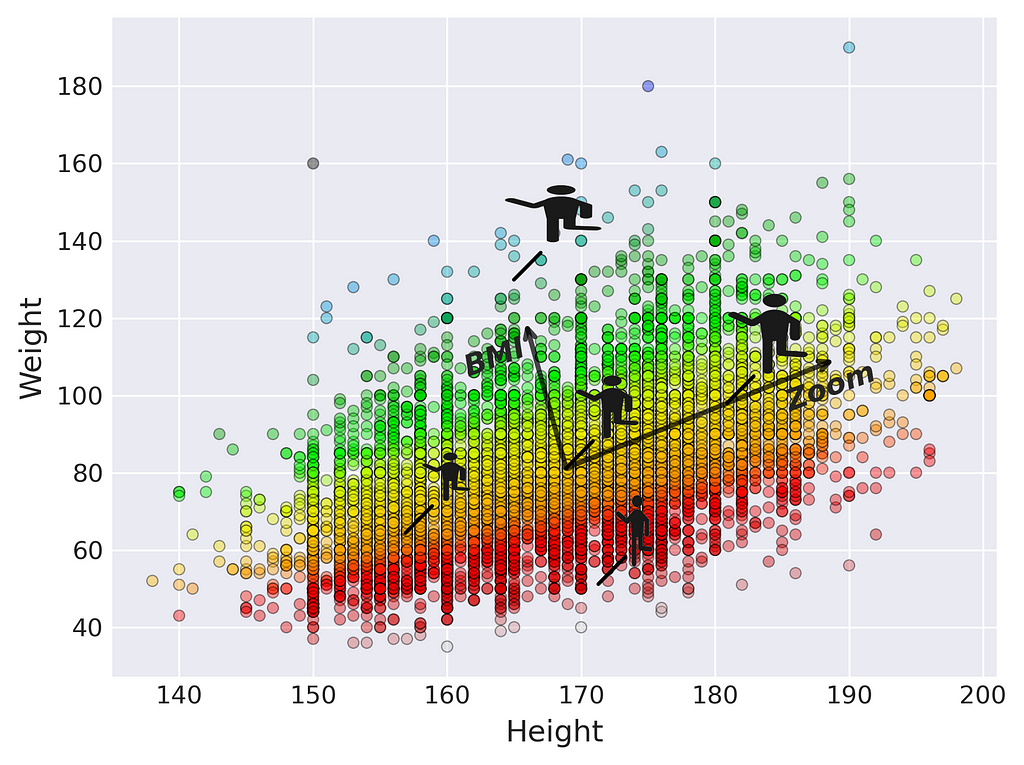

Throughout my numerous medical data research projects, I gathered a lot of measurements of patients’ weights and heights. The figure below shows the distribution of measurements.

You can consider each point as a compressed version of information about a real person. All details such as facial features, hairstyle, skin tone, and gender are no longer available, leaving only weight and height values.

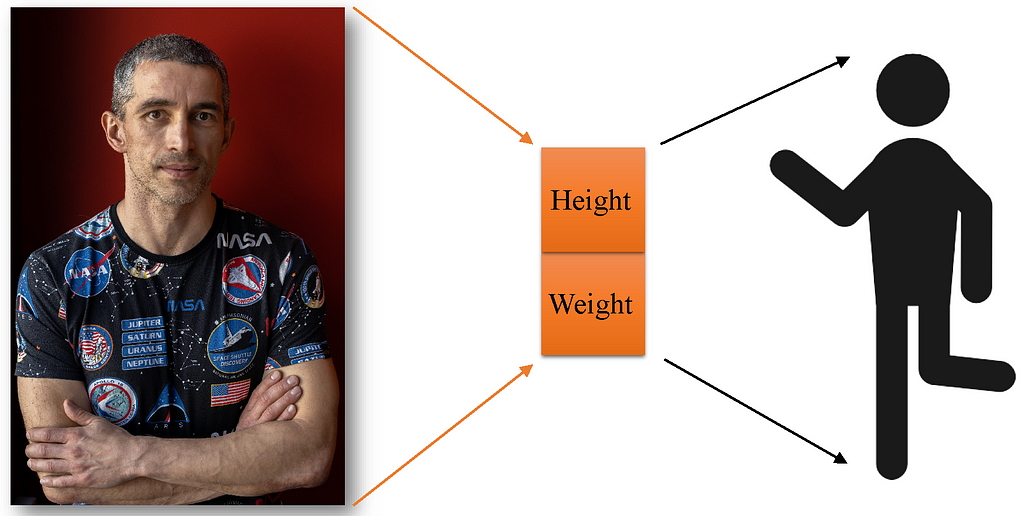

Is it possible to reconstruct the original data using only these two values? Sure, if your expectations aren’t too high. You simply need to replace all the discarded information with a standard template object to fill in the gaps. The template object is customized based on the preserved information, which in this case includes only height and weight.

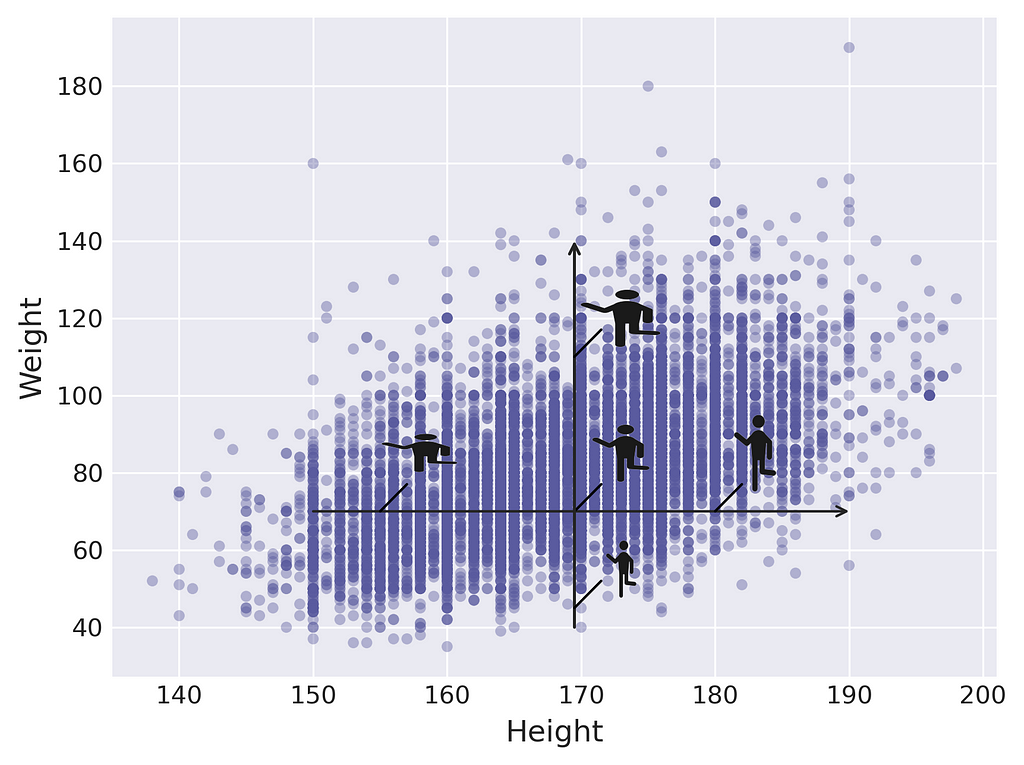

Let’s delve into the space defined by the height and weight axes. Consider a point with coordinates of 170 cm for height and 70 kg for weight. Let this point serve as a reference figure and position it at the origin of the axes.

Moving horizontally keeps your weight constant while altering your height. Likewise, moving up and down keeps your height the same but changes your weight.

This can be awkward: to change the figure in a natural way, for example to scale it while keeping its proportions, you have to adjust both values at once. Is there a way to improve this?

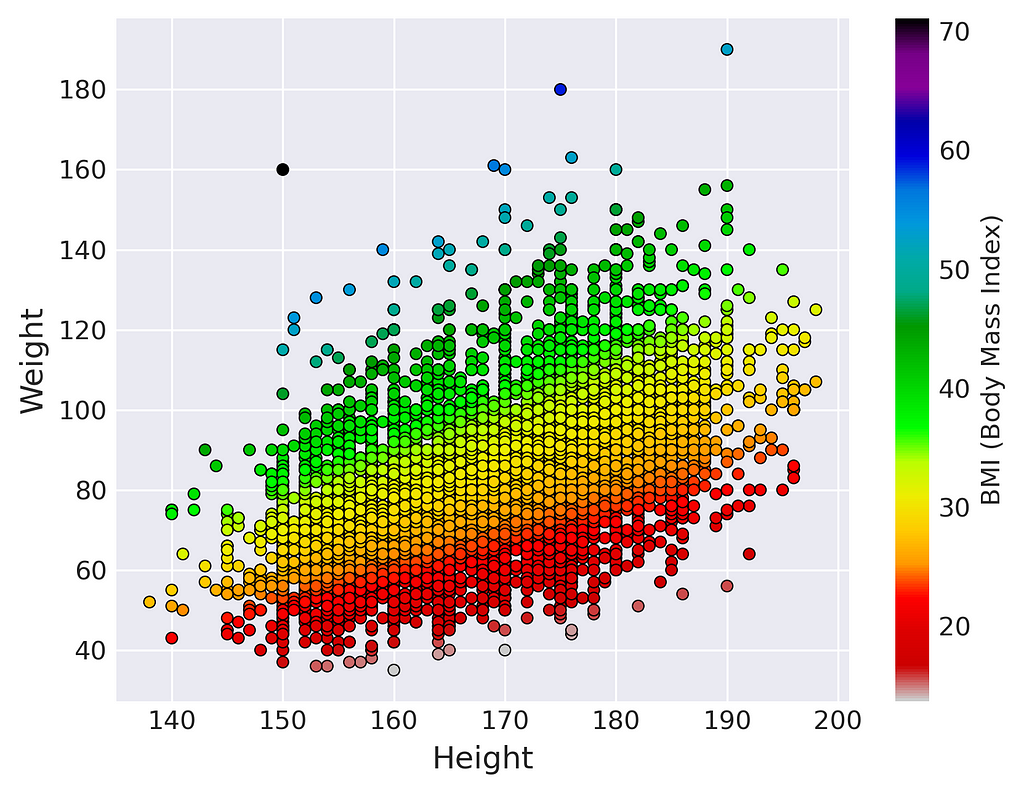

Take a look at the same dataset color-coded by BMI.

The colors nearly align with the lines. This suggests that we could consider other axes that might be more convenient for generating human figures.

We might name one of these axes ‘Zoom’ because it maintains a constant BMI, with the only change being the scale of the figure. Likewise, the second axis could be labeled BMI.

The new axes offer a more convenient perspective on the data, making it easier to explore. You can specify a target BMI value and then simply adjust the size of the figure along the ‘Zoom’ axis.
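If you would like to see the change of axes in code, here is a minimal sketch. It assumes the standard BMI formula (weight in kilograms divided by the square of height in meters) and defines ‘Zoom’ as height relative to the 170 cm reference figure. That definition of ‘Zoom’, and the function names, are my own assumptions for illustration, not a fixed convention.

```python
REF_HEIGHT_CM = 170.0  # the reference figure from the text (170 cm, 70 kg)


def to_bmi_zoom(height_cm, weight_kg):
    """Map (height, weight) to the assumed (BMI, zoom) coordinates."""
    height_m = height_cm / 100.0
    bmi = weight_kg / height_m ** 2      # standard BMI definition
    zoom = height_cm / REF_HEIGHT_CM     # assumed definition of 'Zoom'
    return bmi, zoom


def from_bmi_zoom(bmi, zoom):
    """Map (BMI, zoom) back to (height, weight)."""
    height_cm = zoom * REF_HEIGHT_CM
    weight_kg = bmi * (height_cm / 100.0) ** 2
    return height_cm, weight_kg


bmi, zoom = to_bmi_zoom(170.0, 70.0)
print(round(bmi, 1), zoom)  # 24.2 1.0

# Move along the 'Zoom' axis only: BMI stays fixed, the figure gets larger.
taller_height, taller_weight = from_bmi_zoom(bmi, 1.1)
```

With these coordinates, picking a target BMI and sliding along ‘Zoom’ changes height and weight together, so you only have to think about one thing at a time.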

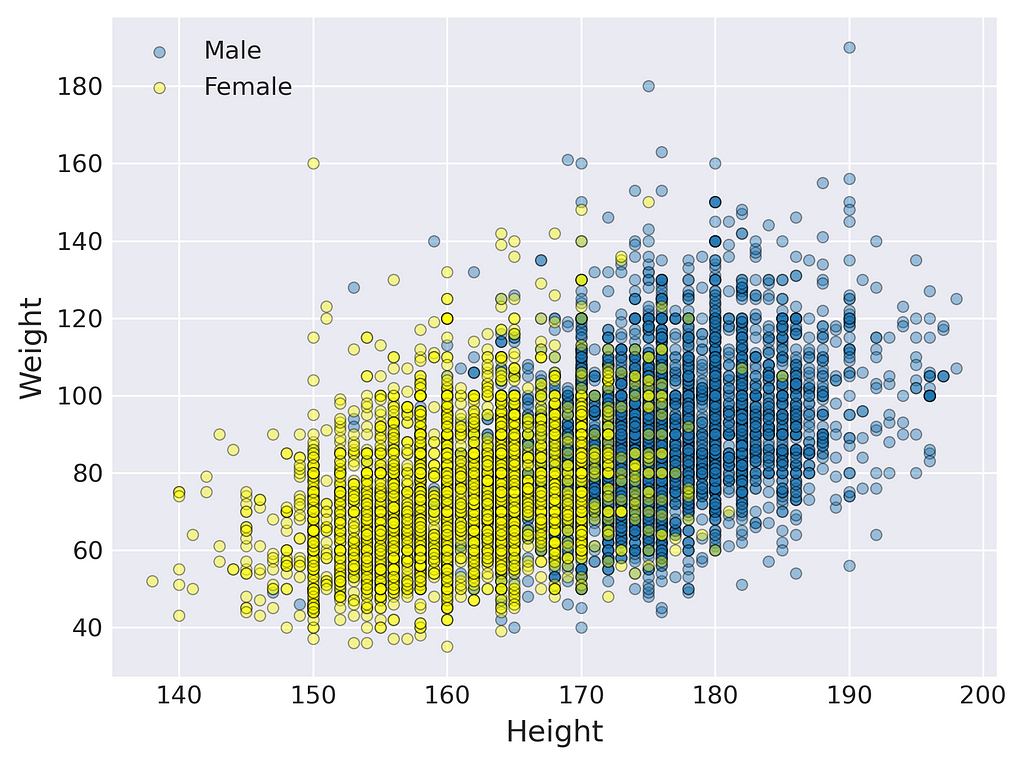

Looking to add more detail and realism to your figures? Consider additional features, such as gender, for instance. But from now on, I can’t offer similar visualizations that encompass all aspects of the data due to the lack of dimensions. I’m only able to display the distribution of three selected features: two features are depicted by the positions of points on the axes, with the third being indicated by color.

To improve the previous human figure generator, you can create separate templates for males and females. Then generate a female in yellow-dominant areas and a male where blue prevails.

As more features are taken into account, the figures become increasingly realistic. Notice also that a figure can be generated for every point, even those not present in the dataset.

This is what I would call a top-down approach to generating synthetic human figures. It involves selecting measurable features and identifying the optimal axes (directions) for exploring the data space. In the machine learning community, the former is called feature selection and the latter feature extraction. Feature extraction can be carried out using specialized algorithms such as PCA¹ (Principal Component Analysis), which identify directions that represent the data more naturally.
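To make the idea of feature extraction more tangible, here is a minimal sketch of PCA computed with NumPy's singular value decomposition. The height and weight values are synthetic stand-ins generated on the spot, not my patient measurements, and the distribution parameters are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: correlated heights (cm) and weights (kg).
height = rng.normal(170.0, 10.0, size=500)
weight = 70.0 + 0.9 * (height - 170.0) + rng.normal(0.0, 8.0, size=500)
X = np.column_stack([height, weight])

# PCA: center the data, then take the SVD of the centered matrix.
mean = X.mean(axis=0)
X_centered = X - mean
_, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)

components = Vt  # each row is one new axis (principal direction)
explained = singular_values ** 2 / np.sum(singular_values ** 2)

# Coordinates of every point along the new axes.
Z = X_centered @ components.T

print("new axes:\n", components)
print("share of variance per axis:", explained)
```

The rows of `components` play the role of the new axes, and `Z` holds the same data expressed in them. In practice you would usually standardize features measured in different units before running PCA; I skipped that here to keep the sketch short.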

The mathematical space from which we generate synthetic objects is termed the latent space for two reasons. First, the points (vectors) in this space are simply compressed, imperfect numerical representations of the original objects, much like shadows. Second, the axes defining the latent space often bear little resemblance to the originally measured features. The second reason will be better demonstrated in the next examples.

Example 2. Aging of human faces.

Today’s generative AI follows a bottom-up approach, where both feature selection and extraction are performed automatically from the raw data. Consider a vast dataset of face images, where the raw features are the colors of all pixels in each image, represented as numbers ranging from 0 to 255. A generative model such as a GAN² (Generative Adversarial Network) can identify (learn) a low-dimensional set of features in which we can find the directions that interest us the most.

Imagine you want to develop an app that takes your image and shows you a younger or older version of yourself. To achieve this, you need to sort all latent space representations of images (latent space vectors) according to age. Then, for each age group, you have to determine the average vector.

If all goes well, the average vectors would align along a curve, which you can consider to approximate the age value axis.

Now, you can determine the latent space representation of your image (encoding step) and then move it along the age direction as you wish. Finally, you decode it to generate a synthetic image portraying the older (or younger) version of yourself. The idea of the decoding step here is similar to what I showed you in Example 1, but theoretically and computationally much more advanced.
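The averaging and shifting steps described above can be sketched in a few lines. Everything here is an assumption made for illustration: the latent vectors are random numbers with a hidden age direction planted in them, the latent space has 8 dimensions, and the age axis is approximated by a straight line between the youngest and oldest group averages rather than a curve. A real app would get its vectors from a trained encoder and pass the shifted vector to a decoder, neither of which is modeled here.

```python
import numpy as np

rng = np.random.default_rng(1)
LATENT_DIM = 8  # assumed size of the latent space

# Synthetic stand-in for encoded face images: a hidden age direction plus noise.
hidden_dir = rng.normal(size=LATENT_DIM)
hidden_dir /= np.linalg.norm(hidden_dir)
ages = rng.integers(20, 80, size=2000)
latents = np.outer((ages - 50) / 10.0, hidden_dir)
latents += rng.normal(scale=0.5, size=(2000, LATENT_DIM))

# Step 1: sort the vectors into age groups (decades) and average each group.
decades = (ages // 10) * 10
groups = np.unique(decades)
group_means = np.stack([latents[decades == g].mean(axis=0) for g in groups])

# Step 2: approximate the age axis by the line from youngest to oldest average.
age_dir = group_means[-1] - group_means[0]
age_dir /= np.linalg.norm(age_dir)


def shift_age(z, amount):
    """Move a latent vector along the estimated age direction."""
    return z + amount * age_dir


z_me = latents[0]                # would come from the encoding step
z_older = shift_age(z_me, 2.0)   # would be passed to the decoding step
print("alignment with hidden direction:", float(age_dir @ hidden_dir))
```

The printed alignment is close to 1 when the group averages recover the planted direction well, which is the ‘if all goes well’ case from the text.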

The latent space allows exploration into other interesting dimensions, such as hair length, smile, gender, and more.

Example 3. Arranging words and phrases based on their meanings.

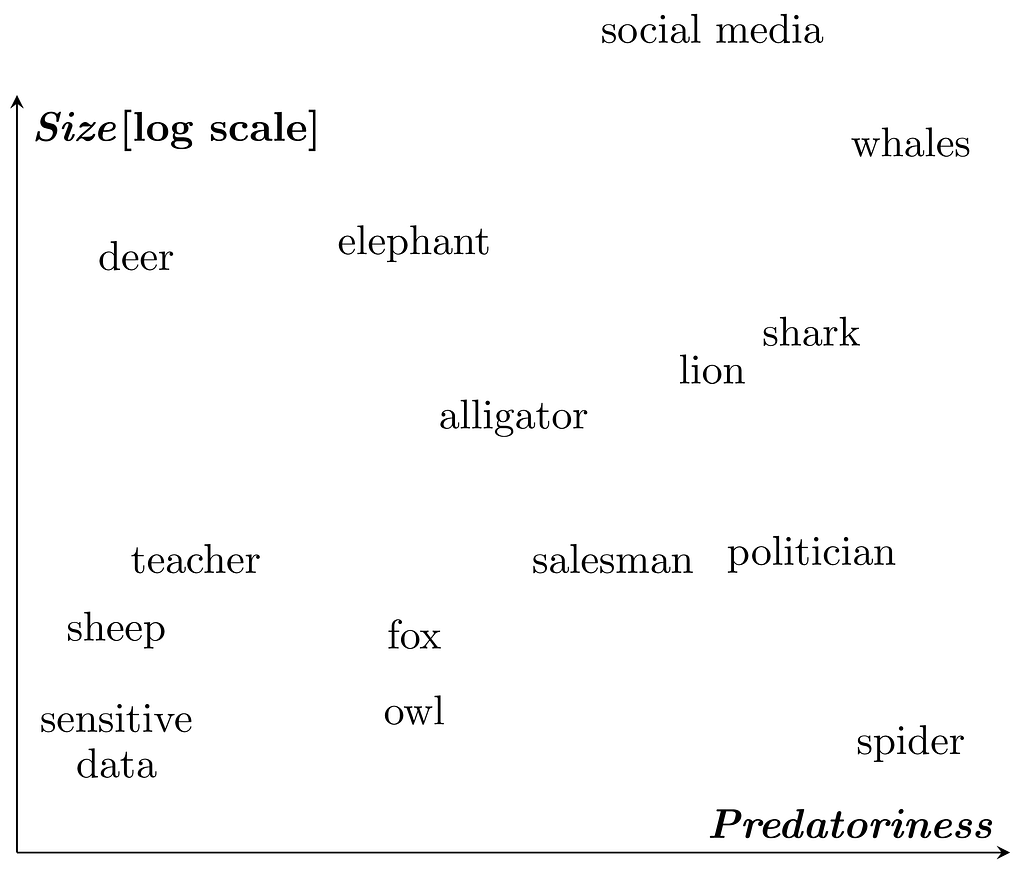

Let’s say you’re doing a study on predatory behavior in nature and society and you’ve got a ton of text material to analyze. To automate the filtering of relevant articles, you can encode words and phrases into the latent space. Following the top-down approach, let this latent space be based on two dimensions: Predatoriness and Size. In a real-world scenario, you’d need more dimensions. I only took two so you could see the latent space for yourself.

Below, you can see some words and phrases represented (embedded) in the introduced latent space. Using an analogy to physics: you can think of each word or phrase as being loaded with two types of charges: predatoriness and size. Words/phrases with similar charges are located close to each other in the latent space.

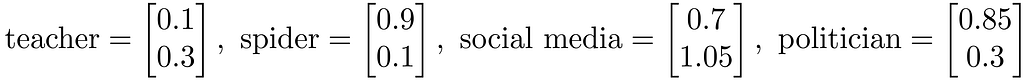

Every word/phrase is assigned numerical coordinates in the latent space.

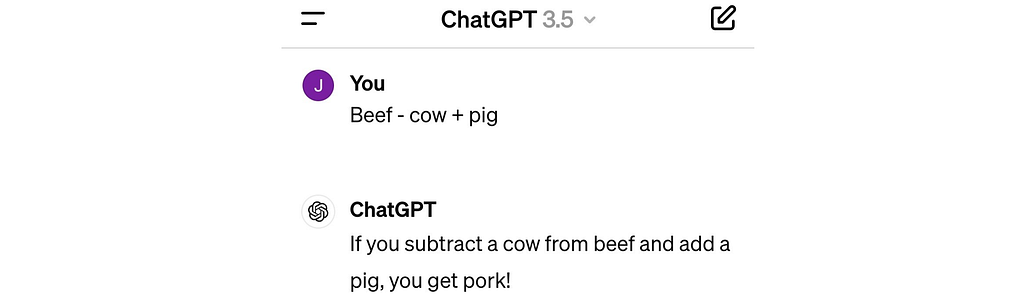

These vectors are latent space representations of words/phrases and are referred to as embeddings. One of the great things about embeddings is that you can perform algebraic operations on them. For example, if you add the vectors representing ‘sheep’ and ‘spider’, you’ll end up close to the vector representing ‘politician’. This justifies the following elegant algebraic expression:

sheep + spider ≈ politician

Do you think this equation makes sense?
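You can play with this kind of vector arithmetic yourself. The sketch below uses made-up (predatoriness, size) coordinates on a 0-to-1 scale; they are not the values from my figure, and I chose them only so that the toy example behaves like the one in the text. The word list and the `nearest_word` helper are likewise illustrative assumptions.

```python
import numpy as np

# Made-up (predatoriness, size) coordinates, for illustration only.
embeddings = {
    "mouse": np.array([0.10, 0.05]),
    "sheep": np.array([0.10, 0.40]),
    "spider": np.array([0.70, 0.05]),
    "wolf": np.array([0.90, 0.40]),
    "politician": np.array([0.80, 0.50]),
    "shark": np.array([1.00, 0.70]),
    "elephant": np.array([0.10, 1.00]),
}


def nearest_word(vector):
    """Return the word whose embedding is closest (Euclidean) to the vector."""
    return min(embeddings, key=lambda w: np.linalg.norm(embeddings[w] - vector))


result = embeddings["sheep"] + embeddings["spider"]
nearest = nearest_word(result)
print(nearest)  # politician
```

Real embeddings have hundreds or thousands of dimensions with no human-readable names, but the lookup works the same way: do the arithmetic, then search for the closest known vector.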

Try out the latent space representation used by ChatGPT. This could be really entertaining.

Final words

The latent space represents data in a manner that highlights properties essential for the current task. Many AI methods, especially generative models and deep neural networks, operate on the latent space representation of data.

An AI model learns the latent space from data, projects the original data into this space (encoding step), performs operations within it, and finally reconstructs the result into the original data format (decoding step).
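Here is a minimal sketch of that learn, encode, operate, decode loop. To keep it short, it uses a linear latent space found with an SVD as a stand-in for a real generative model, and it runs on synthetic 5-dimensional data that mostly lies in a 2-dimensional plane. The class name `LinearLatentModel` and all the numbers are assumptions for illustration.

```python
import numpy as np


class LinearLatentModel:
    """Toy stand-in for a generative model: a linear encoder and decoder."""

    def fit(self, X, latent_dim):
        # Learn the latent space: keep the top principal directions.
        self.mean_ = X.mean(axis=0)
        _, _, Vt = np.linalg.svd(X - self.mean_, full_matrices=False)
        self.basis_ = Vt[:latent_dim]
        return self

    def encode(self, X):
        return (X - self.mean_) @ self.basis_.T

    def decode(self, Z):
        return Z @ self.basis_ + self.mean_


rng = np.random.default_rng(2)

# Synthetic data: 2 hidden factors mixed into 5 observed features, plus noise.
hidden = rng.normal(size=(300, 2))
mixing = rng.normal(size=(2, 5))
X = hidden @ mixing + 0.05 * rng.normal(size=(300, 5))

model = LinearLatentModel().fit(X, latent_dim=2)

z = model.encode(X[:1])                 # encoding step
z_edited = z + np.array([[1.0, 0.0]])   # operation within the latent space
x_new = model.decode(z_edited)          # decoding step

# The representation is compressed, so reconstruction is close but not exact.
recon_error = np.abs(model.decode(model.encode(X)) - X).max()
print("largest reconstruction error:", recon_error)
```

Deep generative models replace the linear encoder and decoder with neural networks, but the overall flow of data is the same.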

My intention was to help you understand the concept of the latent space. To delve deeper into the subject, I suggest exploring more mathematically advanced sources. If you have good mathematical skills, I recommend following the blog of Jakub Tomczak, where he discusses hot topics in the field of generative AI and offers thorough explanations of generative models.

Unless otherwise noted, all images are by the author.

References

[1] Marc Peter Deisenroth, A. Aldo Faisal, Cheng Soon Ong. Mathematics for Machine Learning. Cambridge University Press, 2020.

[2] Jakub M. Tomczak. Deep Generative Modeling. Springer, 2022.

What Is a Latent Space? was originally published in Towards Data Science on Medium.