The first part of a practical guide to using HuggingFace’s CausalLM class

If you’ve played around with recent models on HuggingFace, chances are you encountered a causal language model. When you pull up the documentation for a model family, you’ll get a page with “tasks” like LlamaForCausalLM or LlamaForSequenceClassification.

If you’re like me, going from that documentation to actually finetuning a model can be a bit confusing. This series focuses on CausalLM: this post explains what CausalLM is, and a follow-up post walks through a practical example of finetuning a CausalLM model.

Background: Encoders and Decoders

Many of the best models today, such as Llama 2, GPT-2, or Falcon, are “decoder-only” models. A decoder-only model:

- takes a sequence of previous tokens (AKA a prompt)

- runs those tokens through the model (often creating embeddings from tokens and running them through transformer blocks)

- produces a single output (usually a probability distribution over the next token).

This contrasts with “encoder-only” and hybrid “encoder-decoder” architectures, which take in the entire sequence, not just the previous tokens. This difference predisposes the two architectures towards different tasks. Decoder models are designed for the generative task of writing new text. Encoder-based models are designed for tasks which require looking at a full sequence, such as translation or sequence classification. Things get murky because you can repurpose a decoder-only model to do translation or use an encoder-only model to generate new text.

Sebastian Raschka has a nice guide if you want to dig more into encoders vs decoders. There’s also a Medium article which goes more in-depth into the differences between masked language modeling and causal language modeling.

For our purposes, all you need to know is that:

- CausalLM models generally are decoder-only models

- Decoder-only models look at past tokens to predict the next token

With decoder-only language models, we can think of the next token prediction process as “causal language modeling” because the previous tokens “cause” each additional token.
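To make “only looks at past tokens” concrete, here is a minimal sketch of the visibility pattern, assuming each word is one token. The helper name `causal_mask` is my own for illustration and is not a HuggingFace function; real models apply a pattern like this inside their attention layers.

```python
def causal_mask(seq_len):
    """Return a seq_len x seq_len grid: row i has a 1 for every position token i may look at."""
    # Position i can see itself and everything before it (j <= i), never anything after it.
    return [[1 if j <= i else 0 for j in range(seq_len)] for i in range(seq_len)]


tokens = ["the", "dog", "likes", "food"]
mask = causal_mask(len(tokens))

for token, row in zip(tokens, mask):
    visible = [t for t, allowed in zip(tokens, row) if allowed]
    print(f"{token!r:>8} can see {visible}")
```

Each row only ever reaches backwards, which is why the prediction for the next token can depend on the tokens so far and nothing else.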

HuggingFace CausalLM

In HuggingFace world, CausalLM (LM stands for language modeling) is a class of models which take a prompt and predict new tokens. In reality, we’re predicting one token at a time, but the class abstracts away the tedium of looping through sequences one token at a time. During inference, CausalLMs iteratively predict individual tokens until some stopping condition is met, at which point the model returns the final concatenated tokens.
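The inference loop described above can be sketched in a few lines. This is a toy illustration, not HuggingFace code: `toy_next_token` is a hypothetical stand-in for a trained model (it is just a lookup table), and the `<eos>` token and `max_new_tokens` limit are assumed stopping conditions.

```python
# Hypothetical stand-in for a trained model: maps the last token to the "most likely" next token.
TOY_NEXT_TOKEN = {"the": "dog", "dog": "likes", "likes": "food", "food": "<eos>"}


def toy_next_token(tokens):
    """Pretend model call: pick the next token given all previous tokens."""
    return TOY_NEXT_TOKEN.get(tokens[-1], "<eos>")


def generate(prompt_tokens, max_new_tokens=10, eos_token="<eos>"):
    """Predict one token at a time, feeding each prediction back in as context."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = toy_next_token(tokens)
        if next_token == eos_token:  # stopping condition 1: the model says it is done
            break
        tokens.append(next_token)
    return tokens  # stopping condition 2 is simply running out of max_new_tokens


generated = generate(["the"])
print(generated)  # ['the', 'dog', 'likes', 'food']
```

The caller only ever sees the final concatenated sequence; the one-token-at-a-time loop stays hidden inside `generate`.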

During training, something similar happens: we give the model a sequence of tokens we want it to learn. We start by predicting the second token given the first one, then the third token given the first two tokens, and so on.

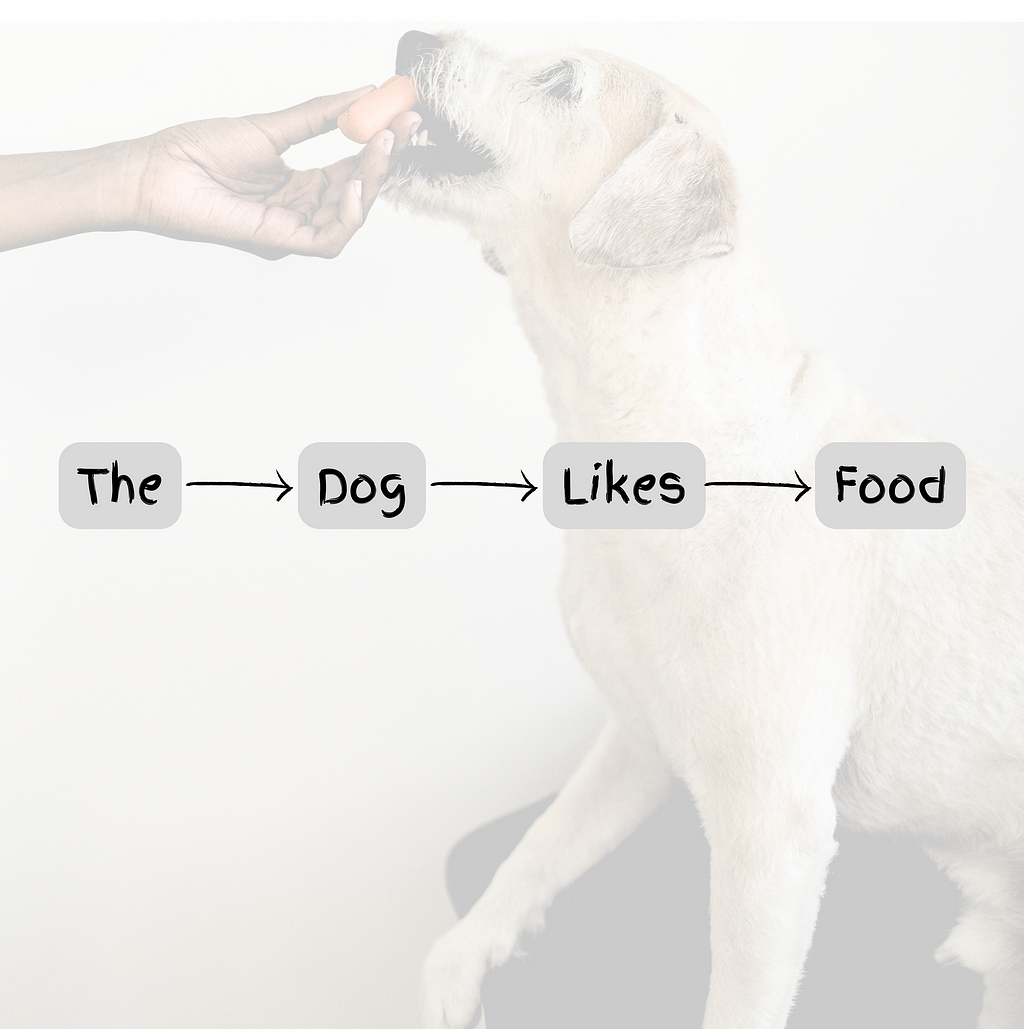

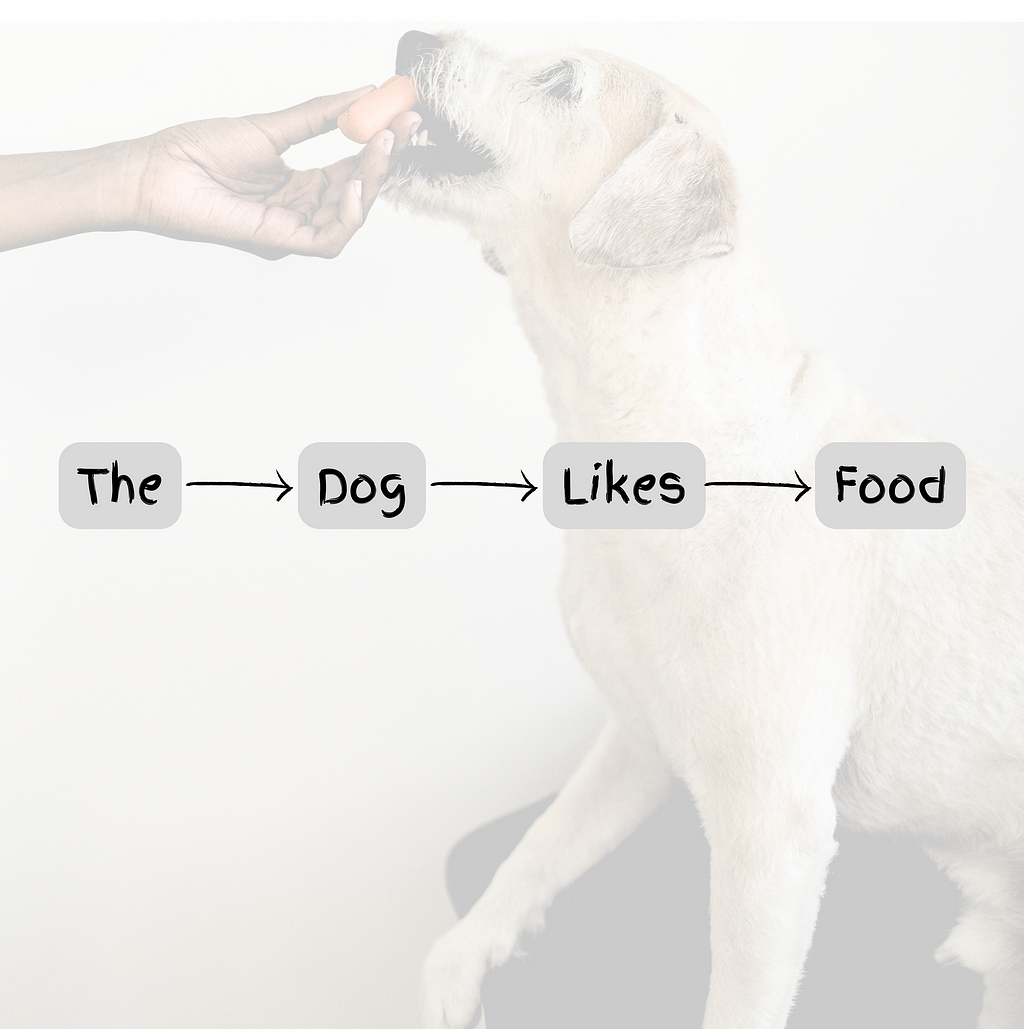

Thus, if you want to learn how to predict the sentence “the dog likes food,” assuming each word is a token, you’re making 3 predictions:

- “the” → dog

- “the dog” → likes

- “the dog likes” → food

During training, you can think about each of the three snapshots of the sentence as three observations in your training dataset. Manually splitting long sequences into individual rows for each token in a sequence would be tedious, so HuggingFace handles it for you.

As long as you give it a sequence of tokens, it will break out that sequence into individual single token predictions behind the scenes.
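If you did want to do that split by hand, it might look something like the sketch below. The function name `next_token_examples` is made up for illustration, and it again assumes one word per token.

```python
def next_token_examples(tokens):
    """Break one sequence into (context, next token) pairs, one pair per prediction."""
    # There is no prediction for the first token because it has no context before it.
    return [(tokens[:k], tokens[k]) for k in range(1, len(tokens))]


examples = next_token_examples(["the", "dog", "likes", "food"])
for context, target in examples:
    print(context, "->", target)
```

A sequence of four tokens yields three training observations, matching the three predictions listed above.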

You can create this ‘sequence of tokens’ by running a regular string through the model’s tokenizer. The tokenizer will output a dictionary-like object with input_ids and an attention_mask as keys, like with any ordinary HuggingFace model.

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")

tokenizer("the dog likes food")

>>> {'input_ids': [5984, 35433, 114022, 17304], 'attention_mask': [1, 1, 1, 1]}

With CausalLM models, there’s one additional step: the model expects a labels key. During training, we use the “previous” input_ids to predict the “current” labels token. However, you do not want to think about labels the way you would for a question answering model, where the first index of labels corresponds with the answer to the input_ids (i.e. that the labels should be concatenated to the end of the input_ids). Rather, you want labels and input_ids to mirror each other with identical shapes. In index notation, to predict the labels token at index k, we use all the input_ids from index 0 through index k-1.

If this is confusing, practically, you can usually just make labels an identical copy of input_ids and call it a day. If you do want to understand what’s going on, we’ll walk through an example.
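Here is a minimal sketch of that “identical copy” setup, using the token ids from the tokenizer output earlier in this post. The offset between inputs and labels is applied inside the model when it computes the loss, so you don’t shift anything yourself; the variable names below (`shifted_targets`, `pairs`) are mine and only mimic that bookkeeping in plain Python.

```python
input_ids = [5984, 35433, 114022, 17304]  # "the dog likes food" from the tokenizer example

# A single-element batch: labels is just a copy of input_ids with the same shape.
batch = {
    "input_ids": [input_ids],
    "attention_mask": [[1] * len(input_ids)],
    "labels": [list(input_ids)],
}

labels = batch["labels"][0]

# The prediction made at position i (having seen input_ids[: i + 1]) is scored against labels[i + 1].
shifted_targets = labels[1:]
pairs = [(input_ids[: i + 1], target) for i, target in enumerate(shifted_targets)]

for context, target in pairs:
    print(context, "->", target)
```

Notice that the first label is never used as a target and the last position’s prediction has nothing to be compared against, which is why four tokens still give three predictions.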

A quick worked example

Let’s go back to “the dog likes food.” For simplicity, let’s leave the words as words rather than assigning them to token numbers, but in practice these would be numbers which you can map back to their true string representation using the tokenizer.

Our input for a single element batch would look like this:

{

"input_ids": [["the", "dog", "likes", "food"]],

"attention_mask": [[1, 1, 1, 1]],

"labels": [["the", "dog", "likes", "food"]],

}

The double brackets denote that technically the shape for the arrays for each key is batch_size x sequence_size. To keep things simple, we can ignore batching and just treat them like one dimensional vectors.

Under the hood, if the model is predicting the kth token in a sequence, it will do so roughly like this:

pred_token_k = model(input_ids[:k]*attention_mask[:k]^T)

Note this is pseudocode.

We can ignore the attention mask for our purposes. For CausalLM models, we usually want the attention mask to be all 1s because we want to attend to all previous tokens. Also note that [:k] really means we use the 0th index through the k-1 index because the ending index in slicing is exclusive.

With that in mind, we have:

pred_token_k = model(input_ids[:k])

The loss would be taken by comparing the true value of labels[k] with pred_token_k.

In reality, both get represented as 1 x v vectors where v is the size of the vocabulary. Each element represents the probability of that token. For the predictions (pred_token_k), these are real probabilities the model predicts. For the true label (labels[k]), we can artificially make it the correct shape by making a vector with 1 for the actual true token and 0 for all other tokens in the vocabulary (a “one-hot” vector).

Let’s say we’re predicting the second word of our sample sentence, meaning k=1 (we’re zero indexing k). The first bullet item is the context we use to generate a prediction and the second bullet item is the true label token we’re aiming to predict.

k=1:

- input_ids[:1] == [the]

- labels[1] == dog

k=2:

- input_ids[:2] == [the, dog]

- labels[2] == likes

k=3:

- input_ids[:3] == [the, dog, likes]

- labels[3] == food

Let’s say k=3 and we feed the model “[the, dog, likes]”. The model outputs:

[P(dog)=10%, P(food)=60%, P(likes)=0%, P(the)=30%]

In other words, the model thinks there’s a 10% chance the next token is “dog,” 60% chance the next token is “food” and 30% chance the next token is “the.”

The true label could be represented as:

[P(dog)=0%, P(food)=100%, P(likes)=0%, P(the)=0%]

In real training, we’d use a loss function like cross-entropy. To keep it as intuitive as possible, let’s just use absolute difference to get an approximate feel for loss. By absolute difference, I mean the absolute value of the difference between the predicted probability and our “true” probability: e.g. absolute_diff_dog = |0.10 - 0.00| = 0.10.

Even with this crude loss function, you can see that to minimize the loss we want to predict a high probability for the actual label (e.g. food) and low probabilities for all other tokens in the vocabulary.
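As a quick sanity check, here is the arithmetic for the k=3 example in plain Python. The absolute-difference loss is the crude stand-in described above, summed over the toy four-word vocabulary; I’ve also included the cross-entropy value for comparison, which for a one-hot label reduces to the negative log of the probability given to the true token. The variable names are my own.

```python
import math

vocab = ["dog", "food", "likes", "the"]
predicted = [0.10, 0.60, 0.00, 0.30]  # model output for the context [the, dog, likes]
true_token = "food"

# One-hot "true" distribution: 1 for the actual next token, 0 for everything else.
one_hot = [1.0 if token == true_token else 0.0 for token in vocab]

# Crude loss: sum of |predicted - true| over the vocabulary.
abs_diff_loss = sum(abs(p - t) for p, t in zip(predicted, one_hot))

# Cross-entropy with a one-hot label: -log(probability assigned to the true token).
cross_entropy = -math.log(predicted[vocab.index(true_token)])

print(round(abs_diff_loss, 2))  # 0.8
print(round(cross_entropy, 3))  # 0.511
```

Both numbers shrink towards 0 as the probability on “food” approaches 100%, which is the behavior we want from a loss.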

For instance, let’s say after training, when we ask our model to predict the next token given [the, dog, likes], our outputs look like the following:

Our loss is now smaller because the model has learned to predict “food” with high probability given those inputs.

Training would just be repeating this process of trying to align the predicted probabilities with the true next token for all the tokens in your training sequences.

Conclusion

Hopefully you’re getting an intuition about what’s happening under the hood to train a CausalLM model using HuggingFace. You might have some questions like “why do we need labels as a separate array when we could just use the kth index of input_ids directly at each step? Is there any case when labels would be different than input_ids?”

I’m going to leave you to think about those questions and stop there for now. We’ll pick back up with answers and real code in the next post!

Training CausalLM Models Part 1: What Actually Is CausalLM? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
