Exploring the THInC framework for humor detection

Humor is an essential part of what makes humans human, but it is also something many contemporary AI models sorely lack. They haven’t got a funny bone in them, not even a humerus. Creating and detecting jokes might seem unimportant, but an LLM could likely use this knowledge to craft better, more human-like responses to questions. Understanding human humor also indicates a rudimentary understanding of human emotion and much greater functional competence.

Unfortunately, research into humor detection and classification still has several glaring issues. Most existing research either fails to apply existing linguistic and psychological theory to computation or is not interpretable enough to connect the model’s results to the theories of humor. That’s where the THInC (Theory-driven Humor Interpretation and Classification) model comes in. This new approach, proposed by researchers from the University of Antwerp, the University of Leuven, and the University of Montreal at the 2024 European Conference on AI, combines existing theories of humor with Generalized Additive Models and pre-trained language transformers to create a highly accurate and interpretable humor detection methodology.

In this article, I aim to summarize the THInC framework and how De Marez et al. approach the difficult problem of humor detection. I recommend checking out their paper for more information.

Humor Theories

Before we dive into the computational approach of the paper, we first need to answer the question: what makes something funny? One place to look is humor theories [2], frameworks that aim to explain why a joke is perceived as a joke. There are countless humor theories, but three major ones tend to take the spotlight:

Incongruity Theory: We find humor in events that are surprising or that defy our expectations, as long as they are not outright mortifying. The deviation can be a small departure from the norm or a massive shift in tone. A lot of absurd humor fits under this umbrella.

Superiority Theory: We find humor in the misfortune of others. People often laugh at the expense of someone deemed to be lesser, such as a wrongdoer. Home Alone, where the audience laughs at the burglars' mishaps, is an example.

Relief Theory: Humor and laughter are mechanisms people develop to release their pent-up emotions. This is best demonstrated by comic relief characters in fiction, who break up the tension in a scene with a well-timed (or not so well-timed) joke.

Encoding Humor Theory

The most significant difficulty researchers have had with incorporating humor into AI is determining how to distill it into a computable format. Because the theories are vague, each one can be stretched arbitrarily to fit any number of jokes. This poses a problem for anyone attempting to detect humor. How can one convert something as qualitative as humor into numerical values?

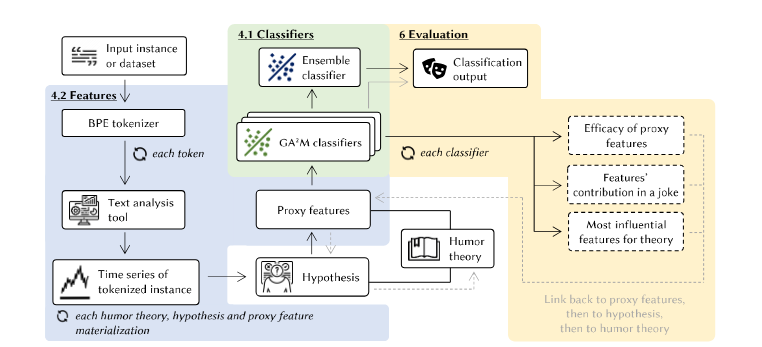

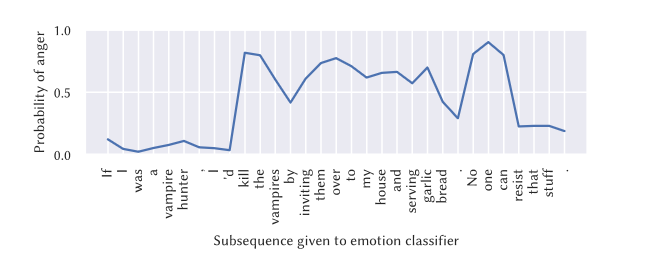

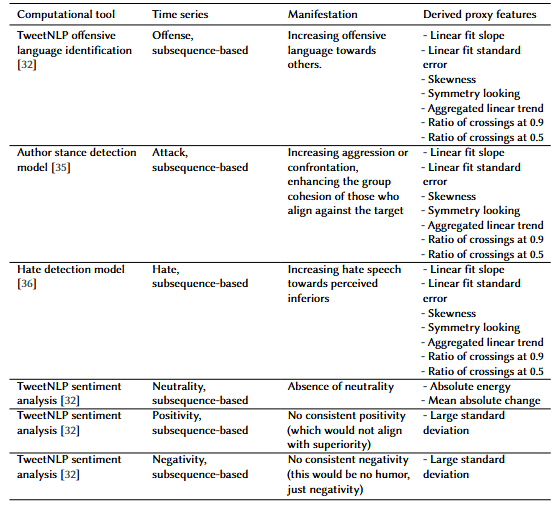

De Marez et al. took a clever approach to encoding the theories. Jokes usually unfold linearly, with a clear start and end, so the researchers decided to transform the text into a time series. By tokenizing the sentence and using tools like TweetNLP’s sentiment analysis and emotion recognition models, they developed a way to map how different emotions change over the course of a given sentence.
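To make the time-series idea concrete, here is a minimal sketch. It assumes the series is built by scoring each growing prefix of the sentence, which may differ from how the paper actually does it. The scorer `toy_optimism_score` and its word list are invented stand-ins for a real emotion model such as one of TweetNLP's.

```python
from typing import Callable, List

# Invented word weights, used only so the example runs without a real model.
TOY_OPTIMISM = {"hope": 0.6, "great": 0.8, "win": 0.7, "lost": -0.5, "terrible": -0.8}


def toy_optimism_score(text: str) -> float:
    """Hypothetical scorer: mean toy weight over the words in `text`."""
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(TOY_OPTIMISM.get(w.strip(".,!?"), 0.0) for w in words) / len(words)


def emotion_time_series(
    text: str, scorer: Callable[[str], float] = toy_optimism_score
) -> List[float]:
    """Score each growing prefix of the sentence (assumed scheme, see lead-in)."""
    tokens = text.split()
    return [scorer(" ".join(tokens[:i])) for i in range(1, len(tokens) + 1)]


series = emotion_time_series("I lost my keys but hope is great")
print(series)  # one optimism value per token position
```

In practice the scorer would be swapped for a real sentiment or emotion classifier, with one series produced per emotion.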

From here, they generated several hypotheses to serve as “manifestations” of the humor theories, which they could then turn into features. For example, one hypothesis/manifestation of the relief theory is an increase in optimism within the joke. Each manifestation was then converted into numerical proxy features, which serve as a representation of both the humor theory and the hypothesis. The example of increasing optimism would be represented by the slope of a linear fit to the optimism time series. The group defined several hypotheses for every humor theory, converted each to a proxy feature, and used those proxy features to train a model for each theory.

For example, the model for the superiority theory would use the proxy features representing offense and attack. In contrast, the model for the relief theory would use features representing a change in optimism or joy.
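The slope-of-a-linear-fit feature can be sketched in a few lines. This is an illustration, not the paper's code: the function name `linear_fit_slope` and the sample series are assumptions, and token position is used as the time axis.

```python
from typing import Sequence


def linear_fit_slope(series: Sequence[float]) -> float:
    """Ordinary least-squares slope of `series` against positions 0..n-1."""
    n = len(series)
    if n < 2:
        return 0.0  # a single point has no trend
    mean_x = (n - 1) / 2
    mean_y = sum(series) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(series))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var


# Hypothetical optimism scores over the course of a joke.
optimism = [0.10, 0.15, 0.12, 0.40, 0.55]
print(linear_fit_slope(optimism))  # positive value: optimism rises overall
```

A positive slope would count as evidence for the "increase in optimism" manifestation, and a negative slope as evidence against it.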

Methods

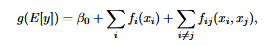

Once the proxy features were calculated, De Marez et al. used a Generalized Additive Model (GAM) with pairwise interactions, also known as a GA2M, to classify humor in an interpretable way.

A Generalized Additive Model (GAM) is an extension of generalized linear models (GLMs) that allows for non-linear relationships between the features and the output [3]. Rather than summing linear terms, a GAM sums nonlinear functions of the individual features, such as splines and polynomials, to fit the data. A good comparison is a scoreboard. Each function in the GAM is a separate player that individually adds to or subtracts from the overall score. The final score is the prediction the model makes.
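In symbols (the notation here is mine, not the paper's), a GAM with link function $g$, intercept $\beta_0$, and one learned shape function $f_j$ per feature $x_j$ can be written as:

$$
g\big(\mathbb{E}[y \mid x]\big) = \beta_0 + \sum_{j=1}^{p} f_j(x_j)
$$

A GLM is the special case in which every shape function is linear, $f_j(x_j) = \beta_j x_j$. For binary classification such as joke versus non-joke, $g$ is typically the logit function.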

A GA2M extends the standard GAM by incorporating pairwise terms, enabling it to capture not just how individual features contribute to the predictions but also how pairs of features interact with each other [1]. Looking back to the scoreboard example, a GA2M would be what happens if we included teamwork in the mix, where features can “interact” with each other.
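The scoreboard picture can be sketched as a toy scorer. Everything in it is invented for illustration: the feature names, the shape functions, and the intercept are hypothetical, whereas a trained model would learn them from data.

```python
import math
from typing import Dict

INTERCEPT = -0.2  # baseline score before any feature contributes


def f_optimism_slope(x: float) -> float:
    """Hypothetical main-effect shape function."""
    return 2.0 * x


def f_joy_change(x: float) -> float:
    """Hypothetical nonlinear main-effect shape function."""
    return 0.5 * x * x


def f_optimism_x_joy(a: float, b: float) -> float:
    """Hypothetical pairwise term: the 'teamwork' between two features."""
    return 0.3 * a * b


def ga2m_contributions(optimism_slope: float, joy_change: float) -> Dict[str, float]:
    """Return every term's contribution to the score separately."""
    return {
        "intercept": INTERCEPT,
        "optimism_slope": f_optimism_slope(optimism_slope),
        "joy_change": f_joy_change(joy_change),
        "optimism_slope x joy_change": f_optimism_x_joy(optimism_slope, joy_change),
    }


def ga2m_probability(optimism_slope: float, joy_change: float) -> float:
    """Sum the contributions and squash the total with a logistic link."""
    logit = sum(ga2m_contributions(optimism_slope, joy_change).values())
    return 1.0 / (1.0 + math.exp(-logit))


for name, value in ga2m_contributions(0.5, 1.0).items():
    print(f"{name:>28}: {value:+.2f}")
print("P(joke) =", round(ga2m_probability(0.5, 1.0), 3))
```

Because the prediction is just a sum of named terms, each line of the printout doubles as an explanation of that term's share of the final score.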

The specific GAM chosen by De Marez et al. is the EBM (Explainable Boosting Machine) from the InterpretML library. An EBM learns each feature's function with gradient boosting, cycling through the features one at a time, which significantly improves performance over a traditional GAM while keeping the model additive. For more details, refer to the InterpretML documentation or the video explanation by its developer, both linked under Resources.

Why GA2M?

Interpretability: GAMs, and by extension GA2Ms, allow for interpretability at the feature level. An outside party can see the impact that each individual proxy feature has on the results.

Flexibility: By incorporating interaction terms, GA2M enables the exploration of relationships between different features. This is particularly useful in humor classification. For example, it can help us understand how optimism relates to positivity when following the relief theory.

At the end of the training, the group can then combine the results from each of the classifiers to determine the relative impact of each emotion and each humor theory on whether or not a phrase will be perceived as a joke.

Results

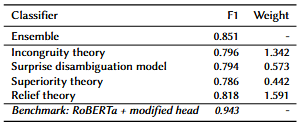

The model was remarkably accurate, with the combined model reaching an F1 score of 85%, indicating that it balances high precision with high recall. The individual models also performed reasonably well, with F1 scores ranging from 79% to 81%.
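As a reminder of what the F1 score measures, the sketch below computes precision, recall, and F1 from confusion-matrix counts. The counts are invented for illustration and are not taken from the paper.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and their harmonic mean (F1)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 85 jokes caught, 15 false alarms, 15 jokes missed.
p, r, f1 = precision_recall_f1(tp=85, fp=15, fn=15)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Because F1 is a harmonic mean, it drops sharply when either precision or recall is low, so a high F1 means neither was sacrificed for the other.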

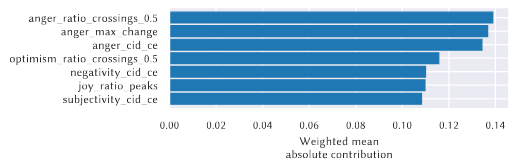

Furthermore, the model keeps this score while being very interpretable. Below, we can see each proxy feature’s contribution to the result.

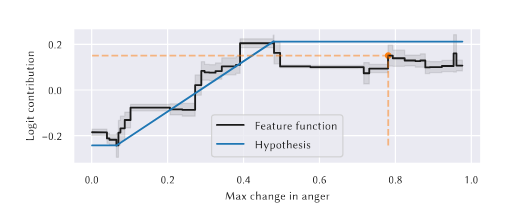

A GA2M also allows for feature-level analysis of contribution where the feature function can be graphed to determine the contribution of a feature in relation to its value. Figure 6 below shows an example of this. The graph shows how an increased anger change also contributes to a higher likelihood of being classified as a joke under the incongruity theory.

Despite the framework’s strong performance, there is still room for improvement. Possible directions include revisiting and revising existing humor theories and making the proxy features more robust to the noise present in the text.

Conclusion

Humor is still a nebulous aspect of the human experience. Our current humor theories are vague and overly flexible, which makes them difficult to convert into a computational model. The THInC framework is a promising step in the right direction. There’s no doubt that the framework has its issues, but many of those flaws stem from the unclear nature of humor itself. It’s hard to get a machine to know humor when humans still haven’t figured it out. The integration of sentiment analysis and emotion recognition into humor classification is a novel way to bring humor theories into humor detection, and the use of a GA2M is an ingenious way to capture the many nuances of humor while keeping the model interpretable.

Resources

- THInC Github Repository: https://github.com/Victordmz/thinc-framework/tree/1

- THInC Paper: https://doi.org/10.48550/arXiv.2409.01232

- Explanation of EBM Video: https://youtu.be/MREiHgHgl0k?si=_zHOsZKlzJOD8k9m

- EBM Docs: https://interpret.ml/docs/ebm.html

References

[1] De Marez, V., Winters, T., & Terryn, A. R. (2024). THInC: A Theory-Driven Framework for Computational Humor Detection. arXiv preprint arXiv:2409.01232.

[2] Nijholt, A., Stock, O., Dix, A., & Morkes, J. (2003). Humor modeling in the interface. In CHI ’03 Extended Abstracts on Human Factors in Computing Systems (pp. 1050–1051).

[3] Hastie, T., Tibshirani, R., & Friedman, J. H. (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction (2nd ed.). New York: Springer.

Think You Know a Joke? AI for Humor Detection. was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
