In the emerging era of AI development, what should be the focal points for data science teams?

Until recently, AI models were accessible only through solutions built by data scientists or other service providers. Today, AI is being democratized and made available to non-AI experts, allowing them to develop their own AI-driven solutions.

What used to take a data science team weeks or months (collecting data, annotating it, fitting a model, and deploying it) can now be built in a few minutes with simple prompts and the latest generative AI models. As AI technology progresses, so does the expectation to adopt it and build smarter AI-driven products, and we as AI experts are responsible for supporting that adoption across the organization.

We at Wix are no strangers to this transformation. Since 2016 (well before ChatGPT's release in November 2022), our data science team has been crafting numerous impactful AI-powered features. More recently, with the advent of the GenAI revolution, an increasing number of roles within Wix have embraced this trend. Together, we've successfully rolled out many additional features: empowering website creation with chatbots, enriching content creation capabilities, and optimizing how agencies work.

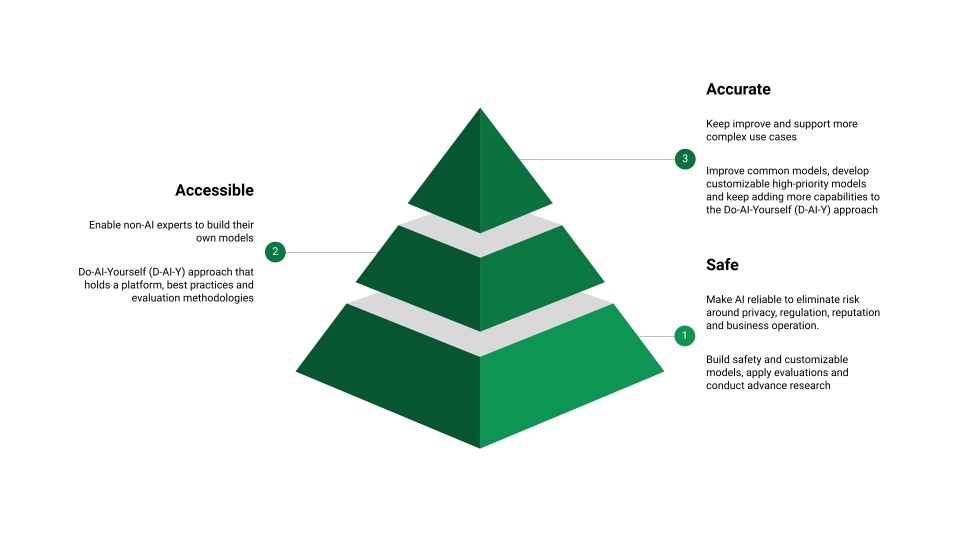

As the data science group at Wix, we are responsible for ensuring the quality and widespread adoption of AI. Recognizing the need to actively contribute to the democratization of AI, we have identified three key roles that we must take on and lead: 1. Ensuring Safety, 2. Enhancing Accessibility, and 3. Improving Accuracy.

Data Science + Product teams = AI Impact

The art of building AI models lies in making them generalize to unseen edge cases. This requires a data science practice that combines business and data understanding with iterative evaluation and tuning.

Democratizing AI for product teams (product managers, developers, analysts, UX designers, content writers, etc.) can shorten the time it takes to ship AI-driven applications, but it requires collaboration with data science to establish the right processes and techniques.

In the SWOT diagrams below, we can see how data science and product teams offset each other's weaknesses and threats with their own strengths and opportunities, and together ship impactful, reliable, cutting-edge AI products on time.

1. Ensuring AI Safety

One of the most discussed topics these days is the safety of using AI. When focusing on product-oriented solutions, there are a few areas we have to consider:

- Regulation: models can make decisions that discriminate against certain populations, for example by giving discounts based on gender or by discriminating by gender in high-paying job ads. Also, when using third-party tools such as external large language models (LLMs), confidential company data or users' personally identifiable information (PII) can be leaked. Recently, Nature argued that applications based on LLMs should be subject to regulatory oversight.

- Reputation: user-facing models can make mistakes and produce bad experiences. For example, an LLM-based chatbot can give wrong, outdated, or toxic and racist answers, or inconsistent ones, as in the case of Air Canada's chatbot.

- Damage: decision-making models can make wrong predictions that affect business operations; for example, a model that predicts house prices caused a $500M loss.

Data scientists understand the uncertainty of AI models and can offer different solutions that manage such risks and allow safe use of the technology, for example:

- Safe modeling: develop models that mitigate the risk, for example, a PII-masking model and a misuse-detection model.

- Evaluation at scale: apply advanced evaluation techniques to monitor and analyze the model's performance and the types of errors it makes.

- Model customization: work with clean, annotated data, filter out harmful and irrelevant data points, and build smaller, more customizable models.

- Ethics research: read and apply the latest research on AI ethics and derive best practices from it.
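As an illustration of the "safe modeling" idea, here is a minimal sketch of masking PII before a prompt is sent to an external LLM. It assumes plain regular expressions for two easily patterned PII types (emails and phone numbers); a real PII-masking model would typically be a trained entity recognizer that also catches names, addresses, and IDs. The function name `mask_pii` and the patterns are hypothetical, not Wix's implementation.

```python
import re

# Hypothetical, deliberately simple patterns. A production PII-masking model
# would usually rely on a trained NER model rather than regexes alone.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> str:
    """Replace every match of each PII pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com or +1 (555) 123-4567 about the refund."
    # Mask before the text leaves the company boundary (e.g., to a third-party LLM).
    print(mask_pii(prompt))  # Contact [EMAIL] or [PHONE] about the refund.
```

Regexes are cheap and predictable, which makes them a reasonable first layer; the trade-off is recall, which is exactly where a dedicated masking model earns its keep.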

2. Enhancing AI Accessibility

AI should be easy to use and available for non-AI experts to integrate into their products. Until recently, integrating AI meant using online or offline models developed by data scientists: reliable, use-case-specific models whose predictions are readily accessible.

Their main drawback, however, is that non-AI experts cannot customize them. This is why we came up with a Do-AI-Yourself (D-AI-Y) approach, which lets you build your own model and then deploy it as a service on a platform.

The goal is to build simple yet valuable models quickly with little AI expertise. If a model requires improvement or further research, a data scientist is on board to help.

The D-AI-Y approach consists of the following components:

- Education: teach the organization about AI and how to use it properly. At Wix, we have an AI ambassador program that serves as a gateway of AI knowledge between the different groups at Wix and the Data Science group: group representatives are trained and kept up to date on new AI tools and best practices in order to increase the scale, quality, and velocity of AI-based projects at Wix.

- Platform: provide a way to connect to LLMs and write prompts. The platform should account for the cost and scale of the models and provide access to internal data sources. At Wix, the data science group built an AI platform that connects different roles at Wix to models from a variety of vendors (to reduce LLM vendor lock-in), along with other capabilities such as semantic search. The platform acts as a centralized hub where everyone can use and share their models, and where those models are governed, monitored, and served in production.

- Best practices and tools: guidance for building simple, straightforward models, using prompts or dedicated models, to solve a specific learning task such as classification, Q&A bots, recommender systems, or semantic search.

- Evaluation: for each learning task, we suggest a specific evaluation process and also provide data curation guidance when needed.
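To make the platform's "reduce LLM vendor lock-in" point concrete, here is a minimal sketch of a vendor-agnostic gateway. Everything in it is hypothetical: the `LLMClient` protocol, the stub vendors `EchoVendorA` and `EchoVendorB` (stand-ins for real SDK wrappers), and the `LLMGateway` class are illustrative assumptions, not the API of Wix's platform. Product code depends only on `generate`, so switching vendors becomes a configuration change, and the per-provider call counter hints at how a central hub can track cost and scale.

```python
from dataclasses import dataclass, field
from typing import Protocol


class LLMClient(Protocol):
    """Minimal interface every (hypothetical) vendor adapter implements."""

    def complete(self, prompt: str) -> str: ...


class EchoVendorA:
    """Stub standing in for a real vendor SDK wrapper."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class EchoVendorB:
    """A second stub vendor, to show that callers never change."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


@dataclass
class LLMGateway:
    """Central hub: routes prompts to a registered vendor and counts usage."""

    clients: dict[str, LLMClient] = field(default_factory=dict)
    calls: dict[str, int] = field(default_factory=dict)

    def register(self, name: str, client: LLMClient) -> None:
        self.clients[name] = client

    def generate(self, prompt: str, provider: str) -> str:
        if provider not in self.clients:
            raise KeyError(f"Unknown provider: {provider}")
        # Count calls per provider so cost and scale can be monitored centrally.
        self.calls[provider] = self.calls.get(provider, 0) + 1
        return self.clients[provider].complete(prompt)


if __name__ == "__main__":
    gateway = LLMGateway()
    gateway.register("a", EchoVendorA())
    gateway.register("b", EchoVendorB())
    print(gateway.generate("Summarize this ticket", provider="a"))
    print(gateway.generate("Summarize this ticket", provider="b"))
    print(gateway.calls)  # {'a': 1, 'b': 1}
```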

For example, suppose a company builds many Q&A models using Retrieval-Augmented Generation (RAG), an approach that answers a question by first retrieving relevant evidence and then adding that evidence to the LLM's prompt so the model can generate a reliable answer grounded in it.
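The retrieve-then-augment flow can be sketched in a few lines. This is a minimal illustration under loud assumptions: a toy bag-of-words cosine similarity stands in for a real embedder and vector DB, the documents are made up, and the sketch stops at prompt construction (the LLM call itself is omitted). The helper names `embed`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the question."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question: str, evidence: list[str]) -> str:
    """Augmentation step: put the retrieved evidence into the generation prompt."""
    context = "\n".join(f"- {e}" for e in evidence)
    return (
        "Answer the question using only the evidence below. "
        "If the evidence is insufficient, say so.\n"
        f"Evidence:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Made-up documents, for illustration only.
    docs = [
        "You can connect a custom domain from the Domains page in your dashboard.",
        "Refunds for premium plans are available within 14 days of purchase.",
        "Blog posts can be scheduled from the blog editor.",
    ]
    question = "How do I get a refund for my premium plan?"
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

Instructing the model to answer only from the evidence, and to say when it is insufficient, is what turns retrieval into grounding; the quality of `retrieve` then largely bounds the quality of the answer.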

So, instead of just connecting black boxes and hoping for the best, the data science team can provide: 1. educational material and lectures on RAG, for example this lecture I gave about using semantic search to improve RAG; 2. a platform equipped with a suitable vector DB and a relevant embedder; 3. guidelines for building RAG, covering how to retrieve the evidence and how to write the generation prompt; 4. guidelines and tools that support proper evaluation of RAG, as explained in this TDS post and in the RAG triad by TruLens.

This allows many roles in the company to build their own RAG-based apps in a reliable, accurate, and scalable way.
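Part of the RAG evaluation guidance can be made concrete for the retrieval step alone. As a minimal sketch, assuming a small labeled set of questions paired with the id of the document that answers each one, hit rate@k and mean reciprocal rank (MRR@k) measure how often, and how high, the retriever surfaces the right evidence. This is not TruLens's API, and it covers only retrieval, not the full RAG triad (context relevance, groundedness, answer relevance), which is usually scored with LLM-based judges. The function name `evaluate_retriever` and the fake rankings are hypothetical.

```python
from typing import Callable


def evaluate_retriever(
    retriever: Callable[[str, int], list[str]],
    labeled: list[tuple[str, str]],
    k: int = 3,
) -> dict[str, float]:
    """Compute hit rate@k and MRR@k.

    retriever(question, k) returns ranked document ids;
    labeled holds (question, id_of_relevant_doc) pairs.
    """
    if not labeled:
        raise ValueError("labeled set must not be empty")
    hits = 0
    reciprocal_ranks = 0.0
    for question, relevant_id in labeled:
        ranked = retriever(question, k)[:k]
        if relevant_id in ranked:
            hits += 1
            # Rank is 1-based: a hit in first position contributes 1.0.
            reciprocal_ranks += 1.0 / (ranked.index(relevant_id) + 1)
    n = len(labeled)
    return {"hit_rate@k": hits / n, "mrr@k": reciprocal_ranks / n}


if __name__ == "__main__":
    # Hypothetical fixed rankings standing in for a real retriever.
    fake_rankings = {
        "q1": ["d1", "d2", "d3"],
        "q2": ["d3", "d2", "d1"],
        "q3": ["d2", "d3", "d1"],
    }
    labeled = [("q1", "d1"), ("q2", "d2"), ("q3", "d4")]
    # hit_rate@k is about 0.667 and mrr@k is 0.5 for this toy data.
    print(evaluate_retriever(lambda q, k: fake_rankings[q][:k], labeled, k=3))
```

Tracking these numbers per app, over a labeled set that grows with real user questions, gives product teams a regression signal whenever they swap the embedder, chunking, or vector DB.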

3. Improving AI Accuracy

As AI adoption grows, so does the expectation to build more complex, accurate, and advanced solutions. Ultimately, there is a limit to how much a non-AI expert can improve a model's performance, since doing so requires a deeper understanding of how models work.

To make models more accurate, the data science group focuses on the following efforts:

- Improve common models: customize and improve models so that they incorporate Wix knowledge and outperform general-purpose, out-of-the-box external models.

- Customize models: high-priority, challenging models that the D-AI-Y approach cannot support. Unlike common models, these are highly use-case-specific and require customization.

- Improve the D-AI-Y: as we improve our D-AI-Y platform, best practices, tools, and evaluation, AI becomes more accurate; therefore, we keep investing research time and effort in finding innovative ways to make it better.

Conclusion

After years of waiting, the democratization of AI is happening, so let's embrace it! Product teams' inherent understanding of the business, together with the ease of use of GenAI, allows them to build AI-driven features that boost their products' capabilities.

Because non-AI experts lack a deep understanding of how AI models work and how to evaluate them properly at scale, they may run into problems with the reliability and accuracy of their results. This is where the data science group can step in: guiding teams on how to use models safely, creating mitigation services where needed, sharing the latest best practices around new AI capabilities, evaluating performance, and serving models at scale.

When an AI feature shows strong business impact, product teams quickly shift their efforts toward improving its results. This is where data scientists can offer advanced approaches to improve performance, since they understand how these models work.

To conclude, the role of data science in democratizing AI is crucial, as it bridges the gap between AI technology and those who may not have extensive AI expertise. Through collaboration between data scientists and product teams, we can harness the strengths of both fields to create safe, accessible, and accurate AI-driven solutions that drive innovation and deliver exceptional user experiences. With ongoing advancements and innovations, the future of democratized AI holds great potential for transformative change across industries.

*Unless otherwise noted, all images are by the author

The Role of Data Science in Democratizing AI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
