Prompting ChatGPT and other chat-based language AI — and why you should (not) care about it

Foreword

This article sheds some light on the question of how to “talk” to Large Language Models (LLM) that are designed to interact in conversational ways, like ChatGPT, Claude and others, so that the answers you get from them are as useful as possible for the task at hand. This particular communication from human to the language chatbot is what’s typically referred to as prompting. With this article, I mean to give people with no computer science background a compact overview of the topic so that everyone can understand. It can also help businesses to contextualize of what (not) to expect from their LLM adaption endeavors.

Prompting is the first of four steps you can take when climbing the ladder of adapting language models for your businesses’ custom use. I have introduced the overall 4 Step Framework of unlocking custom LLM in my previous post. If you haven’t already it might be helpful to read it first so that you can fit the ideas presented here into a larger context.

The Business Guide to Tailoring Language AI

Introduction

Shortly after the mass market introduction of ChatGPT, a new, hot profession has entered the AI scene: Prompt engineering. These AI “whisperers”, i.e. people that have certain skills to “prompt”, that is, talk to language AI so that it responds in useful ways, have become highly sought-after (and generously paid) new roles. Considering that a main building block of proper prompting is simply (or not so simply) giving precise instructions (see below), I must confess that I was surprised by this development (regardless of the fact that prompt engineering certainly involves more than just “whispering”): Isn’t communicating in a precise and concise manner a basic professional skill that we all should possess? But then again, I was reflecting on how important it is to have well-crafted requirements in software development, and “requirement engineering” roles have been an important ingredient of successful software development projects for a while now.

I observe a level of uncertainty and “best guessing” and even contradictions in the topic of LLM and prompting that I have not yet experienced in any IT-related subject. This has to do with the type and size of AI models and their stochastic characteristics, which is beyond the scope of this article. Considering the 1.76 trillion parameters of models like GPT-4, the number of possible combinations and paths from input (your “prompt”) to output (the model response) is virtually indefinite and non-deterministic. Hence, applications treat these models mainly as black boxes, and related research focuses on empirical approaches such as benchmarking their performance.

The sad news is that I cannot present you a perfect one-size-fits-all prompting solution that will forever solve your LLM requirements. Add to this that different models behave differently, and you may understand my dilemma. There’s some good news, though: On the one hand, you can, and should, always consider some basic principles and concepts that will help you optimize your interactions with the machines. Well-crafted prompts gets you farther than poor ones, and this is why it is well worthwhile to dig a bit deeper into the topic. On the other hand, it may not even be necessary to worry too much about prompting at all, which saves you valuable computing time (literally, CPU/GPU and figuratively, in your own brain).

Start with Why

Here I am not referring to Simon Sinek’s classic TEDx business advice. Instead, I encourage you to curiously wonder why technology does what it does. I strongly believe in the notion that if you understand at least a bit of the inner workings of software, it will tremendously help you in its application.

So how, in principle, is the input (the prompt) related to the output (the response), and why is it that proper prompts result in better suited responses? To figure this out, we need to have at least a superficial look at the model architecture and its training and fine-tuning, without needing to understand the details of the impressive underlying concepts like the infamous Transformer Architecture and Attention Mechanisms which ultimately caused the breakthrough of ChatGPT-like Generative AI as we know it today.

For our purposes, we can look at it from two angles:

How does the model retrieve knowledge and generate its response?

and closely related

How has the model been trained and fine-tuned?

It is important to understand that an LLM is in essence a Deep Neural Network and as such, it works based on statistics and probabilities. Put very simplistically, the model generates output which reflects the closest match to the context, based on its knowledge it has learned from vast amounts of training data. One of the building blocks here are so-called Embeddings, where similar word meanings are (mathematically) close to each other, even though the model does not actually “understand” those meanings. If this sounds fancy, it kinda is, but at same time, it is “only” mathematics, so don’t be afraid.

When looking at training, it helps considering the training data and process a language model has gone through. Not only has the model seen vast amounts of text data. It has also learned what makes out a high rated response to a specific question, for instance on sites like StackOverflow, and on high-quality Q&A assistant documents written for model training and tuning. In addition, in its fine-tuning stage, it learned and iteratively adapted its optimal responses based on human feedback. Without all this intense training and tuning efforts, the model might answer a question like “what is your first name” simply with “what is your last name”, because it has seen this frequently on internet forms [1].

Where I am trying to get at is this: When interacting with natural language AI, always keep in mind what and how the model has learned and how it gets to its output, given your input. Even though no one really knows this exactly, it is useful to consider probable correlations: Where and in what context could the model have seen input similar to yours before? What data has been available during the pre-training stage, and in which quality and quantity? For instance: Ever wondered why LLMs can solve mathematical equations (not reliably, however, sometimes still surprisingly), without inherent calculation capabilities? LLMs don’t calculate, they match patterns!

Prompting 101

There is a plethora of Prompting techniques, and plenty of scientific literature that benchmarks their effectiveness. Here, I just want to introduce a few well-known concepts. I believe that once you get the general idea, you will be able to expand your prompting repertoire and even develop and test new techniques yourself.

Ask and it will be given to you

Before going into specific prompting concepts, I would like to stress a general idea that, in my opinion, cannot be stressed enough:

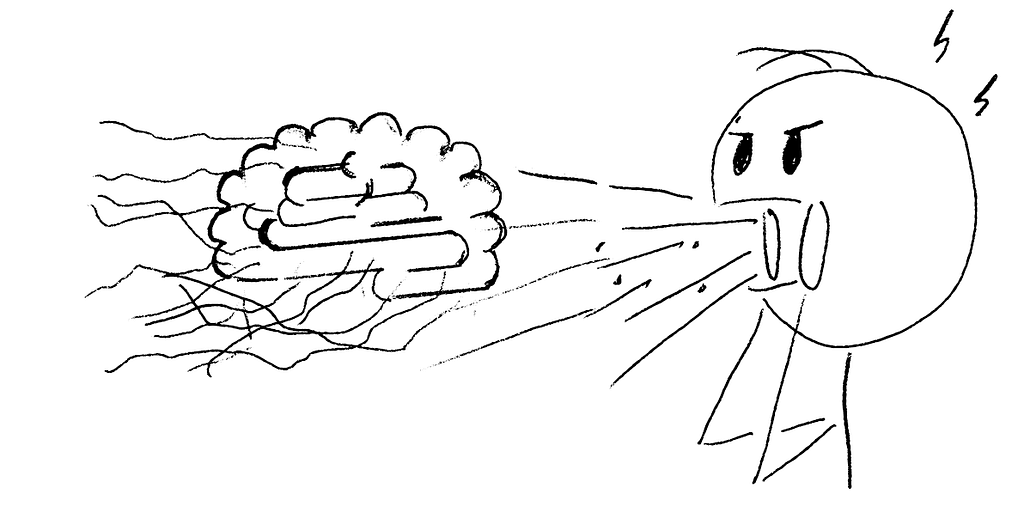

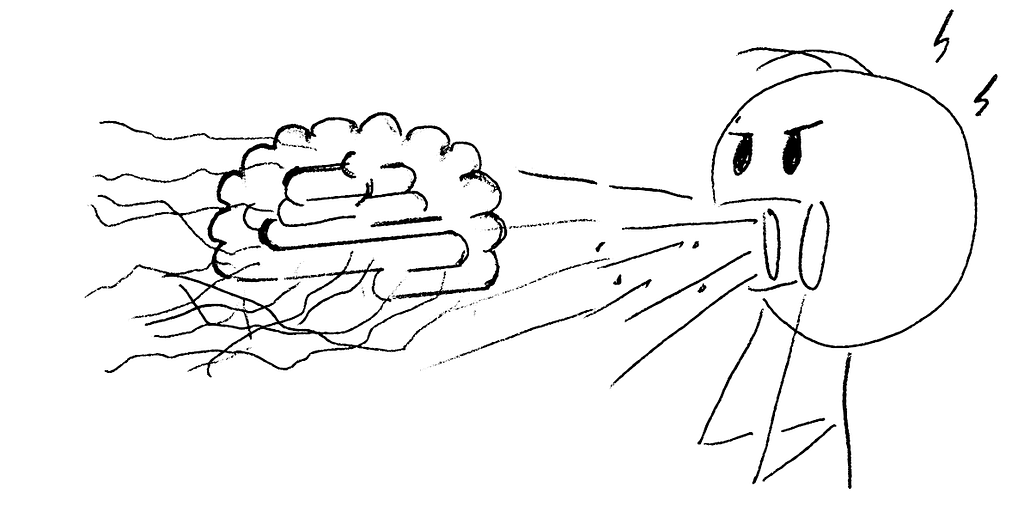

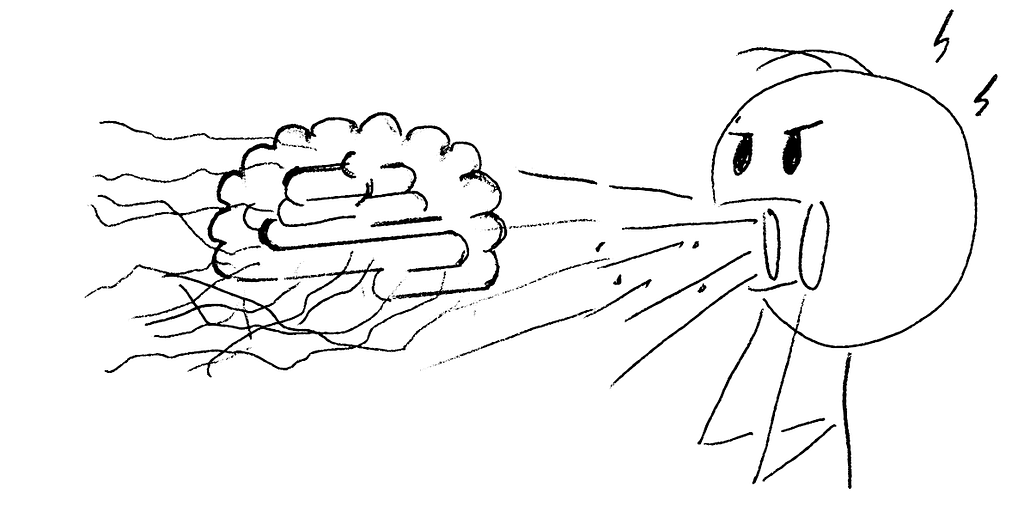

The quality of your prompt highly determines the response of the model.

And by quality I don’t necessarily mean a sophisticated prompt construction. I mean the basic idea of asking a precise question or giving well-structured instructions and providing necessary context. I have touched on this already when we met Sam, the piano player, in my previous article. If you ask a bar piano player to play some random Jazz tune, chances are that he might not play what you had in mind. Instead, if you ask exactly what it is you want to hear, your satisfaction with the result is likely to increase.

Similarly, if you ever had the chance of, say, hire someone to do something around your house and your contract specification only says, say, “bathroom renovation”, you might be surprised that in the end your bathroom does not look like what you had in mind. The contractor, just like the model, will only refer to what he has learned about renovations and bathroom tastes and will take the learned route to deliver.

So here are some general guidelines for prompting:

· Be clear and specific.

· Be complete.

· Provide context.

· Specify the desired output style, length, etc.

This way, the model has sufficient and matching reference data in your prompt that it can relate to when generating its response.

Roleplay prompting — simple, but overrated

In the early days of ChatGPT, the idea of roleplay prompting was all around: Instead of asking the assistant to give you an immediate answer (i.e. a simple query), you first assign it a specific role, such as “teacher” or “consultant” etc. Such a prompt could look like [2]:

From now on, you are an excellent math teacher and always teach your students math problems correctly. And I am one of your students.

It has been shown that this concept yields superior results. One paper reports that by this role play, the model implicitly triggers a step by step reasoning process, which is what you want it to do when applying the CoT- technique, see below. However, this approach has also been shown to sometimes perform sub-optimal and needs to be well designed.

In my experience, simply assigning a role doesn’t do the trick. I have experimented with the example task from the paper referred to above. Unlike in this research, GPT3.5 (which is as of today the free version of OpenAI’s ChatGPT, so you can try it yourself) has given the correct result, using a simple query:

I have also experimented with different logical challenges with both simple queries and roleplay, using a similar prompt like the one above. In my experiments two things happen:

either simple queries provides the correct answer on the first attempt, or

both simple queries and roleplay come up with false, however different answers

Roleplay did not outperform the queries in any of my simple (not scientifically sound) experiments. Hence, I conclude that the models must have improved recently and the impact of roleplay prompting is diminishing.

Looking at different research, and without extensive further own experimenting, I believe that in order to outperform simple queries, roleplay prompts need to be embedded into a sound and thoughtful design to outperform the most basic approaches — or are not valuable at all.

I am happy to read your experiences on this in the comments below.

Few-Shot aka in-context learning

Another intuitive and relatively simple concept is what’s referred to as Few-Shot prompting, also referred to as in-context learning. Unlike in a Zero-Shot Prompt, we not only ask the model to perform a task and expect it to deliver, we additionally provide (“few”) examples of the solutions. Even though you may find this obvious that providing examples leads to better performance, this is quite a remarkable ability: These LLMs are able to in-context learn, i.e. perform new tasks via inference alone by conditioning on a few input-label pairs and making predictions for new inputs [3].

Setting up a few-shot prompt involves

(1) collecting examples of the desired responses, and

(2) writing your prompt with instructions on what to do with these examples.

Let’s look at a typical classification example. Here the model is given several examples of statements that are either positive, neutral or negative judgements. The model’s task is to rate the final statement:

Again, even though this is a simple and intuitive approach, I am sceptical about its value in state-of-the-art language models. In my (again, not scientifically sound) experiments, Few-Shot prompts have not outperformed Zero-Shot in any case. (The model knew already that a drummer who doesn’t keep the time, is a negative experience, without me teaching it…). My finding seems to be consistent with recent research, where even the opposite effect (Zero-Shot outperforming Few-Shot) has been shown [4].

In my opinion and on this empirical background it is worth considering if the cost of design as well as computational, API and latency cost of this approach are a worthwhile investment.

CoT-Prompting or “Let’s think step-by-step’’

Chain of Thought (CoT) Prompting aims to make our models better at solving complex, multi-step reasoning problems. It can be as simple as adding the CoT instruction “Let’s think step-by-step’’ to the input query, to improve accuracy significantly [5][6].

Instead of just providing the final query or add one or few examples within your prompt like in the Few-Shot approach, you prompt the model to break down its reasoning process into a series of intermediate steps. This is akin to how a human would (ideally) approach a challenging problem.

Remember your math exams in school? Often, at more advanced classes, you were asked to not only solve a mathematical equation, but also write down the logical steps how you arrived at the final solution. And even if it was incorrect, you might have gotten some credits for mathematically sound solution steps. Just like your teacher in school, you expect the model to break the task down into sub-tasks, perform intermediate reasoning, and arrive at the final answer.

Again, I have experimented with CoT myself quite a bit. And again, most of the time, simply adding “Let’s think step-by-step” didn’t improve the quality of the response. In fact, it seems that the CoT approach has become an implicit standard of the recent fine-tuned chat-based LLM like ChatGPT, and the response is frequently broken down into chunks of reasoning without the explicit command to do so.

However, I came across one instance where the explicit CoT command did in fact improve the answer significantly. I used a CoT example from this article, however, altered it into a trick question. Here you can see how ChatGPT fell into my trap, when not explicitly asked for a CoT approach (even though the response shows a step wise reasoning):

When I added “Let’s think step-by-step” to the same prompt, it solved the trick question correctly (well, it is unsolvable, which ChatGPT rightfully pointed out):

To summarize, Chain of Thought prompting aims at building up reasoning skills that are otherwise difficult for language models to acquire implicitly. It encourages models to articulate and refine their reasoning process rather than attempting to jump directly from question to answer.

Again, my experiments have revealed only limited benefits of the simple CoT approach (adding “Let’s think step-by-step“). CoT did outperform a simple query on one occasion, and at the same time the extra effort of adding the CoT command is minimal. This cost-benefit ratio is one of the reasons why this approach is one of my favorites. Another reason why I personally like this approach is, it not only helps the model, but also can help us humans to reflect and maybe even iteratively consider necessary reasoning steps while crafting the prompt.

As before, we will likely see diminishing benefits of this simple CoT approach when models become more and more fine-tuned and accustomed to this reasoning process.

Conclusion

In this article, we have taken a journey into the world of prompting chat-based Large Language Models. Rather than just giving you the most popular prompting techniques, I have encouraged you to begin the journey with the question of Why prompting matters at all. During this journey we have discovered that the importance of prompting is diminishing thanks to the evolution of the models. Instead of requiring users to invest in continuously improving their prompting skills, currently evolving model architectures will likely further reduce their relevance. An agent-based framework, where different “routes” are taken while processing specific queries and tasks, is one of those.

This does not mean, however, that being clear and specific and providing the necessary context within your prompts isn’t worth the effort. On the contrary, I am a strong advocate of this, since it not only helps the model but also yourself to figure out what exactly it is you’re trying to achieve.

Just like in human communication, multiple factors determine the appropriate approach for reaching a desired outcome. Often, it is a mix and iteration of different approaches that yield optimal results for the given context. Try, test, iterate!

And finally, unlike in human interactions, you can test virtually limitlessly into your personal trial-and-error prompting journey. Enjoy the ride!

References

[1]: How Large Language Models work: From zero to ChatGPT

https://medium.com/data-science-at-microsoft/how-large-language-models-work-91c362f5b78f

[2]: Better Zero-Shot Reasoning with Role-Play Prompting

https://arxiv.org/abs/2308.07702

[3]: Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

https://arxiv.org/abs/2202.12837

[4]: Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm

https://dl.acm.org/doi/abs/10.1145/3411763.3451760.

[5]: When do you need Chain-of-Thought Prompting for ChatGPT?

https://arxiv.org/abs/2304.03262

[6]: Large Language Models are Zero-Shot Reasoners

https://arxiv.org/abs/2205.11916

The Business Guide to Tailoring Language AI Part 2 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

The Business Guide to Tailoring Language AI Part 2

Go Here to Read this Fast! The Business Guide to Tailoring Language AI Part 2