Go Here to Read this Fast! Next-gen GPU memory will achieve unheard-of speeds

Originally appeared here:

Next-gen GPU memory will achieve unheard-of speeds

Japan is an innovative country that leads the way on many technological fronts. But the wheels of bureaucracy often turn incredibly slowly there. So much so, that the government still requires businesses to provide information on floppy disks and CD-ROMs when they submit certain official documents.

That’s starting to change. Back in 2022, Minister of Digital Affairs Taro Kono urged various branches of the government to stop requiring businesses to submit information on outdated forms of physical media. The Ministry of Economy, Trade and Industry (METI) is one of the first to make the switch. “Under the current law, there are many provisions stipulating the use of specific recording media such as floppy disks regarding application and notification methods,” METI said last week, according to The Register.

After this calendar year, METI will no longer require businesses to submit data on floppy disks under 34 ordinances. The same goes for CD-ROMs when it comes to an unspecified number of procedures. There’s still quite some way to go before businesses can stop using either format entirely, however.

Kono’s staff identified some 1,900 protocols across several government departments that still require the likes of floppy disks, CD-ROMs and even MiniDiscs. The physical media requirements even applied to key industries such as utility suppliers, mining operations and aircraft and weapons manufacturers.

There are a couple of main reasons why there’s a push to stop using floppy disks, as SoraNews24 points out. One major factor is that floppy disks can be hard to come by. Sony, the last major manufacturer, stopped selling them in 2011. Another is that some data types just won’t fit on a floppy disk. A single photo can easily be larger than the format’s 1.4MB storage capacity.

There are some other industries that still rely on floppy disks. Some older planes need them for avionics, as do some aging medical devices. It also took the US government until 2019 to stop using floppy disks to coordinate nuclear weapon launches.

This article originally appeared on Engadget at https://www.engadget.com/japan-will-no-longer-require-floppy-disks-for-submitting-some-official-documents-212048844.html?src=rss

Embracer Group, the Swedish holding company undergoing restructuring, has reportedly canceled a Deus Ex game. Bloomberg says developers had been working on the unannounced title for two years. Neither Embracer nor developer Eidos addressed the reported cancellation specifically, but they confirmed they were laying off 97 employees at Deus Ex developer Eidos Montreal.

Eidos will reportedly focus instead on “an original franchise.” Bloomberg’s sources say the Deus Ex game was scheduled to start production later this year. The franchise’s most recent mainline installment was 2016’s Deus Ex: Mankind Divided.

After aggressively growing through acquisitions during the pandemic, Embracer Group entered a turbulent period last year. The company announced a restructuring plan in June 2023 after an unnamed partner pulled out of a planned deal that would have brought in $2 billion over six years. Axios later reported the mysterious investor was Savvy Games Group, which the Saudi government funds.

In August, Embracer announced the closure of Volition, the studio behind the Saints Row series. The parent company laid off about 900 employees in September and another 50 workers at Chorus developer Fishlabs. Earlier this month, Embracer shuttered Lost Boys Interactive, makers of Tiny Tina’s Wonderland — pinning the blame on “headwinds facing the industry right now.”

— Eidos-Montréal (@EidosMontreal) January 29, 2024

Embracer says the restructuring phase will run until the end of March. The company claims it will provide regular updates on the process, including when it publishes its next quarterly report on February 15.

Alongside the alleged Deus Ex cancellation, Eidos confirmed it let go of 97 employees from development teams, administration and support services. “The global economic context, the challenges of our industry and the comprehensive restructuring announced by Embracer have finally impacted our studio,” Eidos wrote in a statement.

This article originally appeared on Engadget at https://www.engadget.com/a-new-deus-ex-game-was-reportedly-canceled-amid-embracers-crisis-194919207.html?src=rss

Microsoft didn’t have to look too far to find the new president of Blizzard. Former Call of Duty general manager Johanna Faries is replacing Mike Ybarra, who stood down from the role amid last week’s sweeping layoffs in Microsoft’s gaming division. Blizzard was said to be particularly hard hit as Microsoft fired around 1,900 people.

Faries, a former National Football League executive, joined Activision as the head of Call of Duty esports in 2018. She started overseeing all things Call of Duty in 2021 and officially starts her new role on February 5.

Blizzard has largely operated independently since it merged with Activision in 2008. As such, Blizzard workers may be forgiven for being concerned at someone from the Activision side taking control. Former Activision Blizzard CEO Bobby Kotick often meddled in Blizzard’s affairs, reportedly resulting in Overwatch 2 delays, among other things.

In an attempt to soothe any worries, Faries wrote in an email to staff that “Activision, Blizzard, and King are decidedly different companies with distinct games, cultures and communities. It is important to note that Call of Duty’s way of waking up in the morning to deliver for players can often differ from the stunning games in Blizzard’s realm: each with different gameplay experiences, communities that surround them, and requisite models of success. I’ve discussed this with the Blizzard leadership team and I’m walking into this role with sensitivity to those dynamics, and deep respect for Blizzard, as we begin to explore taking our universes to even higher heights.”

Faries added that she is “committed to doing everything I can to help Blizzard thrive, with care and consideration for you and for our games, each unique and special in their own right.” Meanwhile, on X, Faries wrote that Blizzard’s Diablo 4 was part of her current rotation of games, alongside Call of Duty and Baldur’s Gate 3.

This article originally appeared on Engadget at https://www.engadget.com/former-call-of-duty-chief-johanna-faries-is-blizzards-new-president-193852238.html?src=rss

“Generative Adversarial Nets” (GANs) have demonstrated outstanding performance in generating realistic synthetic data that is hard to distinguish from real data. Unfortunately, GANs caught the public’s attention because of their unethical applications, such as deepfakes (Knight, 2018).

This article illustrates a case with a good motive in the application of GANs in the context of fraud detection.

Fraud detection is an application of binary classification prediction. Fraud cases, which account for only a small fraction of the transaction universe, constitute a minority class that makes the dataset highly imbalanced. In general, the resulting model tends to be biased towards the majority class and tends to underfit to the minority class. Thus, the less balanced the dataset, the poorer the performance of the classification predictor would be.

My motive here is to use GANs as a data augmentation tool in an attempt to address this classical problem of fraud detection associated with the imbalanced dataset. More specifically, GANs can generate realistic synthetic data of the minority fraud class and transform the imbalanced dataset into a perfectly balanced one.

And I am hoping that this sophisticated algorithm could materially contribute to the performance of fraud detection. In other words, my initial expectation is: the more sophisticated the algorithm, the better the performance.

A relevant question is if the use of GANs will guarantee a promising improvement in the performance of fraud detection and satisfy my motive. Let’s see.

In principle, fraud detection is an application of a binary classification algorithm: it classifies each transaction as either a fraud case or a non-fraud case.

Fraud cases account for only a small fraction of the transaction universe. In general, fraud cases constitute the minority class and thus make the dataset highly imbalanced.

The fewer fraud cases, the more sound the transaction system would be.

Very simple and intuitive.

Paradoxically, that sound condition was one of the primary reasons that made fraud detection challenging in the past, if not impossible. It is simply because it was difficult for a classification algorithm to learn the probability distribution of the minority class of fraud.

In general, the more balanced the dataset, the better the performance of the classification predictor. In other words, the less balanced (or the more imbalanced) the dataset, the poorer the performance of the classifier.

This paints the classical problem of fraud detection: a binary classification application with a highly imbalanced dataset.

In this setting, we can use Generative Adversarial Nets (GANs) as a data augmentation tool to generate realistic synthetic data of the minority fraud class and make the entire dataset more balanced, in an attempt to improve the performance of the classifier model of fraud detection.

This article is divided into the following sections:

Overall, I will primarily focus on the topic of GANs (both the algorithm and the code). For the remaining topics of the model development other than GANs, such as data preprocessing and classifier algorithm, I will only outline the process and refrain from going into their details. In this context, this article assumes that the readers have a basic knowledge about the binary classifier algorithm (especially, Ensemble Classifier that I selected for fraud detection) as well as general understanding of data cleaning and preprocessing.

For the detailed code, the readers are welcome to access the following link: https://github.com/deeporigami/Portfolio/blob/6538fcaad1bf58c5f63d6320ca477fa867edb1df/GAN_FraudDetection_Medium_2.ipynb

GANs is a special type of generative algorithm. As its name suggests, Generative Adversarial Nets (GANs) is composed of two neural networks: the generative network (the generator) and the adversarial network (the discriminator). GANs pits these two agents against each other to engage in a competition, where the generator attempts to generate realistic synthetic data and the discriminator to distinguish the synthetic data from the real data.

The original GANs was introduced in a seminal paper: “Generative Adversarial Nets” (Goodfellow, et al., Generative Adversarial Nets, 2014). The co-authors of the original GANs portrayed GANs with a counterfeiter-police analogy: an iterative game, where the generator acts as a counterfeiter and the discriminator plays the role of the police to detect the counterfeit that the generator forged.

The original GANs was innovative in the sense that it addressed and overcame the conventional difficulties in training deep generative algorithms. At its core, it was designed with a bi-level optimization framework and an equilibrium-seeking objective (as opposed to a maximum-likelihood-oriented objective).

Ever since, many variant architectures of GANs have been explored. As a precaution, this article refers solely to the prototype architecture of the original GANs.

Generator and Discriminator

To reiterate, in the architecture of GANs, the two neural networks (the generator and the discriminator) compete against each other. In this context, the competition takes place through the iteration of forward propagation and backward propagation (according to the general framework of neural networks).

On one hand, the discriminator is straightforwardly a binary classifier by design: it classifies whether each sample is real (label: 1) or fake/synthetic (label: 0). The discriminator is fed with both the real samples and the synthetic samples during the forward propagation. Then, during the backpropagation, it learns to detect the synthetic data from the mixed data feed.

On the other hand, the generator is by design a function that transforms noise into synthetic samples. During the forward propagation, the generator is fed with random noise drawn from a noise distribution, and its output is passed to the discriminator. Then, during the backward propagation, the generator uses the discriminator's feedback to learn the probability distribution of the real data, so that it can better simulate its synthetic samples.

And these two agents are trained alternately via “bi-level optimization” framework.

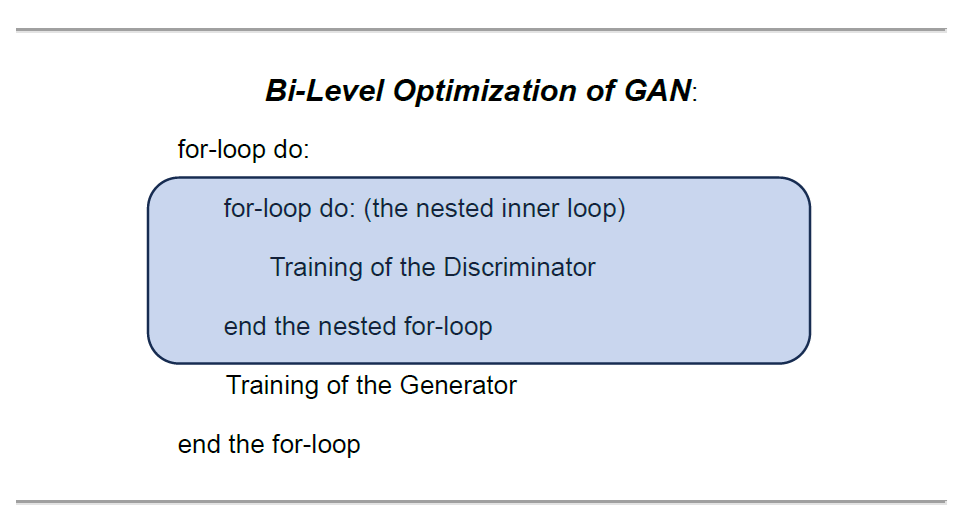

Bi-level Training Mechanism (bi-level optimization method)

In the original GAN paper, in order to train these two agents that pursue their diametrically opposite objectives, the co-authors designed a “bi-level optimization (training)” architecture, in which one internal training block (training of the discriminator) is nested within another high-level training block (training of the generator).

The image below illustrates the structure of “bi-level optimization” in the nested training loops. The discriminator is trained within the nested inner loop, while the generator is trained in the main loop at the higher level.

And GANs trains these two agents alternately in this bi-level training architecture (Goodfellow, et al., Generative Adversarial Nets, 2014, p. 3). In other words, while training one agent during the alternation, we need to freeze the learning process of the other agent (Goodfellow I. , 2015, p. 3).

Mini-Max Optimization Objective

In addition to the “bi-level optimization” mechanism which enables the alternate training of these two agents, another unique feature that differentiates GANs from the conventional prototype of neural network is its mini-max optimization objective. Simply put, in contrast to the conventional maximum-seeking approach (such as maximum likelihood), GANs pursues an equilibrium-seeking optimization objective.

What is an equilibrium-seeking optimization objective?

Let’s break it down.

GANs’ two agents have two diametrically opposite objectives. While the discriminator, as a binary classifier, aims at maximizing the probability of correctly classifying the mixture of the real samples and the synthetic samples, the generator’s objective is to minimize the probability that the discriminator correctly classifies the synthetic data: simply because the generator needs to fool the discriminator.

In this context, the co-authors of the original GANs called the overall objective a “minimax game”. (Goodfellow, et al., 2014, p. 3)

Overall, the ultimate mini-max optimization objective of GANs is not to search for the global maximum/minimum of either of these objective functions. Instead, it is set to seek an equilibrium point which can be interpreted as:

And the equilibrium point can be conceptually represented by the discriminator being reduced to random guessing, i.e. assigning a probability of 0.5 (50%) to every sample, whether real or synthetic: D(x) → 0.5 and D(G(z)) → 0.5.
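As a small numerical illustration of this equilibrium (a sketch, not part of the original code): Goodfellow et al. (2014) show that, for a fixed generator, the optimal discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). The snippet below evaluates this formula on two hypothetical one-dimensional Gaussian densities. The helper names and the Gaussian setting are assumptions made purely for illustration.

```python
import numpy as np

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution (hypothetical helper for this sketch)."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def optimal_discriminator(p_data, p_gen):
    """D*(x) = p_data(x) / (p_data(x) + p_gen(x)) for a fixed generator."""
    return p_data / (p_data + p_gen)

x = np.linspace(-3.0, 3.0, 7)
p_data = gaussian_pdf(x, mean=0.0, std=1.0)

# Generator still far from the data distribution: D* departs from 0.5
d_early = optimal_discriminator(p_data, gaussian_pdf(x, mean=1.5, std=1.0))

# Generator matches the data distribution: D* is 0.5 everywhere
d_equilibrium = optimal_discriminator(p_data, gaussian_pdf(x, mean=0.0, std=1.0))

print(np.round(d_early, 3))
print(np.round(d_equilibrium, 3))
```

Once the generator's distribution coincides with the data distribution, the best the discriminator can do is output 0.5 for every sample, which is the random-guessing equilibrium described above.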

Let’s transcribe the conceptual framework of GANs’ minimax optimization in terms of their objective functions.

The objective of the discriminator is to maximize the objective function in the following image:

In order to resolve a potential saturation issue, they converted the second term of the original log-likelihood objective function for the generator as follows and recommended to maximize the converted version as the generator’s objective:
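For reference, the objective functions described above can be written out as follows. This is a transcription of the standard formulation in Goodfellow et al. (2014), not a copy of the original figures; x denotes a real sample, z a noise vector, G the generator and D the discriminator.

```latex
% Discriminator: maximize over D
\max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
                    + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Generator, original (saturating) form: minimize over G
\min_{G} \; \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Generator, recommended (non-saturating) form: maximize over G
\max_{G} \; \mathbb{E}_{z \sim p_{z}(z)}\big[\log D(G(z))\big]
```

Early in training, D(G(z)) is close to 0, so log(1 - D(G(z))) is nearly flat and gives the generator very little gradient. The third form has the same fixed point but a much stronger gradient in that regime.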

Overall, the architecture of GANs’ “bi-level optimization” can be translated into the following algorithm.

For more details about the algorithmic design of GANs, please read another article of mine: Mini-Max Optimization Design of Generative Adversarial Nets .

Now, let’s move on to the actual coding with a dataset.

In order to highlight GANs algorithm, I will primarily focus on the code of GANs here and only outline the rest of the process.

For fraud detection, I selected the following dataset of credit card transactions from Kaggle: https://www.kaggle.com/datasets/mlg-ulb/creditcardfraud

Data License: Database Contents License (DbCL) v1.0

Here is a summary of the dataset.

The dataset contains 284,807 transactions. In the dataset, we have only 492 fraud cases (including 29 duplicated cases).

Since the fraud class accounts for only 0.172% of all transactions, it constitutes an extremely small minority class. This dataset is an appropriate one for illustrating the classical problem of fraud detection associated with the imbalanced dataset.
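To make the imbalance concrete, here is a small arithmetic sketch using only the two counts quoted above. The "trivial classifier" is a hypothetical baseline that I introduce for illustration; it is not part of the project code.

```python
# Figures quoted in the dataset summary above
n_transactions = 284_807
n_fraud = 492

fraud_share = n_fraud / n_transactions

# A trivial classifier that labels every transaction as non-fraud
# is correct on all non-fraud rows and wrong on all fraud rows.
trivial_accuracy = (n_transactions - n_fraud) / n_transactions
trivial_recall = 0 / n_fraud  # it never catches a single fraud case

print(f"Fraud share:      {fraud_share:.4%}")
print(f"Trivial accuracy: {trivial_accuracy:.4%}")
print(f"Trivial recall:   {trivial_recall:.4%}")
```

A model that never flags fraud would still be right more than 99.8% of the time, which is why accuracy alone says very little on a dataset like this one.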

It has the following 30 features:

The label is set as ‘Class’.

Since the dataset has already been largely, if not perfectly, cleaned, I only had to do a few things for the data cleaning: elimination of duplicated data and removal of outliers.

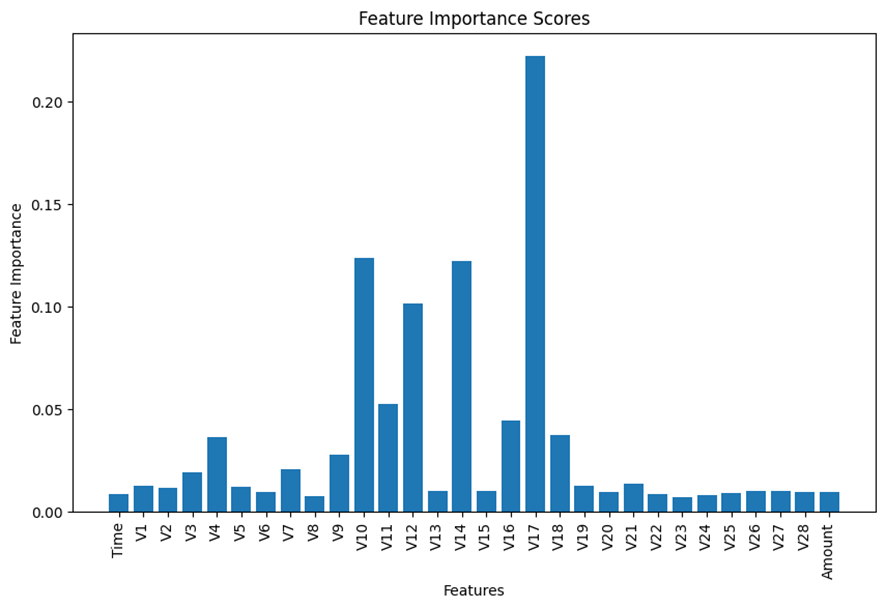

Thereafter, given 30 features in the dataset, I decided to run the feature selection to reduce the number of the features by eliminating less important features before the training process. I selected the built-in feature importance score of the scikit-learn Random Forest Classifier to estimate the scores of all the 30 features.
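The feature-scoring step can be sketched roughly as follows. This is a minimal illustration on synthetic data from scikit-learn's make_classification, not the code from my notebook: the column names f0 to f29, the sample size and the number of trees are all assumptions.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the real data (assumption: 30 features, imbalanced classes)
X_demo, y_demo = make_classification(
    n_samples=2000, n_features=30, n_informative=6,
    weights=[0.95, 0.05], random_state=42,
)
X_demo = pd.DataFrame(X_demo, columns=[f"f{i}" for i in range(30)])

# Fit a Random Forest and read its built-in (impurity-based) importance scores
forest = RandomForestClassifier(n_estimators=50, random_state=42)
forest.fit(X_demo, y_demo)
importances = pd.Series(forest.feature_importances_, index=X_demo.columns)

# Keep the k highest-scoring features
top_k = 6
top_features = importances.sort_values(ascending=False).head(top_k).index.to_list()
print(top_features)
```

The scores sum to 1 across all features, so each one can be read as a relative share of the total importance.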

The following chart displays the summary of the result. If interested in the detailed process, please visit my code listed above.

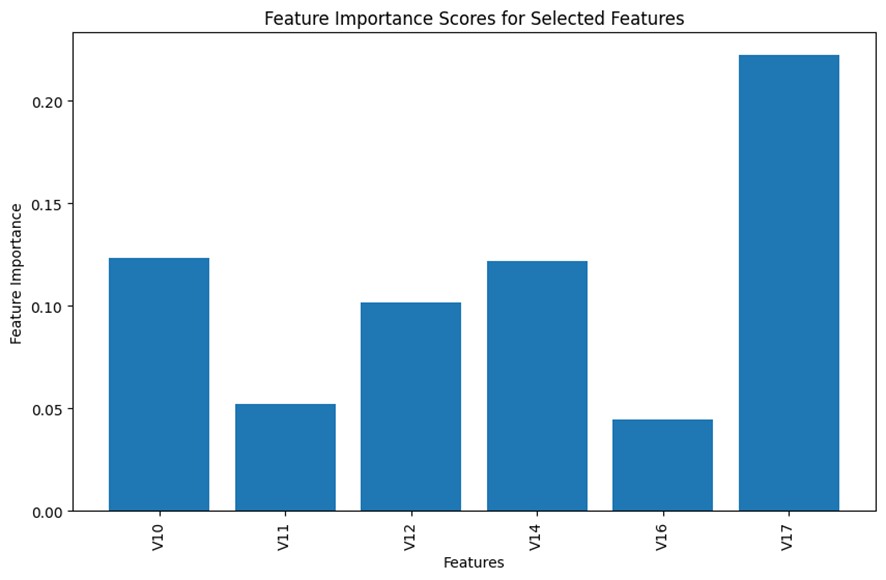

Based on the results displayed in the bar chart above, I made my subjective judgement to select the top 6 features for the analysis and remove all the remaining insignificant features from the model building process.

Here are the top 6 selected features.

For the model building purpose going forward, I focused on these 6 selected features. After the data preprocessing, we have the working dataframe, df, of the following shape:

Hopefully, the feature selection would reduce the complexity of the resulting model and stabilize its performance, while retaining critical information for optimizing a binary classifier.

Finally, it’s time for us to use GANs for data augmentation.

So how many synthetic data do we need to create?

First of all, our interest for the data augmentation is only for the model training. Since the test dataset is out-of-sample data, we want to preserve the original form of the test dataset. Secondly, because our intention is to make the imbalanced dataset perfectly balanced, we do not want to augment the majority class of non-fraud cases.

Simply put, we want to augment only the train dataset of the minority fraud class, nothing else.

Now, let’s split the working dataframe into the train dataset and the test dataset in 80/20 ratio, using a stratified data split method.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Separate features and target variable
X = df.drop('Class', axis=1)
y = df['Class']

# Split the data into train and test sets (stratified on the label)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)

# Combine the features and the label for the train dataset
train_df = pd.concat([X_train, y_train], axis=1)
```
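To see what the stratified option buys us, here is a toy sketch (made-up labels, not the credit card data) in which 1% of the rows are positive. The array names are hypothetical.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy labels (assumption): 10,000 rows with 1% positives
y_toy = np.zeros(10_000, dtype=int)
y_toy[:100] = 1
X_toy = np.arange(10_000).reshape(-1, 1)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=42, stratify=y_toy
)

# With stratify, both splits keep (approximately) the same positive rate
print(y_tr.mean(), y_te.mean())
```

Without stratification, a random 20% sample of such a rare class could easily end up with noticeably more or fewer positives than the train split, which would distort the evaluation.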

As a result, the shape of the train dataset is as follows:

Let’s see the composition (the fraud cases and the non-fraud cases) of the train dataset.

```python
# Separate the fraud and non-fraud rows of the train dataset
fraud_data = train_df[train_df['Class'] == 1].drop('Class', axis=1).values
non_fraud_data = train_df[train_df['Class'] == 0].drop('Class', axis=1).values

# Calculate the number of synthetic fraud samples to generate
num_real_fraud = len(fraud_data)
num_synthetic_samples = len(non_fraud_data) - num_real_fraud

print("# of non-fraud: ", len(non_fraud_data))
print("# of Real Fraud:", num_real_fraud)
print("# of Synthetic Fraud required:", num_synthetic_samples)
```

```text
# of non-fraud: 225632
# of Real Fraud: 378
# of Synthetic Fraud required: 225254
```

This tells us that the train dataset (226,010) is comprised of 225,632 non-fraud data and 378 fraud data. In other words, the difference between them is 225,254. This number is the number of the synthetic fraud data (num_synthetic_samples) that we need to augment in order to perfectly match the numbers of these two classes within the train dataset: as a reminder, we do preserve the original test dataset.

Next, let’s code GANs.

First, let’s create custom functions to determine the two agents: the discriminator and the generator.

For the generator, I create a noise distribution function, build_generator(), which requires two parameters: latent_dim (the dimension of the noise) as the shape of its input; and the shape of its output, output_dim, which corresponds to the number of the features.

```python
from keras.layers import Dense, Input
from keras.models import Sequential

# Define the generator network
def build_generator(latent_dim, output_dim):
    model = Sequential()
    model.add(Dense(64, input_shape=(latent_dim,)))
    model.add(Dense(128, activation='sigmoid'))
    model.add(Dense(output_dim, activation='sigmoid'))
    return model
```

For the discriminator, I create a custom function build_discriminator() that takes input_dim, which corresponds to the number of the features.

```python
# Define the discriminator network
def build_discriminator(input_dim):
    model = Sequential()
    # Pass the shape as a tuple so the input layer is unambiguous
    model.add(Input(shape=(input_dim,)))
    model.add(Dense(128, activation='sigmoid'))
    model.add(Dense(1, activation='sigmoid'))
    return model
```

Then, we can call these functions to create the generator and the discriminator. Here, for the generator I arbitrarily set latent_dim to be 32: you can try other values here, if you like.

```python
# Dimensionality of the input noise for the generator
latent_dim = 32

# Build generator and discriminator models
generator = build_generator(latent_dim, fraud_data.shape[1])
discriminator = build_discriminator(fraud_data.shape[1])
```

At this stage, we need to compile the discriminator, which is going to be nested in the main (higher) optimization loop later. And we can compile the discriminator with the following argument setting.

```python
# Compile the discriminator model
from keras.metrics import Precision, Recall
from keras.optimizers import Adam

discriminator.compile(optimizer=Adam(learning_rate=0.0002, beta_1=0.5), loss='binary_crossentropy', metrics=[Precision(), Recall()])
```

For the generator, we will compile it when we construct the main (upper) optimization loop.

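At this stage, we can define the custom objective function for the generator as follows. Remember, the recommended objective was to maximize log D(G(z)), averaged over the batch:

```python
# Assumes a Keras version whose backend module provides mean, log and epsilon (as in Keras 2.x)
from keras import backend as K

def generator_loss_log_d(y_true, y_pred):
    # y_pred is D(G(z)); y_true is not used by this loss
    return - K.mean(K.log(y_pred + K.epsilon()))
```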

Above, the negative sign is required, since the loss function by default is designed to be minimized.
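To get a feel for how this loss behaves, here is a NumPy re-implementation of the same formula (an illustrative sketch, not the Keras code used for training). The discriminator outputs are made-up numbers, and generator_loss_np is a hypothetical helper name.

```python
import numpy as np

def generator_loss_np(d_outputs, eps=1e-7):
    """NumPy version of -mean(log(D(G(z)))), mirroring generator_loss_log_d."""
    return -np.mean(np.log(d_outputs + eps))

# Hypothetical discriminator outputs on a batch of synthetic samples
caught = np.array([0.05, 0.10, 0.02])   # discriminator confidently says "fake"
fooled = np.array([0.90, 0.95, 0.85])   # discriminator mostly says "real"

print(generator_loss_np(caught))  # large loss: the generator is doing poorly
print(generator_loss_np(fooled))  # small loss: the generator is fooling the discriminator
```

Minimizing this quantity therefore pushes the discriminator's output on synthetic samples towards 1, which is the same thing as maximizing log D(G(z)).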

Then, we can construct the main (upper) loop, build_gan(generator, discriminator), of the bi-level optimization architecture. In this main loop, we compile the generator implicitly. In this context, we need to use the custom objective function of the generator, generator_loss_log_d, when we compile the main loop.

As aforementioned, we need to freeze the discriminator when we train the generator.

```python
# Build and compile the GANs upper optimization loop combining generator and discriminator
def build_gan(generator, discriminator):
    # Freeze the discriminator inside the combined model
    discriminator.trainable = False
    model = Sequential()
    model.add(generator)
    model.add(discriminator)
    model.compile(optimizer=Adam(learning_rate=0.0002, beta_1=0.5), loss=generator_loss_log_d)
    return model

# Call the upper loop function
gan = build_gan(generator, discriminator)
```

At the last line above, gan calls build_gan() in order to implement the batch training below, using Keras’ model.train_on_batch() method.

As a reminder, while we train the discriminator, we need to freeze the training of the generator; and while we train the generator, we need to freeze the training of the discriminator.

Here is the batch training code incorporating the alternating training process of these two agents under the bi-level optimization framework.

```python
import numpy as np

# Set hyperparameters
epochs = 10000
batch_size = 32

# Training loop for the GANs
for epoch in range(epochs):
    # Train discriminator (freeze generator)
    discriminator.trainable = True
    generator.trainable = False

    # Random sampling from the real fraud data
    real_fraud_samples = fraud_data[np.random.randint(0, num_real_fraud, batch_size)]

    # Generate fake fraud samples using the generator
    noise = np.random.normal(0, 1, size=(batch_size, latent_dim))
    fake_fraud_samples = generator.predict(noise, verbose=0)  # verbose=0 silences the per-call progress bar

    # Create labels for real and fake fraud samples
    real_labels = np.ones((batch_size, 1))
    fake_labels = np.zeros((batch_size, 1))

    # Train the discriminator on real and fake fraud samples
    d_loss_real = discriminator.train_on_batch(real_fraud_samples, real_labels)
    d_loss_fake = discriminator.train_on_batch(fake_fraud_samples, fake_labels)
    # Each result holds [loss, precision, recall]; average them element-wise
    d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

    # Train generator (freeze discriminator)
    discriminator.trainable = False
    generator.trainable = True

    # Generate synthetic fraud samples and create labels for training the generator
    noise = np.random.normal(0, 1, size=(batch_size, latent_dim))
    valid_labels = np.ones((batch_size, 1))

    # Train the generator to generate samples that "fool" the discriminator
    g_loss = gan.train_on_batch(noise, valid_labels)

    # Print the progress
    if epoch % 100 == 0:
        print(f"Epoch: {epoch} - D Loss: {d_loss} - G Loss: {g_loss}")
```

Here, I have a quick question for you.

Below we have an excerpt associated with the generator training from the code above.

Can you explain what this code is doing?

```python
# Generate synthetic fraud samples and create labels for training the generator
noise = np.random.normal(0, 1, size=(batch_size, latent_dim))
valid_labels = np.ones((batch_size, 1))
```

In the first line, noise draws the random input that the generator turns into synthetic data. In the second line, valid_labels assigns the label 1 to that synthetic data.

Why do we need to label it with 1, which is supposed to be the label for the real data? Didn’t you find the code counter-intuitive?

Ladies and gentlemen, welcome to the world of counterfeiters.

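This is the labeling magic that trains the generator to create samples that can fool the discriminator. Labeling the synthetic samples as real (1) expresses the generator's goal: its loss falls as the discriminator's output on the synthetic samples approaches 1.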
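Here is a small NumPy sketch of why a label of 1 does the job when the standard binary cross-entropy is used as the loss. It is an illustration with made-up discriminator outputs, and binary_cross_entropy is a hypothetical helper. Note that the custom loss generator_loss_log_d defined earlier does not read y_true at all, so in that setup the labels act only as a placeholder; the two formulations coincide.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Standard binary cross-entropy, averaged over the batch."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))

# Hypothetical discriminator outputs D(G(z)) for a batch of synthetic samples
d_on_fake = np.array([0.2, 0.6, 0.9])

loss_label_1 = binary_cross_entropy(np.ones(3), d_on_fake)   # generator's view
loss_label_0 = binary_cross_entropy(np.zeros(3), d_on_fake)  # discriminator's view

# With labels of 1, only the -log(D(G(z))) term survives
print(loss_label_1, -np.mean(np.log(d_on_fake)))
print(loss_label_0, -np.mean(np.log(1.0 - d_on_fake)))
```

The same synthetic batch thus yields two different losses depending on the label: with 0 the discriminator is rewarded for rejecting it, and with 1 the generator is rewarded for getting it accepted.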

Now, let’s use the trained generator to create the synthetic data for the minority fraud class.

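```python
# After training, use the generator to create synthetic fraud data
noise = np.random.normal(0, 1, size=(num_synthetic_samples, latent_dim))
synthetic_fraud_data = generator.predict(noise)

# Convert the result to a Pandas DataFrame with the same feature columns as the train set
fake_df = pd.DataFrame(synthetic_fraud_data, columns=X_train.columns.to_list())

# Label every synthetic row as fraud so that it can be concatenated with train_df
# (assumed step: it is not shown in the original excerpt)
fake_df['Class'] = 1
```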

Finally, the synthetic data is created.

In the next section, we can combine this synthetic fraud data with the original train dataset to make the entire train dataset perfectly balanced. I hope that the perfectly balanced training dataset would improve the performance of the fraud detection classification model.

To reiterate, the use of GANs in this project is exclusively for data augmentation, not for classification.

First of all, we would need the benchmark model as the basis of the comparison in order for us to evaluate the improvement made by the GANs based data augmentation on the performance of the fraud detection model.

As a binary classifier algorithm, I selected Ensemble Method for building the fraud detection model. As the benchmark scenario, I developed a fraud detection model only with the original imbalanced dataset: thus, without data augmentation. Then, for the second scenario with data augmentation by GANs, I can train the same algorithm with the perfectly balanced train dataset, which contains the synthetic fraud data created by GANs.

Benchmark Scenario: Ensemble without data augmentation

Next, let’s define the benchmark scenario (without data augmentation). I decided to select an Ensemble Classifier, with the voting method as the meta learner and the following 3 base learners: Random Forest, Decision Tree, and Gradient Boosting.
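As a quick illustration of what the voting meta learner does, here is a NumPy sketch of soft voting, which is the mode used in this article (voting='soft'). The probabilities are made-up numbers for four hypothetical transactions; they are not outputs of the actual models.

```python
import numpy as np

# Hypothetical class probabilities [P(non-fraud), P(fraud)] from three base
# learners for the same four transactions (made-up numbers)
proba_rf = np.array([[0.9, 0.1], [0.4, 0.6], [0.7, 0.3], [0.2, 0.8]])
proba_dt = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]])
proba_gb = np.array([[0.8, 0.2], [0.6, 0.4], [0.9, 0.1], [0.3, 0.7]])

# Soft voting: average the probabilities, then pick the most probable class
avg_proba = np.mean([proba_rf, proba_dt, proba_gb], axis=0)
soft_vote = np.argmax(avg_proba, axis=1)
print(soft_vote)
```

For the third transaction, the decision tree votes fraud with full confidence, but the two other learners are confident enough in non-fraud that the averaged probability still favors non-fraud. That is the difference from a plain majority vote, which only counts labels.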

Since the original dataset is highly imbalanced, rather than accuracy I shall select evaluation metrics from the following 3 options: precision, recall, and F1-Score.

The following custom function, ensemble_training(X_train, y_train), defines the training and validation process.

```python
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier, VotingClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

def ensemble_training(X_train, y_train):
    # Initialize base learners
    gradient_boosting = GradientBoostingClassifier(random_state=42)
    decision_tree = DecisionTreeClassifier(random_state=42)
    random_forest = RandomForestClassifier(random_state=42)

    # Define the base models
    base_models = {
        'RandomForest': random_forest,
        'DecisionTree': decision_tree,
        'GradientBoosting': gradient_boosting
    }

    # Initialize the meta learner
    meta_learner = VotingClassifier(estimators=[(name, model) for name, model in base_models.items()], voting='soft')

    # Lists to store training and validation metrics
    train_f1_scores = []
    val_f1_scores = []

    # Splitting the train set further into training and validation sets
    X_train, X_val, y_train, y_val = train_test_split(X_train, y_train, test_size=0.25, random_state=42, stratify=y_train)

    # Training and validation
    for model_name, model in base_models.items():
        model.fit(X_train, y_train)

        # Training metrics
        train_predictions = model.predict(X_train)
        train_f1 = f1_score(y_train, train_predictions)
        train_f1_scores.append(train_f1)

        # Validation metrics using the validation set
        val_predictions = model.predict(X_val)
        val_f1 = f1_score(y_val, val_predictions)
        val_f1_scores.append(val_f1)

    # Train the meta learner on the training portion (the validation rows stay held out)
    meta_learner.fit(X_train, y_train)

    return meta_learner, train_f1_scores, val_f1_scores, base_models
```

The next function block, ensemble_evaluations(meta_learner, X_train, y_train, X_test, y_test), calculates the performance evaluation metrics at the meta learner level.

```python
from sklearn.metrics import f1_score, precision_score, recall_score

def ensemble_evaluations(meta_learner, X_train, y_train, X_test, y_test):
    # Predictions of the ensemble model on both training and test datasets
    ensemble_train_predictions = meta_learner.predict(X_train)
    ensemble_test_predictions = meta_learner.predict(X_test)

    # Calculating metrics for the ensemble model
    ensemble_train_f1 = f1_score(y_train, ensemble_train_predictions)
    ensemble_test_f1 = f1_score(y_test, ensemble_test_predictions)

    # Calculate precision and recall for both training and test datasets
    precision_train = precision_score(y_train, ensemble_train_predictions)
    recall_train = recall_score(y_train, ensemble_train_predictions)
    precision_test = precision_score(y_test, ensemble_test_predictions)
    recall_test = recall_score(y_test, ensemble_test_predictions)

    # Output precision, recall, and f1 score for both training and test datasets
    print("Ensemble Model Metrics:")
    print(f"Training Precision: {precision_train:.4f}, Recall: {recall_train:.4f}, F1-score: {ensemble_train_f1:.4f}")
    print(f"Test Precision: {precision_test:.4f}, Recall: {recall_test:.4f}, F1-score: {ensemble_test_f1:.4f}")

    return ensemble_train_predictions, ensemble_test_predictions, ensemble_train_f1, ensemble_test_f1, precision_train, recall_train, precision_test, recall_test
```

Below, let’s look at the performance of the benchmark Ensemble Classifier.

Training Precision: 0.9811, Recall: 0.9603, F1-score: 0.9706

Test Precision: 0.9351, Recall: 0.7579, F1-score: 0.8372

At the meta-learner level, the benchmark model generated F1-Score at a reasonable level of 0.8372.

Next, let’s move on to the scenario with data augmentation using GANs. We want to see if the GANs scenario can outperform the benchmark scenario.

GANs Scenario: Fraud Detection with data augmentation by GANs

Finally, we can construct a perfectly balanced dataset by combining the original imbalanced train dataset (both non-fraud and fraud cases), train_df, and the synthetic fraud dataset generated by GANs, fake_df. Here, we will preserve the test dataset as original by not involving it in this process.

```python
wdf = pd.concat([train_df, fake_df], axis=0)
```
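A toy sketch of this concatenation step is shown below, with a sanity check of the resulting class balance. The tiny DataFrames, the single feature V1 and the toy_ names are hypothetical stand-ins; the point is only that the synthetic rows need a Class value of 1 before they are combined with the real rows.

```python
import pandas as pd

# Toy stand-ins (assumption): 6 non-fraud rows and 2 real fraud rows
toy_train_df = pd.DataFrame({
    "V1": [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.9, 0.8],
    "Class": [0, 0, 0, 0, 0, 0, 1, 1],
})

# 4 synthetic fraud rows are needed to reach 6 vs 6
toy_fake_df = pd.DataFrame({"V1": [0.85, 0.95, 0.75, 0.88]})
toy_fake_df["Class"] = 1  # synthetic rows must carry the fraud label

toy_wdf = pd.concat([toy_train_df, toy_fake_df], axis=0, ignore_index=True)

# Both classes should now have the same number of rows, with no missing labels
counts = toy_wdf["Class"].value_counts()
print(counts)
```

If the synthetic rows had no Class column, the concatenation would fill their labels with NaN, so a quick value_counts() (or an isna() check) after the merge is a cheap safeguard.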

We will train the same ensemble method with the mixed balanced dataset to see if it will outperform the benchmark model.

Now, we need to split the mixed balanced dataset into the features and the label.

X_mixed = wdf[wdf.columns.drop("Class")]

y_mixed = wdf["Class"]
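As a quick sanity check on the step above, here is a minimal sketch of the same concatenate-and-split pattern on a toy frame. The tiny `train_df` and `fake_df` below are made-up stand-ins (the `V1` column and every value are hypothetical), not the data used in this article.

```python
import pandas as pd

# Hypothetical toy stand-ins for the article's train_df and fake_df.
train_df = pd.DataFrame({
    "V1": [0.1, 0.2, 0.3, 0.4, 0.5, 0.6],
    "Class": [0, 0, 0, 0, 1, 1],   # imbalanced: 4 non-fraud, 2 fraud
})
fake_df = pd.DataFrame({
    "V1": [0.55, 0.65],
    "Class": [1, 1],               # synthetic fraud rows only
})

# Stack the original and synthetic rows, as in the article.
wdf = pd.concat([train_df, fake_df], axis=0)

# Split into the features and the label.
X_mixed = wdf[wdf.columns.drop("Class")]
y_mixed = wdf["Class"]

# Confirm the classes are balanced before training.
class_counts = y_mixed.value_counts().to_dict()
print(class_counts)
```

Note that `pd.concat` keeps the original row labels, so the combined index contains duplicates; passing `ignore_index=True` is one way to avoid that if later steps rely on unique labels.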

Remember, when I ran the benchmark scenario earlier, I already defined the necessary custom function blocks to train and evaluate the ensemble classifier. I can use those custom functions here as well to train the same Ensemble algorithm with the combined balanced data.

We can pass the features and the label (X_mixed, y_mixed) into the custom Ensemble Classifier function ensemble_training().

meta_learner_GANs, train_f1_scores_GANs, val_f1_scores_GANs, base_models_GANs=ensemble_training(X_mixed, y_mixed)

Finally, we can evaluate the model with the test dataset.

ensemble_evaluations(meta_learner_GANs, X_mixed, y_mixed, X_test, y_test)

Here is the result.

Ensemble Model Metrics:

Training Precision: 1.0000, Recall: 0.9999, F1-score: 0.9999

Test Precision: 0.9714, Recall: 0.7158, F1-score: 0.8242

Finally, we can assess whether the data augmentation by GANs improved the performance of the classifier, as I expected.

Let’s compare the evaluation metrics between the benchmark scenario and GANs scenario.

Here is the result from the benchmark scenario.

# The Benchmark Scenario without data augmentation by GANs

Training Precision: 0.9811, Recall: 0.9603, F1-score: 0.9706

Test Precision: 0.9351, Recall: 0.7579, F1-score: 0.8372

Here is the result from GANs scenario.

Training Precision: 1.0000, Recall: 0.9999, F1-score: 0.9999

Test Precision: 0.9714, Recall: 0.7158, F1-score: 0.8242

When we review the evaluation results on the training dataset, the GANs scenario clearly outperformed the benchmark scenario across all three evaluation metrics.

Nevertheless, when we focus on the results on the out-of-sample test data, the GANs scenario outperformed the benchmark scenario only for precision (Benchmark: 0.9351 vs GANs Scenario: 0.9714); it failed to do so for recall and F1-score (Benchmark: 0.7579; 0.8372 vs GANs Scenario: 0.7158; 0.8242).
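To make the trade-off concrete, the F1-score is the harmonic mean of precision and recall, so a drop in recall can outweigh a gain in precision. The short sketch below recomputes F1 from the rounded test precision and recall reported above; the helper name `f1_from_precision_recall` is mine, not part of the article's code.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Test-set precision and recall as reported for each scenario.
benchmark_f1 = f1_from_precision_recall(0.9351, 0.7579)
gans_f1 = f1_from_precision_recall(0.9714, 0.7158)

print(f"Benchmark F1: {benchmark_f1:.4f}, GANs F1: {gans_f1:.4f}")
```

Rounded to four decimals, both values agree with the reported test F1-scores, which shows that the higher precision of the GANs scenario did not compensate for its lower recall.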

These two comparisons indicate that, while the data augmentation by GANs successfully simulated realistic fraud data within the training dataset, it failed to capture the diversity of the actual fraud cases in the out-of-sample test dataset.

The GAN was too good at simulating the particular probability distribution of the training data. Ironically, as a result, using GANs as the data augmentation tool led to overfitting to the training data and to poor generalization of the resulting fraud detection (classification) model.

Paradoxically, this particular example makes the counter-intuitive case that a more sophisticated algorithm does not necessarily guarantee better performance than simpler conventional algorithms.

In addition, we could also take into account another unintended consequence, a wasteful carbon footprint: adding energy-demanding algorithms to your model development could increase the carbon footprint of machine learning in our daily lives. This case illustrates how energy can be spent without delivering better performance.

Here I leave you some links regarding energy consumption of machine learning.

Today, we have many variants of GANs. In a future article, I would like to explore other variants of GANs to see if any of them can capture a wider diversity of the original samples and thereby improve the performance of a fraud detector.

Thanks for reading.

Michio Suginoo

Fraud Detection with Generative Adversarial Nets (GANs) was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


Weather prediction is a very complex problem to solve. Numerical Weather Prediction (NWP) models and Weather Research and Forecasting (WRF) models have been used to solve the problem; however, their accuracy and precision are sometimes found to be lacking.

Being the complex problem it is, it has attracted interest and the pursuit of solutions from data scientists, data science enthusiasts and meteorological engineers alike. Solutions have been found; however, consistency and uniformity have not. The solution varies from area to area, from mountain to plateau, from swamp to tundra. From my own experience, and I am sure from others’ experiences too, weather prediction has been a tough nut to crack. Quoting a certain shrimp billionaire:

It is like a box of chocolates, you never know what you’re gonna get.

Recently, Deepmind released a new tool: Graphcast, an AI model for faster and more accurate global weather forecasting, taking a shot at making this particular bag of chocolates tastier and more efficient. On a Google TPU v4 machine, using Graphcast, one can fetch predictions at a 0.25 degree spatial resolution in less than a minute. It solves a lot of issues one might face when predicting using conventional methods:

What isn’t so mind boggling is the data preparation required to fetch predictions using the aforementioned tool.

However, worry not, I shall be your knight in a dark and gloomy armor and explain, in this article, the steps required to prepare and format data and finally, fetch predictions using Graphcast.

Note: The usage of the word “AI” nowadays reminds me very much of how “quantum” is used in Marvel movies.

Getting the predictions is a process which can be divided into the below sections:

Graphcast states that using the current weather data and the data from 6 hours ago, one can make predictions 6 hours into the future. Taking an example to put it simply:

It is important to note that 2024–01–01 18:00 will be the first prediction fetched. Graphcast can additionally fetch data for 10 days, with a 6 hour gap between each prediction. So, the other timestamps for which predictions can be fetched are:

To summarize, data for 40 timestamps can be predicted using the input of two timestamps.
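The timeline described above can be sketched with the standard library alone. This is only an illustration of the 6-hour spacing, assuming the first prediction at 2024-01-01 18:00 used throughout this article; the variable names are mine.

```python
import datetime

gap_hours = 6  # Hours between consecutive Graphcast timestamps.
first_prediction = datetime.datetime(2024, 1, 1, 18, 0)

# The two input timestamps: 12 hours and 6 hours before the first prediction.
input_times = [
    first_prediction - datetime.timedelta(hours=gap_hours * k) for k in (2, 1)
]

# 40 prediction timestamps, i.e. 10 days at 4 predictions per day.
prediction_times = [
    first_prediction + datetime.timedelta(hours=gap_hours * step)
    for step in range(40)
]

print(input_times[0], input_times[1])
print(prediction_times[0], prediction_times[-1])
```

The last of the 40 timestamps falls on 2024-01-11 12:00, i.e. 234 hours after the first prediction.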

For the code I will present in this article, I have assigned the following values to certain parameters that dictate how fast you can get the predictions and the memory used.

Below is the code for importing the required packages, initializing arrays for fields required for input and prediction purposes and other variables that will come in handy.

import cdsapi

import datetime

import functools

from graphcast import autoregressive, casting, checkpoint, data_utils as du, graphcast, normalization, rollout

import haiku as hk

import isodate

import jax

import math

import numpy as np

import pandas as pd

from pysolar.radiation import get_radiation_direct

from pysolar.solar import get_altitude

import pytz

import scipy

from typing import Dict

import xarray

client = cdsapi.Client() # Making a connection to CDS, to fetch data.

# The fields to be fetched from the single-level source.

singlelevelfields = [

'10m_u_component_of_wind',

'10m_v_component_of_wind',

'2m_temperature',

'geopotential',

'land_sea_mask',

'mean_sea_level_pressure',

'toa_incident_solar_radiation',

'total_precipitation'

]

# The fields to be fetched from the pressure-level source.

pressurelevelfields = [

'u_component_of_wind',

'v_component_of_wind',

'geopotential',

'specific_humidity',

'temperature',

'vertical_velocity'

]

# The 13 pressure levels.

pressure_levels = [50, 100, 150, 200, 250, 300, 400, 500, 600, 700, 850, 925, 1000]

# Initializing other required constants.

pi = math.pi

gap = 6 # There is a gap of 6 hours between each graphcast prediction.

predictions_steps = 4 # Predicting for 4 timestamps.

watts_to_joules = 3600

first_prediction = datetime.datetime(2024, 1, 1, 18, 0) # Timestamp of the first prediction.

lat_range = range(-90, 91, 1) # Latitude range (valid latitudes span -90 to 90 degrees).

lon_range = range(0, 360, 1) # Longitude range.

# A utility function used for ease of coding.

# Converting the variable to a datetime object.

def toDatetime(dt) -> datetime.datetime:

if isinstance(dt, datetime.date) and isinstance(dt, datetime.datetime):

return dt

elif isinstance(dt, datetime.date) and not isinstance(dt, datetime.datetime):

return datetime.datetime.combine(dt, datetime.datetime.min.time())

elif isinstance(dt, str):

if 'T' in dt:

return isodate.parse_datetime(dt)

else:

return datetime.datetime.combine(isodate.parse_date(dt), datetime.datetime.min.time())

When it comes to machine learning, in order to get some predictions, you have to give the ML model some data using which it spits out a prediction. For example, when predicting whether a person is Batman, the input data might be:

Similarly, Graphcast too takes certain inputs, which we obtain from CDS, using its python library: cdsapi. Currently, the data publisher uses the Creative Commons Attribution 4.0 License, which means that anyone can copy, distribute, transmit, and adapt the work as long as the original author is given credit.

However, authentication is required before making requests to fetch data using cdsapi; the instructions for this are provided by CDS and are pretty straightforward.
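To my knowledge, cdsapi reads its credentials from a `.cdsapirc` file in the home directory that holds a `url:` line and a `key:` line. The sketch below only checks that the text of such a file contains both entries. The helper names and the sample values are placeholders I made up, so follow the CDS instructions for the real endpoint and key.

```python
def parse_cdsapirc(text: str) -> dict:
    """Parse 'name: value' lines, skipping blanks and comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or ":" not in line:
            continue
        # Split on the first colon only, since URLs contain colons too.
        name, value = line.split(":", 1)
        settings[name.strip()] = value.strip()
    return settings

def has_credentials(settings: dict) -> bool:
    """True when both a url and a key are present and non-empty."""
    return bool(settings.get("url")) and bool(settings.get("key"))

# Placeholder content, not a real endpoint or key.
sample = "url: https://example.invalid/api\nkey: PLACEHOLDER-KEY\n"
settings = parse_cdsapirc(sample)
print(has_credentials(settings))
```

Running a check like this before calling `cdsapi.Client()` gives a clearer error than a failed request when the file is missing an entry.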

Assuming you are now CDS-approved, inputs can be created, which involves the following steps:

Other small steps include:

The code for creating the input data is as follows.

# Getting the single and pressure level values.

def getSingleAndPressureValues():

client.retrieve(

'reanalysis-era5-single-levels',

{

'product_type': 'reanalysis',

'variable': singlelevelfields,

'grid': '1.0/1.0',

'year': [2024],

'month': [1],

'day': [1],

'time': ['00:00', '01:00', '02:00', '03:00', '04:00', '05:00', '06:00', '07:00', '08:00', '09:00', '10:00', '11:00', '12:00'],

'format': 'netcdf'

},

'single-level.nc'

)

singlelevel = xarray.open_dataset('single-level.nc', engine = scipy.__name__).to_dataframe()

singlelevel = singlelevel.rename(columns = {col:singlelevelfields[ind] for ind, col in enumerate(singlelevel.columns.values.tolist())})

singlelevel = singlelevel.rename(columns = {'geopotential': 'geopotential_at_surface'})

# Calculating the sum of the last 6 hours of rainfall.

singlelevel = singlelevel.sort_index()

singlelevel['total_precipitation_6hr'] = singlelevel.groupby(level=[0, 1])['total_precipitation'].rolling(window = 6, min_periods = 1).sum().reset_index(level=[0, 1], drop=True)

singlelevel.pop('total_precipitation')

client.retrieve(

'reanalysis-era5-pressure-levels',

{

'product_type': 'reanalysis',

'variable': pressurelevelfields,

'grid': '1.0/1.0',

'year': [2024],

'month': [1],

'day': [1],

'time': ['06:00', '12:00'],

'pressure_level': pressure_levels,

'format': 'netcdf'

},

'pressure-level.nc'

)

pressurelevel = xarray.open_dataset('pressure-level.nc', engine = scipy.__name__).to_dataframe()

pressurelevel = pressurelevel.rename(columns = {col:pressurelevelfields[ind] for ind, col in enumerate(pressurelevel.columns.values.tolist())})

return singlelevel, pressurelevel

# Adding sin and cos of the year progress.

def addYearProgress(secs, data):

progress = du.get_year_progress(secs)

data['year_progress_sin'] = math.sin(2 * pi * progress)

data['year_progress_cos'] = math.cos(2 * pi * progress)

return data

# Adding sin and cos of the day progress.

def addDayProgress(secs, lon:str, data:pd.DataFrame):

lons = data.index.get_level_values(lon).unique()

progress:np.ndarray = du.get_day_progress(secs, np.array(lons))

prxlon = {lon:prog for lon, prog in list(zip(list(lons), progress.tolist()))}

data['day_progress_sin'] = data.index.get_level_values(lon).map(lambda x: math.sin(2 * pi * prxlon[x]))

data['day_progress_cos'] = data.index.get_level_values(lon).map(lambda x: math.cos(2 * pi * prxlon[x]))

return data

# Adding day and year progress.

def integrateProgress(data:pd.DataFrame):

for dt in data.index.get_level_values('time').unique():

seconds_since_epoch = toDatetime(dt).timestamp()

data = addYearProgress(seconds_since_epoch, data)

data = addDayProgress(seconds_since_epoch, 'longitude' if 'longitude' in data.index.names else 'lon', data)

return data

# Adding batch field and renaming some others.

def formatData(data:pd.DataFrame) -> pd.DataFrame:

data = data.rename_axis(index = {'latitude': 'lat', 'longitude': 'lon'})

if 'batch' not in data.index.names:

data['batch'] = 0

data = data.set_index('batch', append = True)

return data

if __name__ == '__main__':

values:Dict[str, xarray.Dataset] = {}

single, pressure = getSingleAndPressureValues()

values['inputs'] = pd.merge(pressure, single, left_index = True, right_index = True, how = 'inner')

values['inputs'] = integrateProgress(values['inputs'])

values['inputs'] = formatData(values['inputs'])
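The 6-hour precipitation total computed in the code above relies on a rolling sum. Here is a minimal sketch of that one step for a single grid point, using made-up hourly values (hour `h` simply gets `h` units of rain); the real code applies the same rolling window per latitude/longitude group.

```python
import datetime
import pandas as pd

# Hypothetical hourly precipitation for one grid point, 00:00 to 12:00.
times = [datetime.datetime(2024, 1, 1, hour) for hour in range(13)]
hourly = pd.Series(
    [float(hour) for hour in range(13)], index=times, name="total_precipitation"
)

# Sum of the current hour and the 5 hours before it.
# min_periods=1 lets early rows use whatever history is available.
six_hour = hourly.rolling(window=6, min_periods=1).sum()

at_02 = six_hour.loc[datetime.datetime(2024, 1, 1, 2)]   # Only 3 hours available.
at_06 = six_hour.loc[datetime.datetime(2024, 1, 1, 6)]   # Hours 01:00 to 06:00.
at_12 = six_hour.loc[datetime.datetime(2024, 1, 1, 12)]  # Hours 07:00 to 12:00.
print(at_02, at_06, at_12)
```

This also shows why the single-level request fetches every hour from 00:00 to 12:00 while the pressure-level request only needs 06:00 and 12:00: the accumulation at each input timestamp needs the preceding hourly values.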

There are 11 prediction fields:

The targets one passes are essentially an empty xarray for all the prediction fields at:

The code to do so is shared below.

# Includes the packages imported and constants assigned.

# The functions created for the inputs also go here.

predictionFields = [

'u_component_of_wind',

'v_component_of_wind',

'geopotential',

'specific_humidity',

'temperature',

'vertical_velocity',

'10m_u_component_of_wind',

'10m_v_component_of_wind',

'2m_temperature',

'mean_sea_level_pressure',

'total_precipitation_6hr'

]

# Creating an array full of nan values.

def nans(*args) -> np.ndarray:

return np.full((args), np.nan)

# Adding or subtracting time.

def deltaTime(dt, **delta) -> datetime.datetime:

return dt + datetime.timedelta(**delta)

def getTargets(dt, data:pd.DataFrame):

# Creating an array consisting of unique values of each index.

lat, lon, levels, batch = sorted(data.index.get_level_values('lat').unique().tolist()), sorted(data.index.get_level_values('lon').unique().tolist()), sorted(data.index.get_level_values('level').unique().tolist()), data.index.get_level_values('batch').unique().tolist()

time = [deltaTime(dt, hours = step * gap) for step in range(predictions_steps)]

# Creating an empty dataset using latitude, longitude, the pressure levels and each prediction timestamp.

target = xarray.Dataset({field: (['lat', 'lon', 'level', 'time'], nans(len(lat), len(lon), len(levels), len(time))) for field in predictionFields}, coords = {'lat': lat, 'lon': lon, 'level': levels, 'time': time, 'batch': batch})

return target.to_dataframe()

if __name__ == '__main__':

# The code for creating inputs will be here.

values['targets'] = getTargets(first_prediction, values['inputs'])

As was the case with the targets, the forcings also contain values for every coordinate and prediction timestamp, but not for the pressure levels. The fields in forcings are:

It is important to note that the above values are assigned with respect to the prediction timestamp. As was the case when processing the inputs, year progress depends only on the timestamp, day progress depends on the timestamp and the longitude, and the solar radiation was fetched from the single-level source. However, since one is making predictions, i.e., getting values for the future, the solar radiation values for the forcings will not be available in the CDS dataset. For this, we simulate the solar radiation values using the pysolar library.

# Includes the packages imported and constants assigned.

# The functions created for the inputs and targets also go here.

# Adding a timezone to datetime.datetime variables.

def addTimezone(dt, tz = pytz.UTC) -> datetime.datetime:

dt = toDatetime(dt)

if dt.tzinfo is None:

return pytz.UTC.localize(dt).astimezone(tz)

else:

return dt.astimezone(tz)

# Getting the solar radiation value wrt longitude, latitude and timestamp.

def getSolarRadiation(longitude, latitude, dt):

altitude_degrees = get_altitude(latitude, longitude, addTimezone(dt))

solar_radiation = get_radiation_direct(dt, altitude_degrees) if altitude_degrees > 0 else 0

return solar_radiation * watts_to_joules

# Calculating the solar radiation values for timestamps to be predicted.

def integrateSolarRadiation(data:pd.DataFrame):

dates = list(data.index.get_level_values('time').unique())

coords = [[lat, lon] for lat in lat_range for lon in lon_range]

values = []

# For each data, getting the solar radiation value at a particular coordinate.

for dt in dates:

values.extend(list(map(lambda coord:{'time': dt, 'lon': coord[1], 'lat': coord[0], 'toa_incident_solar_radiation': getSolarRadiation(coord[1], coord[0], dt)}, coords)))

# Setting indices.

values = pd.DataFrame(values).set_index(keys = ['lat', 'lon', 'time'])

# The forcings dataset will now contain the solar radiation values.

return pd.merge(data, values, left_index = True, right_index = True, how = 'inner')

def getForcings(data:pd.DataFrame):

# Since forcings data does not contain level as an index, it is dropped.

# So are all the columns, since forcings data only has 5, which will be created.

forcingdf = data.reset_index(level = 'level', drop = True).drop(labels = predictionFields, axis = 1)

# Keeping only the unique indices.

forcingdf = pd.DataFrame(index = forcingdf.index.drop_duplicates(keep = 'first'))

# Adding the sin and cos of day and year progress.

# Functions are included in the creation of inputs data section.

forcingdf = integrateProgress(forcingdf)

# Integrating the solar radiation values.

forcingdf = integrateSolarRadiation(forcingdf)

return forcingdf

if __name__ == '__main__':

# The code for creating inputs and targets will be here.

values['forcings'] = getForcings(values['targets'])
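For intuition about the day-progress features used in the inputs and forcings, here is a small standalone sketch. It reflects my understanding of how such a feature is usually built (fraction of the day elapsed at Greenwich, shifted by longitude) and is not a copy of graphcast’s `data_utils`; the function names are hypothetical and the library remains the authority.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60

def day_progress(seconds_since_epoch: float, longitude_deg: float) -> float:
    """Fraction of the local solar day elapsed, in [0, 1)."""
    greenwich = (seconds_since_epoch % SECONDS_PER_DAY) / SECONDS_PER_DAY
    # 360 degrees of longitude correspond to one full day.
    return (greenwich + longitude_deg / 360.0) % 1.0

def progress_features(progress: float) -> tuple:
    """Encode a cyclic progress value as (sin, cos) so 0 and 1 coincide."""
    angle = 2 * math.pi * progress
    return math.sin(angle), math.cos(angle)

noon_greenwich = day_progress(12 * 3600, 0.0)       # Half the day has passed.
midnight_utc_at_180 = day_progress(0.0, 180.0)      # Same local phase as above.
print(noon_greenwich, midnight_utc_at_180, progress_features(0.25))
```

Encoding the progress as a sine/cosine pair keeps 23:59 and 00:00 close together, which a raw fraction would not.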

Now that the three pillars of Graphcast are created, we enter the home stretch. As in an NBA final, having won 3 games, we now proceed to the nittiest, grittiest part to get it done.

Like Kobe Bryant once said,

Job’s not over yet.

When it comes to an xarray, there are two main types of data:

Every value that a data variable contains has certain coordinates assigned to it. The coordinates are those on which the value of the data variable depends. Taking an example out of our own data,

Hence, before we proceed to predicting the weather, we make sure each data variable is assigned to its right coordinates, the code for which is presented below.

# Includes the packages imported and constants assigned.

# The functions created for the inputs, targets and forcings also go here.

# A dictionary created, containing each coordinate a data variable requires.

class AssignCoordinates:

coordinates = {

'2m_temperature': ['batch', 'lon', 'lat', 'time'],

'mean_sea_level_pressure': ['batch', 'lon', 'lat', 'time'],

'10m_v_component_of_wind': ['batch', 'lon', 'lat', 'time'],

'10m_u_component_of_wind': ['batch', 'lon', 'lat', 'time'],

'total_precipitation_6hr': ['batch', 'lon', 'lat', 'time'],

'temperature': ['batch', 'lon', 'lat', 'level', 'time'],

'geopotential': ['batch', 'lon', 'lat', 'level', 'time'],

'u_component_of_wind': ['batch', 'lon', 'lat', 'level', 'time'],

'v_component_of_wind': ['batch', 'lon', 'lat', 'level', 'time'],

'vertical_velocity': ['batch', 'lon', 'lat', 'level', 'time'],

'specific_humidity': ['batch', 'lon', 'lat', 'level', 'time'],

'toa_incident_solar_radiation': ['batch', 'lon', 'lat', 'time'],

'year_progress_cos': ['batch', 'time'],

'year_progress_sin': ['batch', 'time'],

'day_progress_cos': ['batch', 'lon', 'time'],

'day_progress_sin': ['batch', 'lon', 'time'],

'geopotential_at_surface': ['lon', 'lat'],

'land_sea_mask': ['lon', 'lat'],

}

def modifyCoordinates(data:xarray.Dataset):

# Parsing through each data variable and removing unneeded indices.

for var in list(data.data_vars):

varArray:xarray.DataArray = data[var]

nonIndices = list(set(list(varArray.coords)).difference(set(AssignCoordinates.coordinates[var])))

data[var] = varArray.isel(**{coord: 0 for coord in nonIndices})

data = data.drop_vars('batch')

return data

def makeXarray(data:pd.DataFrame) -> xarray.Dataset:

# Converting to xarray.

data = data.to_xarray()

data = modifyCoordinates(data)

return data

if __name__ == '__main__':

# The code for creating inputs, targets and forcings will be here.

values = {value:makeXarray(values[value]) for value in values}
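The idea behind `modifyCoordinates` can be seen on a toy dataset. In the sketch below (all values hypothetical), converting a DataFrame to xarray gives every variable every index level as a dimension, so a static field such as `land_sea_mask` has to be reduced to the coordinates it really depends on. I pass `drop=True` to `isel` here so the leftover scalar coordinate is discarded immediately, which is a small variation on the article’s code.

```python
import numpy as np
import pandas as pd
import xarray

# Toy grid: 2 latitudes x 2 longitudes x 2 timestamps (hour offsets 0 and 6).
index = pd.MultiIndex.from_product(
    [[0.0, 1.0], [10.0, 11.0], [0, 6]], names=["lat", "lon", "time"]
)
frame = pd.DataFrame(
    {
        "2m_temperature": np.arange(8.0),                      # Varies with time.
        "land_sea_mask": np.repeat([1.0, 0.0, 1.0, 0.0], 2),   # Constant over time.
    },
    index=index,
)

dataset = frame.to_xarray()
dims_before = dataset["land_sea_mask"].dims

# Keep one time slice and drop the time coordinate for the static field.
dataset["land_sea_mask"] = dataset["land_sea_mask"].isel(time=0, drop=True)
dims_after = dataset["land_sea_mask"].dims

print(dims_before, dims_after, dataset["2m_temperature"].dims)
```

Only the static field loses its `time` dimension; the time-dependent variable is left untouched.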

Having calculated, processed and assembled the inputs, targets and forcings, it is now time to make predictions.

We now require the model weights and normalization statistics files, which are provided by Deepmind.

The files to be downloaded are:

The relative paths of the aforementioned files with respect to the prediction file are depicted below. It is important to maintain the structure so that the required files can be imported and read successfully.

.
├── prediction.py
└── model
    ├── params
    │   └── GraphCast_small - ERA5 1979-2015 - resolution 1.0 - pressure levels 13 - mesh 2to5 - precipitation input and output.npz
    └── stats
        ├── diffs_stddev_by_level.nc
        ├── mean_by_level.nc
        └── stddev_by_level.nc

With the prediction code being provided by Deepmind, all the above functions culminate with the predictions being made using the snippet below.

# Includes the packages imported and constants assigned.

# The functions created for the inputs, targets and forcings also go here.

with open(r'model/params/GraphCast_small - ERA5 1979-2015 - resolution 1.0 - pressure levels 13 - mesh 2to5 - precipitation input and output.npz', 'rb') as model:

ckpt = checkpoint.load(model, graphcast.CheckPoint)

params = ckpt.params

state = {}

model_config = ckpt.model_config

task_config = ckpt.task_config

with open(r'model/stats/diffs_stddev_by_level.nc', 'rb') as f:

diffs_stddev_by_level = xarray.load_dataset(f).compute()

with open(r'model/stats/mean_by_level.nc', 'rb') as f:

mean_by_level = xarray.load_dataset(f).compute()

with open(r'model/stats/stddev_by_level.nc', 'rb') as f:

stddev_by_level = xarray.load_dataset(f).compute()

def construct_wrapped_graphcast(model_config:graphcast.ModelConfig, task_config:graphcast.TaskConfig):

predictor = graphcast.GraphCast(model_config, task_config)

predictor = casting.Bfloat16Cast(predictor)

predictor = normalization.InputsAndResiduals(predictor, diffs_stddev_by_level = diffs_stddev_by_level, mean_by_level = mean_by_level, stddev_by_level = stddev_by_level)

predictor = autoregressive.Predictor(predictor, gradient_checkpointing = True)

return predictor

@hk.transform_with_state

def run_forward(model_config, task_config, inputs, targets_template, forcings):

predictor = construct_wrapped_graphcast(model_config, task_config)

return predictor(inputs, targets_template = targets_template, forcings = forcings)

def with_configs(fn):

return functools.partial(fn, model_config = model_config, task_config = task_config)

def with_params(fn):

return functools.partial(fn, params = params, state = state)

def drop_state(fn):

return lambda **kw: fn(**kw)[0]

run_forward_jitted = drop_state(with_params(jax.jit(with_configs(run_forward.apply))))

class Predictor:

@classmethod

def predict(cls, inputs, targets, forcings) -> xarray.Dataset:

predictions = rollout.chunked_prediction(run_forward_jitted, rng = jax.random.PRNGKey(0), inputs = inputs, targets_template = targets, forcings = forcings)

return predictions

if __name__ == '__main__':

# The code for creating inputs, targets, forcings & processing will be here.

predictions = Predictor.predict(values['inputs'], values['targets'], values['forcings'])

predictions.to_dataframe().to_csv('predictions.csv', sep = ',')

Above, I have provided the code for each process that will be undertaken:

While executing, it is important to bring all the processes together for a seamless implementation.

For simplicity, I have uploaded the code along with the docker image and container files, which can be used to create an environment to execute the prediction program.

In the universe of weather prediction, we currently have contributors like Accuweather, IBM and multiple Meteomatics models. Graphcast proves to be an interesting and, in many cases, more efficient addition to this collection. However, it also has some attributes that are far from optimal. In a rare moment of thought, I came up with the following insights:

It is important to note that Graphcast is a fairly new addition to the weather prediction scene; changes and additions will definitely be made to improve its ease of access and usability. Given the lead they have in efficiency and performance, they are sure to capitalize on it.

Resources:

Best of luck on your journey in data science and thank you for reading 🙂

Graphcast: How to Get Things Done was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


Did you know that for most common types of things, you don’t necessarily need data anymore to train object detection or even instance segmentation models?

Let’s get real on a given example. Let’s assume you have been given the task to build an instance segmentation model for the following classes:

Arguably, data would be easy to find for such classes: plenty of images of those animals are available on the internet. But if we need to build a commercially viable product for instance segmentation, we still need two things:

Both of these tasks can be very time consuming and/or cost some significant amount of money.

Let’s explore another path: the use of free, available models. To do so, we’ll use a 2-step process to generate both the data and the associated labels:

Note that, at the date of publication of this article, the legal status of images generated with Stable Diffusion is something of a grey area; they can currently be used commercially, but regulation may change in the future.

All the code used in this post is available in this repository.

I generated the data with Stable Diffusion. Before actually generating the data, let’s quickly cover some information about Stable Diffusion and how to use it.

For that, I used the following repository: https://github.com/AUTOMATIC1111/stable-diffusion-webui

It is very complete and frequently updated, and it supports many tools and plugins. It is very easy to install on any distribution by following the instructions in the readme. You can also find some very useful tutorials on how to use Stable Diffusion effectively:

Without going into the details of how the stable diffusion model is trained and works (there are plenty of good resources for that), it’s good to know that actually there is more than one model.

There are several “official” versions of the model released by Stability AI, such as Stable Diffusion 1.5, 2.1 or XL. These official models can be easily downloaded on the HuggingFace of Stability AI.

But since Stable Diffusion is open source, anyone can train their own model. There is a huge number of available models on the website Civitai, sometimes trained for specific purposes, such as fantasy images, punk images or realistic images.

For our needs, I will use two models, including one specifically trained for realistic image generation, since I want to generate realistic images of animals.

The models and hyperparameters used are the following:

To automate image generation with different settings, I used a script feature called X/Y/Z plot, with prompt S/R on each axis.

“Prompt S/R” means search and replace: it searches for a string in the original prompt and replaces it with other strings. Using X/Y/Z plot with prompt S/R on each axis generates images for every combination of the given values (just like a hyperparameter grid search).

Here are the parameters I used on each axis:

Using this, I can easily generate in one go images of the following prompt “a realistic picture of a <animal> <action> <location>” with all the values proposed in the parameters.

All in all, it would generate images for 4 animals, 5 actions and 6 locations: so 120 possibilities. Adding to that, I used a batch count of 2 and 2 different models, increasing the generated images to 480 to create my dataset (120 for each animal class). Below are some examples of the generated images.
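The grid of prompts and the image count above can be reproduced with a few lines of Python. The four animal classes are the ones used in this article; the action and location strings below are hypothetical placeholders, since only their counts (5 and 6) matter here.

```python
import itertools

animals = ["horse", "lion", "tiger", "zebra"]
actions = [f"action_{i}" for i in range(1, 6)]       # Hypothetical placeholders.
locations = [f"location_{i}" for i in range(1, 7)]   # Hypothetical placeholders.

template = "a realistic picture of a {animal} {action} {location}"

# Every combination of the three axes, like a hyperparameter grid search.
prompts = [
    template.format(animal=animal, action=action, location=location)
    for animal, action, location in itertools.product(animals, actions, locations)
]

batch_count = 2   # Images generated per prompt and model.
model_count = 2   # Number of Stable Diffusion models used.
total_images = len(prompts) * batch_count * model_count
images_per_animal = total_images // len(animals)

print(len(prompts), total_images, images_per_animal)
```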

As we can see, most of the pictures are realistic enough. We will now get the instance masks, so that we can then train a segmentation model.

To get the labels, we will use the SAM model to generate masks, and we will then manually filter out masks that are not good enough, as well as unrealistic images (often called hallucinations).

To generate the raw masks, let’s use the SAM model. SAM requires input prompts (not textual prompts): either a bounding box or a few point locations. The model then generates the mask from this input prompt.

In our case, we will use the simplest input prompt: the center point. Indeed, in most images generated by Stable Diffusion, the main object is centered, allowing us to use SAM efficiently with the same input prompt every time and absolutely no labeling. To do so, we use the following function:

This function will first instantiate a SAM predictor, given a model type and a checkpoint (to download here). It will then loop over the images in the input folder and do the following:

A few things to note:

Here are a few examples of images with their masks:

As we can see, once selected, the masks are quite accurate and it took virtually no time to label.
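The center-point prompt described above can be sketched in isolation. The helper below is hypothetical and only builds the prompt data. As far as I know, SAM’s predictor expects point coordinates in (x, y) pixel order with a label of 1 for foreground points, and these lists would need converting to NumPy arrays before being passed to it.

```python
def center_point_prompt(width: int, height: int):
    """Return a single foreground point at the image center.

    point_coords is a list of (x, y) pixel positions and
    point_labels marks each point as foreground (1) or background (0).
    """
    point_coords = [[width // 2, height // 2]]
    point_labels = [1]
    return point_coords, point_labels

# Example with a hypothetical 512 x 768 (width x height) generated image.
coords, labels = center_point_prompt(512, 768)
print(coords, labels)
```

Because the prompt depends only on the image size, the same call works for every generated image, which is what removes the need for manual labeling.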

Not all the masks were correctly computed in the previous subsection. Indeed, sometimes the object was not centered, thus the mask prediction was off. Sometimes, for some reason, the mask is just wrong and would need more input prompts to make it work.

One quick workaround is to either select the better of the 2 computed masks, or remove the image from the dataset if neither mask is good enough. Let’s do that with the following code:

This code loops over all the generated images with Stable Diffusion and does the following for each image:

The expected keyboard events are the following:

Running this script may take some time, since you have to go through all the images. Assuming 1 second per image, it would take about 10 minutes for 600 images. This is still much faster than actually labeling images with masks, which usually takes at least 30 seconds per mask for high-quality masks. Moreover, it allows you to effectively filter out any unrealistic images.

Running this script on the 480 generated images took me less than 5 minutes. I selected the masks and filtered out unrealistic images, ending up with 412 masks. The next step is to train the model.

Before training the YOLO segmentation model, we need to create the dataset properly. Let’s go through these steps.

This code loops through all the images and does the following:

One tricky part in this code is the mask-to-polygon conversion, done by the mask2yolo function. It uses the shapely and rasterio libraries to do this conversion efficiently. Of course, you can find the fully working code in the repository.
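Once a mask has been turned into a polygon, the remaining step is writing it in the YOLO segmentation label format: one line per object, holding the class index followed by the polygon’s x and y coordinates normalized by the image width and height. The sketch below covers only that last step; `polygon_to_yolo_line` is a hypothetical helper, not the repository’s mask2yolo function.

```python
def polygon_to_yolo_line(class_id, polygon, width, height):
    """Format a pixel-space polygon as one YOLO segmentation label line."""
    coords = []
    for x, y in polygon:
        # Normalize to [0, 1] so labels are independent of image size.
        coords.append(f"{x / width:.6f}")
        coords.append(f"{y / height:.6f}")
    return " ".join([str(class_id)] + coords)

# Hypothetical triangle in a 640 x 480 image, labeled as class index 2.
line = polygon_to_yolo_line(2, [(0, 0), (320, 0), (320, 240)], 640, 480)
print(line)
```

Each image then gets a text file with one such line per instance.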

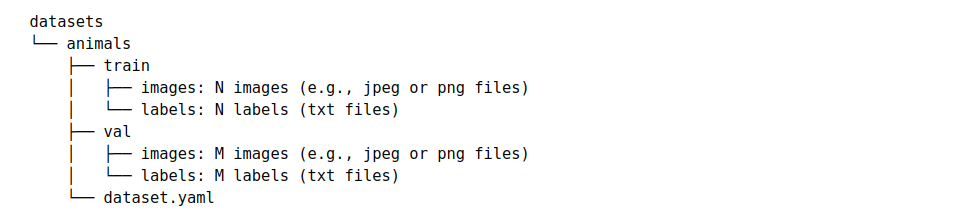

In the end, you would end up with the following structure in your datasets folder:

This is the expected structure to train a model using the YOLOv8 library: it’s finally time to train the model!

We can now train the model. Let’s use a YOLOv8 nano segmentation model. Training a model is just two lines of code with the Ultralytics library, as we can see in the following gist:

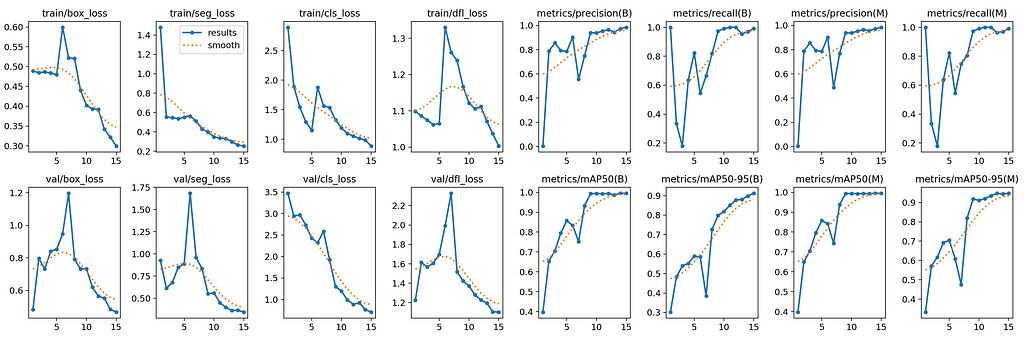

After 15 epochs of training on the previously prepared dataset, the results are the following:

As we can see, the metrics are quite high, with a mAP50–95 close to 1, suggesting good performance. Of course, since the dataset diversity is quite limited, this good performance is most likely caused, to some extent, by overfitting.

For a more realistic evaluation, next we’ll evaluate the model on a few real images.

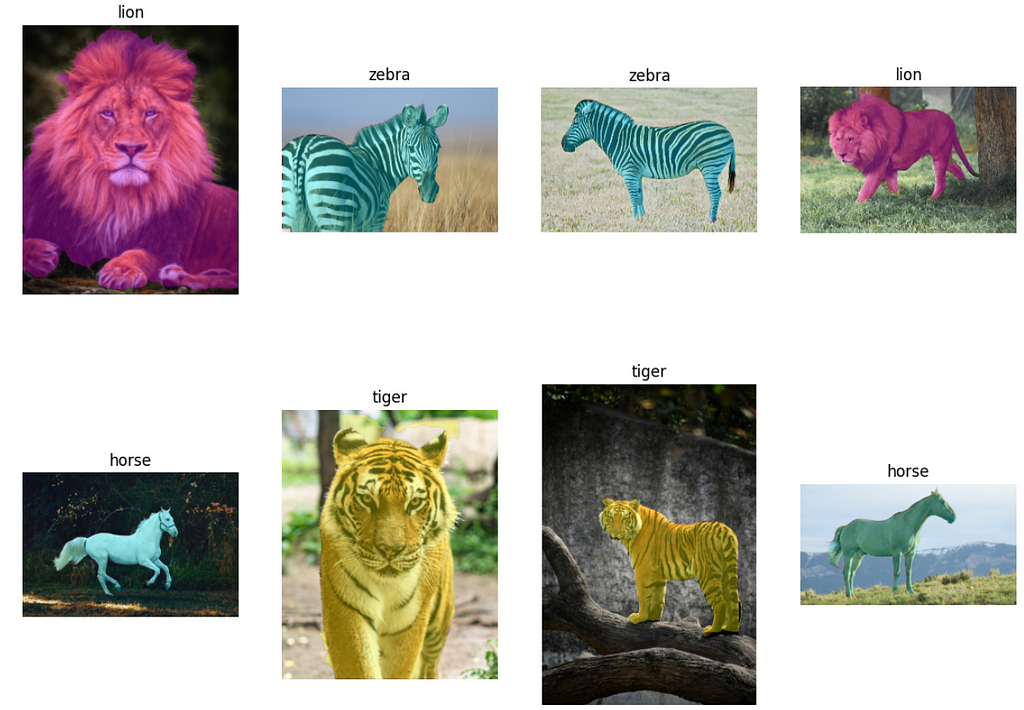

From Unsplash, I got a few images from each class and tested the model on this data. The results are right below:

On these 8 real images, the model performed quite well: the animal class is successfully predicted, and the masks seem quite accurate. Of course, to evaluate this model properly, we would need a properly labeled dataset with images and segmentation masks for each class.

With absolutely no images and no labels, we could train a segmentation model for 4 classes: horse, lion, tiger and zebra. To do so, we leveraged three amazing tools:

While we couldn’t properly evaluate the trained model because we lack a labeled test dataset, it seems promising on a few images. Do not take this post as a self-sufficient way to train any instance segmentation model, but rather as a method to speed up your next projects and boost their performance. From my own experience, the use of synthetic data and tools like SAM can greatly improve your productivity in building production-grade computer vision models.

Of course, all the code to do this on your own is fully available in this repository, and will hopefully help you in your next computer vision project!

How to Train an Instance Segmentation Model with No Training Data was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


How to fine-tune a GPT model to generate a TED description-like text

Originally appeared here:

Text Generation with GPT

A step-by-step guide on how to build one in five easy steps, with code already written for you.

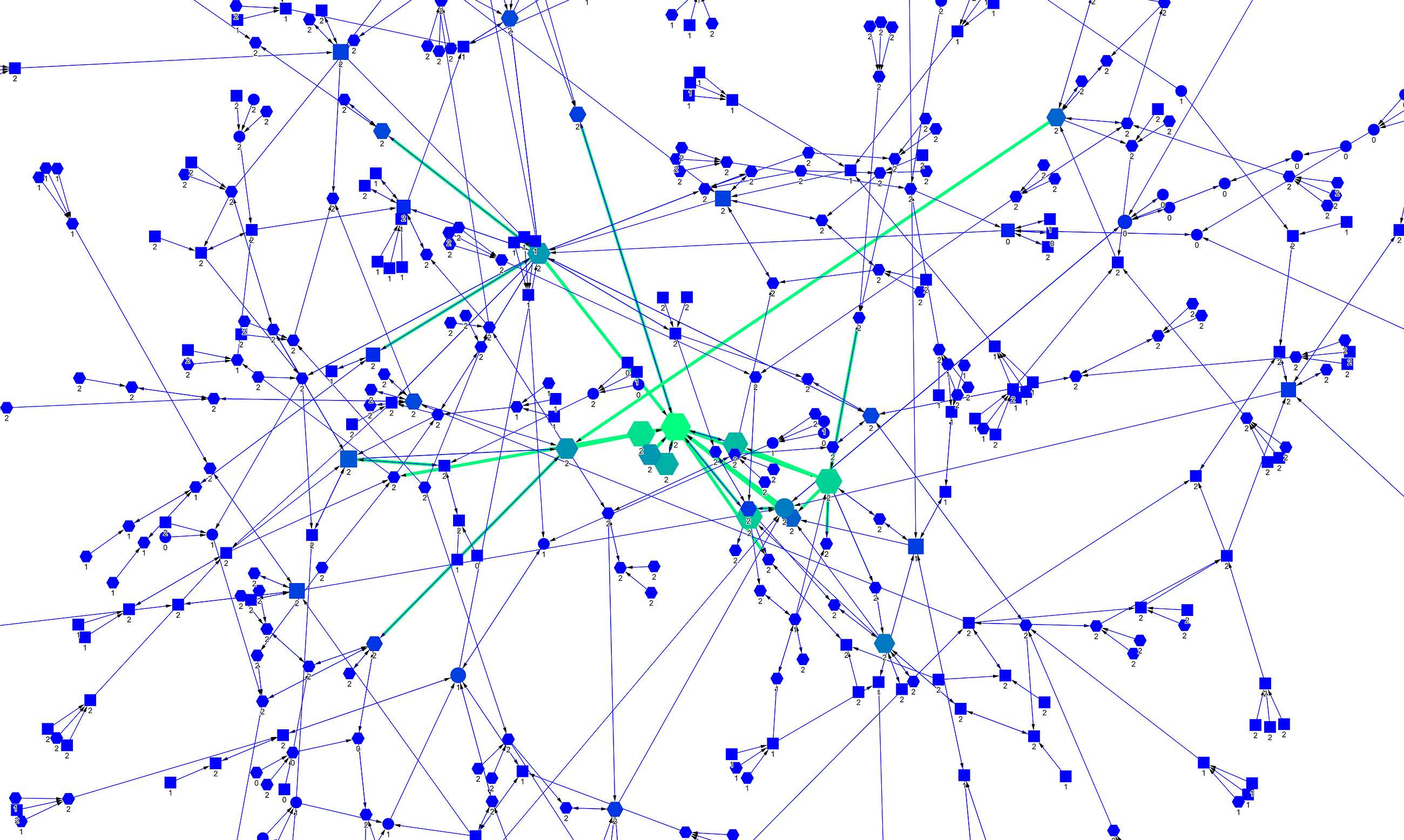

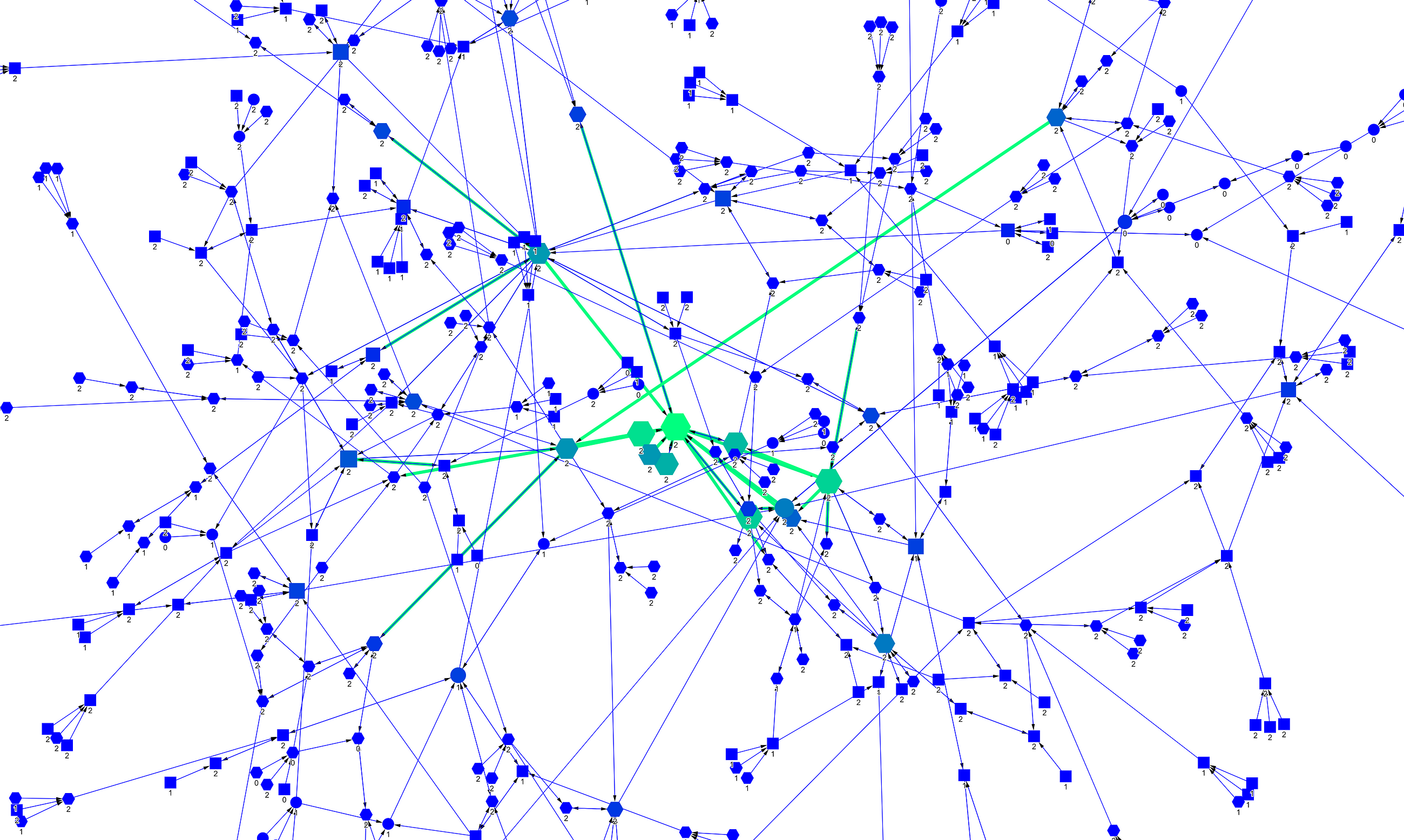

Originally appeared here:

An Interactive Visualisation for your Graph Neural Network Explanations
