Originally appeared here:

Exclusive: Seagate explains why it didn’t sell a 60TB SSD in 2016 — and when it plans to finally release a 60TB HDD to the world

Tag: technews

-

Exclusive: Seagate explains why it didn’t sell a 60TB SSD in 2016 — and when it plans to finally release a 60TB HDD to the world

You see, it’s all about priorities and expectations. -

“Perfect” memory that could one day replace three types of storage gets very early prototype — SOT-MRAM is cache, system memory and storage rolled into one

Newly developed SOT-MRAM memory combines high-speed performance with low power consumption. -

A Weekend AI Project: Using Speech Recognition, PTT, and a Large Action Model on a Raspberry Pi

Making “à la Rabbit prototype” with Python, Push-to-Talk, Local, and Cloud LLMs

-

Faster DataFrame Serialization

Read and write dataframes up to ten times faster than Parquet with StaticFrame NPZ

The Apache Parquet format provides an efficient binary representation of columnar table data and is widely used in Apache Hadoop and Spark, AWS Athena and Glue, and Pandas DataFrame serialization. While Parquet offers broad interoperability with performance superior to text formats (such as CSV or JSON), it is as much as ten times slower than NPZ, an alternative DataFrame serialization format introduced in StaticFrame.

StaticFrame (an open-source DataFrame library of which I am an author) builds upon NumPy NPY and NPZ formats to encode DataFrames. The NPY format (a binary encoding of array data) and the NPZ format (zipped bundles of NPY files) are defined in a NumPy Enhancement Proposal from 2007. By extending the NPZ format with specialized JSON metadata, StaticFrame provides a complete DataFrame serialization format that supports all NumPy dtypes.

This article extends work first presented at PyCon USA 2022 with further performance optimizations and broader benchmarking.

The Challenge of Serializing DataFrames

DataFrames are not just collections of columnar data with string column labels, such as found in relational databases. In addition to columnar data, DataFrames have labelled rows and columns, and those row and column labels can be of any type or (with hierarchical labels) many types. Further, it is common to store metadata with a name attribute, either on the DataFrame or on the axis labels.

As Parquet was originally designed just to store collections of columnar data, the full range of DataFrame characteristics is not directly supported. Pandas supplies this additional information by adding JSON metadata into the Parquet file.

Further, Parquet supports a minimal selection of types; the full range of NumPy dtypes is not directly supported. For example, Parquet has no native (physical) storage type for unsigned integers or dates; these are expressed only as logical annotations on signed integer storage.

Python pickles can efficiently serialize DataFrames and NumPy arrays, but they are suitable only for short-term caches from trusted sources: pickles can become invalid after code changes, and loading them from untrusted sources is insecure.

Another alternative to Parquet, originating in the Arrow project, is Feather. While Feather supports all Arrow types and is faster than Parquet, it is still at least two times slower than NPZ at reading DataFrames.

Parquet and Feather support compression to reduce file size. Parquet defaults to using “snappy” compression, while Feather defaults to “lz4”. As the NPZ format prioritizes performance, it does not yet support compression. As will be shown below, NPZ outperforms both compressed and uncompressed Parquet files by significant factors.

DataFrame Serialization Performance Comparisons

Numerous publications offer DataFrame benchmarks by testing just one or two datasets. McKinney and Richardson (2020) is an example, where two datasets, Fannie Mae Loan Performance and NYC Yellow Taxi Trip data, are used to generalize about performance. Such idiosyncratic datasets are insufficient, as both the shape of the DataFrame and the degree of columnar type heterogeneity can significantly differentiate performance.

To avoid this deficiency, I compare performance with a panel of nine synthetic datasets. These datasets vary along two dimensions: shape (tall, square, and wide) and columnar heterogeneity (columnar, mixed, and uniform). Shape variations alter the distribution of elements between tall (e.g., 10,000 rows and 100 columns), square (e.g., 1,000 rows and columns), and wide (e.g., 100 rows and 10,000 columns) geometries. Columnar heterogeneity variations alter the diversity of types between columnar (no adjacent columns have the same type), mixed (some adjacent columns have the same type), and uniform (all columns have the same type).
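To make the three heterogeneity categories concrete, here is a small sketch that builds a per-column type sequence for each category, plus the three example shapes. The helper name `column_types`, the type pool, and the run length of two used for "mixed" are hypothetical choices for illustration; this is not the frame-fixtures DSL used for the actual benchmarks.

```python
from itertools import cycle, islice

# Hypothetical pool of column types; the real fixtures may use others.
TYPE_POOL = ("float64", "int64", "bool", "str")

def column_types(heterogeneity: str, n_columns: int) -> list[str]:
    """Return one type name per column for a given heterogeneity category."""
    if heterogeneity == "uniform":
        # every column shares a single type
        return [TYPE_POOL[0]] * n_columns
    if heterogeneity == "columnar":
        # cycle through the pool so no two adjacent columns share a type
        return list(islice(cycle(TYPE_POOL), n_columns))
    if heterogeneity == "mixed":
        # repeat each type in runs of two, so some (not all) neighbours match
        runs = (t for t in cycle(TYPE_POOL) for _ in range(2))
        return list(islice(runs, n_columns))
    raise ValueError(f"unknown heterogeneity: {heterogeneity!r}")

# The three example shapes (rows, columns), each holding one million elements.
SHAPES = {"tall": (10_000, 100), "square": (1_000, 1_000), "wide": (100, 10_000)}

print(column_types("mixed", 5))
```

Crossing the three shapes with the three categories yields the panel of nine fixtures.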

The frame-fixtures library defines a domain-specific language to create deterministic, randomly-generated DataFrames for testing; the nine datasets are generated with this tool.

To demonstrate some of the StaticFrame and Pandas interfaces evaluated, the following IPython session performs basic performance tests using %time. As shown below, a square, uniformly-typed DataFrame can be written and read with NPZ many times faster than uncompressed Parquet.

>>> import numpy as np
>>> import static_frame as sf
>>> import pandas as pd
>>> # a square, uniform float array
>>> array = np.random.random_sample((10_000, 10_000))
>>> # write performance
>>> f1 = sf.Frame(array)
>>> %time f1.to_npz('/tmp/frame.npz')
CPU times: user 710 ms, sys: 396 ms, total: 1.11 s
Wall time: 1.11 s
>>> df1 = pd.DataFrame(array)
>>> %time df1.to_parquet('/tmp/df.parquet', compression=None)
CPU times: user 6.82 s, sys: 900 ms, total: 7.72 s
Wall time: 7.74 s
>>> # read performance
>>> %time f2 = sf.Frame.from_npz('/tmp/frame.npz')
CPU times: user 2.77 ms, sys: 163 ms, total: 166 ms
Wall time: 165 ms
>>> %time df2 = pd.read_parquet('/tmp/df.parquet')
CPU times: user 2.55 s, sys: 1.2 s, total: 3.75 s
Wall time: 866 ms

Performance tests provided below extend this basic approach by using frame-fixtures for systematic variation of shape and type heterogeneity, and average results over ten iterations. While hardware configuration will affect performance, relative characteristics are retained across diverse machines and operating systems. For all interfaces the default parameters are used, except for disabling compression as needed. The code used to perform these tests is available on GitHub.
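The averaging step can be sketched with the standard library alone. This is not the author's benchmark code (which is on GitHub); the helper `mean_seconds` and the JSON round trip used as a stand-in workload are hypothetical.

```python
import json
import time
from statistics import mean
from typing import Callable

def mean_seconds(fn: Callable[[], object], iterations: int = 10) -> float:
    """Call fn `iterations` times and return the mean wall-clock duration in seconds."""
    durations = []
    for _ in range(iterations):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return mean(durations)

# Stand-in workload: serialize and deserialize a list of floats as JSON text.
payload = [i / 7 for i in range(10_000)]
write_s = mean_seconds(lambda: json.dumps(payload))
text = json.dumps(payload)
read_s = mean_seconds(lambda: json.loads(text))
print(f"write: {write_s * 1e3:.2f} ms, read: {read_s * 1e3:.2f} ms")
```

Swapping the lambdas for `to_npz`/`from_npz` or `to_parquet`/`read_parquet` calls gives the same shape of measurement as the session above.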

Read Performance

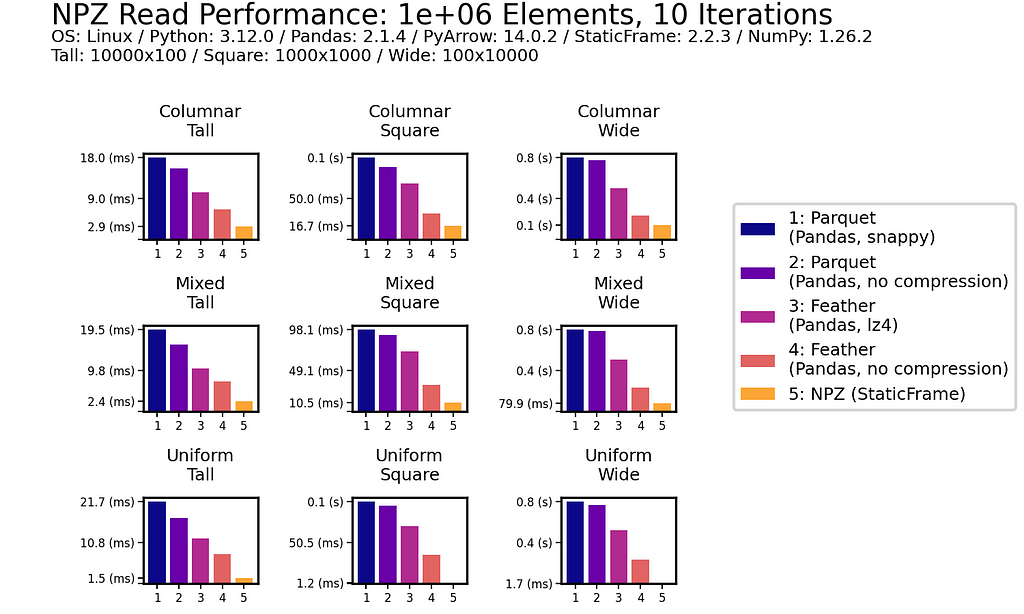

As data is generally read more often than it is written, read performance is a priority. As shown for all nine DataFrames of one million (1e+06) elements, NPZ significantly outperforms Parquet and Feather with every fixture. NPZ read performance is over ten times faster than compressed Parquet. For example, with the Uniform Tall fixture, compressed Parquet reading takes 21 ms compared to 1.5 ms with NPZ.

The chart below shows processing time, where lower bars correspond to faster performance.

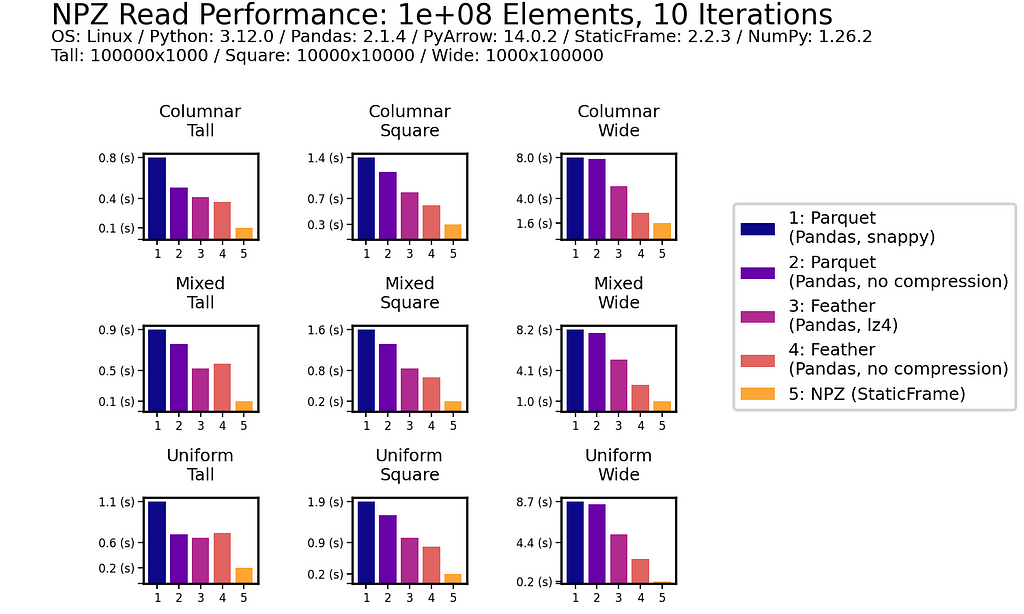

This impressive NPZ performance is retained at scale. Moving to 100 million (1e+08) elements, NPZ continues to perform at least twice as fast as Parquet and Feather, regardless of whether compression is used.

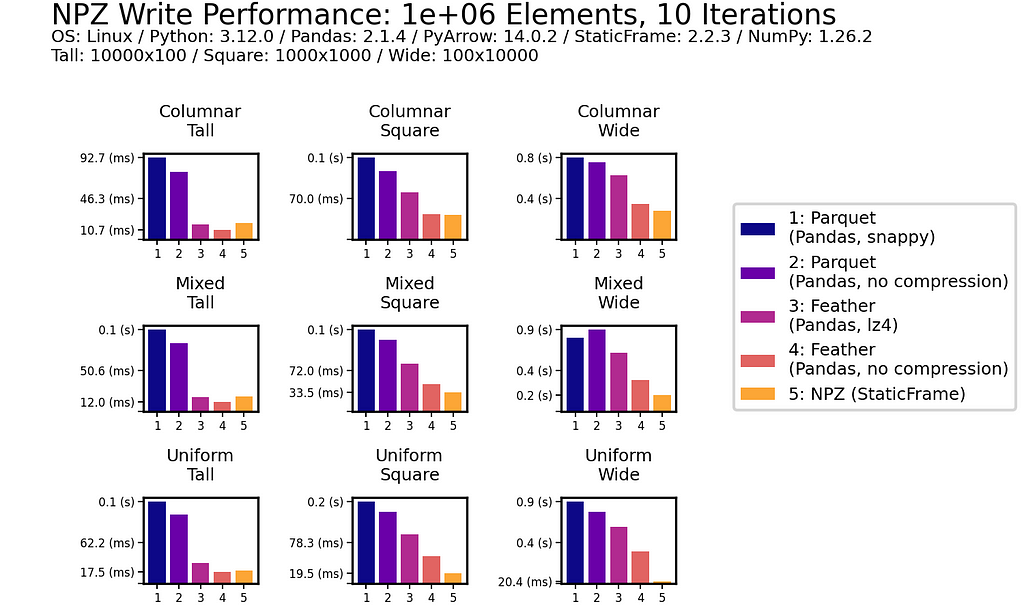

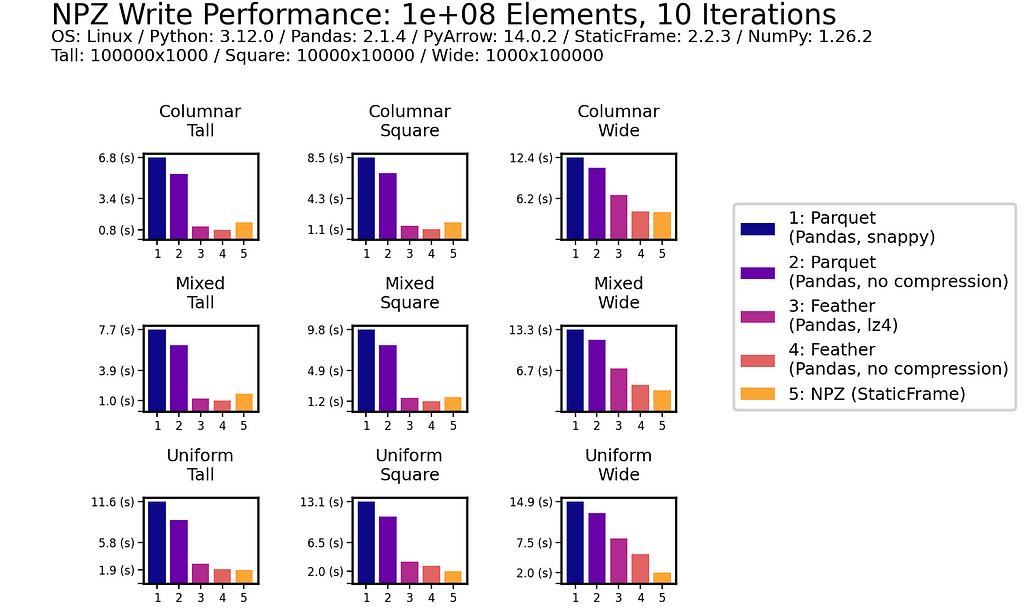

Write Performance

In writing DataFrames to disk, NPZ outperforms Parquet (both compressed and uncompressed) in all scenarios. For example, with the Uniform Square fixture, compressed Parquet writing is 200 ms compared to 18.3 ms with NPZ. NPZ write performance is generally comparable to uncompressed Feather: in some scenarios NPZ is faster, in others, Feather is faster.

As with read performance, NPZ write performance is retained at scale. Moving to 100 million (1e+08) elements, NPZ continues to be at least twice as fast as Parquet, regardless of whether compression is used.

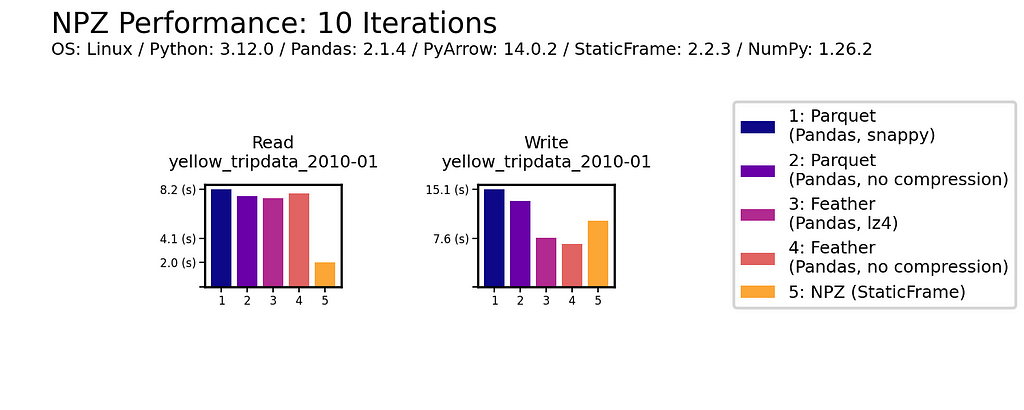

Idiosyncratic Performance

As an additional reference, we will also benchmark the same NYC Yellow Taxi Trip data (from January 2010) used in McKinney and Richardson (2020). This dataset contains almost 300 million (3e+08) elements in a tall, heterogeneously typed DataFrame of 14,863,778 rows and 19 columns.

NPZ read performance is shown to be around four times faster than Parquet and Feather (with or without compression). While NPZ write performance is faster than Parquet, Feather writing here is fastest.

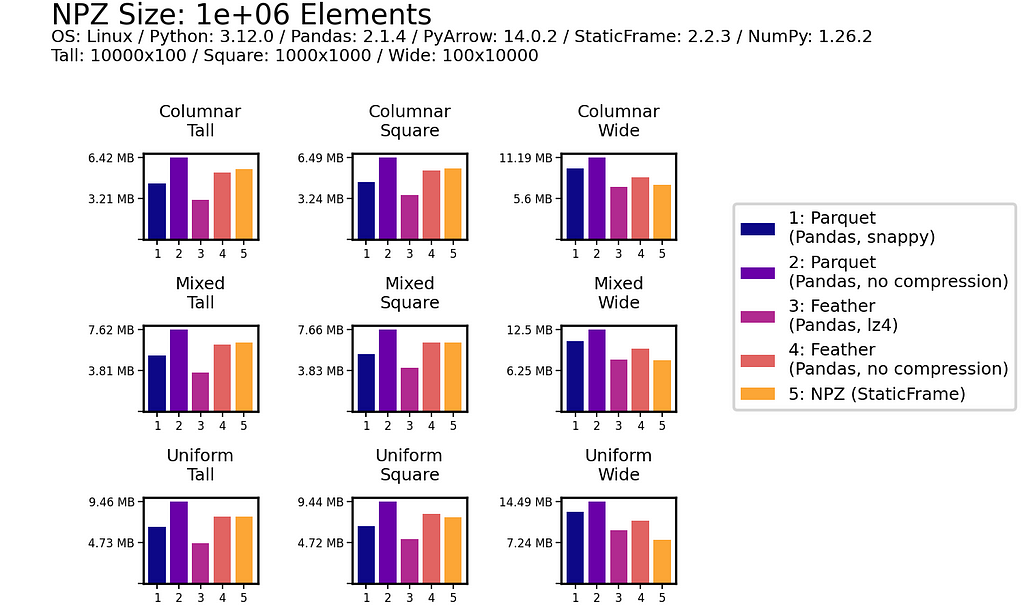

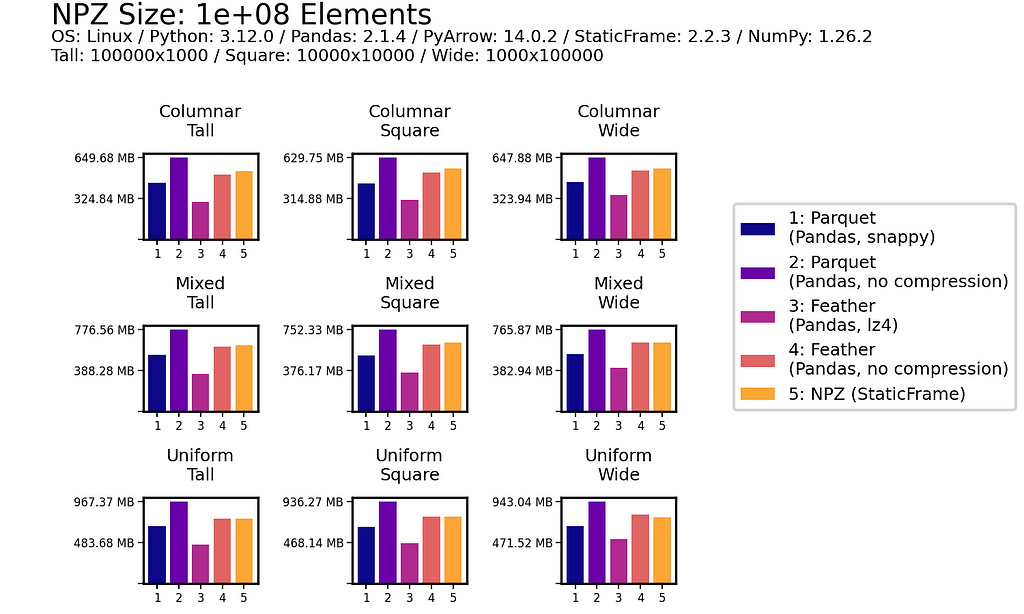

File Size

As shown below for one million (1e+06) element and 100 million (1e+08) element DataFrames, uncompressed NPZ is generally equal in size on disk to uncompressed Feather and always smaller than uncompressed Parquet (sometimes smaller than compressed Parquet too). As compression provides only modest file-size reductions for Parquet and Feather, the benefit of uncompressed NPZ in speed might easily outweigh the cost of greater size.

Serializing DataFrames

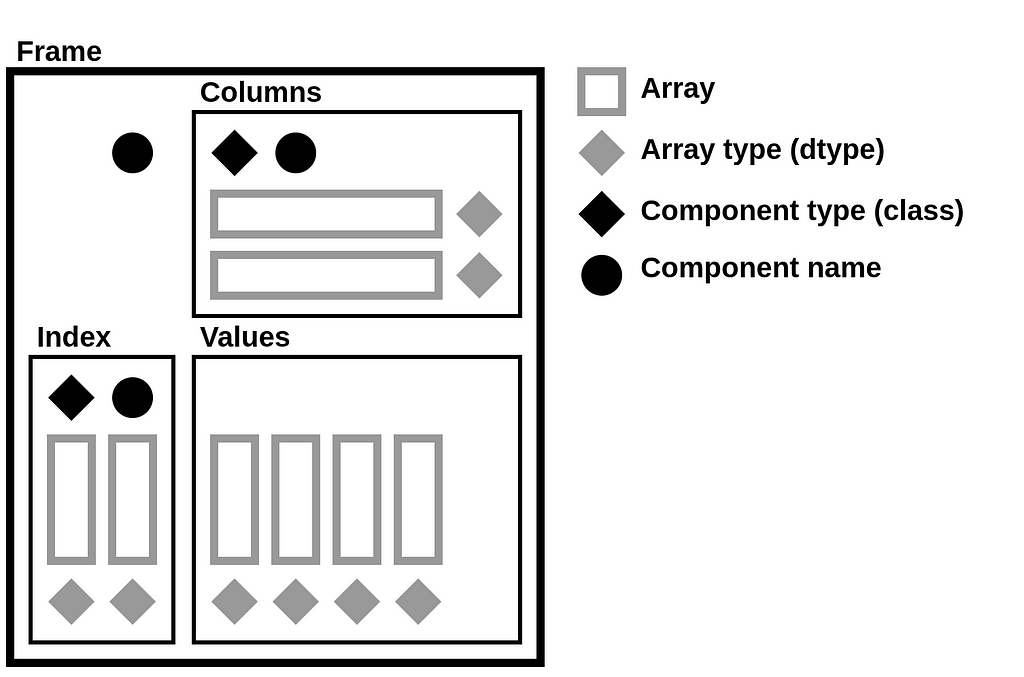

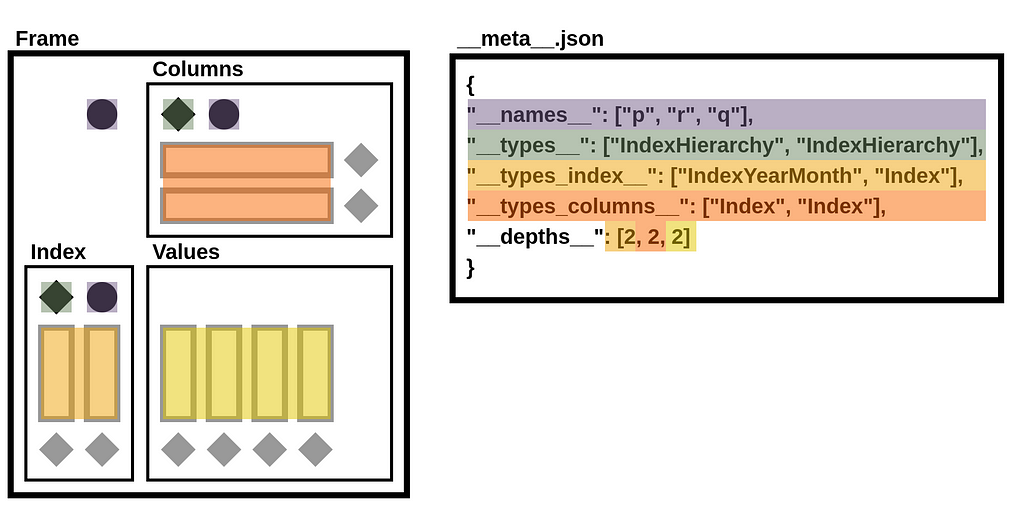

StaticFrame stores data as a collection of 1D and 2D NumPy arrays. Arrays represent columnar values, as well as variable-depth index and column labels. In addition to NumPy arrays, information about component types (i.e., the Python class used for the index and columns), as well as the component name attributes, is needed to fully reconstruct a Frame. Completely serializing a DataFrame requires writing these components to a file and reading them back.

DataFrame components can be represented by the following diagram, which isolates arrays, array types, component types, and component names. This diagram will be used to demonstrate how an NPZ encodes a DataFrame.

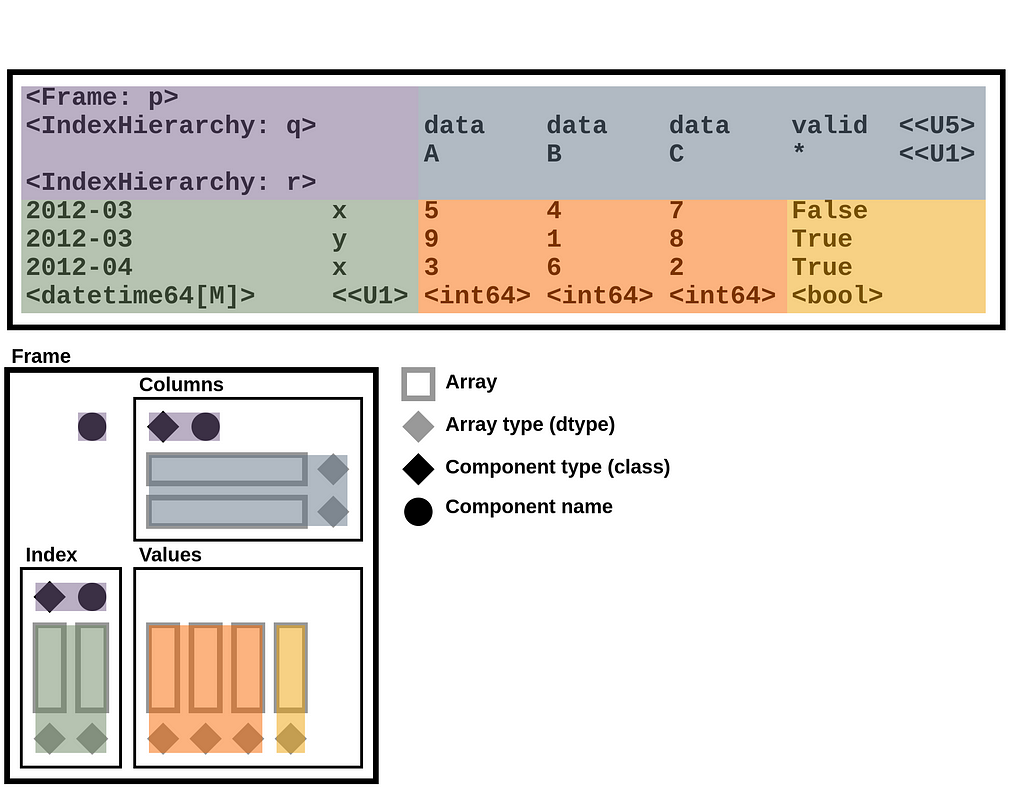

The components of that diagram map to components of a Frame string representation in Python. For example, given a Frame of integers and Booleans with hierarchical labels on both the index and columns (downloadable via GitHub with StaticFrame’s WWW interface), StaticFrame provides the following string representation:

>>> frame = sf.Frame.from_npz(sf.WWW.from_file('https://github.com/static-frame/static-frame/raw/master/doc/source/articles/serialize/frame.npz', encoding=None))
>>> frame
<Frame: p>
<IndexHierarchy: q>       data    data    data    valid   <<U5>
                          A       B       C       *       <<U1>
<IndexHierarchy: r>
2012-03         x         5       4       7       False
2012-03         y         9       1       8       True
2012-04         x         3       6       2       True
<datetime64[M]> <<U1>     <int64> <int64> <int64> <bool>

The components of the string representation can be mapped to the DataFrame diagram by color:

Encoding an Array in NPY

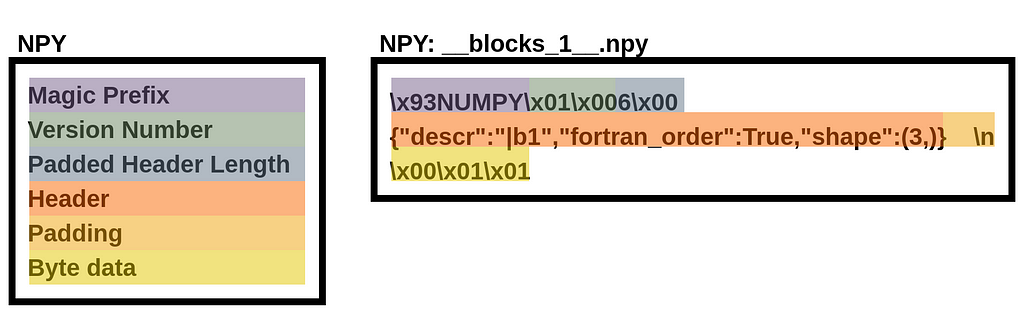

The NPY format stores a NumPy array as a binary file with six components: (1) a “magic” prefix, (2) a version number, (3) a header length, (4) a header (a string representation of a Python dictionary), (5) padding, and (6) raw array byte data. The figure in this section shows these components for a three-element Boolean array stored in a file named “__blocks_1__.npy”.
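The six components can be reproduced with `struct` from the standard library. The sketch below hand-builds a version 1.0 NPY payload for a three-element Boolean array and parses it back. It assumes a 64-byte alignment for the start of the data and writes the header dictionary in the compact double-quoted style StaticFrame uses (NumPy's `np.save` writes a single-quoted, differently spaced header; both parse as Python literals). `encode_bool_npy` and `parse_npy` are hypothetical helper names, and NumPy's own reader and writer remain the reference implementation.

```python
import ast
import struct

MAGIC = b"\x93NUMPY"  # (1) magic prefix

def encode_bool_npy(values: list[bool]) -> bytes:
    """Build a version 1.0 NPY payload for a 1D Boolean array."""
    header = '{"descr":"|b1","fortran_order":True,"shape":(%d,)}' % len(values)
    preamble = len(MAGIC) + 2 + 2  # magic + version bytes + header-length field
    total = preamble + len(header) + 1  # +1 for the terminating newline
    padding = -total % 64  # spaces needed so the data starts on a 64-byte boundary
    header_bytes = (header + " " * padding + "\n").encode("latin1")
    return (
        MAGIC
        + bytes([1, 0])                         # (2) version 1.0
        + struct.pack("<H", len(header_bytes))  # (3) little-endian header length
        + header_bytes                          # (4) header and (5) padding
        + bytes(int(v) for v in values)         # (6) raw data, one byte per Boolean
    )

def parse_npy(data: bytes) -> tuple[dict, bytes]:
    """Split an NPY v1.0 payload into its header dict and raw array bytes."""
    if not data.startswith(MAGIC):
        raise ValueError("not an NPY payload")
    major, minor = data[6], data[7]
    if (major, minor) != (1, 0):
        raise ValueError(f"unsupported NPY version {major}.{minor}")
    (header_len,) = struct.unpack("<H", data[8:10])
    header_end = 10 + header_len
    header = ast.literal_eval(data[10:header_end].decode("latin1").strip())
    return header, data[header_end:]

payload = encode_bool_npy([False, True, True])
header, raw = parse_npy(payload)
print(payload)
print(header, [bool(b) for b in raw])
```

The header-length field decodes to 54, so the header occupies 64 bytes in total and the three data bytes follow immediately.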

Given an NPZ file named “frame.npz”, we can extract the binary data by reading the NPY file from the NPZ with the standard library’s ZipFile:

>>> from zipfile import ZipFile
>>> with ZipFile('/tmp/frame.npz') as zf: print(zf.open('__blocks_1__.npy').read())
b'\x93NUMPY\x01\x006\x00{"descr":"|b1","fortran_order":True,"shape":(3,)}    \n\x00\x01\x01'

As NPY is well supported in NumPy, the np.load() function can be used to convert this file to a NumPy array. This means that underlying array data in a StaticFrame NPZ is easily extractable by alternative readers.

>>> with ZipFile('/tmp/frame.npz') as zf: print(repr(np.load(zf.open('__blocks_1__.npy'))))
array([False,  True,  True])

As an NPY file can encode any array, large two-dimensional arrays can be loaded from contiguous byte data, providing excellent performance in StaticFrame when multiple contiguous columns are represented by a single array.

Building a NPZ File

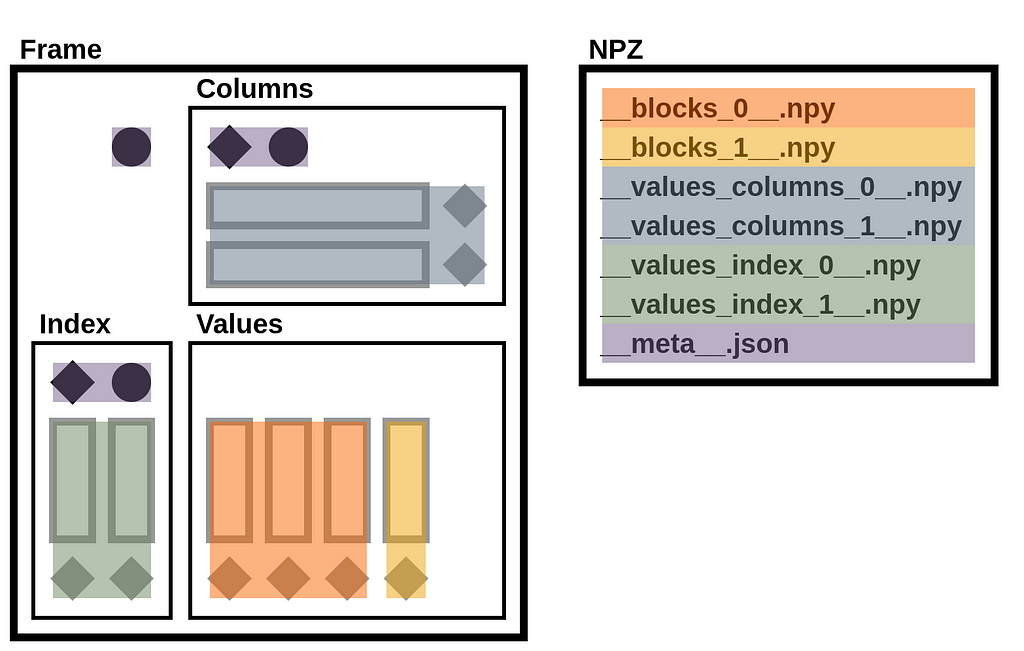

A StaticFrame NPZ is a standard uncompressed ZIP file that contains array data in NPY files and metadata (containing component types and names) in a JSON file.
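Because the container is an ordinary ZIP archive, its layout can be sketched with the standard library's `zipfile` and `json` modules. The example below stores every entry with `ZIP_STORED` (no compression) and writes a JSON metadata entry alongside NPY-named entries. The member names follow the StaticFrame naming pattern, but the payloads are placeholder bytes rather than real NPY data, the metadata is a simplified subset, and `build_archive` is a hypothetical helper; StaticFrame's `to_npz()` is what produces real files.

```python
import io
import json
import zipfile

def build_archive(members: dict[str, bytes], meta: dict) -> bytes:
    """Write NPY-named members plus a __meta__.json entry into an uncompressed ZIP."""
    buffer = io.BytesIO()
    # ZIP_STORED writes bytes as-is, so a reader can slice data without decompressing
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as zf:
        for name, payload in members.items():
            zf.writestr(name, payload)
        zf.writestr("__meta__.json", json.dumps(meta))
    return buffer.getvalue()

# Placeholder payloads stand in for real NPY bytes.
members = {"__blocks_0__.npy": b"placeholder-0", "__blocks_1__.npy": b"placeholder-1"}
archive = build_archive(members, {"__names__": ["p", "r", "q"]})

with zipfile.ZipFile(io.BytesIO(archive)) as zf:
    for info in zf.infolist():
        print(info.filename, info.compress_type == zipfile.ZIP_STORED, info.file_size)
```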

Given the NPZ file for the Frame above, we can list its contents with ZipFile. The archive contains six NPY files and one JSON file.

>>> with ZipFile('/tmp/frame.npz') as zf: print(zf.namelist())
['__values_index_0__.npy', '__values_index_1__.npy', '__values_columns_0__.npy', '__values_columns_1__.npy', '__blocks_0__.npy', '__blocks_1__.npy', '__meta__.json']

The illustration below maps these files to components of the DataFrame diagram.

StaticFrame extends the NPZ format to include metadata in a JSON file. This file defines name attributes, component types, and depth counts.

>>> with ZipFile('/tmp/frame.npz') as zf: print(zf.open('__meta__.json').read())
b'{"__names__": ["p", "r", "q"], "__types__": ["IndexHierarchy", "IndexHierarchy"], "__types_index__": ["IndexYearMonth", "Index"], "__types_columns__": ["Index", "Index"], "__depths__": [2, 2, 2]}'

In the illustration below, components of the __meta__.json file are mapped to components of the DataFrame diagram.
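The metadata can be read back with `json` alone. The sketch below decodes the bytes shown above and pairs each entry with the component it appears to describe. The positional interpretation is an assumption inferred from the Frame's string representation and the file listing (names ordered as Frame, index, columns; depths ordered as value blocks, index depth, columns depth), not a documented specification.

```python
import json

raw = (
    b'{"__names__": ["p", "r", "q"], "__types__": ["IndexHierarchy", "IndexHierarchy"], '
    b'"__types_index__": ["IndexYearMonth", "Index"], "__types_columns__": ["Index", "Index"], '
    b'"__depths__": [2, 2, 2]}'
)
meta = json.loads(raw)

# Assumed positional meaning of each list (inferred, not from a spec).
frame_name, index_name, columns_name = meta["__names__"]
index_type, columns_type = meta["__types__"]
block_count, index_depth, columns_depth = meta["__depths__"]

# Each hierarchy level should have one recorded per-level index type.
assert len(meta["__types_index__"]) == index_depth
assert len(meta["__types_columns__"]) == columns_depth

summary = {
    "frame": frame_name,
    "index": (index_name, index_type, meta["__types_index__"]),
    "columns": (columns_name, columns_type, meta["__types_columns__"]),
    # one NPY file per value block, e.g. __blocks_0__.npy and __blocks_1__.npy
    "block_files": [f"__blocks_{i}__.npy" for i in range(block_count)],
}
print(summary)
```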

As a simple ZIP file, tools to extract the contents of a StaticFrame NPZ are ubiquitous. On the other hand, the ZIP format, given its history and broad features, incurs performance overhead. StaticFrame implements a custom ZIP reader optimized for NPZ usage, which contributes to the excellent read performance of NPZ.
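To illustrate why a purpose-built reader can skip much of that overhead, here is a minimal sketch that locates stored (uncompressed) members by reading the ZIP end-of-central-directory record and the central directory directly. It is not StaticFrame's implementation: it assumes a single-disk archive without ZIP64 extensions, skips CRC checks, and rejects compressed entries. `read_stored_members` is a hypothetical name.

```python
import io
import struct
import zipfile

EOCD_SIG = b"PK\x05\x06"   # end of central directory record
CDIR_SIG = b"PK\x01\x02"   # central directory file header
LOCAL_SIG = b"PK\x03\x04"  # local file header

def read_stored_members(data: bytes) -> dict[str, bytes]:
    """Map member names to their bytes for an uncompressed, non-ZIP64 archive."""
    eocd = data.rfind(EOCD_SIG)
    if eocd < 0:
        raise ValueError("end of central directory not found")
    # total entry count and central directory offset from the 22-byte EOCD record
    _, _, _, _, count, _, cdir_offset, _ = struct.unpack("<4s4H2LH", data[eocd:eocd + 22])
    members = {}
    pos = cdir_offset
    for _ in range(count):
        fields = struct.unpack("<4s6H3L5H2L", data[pos:pos + 46])
        if fields[0] != CDIR_SIG:
            raise ValueError("bad central directory header")
        method, size = fields[4], fields[8]
        name_len, extra_len, comment_len = fields[10], fields[11], fields[12]
        local_offset = fields[16]
        name = data[pos + 46:pos + 46 + name_len].decode("utf-8")
        if method != 0:
            raise ValueError(f"{name} is compressed; only stored entries are supported")
        # the local header's name/extra lengths can differ from the central copy
        local = struct.unpack("<4s5H3L2H", data[local_offset:local_offset + 30])
        if local[0] != LOCAL_SIG:
            raise ValueError("bad local file header")
        start = local_offset + 30 + local[9] + local[10]
        members[name] = data[start:start + size]  # a slice, no decompression
        pos += 46 + name_len + extra_len + comment_len
    return members

# Usage: build a small stored archive with the standard library, then read it back.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as zf:
    zf.writestr("__blocks_0__.npy", b"\x00\x01\x01")
    zf.writestr("__meta__.json", b'{"__depths__": [1, 1, 1]}')
members = read_stored_members(buffer.getvalue())
print(sorted(members))
```

Because stored entries need no decompression, each member is just a byte slice located via two fixed-size headers.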

Conclusion

The performance of DataFrame serialization is critical to many applications. While Parquet has widespread support, its generality compromises type specificity and performance. StaticFrame NPZ can read and write DataFrames up to ten times faster than Parquet, with or without compression, with similar (or only modestly larger) file sizes. While Feather is an attractive alternative, NPZ read performance is still generally twice as fast as Feather. If data I/O is a bottleneck (and it often is), StaticFrame NPZ offers a solution.

Faster DataFrame Serialization was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

-

Introducing the Quad-Tile Chart & Squaremap: Squarify Your Data

Learn about Quad-Tile Charts and create your own with Python

-

Alaves vs Barcelona live stream: Can you watch for free?

Barcelona takes on Alaves today. The match is on ESPN+ in the US, but we have some different ways you can watch a live stream for free.

-

Bayern vs Monchengladbach live stream: Can you watch for free?

Bayern Munich takes on Monchengladbach in Bundesliga action Saturday. Here’s how to watch a live stream if you live in the US.

-

Everton vs Tottenham live stream: Can you watch for free?

Tottenham face off against Everton in a Premier League showdown on Saturday, and we have several different ways you can watch a live stream for free.

-

Demand forecast — a value-driven approach with 5 key insights


The end goal of forecasting is not accuracy but tangible value creation. Let’s explore 5 insights to maximise the value of demand forecasts.

Intro

Hey there! January is the perfect time for planning and making a big impact. As a data scientist, you’re often asked to build forecast models, and you may believe that accuracy is always the gold standard. However, there’s a twist: the real magic lies not just in accuracy but in understanding the bigger picture and focusing on value and impact. Let’s uncover these important aspects together.

1. Value is the ultimate goal of demand forecast

Regarding forecasts, we should first align on one thing: our ultimate goal is creating real value. Real value can manifest as tangible financial benefits, such as cost reductions and revenue increases, or as time and resources that you free up from a forecast process. There are many pathways that start from a demand forecast and end in value creation. Forecast accuracy is like our trusty compass that helps us navigate toward the goal, but it’s not the treasure we’re hunting for.

Your to-dos as a data scientist:

- Discuss with your manager and team the purpose of the demand forecast. Is its goal to set accurate sales targets? To lower inventory levels? What are the underlying concerns behind these forecast numbers?

- Create a simple business case to translate forecast accuracy metrics (bias, MAPE) into financial terms. If this task seems daunting, ask for help from your friends on the business side. Together, you will learn A LOT about the business and the value of your demand forecast.

- Evaluate your business case to identify the most crucial aspect of the forecasting exercise. Is it reducing bias (particularly over-forecasting) to decrease inventory levels? Is it assessing the impact of discounts on various product categories (which might be better served by an elasticity model)? Or is it more about lowering MAPE to prevent the supply team from constantly reacting to unpredictable purchase orders in a crisis?
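As a starting point for such a business case, here is a minimal sketch of the two metrics named above. Definitions vary between teams; this one assumes bias is total forecast minus total actual, relative to total actual (positive means over-forecasting), and MAPE is the mean absolute percentage error over periods with non-zero actuals. The weekly volumes and the holding cost per surplus unit are hypothetical, and counting surplus units period by period is a deliberately crude proxy that ignores stock carried between weeks.

```python
def forecast_bias(actuals: list[float], forecasts: list[float]) -> float:
    """Relative bias: positive values mean the forecast runs high (over-forecasting)."""
    return (sum(forecasts) - sum(actuals)) / sum(actuals)

def mape(actuals: list[float], forecasts: list[float]) -> float:
    """Mean absolute percentage error, skipping periods where the actual is zero."""
    errors = [abs(f - a) / a for a, f in zip(actuals, forecasts) if a != 0]
    return sum(errors) / len(errors)

# Hypothetical weekly volumes and an assumed holding cost per surplus unit.
actuals = [100, 120, 80, 100]
forecasts = [110, 130, 90, 100]
holding_cost_per_unit = 2.5

# Crude proxy: units forecast above actual demand, summed over periods.
surplus_units = sum(max(f - a, 0) for a, f in zip(actuals, forecasts))
print(f"bias {forecast_bias(actuals, forecasts):+.1%}, MAPE {mape(actuals, forecasts):.1%}")
print(f"over-forecast units: {surplus_units}, holding cost: {surplus_units * holding_cost_per_unit:.2f}")
```

Replacing the holding cost with your business's real cost drivers is exactly the conversation to have with your friends on the business side.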

By clearly connecting the dots between forecasting elements and their value, you’ll feel more confident about where to direct your energy and brainpower in this forecasting exercise.

2. Small tweaks in the process can go a long way

In forecasts, you can add value in two areas: process and model. As data scientists, we may be hyper-focused on the model; however, sometimes a small tweak in the process can go a long way. The process that produces the forecast can determine its quality, usually for the worse. Meanwhile, the process that begins with the forecast is the pathway leading to value creation. Without a good process, even the best model would struggle to create any value.

Your to-dos as a data scientist:

- Learn about the “best practices” in forecasting. This can be tricky since different industries and business models have their own definitions of what “best practices” are. But some principles are universally valid. For instance, forecasts should be generated automatically on a regular basis; manual overrides should be rare and only for solid reasons; and forecasts ought to trigger clear decisions and actions like preparing production, adjusting inventory, or ramping up promotions.

- Check out these “best practices” and see if you’ve covered all your bases. If yes, awesome! You’re ready for the next challenge. If not, dig a bit deeper. Ask yourself who or what is holding things back. What are the smallest changes that could improve the whole forecasting process? I’d really recommend grabbing a coffee with a key player in this area. You might be surprised at the impact you can have by swaying just one person in the forecast process.

3. Clearer process mapping enables better model-building

Even when the process is too ingrained to change, having a clear understanding of the process is still tremendously valuable. It allows you to focus on the key features that are most pertinent in the chain of decisions & actions.

For instance, if production plans need to be finalised two weeks in advance, there’s no need to focus on forecasts for the upcoming week. Likewise, if key decisions are made at the product family level, then it would be a waste of time to look at the accuracy at the individual product level. Let the (unchangeable) process details define the boundaries for your modelling, saving you from the futile task of boiling the ocean.
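The point about the decision level can be checked numerically: product-level errors partly cancel when summed to a product family, so accuracy measured at the wrong level can mislead. The product names, family mapping, and volumes below are hypothetical.

```python
from collections import defaultdict

# Hypothetical (product, family, actual, forecast) records for one planning period.
records = [
    ("cola-330ml", "cola", 100, 130),
    ("cola-500ml", "cola", 80, 55),
    ("lemon-330ml", "lemon", 60, 66),
    ("lemon-500ml", "lemon", 40, 33),
]

def abs_pct_error(actual: float, forecast: float) -> float:
    return abs(forecast - actual) / actual

# Accuracy at product level: average error over individual products.
product_mape = sum(abs_pct_error(a, f) for _, _, a, f in records) / len(records)

# Accuracy at family level: aggregate volumes first, then measure the error.
totals = defaultdict(lambda: [0, 0])
for _, family, actual, forecast in records:
    totals[family][0] += actual
    totals[family][1] += forecast
family_mape = sum(abs_pct_error(a, f) for a, f in totals.values()) / len(totals)

print(f"product-level MAPE {product_mape:.1%}, family-level MAPE {family_mape:.1%}")
```

If production is planned per family, the family-level figure is the one that matches the decision being made.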

Your to-dos as a data scientist:

- Pair up with a business-savvy buddy and sketch out a diagram of the forecasting process. Make sure each step includes these elements: the decision being made, the inputs for the decision, who’s making the decision, and the outcomes that follow. Remember, this isn’t an easy task and we’re not aiming for perfection. Gather as much info as you can and piece it together on paper.

- Next, take a look at your diagram (which might look a bit overwhelming with all its circles and such) and try to pinpoint the most critical decisions in the entire process. Figure out what kind of forecast is essential for making solid decisions at these points: do you need a 6-month forecast at the product family level, or a weekly forecast for each specific product package variant? These are the crucial issues that your top-notch modelling skills and data science knowledge will tackle.

4. Improve explainability for better alignment and adoption

On the modelling side, explainability should be a top priority, as it significantly enhances the adoption of the forecasts. Since our ultimate goal is value creation, forecasts must be used in business operations to generate tangible value.

This could involve using them in promotion planning to increase revenue or in setting inventory targets to reduce stock levels. People often have the choice to trust or distrust the forecast in their daily tasks. (Ever been in a meeting where the forecast is dismissed because no one understands the numbers?) Without trust, there is no adoption of the forecast, and consequently, little value can be created.

Conversely, when the forecast numbers come with an intuitive explanation, people are more likely to trust and use them. As a result, the value of an accurate forecast can be realised in their daily tasks and decisions.

Your to-dos as a data scientist:

- Think about the forecasting process and consider whether people want and need a better understanding of your forecast model. I’d say that if the forecast is used by people to make medium- or long-term decisions (like budgeting, pricing, or capacity planning), explaining it is crucial to build trust in the data and prompt a decision.

- You also need to grasp how decision-makers intuitively interpret or anticipate forecast numbers. Then, tailor your explanation to speak their language. This is the tricky part: you’ll have to rework your feature importance, SHAP values, and regression coefficients into terms like “the impact of a 1% price increase.” Don’t hesitate to ask your business-savvy friend for help and test your explanation on them to see if it makes sense.
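One common way to phrase a coefficient as “the impact of a 1% price increase” is through a log-log demand model, where the coefficient on log(price) is a price elasticity. The sketch below assumes such a model; the elasticity value is hypothetical, and other model forms (linear models, or tree models explained with SHAP values) need a different translation.

```python
def demand_change_for_price_change(elasticity: float, price_change: float) -> float:
    """Relative demand change implied by a log-log model: q2/q1 = (p2/p1) ** elasticity."""
    return (1 + price_change) ** elasticity - 1

elasticity = -1.8  # hypothetical fitted coefficient on log(price)
for change in (0.01, 0.05, -0.10):
    impact = demand_change_for_price_change(elasticity, change)
    print(f"price {change:+.0%} -> demand {impact:+.1%}")
```

For small changes the result is close to the elasticity times the percentage change (about -1.8% for a 1% increase here), which is often the easiest wording for stakeholders; for larger changes the exact formula matters.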

5. Simulate scenarios to facilitate decision-making

Scenario simulation naturally extends from explainability. While an explainable model helps you understand forecasts based on anticipated key drivers (for example, a 10% price increase), scenario simulation enables you to explore and assess various alternatives of these anticipations or plans. You can evaluate the risks and benefits of each option. This approach is incredibly powerful in strategic decision-making.

So, if you’re tasked with creating a forecast to determine next year’s promotion budget, it’s crucial to align with stakeholders on the key drivers you want to explore (such as discount levels, packaging format, timing, etc.) and the potential scenarios. Build your forecast around these key drivers to ensure not only accuracy, but also that the model’s explanations and scenarios “make sense”. This might mean anticipating an increase in demand when prices drop or as holidays approach. But of course, you need to figure out, together with the key stakeholders, what “makes sense” really means in your business.

Your to-dos as a data scientist:

- Chat with people who make decisions to figure out which hypothetical scenarios they want to be prepared for. Have them identify key factors and set the scene: a 10% inflation spike, supply disruption of a crucial raw material, a natural disaster, and so on. Ask them to rank these scenarios and factors in order of importance, so that you can prioritise.

- Next, see how your forecast model stacks up. Try to create simulated forecasts for some of these scenarios and factors, starting always with the most important ones.

- Check with your business-savvy friend to ensure your simulations are realistic. You might need a few tries to tweak your models and get everything just right. Like with explanations, using business language to narrate the story is key in this task. Don’t hesitate to ask for help. It’s a learning opportunity for both you and whoever assists you.
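A very small scenario engine can reuse a constant-elasticity assumption: apply each scenario’s drivers to a baseline forecast and compare the outcomes side by side. The baseline volume, price, elasticity, supply cap, and the `Scenario`/`simulate` names below are all hypothetical; a real model would plug in your fitted drivers and the scenarios your stakeholders ranked.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Scenario:
    name: str
    price_change: float                 # relative price change, e.g. -0.10 for a 10% discount
    supply_cap: Optional[float] = None  # optional maximum units available

BASELINE_UNITS = 10_000  # hypothetical baseline forecast for the period
BASELINE_PRICE = 4.0     # hypothetical unit price
ELASTICITY = -1.8        # hypothetical price elasticity of demand

def simulate(s: Scenario) -> tuple[float, float]:
    """Return (units sold, revenue) under a scenario, capping units by supply."""
    demand = BASELINE_UNITS * (1 + s.price_change) ** ELASTICITY
    units = demand if s.supply_cap is None else min(demand, s.supply_cap)
    revenue = units * BASELINE_PRICE * (1 + s.price_change)
    return units, revenue

scenarios = [
    Scenario("baseline", 0.0),
    Scenario("10% discount", -0.10),
    Scenario("10% discount, supply disruption", -0.10, supply_cap=10_500),
]
for s in scenarios:
    units, revenue = simulate(s)
    print(f"{s.name:<34} units {units:>9,.0f}  revenue {revenue:>10,.0f}")
```

Even this toy version shows the kind of trade-off stakeholders care about: a discount that lifts revenue when supply is ample can reduce it when supply is constrained.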

Wrapping up

Alright, I know this seems like a lot to take in. You might be thinking, “So, in addition to crunching data and training models, do I also need to delve into process analysis, come up with an explanatory model, and even build a simulation engine for forecasting?”

No need to worry, that’s not exactly what’s expected. Looking at the bigger picture will help you pinpoint the key aspects for your forecasting model, figure out the best way to build them, and connect with the right people to enhance the value of your forecast. Sure, you’ll have to add a few extra tasks to your usual routine of data crunching and model tuning, but I promise it’ll be a rewarding experience; plus, you’ll get to make some business-savvy friends along the way!

If you want to go deeper than this simple framework, I have also compiled a comprehensive list of questions in this article to cover all aspects related to demand forecast. Have fun with your forecast project and maximise your impact on the world!

Demand forecast — a value-driven approach with 5 key insights was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


-

Nuggets vs Trail Blazers live stream: Can you watch the NBA game for free?

Here is how to watch the Nuggets vs Trail Blazers via a live stream.