NASA will analyze and explore two different landing options for its Mars Sample Return program. The evaluation will take almost two years, and the agency expects to announce its decision in late 2026. NASA had to temporarily pause the program after an independent review found that it could cost between $8 billion and $11 billion, far above its budget.

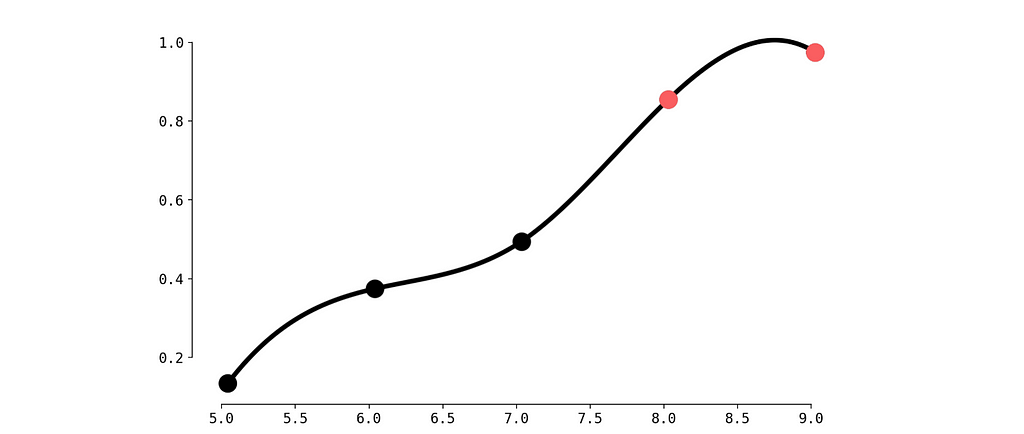

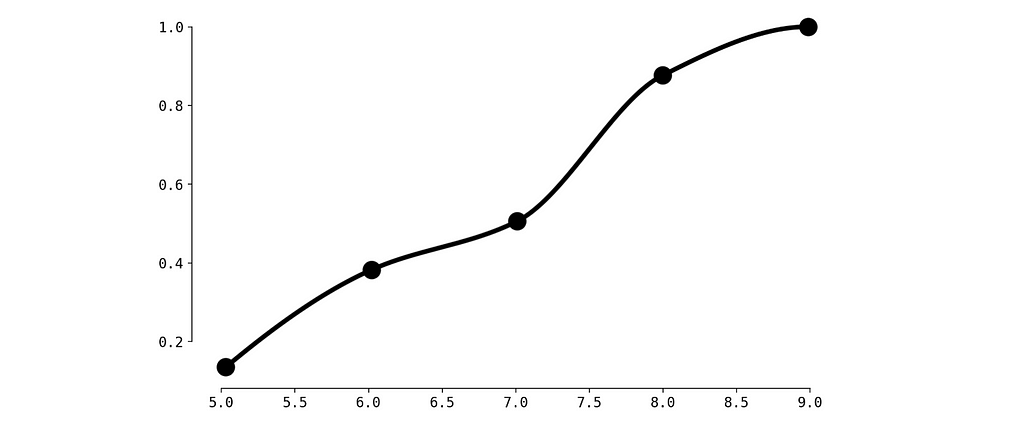

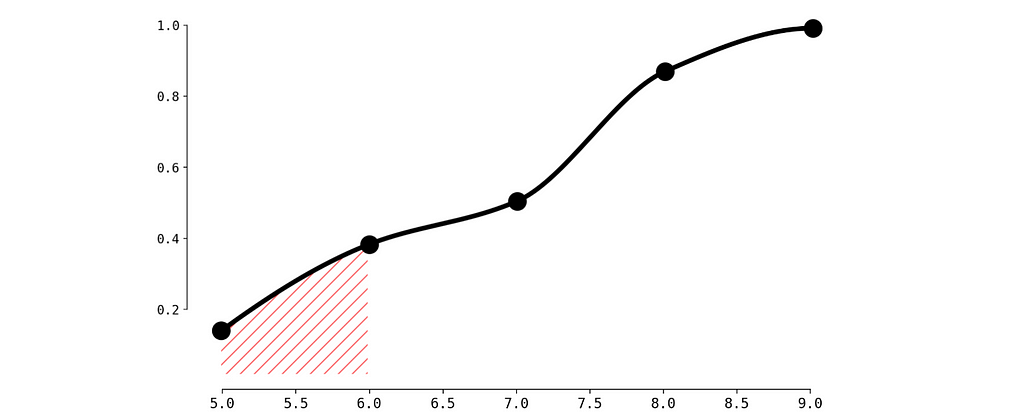

The first method NASA is evaluating is called the “sky crane,” in which a vehicle will head to Mars, get close to the surface with the help of a parachute, use cables or other mechanisms to pick up the samples the Perseverance rover has collected and then fly away. NASA previously used this method to place the Curiosity and Perseverance rovers on the planet.

The second option requires the help of commercial space companies. Last year, the agency asked SpaceX, Blue Origin, Lockheed Martin and other companies to submit proposals on how to get the collected Martian samples back to Earth. Whichever option the agency chooses will carry a smaller version of the Mars Ascent Vehicle than originally planned. The Mars Ascent Vehicle is a lightweight rocket that will take the samples from the planet’s surface into Martian orbit. The rocket will also have to be capable of transporting a container that can fit 30 sample tubes. Once the sample container is in orbit, a European Space Agency orbiter will capture it and bring it back to Earth.

Early last year, NASA’s Jet Propulsion Laboratory had to lay off 530 employees and cut 100 contract workers, mainly due to budget issues related to this mission. NASA requested $950 million for the program, but only $300 million was allocated for it. The independent review that found the mission would exceed its budget also found that it might not be able to bring the samples back to Earth by 2040. According to a previous report by The Washington Post, the US government found the return date “unacceptable.”

In a teleconference, NASA administrator Bill Nelson said that either of the two methods the agency is now considering would cost a lot less than the original plan. The sky crane would reportedly cost NASA between $6.6 billion and $7.7 billion, while working with a private space company would cost between $5.8 billion and $7.1 billion. Either option would also be able to retrieve the samples and bring them back sometime between 2035 and 2039. Scientists believe the samples Perseverance has been collecting could help us determine whether there was life on Mars and whether its soil contains chemicals and substances that could be harmful to future human spacefarers.

This article originally appeared on Engadget at https://www.engadget.com/science/space/nasa-will-decide-how-to-bring-soil-samples-back-from-mars-in-2026-141519710.html?src=rss
