Go Here to Read this Fast! Quordle today – hints and answers for Monday, January 22 (game #728)

Originally appeared here:

Quordle today – hints and answers for Monday, January 22 (game #728)

OpenAI has suspended the developer behind Dean.Bot, a ChatGPT-powered bot designed to impersonate Democratic presidential candidate Dean Phillips to help bolster his campaign, according to The Washington Post. The chatbot was created by AI startup Delphi for the super PAC We Deserve Better, which supports Phillips.

Dean.Bot didn’t all-out pretend to be Phillips himself; before engaging with Dean.Bot, website visitors would be shown a disclaimer describing the nature of the chatbot. Still, this type of use goes directly against OpenAI’s policies. A spokesperson for the company confirmed the developer’s suspension in a statement to the Post. It comes just weeks after OpenAI published a lengthy blog post about the measures it’s taking to prevent the misuse of its technology ahead of the 2024 elections, specifically citing “chatbots impersonating candidates” as an example of what’s not allowed.

OpenAI also said in its blog post that it does not “allow people to build applications for political campaigning and lobbying.” Per an earlier story by The Washington Post, the intent of Dean.Bot was to engage with potential supporters and spread the candidate’s message. Following the Post’s inquiry, Delphi initially removed ChatGPT from the bot and kept it running with other, open-source tools before ultimately taking it down altogether on Friday night once OpenAI stepped in.

If you visit the site now, you’ll still be greeted by the disclaimer — but the chatbot itself is down due to “technical difficulties,” presenting visitors with the message, “Apologies, DeanBot is away campaigning right now!” Engadget has reached out to OpenAI for comment.

This article originally appeared on Engadget at https://www.engadget.com/openai-suspends-developer-over-chatgpt-bot-that-impersonated-a-presidential-candidate-214854456.html?src=rss

Originally appeared here:

OpenAI suspends developer over ChatGPT bot that impersonated a presidential candidate

If you’re like us, you probably stuff your iPhone and AirPods into your pockets, purse, or bag before leaving your house in the morning and decant them somewhere at day’s end. However, unless you have a dedicated place for your gear, misplacing one of your much-loved items is pretty easy.

Courant’s Mag:3 Classics Device Charging Tray aims to fix that. It features multiple ways to charge your favorite gear in a handy tray that keeps all your everyday carry items in the same place.

Originally appeared here:

Mag:3 Classics Device Charging Tray review: a place for everything, and everything in its place

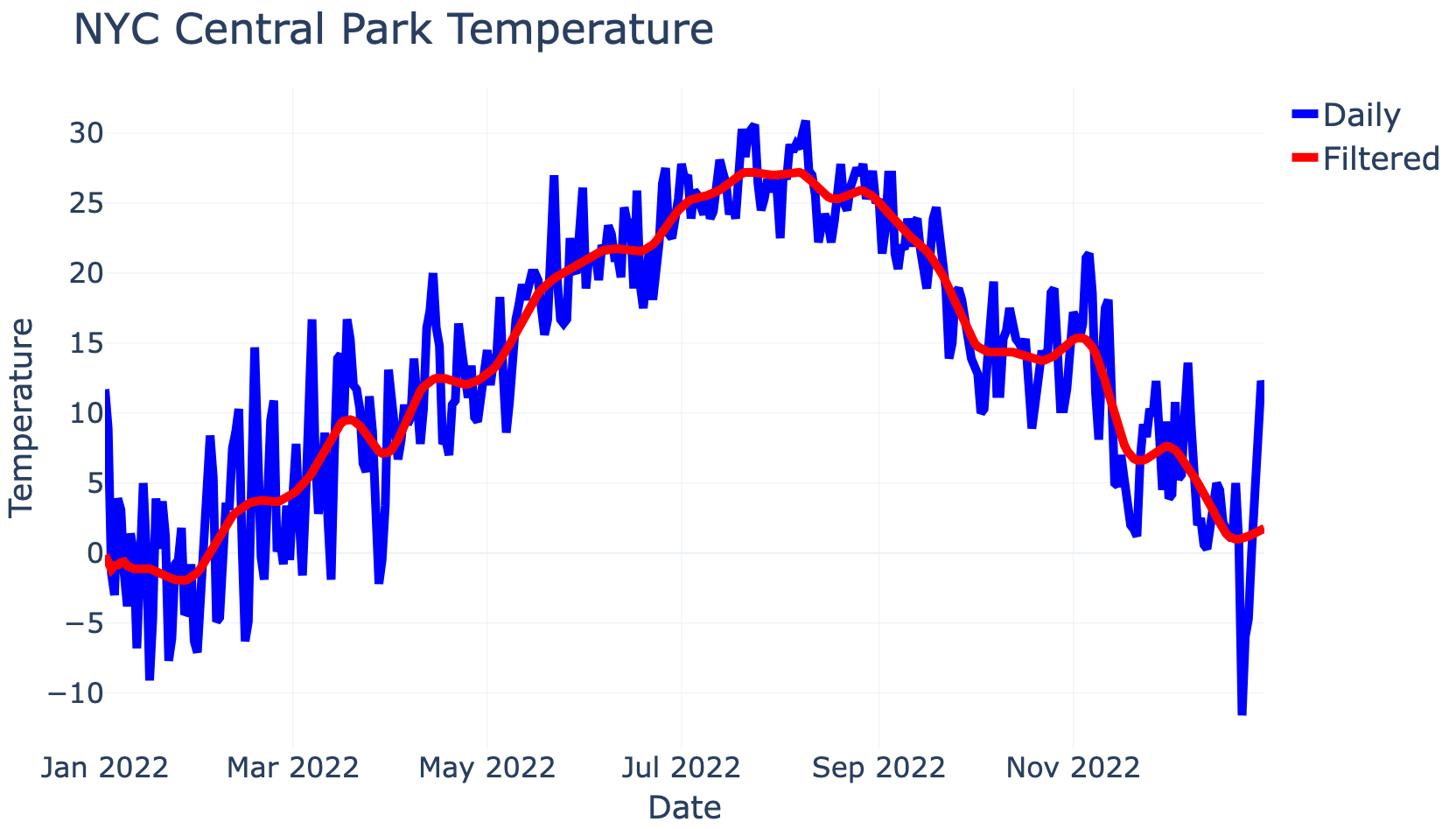

When working with time-series data, it can be important to apply filtering to remove noise. This story shows how to implement a low-pass…

Originally appeared here:

How to Low-Pass Filter in Google BigQuery

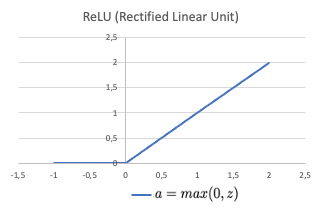

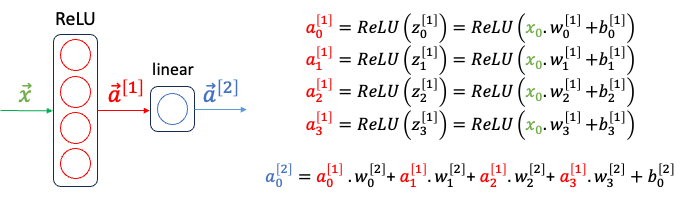

Activation functions play an integral role in Neural Networks (NNs): they introduce non-linearity and allow the network to learn more complex features and functions than a linear regression can. One of the most commonly used activation functions is the Rectified Linear Unit (ReLU), which has been theoretically shown to enable NNs to approximate a wide range of continuous functions, making them powerful function approximators.

In this post, we study in particular the approximation of Continuous NonLinear (CNL) functions, the main purpose of using a NN over a simple linear regression model. More precisely, we investigate 2 sub-categories of CNL functions: Continuous PieceWise Linear (CPWL), and Continuous Curve (CC) functions. We will show how these two function types can be represented using a NN that consists of one hidden layer, given enough neurons with ReLU activation.

For illustrative purposes, we consider only single-feature inputs, yet the idea applies to multiple-feature inputs as well.

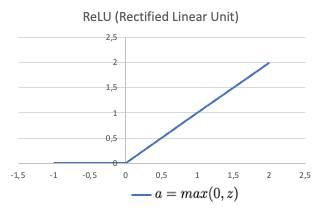

ReLU is a piecewise linear function that consists of two linear pieces: one that cuts off negative values, where the output is zero, and one that provides a continuous linear mapping for non-negative values.
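As a minimal sketch of the two pieces described above, ReLU can be written as `max(0, x)`. The function name `relu` and the sample inputs are chosen here for illustration only.

```python
def relu(x: float) -> float:
    """Return 0 for negative inputs and x itself otherwise."""
    return max(0.0, x)


# Illustrative inputs on both sides of zero.
samples = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in samples]
print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```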

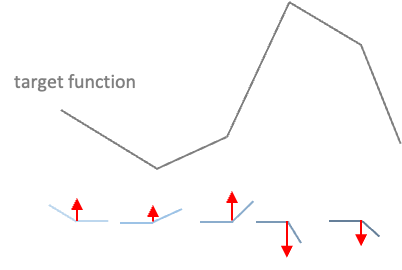

CPWL functions are continuous functions with multiple linear portions. The slope is constant on each portion, then changes abruptly at transition points by adding new linear functions.

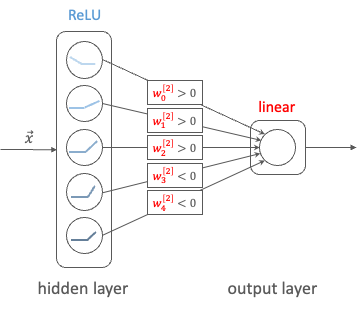

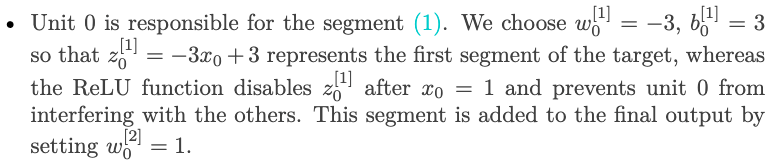

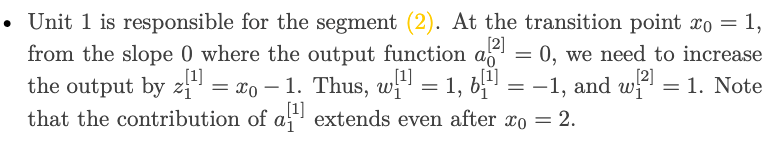

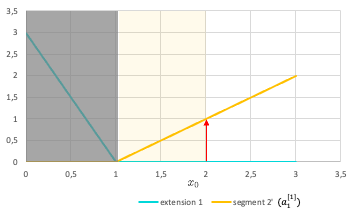

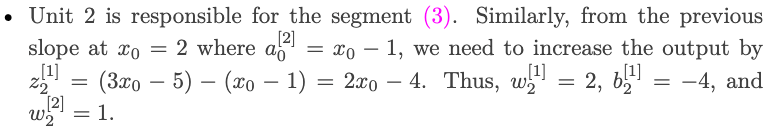

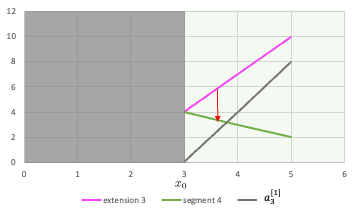

In a NN with one hidden layer using ReLU activation and a linear output layer, the activations are aggregated to form the CPWL target function. Each unit of the hidden layer is responsible for a linear piece. At each unit, a new ReLU function that corresponds to the change of slope is added to produce the new slope (cf. Fig.2). Since the output of this activation function is never negative, the weights of the output layer corresponding to units that increase the slope will be positive, and conversely, the weights corresponding to units that decrease the slope will be negative (cf. Fig.3). The new function is added at the transition point but does not contribute to the resulting function prior to (and sometimes after) that point due to the disabling range of the ReLU activation function.
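The sign rule above can be sketched in a few lines. This assumes each hidden unit has input weight 1, so its output weight equals the change in slope it introduces, and that the function is flat before the first transition point. The slopes are hypothetical values picked for illustration, not taken from the figures.

```python
def output_weights(slopes):
    """Return one output-layer weight per hidden unit.

    Each weight is the change in slope that the unit introduces,
    assuming the function is flat (slope 0) before the first unit.
    """
    previous = 0.0
    weights = []
    for slope in slopes:
        weights.append(slope - previous)
        previous = slope
    return weights


# Hypothetical slopes of four consecutive linear pieces.
segment_slopes = [2.0, -1.0, 3.0, 0.0]
weights = output_weights(segment_slopes)
print(weights)  # [2.0, -3.0, 4.0, -3.0]
```

Units that increase the slope (the first and third) get positive weights, and units that decrease it (the second and fourth) get negative ones.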

Example

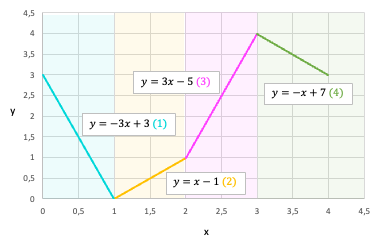

To make it more concrete, we consider an example of a CPWL function that consists of 4 linear segments defined as below.

To represent this target function, we will use a NN with 1 hidden layer of 4 units and a linear layer that outputs the weighted sum of the previous layer’s activation outputs. Let’s determine the network’s parameters so that each unit in the hidden layer represents a segment of the target. For the sake of this example, the bias of the output layer (b2_0) is set to 0.
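The following sketch shows what such a parameter assignment could look like. The target function and all parameter values below are hypothetical stand-ins chosen for illustration (four segments on [0, 4] with slopes 2, -1, 3, 0); they are not the values of the example in this post. Only the architecture (4 ReLU units, linear output, output bias 0) follows the text.

```python
def relu(z):
    return max(0.0, z)


# Hypothetical parameters, NOT the values from the post's example.
W1 = [1.0, 1.0, 1.0, 1.0]     # hidden-layer weights
B1 = [0.0, -1.0, -2.0, -3.0]  # hidden-layer biases: unit i turns on at x = -B1[i] / W1[i]
W2 = [2.0, -3.0, 4.0, -3.0]   # output weights: the slope change at each transition point
B2 = 0.0                      # output bias, set to 0 as in the text


def network(x):
    """One hidden layer of 4 ReLU units followed by a linear output."""
    hidden = [relu(w * x + b) for w, b in zip(W1, B1)]
    return B2 + sum(w * h for w, h in zip(W2, hidden))


def target(x):
    """Hypothetical 4-segment CPWL target on [0, 4]."""
    if x < 1:
        return 2 * x
    if x < 2:
        return 2 - (x - 1)
    if x < 3:
        return 1 + 3 * (x - 2)
    return 4.0


# Compare network and target at the transition points and segment midpoints.
checks = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
max_gap = max(abs(network(x) - target(x)) for x in checks)
print(max_gap)
```

With these values each hidden unit switches on at the start of its segment, and the network reproduces the hypothetical target at every checked point.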

The next type of continuous nonlinear function that we will study is the CC function. There is no formal definition for this sub-category, but an informal way to define CC functions is as continuous nonlinear functions that are not piecewise linear. Examples of CC functions include the quadratic function, the exponential function, the sine function, etc.

A CC function can be approximated by a series of very small linear pieces, which is called a piecewise linear approximation of the function. The greater the number of linear pieces and the smaller the size of each segment, the closer the approximation is to the target function. Thus, the same network architecture as before, with a large enough number of hidden units, can yield a good approximation of a curve function.
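To illustrate how the approximation improves with more pieces, here is a rough sketch that builds the hidden units by hand (no training) for the quadratic function on [0, 1]. The uniform transition points, the helper names, and the choice of the output bias as the target's value at the left end of the interval are all assumptions made for this illustration.

```python
def relu(z):
    return max(0.0, z)


def build_network(f, lo, hi, n):
    """Return (thresholds, output_weights, output_bias) for n uniform pieces.

    Each piece follows the secant of f between two neighbouring knots.
    """
    h = (hi - lo) / n
    knots = [lo + i * h for i in range(n + 1)]
    slopes = [(f(knots[i + 1]) - f(knots[i])) / h for i in range(n)]
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, n)]
    return knots[:-1], weights, f(lo)


def predict(x, thresholds, weights, bias):
    """Weighted sum of ReLU units, one unit per transition point."""
    return bias + sum(w * relu(x - t) for t, w in zip(thresholds, weights))


def max_error(f, lo, hi, n, samples=1001):
    """Largest absolute gap between f and its n-piece approximation on a grid."""
    params = build_network(f, lo, hi, n)
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - predict(x, *params)) for x in xs)


def square(x):
    return x * x


err_4 = max_error(square, 0.0, 1.0, 4)
err_16 = max_error(square, 0.0, 1.0, 16)
print(err_4, err_16)
```

Going from 4 to 16 hidden units shrinks the maximum error on the grid, in line with the statement that more and smaller pieces give a better fit.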

However, in practice, the network is trained to fit a given dataset for which the input-output mapping function is unknown. An architecture with too many neurons is prone to overfitting and high variance, and requires more time to train. The number of hidden units should therefore be neither so small that the network cannot fit the data properly, nor so large that it overfits. Moreover, with a limited number of neurons, a good approximation with low loss places more transition points in restricted regions of the domain, rather than spacing them equidistantly as uniform sampling would (as shown in Fig.10).

In this post, we have studied how the ReLU activation function allows multiple units to contribute to the resulting function without interfering with one another, thus enabling continuous nonlinear function approximation. In addition, we have discussed the choice of network architecture and the number of hidden units needed to obtain a good approximation.

I hope that this post is useful for your Machine Learning learning process!

Further questions to think about:

*Unless otherwise noted, all images are by the author

How ReLU Enables Neural Networks to Approximate Continuous Nonlinear Functions? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

How ReLU Enables Neural Networks to Approximate Continuous Nonlinear Functions?

This is how I created coupons using the OpenAI API in Python, in a few lines of code.

Originally appeared here:

Customer profiling with Artificial Intelligence: Building Grocery Coupons from Everyday Lists using…

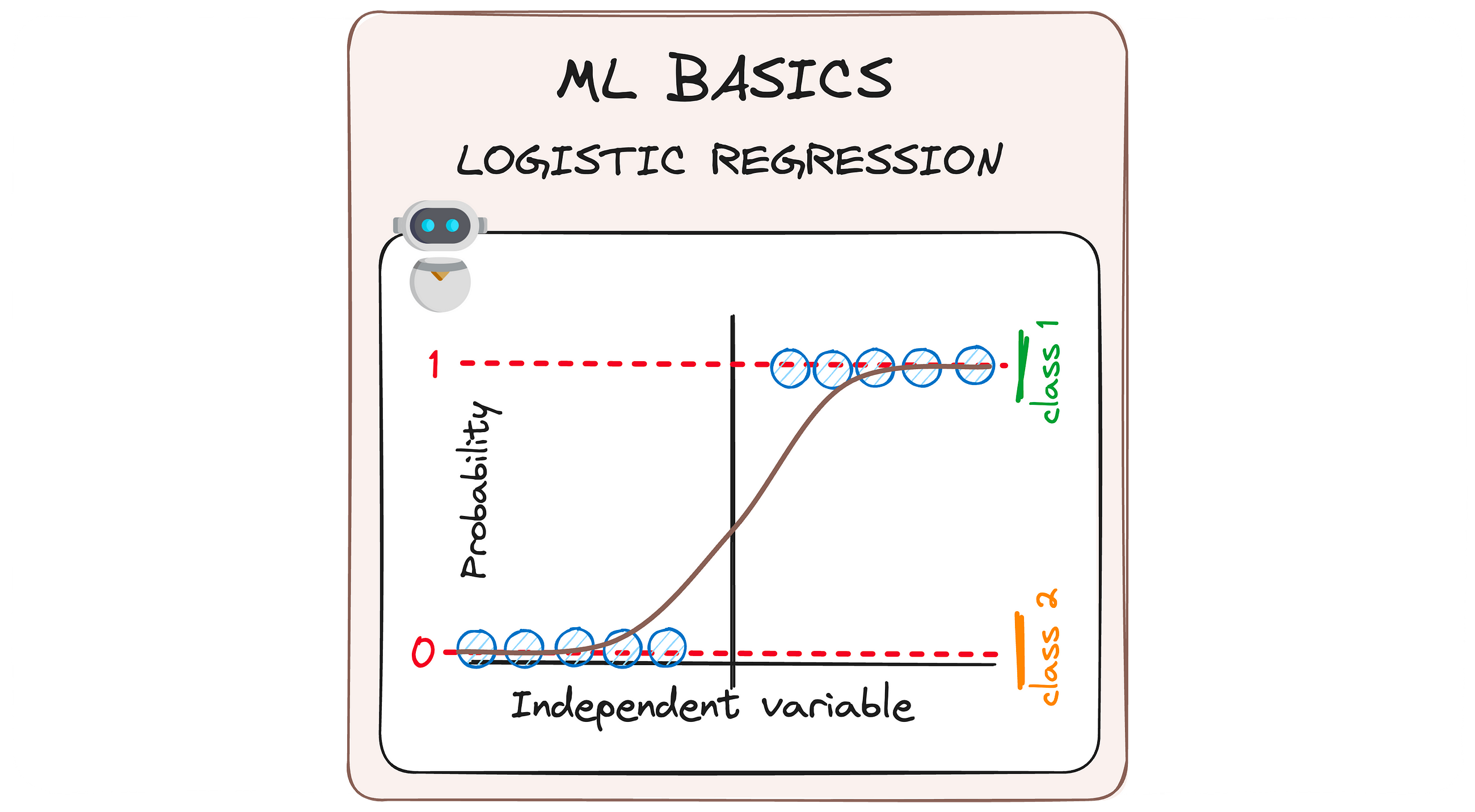

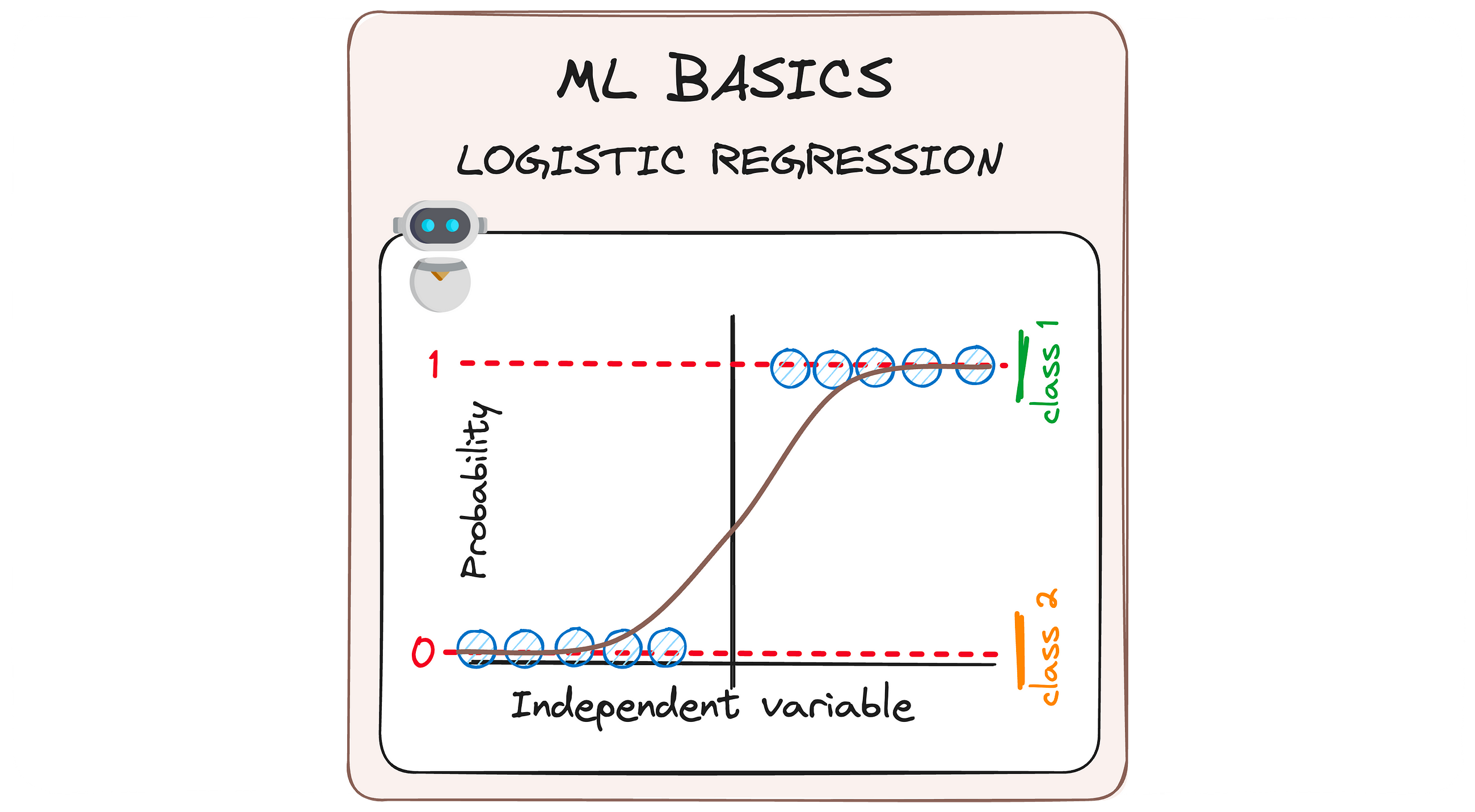

MLBasics #2: Demystifying Machine Learning Algorithms with The Simplicity of Logistic Regression

Originally appeared here:

Breaking down Logistic Regression to its basics

Amazon upgraded its Echo Show 8 display late last year to give it a sleeker design and faster Alexa responses, and you can get it right now at the lowest price we’ve seen it hit. The third-gen, 2023 Echo Show 8 is 40 percent off on Amazon, bringing it down to just $90. It normally costs $150. The display comes in two colors, Charcoal and Glacier White, and the discount applies to both.

The 2023 Echo Show 8 brought upgrades inside and out to the smart home gadget. It has spatial audio with room calibration that should make for much fuller sound than the previous models were able to achieve. The improvements carry over to video calling, which benefits from crisper audio and a 13-megapixel camera.

Amazon debuted its new Adaptive Content feature alongside the 2023 Echo Show 8, which changes what’s shown on the screen based on where you are in the room. If you’re standing far away, it’ll display easily digestible information in large font, like the weather or news headlines. As you get closer, it’ll switch to a more detailed view. It can also show personalized content for anyone enrolled in visual ID, surfacing your favorite playlists and other content.

The Echo Show 8 also boasts 40 percent faster response times for Alexa thanks to its upgraded processor. For privacy-conscious buyers, it has a physical camera shutter that’s controlled with a slider on the top of the device, so you know for sure that it’s not watching. There’s also a button to turn off the mic and camera.

Follow @EngadgetDeals on Twitter and subscribe to the Engadget Deals newsletter for the latest tech deals and buying advice.

This article originally appeared on Engadget at https://www.engadget.com/the-2023-amazon-echo-show-8-is-back-down-to-its-record-low-price-of-90-184306499.html?src=rss

Originally appeared here:

The 2023 Amazon Echo Show 8 is back down to its record-low price of $90