Tag: tech

-

Audi’s new Q6 e-tron EV has smart taillights to communicate with fellow motorists

Audi hopes its new active digital light signature can help warn drivers behind through innovative OLED panels.

-

Top tech conferences: The ultimate tech events guide for April 2024

We’ve compiled the ultimate list of technology events so you don’t have to.

-

Another Ivanti service has been forced to patch multiple security flaws

Ivanti patched more than two dozen flaws in Avalanche MDM service, including two critical vulnerabilities.

-

Samsung Galaxy Z Flip 6 predicted price: how much is this foldable likely to cost?

The Samsung Galaxy Z Flip 6 could be a relatively affordable foldable phone choice. Here’s what we know about the price.

-

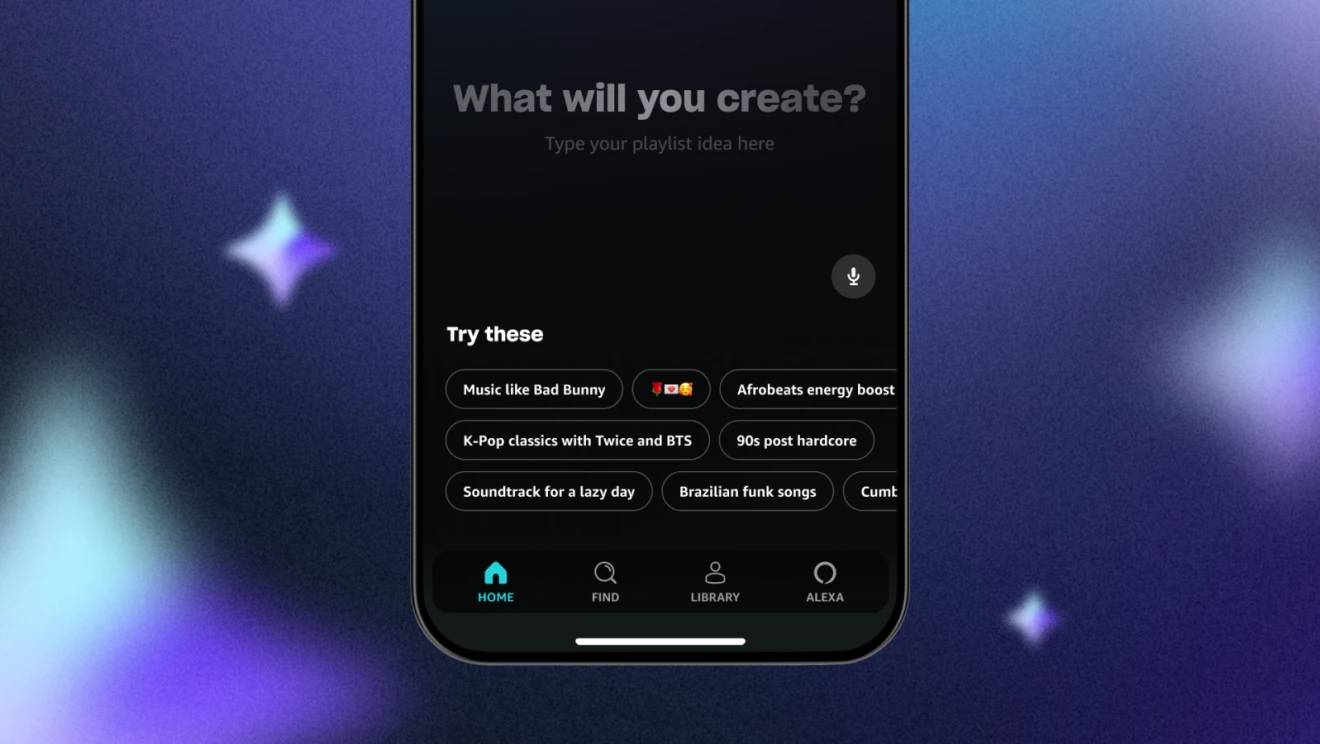

Amazon Music is coming for Spotify’s AI playlists with new Maestro feature

Following the release of Spotify’s AI playlist generator, Amazon Music has joined the craze with its own AI feature Maestro.

-

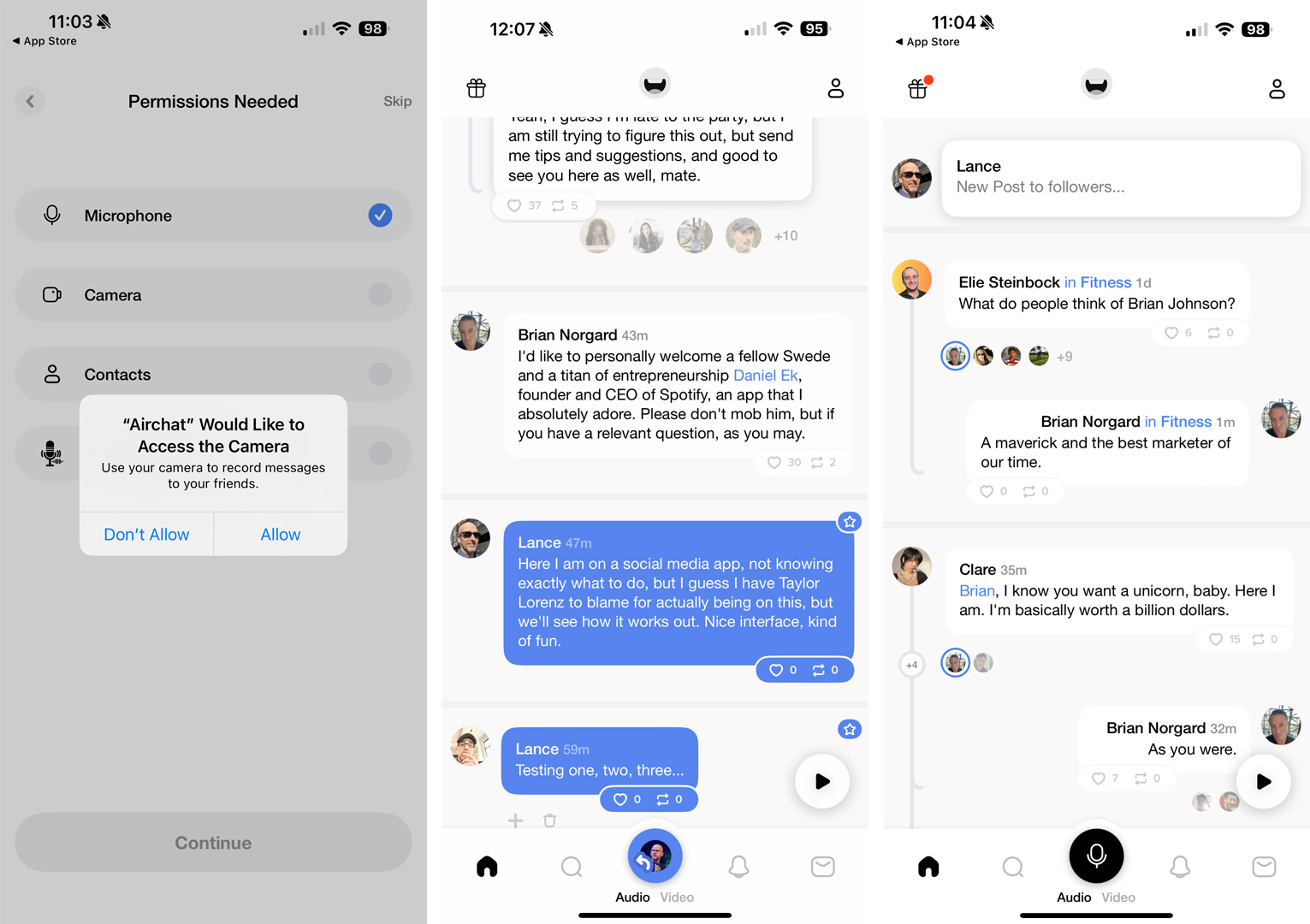

Airchat wants to make talk-only social media a thing, and I don’t even hate it

Airchat is a new audio-based social media platform that leaves typing behind and promises “just talk”.

-

Pushing RL Boundaries: Integrating Foundational Models, e.g. LLMs and VLMs, into Reinforcement Learning

In-Depth Exploration of Integrating Foundational Models such as LLMs and VLMs into RL Training Loop

Authors: Elahe Aghapour, Salar Rahili

Overview:

With the rise of the transformer architecture and high-throughput compute, training foundational models has turned into a hot topic recently. This has led to promising efforts to either integrate or train foundational models to enhance the capabilities of reinforcement learning (RL) algorithms, signaling an exciting direction for the field. Here, we’re discussing how foundational models can give reinforcement learning a major boost.

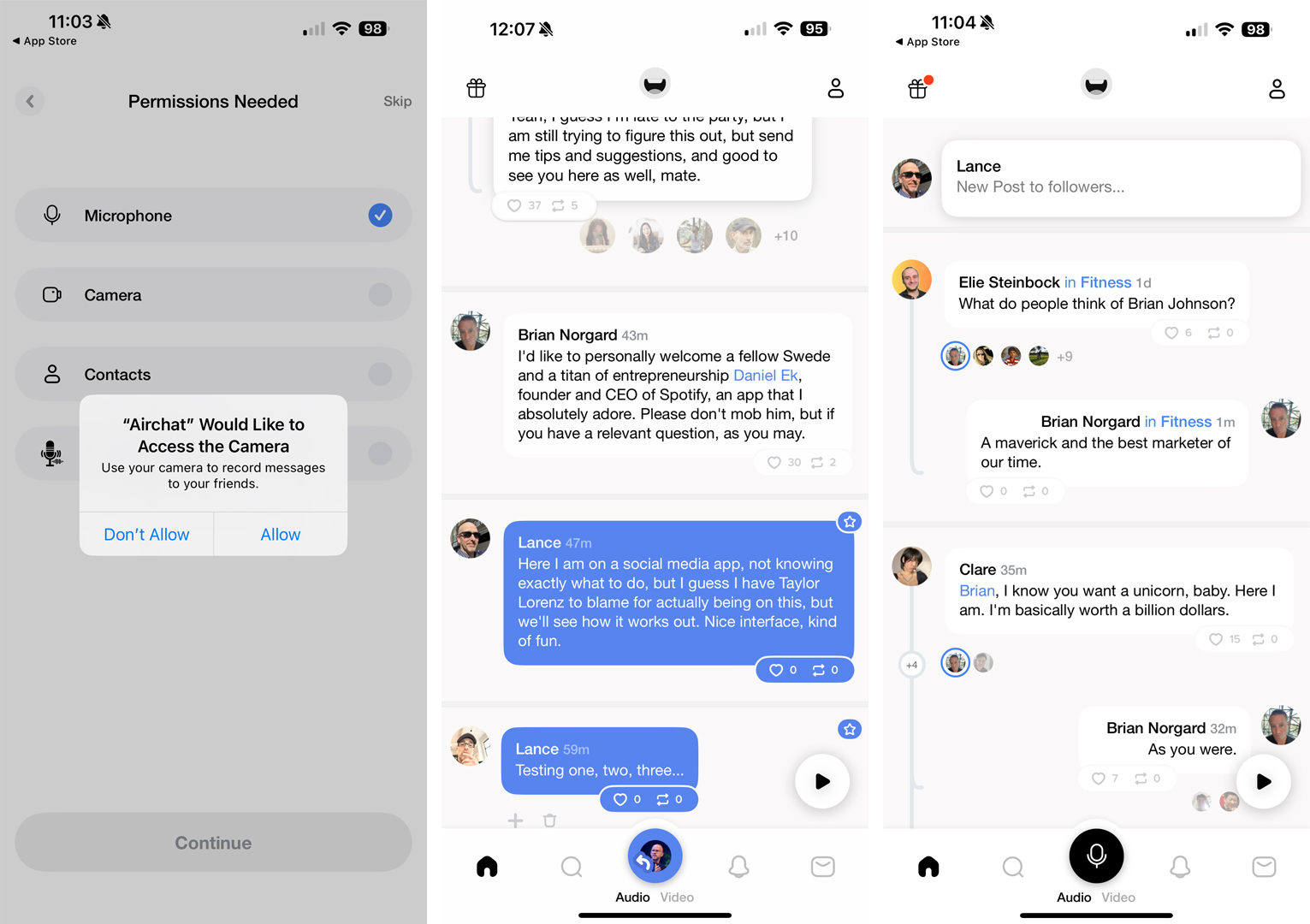

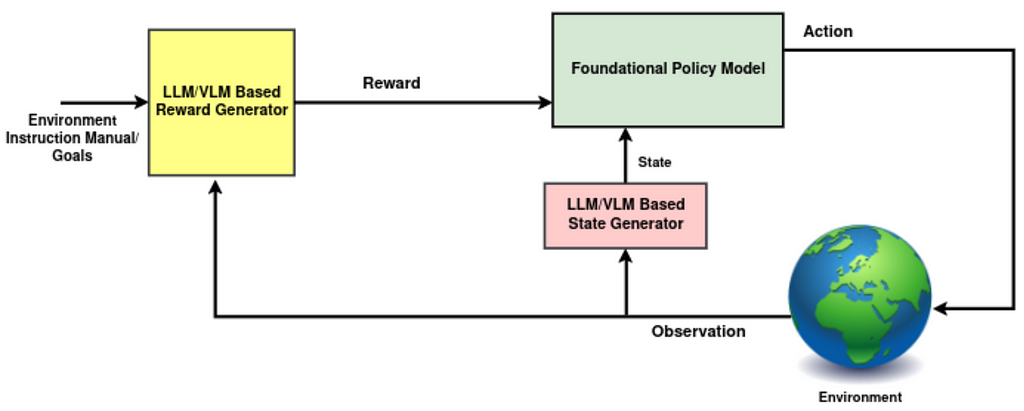

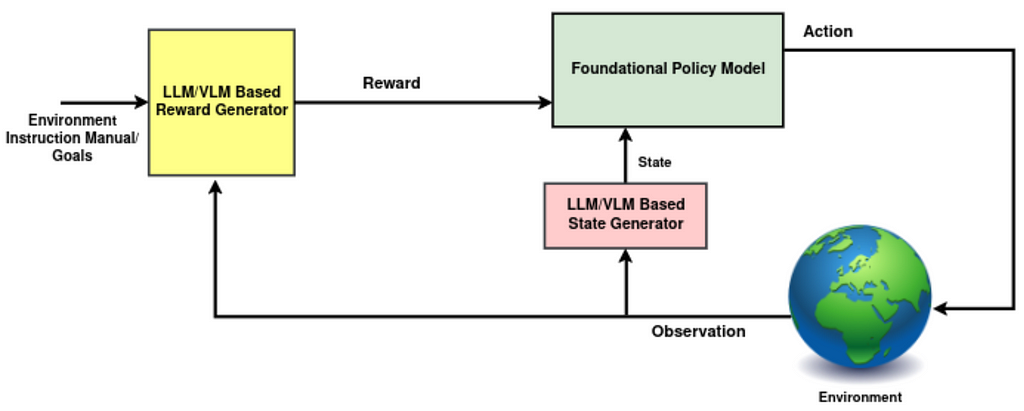

Before diving into the latest research on how foundational models can give reinforcement learning a major boost, let’s engage in a brainstorming session. Our goal is to pinpoint areas where pre-trained foundational models, particularly Large Language Models (LLMs) or Vision-Language Models (VLMs), could assist us, or how we might train a foundational model from scratch. A useful approach is to examine each element of the reinforcement learning training loop individually, to identify where there might be room for improvement:

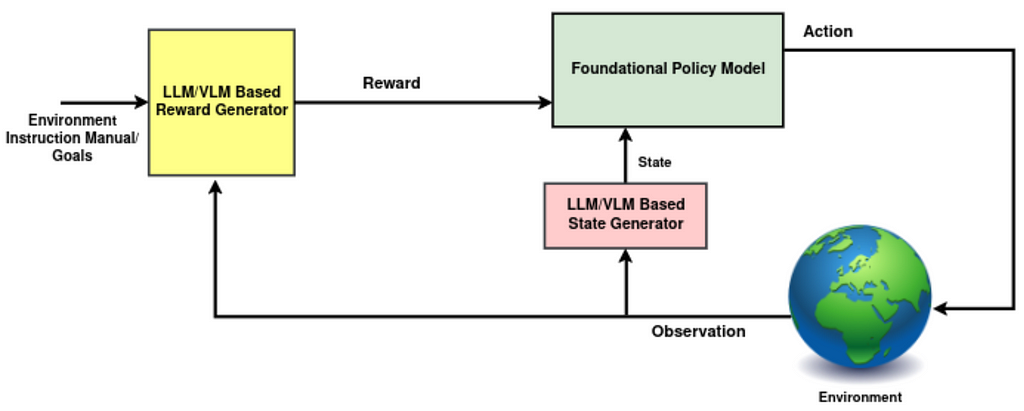

Fig 1: Overview of foundation models in RL (Image by author)

1- Environment: Given that pre-trained foundational models understand the causal relationships between events, they can be utilized to forecast environmental changes resulting from current actions. Although this concept is intriguing, we’re not yet aware of any specific studies that focus on it. There are two primary reasons holding us back from exploring this idea further for now:

- While the reinforcement learning training process demands highly accurate predictions for the next step observations, pre-trained LLMs/VLMs haven’t been directly trained on datasets that enable such precise forecasting and thus fall short in this aspect. It’s important to note, as we highlighted in our previous post, that a high-level planner, particularly one used in lifelong learning scenarios, could effectively incorporate a foundational model.

- Latency in environment steps is a critical factor that can constrain the RL algorithm, especially when working within a fixed budget for training steps. The presence of a very large model that introduces significant latency can be quite restrictive. Note that while it might be challenging, distillation into a smaller network can be a solution here.

2- State (LLM/VLM Based State Generator): While experts often use the terms observation and state interchangeably, there are distinctions between them. A state is a comprehensive representation of the environment, while an observation may only provide partial information. In the standard RL framework, we don’t often discuss the specific transformations that extract and merge useful features from observations, past actions, and any internal knowledge of the environment to produce “state”, the policy input. Such a transformation could be significantly enhanced by employing LLMs/VLMs, which allow us to infuse the “state” with broader knowledge of the world, physics, and history (refer to Fig. 1, highlighted in pink).

3- Policy (Foundational Policy Model): Integrating foundational models into the policy, the central decision-making component in RL, can be highly beneficial. Although employing such models to generate high-level plans has proven successful, transforming the state into low-level actions has challenges we’ll delve into later. Fortunately, there has been some promising research in this area recently.

4- Reward (LLM/VLM Based Reward Generator): Leveraging foundational models to more accurately assess chosen actions within a trajectory has been a primary focus among researchers. This comes as no surprise, given that rewards have traditionally served as the communication channel between humans and agents, setting goals and guiding the agent towards what is desired.

- Pre-trained foundational models come with a deep knowledge of the world, and injecting this kind of understanding into our decision-making processes can make those decisions more in tune with human desires and more likely to succeed. Moreover, using foundational models to evaluate the agent’s actions can quickly trim down the search space and equip the agent with a head start in understanding, as opposed to starting from scratch.

- Pre-trained foundational models have been trained on internet-scale data generated mostly by humans, which has enabled them to understand worlds similarly to humans. This makes it possible to use foundational models as cost-effective annotators. They can generate labels or assess trajectories or rollouts on a large scale.

1- Foundational models in reward

It is challenging to use foundational models to generate low-level control actions, because low-level actions depend heavily on the agent’s setting and are underrepresented in foundational models’ training data. Hence, foundation models are generally applied to high-level plans rather than low-level actions. The reward signal bridges the gap between the high-level planner and the low-level actions, and it is a place where foundation models can be used. Researchers have adopted various methodologies for integrating foundation models into reward assignment. However, the core principle revolves around employing a VLM/LLM to effectively track the progress towards a subgoal or task.

1.a Assigning reward values based on similarity

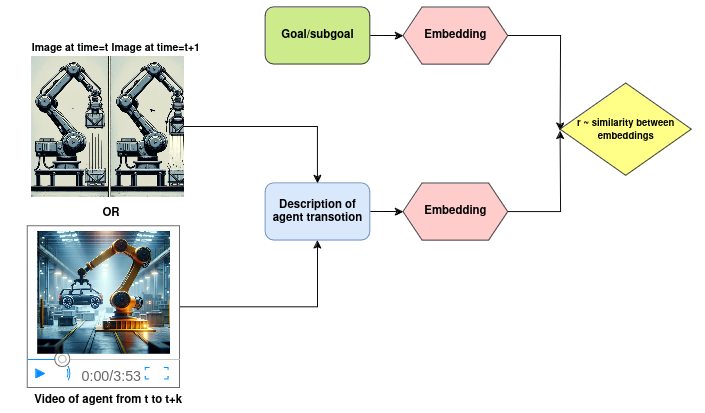

Consider the reward value as a signal that indicates whether the agent’s previous action was beneficial in moving towards the goal. A sensible method involves evaluating how closely the previous action aligns with the current objective. To put this approach into practice, as can be seen in Fig. 2, it’s essential to:

– Generate meaningful embeddings of these actions, which can be done through images, videos, or text descriptions of the most recent observation.

– Generate meaningful representations of the current objective.

– Assess the similarity between these representations.

Fig 2. Reward values based on similarity (Image by author).

Let’s explore the specific mechanics behind the leading research in this area.

Dense and well-shaped reward functions enhance the stability and training speed of the RL agent, but many environments provide only sparse rewards. Intrinsic rewards address this challenge by rewarding the agent for exploring novel states. However, in large environments where most of the unseen states are irrelevant to the downstream task, this approach becomes less effective. ELLM uses the background knowledge of an LLM to shape the exploration. It queries the LLM to generate a list of possible goals/subgoals, given a list of the agent’s available actions and a text description of the agent’s current observation produced by a state captioner. Then, at each time step, the reward is computed as the semantic similarity (cosine similarity) between the LLM-generated goal and the description of the agent’s transition.
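To make the similarity-based reward concrete, here is a minimal sketch of an ELLM-style reward. It is not the authors' implementation: the bag-of-words `embed` function is a toy stand-in for a real sentence encoder, and the function names and the `threshold` parameter are assumptions made for illustration.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding (assumption: a real system would use a sentence encoder)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_reward(transition_caption: str, goals: List[str], threshold: float = 0.5) -> float:
    """Reward is the best similarity to any suggested goal, zeroed below a threshold."""
    if not goals:
        return 0.0
    caption_vec = embed(transition_caption)
    best = max(cosine_similarity(caption_vec, embed(goal)) for goal in goals)
    return best if best >= threshold else 0.0
```

Here `goals` plays the role of the LLM-suggested goal list and `transition_caption` the captioner's description of what the agent just did. Swapping `embed` for a learned text or video encoder would give the same structure as the CLIP-style variants discussed next.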

LiFT has a similar framework but also leverages CLIP4Clip-style VLMs for reward assignment. CLIP4Clip is pre-trained to align videos and corresponding language descriptions through contrastive learning. In LiFT, the agent is rewarded based on the alignment score (cosine similarity) between the task instructions and videos of the agent’s corresponding behavior, both encoded by CLIP4Clip.

UAFM has a similar framework where the main focus is on robotic manipulation tasks, e.g., stacking a set of objects. For reward assignment, they measure the similarity between the agent state image and the task description, both embedded by CLIP. They finetune CLIP on a small amount of data from the simulated stacking domain to be more aligned in this use case.

1.b Assigning rewards through reasoning on auxiliary tasks:

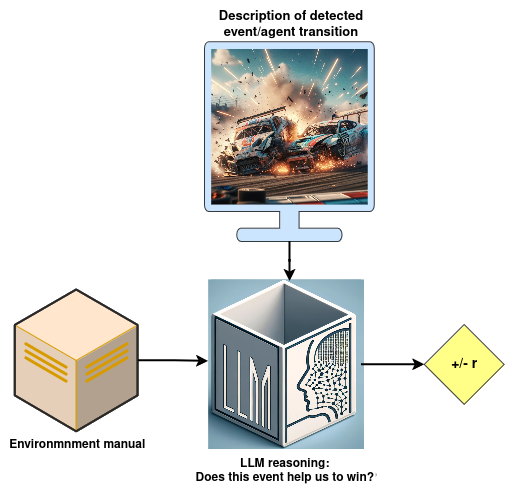

In scenarios where the foundational model has the proper understanding of the environment, it becomes feasible to directly pass the observations within a trajectory to the model, LLM/VLM. This evaluation can be done either through straightforward QA sessions based on the observations or by verifying the model’s capability in predicting the goal only by looking at the observation trajectory.

Fig 3. Assigning reward through reasoning (Image by author).

Read and Reward integrates the environment’s instruction manual into reward generation through two key components, as can be seen in Fig. 3:

- QA extraction module: it creates a summary of game objectives and features. This LLM-based module, RoBERTa-large, takes in the game manual and a question, and extracts the corresponding answer from the text. Questions focus on the game objective and on agent-object interactions, which are identified by their significance using TF-IDF. For each critical object, a question such as “What happens when the player hits a <object>?” is added to the question set. A summary is then formed by concatenating all non-empty question-answer pairs.

- Reasoning module: During gameplay, a rule-based algorithm detects “hit” events. Following each “hit” event, the LLM based reasoning module is queried with the summary of the environment and a question: “Should you hit a <object of interaction> if you want to win?” where the possible answer is limited to {yes, no}. A “yes” response adds a positive reward, while “no” leads to a negative reward.
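The reasoning step above can be sketched as a small function that turns a yes/no answer into a reward. This is an illustrative sketch only: `ask_llm` is a hypothetical callable standing in for whatever LLM the reasoning module uses, `fake_llm` is a dummy for demonstration, and the reward magnitude `bonus` is an assumption.

```python
from typing import Callable


def hit_reward(summary: str, obj: str, ask_llm: Callable[[str], str], bonus: float = 1.0) -> float:
    """Map the LLM's yes/no answer about a detected "hit" event to a reward."""
    question = f"Should you hit a {obj} if you want to win?"
    answer = ask_llm(f"{summary}\n{question}").strip().lower().rstrip(".")
    if answer == "yes":
        return bonus
    if answer == "no":
        return -bonus
    # Anything outside {yes, no} is treated as uninformative.
    return 0.0


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; it only looks at the question line."""
    question = prompt.splitlines()[-1]
    return "Yes" if "coin" in question else "No"
```

In a real loop, `hit_reward` would be called only when the rule-based detector reports a "hit" event, so the LLM is queried far less often than once per environment step.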

EAGER introduces a unique method for creating intrinsic rewards through a specially designed auxiliary task. This approach presents a novel concept where the auxiliary task involves predicting the goal based on the current observation. If the model predicts accurately, this indicates a strong alignment with the intended goal, and thus, a larger intrinsic reward is given based on the prediction confidence level. To accomplish this, two modules are employed:

- Question Generation (QG): This component works by masking all nouns and adjectives in the detailed objective provided by the user.

- Question Answering (QA): This is a model trained in a supervised manner, which takes the observation, question masks, and actions, and predicts the masked tokens.

(P.S. Although this work does not utilize a foundational model, we’ve included it here due to its intriguing approach, which can be easily adapted to any pre-trained LLM)

1.c Generating reward function code

Up to this point, we’ve discussed generating reward values directly for the reinforcement learning algorithms. However, running a large model at every step of the RL loop can significantly slow down the speed of both training and inference. To bypass this bottleneck, one strategy involves utilizing our foundational model to generate the code for the reward function. This allows for the direct generation of reward values at each step, streamlining the process.

For the code generation schema to work effectively, two key components are required:

1- A code generator, LLM, which receives a detailed prompt containing all the necessary information to craft the code.

2- A refinement process that evaluates and enhances the code in collaboration with the code generator.

Let’s look at the key contributions for generating reward code:

R2R2S generates reward function code through two main components:

- LLM based motion descriptor: This module leverages an LLM to understand the desired motion and describe it by filling in a pre-defined template of robot movements, replacing placeholders (e.g. “Destination Point Coordinate”) with specific details.

- LLM based reward coder: this component generates the reward function by processing a prompt containing: a motion description, a list of functions (with their descriptions) that the LLM can use to generate the reward function code, example code showing what the response should look like, and the constraints and rules the reward function must follow.

Text2Reward develops a method to generate dense reward functions as executable code with iterative refinement. Given the subgoal of the task, it has two key components:

- LLM-based reward coder: generates reward function code. Its prompt consists of: an abstract of the observation and available actions; a compact, Pythonic-style representation of the environment that captures the configuration of the objects, robot, and callable functions; background knowledge for reward function design (e.g. “reward function for task X typically includes a term for the distance between object x and y”); and few-shot examples. They assume access to a pool of instruction and reward-function pairs, from which the top-k most similar instructions are retrieved as few-shot examples.

- LLM-Based Refinement: once the reward code is generated, it is executed to identify syntax errors and runtime errors. This feedback is integrated into subsequent prompts to generate more refined reward functions. Additionally, human feedback is requested based on a video of the current policy executing the task.
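The execution-feedback part of this refinement loop can be sketched with nothing more than Python's built-in `compile` and `exec`. This is a minimal sketch under stated assumptions, not Text2Reward's actual code: the entry-point name `compute_reward`, the dictionary observation, and the error-message format are all hypothetical, and generated code should be run in a sandbox in any real setting.

```python
from typing import Optional


def check_reward_code(source: str, sample_obs: dict) -> Optional[str]:
    """Run generated reward code once; return None if it works, else an error message
    that could be appended to the next prompt."""
    namespace: dict = {}
    try:
        # compile() surfaces syntax errors; exec() defines the function.
        exec(compile(source, "<generated_reward>", "exec"), namespace)
    except SyntaxError as err:
        return f"SyntaxError: {err.msg} (line {err.lineno})"
    except Exception as err:
        return f"Error while defining the reward code: {type(err).__name__}: {err}"

    reward_fn = namespace.get("compute_reward")  # assumed entry-point name
    if not callable(reward_fn):
        return "No callable named compute_reward was defined"

    try:
        float(reward_fn(sample_obs))  # the reward must be convertible to a number
    except Exception as err:
        return f"Runtime error when calling compute_reward: {type(err).__name__}: {err}"
    return None
```

A refinement loop would call this after each generation and, if the result is not `None`, ask the LLM to regenerate the code with the returned message included in the prompt.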

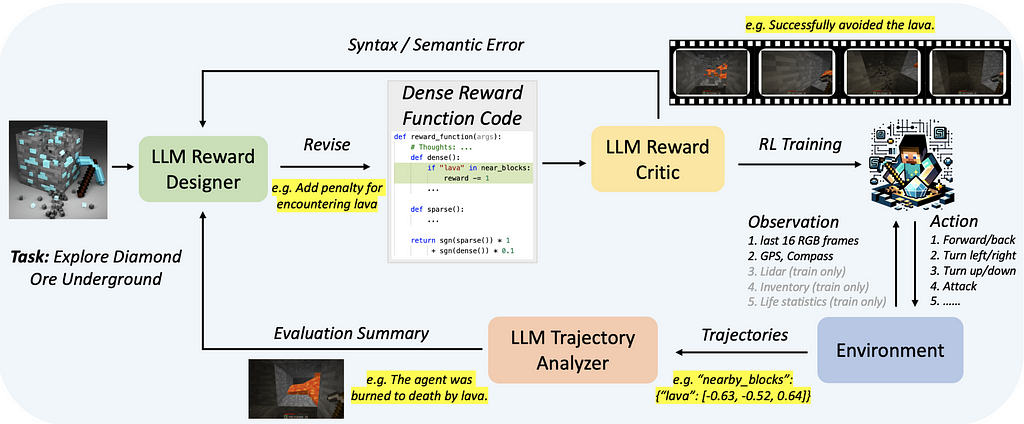

Auto MC-Reward has a similar algorithm to Text2Reward, to generate the reward function code, see Fig. 4. The main difference is in the refinement stage where it has two modules, both LLMs:

- LLM-Based Reward Critic: It evaluates the code and provides feedback on whether the code is self-consistent and free of syntax and semantic errors.

- LLM-Based Trajectory Analyser: It reviews the historical information of the interaction between the trained agent and the environment and uses it to guide the modifications of the reward function.

Fig 4. Overview of Auto MC-Reward (image taken from Auto MC-Reward paper)

EUREKA generates reward code without the need for task-specific prompting, predefined reward templates, or predefined few-shot examples. To achieve this goal, it has two stages:

- LLM-based code generation: The raw environment code, the task, generic reward design and formatting tips are fed to the LLM as context and LLM returns the executable reward code with a list of its components.

- Evolutionary search and refinement: At each iteration, EUREKA queries the LLM to generate several i.i.d reward functions. Training an agent with executable reward functions provides feedback on how well the agent is performing. For a detailed and focused analysis of the rewards, the feedback also includes scalar values for each component of the reward function. The LLM takes top-performing reward code along with this detailed feedback to mutate the reward code in-context. In each subsequent iteration, the LLM uses the top reward code as a reference to generate K more i.i.d reward codes. This iterative optimization continues until a specified number of iterations has been reached.

Within these two steps, EUREKA is able to generate reward functions that outperform expert human-engineered rewards without any task specific templates.
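The shape of this generate-evaluate-mutate loop can be sketched as follows. This is a toy illustration, not EUREKA itself: a list of floats stands in for a piece of reward code, `propose` stands in for the LLM call (conditioned on the current best candidate), and `evaluate` stands in for training an agent and measuring its performance. All names and the demo objective are assumptions.

```python
import random
from typing import Callable, List, Optional, Tuple

Candidate = List[float]  # toy stand-in for a piece of reward code


def evolutionary_search(
    propose: Callable[[Optional[Candidate]], Candidate],
    evaluate: Callable[[Candidate], float],
    iterations: int,
    k: int,
) -> Tuple[Optional[Candidate], float]:
    """Keep the best candidate so far and sample k new ones around it each iteration."""
    best: Optional[Candidate] = None
    best_score = float("-inf")
    for _ in range(iterations):
        # All k samples in one iteration share the same reference candidate.
        candidates = [propose(best) for _ in range(k)]
        for candidate in candidates:
            score = evaluate(candidate)
            if score > best_score:
                best, best_score = candidate, score
    return best, best_score


def run_demo(iterations: int, k: int = 4, seed: int = 0) -> Tuple[Optional[Candidate], float]:
    """Toy run: 'fitness' is the negative squared distance to a hidden target vector."""
    rng = random.Random(seed)
    target = [1.0, -2.0]

    def propose(reference: Optional[Candidate]) -> Candidate:
        if reference is None:  # first iteration: sample from scratch
            return [rng.uniform(-3.0, 3.0) for _ in target]
        return [w + rng.gauss(0.0, 0.3) for w in reference]  # "mutate" the best so far

    def evaluate(candidate: Candidate) -> float:
        return -sum((w - t) ** 2 for w, t in zip(candidate, target))

    return evolutionary_search(propose, evaluate, iterations, k)
```

In the real method, the feedback passed back to the LLM is richer than a single score (it includes per-component statistics), which this sketch deliberately omits.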

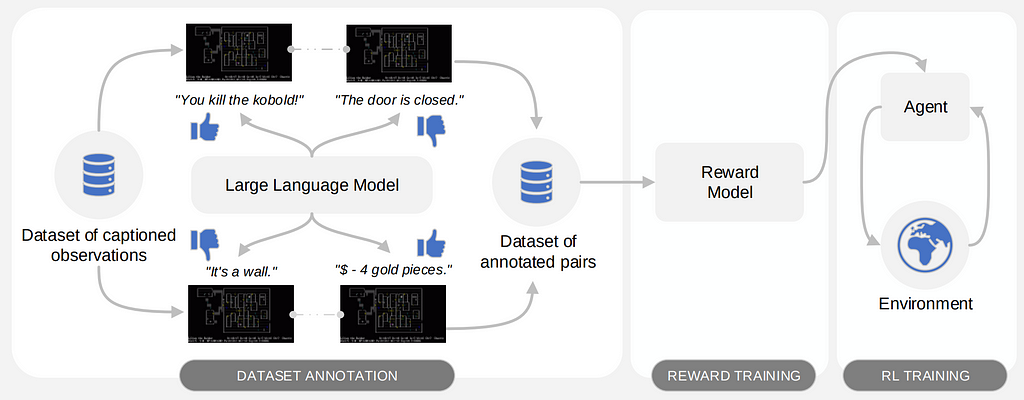

1.d. Train a reward model based on preferences (RLAIF)

An alternative method is to use a foundational model to generate data for training a reward function model. The significant successes of Reinforcement Learning from Human Feedback (RLHF) have recently drawn increased attention towards employing trained reward functions on a larger scale. The heart of such algorithms is the use of a preference dataset to train a reward model which can subsequently be integrated into reinforcement learning algorithms. Given the high cost associated with generating preference data (e.g., action A is preferable to action B) through human feedback, there’s growing interest in constructing this dataset by obtaining feedback from an AI agent, i.e. VLM/LLM. Training a reward function using AI-generated data and integrating it within a reinforcement learning algorithm is known as Reinforcement Learning from AI Feedback (RLAIF).

MOTIF requires access to a passive dataset of observations with sufficient coverage. Initially, an LLM is queried with a summary of desired behaviors within the environment and a text description of two randomly sampled observations. It then generates the preference, selecting between 1, 2, or 0 (indicating no preference), as seen in Fig. 5. This process constructs a dataset of preferences between observation pairs. Subsequently, this dataset is used to train a reward model employing preference-based RL techniques.
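As a rough illustration of the last step, the sketch below fits a linear reward model to labelled observation pairs with a Bradley-Terry style cross-entropy loss, a common choice in preference-based RL. The source does not specify MOTIF's exact loss or architecture, so the linear model, the feature vectors, and the handling of label 0 as a 0.5 target are assumptions.

```python
import math
from typing import List, Sequence, Tuple

# (features of observation 1, features of observation 2, label in {1, 2, 0})
Pair = Tuple[Sequence[float], Sequence[float], int]


def reward(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear reward model r(x) = w . x (assumption: real systems use a neural network)."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(pairs: List[Pair], dim: int, lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Fit weights so that sigmoid(r(x1) - r(x2)) matches the annotated preference."""
    # Target probability that observation 1 is preferred; 0 means "no preference".
    targets = {1: 1.0, 2: 0.0, 0: 0.5}
    weights = [0.0] * dim
    for _ in range(epochs):
        for x1, x2, label in pairs:
            diff = [a - b for a, b in zip(x1, x2)]
            p_first = 1.0 / (1.0 + math.exp(-reward(weights, diff)))
            # Gradient of the cross-entropy loss with respect to the weights.
            grad_scale = p_first - targets[label]
            weights = [w - lr * grad_scale * d for w, d in zip(weights, diff)]
    return weights
```

Once trained, `reward(weights, features)` can be evaluated at every step of the RL loop without calling the LLM again, which is the main practical appeal of this approach.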

Fig 5. A schematic representation of the three phases of MOTIF (image taken from MOTIF paper)

2- Foundation models as Policy

Achieving the capability to train a foundational policy that not only excels in tasks previously encountered but also possesses the ability to reason about and adapt to new tasks using past learning, is an ambition within the RL community. Such a policy would ideally generalize from past experiences to tackle novel situations and, through environmental feedback, achieve goals previously unseen with human-like adaptability.

However, several challenges stand in the way of training such agents. Among these challenges are:

- The necessity of managing a very large model, which introduces significant latency into the decision-making process for low-level control actions.

- The requirement to collect a vast amount of interaction data across a wide array of tasks to enable effective learning.

- Additionally, the process of training a very large network from scratch using RL introduces extra complexities. This is because backpropagation is inherently less efficient in RL than in supervised training methods.

Up to now, it’s mostly been teams with substantial resources and top-notch setups who’ve really pushed the envelope in this domain.

AdA paved the way for training an RL foundation model within the X.Land 2.0 3D environment. This model achieves human time-scale adaptation on held-out test tasks without any further training. The model’s success is founded on three ingredients:

- The core of AdA’s learning mechanism is a Transformer-XL architecture ranging from 23 to 265 million parameters, employed alongside the Muesli RL algorithm. Transformer-XL takes in a trajectory of observations, actions, and rewards from time t to T and outputs a sequence of hidden states for each time step. The hidden state is utilized to predict reward, value, and action distribution π. The combination of both long-term and short-term memory is critical for fast adaptation. Long-term memory is achieved through slow gradient updates, whereas short-term memory can be captured within the context length of the transformer. This unique combination allows the model to preserve knowledge across multiple task attempts by retaining memory across trials, even though the environment resets between trials.

- The model benefits from meta-RL training across 10⁴⁰ different partially observable Markov decision process (POMDP) tasks. Since transformers are meta-learners, no additional meta step is required.

- Given the size and diversity of the task pool, many tasks will either be too easy or too hard to generate a good training signal. To tackle this, they used an automated curriculum to prioritize tasks at the frontier of the agent’s capabilities.

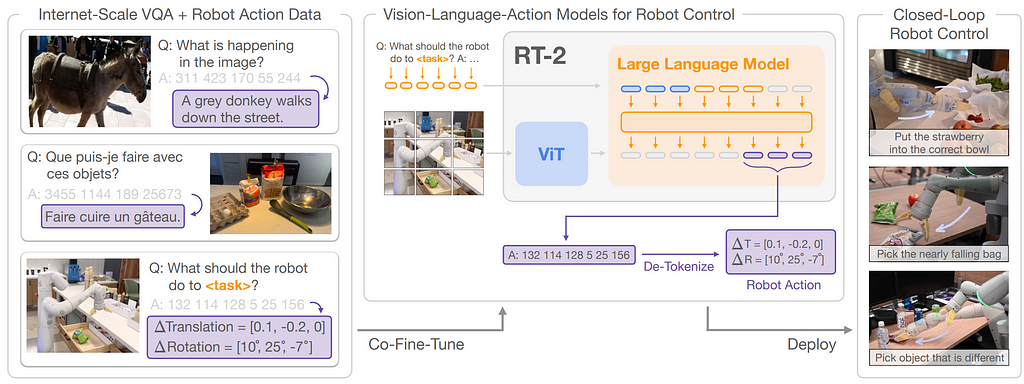

RT-2 introduces a method to co-finetune a VLM on both robotic trajectory data and vision-language tasks, resulting in a policy model called RT-2. To enable vision-language models to generate low-level actions, actions are discretized into 256 bins and represented as language tokens.

By representing actions as language tokens, RT-2 can directly utilize pre-existing VLM architectures without requiring substantial modifications. Hence, VLM input includes robot camera image and textual task description formatted similarly to Vision Question Answering tasks and the output is a series of language tokens that represent the robot’s low-level actions; see Fig. 6.
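The discretization idea can be illustrated with a few lines of code. This is a minimal sketch of uniform binning, not RT-2's actual tokenizer: the action range `[low, high]`, the function names, and the space-separated string format are assumptions for illustration.

```python
from typing import List, Sequence


def discretize(value: float, low: float, high: float, bins: int = 256) -> int:
    """Map a continuous value in [low, high] to an integer bin index in [0, bins - 1]."""
    clipped = min(max(value, low), high)
    index = int((clipped - low) / (high - low) * bins)
    return min(index, bins - 1)  # the upper edge falls into the last bin


def undiscretize(index: int, low: float, high: float, bins: int = 256) -> float:
    """Map a bin index back to the centre of its bin."""
    width = (high - low) / bins
    return low + (index + 0.5) * width


def action_to_tokens(action: Sequence[float], low: float, high: float) -> str:
    """Render each action dimension as an integer token, separated by spaces."""
    return " ".join(str(discretize(a, low, high)) for a in action)


def tokens_to_action(tokens: str, low: float, high: float) -> List[float]:
    """Decode a space-separated token string back into continuous values."""
    return [undiscretize(int(t), low, high) for t in tokens.split()]
```

With 256 bins, the round trip loses at most half a bin width per dimension, which is the precision the policy gives up in exchange for reusing the language model's output vocabulary.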

Fig 6. RT-2 overview (image taken from RT-2 paper)

They noticed that co-finetuning on both types of data with the original web data leads to more generalizable policies. The co-finetuning process equips RT-2 with the ability to understand and execute commands that were not explicitly present in its training data, showcasing remarkable adaptability. This approach enabled them to leverage internet-scale pretraining of VLM to generalize to novel tasks through semantic reasoning.

3- Foundation Models as State Representation

In RL, a policy’s understanding of the environment at any given moment comes from its “state” which is essentially how it perceives its surroundings. Looking at the RL block diagram, a reasonable module to inject world knowledge into is the state. If we can enrich observations with general knowledge useful for completing tasks, the policy can pick up new tasks much faster compared to RL agents that begin learning from scratch.

PR2L introduces a novel approach to inject the background knowledge of VLMs from internet-scale data into RL. PR2L employs generative VLMs, which generate language in response to an image and a text input. As VLMs are proficient in understanding and responding to visual and textual inputs, they can provide a rich source of semantic features from observations to be linked to actions.

PR2L queries a VLM with a task-relevant prompt for each visual observation received by the agent, and receives both the generated textual response and the model’s intermediate representations. They discard the text and use some or all of the model’s intermediate representations, generated for the visual input, the text input, and the VLM’s generated textual response, as “promptable representations”. Due to the variable size of these representations, PR2L incorporates an encoder-decoder Transformer layer to embed all the information contained in the promptable representations into a fixed-size embedding. This embedding, combined with any available non-visual observation data, is then provided to the policy network, representing the state of the agent. This innovative integration allows the RL agent to leverage the rich semantic understanding and background knowledge of VLMs, facilitating more rapid and informed learning of tasks.

Also Read Our Previous Post: Towards AGI: LLMs and Foundational Models’ Roles in the Lifelong Learning Revolution

References:

[1] ELLM: Du, Yuqing, et al. “Guiding pretraining in reinforcement learning with large language models.” 2023.

[2] Text2Reward: Xie, Tianbao, et al. “Text2reward: Automated dense reward function generation for reinforcement learning.” 2023.

[3] R2R2S: Yu, Wenhao, et al. “Language to rewards for robotic skill synthesis.” 2023.

[4] EUREKA: Ma, Yecheng Jason, et al. “Eureka: Human-level reward design via coding large language models.” 2023.

[5] MOTIF: Klissarov, Martin, et al. “Motif: Intrinsic motivation from artificial intelligence feedback.” 2023.

[6] Read and Reward: Wu, Yue, et al. “Read and reap the rewards: Learning to play atari with the help of instruction manuals.” 2024.

[7] Auto MC-Reward: Li, Hao, et al. “Auto MC-reward: Automated dense reward design with large language models for minecraft.” 2023.

[8] EAGER: Carta, Thomas, et al. “Eager: Asking and answering questions for automatic reward shaping in language-guided RL.” 2022.

[9] LiFT: Nam, Taewook, et al. “LiFT: Unsupervised Reinforcement Learning with Foundation Models as Teachers.” 2023.

[10] UAFM: Di Palo, Norman, et al. “Towards a unified agent with foundation models.” 2023.

[11] RT-2: Brohan, Anthony, et al. “Rt-2: Vision-language-action models transfer web knowledge to robotic control.” 2023.

[12] AdA: Team, Adaptive Agent, et al. “Human-timescale adaptation in an open-ended task space.” 2023.

[13] PR2L: Chen, William, et al. “Vision-Language Models Provide Promptable Representations for Reinforcement Learning.” 2024.

[14] Clip4Clip: Luo, Huaishao, et al. “Clip4clip: An empirical study of clip for end to end video clip retrieval and captioning.” 2022.

[15] Clip: Radford, Alec, et al. “Learning transferable visual models from natural language supervision.” 2021.

[16] RoBERTa: Liu, Yinhan, et al. “Roberta: A robustly optimized bert pretraining approach.” 2019.

[17] Preference based RL: Wirth, Christian, et al. “A survey of preference-based reinforcement learning methods.” 2017.

[18] Muesli: Hessel, Matteo, et al. “Muesli: Combining improvements in policy optimization.” 2021.

[19] Melo, Luckeciano C. “Transformers are meta-reinforcement learners.” 2022.

[20] RLHF: Ouyang, Long, et al. “Training language models to follow instructions with human feedback.” 2022.

Pushing RL Boundaries: Integrating Foundational Models, e.g. was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


-

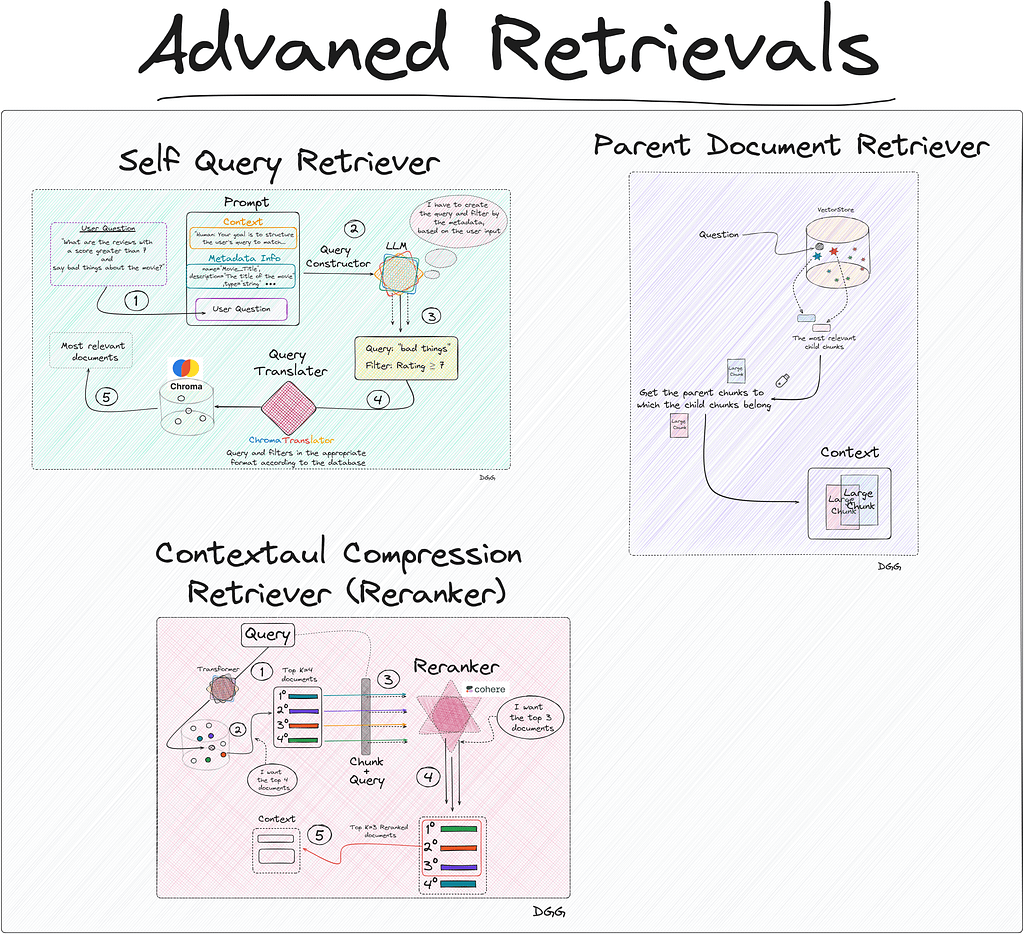

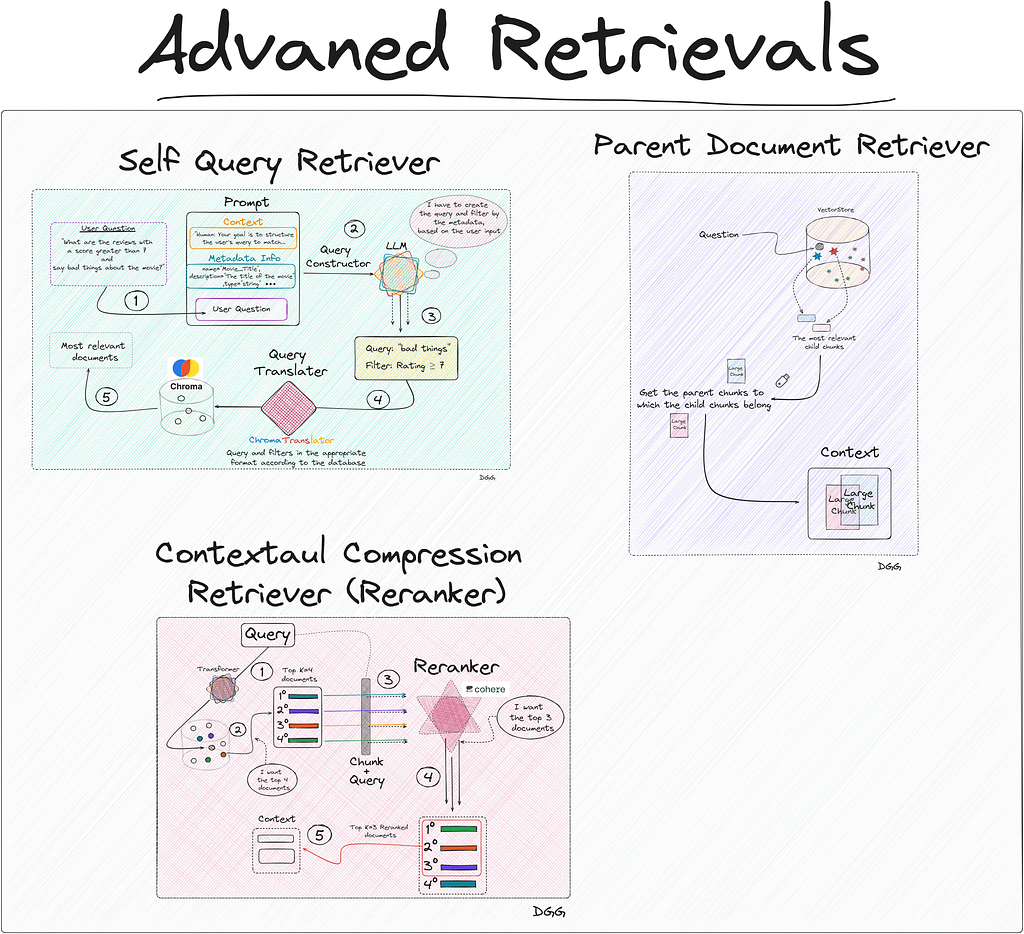

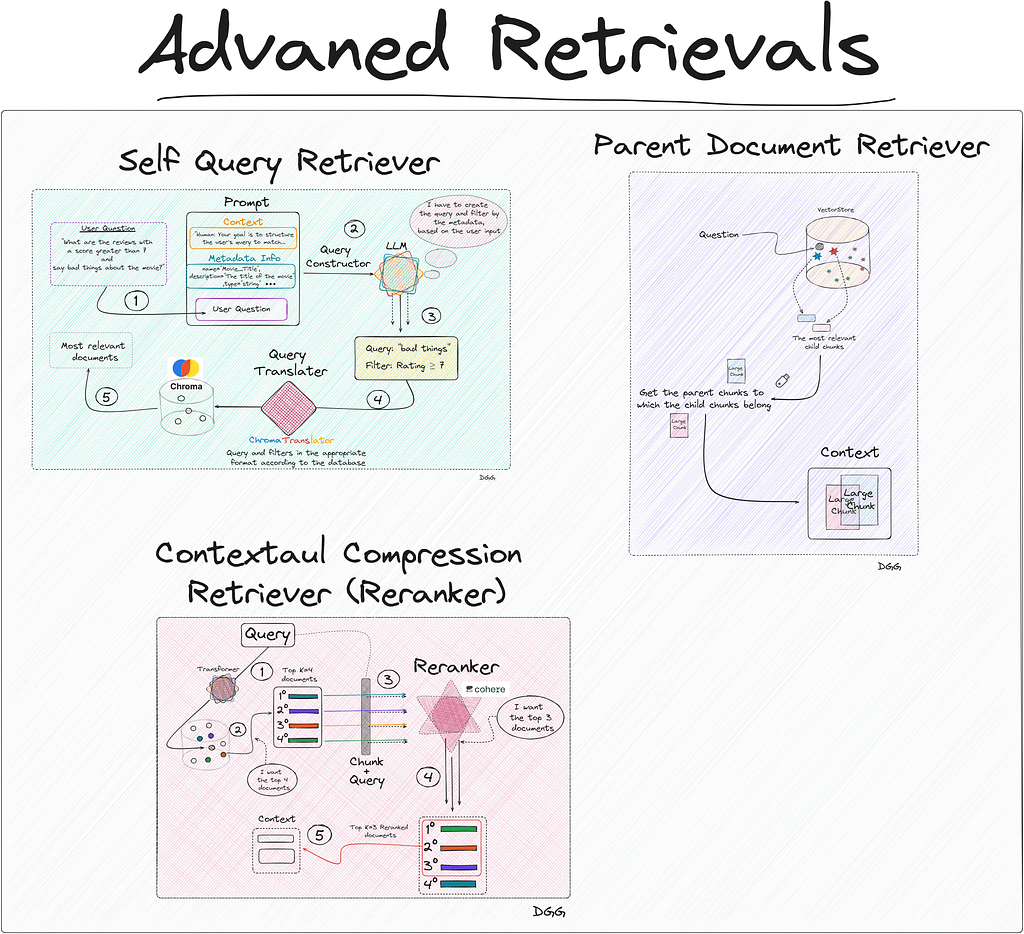

Advanced Retriever Techniques to Improve Your RAGs

Master Advanced Information Retrieval: Cutting-edge Techniques to Optimize the Selection of Relevant Documents with Langchain to Create Excellent RAGs

Content Table

· Introduction

· Vector Store Creation

· Method: Naive Retriever

· Method: Parent Document Retriever

· Method: Self Query Retriever

∘ Query Constructor

∘ Query Translator

· Method: Contextual Compression Retriever (Reranking)

· Conclusion

Introduction

Let’s briefly recall what the three letters of the acronym RAG stand for:

- Retrieval: The main objective of a RAG is to collect the most relevant documents/chunks regarding the query.

- Augmented: Create a well-structured prompt so that when the call is made to the LLM, it knows perfectly what its purpose is, what the context is and how it should respond.

- Generation: This is where the LLM comes into play. When the model is given good context (provided by the “Retrieval” step) and has clear instructions (provided by the “Augmented” step), it will generate high-value responses for the user.

As we can see, the quality of the response to a user’s query (if we apply a RAG for Q&A purposes) depends directly on how well we have built the “Augmented” step and, especially, the “Retrieval” step.
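To see how the three steps fit together, here is a deliberately tiny sketch of the pipeline. It is not how Langchain implements it: retrieval here is plain word overlap instead of vector similarity, the prompt template is made up, and `llm` is a hypothetical callable standing in for a real model.

```python
from typing import Callable, List


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank documents by how many query words they share (toy scoring)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def augment(query: str, context: List[str]) -> str:
    """Augmented: build a prompt that states the task, the context, and the question."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"


def generate(prompt: str, llm: Callable[[str], str]) -> str:
    """Generation: hand the prompt to the model (here any callable)."""
    return llm(prompt)
```

Whatever the LLM does in `generate`, it can only work with what `retrieve` returned, which is why the rest of the article concentrates on that first function.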

In this article we are going to focus exclusively on the “Retrieval” part. In this important process of returning the most relevant documents, the concept of vector store appears.

Overview of the techniques shown in this article (Image by Author).

To create these retrievers, we will use the Langchain library.

Overview of the technologies used in this article (Image by Author).

The vector store is nothing more than a vector database, which stores documents in vector format. This vector representation comes from the use of transformers. None of this should be new to you.

It is clear that the more robust and complete this vector store is, the better retriever we can run. We already know that the creation of this database is an art in itself. Depending on the size of the chunks or the embedding model we use, our RAG will be better or worse.

Let me make a clarification here:

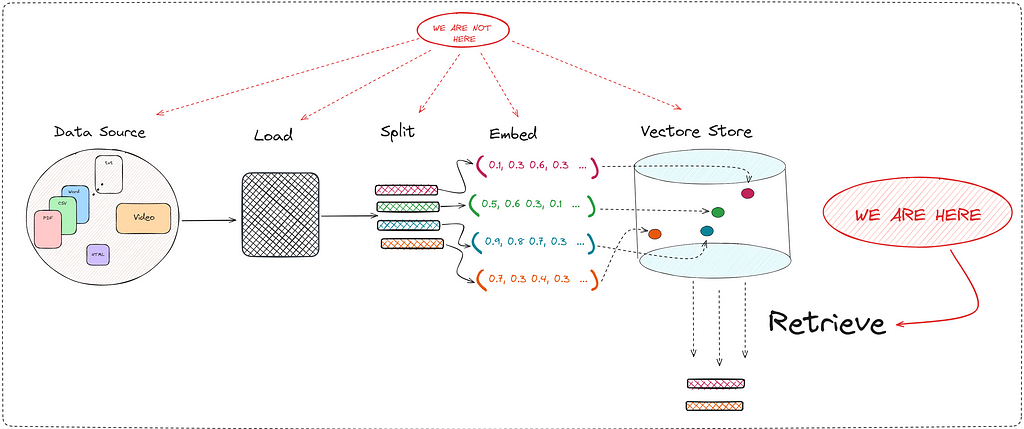

In this post we are NOT going to discuss how to create this vector store.

In this post we are going to discuss some of the techniques used to retrieve relevant documents.

Since a picture is worth a thousand words, I suggest you take a look at the following:

A RAG encompasses a series of well-defined steps. This post will only cover the retriever part (Image by Author).

Therefore, I reiterate that in this post we are going to deeply study one of the many important steps in creating a good RAG tool. The “Retrieve” step is key since it directly improves the context that the LLM has when generating a response.

The methods we will study are:

- Naive Retriever

- Parent Document Retriever

- Self-Query Retriever

- Contextual Compression Retriever (Reranking)

You can find the project with the notebooks here. And you can also take a look at my github:

damiangilgonzalez1995 – Overview

Vector Store Creation

To illustrate these methods, we will work through a practical use case. Specifically, we are going to create a RAG about reviews of the John Wick movies.

So that the reader can follow each step of this post, they can access the repository that I have created. In it you will find the code for each of the methods, in addition to the documents used to create the vector store. The jupyter notebook in charge of this task can be found in the git repository, and is the file called “0_create_vectore_db.ipynb”.

As for the data source of our RAG, there are four CSV files, each containing the reviews for one of the films in the John Wick saga. The files contain the following information:

Dataset of the project (Image by Author).

As you can see, the “Review” field will be the target of our retriever. The other fields are worth storing as metadata:

- Movie_Title

- Review_Date

- Review_Title

- Review_Url

- Author

- Rating

To read and convert each row of our files into the “Document” format, we execute the following code:

from langchain_community.document_loaders.csv_loader import CSVLoader

from datetime import datetime, timedelta

documents = []

for i in range(1, 4):

    loader = CSVLoader(

        encoding="utf8",

        file_path=f"data/john_wick_{i}.csv",

        metadata_columns=["Review_Date", "Review_Title", "Review_Url", "Author", "Rating"]

    )

    movie_docs = loader.load()

    for doc in movie_docs:

        # Add metadata with the number of the movie

        doc.metadata["Movie_Title"] = f"John Wick {i}"

        # Convert "Rating" to an `int`; if no rating is provided, default to 5

        doc.metadata["Rating"] = int(doc.metadata["Rating"]) if doc.metadata["Rating"] else 5

    documents.extend(movie_docs)

We already have our documents in “Document” format:

print(documents[0])

Document(page_content=": 0\nReview: The best way I can describe John Wick is to picture Taken but instead of Liam Neeson it's Keanu Reeves and instead of his daughter it's his dog. That's essentially the plot of the movie. John Wick (Reeves) is out to seek revenge on the people who took something he loved from him. It's a beautifully simple premise for an action movie - when action movies get convoluted, they get bad i.e. A Good Day to Die Hard. John Wick gives the viewers what they want: Awesome action, stylish stunts, kinetic chaos, and a relatable hero to tie it all together. John Wick succeeds in its simplicity.", metadata={'source': 'data/john_wick_1.csv', 'row': 0, 'Review_Date': '6 May 2015', 'Review_Title': ' Kinetic, concise, and stylish; John Wick kicks ass.\n', 'Review_Url': '/review/rw3233896/?ref_=tt_urv', 'Author': 'lnvicta', 'Rating': 8, 'Movie_Title': 'John Wick 1', 'last_accessed_at': datetime.datetime(2024, 4, 8, 11, 49, 47, 92560)})

We now only have to create a vector database (vector store) locally. For this, I have used Chroma. Keep in mind that an embedding model is also needed to transform our documents into vectors for storage. All of this can be seen in the following piece of code:

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

import os

from dotenv import load_dotenv

load_dotenv()

os.environ["OPENAI_API_KEY"] = os.getenv('OPENAI_KEY')

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

db = Chroma.from_documents(documents=documents, embedding=embeddings, collection_name="doc_jonhWick", persist_directory="./jonhWick_db")

This creates a local database in the “jonhWick_db” directory. It is the database our RAG will use, and from which our retriever will obtain the documents most relevant to the user’s queries.

Now is the time to present the different methods for creating a retriever.

Method: Naive Retriever

Code in 1_naive_retriever.ipynb file.

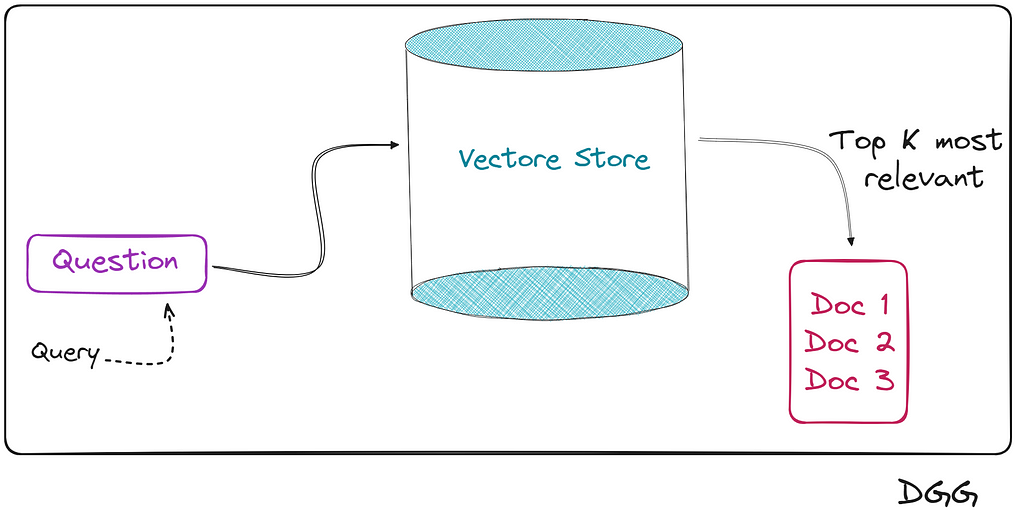

This method is the simplest, as its name indicates. We call it naive because, when we send the query to our database, we simply hope (naively) that it will return the most relevant documents/chunks.

What happens is that we encode the user query with the same embedding model used to create the vector store. Once we have its vector representation, we compute its similarity to each stored chunk using a metric such as cosine similarity or Euclidean distance.

And we collect the top K documents closest/similar to the query.
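The idea can be sketched in a few lines of plain Python. This is only a minimal illustration, assuming cosine similarity as the metric; the three-dimensional vectors and the names `cosine_similarity`, `top_k` and `doc_vecs` are made up for the example and are not what Chroma does internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Toy "embeddings" (hypothetical values, real ones have hundreds of dimensions).
doc_vecs = {
    "review_a": [0.9, 0.1, 0.0],
    "review_b": [0.1, 0.9, 0.0],
    "review_c": [0.7, 0.3, 0.1],
}
query_vec = [1.0, 0.0, 0.0]

print(top_k(query_vec, doc_vecs, k=2))
```

A real vector store replaces the linear scan with an index, but the ranking logic is the same: score every candidate against the query and keep the best K.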

The flow of this type of retriever can be seen in the following image:

Simplified representation of a Naive retriever (Image by Author).

Keeping the scheme in mind, let’s see how all this looks in the code. We read the database:

from langchain_community.vectorstores import Chroma

from langchain_openai import OpenAIEmbeddings

import os

from dotenv import load_dotenv

load_dotenv()

os.environ["OPENAI_API_KEY"] = os.getenv('OPENAI_KEY')

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

vectordb= Chroma(persist_directory="./jonhWick_db",

embedding_function=embeddings,

collection_name="doc_jonhWick")

And we create our retriever. We can configure the similarity calculation method, in addition to other parameters.

Retriever

# Specifying top k

naive_retriever = vectordb.as_retriever(search_kwargs={ "k" : 10})

# Similarity score threshold retrieval

# naive_retriever = vectordb.as_retriever(search_kwargs={"score_threshold": 0.8}, search_type="similarity_score_threshold")

# Maximum marginal relevance retrieval

# naive_retriever = vectordb.as_retriever(search_type="mmr")

We have now created our “Naive Retriever”, but to see how it works we will build the complete RAG, which, as a reminder, is composed of the following components:

- R (Retrieval): Done

- A (Augmented): Not yet

- G (Generation): Not yet

Augmented & Generation

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

# Augmented

TEMPLATE = """

You are happy assistant. Use the context provided below to answer the question.

If you do not know the answer, or are unsure, say you don't know.

Query:

{question}

Context:

{context}

"""

rag_prompt = ChatPromptTemplate.from_template(TEMPLATE)

# Generation

chat_model = ChatOpenAI()

We now have the 3 components of our RAG. All that remains is to assemble them, and for this we will use LangChain chains.

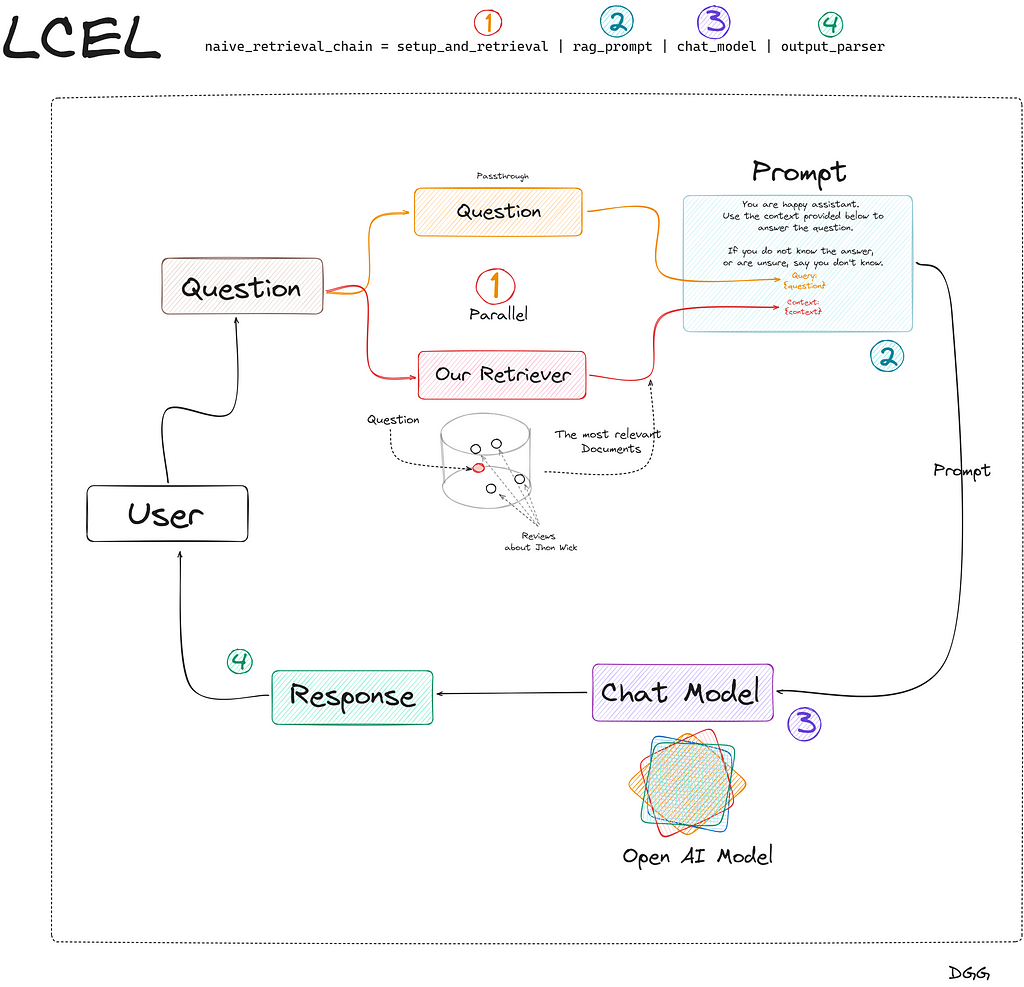

You may not be familiar with the language LangChain created for building chains more efficiently. It is known as LCEL (LangChain Expression Language). If you are new to this way of creating chains in LangChain, I recommend going through a good tutorial on it.
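As a rough mental model of what the `|` operator does in a chain, here is a toy sketch in plain Python. It is not LangChain's implementation: the `Step` class and the four stand-in steps are hypothetical and only show how each step's output becomes the next step's input.

```python
class Step:
    """Toy stand-in for a runnable: wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # `a | b` builds a new step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)

# Hypothetical stand-ins for the retrieval, prompt, model and parser steps.
retrieve = Step(lambda q: {"question": q,
                           "context": ["John Wick is a great action movie."]})
prompt = Step(lambda d: f"Context: {d['context']}\nQuery: {d['question']}")
fake_model = Step(lambda text: {"content": f"(answer based on {len(text)} prompt characters)"})
parser = Step(lambda message: message["content"])

chain = retrieve | prompt | fake_model | parser
print(chain.invoke("Did people generally like John Wick?"))
```

The real LCEL runnables add much more (parallel branches, streaming, batching), but reading a chain left to right as a pipeline of transformations is usually enough to follow the code in this post.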

Finally, we create our RAG using Langchain’s own chain creation language (LCEL):

from langchain_core.runnables import RunnablePassthrough, RunnableParallel

from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser

setup_and_retrieval = RunnableParallel({"question": RunnablePassthrough(), "context": naive_retriever })

output_parser = StrOutputParser()

naive_retrieval_chain = (

    setup_and_retrieval

    | rag_prompt

    | chat_model

    | output_parser

)

naive_retrieval_chain.invoke( "Did people generally like John Wick?")

# response: 'Yes, people generally liked John Wick.'

This is the simplest way to create a chain for a RAG. In the Jupyter notebook you can find a more robust version of the same chain; I have only shown the simplest form here so that we don’t get sidetracked. To make clear what is happening in the code above, I have created this diagram:

Creation of a RAG with Langchain and its LCEL language (Image by Author).

Great, we’re done creating our Naive RAG. Let’s move on to the next method.

Method: Parent Document Retriever

Code in 2_parent_document_retriever.ipynb file.

Imagine that we have created a RAG to identify possible diseases from the symptoms entered in the query. With a Naive RAG, we may retrieve a series of possible diseases that match only one or two of the symptoms, which leaves our tool looking unreliable.

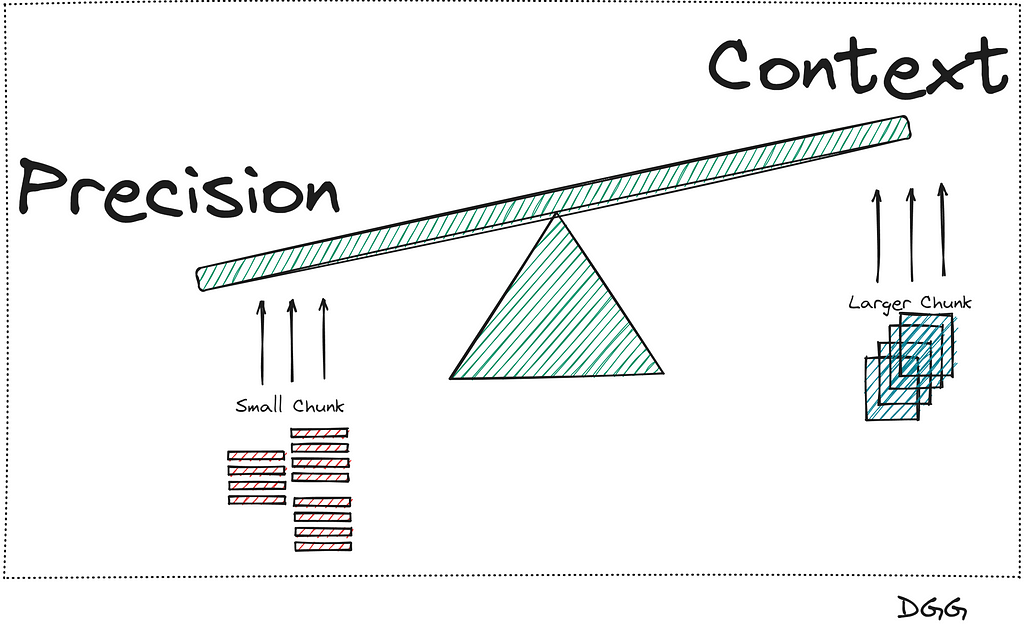

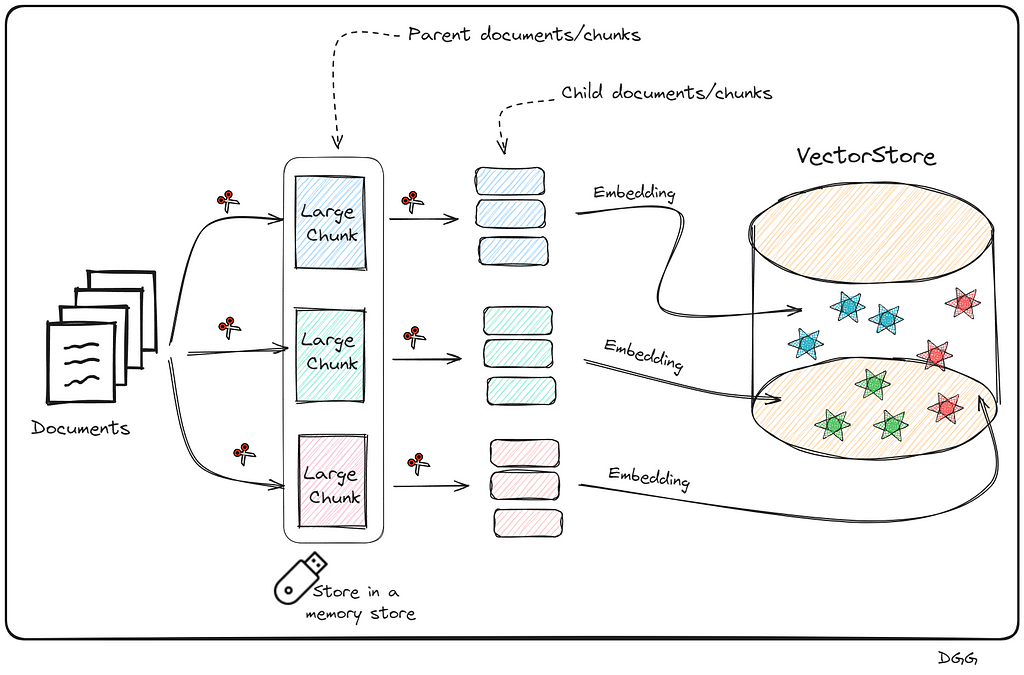

This is an ideal case for the Parent Document Retriever. The technique consists of cutting large chunks (parent chunks) into even smaller pieces (child chunks). Because the chunks are small, the information they contain is more concentrated, so its informative value is not diluted across paragraphs of text.

There is a small problem in all this:

- If we want to be precise in searching for the most relevant documents, we need to break our documents into small chunks.

- But it is also very important to provide good context to the LLM, which is achieved by providing larger chunks.

What has been said can be seen in the following image:

Representation of the balance between these two concepts/metrics (Image by Author).

It seems that there is no way out of the problem, since when we increase the precision, the context is reduced, and vice versa. This is where this method comes to the rescue.

The main idea is to further chop the large chunks (parent chunks/documents) into smaller chunks (child chunks/documents). Once this is done, we search for the top K most relevant child chunks and return the parent chunks to which those child chunks belong.

We already have the main idea, now let’s get it down to earth. The best way to explain it is step by step:

- Obtain the documents and create the large chunks (Parent chunks)

- Split each of the parent chunks to produce the child chunks.

- Save the child chunks (Vector Representation) in the Vector Store.

- Save the parent chunks in memory (We do not need to create their vector representation).
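The steps above can be sketched without any library. This is a simplified illustration under stated assumptions: the helper names (`split_text`, `word_overlap`, `build_stores`, `retrieve_parents`) are hypothetical, chunks are cut by word count rather than by characters, and a shared-word count stands in for the embedding similarity a real vector store would use.

```python
def split_text(text, max_words):
    """Cut a text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def word_overlap(query, text):
    """Toy relevance score: number of distinct words shared by query and text."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def build_stores(parent_docs, max_words):
    """Return (child_index, parent_store); each child remembers its parent's id."""
    parent_store = dict(enumerate(parent_docs))
    child_index = [
        (parent_id, chunk)
        for parent_id, text in parent_store.items()
        for chunk in split_text(text, max_words)
    ]
    return child_index, parent_store

def retrieve_parents(query, child_index, parent_store, k):
    """Search the child chunks, then return the distinct parents of the top k."""
    ranked = sorted(child_index, key=lambda item: word_overlap(query, item[1]), reverse=True)
    parent_ids = []
    for parent_id, _ in ranked[:k]:
        if parent_id not in parent_ids:
            parent_ids.append(parent_id)
    return [parent_store[parent_id] for parent_id in parent_ids]

parent_docs = [
    "The action scenes are stunning. The plot is thin but the pacing never drags.",
    "I found the soundtrack forgettable. The dog subplot made me cry.",
]
child_index, parent_store = build_stores(parent_docs, max_words=6)
hits = retrieve_parents("dog subplot", child_index, parent_store, k=1)

print(len(parent_store), "parents,", len(child_index), "children")
print(hits)
```

The search runs over the small, focused child chunks, but what reaches the LLM is the full parent text, which is the trade-off this method is designed to resolve.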

What has been said can be seen in the following image:

Visual representation of how child chunks are created from parent chunks, and their storage. These are necessary steps to create a parent document retriever (Image by Author).

This may seem very complex to build, since we have to create a new database with the small chunks, save the parent chunks in memory, and also keep track of which parent chunk each child chunk belongs to. Thankfully, LangChain makes building it very simple.

You have probably concluded that a new vector store is needed for this method. Furthermore, for the John Wick reviews, where the data source is a set of CSV files, the first split (into parent chunks) is not necessary, because we can consider each row of our CSV files to be a chunk in itself.

Overall, let’s visualize the following image that reflects how this method works:

Visual representation of how a Parent Document Retriever works (Image by Author).

In code, it looks as follows:

from langchain.retrievers import ParentDocumentRetriever

from langchain.storage import InMemoryStore

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain_openai import OpenAIEmbeddings

from langchain_community.vectorstores import Chroma

# documents = Read csv files. Check jupyter notebook for more details

parent_docs = documents

# Embedding Model

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

# Splitters

child_splitter = RecursiveCharacterTextSplitter(chunk_size=200)

# We don't need a parent splitter because the data comes from CSV files, and each row is a parent doc.

# parent_splitter = RecursiveCharacterTextSplitter(chunk_size=800)

# Stores

store = InMemoryStore()

vectorstore = Chroma(embedding_function=embeddings, collection_name="fullDoc", persist_directory="./JohnWick_db_parentsRD")

parent_document_retriever = ParentDocumentRetriever(

vectorstore=vectorstore,

docstore=store,

child_splitter=child_splitter,

# parent_splitter =parent_splitter

)

# Index the documents: child chunks go to the vector store, parent docs to the docstore

parent_document_retriever.add_documents(parent_docs)

Intuitively, the number of chunks in the vector store (child chunks) should be much higher than the number of documents stored in memory (parent chunks). We can check this with the following code:

print(f"Number of parent chunks is: {len(list(store.yield_keys()))}")

print(f"Number of child chunks is: {len(parent_document_retriever.vectorstore.get()['ids'])}")

'''

Number of parent chunks is: 75

Number of child chunks is: 3701

'''

Great, we now have our Parent Document Retriever. We just need to build our RAG on top of this retriever, exactly as in the previous method. The code for creating the chain in LangChain is shown below; for more details, take a look at the Jupyter notebook.

setup_and_retrieval = RunnableParallel({"question": RunnablePassthrough(), "context": parent_document_retriever })

output_parser = StrOutputParser()

parent_retrieval_chain = setup_and_retrieval | rag_prompt | chat_model | output_parser

Note that it is exactly the same as in the previous case, with the small difference that in the “setup_and_retrieval” variable we use our “parent_document_retriever” instead of the “naive_retriever”.

Method: Self Query Retriever

Code in 3_self_query_retriever.ipynb file.

This is possibly one of the most effective methods for improving the efficiency of our retriever.

Its main feature is that it is capable of performing searches in the vector store, applying filters based on the metadata.

We know that when we apply a “Naive retrieval”, we are calculating the similarity of all the chunks of the vector database with the query. The more chunks the vector store has, the more similarity calculations will have to be done. Now, imagine being able to do a prior filter based on the metadata, and after selecting the chunks that meet the conditions imposed in relation to the metadata, calculate similarities. This can drastically reduce computational and time cost.

Let’s look at a use case to fully understand when to apply this type of retrieval.

Let’s imagine that we have stored in our vector database a large number of experiences and leisure offers (e.g. surf classes, zip line, gastronomic route, etc.). The description of the experience is what we have encoded using our embedding model. Additionally, each offer has 3 key values or metadata: date, price and place.

Let’s imagine that a user is looking for an experience of this style: An experience in nature, that is for the whole family and safe. Furthermore, the price must be less than $50 and the place is California.

Something is clear here:

WE DO NOT WANT THE RETRIEVER TO RETURN ACTIVITIES/EXPERIENCES THAT DO NOT MEET THE PRICE OR PLACE THAT THE USER REQUESTS.

Therefore, it does not make sense to calculate similarities with chunks/experiences that do not comply with the metadata filter.

This case is ideal for applying Self Query Retriever. What this type of retriever allows us is to perform a first filter through the metadata, and then perform the similarity calculation between the chunks that meet the metadata requirements and the user input.
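The filter-then-rank order can be illustrated with a small sketch. Everything here is hypothetical: the `experiences` list, the `filtered_search` helper, and the shared-word score that stands in for embedding similarity. In the real retriever the filter values are produced by an LLM rather than passed in by hand.

```python
experiences = [
    {"text": "Family hike through a redwood forest in nature", "price": 30, "place": "California"},
    {"text": "Safe family kayak tour in nature", "price": 80, "place": "California"},
    {"text": "Family nature walk with a guide", "price": 25, "place": "Oregon"},
    {"text": "Wine tasting evening for adults", "price": 45, "place": "California"},
]

def word_overlap(query, text):
    """Toy relevance score: number of distinct words shared by query and text."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def filtered_search(query, items, max_price, place, k):
    """Apply the metadata filter first, then rank only the survivors."""
    candidates = [item for item in items
                  if item["price"] < max_price and item["place"] == place]
    candidates.sort(key=lambda item: word_overlap(query, item["text"]), reverse=True)
    return candidates[:k]

results = filtered_search("family experience in nature", experiences,
                          max_price=50, place="California", k=2)
for item in results:
    print(item["price"], item["place"], item["text"])
```

Only two of the four offers survive the filter, so similarity is computed for two candidates instead of four, and nothing that violates the price or place constraint can be returned.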

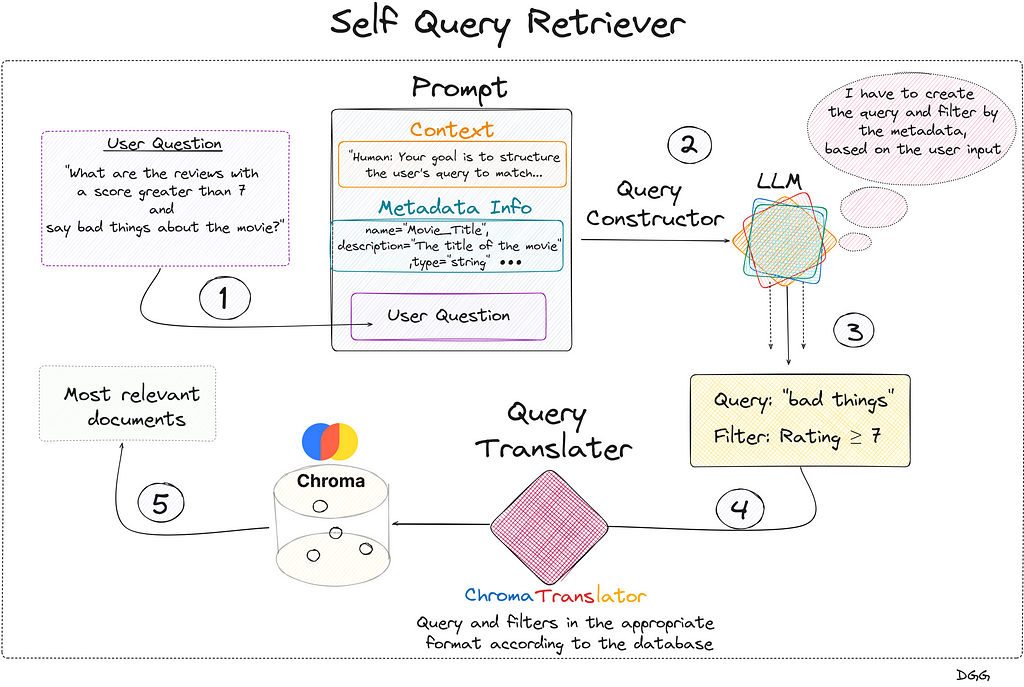

This technique can be summarized in two very specific steps:

- Query Constructor

- Query Translator

Query Constructor

The objective of the step called “Query Constructor” is to create the appropriate query and filters according to the user input.

Who is in charge of applying the corresponding filters, and how does it know what they are?

For this we are going to use an LLM. This LLM will have to be able to decide which filters to apply and when. We will also have to explain beforehand what the metadata is and what each of them means. In short, the prompt must contain 3 key points:

- Context: Personality, how you should act, output format, etc.

- Metadata: Information about available metadata.

- Query: The user’s query/input/question.

The output generated by the LLM cannot be directly entered into the database. Therefore, the so-called “Query Translator” is needed.

Query Translator

This module translates the output of the LLM (Query Constructor) into the format required to perform the query. Which translator you need depends on the vector database you use. In my case I used Chroma, so I need a translator for that database. Luckily, LangChain has specific translators for almost all vector databases.
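To give a feel for what such a translation involves, here is a toy sketch. It is not LangChain's `ChromaTranslator`: the `(attribute, comparator, value)` tuple format, the `COMPARATORS` table and the `to_chroma_where` function are assumptions made for illustration. Only the `$`-prefixed operators and the `$and` wrapper follow Chroma's `where` filter syntax.

```python
# Left-hand comparator names are hypothetical; the "$" operators are the ones
# Chroma accepts in its `where` metadata filters.
COMPARATORS = {"eq": "$eq", "gt": "$gt", "gte": "$gte", "lt": "$lt", "lte": "$lte"}

def to_chroma_where(conditions):
    """Translate [(attribute, comparator, value), ...] into a `where` dict."""
    clauses = [{attr: {COMPARATORS[comp]: value}} for attr, comp, value in conditions]
    if not clauses:
        return None          # no filter at all
    if len(clauses) == 1:
        return clauses[0]    # a single condition needs no "$and" wrapper
    return {"$and": clauses}

# Hypothetical output of the Query Constructor for the John Wick example.
structured_query = {
    "query": "summary of reviews",
    "filter": [("Movie_Title", "eq", "John Wick 3"), ("Rating", "gt", 7)],
}

where = to_chroma_where(structured_query["filter"])
print(where)
```

The resulting dictionary has the shape a Chroma collection expects for metadata filtering, while the rewritten `query` text is what gets embedded for the similarity search.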

As you may have already noticed, I am a big fan of diagrams. Let’s look at the following which provides quite a bit of clarity to the matter:

Visual representation of how a Self Query Retriever works (Image by Author).

In the previous image, we see that everything begins with the user’s query. We create the prompt that contains the 3 key fields and pass it to the LLM, which generates a response with two key fields: “Query” and “Filter”. This is fed into the query translator, which translates these two fields into the format needed by Chroma DB. The query is then performed, and the most relevant documents for the user’s initial question are returned.

Something to emphasize is that the query entered by the user does not have to be the same as the one entered into the database. In the diagram shown, it can be seen that the LLM, taking into account the available metadata and the user’s question, detects that it can create a filter with the “Rating” metadata. It also creates a new query based on the user’s query.

Let’s look at all this in code. As I have explained, it is very important to provide the LLM with a detailed description of the metadata available in the vector store. This translates into the following piece of code:

from langchain.chains.query_constructor.base import AttributeInfo

from langchain.retrievers.self_query.base import SelfQueryRetriever

from langchain_openai import ChatOpenAI

from langchain.retrievers.self_query.chroma import ChromaTranslator

metadata_field_info = [

AttributeInfo(

name="Movie_Title",

description="The title of the movie",

type="string",

),

AttributeInfo(

name="Review_Date",

description="The date of the review",

type="string",

),

AttributeInfo(

name="Review_Title",

description="The title of the review",

type="string",

),

AttributeInfo(

name="Review_Url",

description="The URL of the review",

type="string",

),

AttributeInfo(

name="Author",

description="The author of the review",

type="string",

),

AttributeInfo(

name="Rating",

description="A 1 to 10 rating for the movie",

type="integer",

)

]

To define our retriever we must specify the following:

- The LLM to use

- The embedding model to be used

- The vector store that is accessed

- A description of what information can be found in the documents of this vector store

- The metadata description

- The Query translator you want to use

Let’s see what it looks like in code:

document_content_desription = "A review of the John Wick movie."

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

chat_model = ChatOpenAI()

self_query_retriever = SelfQueryRetriever.from_llm(

llm=ChatOpenAI(temperature=0),

vectorstore =vectordb,

document_contents = document_content_desription,

metadata_field_info =metadata_field_info,

verbose = True,

structured_query_translator = ChromaTranslator()

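)

Let’s see with a clear example how much this type of retriever improves our RAG. First we use a naive retriever and then a self query retriever. Here `self_retrieval_chain` is assumed to be built like the earlier chains, with `self_query_retriever` supplying the context.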

Question = "Make a summary of the reviews that talk about John Wick 3 and have a score higher than 7"

response = naive_retrieval_chain.invoke(Question)

print(response)

'''

I don't know the answer.

'''

------------------------------------------------------------------------

response = self_retrieval_chain.invoke(Question)

print(response)

'''

John Wick: Chapter 3 - Parabellum is quite literally

about consequences, dealing with the fallout of John's...

'''

As we can see, there is a notable improvement.

Method: Contextual Compression Retriever (Reranking)

Code in 4_contextual_compression_retriever(reranking).ipynb file.

- Context Windows: The more documents we obtain from the vector store, the more information the LLM will have to give a good answer.

- Recall: The more documents are retrieved from the vector store, the greater the probability of including irrelevant chunks, which makes it harder for the LLM to pick out the relevant information, so recall decreases (not a good thing).

There seems to be no solution for this problem. When we increase one of the metrics, the other seems destined to decrease. Are we sure about that?

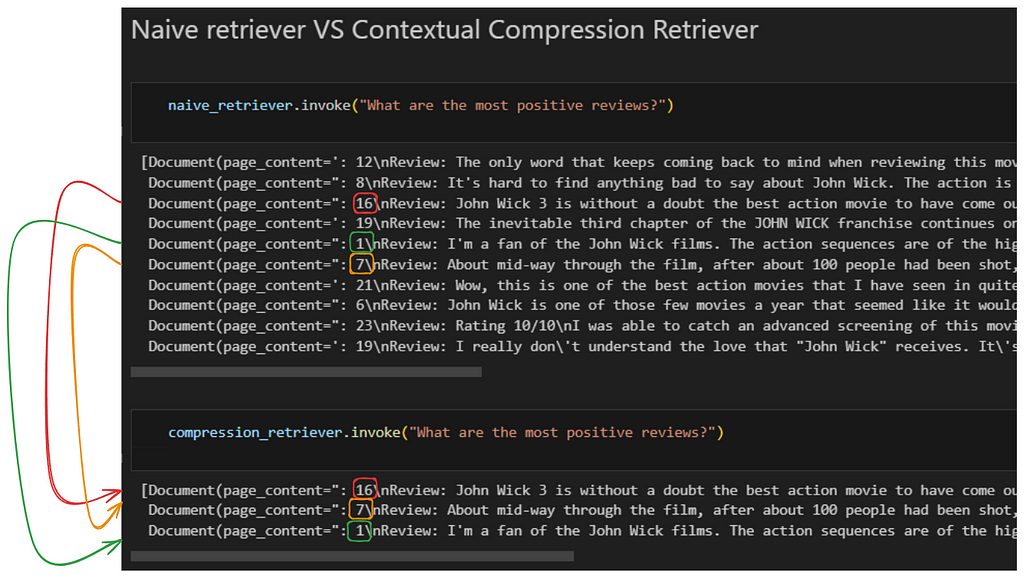

This is where the compression retriever technique comes in, focusing on reranking. The technique consists of two very different steps:

- Step 1: Get a good amount of relevant docs based on the input/question. Normally we set the most relevant K.

- Step 2: Recalculate which of these documents are really relevant, discarding the other documents that are not really useful (Compression).

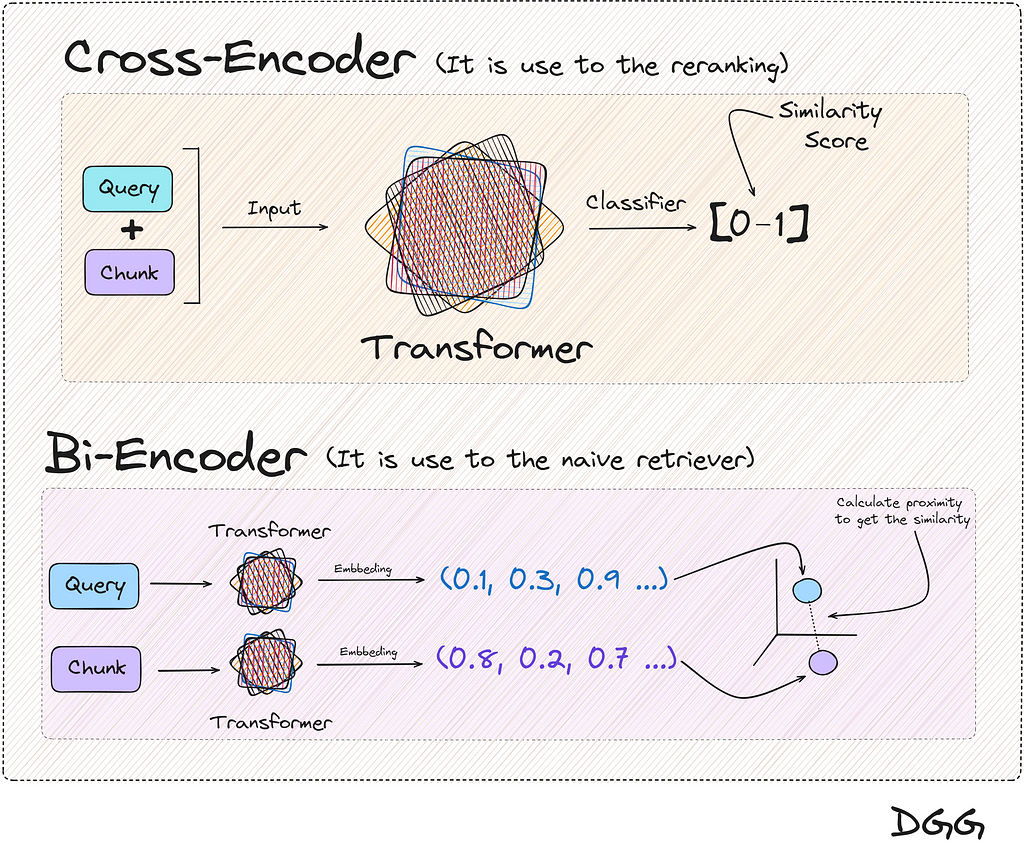

For the first step, what is known as a bi-encoder is used, which is simply what we usually use to build a basic RAG: vectorize our documents, vectorize the query, and calculate the similarity with a metric of our choice.

The second step is something different from what we are used to seeing. This recalculation/reranking is executed by the reranking model or cross-encoder.

These models expect two documents/texts as input, returning a similarity score between the pair.

If one of these two inputs is the query and the other is a chunk, we can calculate the similarity between the two.

These two methods can be displayed as follows:

Visual representation of the two methods presented in the post to calculate the similarity between texts (Image by Author).

You will have realized that both methods ultimately provide the same kind of result: a metric that reflects the similarity between two texts. This is true, but there is a key difference:

The result returned by the cross-encoder is much more reliable than the one returned by the bi-encoder.

Okay, it works better, so why don’t we use it directly on all chunks instead of just the top K? Because it would be terribly expensive in time and money/computation. For this reason, we first filter the chunks closest in similarity to the query, which limits the reranking model to only K calls.

A good question is where to find cross-encoder models. Fortunately, there are open source models available on HuggingFace, but for the practical case in this post we are going to use the model made available by the company Cohere.

Cohere | The leading AI platform for enterprise

To better understand the architecture of this method, let’s look at a visual example.

Visual representation of how a Contextual Compression Retriever (Reranking) works (Image by Author).

The image shows the steps:

- 1) We obtain the query, encode it in its vector form with a transformer, and send it to the vector store.

- 2) We collect the documents most similar to the query from our database. Any retriever method can be used here.

- 3) Next we use the Cohere cross-encoder model. In the example in the image, this model is used a total of 4 times. Remember that the input of this model is the query and a document/chunk, and its output is the similarity of these two texts.

- 4) After the 4 calls to the model in the previous step, we have 4 new values (between 0 and 1) for the similarity between the query and each of the documents. As can be seen, chunk number 1 from the previous steps is now in 4th place after the reranking.

- 5) We add the 3 most relevant chunks to the context.
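The two-stage flow in these steps can be sketched as follows. This is an illustration only: `cheap_score` is a shared-word count standing in for bi-encoder similarity, and `CountingReranker` is a hypothetical stand-in for a cross-encoder whose scoring rule is invented for the example. The call counter is there to show how many times the expensive model is used.

```python
def cheap_score(query, text):
    """Stage 1 stand-in for bi-encoder similarity: shared-word count."""
    return len(set(query.lower().split()) & set(text.lower().split()))

class CountingReranker:
    """Stage 2 stand-in for a cross-encoder; counts how often it is called."""

    def __init__(self):
        self.calls = 0

    def score(self, query, text):
        self.calls += 1
        text_words = set(text.lower().split())
        shared = set(query.lower().split()) & text_words
        # Invented score in [0, 1]: fraction of the chunk's words that match.
        return len(shared) / len(text_words)

def retrieve_and_rerank(query, docs, reranker, k, top_n):
    """Keep the k best docs by the cheap score, rerank them, return the top_n."""
    first_pass = sorted(docs, key=lambda d: cheap_score(query, d), reverse=True)[:k]
    reranked = sorted(first_pass, key=lambda d: reranker.score(query, d), reverse=True)
    return reranked[:top_n]

docs = [
    "john wick action scenes are the best action scenes of the decade",
    "great action",
    "the action in john wick is great",
    "a slow romantic drama",
    "john wick has great action",
    "cooking show review",
]
reranker = CountingReranker()
top = retrieve_and_rerank("great john wick action", docs, reranker, k=4, top_n=3)

print(top)
print("expensive model calls:", reranker.calls, "out of", len(docs), "documents")
```

The expensive scorer runs once per first-pass candidate (K times), not once per document in the store, and the final order can differ from the first-pass order.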

Returning to computational cost and time: if the cross-encoder were applied directly, then for each new query the similarity between the query and every single document would have to be calculated, which is far from optimal.

On the other hand, with bi-encoders the vector representation of the documents is the same for every new query, so it only has to be computed once.

We therefore have one method that is much more accurate but expensive to execute, and another that works well and has a low computational cost for each new query. The natural conclusion is to combine the two for a better RAG, and this is known as Contextual Compression with reranking.

Let’s move on to the code part. Remember that this method uses a retriever, which in our case will be a Naive Retriever:

naive_retriever = vectordb.as_retriever(search_kwargs={ "k" : 10})

Thanks to Langchain and its integration with Cohere, we only have to import the module that will execute the call to the Cohere cross-encoder model:

from langchain_cohere import CohereRerank

os.environ["COHERE_API_KEY"] = "YOUR API KEY FROM COHERE"

compressor = CohereRerank(top_n=3)

Finally, we create our Contextual Compression Retriever with Langchain:

from langchain.retrievers.contextual_compression import ContextualCompressionRetriever

compression_retriever = ContextualCompressionRetriever(

base_compressor=compressor,

base_retriever=naive_retriever

)

As simple as that. Let’s see a comparison between a Naive Retriever and a Reranking Retriever:

Example of how the reranking method recalculates the similarity between the query and the chunks. This causes the most relevant documents returned by the first retriever (in our case, the Naive retriever) to be completely reordered. The 3 best are collected as shown (Image by Author).

As we can see, the Naive retriever returns the top 10 chunks/documents. After reranking and keeping the 3 most relevant documents/chunks, there are noticeable changes. Notice how document number 16, which is in third position by relevance in the first retriever, moves to first position after the reranking.

Conclusion

We have seen that, depending on the characteristics of the use case, we will want to use one retriever method or another. There may also be cases in which it is not clear which method to use. For this, there are libraries for evaluating your RAGs.

The option I personally recommend is the combination of RAGAS and LangSmith.

Evaluating RAG pipelines with Ragas + LangSmith

I highly recommend following, learning and watching the videos of these people who are really what inspired me to make this article.

Thank you for reading!

If you find my work useful, you can subscribe to get an email every time that I publish a new article.

If you’d like, follow me on Linkedin!

Advanced Retriever Techniques to Improve Your RAGs was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


-

Apple hits 18 gigawatts of clean energy in its environmental push

Apple is continuing to step up its environmental efforts, revealing new projects to increase the use of clean energy, as well as to replenish fresh water it uses by 2030 ahead of Earth Day.


An Apple solar farm

Apple made a pledge in 2020 to become completely carbon neutral by 2030, covering everything from its supply chain to power consumed to recharge its products. In an update on April 17, it seems Apple is well on its way to achieving the goal.

The update, published on Wednesday morning, includes claims that Apple and its suppliers now use more than 18 gigawatts of clean energy. This is up from the 13.7 gigawatts used by the supply chain as reported in 2023, and is triple the clean energy usage of 2020.

-

WhatsApp adds chat filters to help you more easily find specific messages

The filters will let you see all your messages, only those unread, or only those from group chats.