
Tag: tech


Here are the new spacesuits astronauts will wear for tonight’s Starliner launch

NASA astronauts Butch Wilmore and Suni Williams have been suiting up in new spacesuits ahead of the first crewed Boeing Starliner test flight tonight.

Starliner astronauts arrive at launchpad for first crewed flight tonight

NASA astronauts Butch Wilmore and Suni Williams have arrived at the launchpad for the first crewed flight of the Starliner spacecraft.


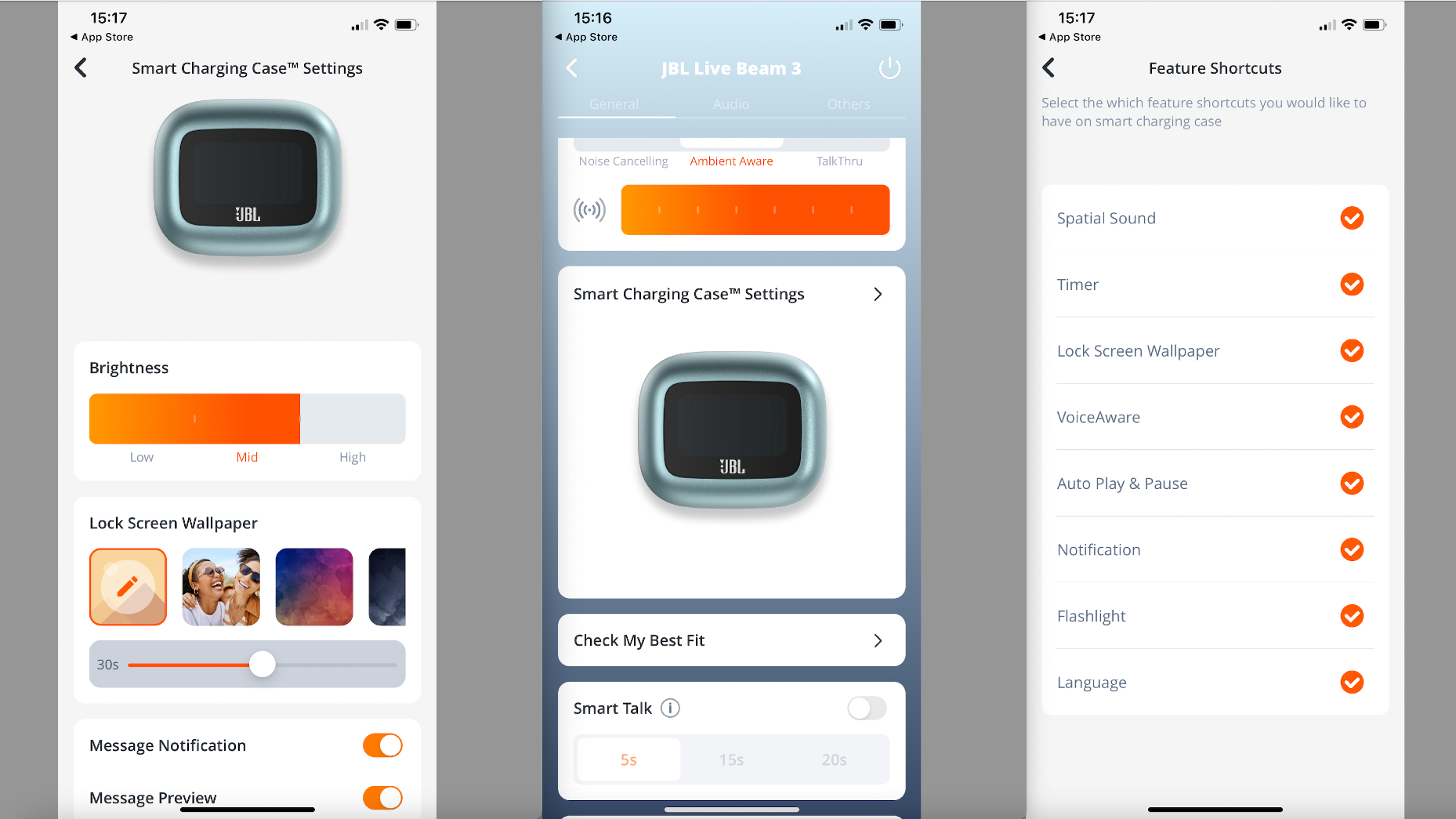

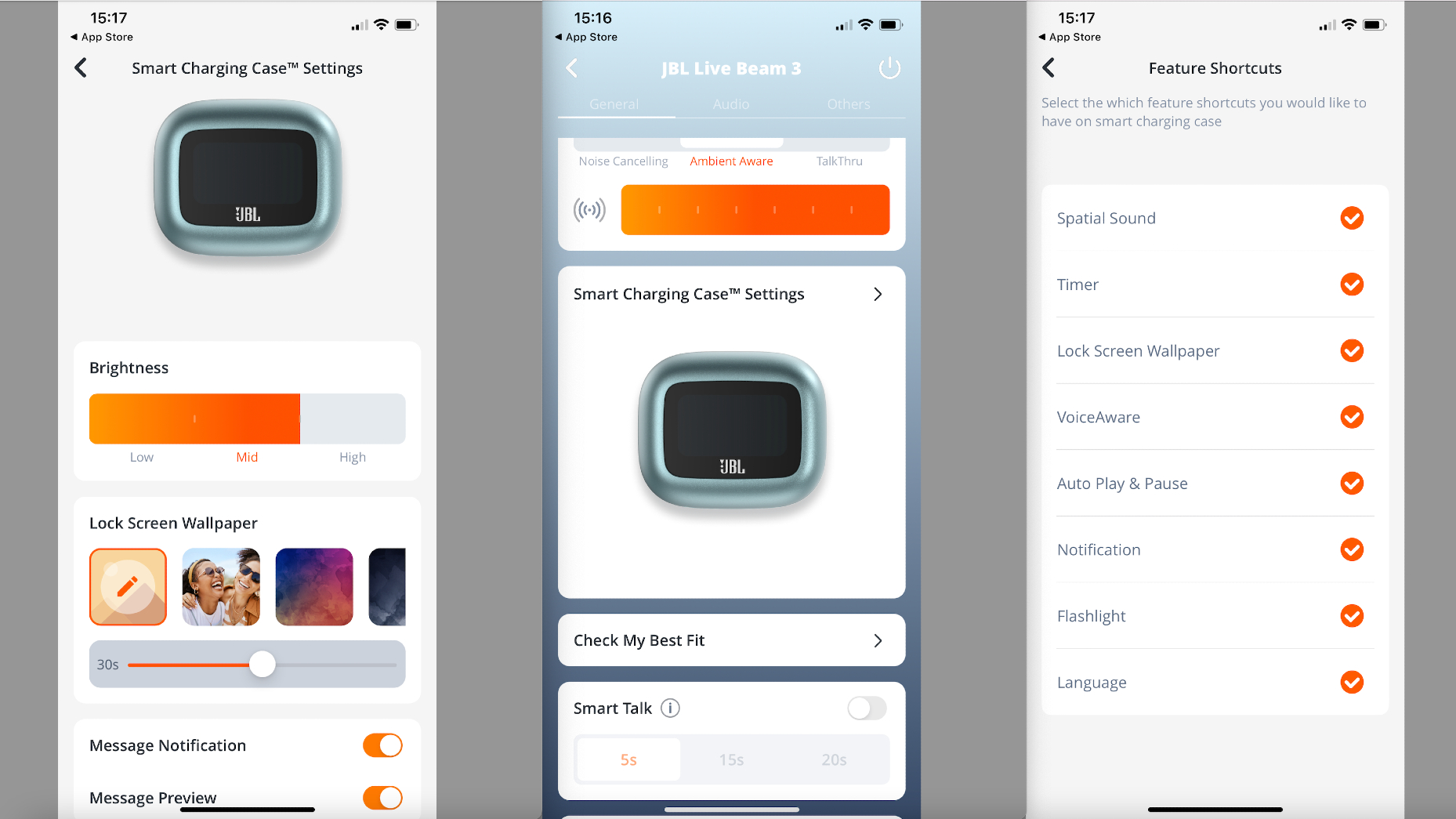

I thought screens on earbuds cases were a bit meh – but JBL just proved me wrong

JBL Live Beam 3 arrived with two other screen-enhanced options in January – and it’s a nice upgrade on the Tour Pro 2 case.


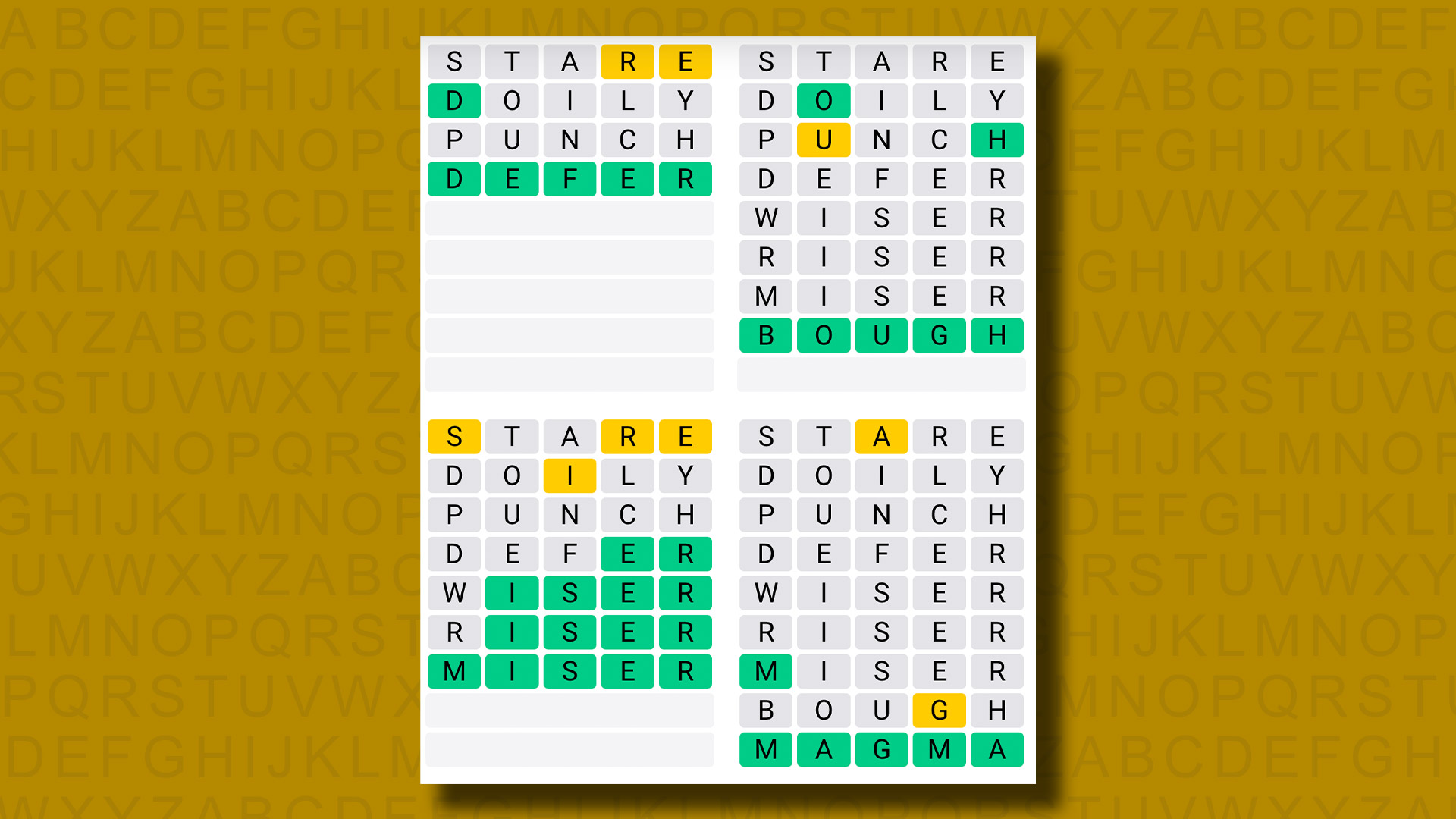

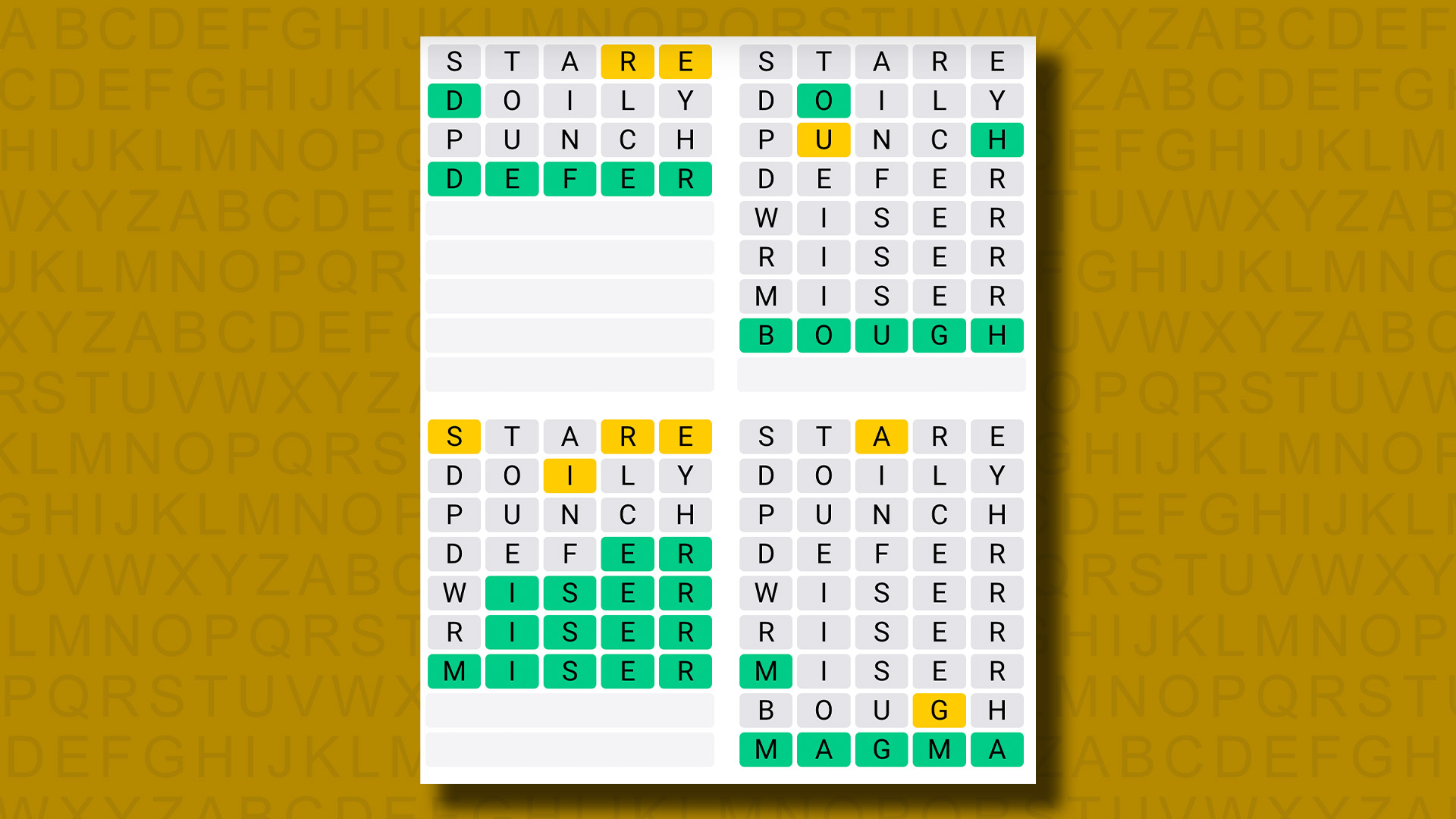

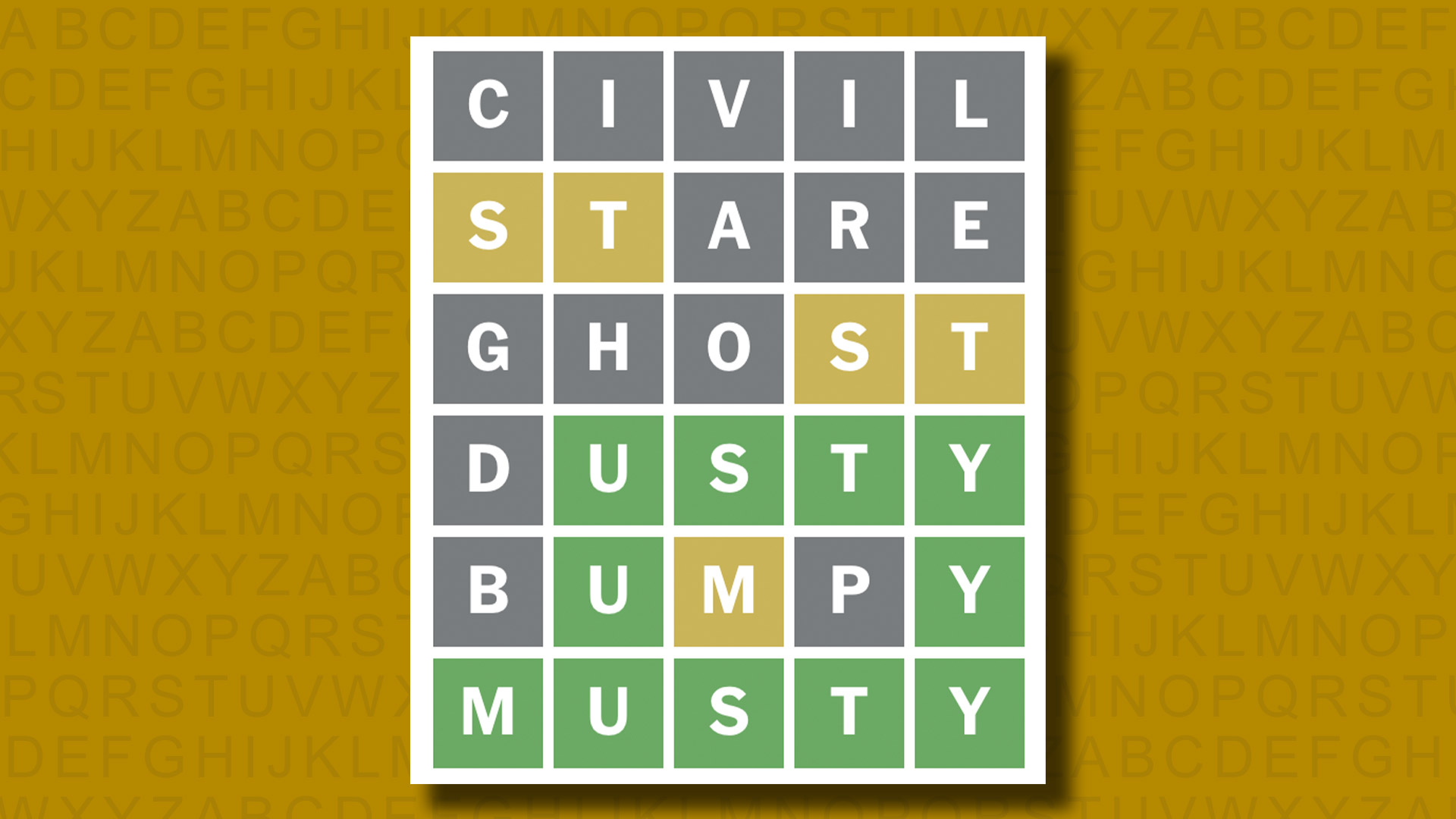

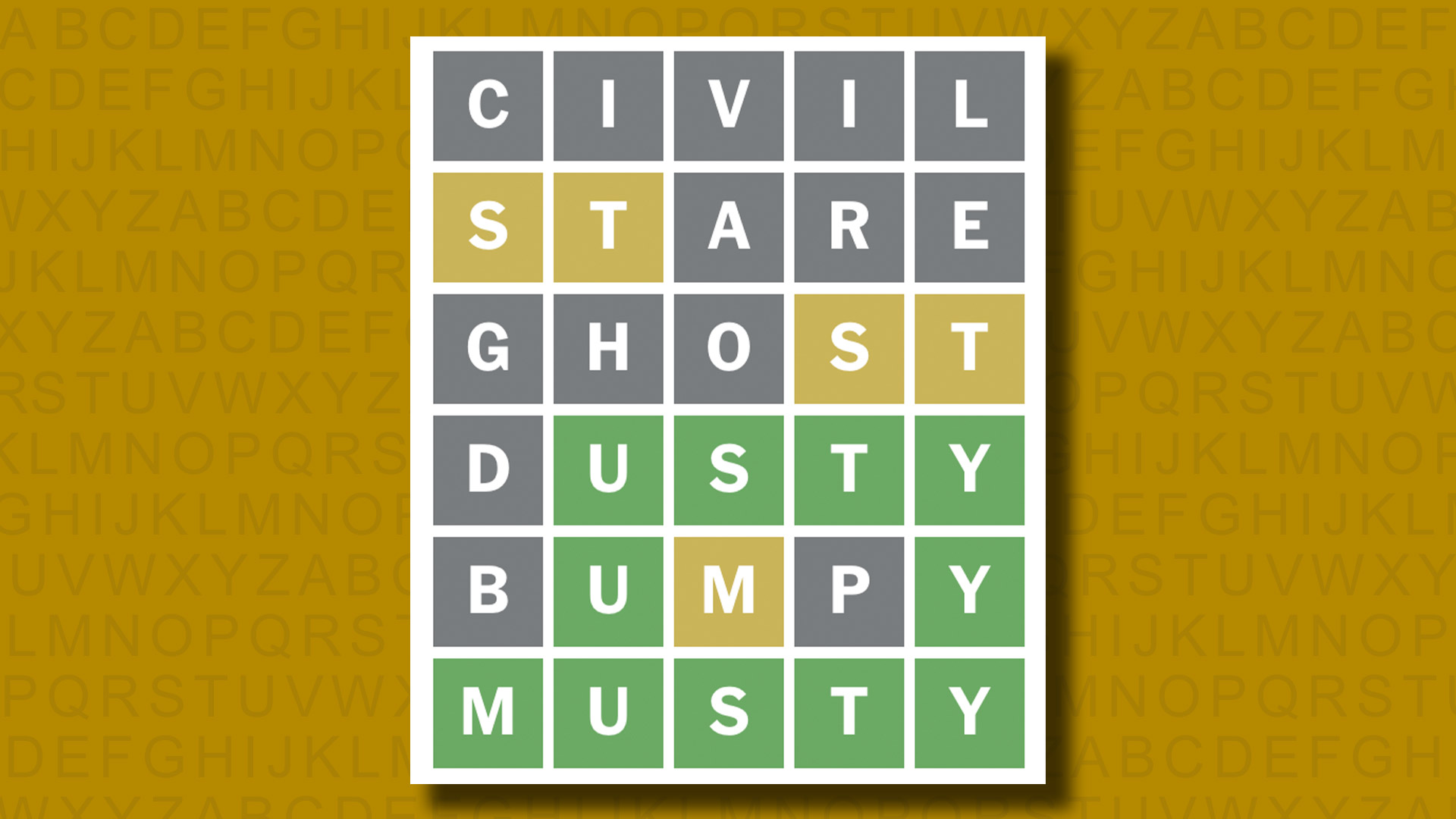

Quordle today – hints and answers for Tuesday, May 7 (game #834)

Looking for Quordle clues? We can help. Plus get the answers to Quordle today and past solutions.


NYT Wordle today — answer and hints for game #1053, Tuesday, May 7

Looking for Wordle hints? We can help. Plus get the answers to Wordle today and yesterday.


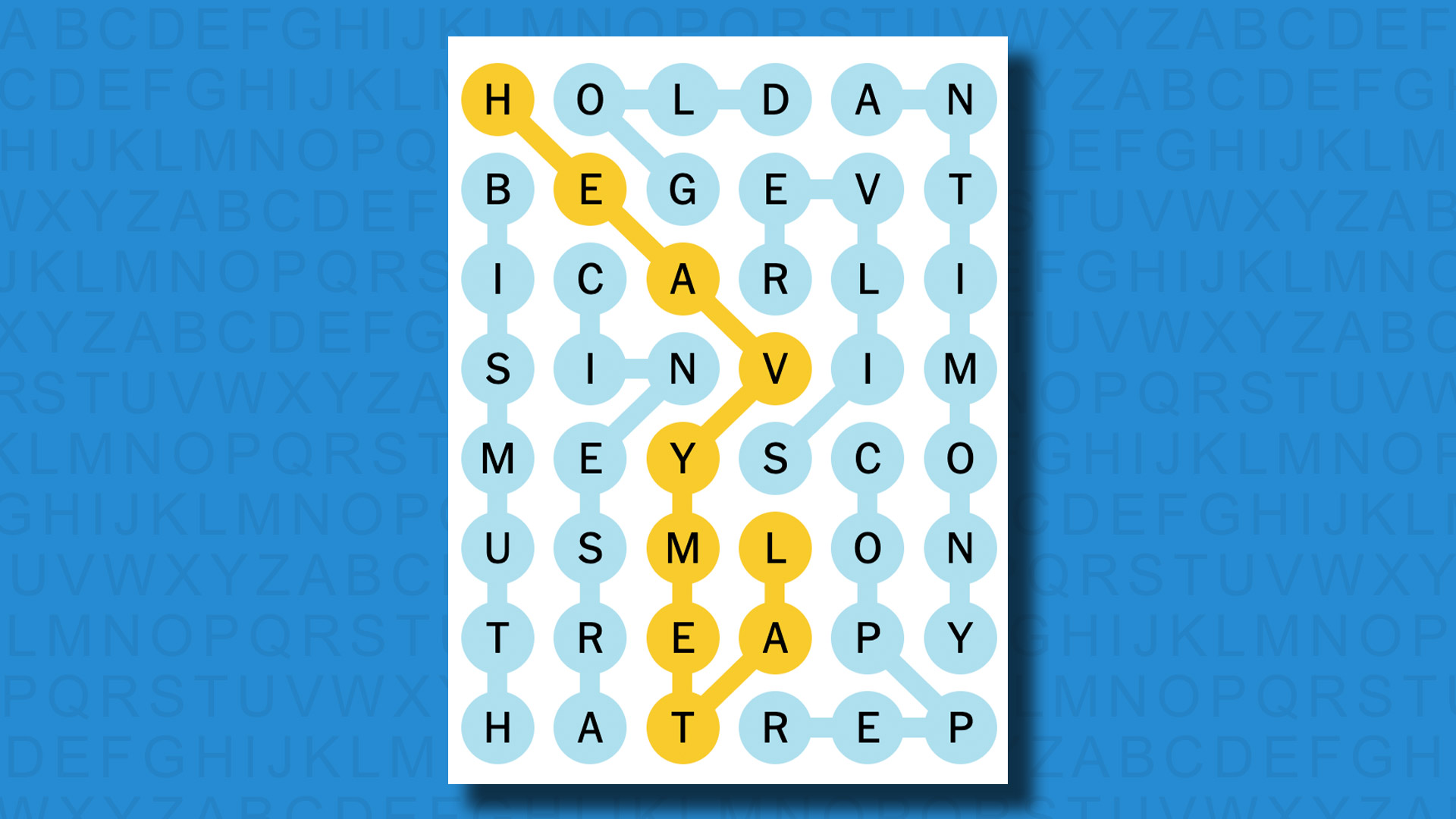

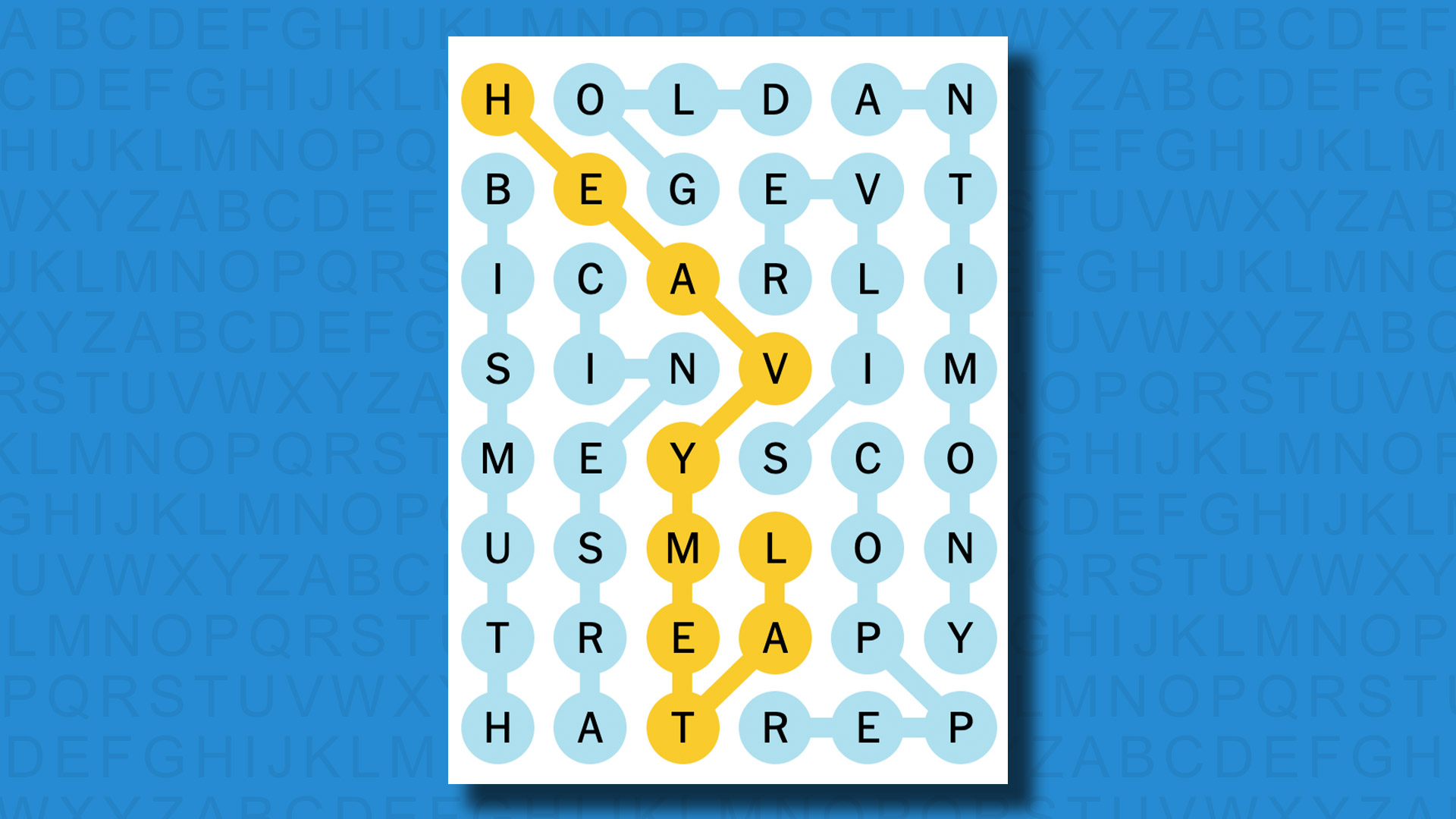

NYT Strands today — hints, answers and spangram for Tuesday, May 7 (game #65)

Looking for NYT Strands answers and hints? Here’s all you need to know to solve today’s game, including the Spangram.


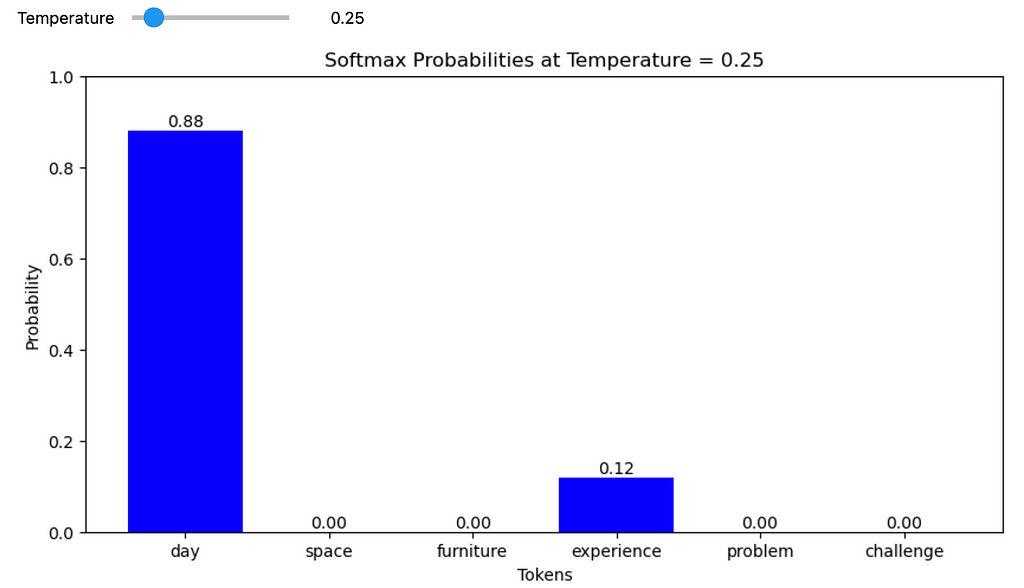

How does temperature impact next token prediction in LLMs?

TLDR

1. At a temperature of 1, the probability values are the same as those derived from the standard softmax function.

2. Raising the temperature inflates the probabilities of the less likely tokens, thereby broadening the range of potential candidates (or diversity) for the model’s next token prediction.

3. Lowering the temperature, on the other hand, makes the probability of the most likely token approach 1.0, boosting the model’s confidence. As the temperature approaches 0, the distribution collapses onto that token and sampling becomes effectively deterministic.

Introduction

Large Language Models (LLMs) are versatile generative models suited for a wide array of tasks. They can produce consistent, repeatable outputs or generate creative content by placing unlikely words together. The “temperature” setting allows users to fine-tune the model’s output, controlling the degree of predictability.

Let’s take a hypothetical example to understand the impact of temperature on the next token prediction.

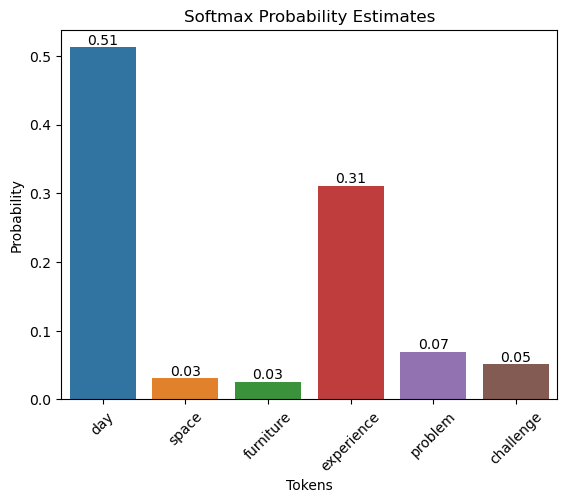

We asked an LLM to complete the sentence, “This is a wonderful _____.” Let’s assume the potential candidate tokens are:

| token | logit |
|------------|-------|
| day | 5.0 |
| space | 2.2 |
| furniture | 2.0 |
| experience | 4.5 |
| problem | 3.0 |
| challenge | 2.7 |

The logits are passed through a softmax function, which maps them to positive values that sum to one. Essentially, the softmax function generates a probability estimate for each token.

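Standard softmax function

$$\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_{j=1}^{n} e^{x_j}}$$

Here $x_i$ is the $i$-th logit and $n$ is the number of candidate tokens.

Let’s calculate the probability estimates in Python.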

```python
import numpy as np
import seaborn as sns
import pandas as pd
import matplotlib.pyplot as plt
from ipywidgets import interactive, FloatSlider


def softmax(logits):
    # Exponentiate each logit, then normalize so the outputs sum to 1
    exps = np.exp(logits)
    return exps / np.sum(exps)


data = {
    "tokens": ["day", "space", "furniture", "experience", "problem", "challenge"],
    "logits": [5, 2.2, 2.0, 4.5, 3.0, 2.7]
}
df = pd.DataFrame(data)
df['probabilities'] = softmax(df['logits'].values)
df
```

| No. | tokens | logits | probabilities |
|-----|------------|--------|---------------|
| 0 | day | 5.0 | 0.512106 |
| 1 | space | 2.2 | 0.031141 |
| 2 | furniture | 2.0 | 0.025496 |
| 3 | experience | 4.5 | 0.310608 |
| 4 | problem | 3.0 | 0.069306 |
| 5 | challenge | 2.7 | 0.051343 |

```python
ax = sns.barplot(x="tokens", y="probabilities", data=df)
ax.set_title('Softmax Probability Estimates')
ax.set_ylabel('Probability')
ax.set_xlabel('Tokens')
plt.xticks(rotation=45)

# Label each bar with its probability, rounded to two decimals
for bar in ax.patches:
    ax.text(bar.get_x() + bar.get_width() / 2, bar.get_height(), f'{bar.get_height():.2f}',
            ha='center', va='bottom', fontsize=10, rotation=0)
plt.show()
```

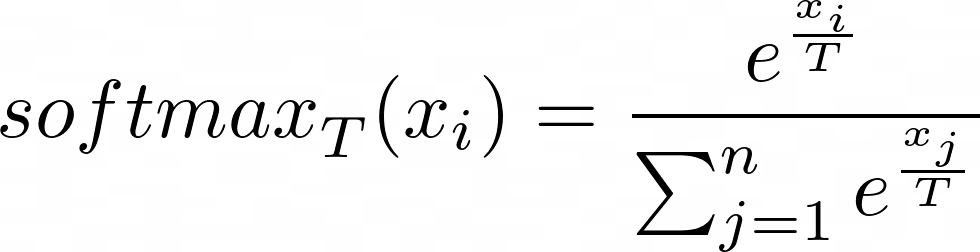

The softmax function with temperature is defined as follows:

$$\text{softmax}(x_i; T) = \frac{e^{x_i / T}}{\sum_{j=1}^{n} e^{x_j / T}}$$

where $T$ is the temperature, $x_i$ is the $i$-th component of the input vector (logits), and $n$ is the number of components in the vector.
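A short derivation, offered here as a sketch, helps explain why temperature reshapes the distribution. Writing $p_i(T)$ for the probability of token $i$ at temperature $T$ (a notation assumed for this note), the normalizing sum cancels in the ratio of any two tokens:

$$\frac{p_i(T)}{p_j(T)} = \frac{e^{x_i / T}}{e^{x_j / T}} = e^{(x_i - x_j) / T}$$

For "day" ($x = 5$) and "experience" ($x = 4.5$) in the Python example, the ratio is $e^{0.5} \approx 1.65$ at $T = 1$, $e^{0.25} \approx 1.28$ at $T = 2$, and $e^{1} \approx 2.72$ at $T = 0.5$. Dividing by $T > 1$ shrinks every logit gap and pulls the ratio toward 1, while dividing by $T < 1$ widens the gaps in favor of the leading token.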

def softmax_with_temperature(logits, temperature):

if temperature <= 0:

temperature = 1e-10 # Prevent division by zero or negative temperatures

scaled_logits = logits / temperature

exps = np.exp(scaled_logits - np.max(scaled_logits)) # Numerical stability improvement

return exps / np.sum(exps)

def plot_interactive_softmax(temperature):

probabilities = softmax_with_temperature(df['logits'], temperature)

plt.figure(figsize=(10, 5))

bars = plt.bar(df['tokens'], probabilities, color='blue')

plt.ylim(0, 1)

plt.title(f'Softmax Probabilities at Temperature = {temperature:.2f}')

plt.ylabel('Probability')

plt.xlabel('Tokens')

# Add text annotations

for bar, probability in zip(bars, probabilities):

yval = bar.get_height()

plt.text(bar.get_x() + bar.get_width()/2, yval, f"{probability:.2f}", ha='center', va='bottom', fontsize=10)

plt.show()

interactive_plot = interactive(plot_interactive_softmax, temperature=FloatSlider(value=1, min=0, max=2, step=0.01, description='Temperature'))

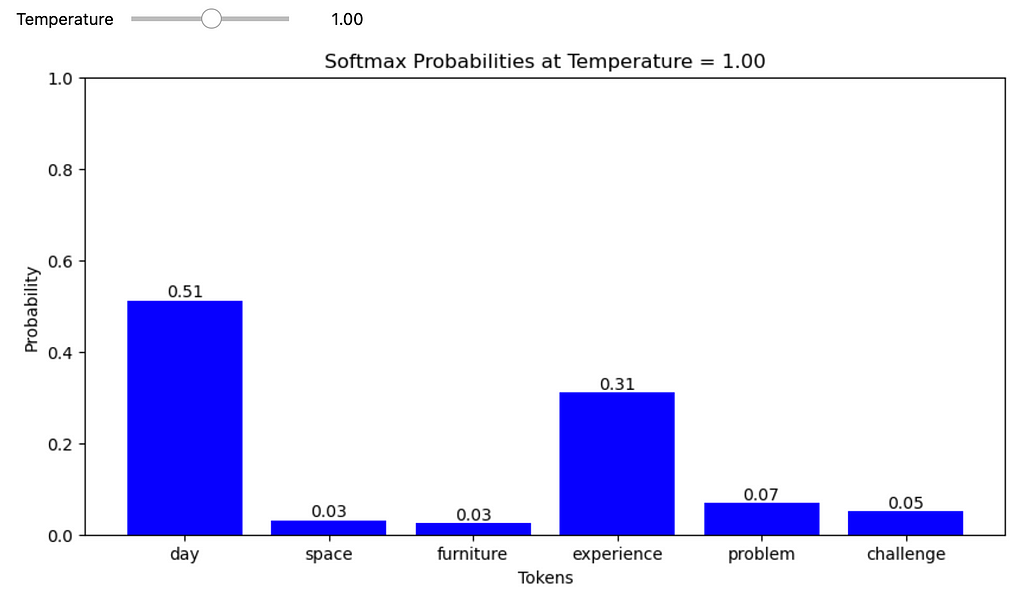

interactive_plotAt T = 1,

At a temperature of 1, the probability values are the same as those derived from the standard softmax function.

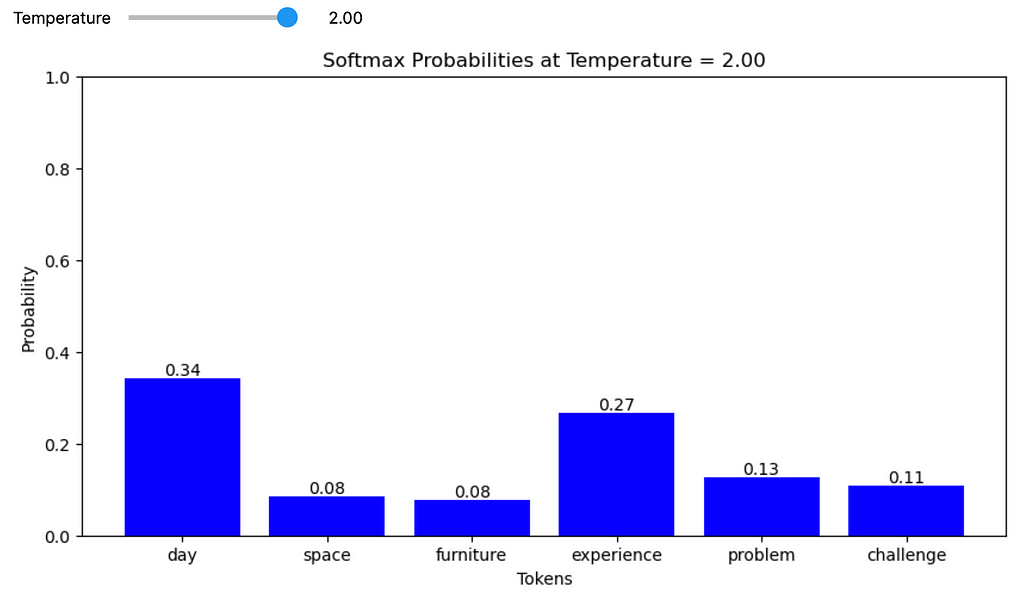

At T > 1,

Raising the temperature inflates the probabilities of the less likely tokens, thereby broadening the range of potential candidates (or diversity) for the model’s next token prediction.
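One way to put a number on "diversity" is the Shannon entropy of the distribution. The sketch below compares T = 1 with T = 2 on the example logits. The helper names `softmax_t` and `entropy_bits` are assumptions introduced for illustration, and entropy is only one possible measure of spread.

```python
import numpy as np

def softmax_t(logits, temperature):
    """Temperature-scaled softmax (assumes temperature > 0)."""
    scaled = np.asarray(logits, dtype=float) / temperature
    exps = np.exp(scaled - scaled.max())  # subtract the max for numerical stability
    return exps / exps.sum()

def entropy_bits(p):
    """Shannon entropy in bits; higher means a more spread-out distribution."""
    return float(-(p * np.log2(p)).sum())

logits = [5, 2.2, 2.0, 4.5, 3.0, 2.7]  # same logits as the example above

p1 = softmax_t(logits, 1.0)
p2 = softmax_t(logits, 2.0)

print("T=1:", np.round(p1, 3), "entropy:", round(entropy_bits(p1), 2))
print("T=2:", np.round(p2, 3), "entropy:", round(entropy_bits(p2), 2))
```

At T = 2 the top token loses probability mass, the tail tokens gain it, and the entropy rises.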

At T < 1,

Lowering the temperature, on the other hand, makes the probability of the most likely token approach 1.0, boosting the model’s confidence. As the temperature approaches 0, the distribution collapses onto that token and sampling becomes effectively deterministic.
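The low-temperature behavior can be checked numerically. The sketch below reuses the example logits and prints the top token's probability as the temperature shrinks. The helper name `softmax_t` and the chosen temperatures are assumptions made for illustration.

```python
import numpy as np

def softmax_t(logits, temperature):
    """Temperature-scaled softmax (assumes temperature > 0)."""
    scaled = np.asarray(logits, dtype=float) / temperature
    exps = np.exp(scaled - scaled.max())  # subtract the max for numerical stability
    return exps / exps.sum()

tokens = ["day", "space", "furniture", "experience", "problem", "challenge"]
logits = [5, 2.2, 2.0, 4.5, 3.0, 2.7]

for t in (1.0, 0.5, 0.1, 0.01):
    p = softmax_t(logits, t)
    top = tokens[int(np.argmax(p))]
    print(f"T={t:<4}  top token: {top}  p(top) = {p.max():.4f}")
```

The top token stays the same at every temperature; only the share of probability it receives changes. In the limit this matches greedy decoding, which always picks the highest-logit token.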

Conclusion

LLMs expose the temperature parameter to offer flexibility in their predictions. At a temperature of 1, the model samples from the unmodified softmax distribution. Increasing the temperature introduces greater diversity by raising the probabilities of less likely tokens. Conversely, decreasing the temperature makes the predictions more focused, concentrating probability on the most likely token and reducing the randomness of the output. This adaptability allows users to tailor LLM outputs to a wide array of tasks, striking a balance between creative exploration and deterministic output.
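To connect the probabilities to actual output, here is a minimal sketch of drawing next tokens at several temperatures. It assumes tokens are sampled directly from the temperature-scaled softmax; real decoders often add steps such as top-k or top-p filtering, which are not shown. The function name `sample_tokens`, the seed, and the sample count are illustrative choices.

```python
import numpy as np
from collections import Counter

def sample_tokens(tokens, logits, temperature, n_samples, rng):
    """Draw n_samples tokens from the temperature-scaled softmax (temperature > 0)."""
    scaled = np.asarray(logits, dtype=float) / temperature
    exps = np.exp(scaled - scaled.max())  # subtract the max for numerical stability
    probs = exps / exps.sum()
    draws = rng.choice(len(tokens), size=n_samples, p=probs)
    return Counter(tokens[i] for i in draws)

tokens = ["day", "space", "furniture", "experience", "problem", "challenge"]
logits = [5, 2.2, 2.0, 4.5, 3.0, 2.7]
rng = np.random.default_rng(0)  # fixed seed so runs are repeatable

for t in (0.1, 1.0, 2.0):
    counts = sample_tokens(tokens, logits, t, 1000, rng)
    print(f"T={t}: {counts.most_common()}")
```

At low temperature nearly every draw is "day"; at higher temperatures the draws spread across more of the candidates.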

Unless otherwise noted, all images are by the author.

How does temperature impact next token prediction in LLMs? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


Apple’s new Photos app will utilize generative AI for image editing

A new teaser on Apple’s website could be indicative of some of the company’s upcoming software plans, namely a new version of its ubiquitous Photos app that will tap generative AI to deliver Photoshop-grade editing capabilities for the average consumer, AppleInsider has learned.


The new Clean Up feature will make removing objects significantly easier

The logo promoting Tuesday’s event on Apple’s website suddenly turned interactive earlier on Monday, allowing users to erase some or all of the logo with their mouse. While this was initially believed to be a nod towards an improved Apple Pencil, it could also be in reference to an improved editing feature Apple plans to unleash later this year.

People familiar with Apple’s next-gen operating systems have told AppleInsider that the iPad maker is internally testing an enhanced feature for its built-in Photos application that would make use of generative AI for photo editing. The feature is dubbed “Clean Up” in pre-release versions of Apple’s macOS 15, and is located inside the edit menu of a new version of the Photos application alongside existing options for adjustments, filters, and cropping.


The Arlo Pro 5S is a 2K-resolution battery camera that won’t miss a beat

The Arlo Pro 5S 2K captures outdoor movement near-instantly, but brand-exclusive features make it harder to recommend to everyone.

I found the most customizable smart home accessory ever, and it’s 30% off

Govee’s new Neon Rope Light 2 makes it easy to decorate your home, has quickly become a staple in my household, and is available for only $70 in time for Mother’s Day.