Originally appeared here:

Leaked photos of the Galaxy S25 Ultra confirm razor-thin bezels

Originally appeared here:

Trillion-dollar tech company emerges as key partner to help Google, Meta and other hyperscalers build an Nvidia-free AI future

Originally appeared here:

Apple Intelligence now takes up almost twice as much room on your iPhone as it used to

Just like Mr. Miyagi taught young Daniel LaRusso karate through repetitive simple chores, which ultimately transformed him into the Karate Kid, mastering foundational algorithms like linear regression lays the groundwork for understanding the most complex of AI architectures such as Deep Neural Networks and LLMs.

Through this deep dive into the simple yet powerful linear regression, you will learn many of the fundamental parts that make up the most advanced models built today by billion-dollar companies.

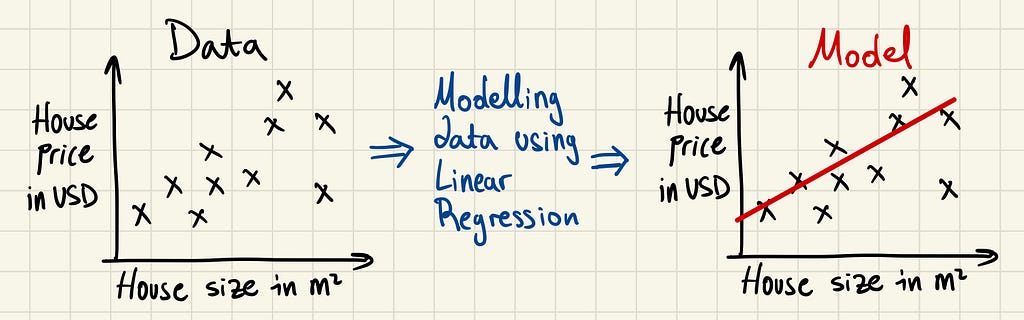

Linear regression is a simple mathematical method used to understand the relationship between a dependent variable and one or more independent variables, and to make predictions. Given some data points, such as house sizes and their prices, linear regression attempts to draw the line of best fit through them. It’s the “wax on, wax off” of data science.

Once this line is drawn, we have a model that we can use to predict new values. In the above example, given a new house size, we could attempt to predict its price with the linear regression model.

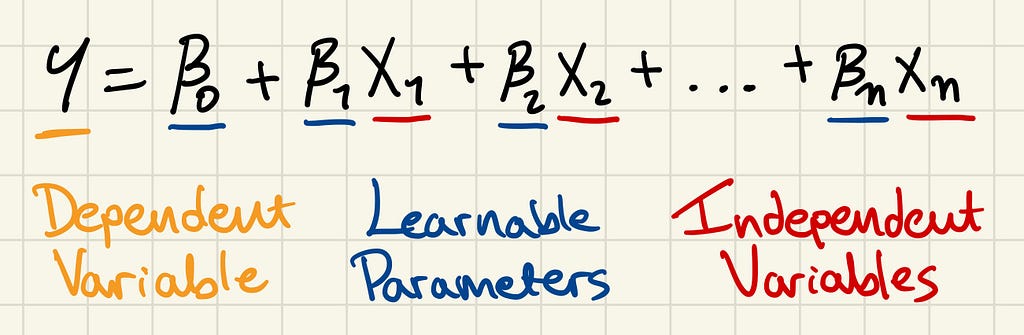

Y is the dependent variable, that which you want to calculate — the house price in the previous example. Its value depends on other variables, hence its name.

X are the independent variables. These are the factors that influence the value of Y. When modelling, the independent variables are the input to the model, and what the model spits out is the prediction or Ŷ.

β are parameters. We give the name parameter to those values that the model adjusts (or learns) to capture the relationship between the independent variables X and the dependent variable Y. So, as the model is trained, the input of the model will remain the same, but the parameters will be adjusted to better predict the desired output.
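As a minimal sketch of how Y, X and β fit together, the snippet below computes Ŷ = β0 + β1·X1 + ... for a batch of inputs. The function name `predict`, the house sizes and the parameter values are all made up for illustration and do not come from the article.

```python
import numpy as np

def predict(X, beta):
    """Return Y-hat = beta[0] + beta[1]*X1 + ... + beta[k]*Xk for every row of X."""
    X = np.asarray(X, dtype=float)
    beta = np.asarray(beta, dtype=float)
    # beta[0] is the intercept; beta[1:] holds one weight per independent variable.
    return beta[0] + X @ beta[1:]

# Hypothetical inputs: a single independent variable (house size).
sizes = np.array([[50.0], [80.0], [120.0]])
# Hypothetical parameters: intercept 10 and slope 2.
beta = np.array([10.0, 2.0])

y_hat = predict(sizes, beta)
print(y_hat)  # [110. 170. 250.]
```

During training the inputs in `sizes` would stay fixed; only `beta` would change.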

We require a few things to be able to adjust the parameters and achieve accurate predictions.

Let’s go over a cost function and training algorithm that can be used in linear regression.

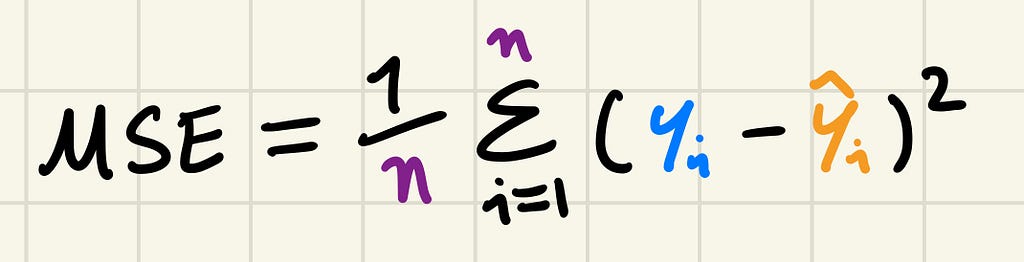

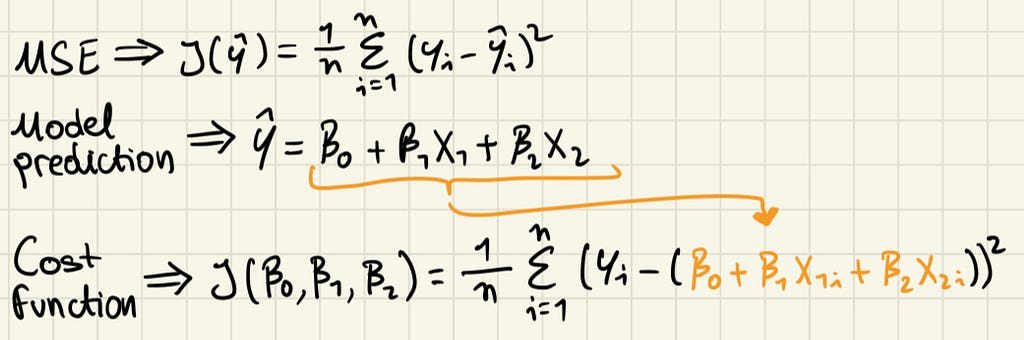

MSE is a commonly used cost function in regression problems, where the goal is to predict a continuous value. This is different from classification tasks, such as predicting the next token in a vocabulary, as in Large Language Models. MSE focuses on numerical differences and is used in a variety of regression and neural network problems. To calculate it, square the difference between each prediction and its target value, then average those squares over all samples.

You will notice that as our prediction gets closer to the target value the MSE gets lower, and the further away it is the larger the MSE grows. The change is quadratic in both directions because the difference is squared.
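The quadratic behaviour can be checked with a short sketch. It assumes the usual definition of MSE (the mean of the squared differences); the target and guesses are arbitrary numbers chosen for illustration.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: the average of the squared prediction errors."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

target = 10.0  # arbitrary target value
# Each guess is further from the target than the previous one.
for guess in (10.0, 9.0, 8.0, 6.0):
    print(f"guess={guess}  error={target - guess}  mse={mse([target], [guess])}")
```

Errors of 1, 2 and 4 give an MSE of 1, 4 and 16: doubling the error quadruples the cost.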

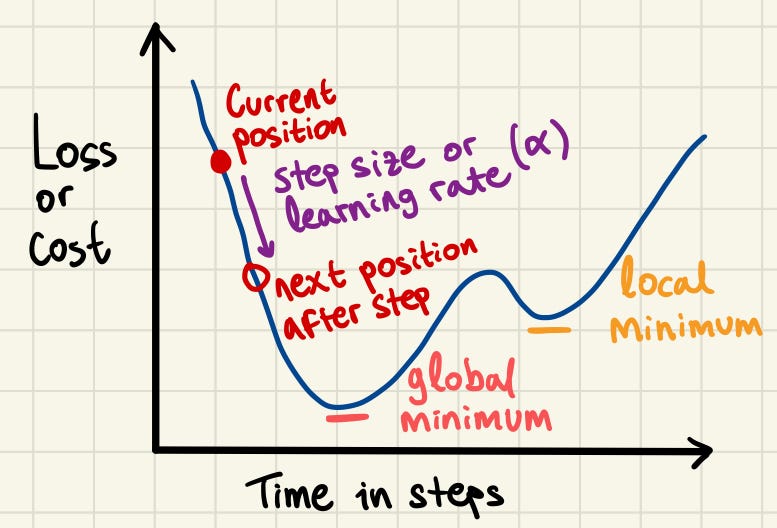

The concept of gradient descent is that we can travel through the “cost space” in small steps, with the objective of arriving at the global minimum, the lowest value in the space. The cost function evaluates how well the current model parameters predict the target by giving us the loss value. Randomly modifying the parameters does not guarantee any improvement. But if we examine the gradient of the loss function with respect to each parameter, i.e. how much and in which direction the loss changes when that parameter changes, we can adjust the parameters to move towards a lower loss, indicating that our predictions are getting closer to the target values.

The steps in gradient descent must be carefully sized to balance progress and precision. If the steps are too large, we risk overshooting the global minimum and missing it entirely. On the other hand, if the steps are too small, the updates will become inefficient and time-consuming, increasing the likelihood of getting stuck in a local minimum instead of reaching the desired global minimum.
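To get a feel for step size, here is a small sketch on a toy cost, J(θ) = θ², whose minimum is at θ = 0. The toy cost, the starting point and the three learning rates are assumptions picked for illustration, not values from the article.

```python
def descend(learning_rate, theta=5.0, steps=20):
    """Run plain gradient descent on the toy cost J(theta) = theta**2."""
    for _ in range(steps):
        gradient = 2.0 * theta                     # dJ/dtheta for J = theta**2
        theta = theta - learning_rate * gradient   # step against the gradient
    return theta

# Too small, reasonable, and too large (all hypothetical values).
for lr in (0.001, 0.1, 1.1):
    print(f"learning rate {lr}: theta after 20 steps = {descend(lr):.4f}")
```

On this toy cost the smallest rate barely moves away from the starting point, the middle one lands close to the minimum, and the largest overshoots on every step and moves further away.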

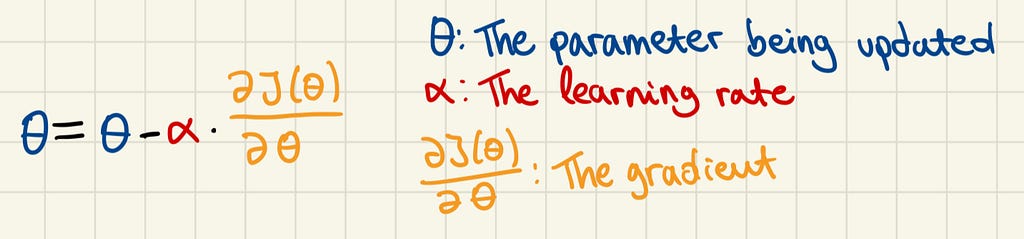

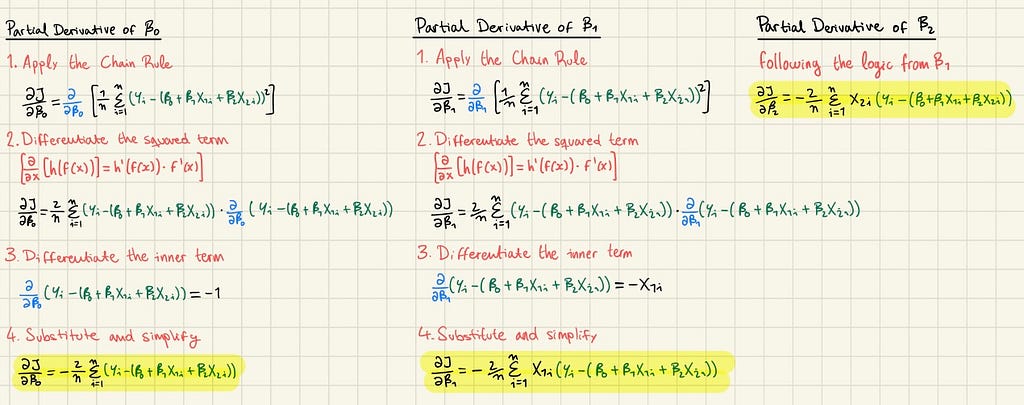

In the context of linear regression, θ could be β0 or β1. The gradient is the partial derivative of the cost function with respect to θ, or in simpler terms, it is a measure of how much the cost function changes when the parameter θ is slightly adjusted.

A large gradient indicates that the parameter has a significant effect on the cost function, while a small gradient suggests a minor effect. The sign of the gradient indicates the direction of change for the cost function. A negative gradient means the cost function will decrease as the parameter increases, while a positive gradient means it will increase.

So, in the case of a large negative gradient, what happens to the parameter? Well, the negative sign in front of the learning rate will cancel with the negative sign of the gradient, resulting in an addition to the parameter. And since the gradient is large we will be adding a large number to it. So, the parameter is adjusted substantially reflecting its greater influence on reducing the cost function.
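The sign cancellation described above can be sketched in a few lines, assuming the standard update rule θ ← θ − α · gradient, where α is the learning rate. The function name `update` and the gradient values are hypothetical.

```python
def update(theta, gradient, learning_rate):
    """One gradient descent update: move theta against the gradient."""
    return theta - learning_rate * gradient

learning_rate = 0.01

# Large negative gradient: the two minus signs cancel, so theta grows a lot.
print(update(0.0, -20.0, learning_rate))
# Small negative gradient: theta still grows, but only slightly.
print(update(0.0, -2.0, learning_rate))
# Positive gradient: theta is reduced instead.
print(update(0.0, 20.0, learning_rate))
```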

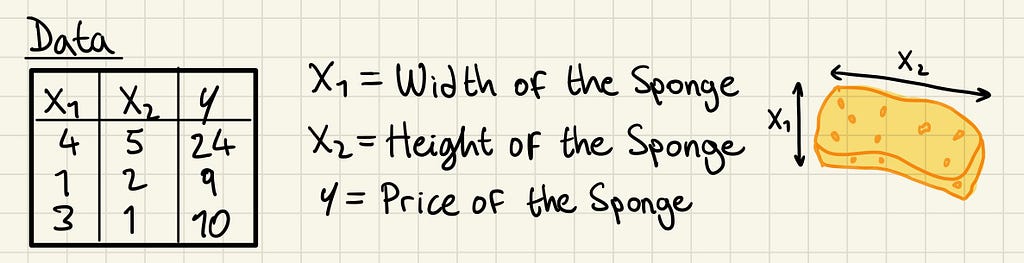

Let’s take a look at the prices of the sponges Karate Kid used to wash Mr. Miyagi’s car. If we wanted to predict their price (dependent variable) based on their height and width (independent variables), we could model it using linear regression.

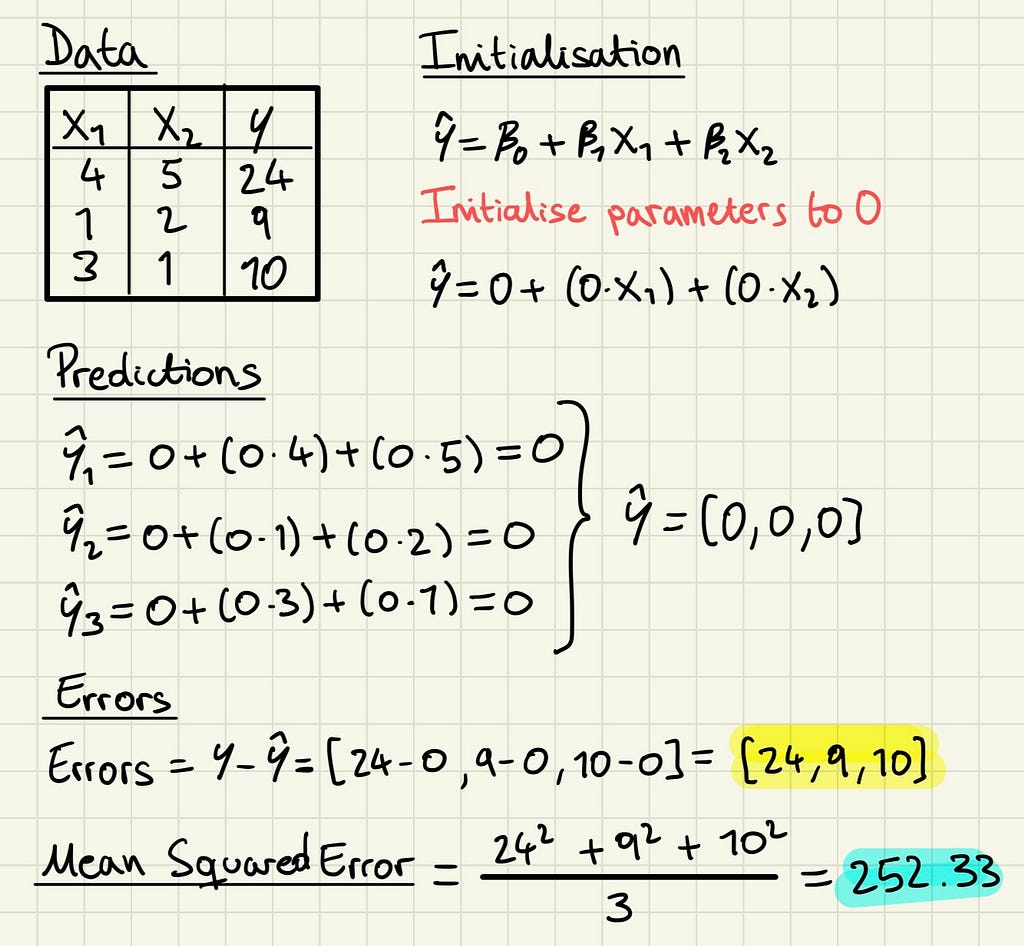

We can start with these three training data samples.

Now, let’s use the Mean Square Error (MSE) as our cost function J, and linear regression as our model.

The linear regression formula uses X1 and X2 for width and height respectively. Notice there are no more independent variables, since our training data doesn’t include any others. That is the assumption we make in this example: the width and height of the sponge are enough to predict its price.

Now, the first step is to initialise the parameters, in this case to 0. We can then feed the independent variables into the model to get our predictions, Ŷ, and check how far these are from our target Y.

Right now, as you can imagine, the parameters are not very helpful. But we are now prepared to use the Gradient Descent algorithm to update the parameters into more useful ones. First, we need to calculate the partial derivative of the cost function with respect to each parameter, which will require some calculus, but luckily we only need to do this once in the whole process.
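For reference, here is a sketch of that calculus. It assumes the standard MSE form with a 1/n factor and the two-feature model used in this example; the article's own figure may use a different but equivalent notation or scaling. Applying the chain rule to each parameter gives:

$$
\begin{aligned}
J &= \frac{1}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right)^2,
\qquad \hat{Y}_i = \beta_0 + \beta_1 X_{1,i} + \beta_2 X_{2,i} \\
\frac{\partial J}{\partial \beta_0} &= -\frac{2}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right) \\
\frac{\partial J}{\partial \beta_1} &= -\frac{2}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right) X_{1,i} \\
\frac{\partial J}{\partial \beta_2} &= -\frac{2}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right) X_{2,i}
\end{aligned}
$$

Each derivative is the average error, weighted by the input that the parameter multiplies (or by 1 for the intercept).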

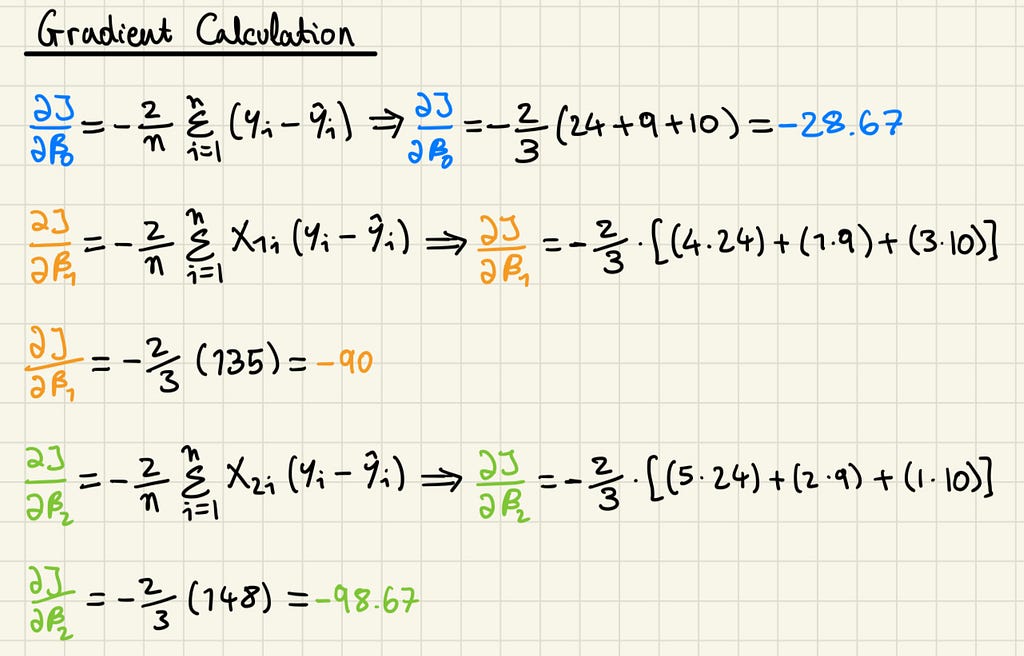

With the partial derivatives, we can substitute in the values from our errors to calculate the gradient of each parameter.
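A sketch of this substitution step in Python follows. The three samples are hypothetical stand-ins, since the article's actual numbers live in its figures; they were generated from price = 1 + 2·width + 3·height, which is one assumed reading of the true values [1, 2, 3]. The gradient formula assumed is the standard MSE one, −(2/n) · Σ (Y − Ŷ) · X.

```python
import numpy as np

# Hypothetical training data: (width, height) per sponge, NOT the article's values.
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0]])
# Hypothetical prices, generated from 1 + 2*width + 3*height.
y = np.array([9.0, 8.0, 19.0])

def gradients(X, y, beta):
    """Gradient of the MSE with respect to [beta0, beta1, beta2]."""
    n = len(y)
    Xb = np.column_stack([np.ones(n), X])   # column of ones for the intercept
    error = y - Xb @ beta                   # Y - Y-hat for every sample
    return -(2.0 / n) * (Xb.T @ error)

beta = np.zeros(3)            # parameters initialised to 0, as in the text
grad = gradients(X, y, beta)
print(grad)
```

With all parameters at zero and positive prices, every error is positive, so all three gradients come out negative for this made-up data as well.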

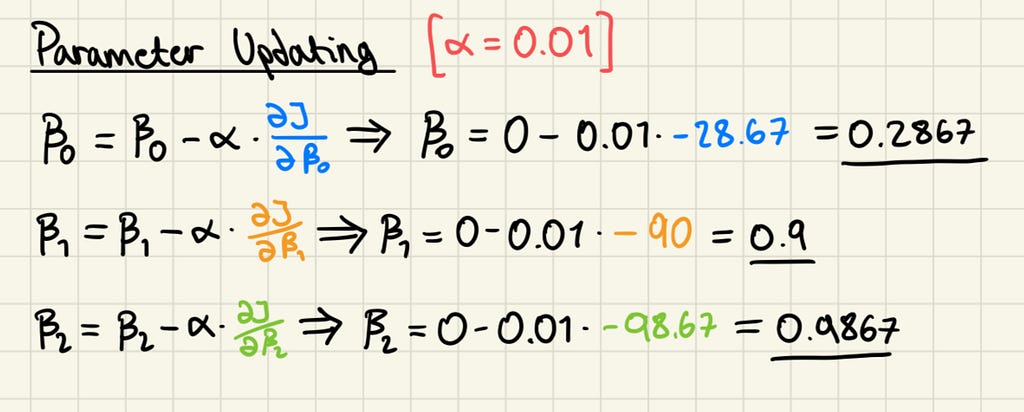

Notice there wasn’t any need to calculate the MSE, as it’s not directly used in the process of updating parameters; only its derivative is. It’s also immediately apparent that all gradients are negative, meaning that all parameters can be increased to reduce the cost function. The next step is to update the parameters with a learning rate, which is a hyper-parameter, i.e. a configuration setting in a machine learning model that is specified before the training process begins. Unlike model parameters, which are learned during training, hyper-parameters are set manually and control aspects of the learning process. Here we arbitrarily use 0.01.

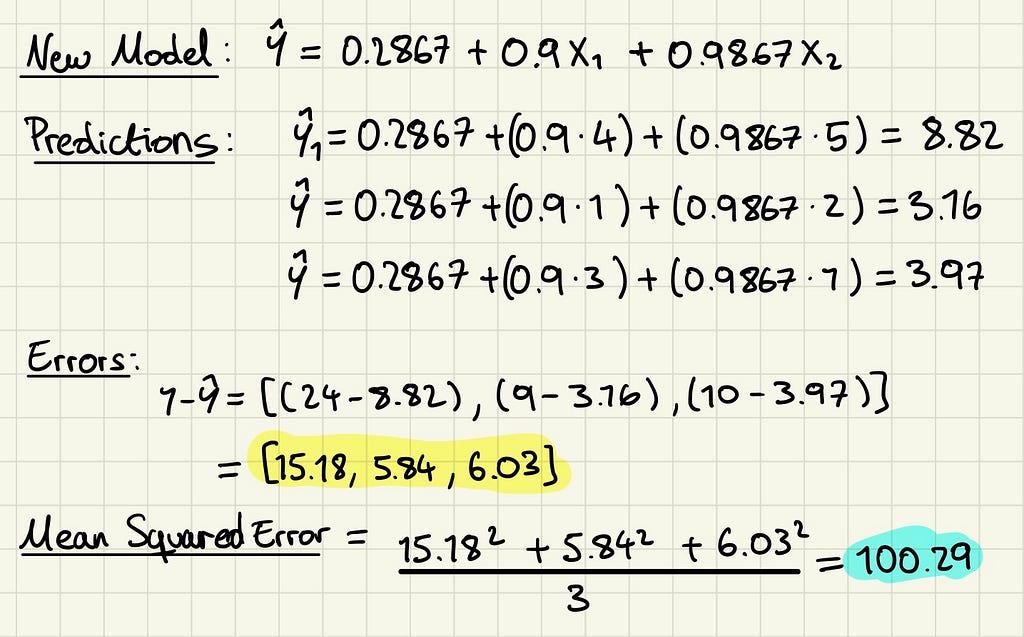

This has been the final step of our first iteration in the process of gradient descent. We can use these new parameter values to make new predictions and recalculate the MSE of our model.

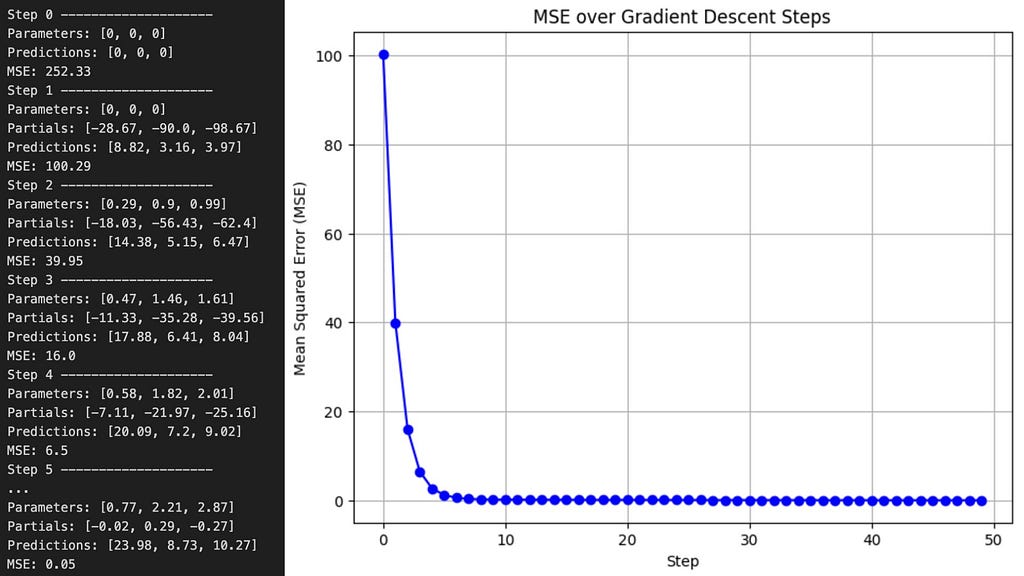

The new predictions are getting closer to the true sponge prices, and the updated parameters have yielded a much lower MSE, but there is a lot more training left to do. If we iterate through the gradient descent algorithm 50 times, this time using Python instead of doing it by hand (Mr. Miyagi never said anything about coding), we will reach the following values.

Eventually we arrived at a pretty good model. The true values I used to generate those numbers were [1, 2, 3], and after only 50 iterations the model’s parameters came impressively close. The number of training steps is another hyper-parameter: extending it to 200 with the same learning rate allowed the linear regression model to converge almost perfectly to the true parameters, demonstrating the power of gradient descent.
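The training loop itself might look something like the sketch below. It is not the author's code: the three samples are hypothetical (generated from 1 + 2·width + 3·height), the function name `train` is invented, and the standard MSE gradient is assumed. With these made-up samples the speed of convergence will differ from the article's run, so only the trend of a falling MSE should be expected.

```python
import numpy as np

# Hypothetical sponge data: (width, height) per sample, NOT the article's values.
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0]])
true_beta = np.array([1.0, 2.0, 3.0])        # assumed order: intercept, width, height
y = true_beta[0] + X @ true_beta[1:]         # prices implied by the assumed parameters

def train(X, y, learning_rate=0.01, steps=50):
    """Batch gradient descent on the MSE; returns final parameters and MSE history."""
    n = len(y)
    Xb = np.column_stack([np.ones(n), X])    # column of ones for the intercept
    beta = np.zeros(Xb.shape[1])             # parameters initialised to 0
    history = []
    for _ in range(steps):
        error = y - Xb @ beta
        history.append(float(np.mean(error ** 2)))       # MSE before this update
        gradient = -(2.0 / n) * (Xb.T @ error)
        beta = beta - learning_rate * gradient
    history.append(float(np.mean((y - Xb @ beta) ** 2)))  # MSE after the last update
    return beta, history

for steps in (50, 200):
    beta, history = train(X, y, steps=steps)
    print(f"{steps} steps: beta={np.round(beta, 3)}  mse={history[-1]:.4f}")
```

Both the learning rate and the number of steps are passed in as arguments, which reflects their role as hyper-parameters set before training rather than values learned from the data.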

Many of the fundamental concepts that make up the complicated martial art of artificial intelligence, like cost functions and gradient descent, can be thoroughly understood just by studying linear regression, the simple “wax on, wax off” of the field.

Artificial intelligence is a vast and complex field, built upon many ideas and methods. While there’s much more to explore, mastering these fundamentals is a significant first step. Hopefully, this article has brought you closer to that goal, one “wax on, wax off” at a time.

Mastering the Basics: How Linear Regression Unlocks the Secrets of Complex Models was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Mastering the Basics: How Linear Regression Unlocks the Secrets of Complex Models

Marvel Rivals features an anti-cheat system similar to that on other popular Windows games. Such tools can get removed or disabled as part of the process of porting games to a new platform.

NetEase, the developer of the game, announced that it would be reversing the bans in a future update. The announcement was made on a Discord server.

Originally appeared here:

XR headsets are about to have another make-or-break year

Originally appeared here:

Block-breaking news: Notch teases a spiritual successor to Minecraft

Originally appeared here:

If you have to watch one Netflix show this January 2025, stream this one

Originally appeared here:

Samsung and Apple’s race to slim phones might skirt the sticker shock

Originally appeared here:

10 most anticipated movies of 2025, ranked