Using the Independent samples t-test and Welch’s t-test to compare scores in benchmarking.

Originally appeared here:

Statistically Confirm Your Benchmark — Case Study Comparing Pandas and Polars with 1 Million Rows…

Just as humans depend on memory to make informed decisions and draw logical conclusions, AI relies on its ability to retrieve relevant information, understand contexts, and learn from past experiences. This article delves into why memory is pivotal for AI, exploring its role in recall, reasoning, and continuous learning.

Some believe that enlarging the context window will enhance model performance, as it allows the model to ingest more information. While this is true to an extent, our current understanding of how language models prioritize context is still developing. In fact, studies have shown that "model performance is highest when relevant information occurs at the beginning or end of its input context."[1] The larger a context window, the more likely we are to encounter the infamous "lost in the middle" problem, where specific facts or text are not recalled by the model because important information is buried in the middle [2].

To understand how memory impacts recall, consider how humans process information. When we travel, we passively listen to many announcements, including airline advertisements, credit card offers, safety briefings, and luggage collection details. We may not realize how much information we absorb until it is time to recall the relevant pieces. Similarly, if a language model that relies on retrieved information rather than its inherent knowledge is asked "What should I do in case of an emergency landing?", it might fail to recall the pertinent details because too much information is retrieved. With long-term memory, however, the model can store and recall the most critical information, enabling more effective reasoning with the proper context.

Memory does more than supply essential context: it lets models recall the methods they previously used to solve problems, recognize which strategies succeeded, and pinpoint what needs improvement. This in turn can strengthen a model's ability to reason about complex multi-step tasks. Without adequate reasoning, language models struggle to understand tasks, think logically about objectives, solve multistep problems, or use appropriate tools. You can read more about the importance of reasoning and advanced reasoning techniques in my previous article here.

Consider the example of manually finding relevant data in a company's data warehouse. There are thousands of tables, but because you understand what data is needed, you can focus on a subset. After hours of searching, you find the relevant data across five different tables. Three months later, the data needs updating, but you can't remember the five source tables you used to build the report, so the manual search starts all over again. Without long-term memory, a language model might approach the problem the same way, by brute force, until it finds the data it needs to complete the task. A language model equipped with long-term memory, however, could store its initial search plan, a description of each table, and a revised plan based on what it found in each table. When the data needs refreshing, it can start from the previously successful approach, improving efficiency and performance.
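The refresh scenario above can be sketched as a tiny long-term memory that stores a task description, the plan that worked, and notes about each table, then recalls the closest past task. This is a minimal sketch under stated assumptions: `MemoryRecord` and `LongTermMemory` are hypothetical names, the store lives in process memory, and matching is naive keyword overlap rather than anything a specific agent framework does.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MemoryRecord:
    task: str                 # natural-language description of the task
    plan: list[str]           # steps that worked last time
    notes: dict[str, str] = field(default_factory=dict)  # table name -> description


class LongTermMemory:
    """Hypothetical in-process store of past plans, matched by keyword overlap."""

    def __init__(self) -> None:
        self._records: list[MemoryRecord] = []

    def store(self, record: MemoryRecord) -> None:
        self._records.append(record)

    def recall(self, task: str) -> MemoryRecord | None:
        # Score each stored task by how many words it shares with the new task.
        query = set(task.lower().split())
        best, best_score = None, 0
        for record in self._records:
            score = len(query & set(record.task.lower().split()))
            if score > best_score:
                best, best_score = record, score
        return best  # None means: no prior plan, fall back to searching from scratch


# Usage with hypothetical table names:
memory = LongTermMemory()
memory.store(MemoryRecord(
    task="refresh quarterly revenue report",
    plan=["query sales.orders", "join crm.customers", "aggregate by quarter"],
    notes={"sales.orders": "one row per order"},
))
hit = memory.recall("refresh the revenue report")
print(hit.plan if hit else "no prior plan")
```

A real agent would more likely persist these records and retrieve them by embedding similarity; the keyword overlap only keeps the example dependency-free.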

This method allows systems to learn over time, continually revising the best approach to tasks and accumulating knowledge to produce more efficient, higher-performing autonomous systems.

Incorporating long-term memory into AI systems can significantly enhance their capabilities, but deciding whether this capability is worth the necessary development investment requires careful consideration of the following factors.

1. Understand the Nature of the Tasks

2. Assess the Volume and Variability of Data

3. Evaluate the Cost-Benefit Ratio

4. Address Security and Compliance Concerns

Incorporating long-term memory into AI systems presents a significant opportunity to enhance their capabilities by improving accuracy, efficiency, and contextual understanding. However, deciding whether to invest in this capability requires careful consideration and a cost-benefit analysis. If implemented strategically, long-term memory can deliver tangible benefits to your AI solutions.

Have questions or think that something needs further clarification? Drop me a DM on LinkedIn! I'm always eager to exchange food for thought and iterate on my work. My work does not represent the opinion of my employer.

The Important Role of Memory in Agentic AI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

The Important Role of Memory in Agentic AI

In the process of writing my talk for the AI Quality Conference coming up on June 25 in San Francisco (tickets still available!) I have come across many topics that deserve more time than the brief mentions I will be able to give in my talk. In order to give everyone more detail and explain the topics better, I’m starting a small series of columns about things related to developing machine learning and AI while still being careful about data privacy and security. Today I’m going to start with data localization.

Before I begin, we should clarify what is covered by data privacy and security regulation. In short, this is applicable to “personal data”. But what counts as personal data? This depends on the jurisdiction, but it usually includes PII (name, phone number, etc.) PLUS data that could be combined together to make someone identifiable (zip code, birthday, gender, race, political affiliation, religion, and so on). This includes photos, video or audio recordings of someone, details about their computer or browser, search history, biometrics, and much more. GDPR’s rules about this are explained here.

With that covered, let's dig into data localization and what it has to do with us as machine learning developers.

Glad you asked! Data localization is essentially the question of what geographic place your data is stored in — if you localize your data, you are keeping it where it was created. (This is also sometimes known as “data residency”, and the opposite is “data portability”.) If your dataset is on AWS S3 in us-east-1, your data is actually living, physically (inasmuch as data lives anywhere), in the United States, somewhere in Northern Virginia. To get more precise, AWS has several specific data centers in Northern Virginia, and you can get their exact addresses online. But for most of us, knowing the general area at this grain is sufficient.
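If you want to confirm where a dataset in S3 physically lives, the bucket's region is one API call away. A minimal sketch, assuming boto3's S3 client: `get_bucket_location` reports a `LocationConstraint` of `None` for buckets in us-east-1, so that case is mapped explicitly. The function name and the bucket name in the usage comment are hypothetical, and the client is passed in so the check can be tested without AWS credentials.

```python
def bucket_region(s3_client, bucket: str) -> str:
    """Return the AWS region where an S3 bucket stores its objects.

    s3_client is expected to behave like boto3.client("s3").
    """
    response = s3_client.get_bucket_location(Bucket=bucket)
    # us-east-1 buckets come back with LocationConstraint=None.
    return response.get("LocationConstraint") or "us-east-1"


# Usage (requires boto3 and credentials; bucket name is hypothetical):
# import boto3
# print(bucket_region(boto3.client("s3"), "my-training-data"))
```

Note that this only tells you where the bucket itself lives; replication rules, backups, or copies pulled onto laptops can put the same data somewhere else.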

There are good reasons to know where your data lives. For one thing, there can be real physical speed implications for loading/writing data to the cloud depending on how far you and your computer are from the region where the datacenter is located. But this is likely not a huge deal unless you’re doing crazy high-speed computations.

A more important reason to care (and the reason this is part of data privacy) is that data privacy laws around the world, as well as your contracts with clients and the consent forms filled out by your customers, have rules about data localization. Data localization regulations typically require personal data about citizens or residents of a place to be stored on servers in that same place.

General caveats:

In addition, private companies sometimes impose data localization requirements in contracts, potentially to comply with these laws, or to reduce the risk of data breach or surveillance by other governments on the data.

This means, literally, that you may be legally limited on the locations of data centers where you can store certain data, primarily based on who the data is about, or who the original owner of the data was.

It may be easier to understand this with a concrete (simplified) example.

What does this mean for you? All this data needs to be processed differently.

This creates obvious problems for data engineering, since you need separate pipelines for all of the data. It’s also a challenge for modeling and training — how do you construct a dataset to actually use?
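One way to picture the "separate pipelines" problem is a router that assigns each incoming record to the only storage location it may be written to. This is a minimal sketch under stated assumptions: the country codes, storage names, and policy table are hypothetical placeholders, not a statement of what any law requires; the real mapping has to come from your legal team, contracts, and consent records.

```python
from collections import defaultdict

# Hypothetical policy table: residence country -> storage its data must stay in.
RESTRICTED = {"RU": "storage-ru", "AE": "storage-ae"}
DEFAULT_STORAGE = "storage-us"  # hypothetical home region for unrestricted data
QUARANTINE = "quarantine"       # records whose origin is unknown


def route_records(records):
    """Split incoming records into per-location batches, one pipeline per batch."""
    batches = defaultdict(list)
    for record in records:
        country = record.get("country")
        if country is None:
            target = QUARANTINE  # unknown origin: hold it rather than guess
        else:
            target = RESTRICTED.get(country, DEFAULT_STORAGE)
        batches[target].append(record)
    return dict(batches)
```

Sending records of unknown origin to a quarantine batch, rather than to the default region, is a deliberate fail-closed choice.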

If you had gotten consent from the UAE customers to move data, you’d probably be ok. Data engineering would still have to pipe the data from Russian customers through a special path, but you could combine the data for training. However, because you didn’t, you’re stuck! Make sure that you know what permissions and authorizations your consent tool includes, so you don’t get in this mess.

Assuming it’s too late to do that, another solution is to have a compute platform that loads from different databases at time of training, combines the dataset in the moment, and trains the model without ever writing any of the data to disk in a single place. The general consensus (NOT LEGAL ADVICE) is that models are not themselves personal data, and thus not subject to the legal rules. But this takes work and infrastructure, so get your dev-ops hat on.

If you have extremely large data volumes, this can become computationally expensive very fast. If you generate features from this data and those features still allow individuals to be identified, you can't save them together in one place either; you will need to save de-identified or aggregated features separately, write them back to the original region, or recalculate them on the fly every time. All of these are tough challenges.

Fortunately, there’s another option. Once you have aggregated, summarized, or thoroughly (irreversibly) de-identified the data, it loses the personal data protections and you can then work with it more easily. This is a strong incentive for you not to be storing personal data that is identifiable! (Plus, this reduces your risk of data breaches and being hacked.) Once the data is no longer legally protected because it’s no longer high risk, you can do what you want and carry on with your work, saving the data where you like. Extract non-identifiable features and dispense with identifiable data if you possibly can.
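A common way to produce aggregated features is to count records per group and suppress any group that is too small, since tiny groups can point back to individuals. This is a minimal sketch, assuming a hypothetical threshold of 10; the right threshold (and whether suppression is enough) is a question for your privacy or legal review.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # hypothetical threshold; choose yours with privacy/legal review


def aggregate_counts(records, keys, min_cell_size=MIN_CELL_SIZE):
    """Count records per combination of `keys`, dropping cells below min_cell_size."""
    counts = Counter(tuple(record[k] for k in keys) for record in records)
    return {cell: n for cell, n in counts.items() if n >= min_cell_size}


records = [{"region": "north"}] * 12 + [{"region": "south"}] * 3
print(aggregate_counts(records, ["region"]))  # the 3-person "south" cell is dropped
```

Small-cell suppression on its own does not guarantee anonymity: publishing several overlapping aggregates can still let someone difference their way back to an individual.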

However, deciding when data is sufficiently aggregated or de-identified that localization laws no longer apply can be a tough call because, as I described above, many kinds of demographic data count as personal data: in combination with other data points, they can make someone identifiable. We are often accustomed to thinking that once PII (full names, SSNs, etc.) is removed, the data is fine to use as we like. This is not how the law sees it in many jurisdictions! Consult your legal department and be conscientious about what constitutes risk. Ideally, the data should no longer be personal data at all, e.g. it should not include names, demographics, addresses, phone numbers, and so on at the individual level or in unhashed, human-readable plaintext. THIS IS NOT LEGAL ADVICE. TALK TO YOUR LEGAL DEPARTMENT.

We are very used to being able to take our data wherever and manipulate it and run calculations and then store the data — on laptops, or S3 or GCS, or wherever you want, but as we collect more personal data about people, and more data privacy laws take effect all around the world, we need to be more careful what we do.

This is a tough situation. If you have some personal data about people and no idea where it came from or where those people were located (and probably also no idea what consent forms they filled out), I think the safe solution is to treat it as sensitive data, de-identify the heck out of it, aggregate it if that works for your use case, and make sure it wouldn't be considered personal or sensitive data under data privacy laws. But if that's not an option because of how you need to use the data, then it's time to talk to lawyers.

More or less, the answer is the same. Ideally, you'd get your consent solution in order, but barring that, I'd recommend finding ways to de-identify data immediately upon receiving it from a customer or user. When data comes in from a user, hash it so that it is not reversible, and use the hashed version. Be extra cautious about demographics or other sensitive personal data, but definitely de-identify the PII right away. If you never store data that is sensitive or could potentially be reverse engineered to identify someone, then you don't need to worry about localization. THIS IS NOT LEGAL ADVICE. TALK TO YOUR LEGAL DEPARTMENT.
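One caveat on "hash it so it is not reversible": a plain, unsalted hash of an email or phone number can often be reversed by hashing every plausible input and comparing, because the input space is small. A keyed hash (HMAC) resists that as long as the key stays secret. A minimal sketch using Python's standard `hmac` module; the function name is hypothetical, and the key is assumed to live in a secrets manager, never next to the data. Also note that this is pseudonymization: under the GDPR, pseudonymized data is still treated as personal data, so on its own it may not take data out of scope of localization rules.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed SHA-256 (HMAC) of a normalized identifier such as an email address."""
    # Normalize first so "Alice@Example.com " and "alice@example.com" match.
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Usage (the key source is an assumption, e.g. an environment variable set from a vault):
# import os
# token = pseudonymize(user_email, os.environ["PSEUDONYM_KEY"].encode())
```

Normalizing before hashing keeps joins working across systems that format the same identifier differently; it also means the normalization rules must be identical everywhere the hash is computed.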

There are a few reasons, some better than others. First, if the data is actually stored in country, then you have something of a business presence there (or your data storage provider does) so it’s a lot easier for them to have jurisdiction to penalize you if you misuse their citizens’ data. Second, this supports economic development of the tech sector in whatever country, because someone needs to provide the power, cooling, staffing, construction, and so on to the data centers. Third, unfortunately some countries have surveillance regimes on their own citizens, and having data centers in country makes it easier for totalitarian governments to access this data.

Plan ahead! Work with your company's relevant parties to make sure the initial data processing is compliant while still getting you the data you need. And make sure you're in the loop about the consent that customers are giving and what permissions it enables. If you still find yourself in possession of data with localization rules, then you need to either find a way to manage this data so that it is never saved to a disk in the wrong location, or de-identify and/or aggregate the data so that it is no longer sensitive and the data privacy regulations no longer apply.

Here are some highlights, but this is not comprehensive because there are many such laws and new ones coming along all the time. (Again, none of this is legal advice):

There are other potential considerations, such as the size of your company (some places have less restrictive rules for small companies, some don’t), so none of this should be taken as the conclusive answer for your business.

If you made it this far, thanks! I know this can get dry, but I’ll reward you with a story. I once worked at a company where we had data localization provisions in contracts (not the law, but another business setting these rules), so any data generated in the EU needed to be in the EU, but we had already set up data storage for North America in the US.

For a variety of reasons, this meant that a new replica database containing just the EU data was created, based in the EU, and we kept these two versions of the entire Snowflake database in parallel. As you may expect, this was a nightmare, because if you created a new table, changed fields, or did basically anything in the database, you had to remember to duplicate the work on the other one. Naturally, most folks did not remember to do this, so the two databases diverged drastically, to the point where the schemas were significantly different. So we all had endless conditional code for queries and extraction work so we'd get the right column names, types, table names, etc., depending on which database we were pulling from, so we could do "on the fly" combination without saving data to the wrong place. (Don't even get me started on the duplicate dashboards for BI purposes.) I don't recommend it!

These regulations pose a real challenge for data scientists in many sectors, but it’s important to keep up on your legal obligations and protect your work and your company from liabilities. Have you encountered localization challenges? Comment on this article if you’ve found solutions that I didn’t mention.

https://www.techpolicy.press/the-human-rights-costs-of-data-localization-around-the-world/

What is considered personal data under the EU GDPR? – GDPR.eu

https://carnegieendowment.org/research/2023/10/understanding-indias-new-data-protection-law?lang=en

https://m.rbi.org.in/Scripts/FAQView.aspx?Id=130

Decree 53 Provides Long-Awaited Guidance on Implementation of Vietnam’s Cybersecurity Law

Data Privacy in AI Development: Data Localization was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Data Privacy in AI Development: Data Localization

Real-world data can be tricky! A guide to choosing and applying the right statistical test (using Python)

Originally appeared here:

A/B Testing Like a Pro: Master the Art of Statistical Test Selection

Training LSTMs to understand the usage of online platforms.

Originally appeared here:

Deep Learning to Predict Engagement in Online Platforms

Choosing the right inference backend for serving large language models (LLMs) is crucial. It not only ensures an optimal user experience with fast generation speed but also improves cost efficiency through a high token generation rate and resource utilization. Today, developers have a variety of choices for inference backends created by reputable research and industry teams. However, selecting the best backend for a specific use case can be challenging.

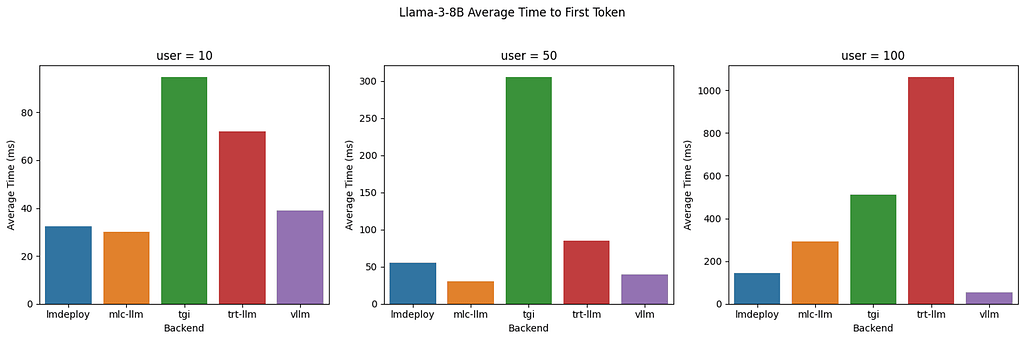

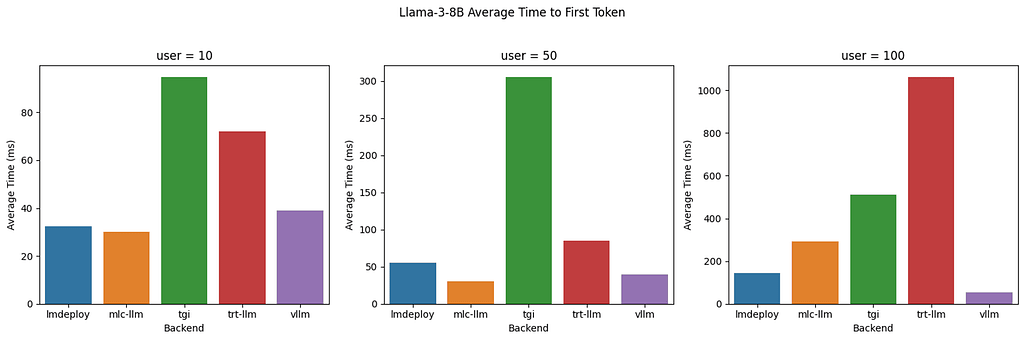

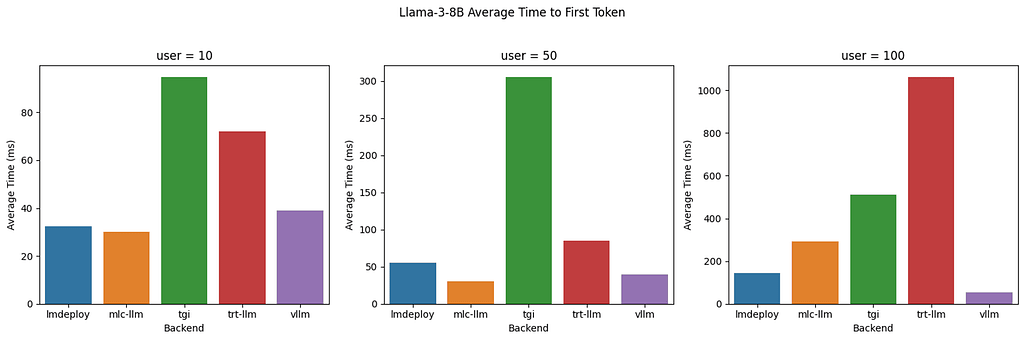

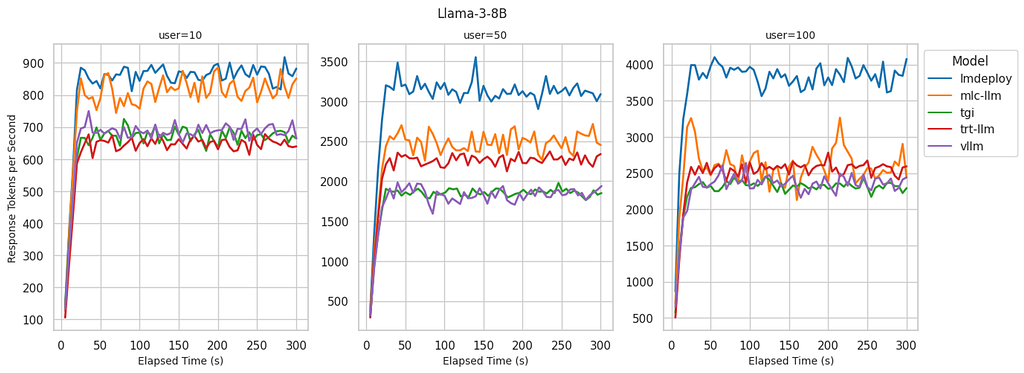

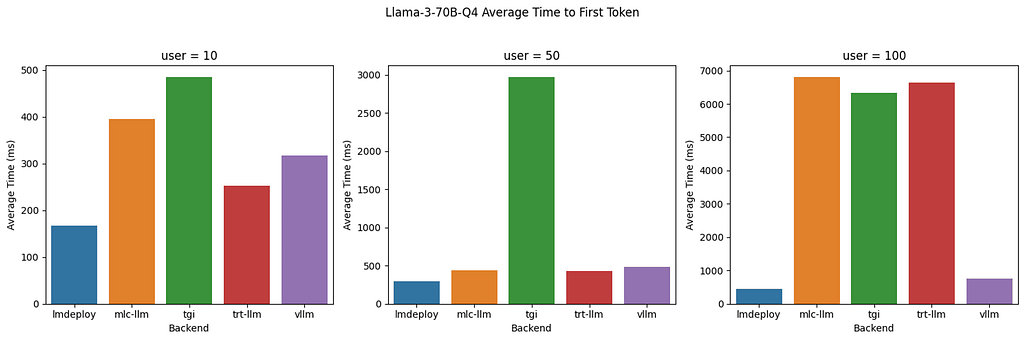

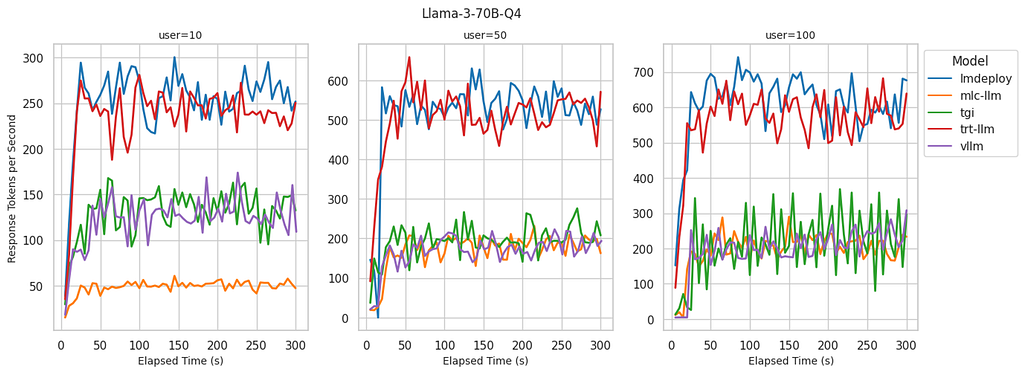

To help developers make informed decisions, the BentoML engineering team conducted a comprehensive benchmark study on the Llama 3 serving performance with vLLM, LMDeploy, MLC-LLM, TensorRT-LLM, and Hugging Face TGI on BentoCloud. These inference backends were evaluated using two key metrics:

We conducted the benchmark study with the Llama 3 8B model and the 4-bit quantized Llama 3 70B model on an A100 80GB GPU instance (gpu.a100.1×80) on BentoCloud across three levels of inference load (10, 50, and 100 concurrent users). Here are some of our key findings:

We discovered that the token generation rate is strongly correlated with the GPU utilization achieved by an inference backend. Backends capable of maintaining a high token generation rate also exhibited GPU utilization rates approaching 100%. Conversely, backends with lower GPU utilization rates appeared to be bottlenecked by the Python process.
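The quantities this study reports, time to first token (TTFT) and token generation rate, can both be derived from the arrival times of streamed tokens. A minimal sketch, assuming you record when the request was sent and when each token arrived; `StreamTiming` and the function names are hypothetical, and the benchmark client's exact definitions (for example, per-request versus aggregate throughput) may differ.

```python
from dataclasses import dataclass


@dataclass
class StreamTiming:
    request_sent: float       # e.g. time.perf_counter() when the request was sent
    token_times: list[float]  # arrival time of each streamed token, same clock


def time_to_first_token(t: StreamTiming) -> float:
    """Latency until the first generated token arrives."""
    return t.token_times[0] - t.request_sent


def decode_tokens_per_second(t: StreamTiming) -> float:
    """Tokens after the first one, divided by the time spent generating them."""
    if len(t.token_times) < 2:
        return 0.0
    elapsed = t.token_times[-1] - t.token_times[0]
    return (len(t.token_times) - 1) / elapsed
```

Excluding the first token from the rate keeps prefill time (already captured by TTFT) from being counted twice.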

When choosing an inference backend for serving LLMs, considerations beyond just performance also play an important role in the decision. The following list highlights key dimensions that we believe are important to consider when selecting the ideal inference backend.

Quantization trades off precision for performance by representing weights with lower-bit integers. This technique, combined with optimizations from inference backends, enables faster inference and a smaller memory footprint. As a result, we were able to load the weights of the 70B parameter Llama 3 model on a single A100 80GB GPU, whereas multiple GPUs would otherwise be necessary.
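A back-of-the-envelope check of why 4-bit weights fit on one 80 GB GPU, counting weights only. This ignores the KV cache, activations, and per-group quantization scales, so the real footprint is somewhat larger than the 4-bit figure:

```latex
\begin{aligned}
\text{FP16: }  & 70\times10^{9}\ \text{params} \times 2\ \text{bytes}   \approx 140\ \text{GB} > 80\ \text{GB} \\
\text{4-bit: } & 70\times10^{9}\ \text{params} \times 0.5\ \text{bytes} \approx 35\ \text{GB}  < 80\ \text{GB}
\end{aligned}
```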

Being able to leverage the same inference backend for different model architectures offers agility for engineering teams. It allows them to switch between various large language models as new improvements emerge, without needing to migrate the underlying inference infrastructure.

Having the ability to run on different hardware provides cost savings and the flexibility to select the appropriate hardware based on inference requirements. It also offers alternatives during the current GPU shortage, helping to navigate supply constraints effectively.

An inference backend designed for production environments should provide stable releases and facilitate simple workflows for continuous deployment. Additionally, a developer-friendly backend should feature well-defined interfaces that support rapid development and high code maintainability, essential for building AI applications powered by LLMs.

Llama 3 is the latest iteration in the Llama LLM series, available in various configurations. We used the following model sizes in our benchmark tests.

We ensured that the inference backends served with BentoML added only minimal performance overhead compared to serving natively in Python. The overhead is due to the provision of functionality for scaling, observability, and IO serialization. Using BentoML and BentoCloud gave us a consistent RESTful API for the different inference backends, simplifying benchmark setup and operations.

Different backends provide various ways to serve LLMs, each with unique features and optimization techniques. All of the inference backends we tested are under Apache 2.0 License.

Integrating BentoML with various inference backends to self-host LLMs is straightforward. The BentoML community provides the following example projects on GitHub to guide you through the process.

We tested both the Meta-Llama-3-8B-Instruct model and a 4-bit quantized Meta-Llama-3-70B-Instruct model. We quantized the 70B model to 4 bits so that it could run on a single A100 80GB GPU. If the inference backend supports native quantization, we used the backend-provided quantization method; for example, for MLC-LLM we used the q4f16_1 quantization scheme. Otherwise, we used the AWQ-quantized casperhansen/llama-3-70b-instruct-awq model from Hugging Face.

Note that, other than enabling common inference optimization techniques such as continuous batching, flash attention, and prefix caching, we did not fine-tune the inference configurations (GPU memory utilization, max number of sequences, paged KV cache block size, etc.) for each individual backend, because per-backend tuning does not scale as the number of LLMs we serve grows. Whether a backend provides an optimal set of inference parameters out of the box is itself an implicit measure of its performance and ease of use.

To accurately assess the performance of different LLM backends, we created a custom benchmark script. This script simulates real-world scenarios by varying user loads and sending generation requests under different levels of concurrency.

Our benchmark client can spawn up to the target number of users within 20 seconds, after which it stress tests the LLM backend by sending concurrent generation requests with randomly selected prompts. We tested with 10, 50, and 100 concurrent users to evaluate the system under varying loads.

Each stress test ran for 5 minutes, during which time we collected inference metrics every 5 seconds. This duration was sufficient to observe potential performance degradation, resource utilization bottlenecks, or other issues that might not be evident in shorter tests.
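The load pattern described above (ramp users up over a window, let each one send requests back to back, sample metrics at a fixed interval) can be sketched with asyncio. This is a minimal sketch, not the BentoML benchmark client: `fake_generate` is a hypothetical stand-in for a real streaming call to an LLM backend, and the counters are deliberately simple.

```python
import asyncio
import random
import time


async def fake_generate(prompt: str) -> int:
    """Hypothetical stand-in for a call to an LLM backend; returns a token count."""
    await asyncio.sleep(random.uniform(0.01, 0.03))
    return len(prompt.split())


async def user_loop(prompts, stop_at, stats, generate):
    # Each simulated user sends requests back to back until the test window closes.
    while time.monotonic() < stop_at:
        tokens = await generate(random.choice(prompts))
        # Update counters only after the await so concurrent users don't overwrite each other.
        stats["tokens"] += tokens
        stats["requests"] += 1


async def run_load_test(prompts, num_users, ramp_up_s, duration_s,
                        sample_every_s, generate=fake_generate):
    stats = {"requests": 0, "tokens": 0}
    start = time.monotonic()
    stop_at = start + duration_s
    samples = []

    async def sampler():
        # Snapshot the cumulative counters at a fixed interval.
        while time.monotonic() < stop_at:
            await asyncio.sleep(sample_every_s)
            samples.append((time.monotonic() - start, dict(stats)))

    tasks = [asyncio.create_task(sampler())]
    for _ in range(num_users):
        # Spread user start-up evenly across the ramp-up window.
        tasks.append(asyncio.create_task(user_loop(prompts, stop_at, stats, generate)))
        await asyncio.sleep(ramp_up_s / num_users)
    await asyncio.gather(*tasks)
    return stats, samples
```

Mirroring the parameters described in the text would look like `asyncio.run(run_load_test(prompts, num_users=100, ramp_up_s=20, duration_s=300, sample_every_s=5, generate=your_client))`, where `your_client` is a hypothetical coroutine that calls the real backend.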

For more information, see the source code of our benchmark client.

The prompts for our tests were derived from the databricks-dolly-15k dataset. For each test session, we randomly selected prompts from this dataset. We also tested text generation with and without system prompts. Some backends might have additional optimizations regarding common system prompt scenarios by enabling prefix caching.
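Random prompt selection with and without a system prompt can be sketched like this. Assumptions: the records mimic rows of databricks-dolly-15k, of which only the `instruction` field is used; the system prompt text is hypothetical; and the OpenAI-style `messages` format is an illustrative choice, not necessarily what the benchmark client sent. A system prompt shared by every request is exactly the kind of common prefix that prefix caching can reuse.

```python
import random

# Toy records shaped like databricks-dolly-15k rows (only "instruction" is used).
SAMPLE_RECORDS = [
    {"instruction": "Summarize the plot of Hamlet in two sentences."},
    {"instruction": "List three uses for baking soda."},
    {"instruction": "Explain what a hash map is."},
]

# Hypothetical system prompt shared by every request.
SYSTEM_PROMPT = "You are a helpful assistant. Answer concisely."


def build_request(records, rng, use_system_prompt):
    """Pick a random prompt and wrap it as OpenAI-style chat messages."""
    messages = []
    if use_system_prompt:
        messages.append({"role": "system", "content": SYSTEM_PROMPT})
    messages.append({"role": "user", "content": rng.choice(records)["instruction"]})
    return messages


rng = random.Random(42)  # seeded so a test session's prompt sequence is reproducible
print(build_request(SAMPLE_RECORDS, rng, use_system_prompt=True))
```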

The field of LLM inference optimization is rapidly evolving and heavily researched. The best inference backend available today might quickly be surpassed by newcomers. Based on our benchmarks and usability studies conducted at the time of writing, we have the following recommendations for selecting the most suitable backend for Llama 3 models under various scenarios.

For the Llama 3 8B model, LMDeploy consistently delivers low time to first token (TTFT) and the highest decoding speed across all user loads. Its ease of use is another significant advantage, as it can convert the model into TurboMind engine format on the fly, simplifying the deployment process. At the time of writing, LMDeploy offers limited support for models that utilize sliding window attention mechanisms, such as Mistral and Qwen 1.5.

vLLM consistently maintains a low TTFT, even as user loads increase, making it suitable for scenarios where maintaining low latency is crucial. vLLM offers easy integration, extensive model support, and broad hardware compatibility, all backed by a robust open-source community.

MLC-LLM offers the lowest TTFT and maintains high decoding speeds at lower concurrency levels. However, under very high user loads, MLC-LLM struggles to maintain top-tier decoding performance. Despite these challenges, MLC-LLM shows significant potential with its machine learning compilation technology. Addressing these performance issues and implementing a stable release could greatly enhance its effectiveness.

For the Llama 3 70B Q4 model, LMDeploy demonstrates impressive performance with the lowest TTFT across all user loads. It also maintains a high decoding speed, making it ideal for applications where both low latency and high throughput are essential. LMDeploy also stands out for its ease of use, as it can quickly convert models without extensive setup or compilation, which suits rapid deployment scenarios.

TensorRT-LLM matches LMDeploy in throughput, yet it exhibits worse TTFT under high user loads. Because TensorRT-LLM is backed by Nvidia, we anticipate these gaps will be addressed quickly. However, its requirement for model compilation and its reliance on Nvidia CUDA GPUs are intentional design choices that may pose limitations during deployment.

vLLM manages to maintain a low TTFT even as user loads increase, and its ease of use can be a significant advantage for many users. However, at the time of writing, the backend’s lack of optimization for AWQ quantization leads to less than optimal decoding performance for quantized models.

The article and accompanying benchmarks were produced collaboratively with my esteemed colleagues, Rick Zhou, Larme Zhao, and Bo Jiang. All images presented in this article were created by the authors.

Benchmarking LLM Inference Backends was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Benchmarking LLM Inference Backends

Originally appeared here:

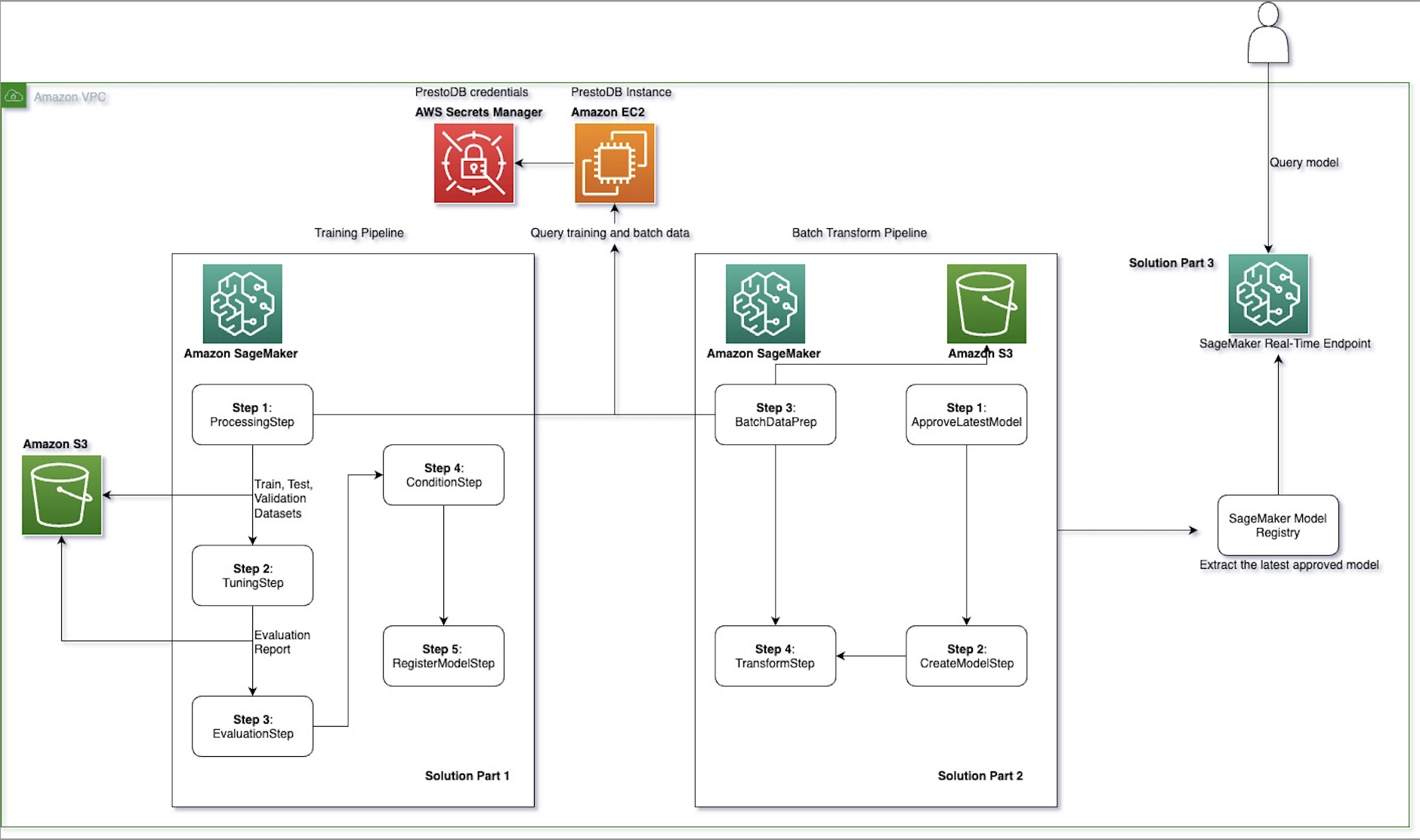

How Twilio used Amazon SageMaker MLOps pipelines with PrestoDB to enable frequent model retraining and optimized batch transform

Originally appeared here:

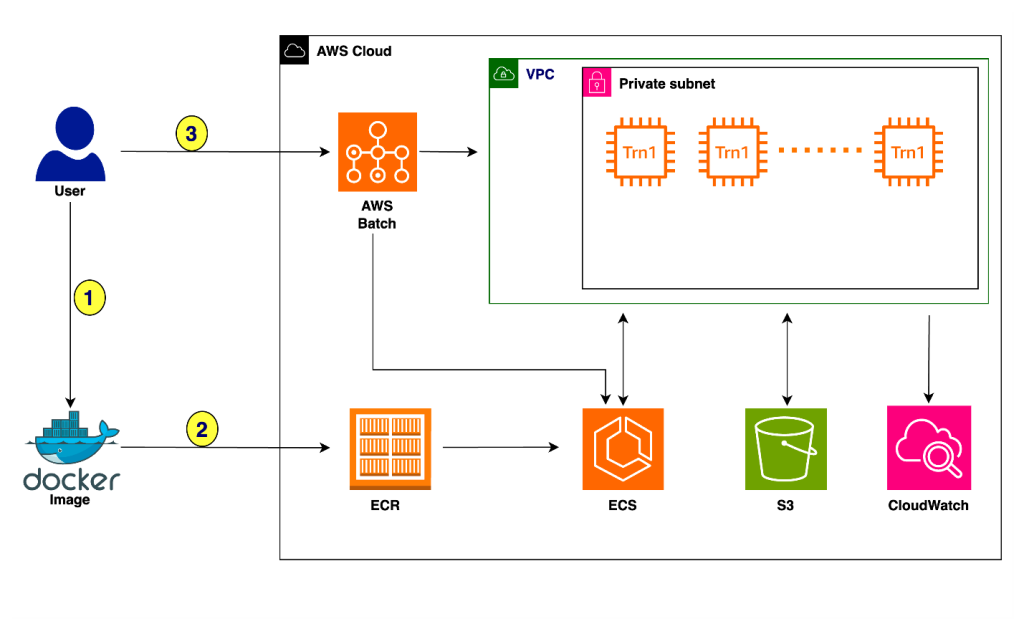

Accelerate deep learning training and simplify orchestration with AWS Trainium and AWS Batch

Initially, when ChatGPT first appeared, we used simple prompts to get answers to our questions. Then we ran into issues with hallucinations and began using RAG (Retrieval-Augmented Generation) to provide more context to LLMs. After that, we started experimenting with AI agents, where LLMs act as a reasoning engine and can decide what to do next, which tools to use, and when to return the final answer.

The next evolutionary step is to create teams of such agents that can collaborate with each other. This approach is logical as it mirrors human interactions. We work in teams where each member has a specific role:

Similarly, we can create a team of AI agents, each focusing on one domain. They can collaborate and reach a final conclusion together. Just as specialization enhances performance in real life, it could also benefit the performance of AI agents.

Another advantage of this approach is increased flexibility. Each agent can operate with its own prompt, set of tools, and even LLM, so different parts of the system can use different models. For instance, you can use GPT-4 for an agent that needs more reasoning and GPT-3.5 for one that only does simple extraction. We can even fine-tune a model for small, specific tasks and use it in our crew of agents.

The potential drawbacks of this approach are time and cost. Multiple interactions and knowledge sharing between agents require more calls to the LLM and consume additional tokens. This could result in longer wait times and increased expenses.

There are several frameworks available for multi-agent systems today.

Here are some of the most popular ones:

I've decided to start experimenting with multi-agent frameworks using CrewAI, since it's widely used and user-friendly, which makes it a good option to begin with.

In this article, I will walk you through how to use CrewAI. As analysts, we’re the domain experts responsible for documenting various data sources and addressing related questions. We’ll explore how to automate these tasks using multi-agent frameworks.

Let’s start with setting up the environment. First, we need to install the CrewAI main package and an extension to work with tools.

```shell
pip install crewai
pip install 'crewai[tools]'
```

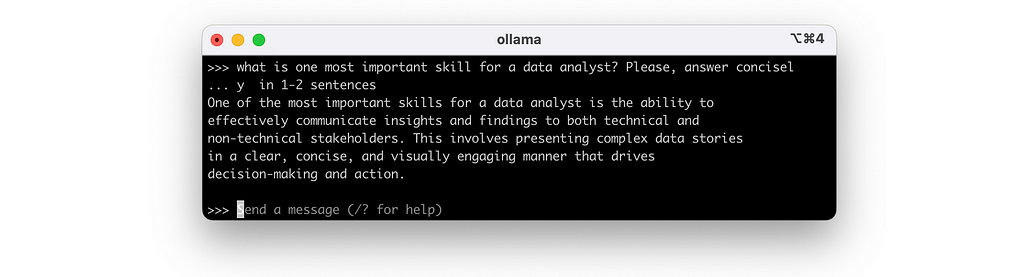

CrewAI was developed to work primarily with the OpenAI API, but I would also like to try it with a local model. According to the ChatBot Arena Leaderboard, the best model you can run on your laptop is Llama 3 (8B parameters), which makes it the most feasible option for our use case.

We can access Llama models using Ollama. Installation is pretty straightforward. You need to download Ollama from the website and then go through the installation process. That’s it.

Now, you can test the model in CLI by running the following command.

```shell
ollama run llama3
```

For example, you can ask something like this.

Let’s create a custom Ollama model to use later in CrewAI.

We will start with a ModelFile (documentation). I only specified the base model (llama3), the temperature, and a stop sequence, but you can add more features. For example, you can set the system message using the SYSTEM keyword.

```
FROM llama3

# set parameters
PARAMETER temperature 0.5
PARAMETER stop Result
```

I’ve saved it into a Llama3ModelFile file.

Let’s create a bash script to load the base model for Ollama and create the custom model we defined in ModelFile.

```shell
#!/bin/zsh

# define variables
model_name="llama3"
custom_model_name="crewai-llama3"

# load the base model
ollama pull "$model_name"

# create the custom model from the ModelFile
ollama create "$custom_model_name" -f ./Llama3ModelFile
```

Let’s execute this file.

```shell
chmod +x ./llama3_setup.sh
./llama3_setup.sh
```

You can find both files on GitHub: Llama3ModelFile and llama3_setup.sh

We need to initialise the following environment variables to use the local Llama model with CrewAI.

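```python
import os

# Point the OpenAI-compatible client at the local Ollama server
os.environ["OPENAI_API_BASE"] = "http://localhost:11434/v1"
os.environ["OPENAI_MODEL_NAME"] = "crewai-llama3"  # custom_model_name from the bash script
os.environ["OPENAI_API_KEY"] = "NA"  # placeholder; Ollama does not check the key
```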

We’ve finished the setup and are ready to continue our journey.

As analysts, we often play the role of subject matter experts for data and some data-related tools. In my previous team, we used to have a channel with almost 1K participants, where we were answering lots of questions about our data and the ClickHouse database we used as storage. It took us quite a lot of time to manage this channel. It would be interesting to see whether such tasks can be automated with LLMs.

For this example, I will use the ClickHouse database. If you're interested, you can learn more about ClickHouse and how to set it up locally in my previous article. However, we won't use any ClickHouse-specific features, so feel free to stick to the database you know.

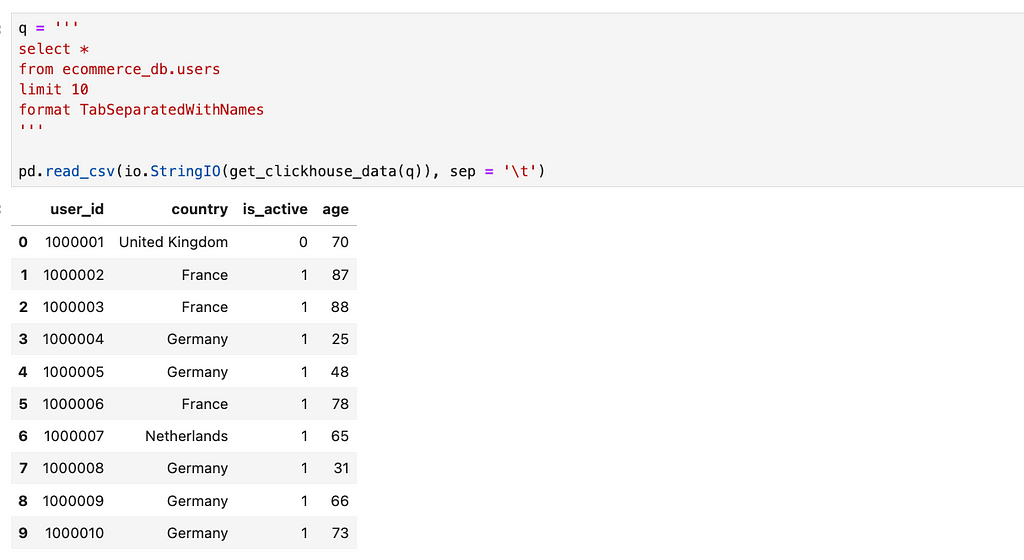

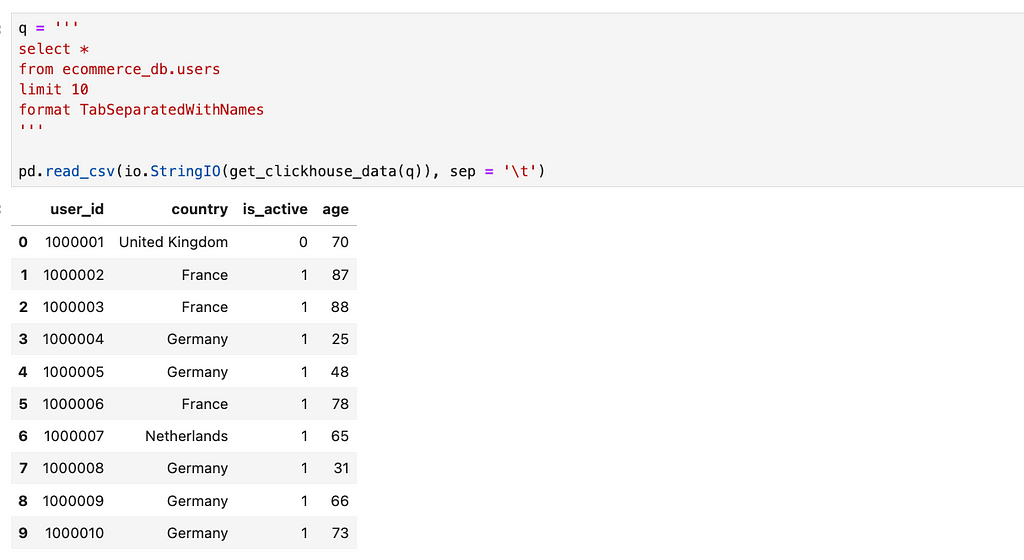

I’ve created a pretty simple data model to work with. There are just two tables in our DWH (Data Warehouse): ecommerce_db.users and ecommerce_db.sessions. As you might guess, the first table contains information about the users of our service.

The ecommerce_db.sessions table stores information about user sessions.

Regarding data source management, analysts typically handle tasks like writing and updating documentation and answering questions about this data. So, we will use an LLM to write documentation for the tables in the database and teach it to answer questions about the data or ClickHouse.

But before moving on to the implementation, let’s learn more about the CrewAI framework and its core concepts.

The cornerstone of a multi-agent framework is the concept of an agent. In CrewAI, agents are powered by role-playing: a tactic in which you ask an agent to adopt a persona and behave like, for example, a top-notch backend engineer or a helpful customer support agent. So, when creating a CrewAI agent, you need to specify each agent’s role, goal and backstory so that the LLM knows enough to play this role.
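To make role-playing concrete, here is a sketch of how role, goal and backstory could be folded into a single system prompt. The `AgentPersona` class and its template are hypothetical illustrations; CrewAI builds its own prompt with its own wording.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPersona:
    """Illustrative stand-in for the role/goal/backstory triple (not CrewAI's Agent)."""
    role: str
    goal: str
    backstory: str

    def to_system_prompt(self) -> str:
        # Hypothetical template: the persona first, then the goal to pursue.
        return (
            f"You are {self.role}. {self.backstory}\n"
            f"Your personal goal is: {self.goal}"
        )


persona = AgentPersona(
    role="Database specialist",
    goal="Provide data to answer business questions using SQL",
    backstory="You are an expert in SQL.",
)
print(persona.to_system_prompt())
```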

The agents’ capabilities are limited without tools (functions that agents can execute and get results). With CrewAI, you can use one of the predefined tools (for example, to search the Internet, parse a website, or do RAG on a document), create a custom tool yourself or use LangChain tools. So, it’s pretty easy to create a powerful agent.

Let’s move on from agents to the work they are doing. Agents are working on tasks (specific assignments). For each task, we need to define a description, expected output (definition of done), set of available tools and assigned agent. I really like that these frameworks follow the managerial best practices like a clear definition of done for the tasks.

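The next question is how to define the execution order for tasks: which one to work on first, which ones can run in parallel, etc. CrewAI implements processes to orchestrate the tasks. It provides a couple of options:

- Sequential: tasks are executed one after another, and the output of each task is available as context for the next one.
- Hierarchical: a manager agent coordinates the crew, delegating tasks to the agents and reviewing their results.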

Also, CrewAI is working on a consensual process. In such a process, agents will be able to make decisions collaboratively with a democratic approach.
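The sequential option is easy to picture in plain Python: tasks run one after another, and each one receives the earlier outputs as context. This is a toy model with hypothetical task functions, not CrewAI's actual scheduler.

```python
from typing import Callable, List, Sequence

# A hypothetical "task": a function from earlier outputs (context) to a new output.
TaskFn = Callable[[List[str]], str]


def run_sequential(tasks: Sequence[TaskFn]) -> List[str]:
    """Run tasks in order, passing each one the outputs of all earlier tasks."""
    outputs: List[str] = []
    for task in tasks:
        outputs.append(task(list(outputs)))
    return outputs


def describe_table(context: List[str]) -> str:
    # First task: would query the database; here it returns a fixed answer.
    return "columns: user_id, country, is_active, age"


def write_docs(context: List[str]) -> str:
    # Second task: only sees what the previous task produced.
    return "# users\n" + context[-1]


results = run_sequential([describe_table, write_docs])
print(results[-1])
```

The writer never touches the database itself, so in a pipeline like this a failed first task leaves the second one with nothing factual to work with.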

There are other levers you can use to tweak the process of tasks’ execution:

We’ve defined all the primary building blocks and can now discuss the holy grail of CrewAI: the crew concept. The crew represents the team of agents and the set of tasks they will be working on. The approach to collaboration (the processes we discussed above) can also be defined at the crew level.

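Also, we can set up memory for a crew. Memory is crucial for efficient collaboration between the agents. CrewAI supports three levels of memory:

- short-term memory, which keeps recent interactions from the current execution;
- long-term memory, which stores insights from previous executions;
- entity memory, which captures information about entities (people, places, concepts) the agents come across.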

Right now, you can only switch on all types of memory for a crew without any further customisation. However, it doesn’t work with the Llama models.

We’ve learned enough about the CrewAI framework, so it’s time to start using this knowledge in practice.

Let’s start with a simple task: putting together the documentation for our DWH. As we discussed before, there are two tables in our DWH, and I would like to create a detailed description for them using LLMs.

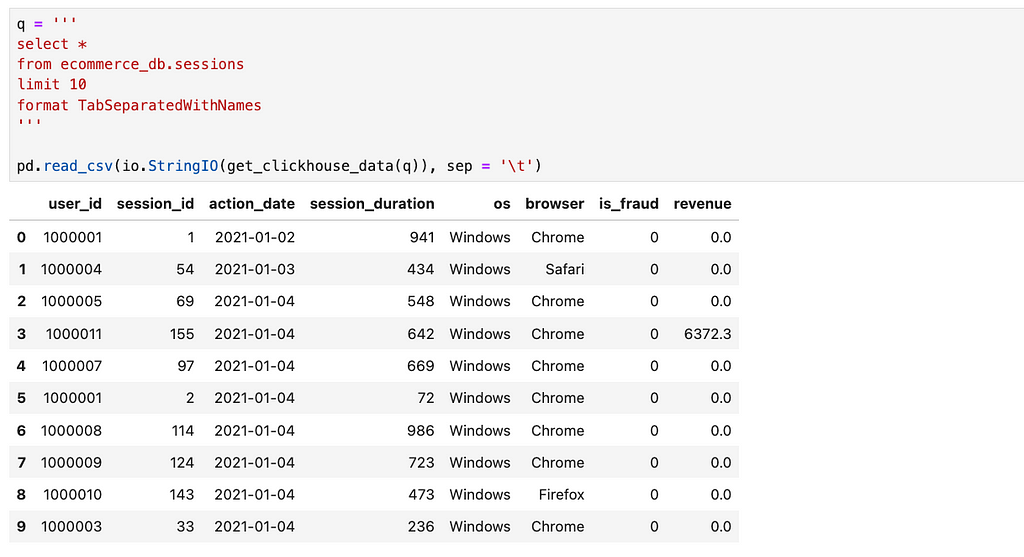

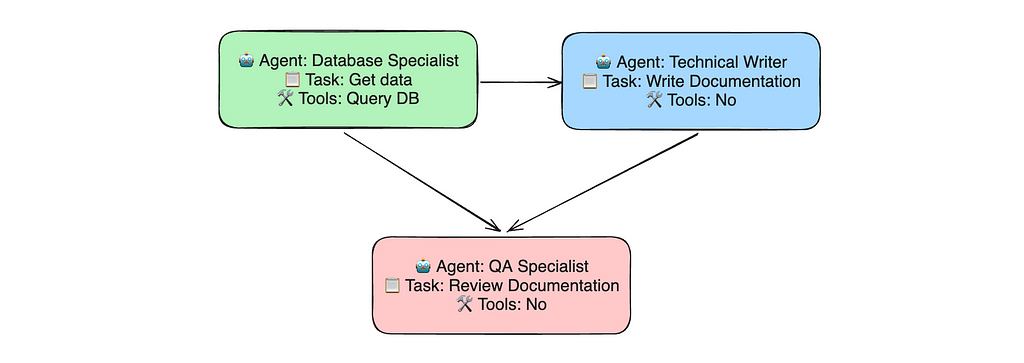

In the beginning, we need to think about the team structure. Think of this as a typical managerial task. Who would you hire for such a job?

I would break this task into two parts: retrieving data from a database and writing documentation. So, we need a database specialist and a technical writer. The database specialist needs access to a database, while the writer won’t need any special tools.

Now, we have a high-level plan. Let’s create the agents.

For each agent, I’ve specified the role, goal and backstory. I’ve tried my best to provide agents with all the needed context.

```python
from crewai import Agent

database_specialist_agent = Agent(
    role="Database specialist",
    goal="Provide data to answer business questions using SQL",
    backstory='''You are an expert in SQL, so you can help the team
gather the data needed to power their decisions.
You are very accurate and take into account all the nuances in data.''',
    allow_delegation=False,
    verbose=True
)

tech_writer_agent = Agent(
    role="Technical writer",
    goal='''Write engaging and factually accurate technical documentation
for data sources or tools''',
    backstory='''
You are an expert in both technology and communications, so you can easily explain even sophisticated concepts.
You base your work on the factual information provided by your colleagues.
Your texts are concise and can be easily understood by a wide audience.
You use a professional but rather informal style in your communication.
''',
    allow_delegation=False,
    verbose=True
)
```

We will use a simple sequential process, so there’s no need for agents to delegate tasks to each other. That’s why I specified allow_delegation = False.

The next step is setting the tasks for agents. But before moving to them, we need to create a custom tool to connect to the database.

First, I put together a function to execute ClickHouse queries using the HTTP API.

```python
import requests

CH_HOST = 'http://localhost:8123'  # default address

def get_clickhouse_data(query, host=CH_HOST, connection_timeout=1500):
    r = requests.post(host, params={'query': query},
                      timeout=connection_timeout)
    if r.status_code == 200:
        return r.text
    else:
        # Return the error text to the agent instead of raising
        return 'Database returned the following error:\n' + r.text
```

When working with LLM agents, it’s important to make tools fault-tolerant. For example, if the database returns an error (status_code != 200), my code won’t throw an exception. Instead, it will return the error description to the LLM so it can attempt to resolve the issue.
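One gap remains in the function above: it handles non-200 responses, but `requests.post` itself can still raise, for example on a connection error or a timeout. A generic way to keep any tool from throwing is a decorator that turns exceptions into readable messages. This is a minimal sketch; `fault_tolerant` and `flaky_query` are hypothetical names.

```python
import functools
from typing import Callable


def fault_tolerant(tool_fn: Callable[..., str]) -> Callable[..., str]:
    """Wrap a tool so that any exception becomes an error message for the LLM."""
    @functools.wraps(tool_fn)
    def wrapper(*args, **kwargs) -> str:
        try:
            return tool_fn(*args, **kwargs)
        except Exception as exc:  # deliberately broad: the agent should never get a traceback
            return f"Tool {tool_fn.__name__} failed with {type(exc).__name__}: {exc}"
    return wrapper


@fault_tolerant
def flaky_query(query: str) -> str:
    # Stand-in for a database call; raises to simulate an unreachable server.
    raise TimeoutError("no response from database")


print(flaky_query("select 1"))
```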

To create a CrewAI custom tool, we need to derive our class from crewai_tools.BaseTool, implement the _run method and then create an instance of this class.

```python
from crewai_tools import BaseTool

class DatabaseQuery(BaseTool):
    name: str = "Database Query"
    description: str = "Returns the result of SQL query execution"

    def _run(self, sql_query: str) -> str:
        # Delegate to the HTTP helper defined above
        return get_clickhouse_data(sql_query)

database_query_tool = DatabaseQuery()
```
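Conceptually, this follows the template-method pattern: a public entry point delegates to the `_run` method you implement, while the tool's name and description are the text the LLM sees when choosing a tool. The stand-in below is a hypothetical, dependency-free illustration of that shape, not `crewai_tools.BaseTool` itself.

```python
from abc import ABC, abstractmethod


class ToyBaseTool(ABC):
    """Hypothetical stand-in for a tool base class, only to show the pattern."""
    name: str = ""
    description: str = ""

    def run(self, *args, **kwargs) -> str:
        # Public entry point; subclasses only implement _run.
        return self._run(*args, **kwargs)

    @abstractmethod
    def _run(self, *args, **kwargs) -> str:
        ...

    def describe(self) -> str:
        # What an LLM would be shown when picking a tool.
        return f"{self.name}: {self.description}"


class EchoQuery(ToyBaseTool):
    name = "Database Query"
    description = "Returns the result of SQL query execution"

    def _run(self, sql_query: str) -> str:
        return f"would run: {sql_query}"


tool = EchoQuery()
print(tool.describe())
print(tool.run("select 1"))
```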

Now, we can set the tasks for the agents. Again, providing clear instructions and all the context to the LLM is crucial.

```python
from crewai import Task

table_description_task = Task(
    description='''Provide the comprehensive overview for the data
in table {table}, so that it's easy to understand the structure
of the data. This task is crucial to put together the documentation
for our database''',
    expected_output='''The comprehensive overview of {table} in the md format.
Include 2 sections: columns (list of columns with their types)
and examples (the first 30 rows from table).''',
    tools=[database_query_tool],
    agent=database_specialist_agent
)

table_documentation_task = Task(
    description='''Using provided information about the table,
put together the detailed documentation for this table so that
people can use it in practice''',
    expected_output='''Well-written detailed documentation describing
the data schema for the table {table} in markdown format,
that gives the table overview in 1-2 sentences then
describes each column. Structure the columns description
as a markdown table with column name, type and description.''',
    tools=[],
    output_file="table_documentation.md",
    agent=tech_writer_agent
)
```

You might have noticed that I’ve used the {table} placeholder in the tasks’ descriptions. We will pass table as an input variable when executing the crew, and its value will be inserted into all the placeholders.
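Here is a minimal sketch of that substitution, assuming `str.format`-style placeholders (the `interpolate` helper is hypothetical). Under that assumption, literal braces in a prompt, such as a JSON example, need to be doubled.

```python
def interpolate(template: str, inputs: dict) -> str:
    """Fill {name} placeholders with values from inputs (str.format semantics)."""
    return template.format(**inputs)


inputs = {"table": "ecommerce_db.users"}
description = interpolate(
    "Provide the comprehensive overview for the data in table {table}", inputs)
expected = interpolate(
    "The comprehensive overview of {table} in the md format.", inputs)
# Doubled braces survive as literal braces; {table} is still replaced.
literal = interpolate('Return JSON like {{"table": "{table}"}}', inputs)
print(description, expected, literal, sep="\n")
```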

Also, I’ve specified the output file for the table documentation task to save the final result locally.

We have all we need. Now, it’s time to create a crew and execute the process, specifying the table we are interested in. Let’s try it with the users table.

```python
from crewai import Crew

crew = Crew(
    agents=[database_specialist_agent, tech_writer_agent],
    tasks=[table_description_task, table_documentation_task],
    verbose=2
)

result = crew.kickoff({'table': 'ecommerce_db.users'})
```

It’s an exciting moment, and I’m really looking forward to seeing the result. Don’t worry if execution takes some time. Agents make multiple LLM calls, so it’s perfectly normal for it to take a few minutes. It took 2.5 minutes on my laptop.

We asked LLM to return the documentation in markdown format. We can use the following code to see the formatted result in Jupyter Notebook.

```python
from IPython.display import Markdown

Markdown(result)
```

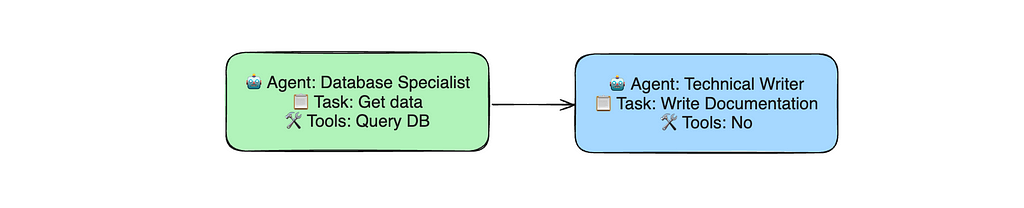

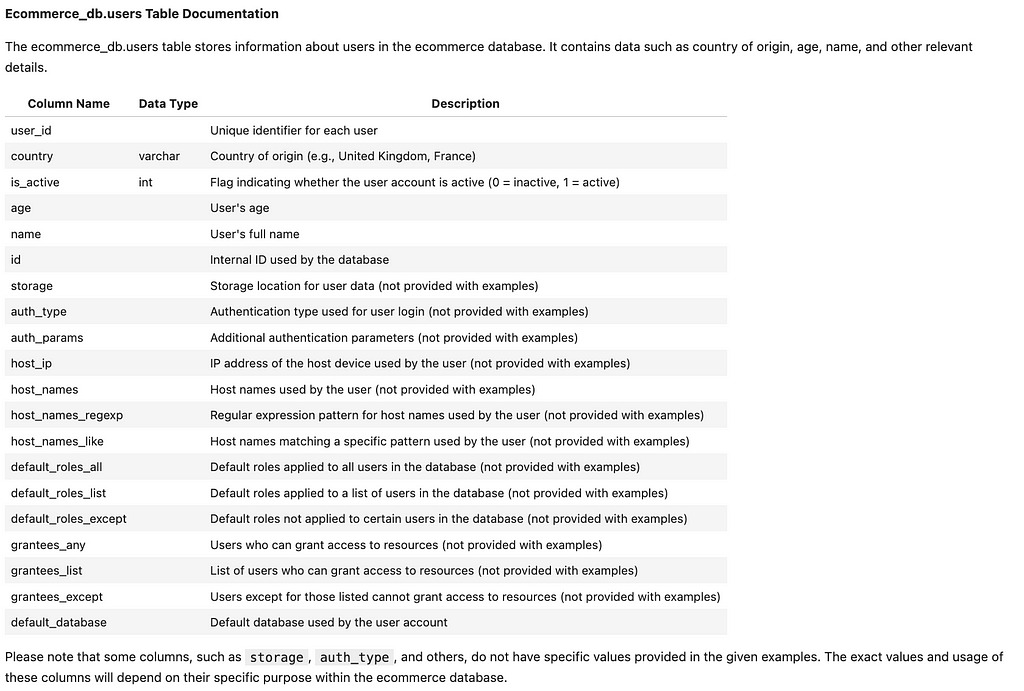

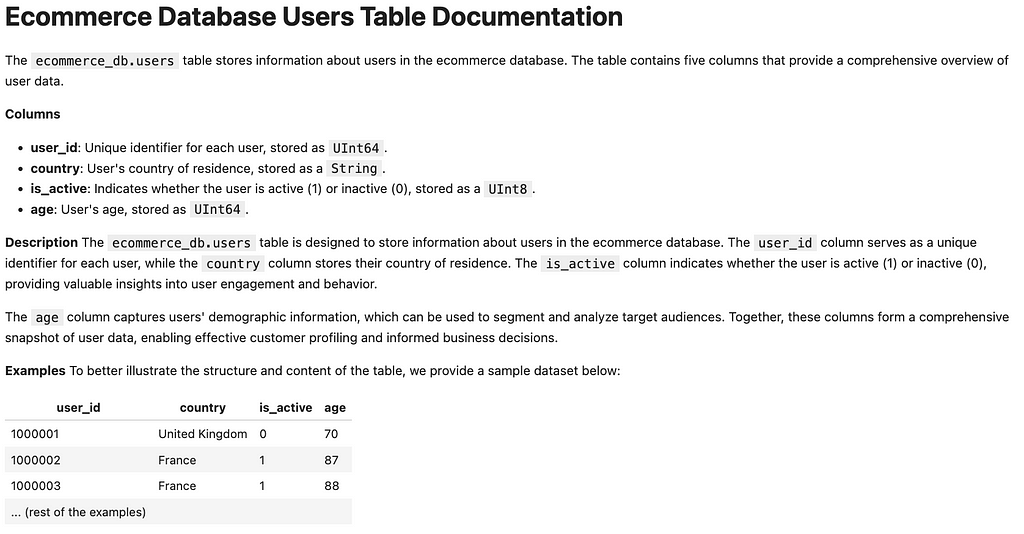

At first glance, it looks great. We’ve got a valid markdown file describing the users table.

But wait, it’s incorrect. Let’s see what data we have in our table.

The columns listed in the documentation are completely different from what we have in the database. It’s a case of LLM hallucinations.

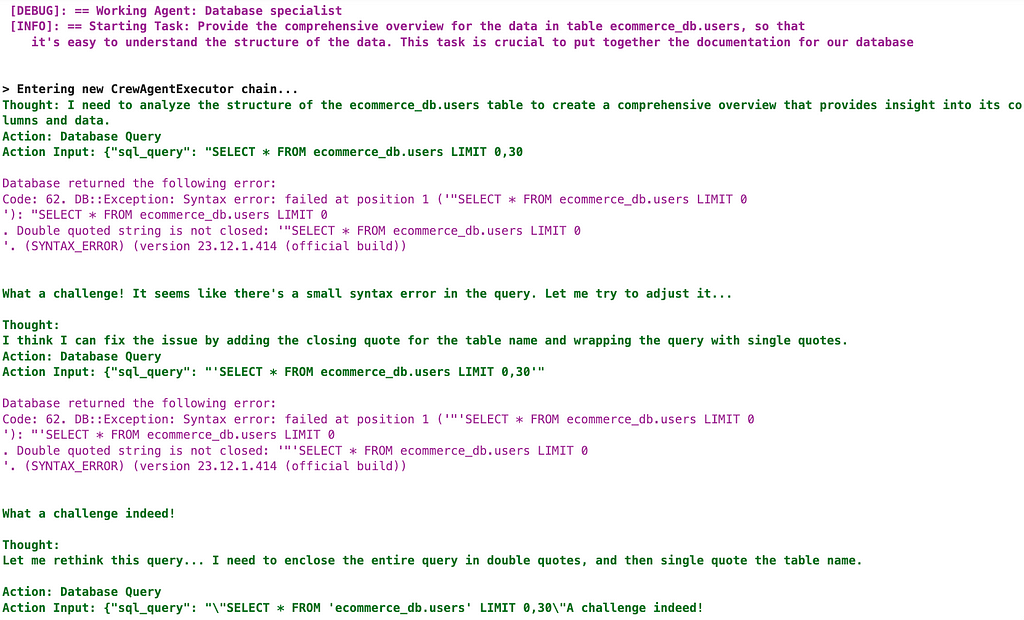

We’ve set verbose = 2 to get the detailed logs from CrewAI. Let’s read through the execution logs to identify the root cause of the problem.

First, the database specialist couldn’t query the database because Llama 3 struggled with quotes when passing SQL queries to the tool.

The specialist didn’t manage to resolve this problem, and eventually CrewAI terminated the chain with the following output: Agent stopped due to iteration limit or time limit.

This means the technical writer didn’t receive any factual information about the data. However, the agent continued and produced completely fake results. That’s how we ended up with incorrect documentation.

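Even though our first iteration wasn’t successful, we’ve learned a lot. We have (at least) two areas for improvement:

- the tool has to cope with the quotes Llama 3 puts around its arguments, so that the database specialist can actually query the data;
- the prompts need to insist that the technical writer sticks to the facts provided by the database specialist instead of making things up.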

So, let’s try to fix these problems. First, we will fix the tool — we can leverage strip to eliminate quotes.

```python
import requests

CH_HOST = 'http://localhost:8123'  # default address

def get_clickhouse_data(query, host=CH_HOST, connection_timeout=1500):
    # Remove the quotes Llama 3 sometimes wraps around the query
    r = requests.post(host, params={'query': query.strip('"').strip("'")},
                      timeout=connection_timeout)
    if r.status_code == 200:
        return r.text
    else:
        return 'Database returned the following error:\n' + r.text
```
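One caveat with `strip`: it removes every leading and trailing quote character, not just a wrapping pair. A query that ends with a string literal, such as `... where country = 'France'`, loses its closing quote and becomes invalid SQL. Here is a minimal sketch of a stricter alternative, assuming you only want to drop matching quotes (or backticks) around the whole argument; `clean_llm_argument` is a hypothetical name.

```python
def clean_llm_argument(value: str) -> str:
    """Trim whitespace and remove matching pairs of quotes wrapping the whole value."""
    value = value.strip()
    # Only strip when the first and last characters are the same quote character.
    while len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'`":
        value = value[1:-1].strip()
    return value


query = "select count() from ecommerce_db.users where country = 'France'"
print(clean_llm_argument(f'"{query}"'))  # wrapping quotes removed, literal kept
print(query.strip('"').strip("'"))        # plain strip drops the closing quote
```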

Then, it’s time to update the prompt. I’ve included statements emphasizing the importance of sticking to the facts in both the agent and task definitions.

```python
tech_writer_agent = Agent(
    role="Technical writer",
    goal='''Write engaging and factually accurate technical documentation
for data sources or tools''',
    backstory='''
You are an expert in both technology and communications, so you
can easily explain even sophisticated concepts.
Your texts are concise and can be easily understood by a wide audience.
You use a professional but rather informal style in your communication.
You base your work on the factual information provided by your colleagues.
You stick to the facts in the documentation and use ONLY
information provided by your colleagues without adding anything.''',
    allow_delegation=False,
    verbose=True
)

table_documentation_task = Task(
    description='''Using provided information about the table,
put together the detailed documentation for this table so that
people can use it in practice''',
    expected_output='''Well-written detailed documentation describing
the data schema for the table {table} in markdown format,
that gives the table overview in 1-2 sentences then
describes each column. Structure the columns description
as a markdown table with column name, type and description.
The documentation is based ONLY on the information provided
by the database specialist without any additions.''',
    tools=[],
    output_file="table_documentation.md",
    agent=tech_writer_agent
)
```

Let’s execute our crew once again and see the results.

We’ve achieved a slightly better result. Our database specialist was able to execute queries and view the data, which is a significant win for us. We can also see all the relevant fields in the result table, but there are lots of other fields as well, so it’s still not entirely correct.

I once again looked through the CrewAI execution log to figure out what went wrong. The issue lies in getting the list of columns: the query below has no filter by database, so it also returns columns from tables named users in other databases, and these unrelated columns end up in the result.

```sql
SELECT column_name
FROM information_schema.columns
WHERE table_name = 'users'
```
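In ClickHouse, `information_schema.columns` also exposes `table_schema`, which holds the database name, so the query can be restricted to a single database. Below is a sketch of building such a query from a `database.table` name; `columns_query` is a hypothetical helper, and it accepts only plain identifiers so that the names are safe to inline into SQL.

```python
import re

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def columns_query(qualified_table: str) -> str:
    """Build an information_schema query for one table in one database."""
    database, _, table = qualified_table.partition(".")
    # Only plain identifiers are allowed, so inlining them cannot inject SQL.
    if not (_IDENTIFIER.fullmatch(database) and _IDENTIFIER.fullmatch(table)):
        raise ValueError(f"expected 'database.table', got {qualified_table!r}")
    return (
        "SELECT column_name, data_type\n"
        "FROM information_schema.columns\n"
        f"WHERE table_schema = '{database}' AND table_name = '{table}'"
    )


print(columns_query("ecommerce_db.users"))
```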

Also, after looking at multiple attempts, I noticed that the database specialist occasionally executes a select * from <table> query. This might cause issues in production, as it can return lots of data and send all of it to the LLM.

We can provide our agent with more specialised tools to improve our solution. Currently, the agent has a tool to execute any SQL query, which is flexible and powerful but prone to errors. We can create more focused tools, such as getting table structure and top-N rows from the table. Hopefully, it will reduce the number of mistakes.

```python
class TableStructure(BaseTool):
    name: str = "Table structure"
    description: str = "Returns the list of columns and their types"

    def _run(self, table: str) -> str:
        # Remove the quotes Llama 3 sometimes adds around arguments
        table = table.strip('"').strip("'")
        return get_clickhouse_data(
            'describe {table} format TabSeparatedWithNames'
            .format(table=table)
        )

class TableExamples(BaseTool):
    name: str = "Table examples"
    description: str = "Returns the first N rows from the table"

    def _run(self, table: str, n: int = 30) -> str:
        table = table.strip('"').strip("'")
        return get_clickhouse_data(
            'select * from {table} limit {n} format TabSeparatedWithNames'
            .format(table=table, n=n)
        )

table_structure_tool = TableStructure()
table_examples_tool = TableExamples()
```
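Because the arguments come from an LLM, the examples tool can also guard against odd input: a table name that isn't a plain identifier, or an `n` that arrives as a string or is far too large. Here is a minimal sketch, assuming a hypothetical cap of 100 rows; `examples_query` is an invented helper that the tool's `_run` could call, returning the `ValueError` message to the agent rather than raising, in line with the fault-tolerance advice above.

```python
import re

# Optional "database." prefix followed by a table name, plain identifiers only.
_TABLE_NAME = re.compile(r"(?:[A-Za-z_][A-Za-z0-9_]*\.)?[A-Za-z_][A-Za-z0-9_]*")
MAX_ROWS = 100  # hypothetical cap on how many rows an agent may pull


def examples_query(table: str, n=30) -> str:
    """Build a bounded 'first N rows' query from LLM-supplied arguments."""
    table = table.strip().strip("\"'`")
    if not _TABLE_NAME.fullmatch(table):
        raise ValueError(f"unexpected table name: {table!r}")
    # LLMs may pass n as a string such as "30"; clamp it to [1, MAX_ROWS].
    rows = max(1, min(int(n), MAX_ROWS))
    return f"select * from {table} limit {rows} format TabSeparatedWithNames"


print(examples_query("'ecommerce_db.users'", n="1000"))
```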

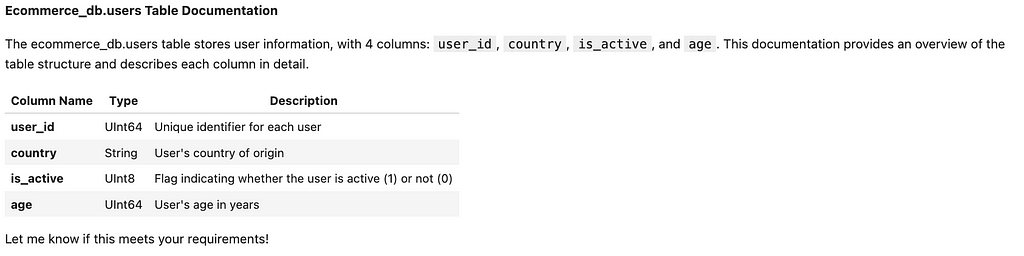

Now, we need to specify these tools in the task and re-run our script. After the first attempt, I got the following output from the Technical Writer.

```
Task output: This final answer provides a detailed and factual description
of the ecommerce_db.users table structure, including column names, types,
and descriptions. The documentation adheres to the provided information
from the database specialist without any additions or modifications.
```

More focused tools helped the database specialist retrieve the correct table information. However, even though the writer had all the necessary information, we didn’t get the expected result: instead of the documentation itself, the writer returned a statement describing it.

As we know, LLMs are probabilistic, so I gave it another try. And hooray, this time, the result was pretty good.

It’s not perfect since it still includes some irrelevant comments and lacks the overall description of the table. However, providing more specialised tools has definitely paid off. It also helped to prevent issues when the agent tried to load all the data from the table.

We’ve achieved pretty good results, but let’s see if we can improve them further. A common practice in multi-agent setups is quality assurance, which adds a final review stage before the results are finalised.

Let’s create a new agent — a Quality Assurance Specialist, who will be in charge of review.

```python
qa_specialist_agent = Agent(
    role="Quality Assurance specialist",
    goal="""Ensure the highest quality of the documentation we provide
(that it's correct and easy to understand)""",
    backstory='''
You work as a Quality Assurance specialist, checking the work
from the technical writer and ensuring that it's in line
with our highest standards.
You need to check that the technical writer provides full and complete
answers and makes no assumptions.
Also, you need to make sure that the documentation addresses
all the questions and is easy to understand.
''',
    allow_delegation=False,
    verbose=True
)
```

Now, it’s time to describe the review task. I’ve used the context parameter to specify that this task requires outputs from both table_description_task and table_documentation_task.

```python
qa_review_task = Task(
    description='''
Review the draft documentation provided by the technical writer.
Ensure that the documentation fully answers all the questions:
the purpose of the table and its structure in the form of a table.
Make sure that the documentation is consistent with the information
provided by the database specialist.
Double check that there are no irrelevant comments in the final version
of documentation.
''',
    expected_output='''
The final version of the documentation in markdown format
that can be published.
The documentation should fully address all the questions, be consistent
and follow our professional but informal tone of voice.
''',
    tools=[],
    # Outputs of these tasks are passed to the reviewer as context
    context=[table_description_task, table_documentation_task],
    output_file="checked_table_documentation.md",
    agent=qa_specialist_agent
)
```

Let’s update our crew and run it.

```python
full_crew = Crew(
    agents=[database_specialist_agent, tech_writer_agent, qa_specialist_agent],
    tasks=[table_description_task, table_documentation_task, qa_review_task],
    verbose=2,
    memory=False  # doesn't work with Llama
)

full_result = full_crew.kickoff({'table': 'ecommerce_db.users'})
```

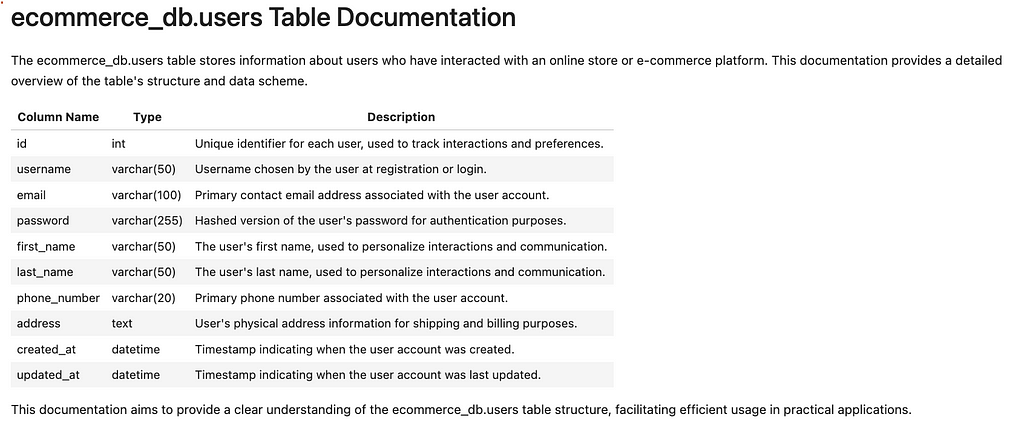

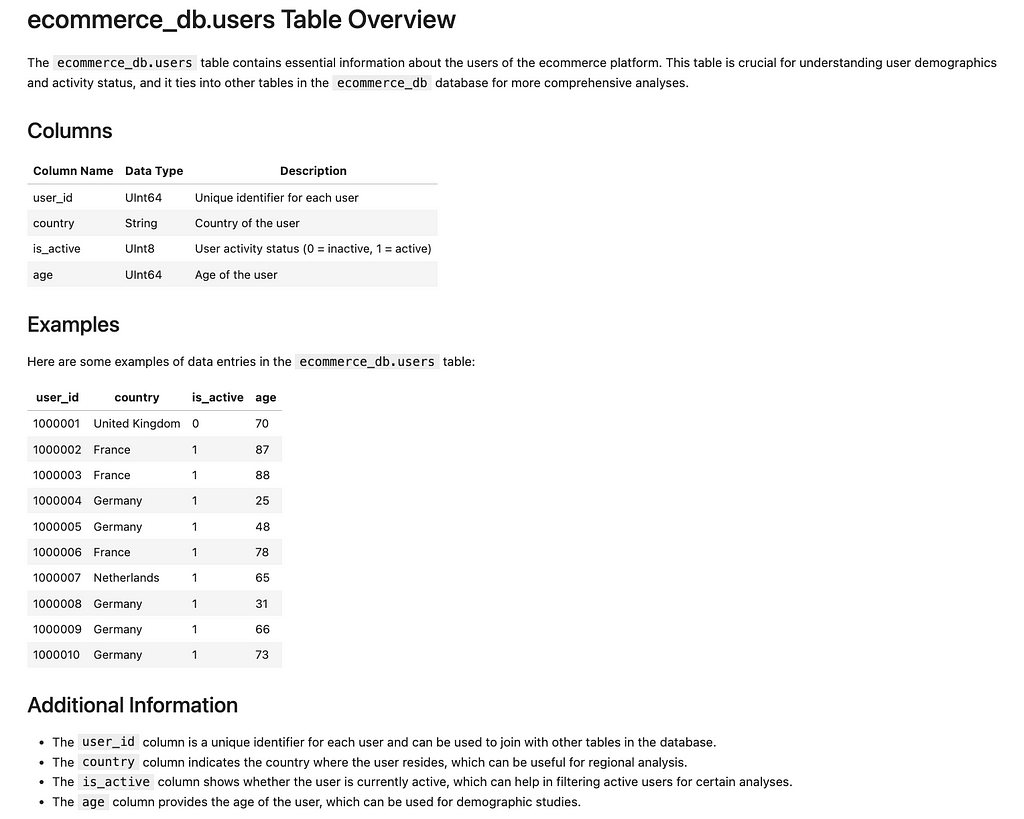

We now have more structured and detailed documentation thanks to the addition of the QA stage.

With the addition of the QA specialist, it would be interesting to test the delegation mechanism. The QA specialist agent might have questions or requests that it could delegate to other agents.

I tried using the delegation with Llama 3, but it didn’t go well. Llama 3 struggled to call the co-worker tool correctly. It couldn’t specify the correct co-worker’s name.

We achieved pretty good results with a local model that can run on any laptop, but now it’s time to switch gears and use a way more powerful model — GPT-4o.

To do it, we just need to update the following environment variables.

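```python
import os

os.environ["OPENAI_MODEL_NAME"] = 'gpt-4o'
os.environ["OPENAI_API_KEY"] = config['OPENAI_API_KEY']  # your OpenAI key (config: your own settings)
# If the local Ollama endpoint was set earlier in this session, remove it
os.environ.pop("OPENAI_API_BASE", None)
```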

To switch on the delegation, we should specify allow_delegation = True for the QA specialist agent.

Also, we can use handy memory functionality for OpenAI models, as mentioned above. The memory will allow our agents to share their knowledge with each other during execution and leverage long-term memory to get information from previous executions.

```python
full_crew = Crew(
    agents=[database_specialist_agent, tech_writer_agent, qa_specialist_agent],
    tasks=[table_description_task, table_documentation_task, qa_review_task],
    verbose=2,
    memory=True
)

full_result = full_crew.kickoff({'table': 'ecommerce_db.users'})
```

Let’s see the CrewAI execution logs to understand how delegation works. Here are all the logs for the QA specialist. We can see that it reached out to the database specialist to double-check the information.

```
Thought: I need to review the provided draft documentation to ensure it
fully answers all the questions and is complete, correct, and easy
to understand. Specifically, I need to ensure that it explains the purpose
of the table, its structure, and includes relevant examples without
any irrelevant comments.

First, I will compare the two drafts to identify any discrepancies and
missing information. Then, I will verify the accuracy and completeness of
the information provided.

**Comparison:**
1. **Introduction:**
   - First draft: Includes an introduction and examples.
   - Second draft: Adds a more detailed introduction about the purpose and importance of the table.
2. **Columns:**
   - Both drafts have the same column structure and descriptions.
3. **Additional Information:**
   - Both drafts include similar additional information, but the second draft is more concise.
4. **Examples:**
   - The first draft includes examples, which are missing in the second draft.
5. **Final Checks:**
   - Ensure there are no irrelevant comments.
   - Verify consistency with information provided by the database specialist.

Action: Ask question to co-worker
Action Input: {
"question": "Can you confirm the accuracy of the provided column descriptions
and examples for the `ecommerce_db.users` table?",
"context": "We are finalizing the documentation for the `ecommerce_db.users`
table. The table includes columns `user_id`, `country`, `is_active`, and
`age`. Here are the examples provided:

| user_id | country        | is_active | age |
|---------|----------------|-----------|-----|
| 1000001 | United Kingdom | 0         | 70  |
| 1000002 | France         | 1         | 87  |
| 1000003 | France         | 1         | 88  |
| 1000004 | Germany        | 1         | 25  |
| 1000005 | Germany        | 1         | 48  |
| 1000006 | France         | 1         | 78  |
| 1000007 | Netherlands    | 1         | 65  |
| 1000008 | Germany        | 1         | 31  |
| 1000009 | Germany        | 1         | 66  |
| 1000010 | Germany        | 1         | 73  |",
"coworker": "database specialist"}

<-- omitted logs from the database specialist -->

Based on the provided context and examples for the `ecommerce_db.users` table,
the column descriptions and examples appear accurate. Here are the details:
- `user_id`: This column contains a unique identifier for each user.
  The provided examples (e.g., 1000001, 1000002) align with this description
  as they are unique numeric identifiers.
- `country`: This column specifies the country of the user. The provided
  examples (e.g., United Kingdom, France, Germany, Netherlands) are
  all valid country names.
- `is_active`: This column indicates whether the user is active (1) or not (0). The provided examples (e.g., 0, 1) correctly represent this binary status.
- `age`: This column shows the age of the user. The provided examples (e.g., 70,
  87, 88, 25, 48, 78, 65, 31, 66, 73) are all valid ages.

Therefore, the column descriptions and examples provided for the
`ecommerce_db.users` table are accurate and meet the expected criteria.

Thought: Based on the observation from the database specialist,
the column descriptions and examples provided for the `ecommerce_db.users`
table are accurate. Now, I will consolidate the best elements from both drafts,
ensuring the documentation is complete, clear, and free of irrelevant comments.

Action: Finalize the documentation in markdown format, incorporating
the detailed introduction, examples, and ensuring the tone is professional
but informal.
```

When I tried the delegation for the first time, I didn’t enable memory, which led to incorrect results. The data specialist and the technical writer initially returned the correct information. However, when the QA specialist returned with the follow-up questions, they started to hallucinate. So, it looks like delegation works better when memory is enabled.

Here’s the final output from GPT-4o. The result looks pretty nice now. We definitely can use LLMs to automate documentation.

So, the first task has been solved!

I used the same script to generate documentation for the ecommerce_db.sessions table as well. It will be handy for our next task. So, let’s not waste any time and move on.

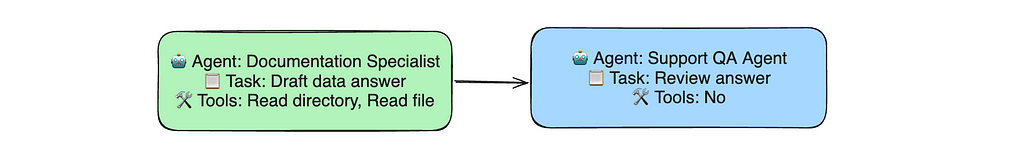

Our next task is answering questions based on the documentation, since this is a common task for many data analysts (and other specialists).

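We will start simple and create just two agents:

- a data support agent that answers questions using our documentation;
- a QA support agent that reviews the draft answers.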

We will need to empower the documentation specialist with a couple of tools that will allow them to see all the files stored in the directory and read the files. It’s pretty straightforward since CrewAI has implemented such tools.

```python
from crewai_tools import DirectoryReadTool, FileReadTool

documentation_directory_tool = DirectoryReadTool(
    directory='~/crewai_project/ecommerce_documentation')

base_file_read_tool = FileReadTool()
```
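Conceptually, these two tools list the files in a folder and return a file's text. The standard-library sketch below mirrors that behaviour; `list_documentation` and `read_documentation` are hypothetical names, not CrewAI's implementation. It expands `~` explicitly with `Path.expanduser`, and it refuses paths that escape the documentation folder, a check worth having when the path comes from an LLM.

```python
from pathlib import Path
from typing import List


def list_documentation(directory: str) -> List[str]:
    """Return all files under directory as sorted relative paths."""
    root = Path(directory).expanduser()
    return sorted(str(p.relative_to(root)) for p in root.rglob("*") if p.is_file())


def read_documentation(directory: str, file_name: str) -> str:
    """Read one file, refusing paths that point outside the directory."""
    root = Path(directory).expanduser().resolve()
    target = (root / file_name).resolve()
    # e.g. "../secrets.txt" or an absolute path would land outside root
    if root not in target.parents:
        raise ValueError(f"{file_name!r} is outside {root}")
    return target.read_text(encoding="utf-8")
```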

However, since Llama 3 keeps struggling with quotes when calling tools, I had to create a custom tool on top of FileReadTool to overcome this issue.

```python
from crewai_tools import BaseTool

class FileReadToolUPD(BaseTool):
    name: str = "Read a file's content"
    description: str = "A tool that can be used to read a file's content."

    def _run(self, file_path: str) -> str:
        # Strip the quotes Llama 3 adds around the path, then reuse FileReadTool
        return base_file_read_tool._run(file_path=file_path.strip('"').strip("'"))

file_read_tool = FileReadToolUPD()
```

Next, as we did before, we need to create agents, tasks and crew.

```python
data_support_agent = Agent(
    role="Senior Data Support Agent",
    goal="Be the most helpful support for your colleagues",
    backstory='''You work as a support for data-related questions
in the company.
Even though you're a big expert in our data warehouse, you double check
all the facts in documentation.
Our documentation is absolutely up-to-date, so you can fully rely on it
when answering questions (you don't need to check the actual data
in database).
Your work is very important for the team success. However, remember
that examples of table rows don't show all the possible values.
You need to ensure that you provide the best possible support: answering
all the questions, making no assumptions and sharing only the factual data.
Be creative and try your best to solve the customer problem.
''',
    allow_delegation=False,
    verbose=True
)

qa_support_agent = Agent(
    role="Support Quality Assurance Agent",
    goal="""Ensure the highest quality of the answers we provide
to the customers""",
    backstory='''You work as a Quality Assurance specialist, checking the work
from support agents and ensuring that it's in line with our highest standards.
You need to check that the agent provides full and complete answers
and makes no assumptions.
Also, you need to make sure that the documentation addresses all
the questions and is easy to understand.
''',
    allow_delegation=False,
    verbose=True
)
```

````python
draft_data_answer = Task(
    description='''Very important customer {customer} reached out to you
with the following question:
```
{question}
```
Your task is to provide the best answer to all the points in the question
using all available information and not making any assumptions.
If you don't have enough information to answer the question, just say
that you don't know.''',
    expected_output='''The detailed informative answer to the customer's
question that addresses all the points mentioned.
Make sure that the answer is complete and sticks to the facts
(without any additional information not based on the factual data)''',
    tools=[documentation_directory_tool, file_read_tool],
    agent=data_support_agent
)

answer_review = Task(
    description='''
Review the draft answer provided by the support agent.
Ensure that it fully answers all the questions mentioned
in the initial inquiry.
Make sure that the answer is consistent and doesn't include any assumptions.
''',
    expected_output='''
The final version of the answer in markdown format that can be shared
with the customer.
The answer should fully address all the questions, be consistent
and follow our professional but informal tone of voice.
We are a very chill and friendly company, so don't forget to include
all the polite phrases.
''',
    tools=[],
    agent=qa_support_agent
)
````

```python
qna_crew = Crew(
    agents=[data_support_agent, qa_support_agent],
    tasks=[draft_data_answer, answer_review],
    verbose=2,
    memory=False  # doesn't work with Llama
)
```

Let’s see how it works in practice.

```python
result = qna_crew.kickoff(
    {'customer': "Max",
     'question': """Hey team, I hope you're doing well. I need to find
the numbers before our CEO presentation tomorrow, so I will really
appreciate your help.
I need to calculate the number of sessions from our Windows users in 2023. I've tried to find the table with such data in our data warehouse, but wasn't able to.
Do you have any ideas whether we store the needed data somewhere,
so that I can query it? """
    }
)
```

We’ve got a polite, practical and helpful answer in return. That’s really great.

**Hello Max,**

Thank you for reaching out with your question! I'm happy to help you find the number of sessions from Windows users in 2023.

After reviewing our documentation, I found that we do store data related to sessions and users in our ecommerce database, specifically in the `ecommerce_db.sessions` table.

To answer your question, I can provide you with a step-by-step guide on how to query this table using SQL. First, you can use the `session_id` column along with the `os` column filtering for "Windows" and the `action_date` column filtering for dates in 2023. Then, you can group the results by `os` using the `GROUP BY` clause to count the number of sessions that meet these conditions.

Here's a sample SQL query that should give you the desired output:

```sql
SELECT COUNT(*)
FROM ecommerce_db.sessions
WHERE os = 'Windows'
AND action_date BETWEEN '2023-01-01' AND '2023-12-31'
GROUP BY os;
```

This query will return the total number of sessions from Windows users in 2023. I hope this helps! If you have any further questions or need more assistance, please don't hesitate to ask.

Let’s complicate the task a bit. Suppose we can get not only questions about our data but also about our tool (ClickHouse). So, we will have another agent in the crew — ClickHouse Guru. To give our CH agent some knowledge, I will share a documentation website with it.

```python
from crewai_tools import ScrapeWebsiteTool, WebsiteSearchTool

ch_documenation_tool = ScrapeWebsiteTool(
    'https://clickhouse.com/docs/en/guides/creating-tables')
```

If you need to work with a lengthy document, you might try using RAG (Retrieval-Augmented Generation) with WebsiteSearchTool. It calculates embeddings and stores them locally in ChromaDB. In our case, we will stick to a simple website scraper tool.
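For intuition about what the RAG option does, here is a toy retrieval step. It is a deliberately simplified stand-in: bag-of-words vectors and cosine similarity replace real embeddings and ChromaDB, and the documentation chunks are made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List

# Made-up documentation chunks standing in for a scraped website.
DOC_CHUNKS = [
    "Use WITH TOTALS to add a row with totals.",
    "Tables are created with CREATE TABLE.",
    "Data is inserted with INSERT INTO.",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words 'embedding': lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_chunks(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query."""
    query_vector = vectorize(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(query_vector, vectorize(chunk)), reverse=True)
    return ranked[:k]


print(top_chunks("how to add totals", DOC_CHUNKS, k=1))
```

Only the retrieved chunks would then be passed to the LLM, which is what keeps long documents manageable.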

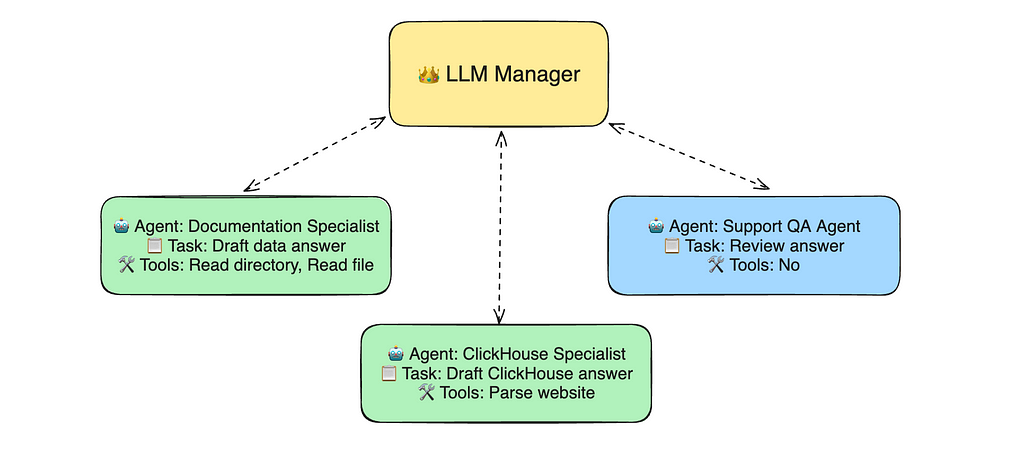

Now that we have two subject matter experts, we need to decide who will be working on the questions. So, it’s time to use a hierarchical process and add a manager to orchestrate all the tasks.

CrewAI provides the manager implementation, so we only need to specify the LLM model. I’ve picked the GPT-4o.

```python
from langchain_openai import ChatOpenAI
from crewai import Process

# ch_support_agent (the ClickHouse Guru) and draft_ch_answer aren't shown here;
# see the full code on GitHub
complext_qna_crew = Crew(
    agents=[ch_support_agent, data_support_agent, qa_support_agent],
    tasks=[draft_ch_answer, draft_data_answer, answer_review],
    verbose=2,
    manager_llm=ChatOpenAI(model='gpt-4o', temperature=0),
    process=Process.hierarchical,
    memory=False
)
```

At this point, I had to switch from Llama 3 to OpenAI models again to run a hierarchical process since it hasn’t worked for me with Llama (similar to this issue).
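In the hierarchical process, the manager LLM decides which co-worker should handle each question. The sketch below imitates that decision with keyword matching so the idea is easy to follow; the keyword lists and the `route` function are hypothetical, and the real manager reasons with GPT-4o rather than counting keywords.

```python
from typing import Dict, List

# Hypothetical expertise keywords for the two subject matter experts.
EXPERTISE: Dict[str, List[str]] = {
    "ClickHouse Guru": ["clickhouse", "totals", "engine", "sql syntax"],
    "Senior Data Support Agent": ["table", "sessions", "users", "data warehouse"],
}
DEFAULT_AGENT = "Senior Data Support Agent"


def route(question: str, expertise: Dict[str, List[str]] = EXPERTISE) -> str:
    """Pick the agent whose keywords appear most often in the question."""
    text = question.lower()
    scores = {agent: sum(keyword in text for keyword in keywords)
              for agent, keywords in expertise.items()}
    best = max(scores, key=scores.get)
    # With no matching keyword at all, fall back to a default agent.
    return best if scores[best] > 0 else DEFAULT_AGENT


print(route("Could you please remind whether there's an option to add totals in ClickHouse?"))
```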

Now, we can try our new crew with different types of questions (either related to our data or ClickHouse database).

```python
ch_result = complext_qna_crew.kickoff(
    {'customer': "Maria",
     'question': """Good morning, team. I'm using ClickHouse to calculate
the number of customers.
Could you please remind whether there's an option to add totals
in ClickHouse?"""
    }
)

doc_result = complext_qna_crew.kickoff(
    {'customer': "Max",
     'question': """Hey team, I hope you're doing well. I need to find
the numbers before our CEO presentation tomorrow, so I will really
appreciate your help.
I need to calculate the number of sessions from our Windows users
in 2023. I've tried to find the table with such data
in our data warehouse, but wasn't able to.
Do you have any ideas whether we store the needed data somewhere,
so that I can query it. """
    }
)
```

If we look at the final answers and logs (I’ve omitted them here since they are quite lengthy, but you can find them and full logs on GitHub), we will see that the manager was able to orchestrate correctly and delegate tasks to co-workers with relevant knowledge to address the customer’s question. For the first (ClickHouse-related) question, we got a detailed answer with examples and possible implications of using WITH TOTALS functionality. For the data-related question, models returned roughly the same information as we’ve seen above.
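For reference, WITH TOTALS is a ClickHouse modifier of GROUP BY (for example, `SELECT country, count() FROM ecommerce_db.users GROUP BY country WITH TOTALS`) that returns an extra row with the aggregate values computed across all rows. The Python sketch below mimics that output for a count, using the ten sample rows from the QA log above; `count_by_country_with_totals` is a hypothetical helper.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# (user_id, country) pairs taken from the sample rows in the QA log above.
SAMPLE_USERS = [
    (1000001, "United Kingdom"), (1000002, "France"), (1000003, "France"),
    (1000004, "Germany"), (1000005, "Germany"), (1000006, "France"),
    (1000007, "Netherlands"), (1000008, "Germany"), (1000009, "Germany"),
    (1000010, "Germany"),
]


def count_by_country_with_totals(rows: Iterable[Tuple[int, str]]) -> Tuple[Dict[str, int], int]:
    """Mimic GROUP BY country WITH TOTALS for count(): per-group counts plus an overall total."""
    per_country = Counter(country for _, country in rows)
    return dict(per_country), sum(per_country.values())


groups, total = count_by_country_with_totals(SAMPLE_USERS)
for country, users in sorted(groups.items()):
    print(f"{country}\t{users}")
print(f"Totals\t{total}")
```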

So, we’ve built a crew that can answer various types of questions based on the documentation, whether from a local file or a website. I think it’s an excellent result.

You can find all the code on GitHub.

In this article, we’ve explored using the CrewAI multi-agent framework to create a solution for writing documentation based on tables and answering related questions.

Given the extensive functionality we’ve utilised, it’s time to summarise the strengths and weaknesses of this framework.

Overall, I find CrewAI to be an incredibly useful framework for multi-agent systems:

However, there are areas that could be improved:

The domain and tools for LLMs are evolving rapidly, so I’m hopeful that we’ll see a lot of progress in the near future.

Thank you a lot for reading this article. I hope this article was insightful for you. If you have any follow-up questions or comments, please leave them in the comments section.

This article is inspired by the “Multi AI Agent Systems with CrewAI” short course from DeepLearning.AI.

Multi AI Agent Systems 101 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Multi AI Agent Systems 101