Originally appeared here:

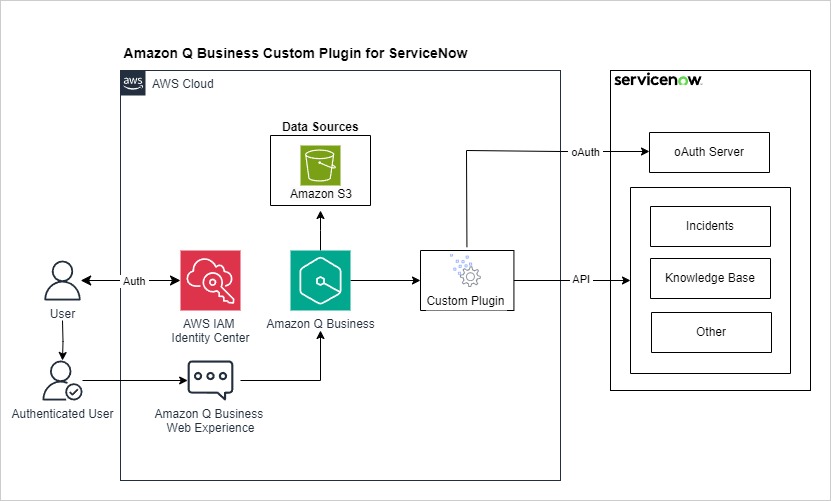

Using natural language in Amazon Q Business: From searching and creating ServiceNow incidents and knowledge articles to generating insights

Originally appeared here:

Using natural language in Amazon Q Business: From searching and creating ServiceNow incidents and knowledge articles to generating insights

Coding your road to Data Science: mastering key development skills

Originally appeared here:

Roadmap to Becoming a Data Scientist, Part 2: Software Engineering

Go Here to Read this Fast! Roadmap to Becoming a Data Scientist, Part 2: Software Engineering

It might be surprising, but in this article, I would like to talk about the knapsack problem, the classic optimisation problem that has been studied for over a century. According to Wikipedia, the problem is defined as follows:

Given a set of items, each with a weight and a value, determine which items to include in the collection so that the total weight is less than or equal to a given limit and the total value is as large as possible.

While product analysts may not physically pack knapsacks, the underlying mathematical model is highly relevant to many of our tasks. There are numerous real-world applications of the knapsack problem in product analytics. Here are a few examples:

Such and similar tasks are quite common, and many analysts encounter them regularly. So, in this article, I’ll explore different approaches to solving it, ranging from naive, simple techniques to more advanced methods such as linear programming.

Another reason I chose this topic is that linear programming is one of the most powerful and popular tools in prescriptive analytics — a type of analysis that focuses on providing stakeholders with actionable options to make informed decisions. As such, I believe it is an essential skill for any analyst to have in their toolkit.

Let’s dive straight into the case we’ll be exploring. Imagine we’re part of a marketing team planning activities for the upcoming month. Our objective is to maximize key performance indicators (KPIs), such as the number of acquired users and revenue while operating within a limited marketing budget.

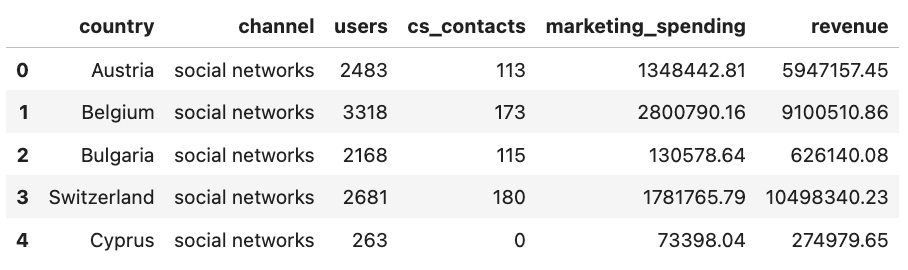

We’ve estimated the expected outcomes of various marketing activities across different countries and channels. Here is the data we have:

Note that the dataset is synthetic and randomly generated, so don’t try to infer any market-related insights from it.

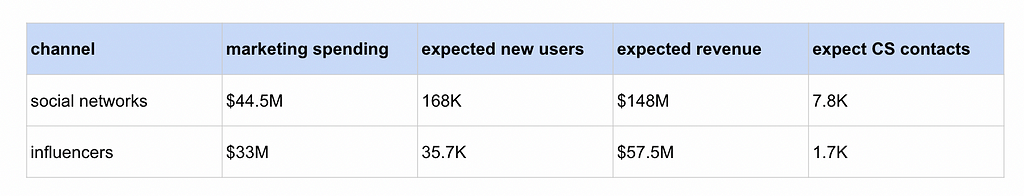

First, I’ve calculated the high-level statistics to get a view of the numbers.

Let’s determine the optimal set of marketing activities that maximizes revenue while staying within the $30M marketing budget.

At first glance, the problem may seem straightforward: we could calculate all possible combinations of marketing activities and select the optimal one. However, it might be a challenging task.

With 62 segments, there are 2⁶² possible combinations, as each segment can either be included or excluded. This results in approximately 4.6*10¹⁸ combinations — an astronomical number.

To better understand the computational feasibility, let’s consider a smaller subset of 15 segments and estimate the time required for one iteration.

import itertools

import pandas as pd

import tqdm

# reading data

df = pd.read_csv('marketing_campaign_estimations.csv', sep = 't')

df['segment'] = df.country + ' - ' + df.channel

# calculating combinations

combinations = []

segments = list(df.segment.values)[:15]

print('number of segments: ', len(segments))

for num_items in range(len(segments) + 1):

combinations.extend(

itertools.combinations(segments, num_items)

)

print('number of combinations: ', len(combinations))

tmp = []

for selected in tqdm.tqdm(combinations):

tmp_df = df[df.segment.isin(selected)]

tmp.append(

{

'selected_segments': ', '.join(selected),

'users': tmp_df.users.sum(),

'cs_contacts': tmp_df.cs_contacts.sum(),

'marketing_spending': tmp_df.marketing_spending.sum(),

'revenue': tmp_df.revenue.sum()

}

)

# number of segments: 15

# number of combinations: 32768

It took approximately 4 seconds to process 15 segments, allowing us to handle around 7,000 iterations per second. Using this estimate, let’s calculate the execution time for the full set of 62 segments.

2**62 / 7000 / 3600 / 24 / 365

# 20 890 800.6

Using brute force, it would take around 20.9 million years to get the answer to our question — clearly not a feasible option.

Execution time is entirely determined by the number of segments. Removing just one segment can reduce time twice. With this in mind, let’s explore possible ways to merge segments.

As usual, there are more small-sized segments than bigger ones, so merging them is a logical step. However, it’s important to note that this approach may reduce accuracy since multiple segments are aggregated into one. Despite this, it could still yield a solution that is “good enough.”

To simplify, let’s merge all segments that contribute less than 0.1% of revenue.

df['share_of_revenue'] = df.revenue/df.revenue.sum() * 100

df['segment_group'] = list(map(

lambda x, y: x if y >= 0.1 else 'other',

df.segment,

df.share_of_revenue

))

print(df[df.segment_group == 'other'].share_of_revenue.sum())

# 0.53

print(df.segment_group.nunique())

# 52

With this approach, we will merge ten segments into one, representing 0.53% of the total revenue (the potential margin of error). With 52 segments remaining, we can obtain the solution in just 20.4K years. While this is a significant improvement, it’s still not sufficient.

You may consider other heuristics tailored to your specific task. For instance, if your constraint is a ratio (e.g., contact rate = CS contacts / users ≤ 5%), you could group all segments where the constraint holds true, as the optimal solution will include all of them. In our case, however, I don’t see any additional strategies to reduce the number of segments, so brute force seems impractical.

That said, if the number of combinations is relatively small and brute force can be executed within a reasonable time, it can be an ideal approach. It’s simple to develop and provides accurate results.

Since brute force is not feasible for calculating all combinations, let’s consider a simpler algorithm to address this problem.

One possible approach is to focus on the top-performing segments. We can evaluate segment performance by calculating revenue per dollar spent, then sort all activities based on this ratio and select the top performers that fit within the marketing budget. Let’s implement it.

df['revenue_per_spend'] = df.revenue / df.marketing_spending

df = df.sort_values('revenue_per_spend', ascending = False)

df['spend_cumulative'] = df.marketing_spending.cumsum()

selected_df = df[df.spend_cumulative <= 30000000]

print(selected_df.shape[0])

# 48

print(selected_df.revenue.sum()/1000000)

# 107.92

With this approach, we selected 48 activities and got $107.92M in revenue.

Unfortunately, although the logic seems reasonable, it is not the optimal solution for maximizing revenue. Let’s look at a simple example with just three marketing activities.

Using the top markets approach, we would select France and achieve $68M in revenue. However, by choosing two other markets, we could achieve significantly better results — $97.5M. The key point is that our algorithm optimizes not only for maximum revenue but also for minimizing the number of selected segments. Therefore, this approach will not yield the best results, especially considering its inability to account for multiple constraints.

Since all simple approaches have failed, we must return to the fundamentals and explore the theory behind this problem. Fortunately, the knapsack problem has been studied for many years, and we can apply optimization techniques to solve it in seconds rather than years.

The problem we’re trying to solve is an example of Integer Programming, which is actually a subdomain of Linear Programming.

We’ll discuss this shortly, but first, let’s align on the key concepts of the optimization process. Each optimisation problem consists of:

With these basic concepts in mind, we can define Linear Programming as a scenario where the following conditions hold:

Integer Programming is very similar to Linear Programming, with one key difference: some or all decision variables must be integers. While this may seem like a minor change, it significantly impacts the solution approach, requiring more complex methods than those used in Linear Programming. One common technique is branch-and-bound. We won’t dive deeper into the theory here, but you can always find more detailed explanations online.

For linear optimization, I prefer the widely used Python package PuLP. However, there are other options available, such as Python MIP or Pyomo. Let’s install PuLP via pip.

! pip install pulp

Now, it’s time to define our task as a mathematical optimisation problem. There are the following steps for it:

Let’s go through the steps one by one. But first, we need to create the problem object and set the objective — maximization in our case.

from pulp import *

problem = LpProblem("Marketing_campaign", LpMaximize)

The next step is defining the decision variables — parameters that we can change during optimisation. Our main decision is either to run a marketing campaign or not. So, we can model it as a set of binary variables (0 or 1) for each segment. Let’s do it with the PuLP library.

segments = range(df.shape[0])

selected = LpVariable.dicts("Selected", segments, cat="Binary")

After that, it’s time to align on the objective function. As discussed, we want to maximise the revenue. The total revenue will be a sum of revenue from all the selected segments (where decision_variable = 1 ). Therefore, we can define this formula as the sum of the expected revenue for each segment multiplied by the decision binary variable.

problem += lpSum(

selected[i] * list(df['revenue'].values)[i]

for i in segments

)

The final step is to add constraints. Let’s start with a simple constraint: our marketing spending must be below $30M.

problem += lpSum(

selected[i] * df['marketing_spending'].values[i]

for i in segments

) <= 30 * 10**6

Hint: you can print problem to double check the objective function and constraints.

Now that we’ve defined everything, we can run the optimization and analyze the results.

problem.solve()

It takes less than a second to run the optimization, a significant improvement compared to the thousands of years that brute force would require.

Result - Optimal solution found

Objective value: 110162662.21000001

Enumerated nodes: 4

Total iterations: 76

Time (CPU seconds): 0.02

Time (Wallclock seconds): 0.02

Let’s save the results of the model execution — the decision variables indicating whether each segment was selected or not — into our dataframe.

df['selected'] = list(map(lambda x: x.value(), selected.values()))

print(df[df.selected == 1].revenue.sum()/10**6)

# 110.16

It works like magic, allowing you to obtain the solution quickly. Additionally, note that we achieved higher revenue compared to our naive approach: $110.16M versus $107.92M.

We’ve tested integer programming with a simple example featuring just one constraint, but we can extend it further. For instance, we can add additional constraints for our CS contacts to ensure that our Operations team can handle the demand in a healthy way:

# define the problem

problem_v2 = LpProblem("Marketing_campaign_v2", LpMaximize)

# decision variables

segments = range(df.shape[0])

selected = LpVariable.dicts("Selected", segments, cat="Binary")

# objective function

problem_v2 += lpSum(

selected[i] * list(df['revenue'].values)[i]

for i in segments

)

# Constraints

problem_v2 += lpSum(

selected[i] * df['marketing_spending'].values[i]

for i in segments

) <= 30 * 10**6

problem_v2 += lpSum(

selected[i] * df['cs_contacts'].values[i]

for i in segments

) <= 5000

problem_v2 += lpSum(

selected[i] * df['cs_contacts'].values[i]

for i in segments

) <= 0.042 * lpSum(

selected[i] * df['users'].values[i]

for i in segments

)

# run the optimisation

problem_v2.solve()

The code is straightforward, with the only tricky part being the transformation of the ratio constraint into a simpler linear form.

Another potential constraint you might consider is limiting the number of selected options, for example, to 10. This constraint could be pretty helpful in prescriptive analytics, for example, when you need to select the top-N most impactful focus areas.

# define the problem

problem_v3 = LpProblem("Marketing_campaign_v2", LpMaximize)

# decision variables

segments = range(df.shape[0])

selected = LpVariable.dicts("Selected", segments, cat="Binary")

# objective function

problem_v3 += lpSum(

selected[i] * list(df['revenue'].values)[i]

for i in segments

)

# constraints

problem_v3 += lpSum(

selected[i] * df['marketing_spending'].values[i]

for i in segments

) <= 30 * 10**6

problem_v3 += lpSum(

selected[i] for i in segments

) <= 10

# run the optimisation

problem_v3.solve()

df['selected'] = list(map(lambda x: x.value(), selected.values()))

print(df.selected.sum())

# 10

Another possible option to tweak our problem is to change the objective function. We’ve been optimising for the revenue, but imagine we want to maximise both revenue and new users at the same time. For that, we can slightly change our objective function.

Let’s consider the best approach. We could calculate the sum of revenue and new users and aim to maximize it. However, since revenue is, on average, 1000 times higher, the results might be skewed toward maximizing revenue. To make the metrics more comparable, we can normalize both revenue and users based on their total sums. Then, we can define the objective function as a weighted sum of these ratios. I would use equal weights (0.5) for both metrics, but you can adjust the weights to give more value to one of them.

# define the problem

problem_v4 = LpProblem("Marketing_campaign_v2", LpMaximize)

# decision variables

segments = range(df.shape[0])

selected = LpVariable.dicts("Selected", segments, cat="Binary")

# objective Function

problem_v4 += (

0.5 * lpSum(

selected[i] * df['revenue'].values[i] / df['revenue'].sum()

for i in segments

)

+ 0.5 * lpSum(

selected[i] * df['users'].values[i] / df['users'].sum()

for i in segments

)

)

# constraints

problem_v4 += lpSum(

selected[i] * df['marketing_spending'].values[i]

for i in segments

) <= 30 * 10**6

# run the optimisation

problem_v4.solve()

df['selected'] = list(map(lambda x: x.value(), selected.values()))

We obtained the optimal objective function value of 0.6131, with revenue at $104.36M and 136.37K new users.

That’s it! We’ve learned how to use integer programming to solve various optimisation problems.

You can find the full code on GitHub.

In this article, we explored different methods for solving the knapsack problem and its analogues in product analytics.

With this, I hope you’ve gained another valuable analytical tool for your toolkit.

Thank you a lot for reading this article. I hope this article was insightful for you. If you have any follow-up questions or comments, please leave them in the comments section.

All the images are produced by the author unless otherwise stated.

Linear Optimisations in Product Analytics was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Linear Optimisations in Product Analytics

Go Here to Read this Fast! Linear Optimisations in Product Analytics

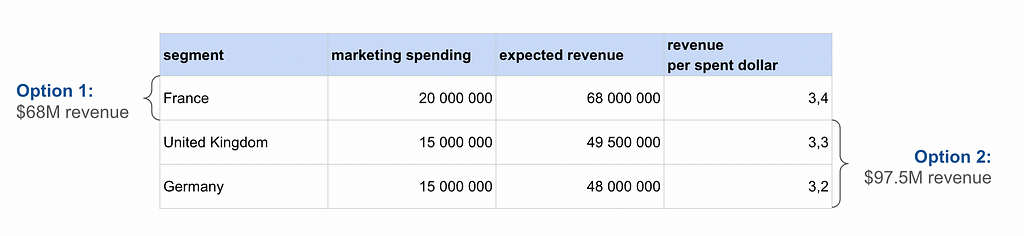

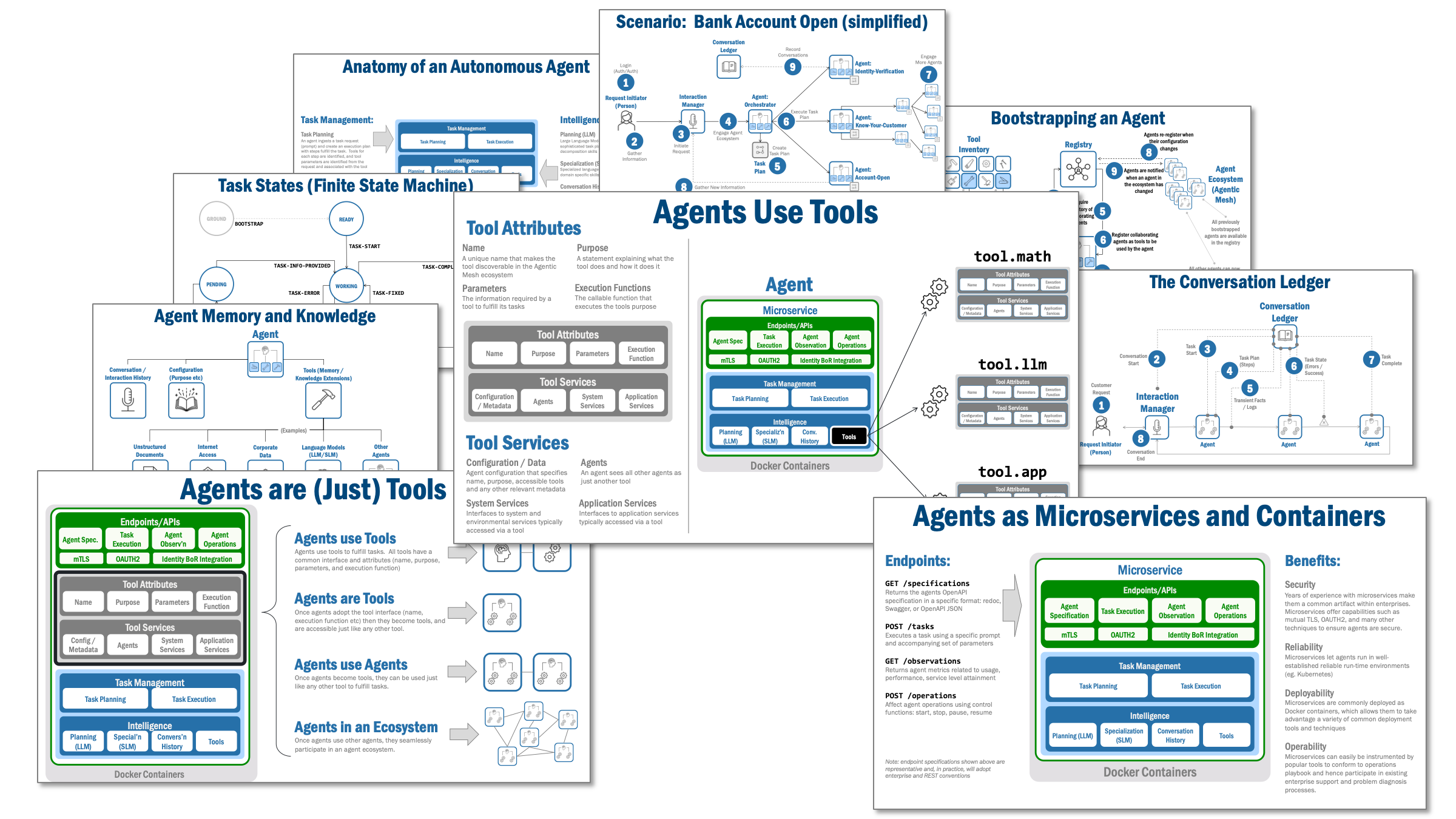

A blueprint for autonomous agents in an Agentic Mesh ecosystem.

Originally appeared here:

The Anatomy of an Autonomous Agent

Go Here to Read this Fast! The Anatomy of an Autonomous Agent

Learn how to visualize AI weather and create your own forecast for the holidays

Originally appeared here:

Will Your Christmas Be White? Ask An AI Weather Model!

Go Here to Read this Fast! Will Your Christmas Be White? Ask An AI Weather Model!

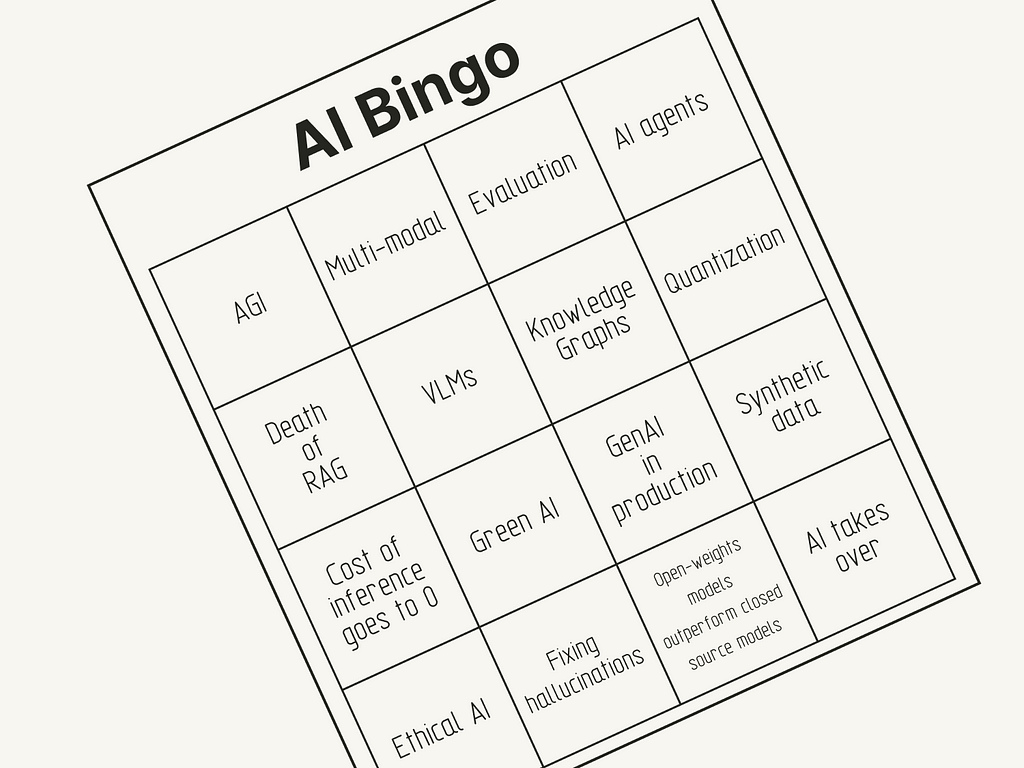

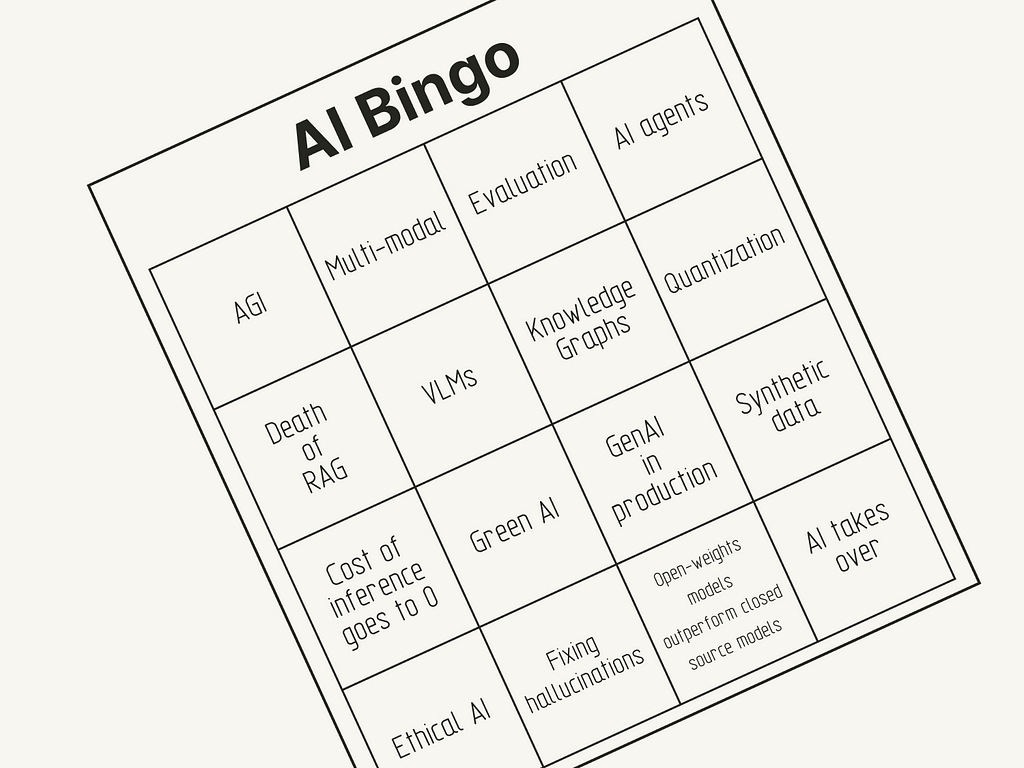

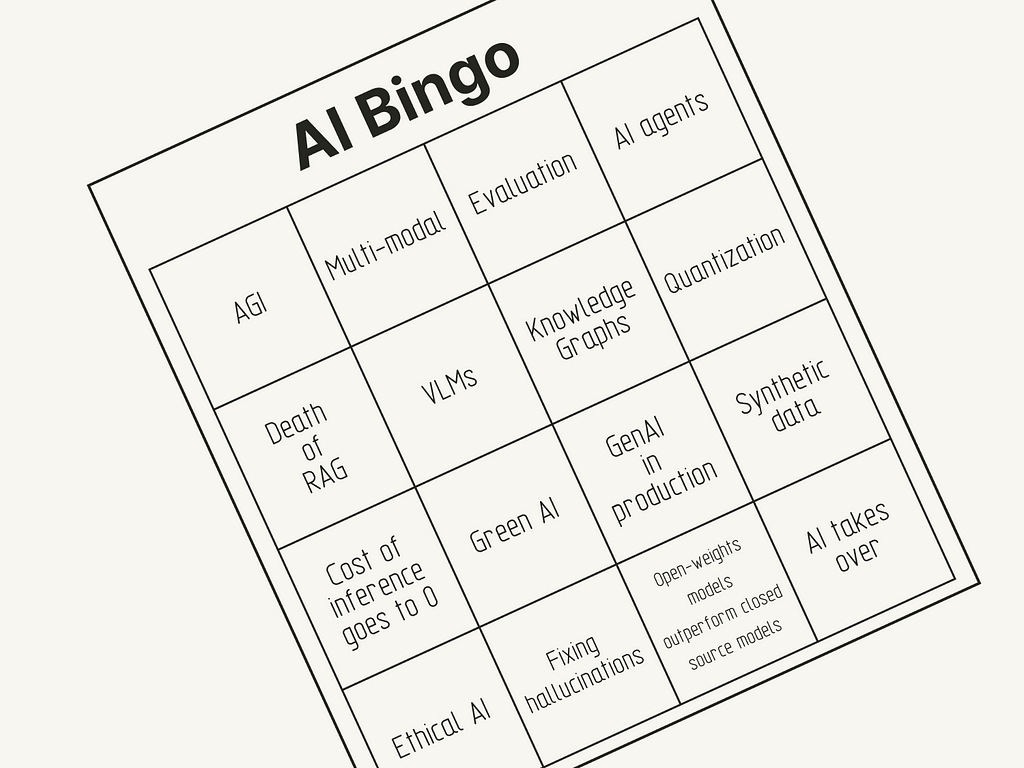

In 2023, building AI-powered applications felt full of promise, but the challenges were already starting to show. By 2024, we began experimenting with techniques to tackle the hard realities of making them work in production.

Last year, I reviewed the biggest trends in AI in 2023 and made predictions for 2024. This year, instead of a timeline, I want to focus on key themes: What trends emerged? Where did I get it wrong? And what can we expect for 2025?

If I have to summarize the AI space in 2024, it would be the “Captain, it’s Wednesday” meme. The amount of major releases this year was overwhelming. I don’t blame anyone in this space who’s feeling exhausted towards the end of this year. It’s been a crazy ride, and it’s been hard to keep up. Let’s review key themes in the AI space and see if I correctly predicted them last year.

Let’s start by looking at some generative AI solutions that made it to production. There aren’t many. As a survey by A16Z revealed in 2024, companies are still hesitant to deploy generative AI in customer-facing applications. Instead, they feel more confident using it for internal tasks, like document search or chatbots.

So, why aren’t there that many customer-facing generative AI applications in the wild? Probably because we are still figuring out how to evaluate them properly. This was one of my predictions for 2024.

Much of the research involved using another LLM to evaluate the output of an LLM (LLM-as-a-judge). While the approach may be clever, it’s also imperfect due to added cost, introduction of bias, and unreliability.

Looking back, I anticipated we would see this issue solved this year. However, looking at the landscape today, despite being a major topic of discussion, we still haven’t found a reliable way to evaluate generative AI solutions effectively. Although I think LLM-as-a-judge is the only way we’re able to evaluate generative AI solutions at scale, this shows how early we are in this field.

Although this one might have been obvious to many of you, I didn’t have this on my radar for 2024. With the releases of GPT4, Llama 3.2, and ColPali, multimodal foundation models were a big trend in 2024. While we, developers, were busy figuring out how to make LLMs work in our existing pipelines, researchers were already one step ahead. They were already building foundation models that could handle more than one modality.

“There is *absolutely no way in hell* we will ever reach human-level AI without getting machines to learn from high-bandwidth sensory inputs, such as vision.” — Yann LeCun

Take PDF parsing as an example of multimodal models’ usefulness beyond text-to-image tasks. ColPali’s researchers avoided the difficult steps of OCR and layout extraction by using visual language models (VLMs). Systems like ColPali and ColQwen2 process PDFs as images, extracting information directly without pre-processing or chunking. This is a reminder that simpler solutions often come from changing how you frame the problem.

Multimodal models are a bigger shift than they might seem. Document search across PDFs is just the beginning. Multimodality in foundation models will unlock entirely new possibilities for applications across industries. With more modalities, AI is no longer just about language — it’s about understanding the world.

Open-weight models are closing the performance gap to closed models. Fine-tuning them gives you a performance boost while still being lightweight. Quantization makes these models smaller and more efficient (see also Green AI) to run anywhere, even on small devices. Quantization pairs well with fine-tuning, especially since fine-tuning language models is inherently challenging (see QLoRA).

Together, these trends make it clear that the future isn’t just bigger models — it’s smarter ones.

I don’t think I explicitly mentioned this one and only wrote a small piece on this in the second quarter of 2024. So, I will not give myself a point here.

This year, AI agents and agentic workflows gained much attention, as Andrew Ng predicted at the beginning of the year. We saw Langchain and LlamaIndex move into incorporating agents, CrewAI gained a lot of momentum, and OpenAI came out with Swarm. This is another topic I hadn’t seen coming since I didn’t look into it.

“I think AI agentic workflows will drive massive AI progress this year — perhaps even more than the next generation of foundation models.” — Andrew Ng

Despite the massive interest in AI agents, they can be controversial. First, there is still no clear definition of “AI agent” and its capabilities. Are AI agents just LLMs with access to tools, or do they have other specific capabilities? Second, they come with added latency and cost. I have read many comments saying that agent systems aren’t suitable for production systems due to this.

But I think we have already been seeing some agentic pipelines in production with lightweight workflows, such as routing user queries to specific function calls. I think we will continue to see agents in 2025. Hopefully, we will get a clearer definition and picture.

Retrieval-Augmented Generation (RAG) gained significant attention in 2023 and remained a key topic in 2024, with many new variants emerging. However, it remains a topic of debate. Some argue it’s becoming obsolete with long-context models, while others question whether it’s even a new idea. While I think the criticism of the terminology is justified, I think the concept is here to stay (for a little while at least).

Every time a new long context model is released, some people predict it will be the end of RAG pipelines. I don’t think that’s going to happen. This whole discussion should be a blog post of its own, so I’m not going into depth here and saving the discussion for another one. Let me just say that I don’t think it’s one or the other. They are complements. Instead, we will probably be using long context models together with RAG pipelines.

Also, having a database in applications is not a new concept. The term ‘RAG,’ which refers to retrieving information from a knowledge source to enhance an LLM’s output, has faced criticism. Some argue it’s merely a rebranding of techniques long used in other fields, such as software engineering. While I think we will probably part from the term in the long run, the technique is here to stay.

Despite predictions of RAG’s demise, retrieval remains a cornerstone of AI pipelines. While I may be biased by my work in retrieval, it felt like this topic became more mainstream in AI this year. It started with many discussions around keyword search (BM25) as a baseline for RAG pipelines. It then evolved into a larger discussion around dense retrieval models, such as ColBERT or ColPali.

I completely missed this topic because I’m not too familiar with it. Knowledge graphs in RAG systems (e.g., Graph RAG) were another big topic. Since all I can say about knowledge graphs at this moment is that they seem to be a powerful external knowledge source, I will keep this section short.

The key topics of 2024 suggest that we are now realizing the limitations of building applications with foundation models. The hype around ChatGPT may have settled, but the drive to integrate foundation models into applications is still very much alive. It’s just way more difficult than we had anticipated.

“ The race to make AI more efficient and more useful, before investors lose their enthusiasm, is on.” — The Economist

2024 taught us that scaling foundation models isn’t enough. We need better evaluation, smarter retrieval, and more efficient workflows to make AI useful. The limitations we ran into this year aren’t signs of stagnation — they’re clues about what we need to fix next in 2025.

OK, now, for the interesting part, what are my 2025 predictions? This year, I want to make some bolder predictions for the next year to make it a little more fun:

I am curious to hear your predictions for the AI space in 2025!

PS: Funnily, I was researching recipes for Christmas cookies with ChatGPT a few days ago instead of using Google, which I was wondering about two years ago when ChatGPT was released.

Will We Be Using ChatGPT Instead of Google To Get a Christmas Cookie Recipe Next Year?

Subscribe for free to get notified when I publish a new story.

Get an email whenever Leonie Monigatti publishes.

Find me on LinkedIn, Twitter, and Kaggle!

2024 in Review: What I Got Right, Where I Was Wrong, and Bolder Predictions for 2025 was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

2024 in Review: What I Got Right, Where I Was Wrong, and Bolder Predictions for 2025

The evolution of AI in test automation: from locators to generative AI (Part 3)

Originally appeared here:

The Invisible Bug That Broke My Automation: How OCR Changed The Game

Go Here to Read this Fast! The Invisible Bug That Broke My Automation: How OCR Changed The Game

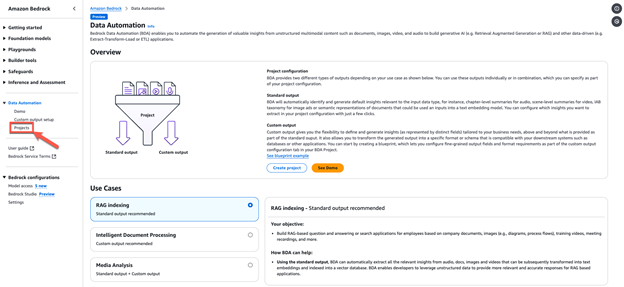

Originally appeared here:

Simplify multimodal generative AI with Amazon Bedrock Data Automation

Go Here to Read this Fast! Simplify multimodal generative AI with Amazon Bedrock Data Automation

You might damage your data science career progress without even realising it — but avoiding that fate isn’t too difficult

Originally appeared here:

Four Career-Savers Data Scientists Should Incorporate into Their Work

Go Here to Read this Fast! Four Career-Savers Data Scientists Should Incorporate into Their Work

In Computer Vision a fundamental goal is to extract meaningful information from static images or video sequences. To understand these signals, it is often helpful to visualize them.

For example when tracking individual cars on a highway, we could draw bounding boxes around them or in the case of detecting problems in a product line on a conveyor belt, we could use a distinct color for anomalies. But what if the extracted information is of a more numerical nature and you want to visualize the time dynamics of this signal?

Just showing the value as a number on the screen might not give you enough insight, especially when the signal is changing rapidly. In these cases a great way to visualize the signal is a plot with a time axis. In this post I am going to show you how you can combine the power of OpenCV and Matplotlib to create animated real-time visualizations of such signals.

The code and video I used for this project is available on GitHub:

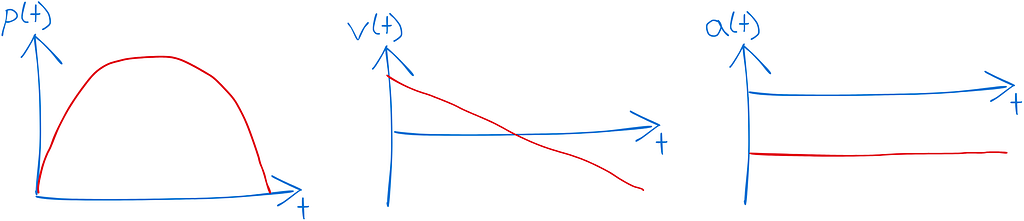

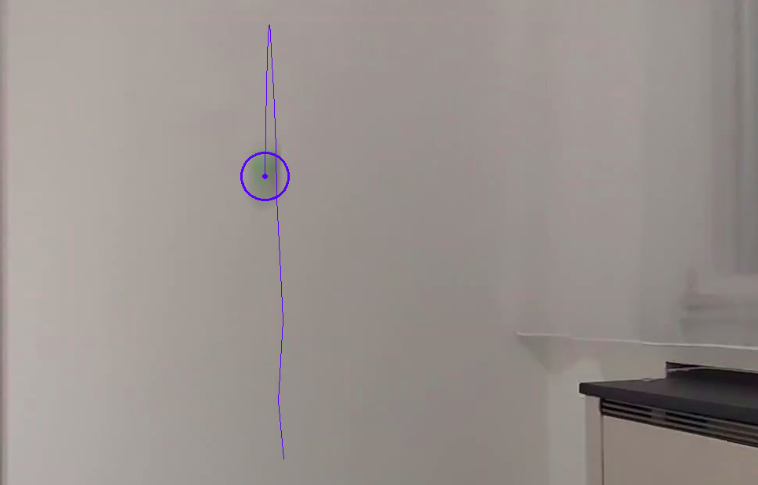

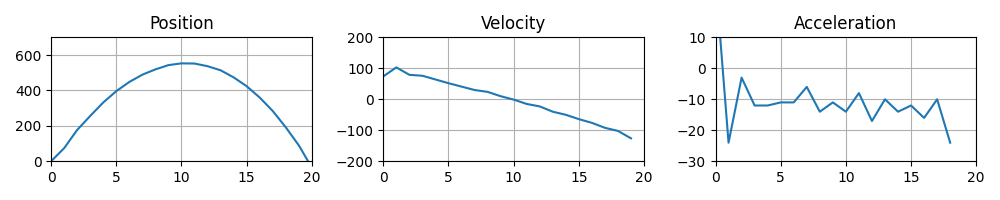

Let’s explore a toy problem where I recorded a video of a ball thrown vertically into the air. The goal is to track the ball in the video and plot it’s position p(t), velocity v(t) and acceleration a(t) over time.

Let’s define our reference frame to be the camera and for simplicity we only track the vertical position of the ball in the image. We expect the position to be a parabola, the velocity to linearly decrease and the acceleration to be constant.

In a first step we need to identify the ball in each frame of the video sequence. Since the camera remains static, an easy way to detect the ball is using a background subtraction model, combined with a color model to remove the hand in the frame.

First let’s get our video clip displayed with a simple loop using VideoCapture from OpenCV. We simply restart the video clip once it has reached its end. We also make sure to playback the video at the original frame rate by calculating the sleep_time in milliseconds based on the FPS of the video. Also make sure to release the resources at the end and close the windows.

import cv2

cap = cv2.VideoCapture("ball.mp4")

fps = int(cap.get(cv2.CAP_PROP_FPS))

while True:

ret, frame = cap.read()

if not ret:

cap.set(cv2.CAP_PROP_POS_FRAMES, 0)

continue

cv2.imshow("Frame", frame)

sleep_time = 1000 // fps

key = cv2.waitKey(sleep_time) & 0xFF

if key & 0xFF == ord("q"):

break

cap.release()

cv2.destroyAllWindows()

Let’s first work on extracting a binary segmentation mask for the ball. This essentially means that we want to create a mask that is active for pixels of the ball and inactive for all other pixels. To do this, I will combine two masks: a motion mask and a color mask. The motion mask extracts the moving parts and the color mask mainly gets rid of the hand in the frame.

For the color filter, we can convert the image to the HSV color space and select a specific hue range (20–100) that contains the green colors of the ball but no skin color tones. I don’t filter on the saturation or brightness values, so we can use the full range (0–255).

# filter based on color

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

mask_color = cv2.inRange(hsv, (20, 0, 0), (100, 255, 255))

To create a motion mask we can use a simple background subtraction model. We use the first frame of the video for the background by setting the learning rate to 1. In the loop, we apply the background model to get the foreground mask, but don’t integrate new frames into it by setting the learning rate to 0.

...

# initialize background model

bg_sub = cv2.createBackgroundSubtractorMOG2(varThreshold=50, detectShadows=False)

ret, frame0 = cap.read()

if not ret:

print("Error: cannot read video file")

exit(1)

bg_sub.apply(frame0, learningRate=1.0)

while True:

...

# filter based on motion

mask_fg = bg_sub.apply(frame, learningRate=0)

In the next step, we can combine the two masks and apply a opening morphology to get rid of the small noise and we end up with a perfect segmentation of the ball.

# combine both masks

mask = cv2.bitwise_and(mask_color, mask_fg)

mask = cv2.morphologyEx(

mask, cv2.MORPH_OPEN, cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (13, 13))

)

The only thing we’re left with is our ball in the mask. To track the center of the ball, I first extract the contour of the ball and then take the center of its bounding box as reference point. In case some noise would make it through our mask, I am filtering the detected contours by size and only look at the largest one.

# find largest contour corresponding to the ball we want to track

contours, _ = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

if len(contours) > 0:

largest_contour = max(contours, key=cv2.contourArea)

x, y, w, h = cv2.boundingRect(largest_contour)

center = (x + w // 2, y + h // 2)

We can also add some annotations to our frame to visualize our detection. I am going to draw two circles, one for the center and one for the perimeter of the ball.

cv2.circle(frame, center, 30, (255, 0, 0), 2)

cv2.circle(frame, center, 2, (255, 0, 0), 2)

To keep track of the ball position, we can use a list. Whenever we detect the ball, we simply add the center position to the list. We can also visualize the trajectory by drawing lines between each of the segments in the tracked position list.

tracked_pos = []

while True:

...

if len(contours) > 0:

...

tracked_pos.append(center)

# draw trajectory

for i in range(1, len(tracked_pos)):

cv2.line(frame, tracked_pos[i - 1], tracked_pos[i], (255, 0, 0), 1)

Now that we can track the ball, let’s start exploring how we can plot the signal using matplotlib. In a first step, we can create the final plot at the end of our video first and then in a second step we worry about how to animate it in real-time. To show the position, velocity and acceleration we can use three horizontally aligned subplots:

fig, axs = plt.subplots(nrows=1, ncols=3, figsize=(10, 2), dpi=100)

axs[0].set_title("Position")

axs[0].set_ylim(0, 700)

axs[1].set_title("Velocity")

axs[1].set_ylim(-200, 200)

axs[2].set_title("Acceleration")

axs[2].set_ylim(-30, 10)

for ax in axs:

ax.set_xlim(0, 20)

ax.grid(True)

We are only interested in the y position in the image (array index 1), and to get a zero-offset position plot, we can subtract the first position.

pos0 = tracked_pos[0][1]

pos = np.array([pos0 - pos[1] for pos in tracked_pos])

For the velocity we can use the difference in position as an approximation and for the acceleration we can use the difference of the velocity.

vel = np.diff(pos)

acc = np.diff(vel)

And now we can plot these three values:

axs[0].plot(range(len(pos)), pos, c="b")

axs[1].plot(range(len(vel)), vel, c="b")

axs[2].plot(range(len(acc)), acc, c="b")

plt.show()

Now on to the fun part, we want to make this plot dynamic! Since we are working in an OpenCV GUI loop, we cannot directly use the show function from matplotlib, as this will just block the loop and not run our program. Instead we need to make use of some trickery ✨

The main idea is to draw the plots in memory into a buffer and then display this buffer in our OpenCV window. By manually calling the draw function of the canvas, we can force the figure to be rendered to a buffer. We can then get this buffer and convert it to an array. Since the buffer is in RGB format, but OpenCV uses BGR, we need to convert the color order.

fig.canvas.draw()

buf = fig.canvas.buffer_rgba()

plot = np.asarray(buf)

plot = cv2.cvtColor(plot, cv2.COLOR_RGB2BGR)

Make sure that the axs.plot calls are now inside the frame loop:

while True:

...

axs[0].plot(range(len(pos)), pos, c="b")

axs[1].plot(range(len(vel)), vel, c="b")

axs[2].plot(range(len(acc)), acc, c="b")

...

Now we can simply display the plot using the imshow function from OpenCV.

cv2.imshow("Plot", plot)

And voilà, you get your animated plot! However you will notice that the performance is quite low. Re-drawing the full plot every frame is quite expensive. To improve the performance, we need to make use of blitting. This is an advanced rendering technique, that draws static parts of the plot into a background image and only re-draws the changing foreground elements. To set this up, we first need to define a reference to each of our three plots before the frame loop.

pl_pos = axs[0].plot([], [], c="b")[0]

pl_vel = axs[1].plot([], [], c="b")[0]

pl_acc = axs[2].plot([], [], c="b")[0]

Then we need to draw the background of the figure once before the loop and get the background of each axis.

fig.canvas.draw()

bg_axs = [fig.canvas.copy_from_bbox(ax.bbox) for ax in axs]

In the loop, we can now change the data for each of the plots and then for each subplot we need to restore the region’s background, draw the new plot and then call the blit function to apply the changes.

# Update plot data

pl_pos.set_data(range(len(pos)), pos)

pl_vel.set_data(range(len(vel)), vel)

pl_acc.set_data(range(len(acc)), acc)

# Blit Pos

fig.canvas.restore_region(bg_axs[0])

axs[0].draw_artist(pl_pos)

fig.canvas.blit(axs[0].bbox)

# Blit Vel

fig.canvas.restore_region(bg_axs[1])

axs[1].draw_artist(pl_vel)

fig.canvas.blit(axs[1].bbox)

# Blit Acc

fig.canvas.restore_region(bg_axs[2])

axs[2].draw_artist(pl_acc)

fig.canvas.blit(axs[2].bbox)

And here we go, the plotting is sped up and the performance has drastically improved.

In this post, you learned how to apply simple Computer Vision techniques to extract a moving foreground object and track it’s trajectory. We then created an animated plot using matplotlib and OpenCV. The plotting is demonstrated on a toy example video with a ball being thrown vertically into the air. However, the tools and techniques used in this project are useful for all kinds of tasks and real-world applications! The full source code is available from my GitHub. I hope you learned something today, happy coding and take care!

All visualizations in this post were created by the author.

Dynamic Visualizations in Python was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Dynamic Visualizations in Python