Originally appeared here:

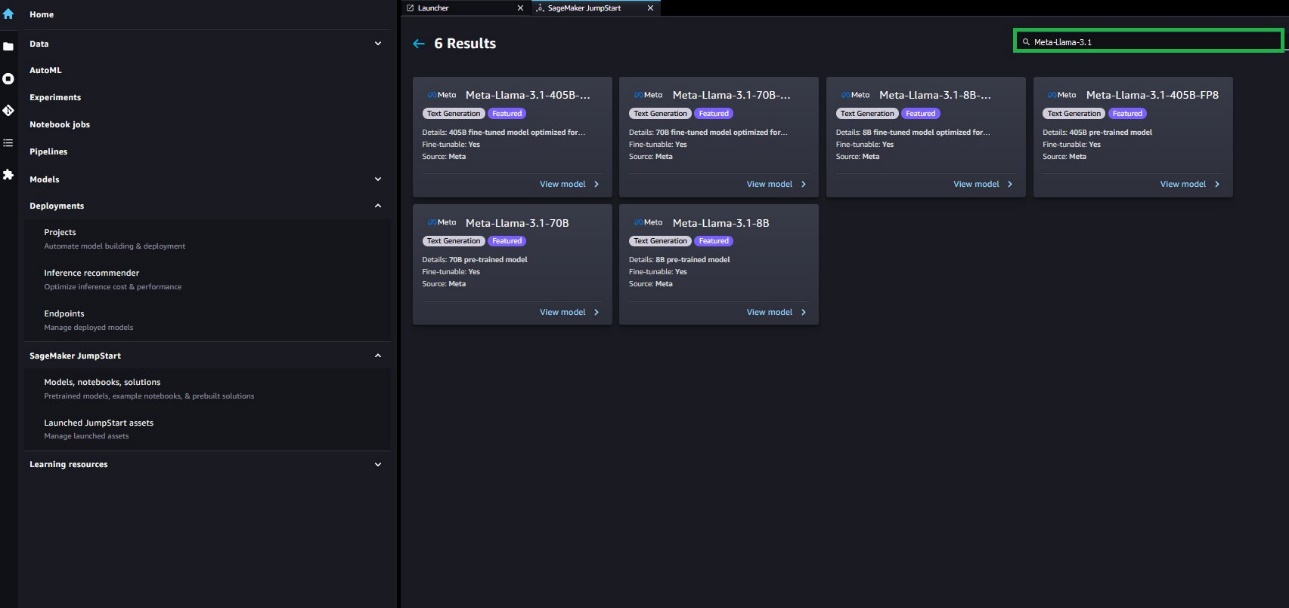

Fine-tune Meta Llama 3.1 models for generative AI inference using Amazon SageMaker JumpStart


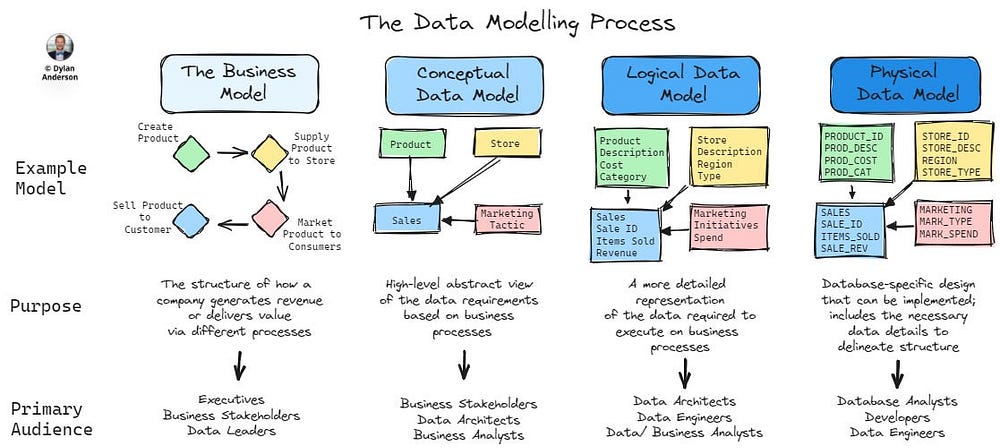

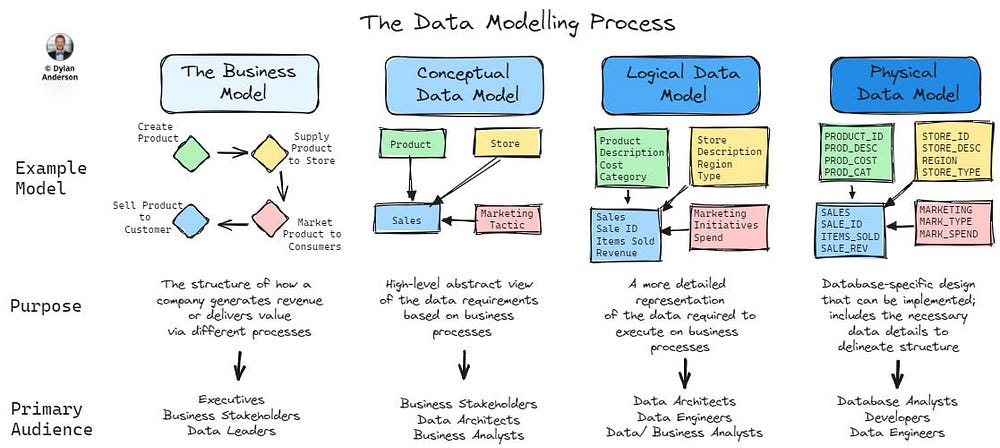

Getting to the bottom of what structuring your data responsibly really means

The Forgotten Guiding Role of Data Modelling

Have you ever wondered how many calories you consume when you eat dinner, for example? I do that all the time. Wouldn’t it be wonderful if you could simply pass a picture of your plate through an app and get an estimate of the total number of calories before you decide how far in you want to dig?

This calorie counter app that I created can help you achieve this. It is a Python application that uses Google’s Gemini-1.5-Pro-Latest model to estimate the number of calories in food items.

The app takes two inputs: a question about the food, and an image of the food item(s), or simply a plate of food. It outputs an answer to the question, the total number of calories in the image, and a breakdown of calories by each food item in the image.

In this article, I will explain the entire end-to-end process of building the app from scratch using Google’s Gemini-1.5-pro-latest (a multimodal large language model released by Google), and how I developed the front end of the application using Streamlit.

It is worth noting that, with the rapid advances in AI, data scientists increasingly need to move from traditional deep learning toward generative AI techniques to keep their role relevant. Encouraging that shift is my main reason for writing about this subject.

Let me start by briefly explaining Gemini-1.5-pro-latest and the Streamlit framework, as they are the major components in the infrastructure of this calorie counter app.

Gemini-1.5-pro-latest is an advanced AI language model developed by Google. As the latest version at the time of writing, it offers faster response times and improved accuracy over previous versions, both for natural language processing tasks and for building applications.

It is a multimodal model that works with both text and images, an advance over the Google Gemini-pro model, which only works with text prompts.

The model understands and generates human-like text based on the prompts given to it. In this article, it will be used to generate the text for our calorie counter app.

Gemini-1.5-pro-latest can be integrated into other applications to extend their AI capabilities. In this application, the model uses its image understanding to identify the individual food items in the uploaded image, estimates the calories in each item from the nutritional knowledge it acquired during training, and then totals the calories for all items in the image.

Streamlit is an open-source Python framework that will manage the user interface. It simplifies web development, so throughout the project you do not need to write any HTML or CSS code for the front end.

Let us dive into building the app.

I will show you how to build the app in 5 clear steps.

To start, open your favorite code editor (mine is VS Code) and create a project folder; call it Calories-Counter, for example. This is your current working directory. Create a virtual environment (venv), activate it in your terminal, and then create the following files: .env, calories.py and requirements.txt.

Here’s a recommendation for the look of your folder structure:

```
Calories-Counter/
├── venv/
│   ├── xxx
│   ├── xxx
├── .env
├── calories.py
└── requirements.txt
```

Please note that the google-generativeai Python SDK used to call Gemini-1.5-Pro requires Python 3.9 or later.

Like other Gemini models, Gemini-1.5-pro-latest can currently be used for free, within the free tier's rate limits. To access it, you need an API key, which you can get from Google AI Studio by clicking “Get API key”. Once the key is generated, copy it for later use in your code and save it as an environment variable in the .env file as follows:

GOOGLE_API_KEY="paste the generated key here"

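Add the libraries that the script imports to your requirements.txt file, one per line: streamlit, google-generativeai, python-dotenv and pillow (the package that provides PIL).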

In the terminal, install the libraries in requirements.txt with:

python -m pip install -r requirements.txt

Now, let’s start writing the Python script in calories.py. With the following code, import all required libraries:

```python
# import the libraries
from dotenv import load_dotenv
import streamlit as st
import os
import google.generativeai as genai
from PIL import Image
```

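Here’s how the imported modules are used:

- `dotenv` (`load_dotenv`) loads the API key from the .env file into the environment variables.
- `streamlit` builds the web user interface.
- `os` reads the API key from the environment variables.
- `google.generativeai` gives access to the Gemini model.
- `PIL` (`Image`) opens the uploaded image so that it can be displayed.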

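The following lines load the API key from the .env file into the environment variables and then configure the Gemini client with it. `load_dotenv()` must run before `os.getenv()` is called; otherwise the key is not yet in the environment and `os.getenv()` returns `None`.

```python
load_dotenv()  # load GOOGLE_API_KEY from .env into the environment variables
genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))
```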

Define a function that, when called, loads the Gemini-1.5-pro-latest model and returns its response:

```python
def get_gemini_reponse(input_prompt, image, user_prompt):
    # Load the multimodal Gemini model
    model = genai.GenerativeModel('gemini-1.5-pro-latest')
    # Send the instructions, the first (and only) image part and the user's question together
    response = model.generate_content([input_prompt, image[0], user_prompt])
    return response.text
```

The function takes three inputs: the input prompt, which is defined further down in the script; the image uploaded by the user; and the user's question. All three are sent to the Gemini model, and the function returns the response text.
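The real call needs an API key and network access. One way to check the shape of the request offline is to pass in a stand-in model. `FakeModel`, `FakeResponse` and `get_response_with` are hypothetical names for illustration only. The sketch mirrors the call in `get_gemini_reponse`: `generate_content` receives a single list that mixes text and an image part, and `image[0]` unwraps the one-element list that the image-processing function returns.

```python
from dataclasses import dataclass


@dataclass
class FakeResponse:
    text: str


class FakeModel:
    """Hypothetical offline stand-in for genai.GenerativeModel; it only records its input."""

    def __init__(self, model_name):
        self.model_name = model_name
        self.last_contents = None

    def generate_content(self, contents):
        self.last_contents = contents
        return FakeResponse(text=f"{self.model_name} received {len(contents)} parts")


def get_response_with(model, input_prompt, image, user_prompt):
    # Same call shape as get_gemini_reponse: instructions, first image part, then the question
    response = model.generate_content([input_prompt, image[0], user_prompt])
    return response.text
```

Passing the model in as an argument also makes it easy to swap the real `genai.GenerativeModel('gemini-1.5-pro-latest')` back in.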

In this app, the image is sent to Gemini as raw bytes together with its MIME type, so the next step is to write a function that reads the uploaded file and converts it to bytes.

```python
def input_image_setup(uploaded_file):
    # Check if a file has been uploaded
    if uploaded_file is not None:
        # Read the file into bytes
        bytes_data = uploaded_file.getvalue()
        image_parts = [
            {
                "mime_type": uploaded_file.type,  # Get the mime type of the uploaded file
                "data": bytes_data
            }
        ]
        return image_parts
    else:
        raise FileNotFoundError("No file uploaded")
```
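To try this function without running Streamlit, you can imitate the uploaded-file object. In recent Streamlit versions, `st.file_uploader` returns an `UploadedFile` that subclasses `io.BytesIO` and carries `name` and `type` attributes. `FakeUploadedFile` below is a hypothetical stand-in built on that assumption. The function is repeated so that the snippet runs on its own.

```python
import io


class FakeUploadedFile(io.BytesIO):
    """Hypothetical stand-in for Streamlit's UploadedFile: bytes plus .name and .type."""

    def __init__(self, data, name, mime_type):
        super().__init__(data)
        self.name = name
        self.type = mime_type


def input_image_setup(uploaded_file):
    # Same logic as the app's function: wrap the raw bytes and MIME type in a one-element list
    if uploaded_file is None:
        raise FileNotFoundError("No file uploaded")
    return [{"mime_type": uploaded_file.type, "data": uploaded_file.getvalue()}]
```

For example, `input_image_setup(FakeUploadedFile(b"...", "plate.png", "image/png"))` returns a list with one dict whose `"mime_type"` is `"image/png"`.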

Next, specify the input prompt that determines the behaviour of your app. Here, we simply tell Gemini what to do with the text and image that the user provides.

```python
input_prompt = """
You are an expert nutritionist.
You should answer the question entered by the user in the input based on the uploaded image you see.
You should also look at the food items found in the uploaded image and calculate the total calories.
Also, provide the details of every food item with calories intake in the format below:

1. Item 1 - no of calories
2. Item 2 - no of calories
----
----
"""
```
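Because the prompt asks for a fixed "1. Item - calories" layout, the breakdown can also be parsed if you later want to chart it or check the model's total. The following is a minimal sketch that assumes the model actually follows that layout, which a generative model does not guarantee. `parse_breakdown` is a hypothetical helper, and the sample response in the usage note is made up.

```python
import re

# Matches numbered lines such as "2. Grilled chicken - 250 calories" (the layout requested above).
ITEM_LINE = re.compile(
    r"^[ \t]*\d+\.[ \t]*(?P<item>.+?)[ \t]*-[ \t]*(?P<calories>\d+(?:\.\d+)?)",
    re.MULTILINE,
)


def parse_breakdown(response_text):
    """Return ([(item, calories), ...], total) from a response in the requested format."""
    items = [
        (m.group("item"), float(m.group("calories")))
        for m in ITEM_LINE.finditer(response_text)
    ]
    total = sum(calories for _, calories in items)
    return items, total
```

With a made-up response such as `"1. Rice - 200 calories\n2. Grilled chicken - 250 calories"`, this returns the two items and a total of 450.0. Lines that do not start with a number and a period, like a "Total calories" summary, are ignored.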

The next step is to initialize Streamlit and create a simple user interface for your calorie counter app.

```python
st.set_page_config(page_title="Gemini Calorie Counter App")
st.header("Calorie Counter App")

input = st.text_input("Ask any question related to your food: ", key="input")
uploaded_file = st.file_uploader("Upload an image of your food", type=["jpg", "jpeg", "png"])

image = ""
if uploaded_file is not None:
    image = Image.open(uploaded_file)
    st.image(image, caption="Uploaded Image.", use_column_width=True)  # show the image

submit = st.button("Submit & Process")  # creates a "Submit & Process" button
```

The steps above create all the pieces of the app. At this point, the user can open the app, enter a question and upload an image.

Finally, let’s put all the pieces together such that once the “Submit & Process” button is clicked, the user will get the required response text.

```python
# Once the "Submit & Process" button is clicked
if submit:
    image_data = input_image_setup(uploaded_file)
    response = get_gemini_reponse(input_prompt, image_data, input)
    st.subheader("The Response is")
    st.write(response)
```
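As written, clicking the button without uploading an image lets `FileNotFoundError` propagate, and Streamlit shows a traceback. Here is a minimal sketch of one way to turn that into a friendly message. `process_submission` is a hypothetical helper, and the image-setup and model-calling functions are passed in so that the logic can be tried without Streamlit or an API key.

```python
def process_submission(uploaded_file, question, setup_image, ask_model):
    """Hypothetical helper: return (ok, message) instead of raising on a missing upload.

    setup_image should behave like input_image_setup (raising FileNotFoundError
    when nothing was uploaded); ask_model(image_data, question) returns the model's text.
    """
    try:
        image_data = setup_image(uploaded_file)
    except FileNotFoundError:
        return False, "Please upload an image of your food before submitting."
    return True, ask_model(image_data, question)
```

In calories.py, the `if submit:` branch could call it with `input_image_setup` and a lambda wrapping `get_gemini_reponse(input_prompt, ...)`, then display the message with `st.write` on success or `st.warning` otherwise.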

Now that the app development is complete, you can execute it in the terminal using the command:

streamlit run calories.py

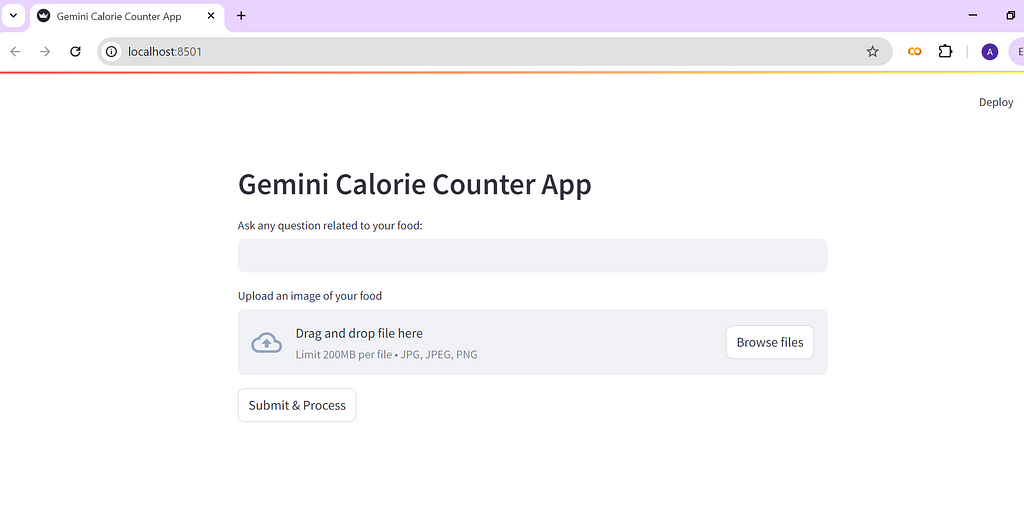

To interact with your app and see how it performs, open it in your browser using the Local URL or Network URL that Streamlit prints in the terminal.

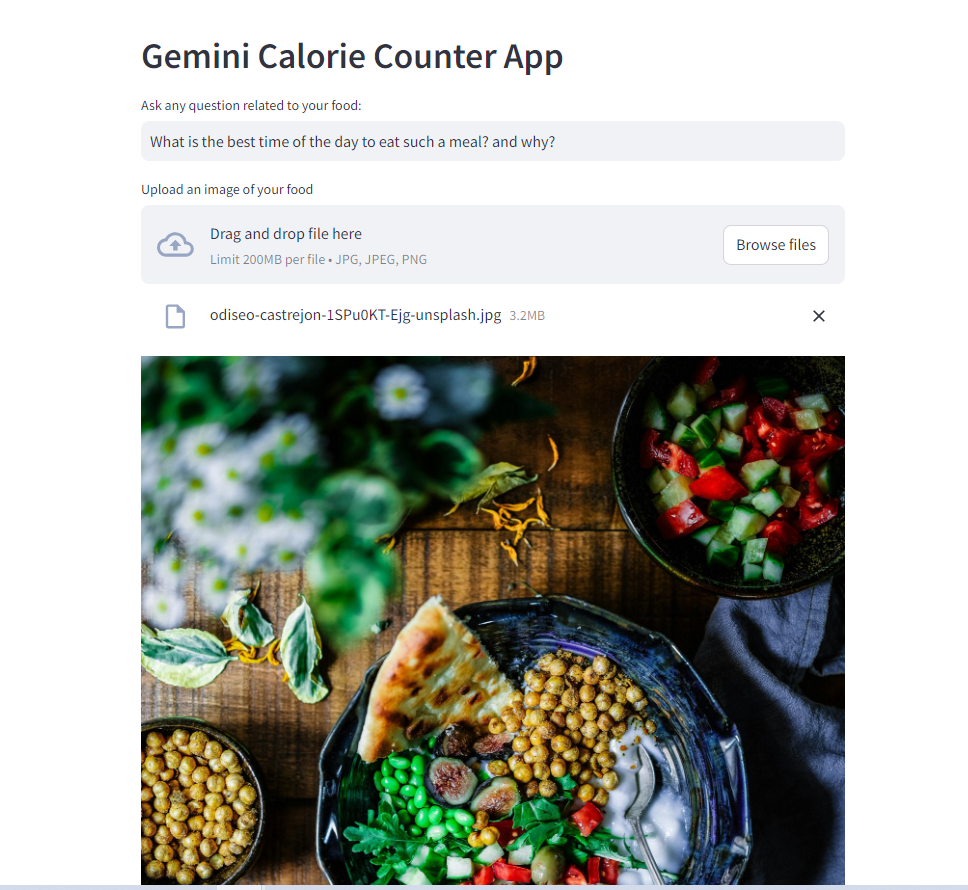

This is how your Streamlit app looks when it is first opened in the browser.

Once the user asks a question and uploads an image, here is the display:

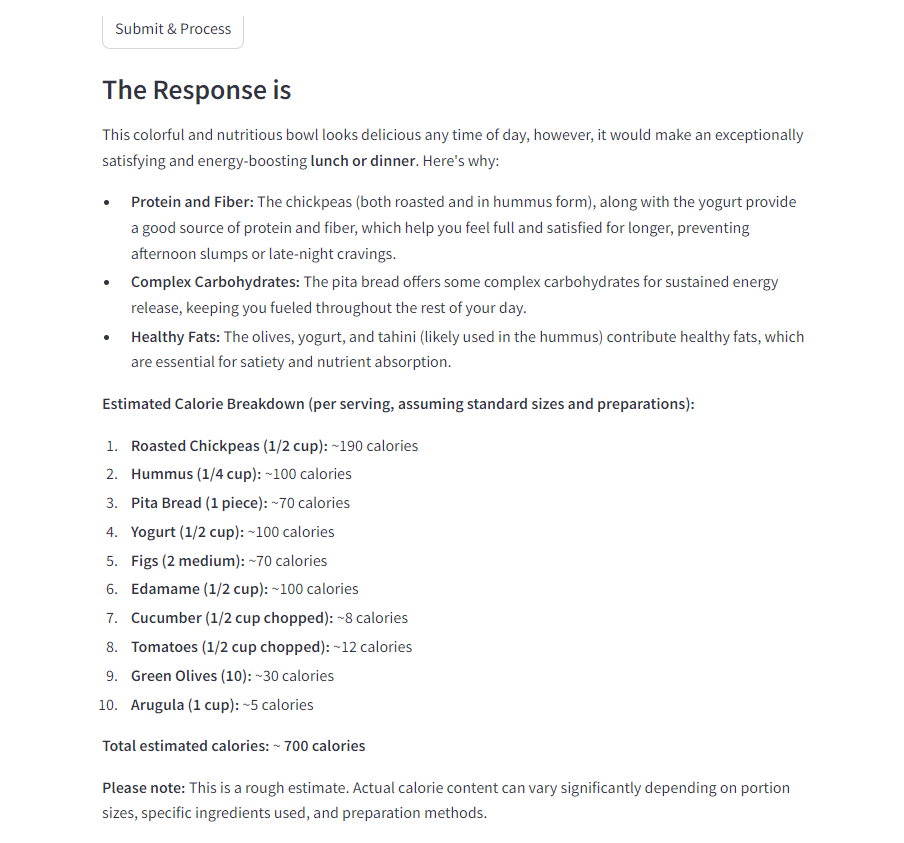

Once the user clicks the “Submit & Process” button, the response shown in the image below is generated at the bottom of the screen.

For external access, consider deploying your app with a cloud service such as AWS, Heroku or Streamlit Community Cloud. Here, let’s use Streamlit Community Cloud to deploy the app for free.

On the top right of the app screen, click ‘Deploy’ and follow the prompts to complete the deployment.

After deployment, you can share the generated app URL with other users.

As with other AI applications, the results are the model's best estimates, so before relying fully on the app, please note the following potential risks:

To help reduce the risks that come with using the calorie counter, here are possible enhancements that could be integrated into its development:

Leveraging Gemini-1.5-Pro-Latest for Smarter Eating was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Leveraging Gemini-1.5-Pro-Latest for Smarter Eating

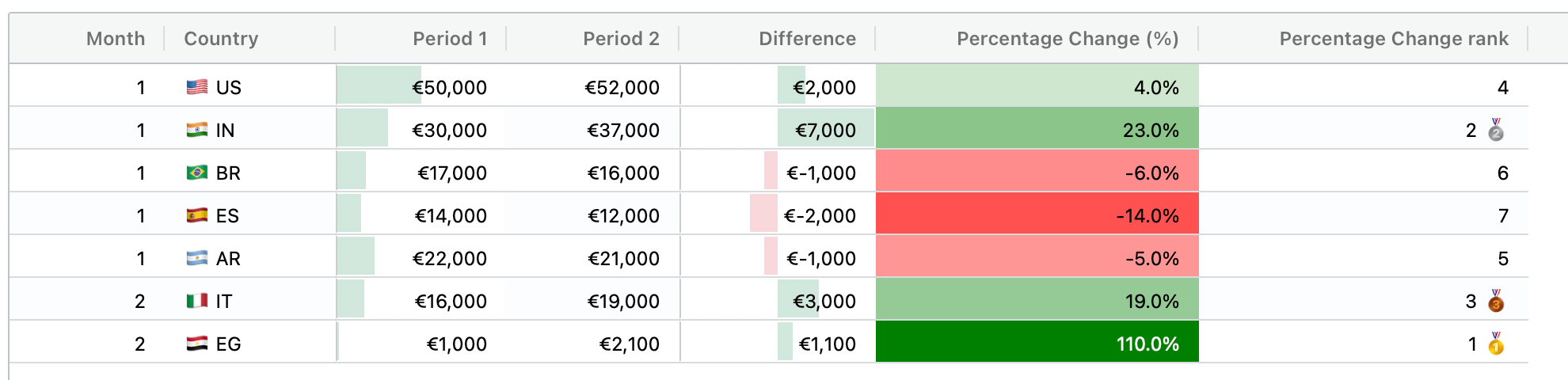

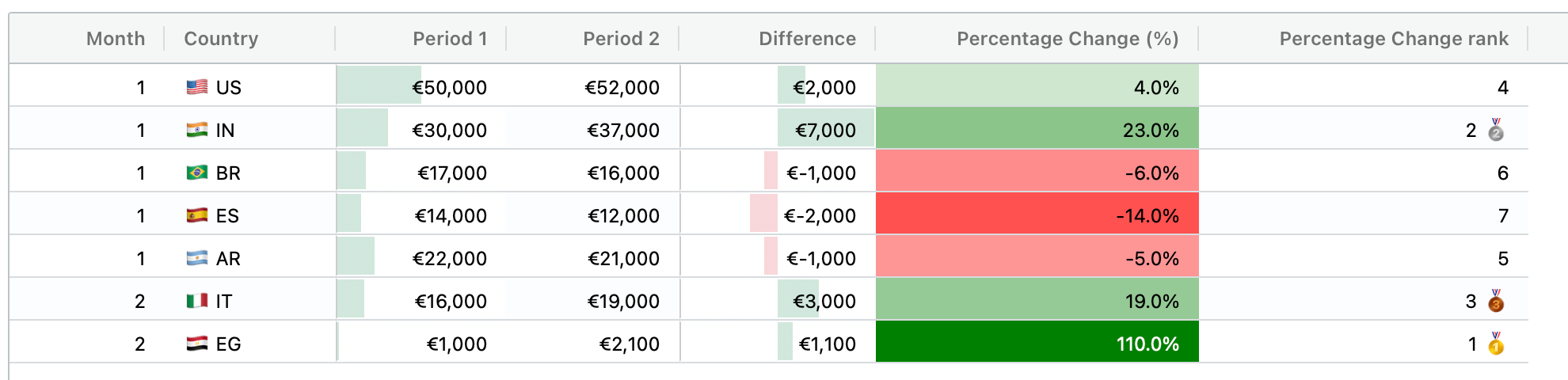

The pandas Styler is cool. But AgGrid is way cooler. Make your Streamlit dataframes interactive and stunning.

How to Create Well-Styled Streamlit Dataframes, Part 2: using AgGrid

A comparison between the SARIMA model and the Facebook Prophet model

Understanding the Limitations of ARIMA Forecasting

Retrieval-Augmented Generation (RAG) is a natural language processing technique that combines traditional retrieval with LLMs. Grounding the generation step in the context supplied by retrieval produces more accurate and relevant text. RAG has recently been used widely in chatbots, letting companies improve their automated communication with clients by customizing cutting-edge LLMs with their own data.

Langflow is a graphical user interface for LangChain, a centralized development environment for LLM applications. LangChain was released in October 2022, and by June 2023 it had become one of the most used open-source projects on GitHub. It took the AI community by storm, particularly for its framework for creating and customizing LLM applications. Its features include integrations with the leading text generation and embedding models, chaining of LLM calls, prompt management, support for vector databases to speed up retrieval, and smooth delivery of results to external APIs and task flows.

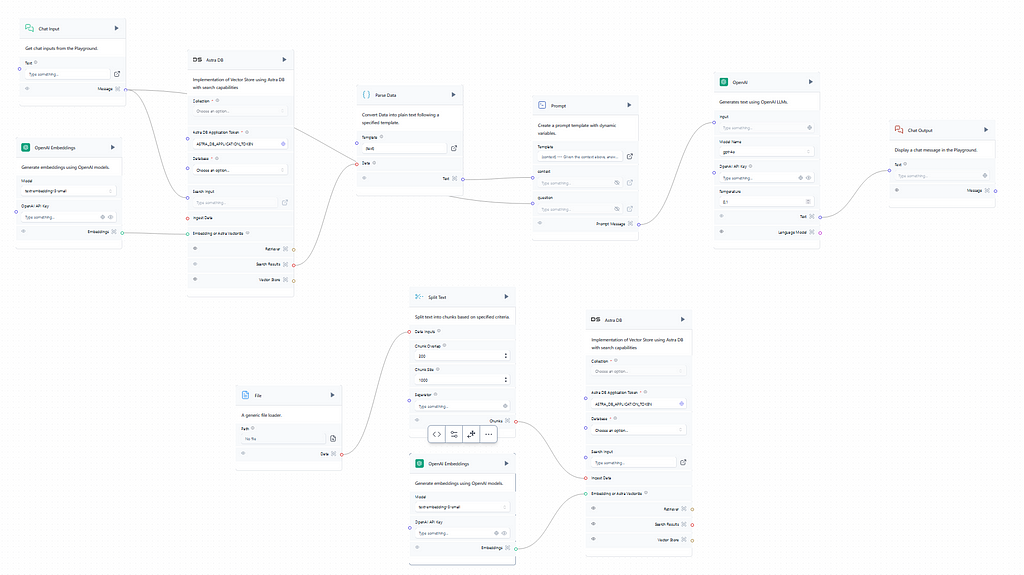

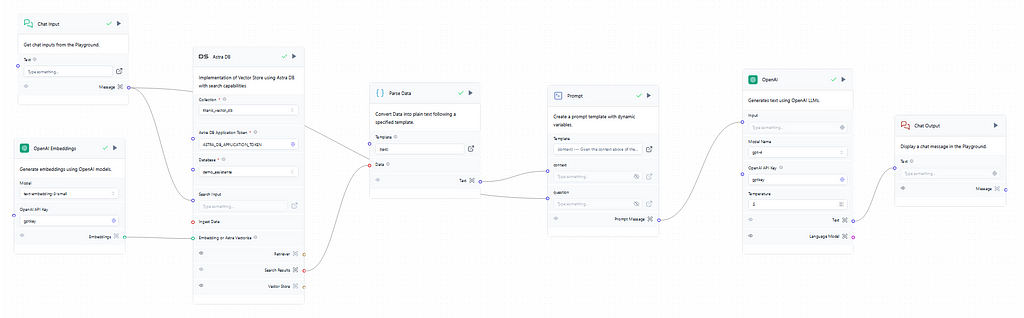

This article presents an end-to-end RAG chatbot built with Langflow, using the well-known Titanic dataset. First, sign up on the Langflow platform. When you start a new project, several pre-built flows can be quickly customized to your needs; to create a RAG chatbot, the best option is the Vector Store RAG template. Image 1 shows the original flow:

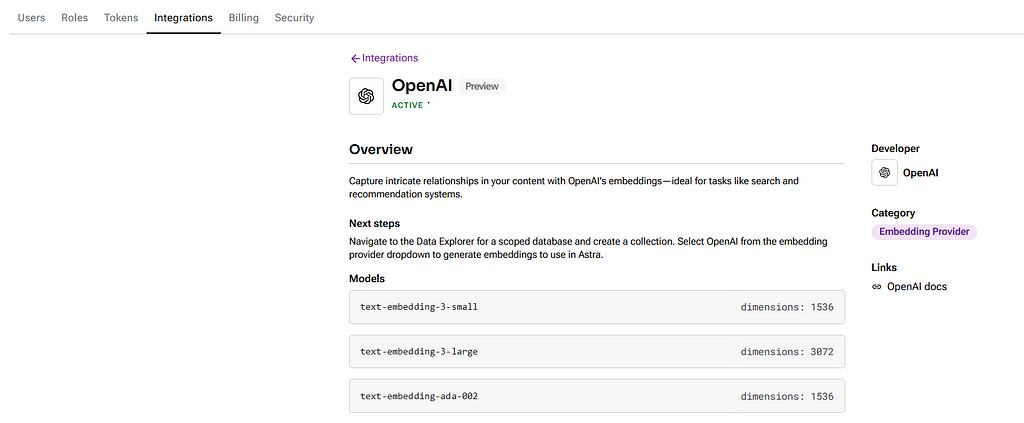

The template has OpenAI preselected for embeddings and text generation, and those are the models used in this article. Other providers, such as Ollama, NVIDIA and Amazon Bedrock, are also available and easy to integrate, typically by setting up the corresponding API key. Before using an LLM provider integration, it is important to check that it is active in the settings, as shown in Image 2 below. Global variables, such as API keys and model names, can also be defined to simplify filling in the flow components.

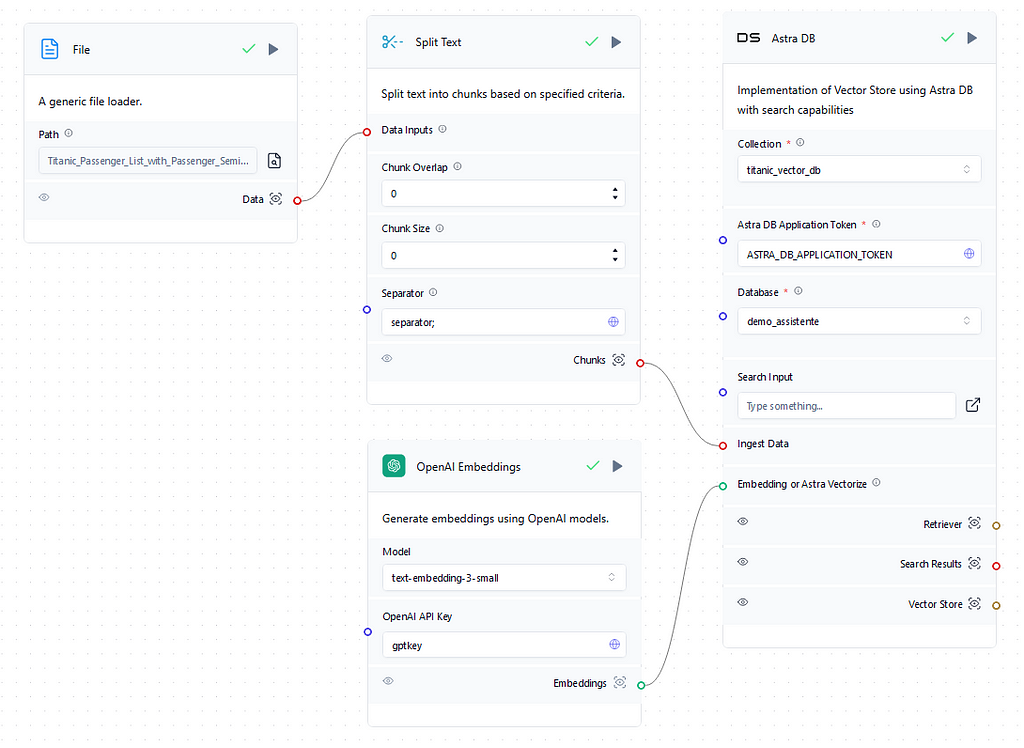

The Vector Store RAG template contains two flows. The first one, shown below, covers the retrieval part of the RAG. The context is provided by uploading a document, splitting it, embedding it and saving it into a vector database on Astra DB, which can be created directly from the flow interface. Currently, the Astra DB component retrieves the Astra DB application token by default, so there is no need to gather it manually. Finally, you need to create the collection that will store the embedded vectors. For the embeddings to be stored correctly, the collection dimension must match the output dimension of the embedding model, which is listed in the model's documentation. For example, if the chosen embedding model is OpenAI’s text-embedding-3-small, the collection dimension must be 1536. Image 3 below presents the complete retrieval flow.
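The dimension rule can be illustrated with a toy in-memory collection that, like a real vector store, accepts only vectors of one fixed length. This is a sketch of the concept, not the Astra DB API; `VectorCollection` is a hypothetical class. The 1536 comes from text-embedding-3-small as stated above. A 3072-dimensional vector, which is what OpenAI's text-embedding-3-large returns by default, is rejected.

```python
class VectorCollection:
    """Toy in-memory stand-in for a vector-DB collection with a fixed dimension."""

    def __init__(self, name, dimension):
        self.name = name
        self.dimension = dimension
        self.records = []  # list of (text, vector) pairs

    def insert(self, text, vector):
        # Reject vectors whose length does not match the collection's dimension
        if len(vector) != self.dimension:
            raise ValueError(
                f"{self.name} expects {self.dimension}-d vectors, got {len(vector)}-d"
            )
        self.records.append((text, list(vector)))
```

Creating the collection with the embedding model's dimension up front means that a mismatch fails loudly at insert time instead of silently degrading the search later.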

The dataset used to enrich the chatbot’s context was the Titanic dataset (CC0 License). By the end of the RAG process, the chatbot should be able to provide specific details and answer complex questions about the passengers. First, we upload the file to a generic file loader component and then split it using the global variable “separator;”, since the original format was CSV. The chunk overlap and chunk size were set to 0, because the separator already makes each chunk a single passenger. If the input file is plain text, the chunk overlap and size settings need to be applied to create the embeddings properly. To finish the flow, the vectors are stored in the titanic_vector_db collection of the demo_assistente database.
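Here is a minimal sketch of the one-chunk-per-passenger idea, assuming the separator is a newline; the article does not show the variable's value. `split_rows` mimics a separator-only split with chunk size and overlap at 0. `labeled_rows` is an optional variation, not what the Langflow flow does: it keeps the column names in every chunk. The sample rows follow the Titanic CSV layout, trimmed to a few columns for illustration.

```python
import csv
import io


def split_rows(csv_text, separator="\n"):
    """Mimic a separator-only split: one chunk per CSV line, header dropped.

    Assumes no quoted field contains the separator (true for newlines in this dataset layout).
    """
    lines = [line for line in csv_text.strip().split(separator) if line.strip()]
    return lines[1:]


def labeled_rows(csv_text):
    """Optional variation: pair each value with its column name inside the chunk."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [", ".join(f"{col}: {val}" for col, val in row.items()) for row in reader]
```

A raw line such as `1,0,3,"Braund, Mr. Owen Harris",male,22` loses its column names once it is split off. The labeled variant produces `PassengerId: 1, Survived: 0, ...`, which can give the embedding and the LLM more to work with.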

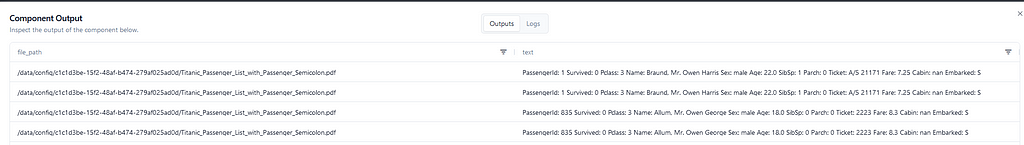

Moving on to the generation flow of the RAG, shown in Image 4: it is triggered by the user’s chat input, which is used to search the database for context for the prompt. So if the user asks about the name “Owen”, the search runs through the vector DB’s collection looking for the vectors most similar to the query. It retrieves them and runs them through the parser to convert them to text, which gives the context the prompt needs. Image 5 shows the results of the search.

Back at the start of this flow, it is also critical to connect an embedding model to the vector DB, using the same model as in the retrieval flow, for the search to be valid. If the two flows used different embedding models, the query and the stored vectors would live in different embedding spaces (or even have different dimensions), and the search would return irrelevant results or none at all. This step also highlights the performance benefit of a vector DB in RAG: the context has to be retrieved and passed to the prompt quickly, before any response can be produced for the user.

In the prompt, shown in Image 6, the context comes from the parser, already converted to text, and the question comes from the original user input. The image below shows how the prompt can be structured to integrate the context with the question.
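The search step can be sketched with a toy similarity search. Here a simple word-count vector stands in for OpenAI embeddings, which is an assumption made purely for illustration; `ToyIndex` is a hypothetical class. The same `_embed` method is applied to both the stored passages and the query, mirroring the requirement that both flows use the same embedding model. Passages are then ranked by cosine similarity.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 0.0 if norm_a == 0 or norm_b == 0 else dot / (norm_a * norm_b)


class ToyIndex:
    """Word-count 'embeddings' plus cosine search; a stand-in for a real embedding model and vector DB."""

    def __init__(self, docs):
        self.docs = docs
        self.vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
        self.vectors = [self._embed(doc) for doc in docs]

    def _embed(self, text):
        # The same function embeds stored passages and queries
        counts = Counter(tokenize(text))
        return [float(counts[tok]) for tok in self.vocab]

    def search(self, query, k=1):
        query_vec = self._embed(query)
        scored = [(cosine(query_vec, vec), doc) for vec, doc in zip(self.vectors, self.docs)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop passages with zero similarity so unrelated queries return nothing
        return [doc for score, doc in scored[:k] if score > 0]
```

With the two passenger passages used in the checks, a query for "Owen" returns only the Braund passage, and a query for "Rose" returns nothing because no stored passage shares a word with it.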
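Langflow's prompt component turns `{curly_brace}` variables in the template into inputs, so the wiring amounts to filling a template with the parsed context and the user question. The template wording below is hypothetical, not the text from Image 6; `build_prompt` is a hypothetical helper.

```python
PROMPT_TEMPLATE = """Answer the user's question using only the context below.
If the answer is not in the context, say that you don't know.

Context:
{context}

Question: {question}

Answer:"""


def build_prompt(context_chunks, question):
    # Join the parsed chunks (one per passenger) into a single context block
    context = "\n".join(context_chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

Telling the model to answer only from the context is a common way to reduce hallucinated details about passengers who are not in the retrieved chunks.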

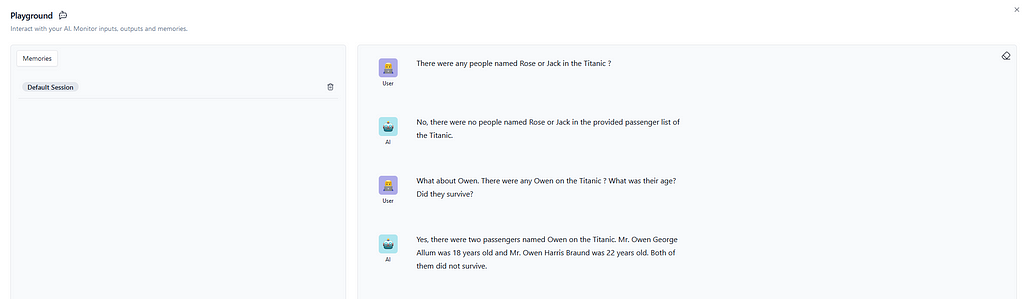

With the prompt written, it is time for the text generation model. In this flow, the GPT-4 model was chosen with a temperature of 0.5, a moderate value commonly used for chatbots. The temperature controls the randomness of the predictions made by an LLM. A lower temperature generates more deterministic and straightforward answers, leading to more predictable text. A higher one generates more creative outputs, but if it is too high, the model can easily hallucinate and produce incoherent text. Finally, set the API key using the global variable that holds OpenAI’s API key, and that is all there is to it. Then it’s time to run the flows and check the results in the playground.

The conversation in Image 7 clearly shows that the chatbot has retrieved the correct context and accurately answered detailed questions about the passengers. It might be disappointing to find out that there was no Rose or Jack on the Titanic, but unfortunately, that is true. And that’s it: the RAG chatbot is created. Of course, it can be improved to increase conversational performance and handle possible misinterpretations, but this article demonstrates how easily Langflow lets you adapt and customize LLMs.

Finally, there are multiple options for deploying the flow. Hugging Face Spaces is an easy way to deploy the RAG chatbot, with scalable hardware infrastructure and native Langflow support that requires no installation. Langflow can also be installed and run on a Kubernetes cluster, in a Docker container, or directly on GCP using a VM and Google Cloud Shell. For more information about deployment, see the Langflow documentation.

Low-code solutions are starting to set the tone for how AI will be developed in the real world in the near future. This article presented how Langflow revolutionizes AI development by centralizing multiple integrations behind an intuitive UI and templates. Today, anyone with basic AI knowledge can build a complex application that, at the beginning of the decade, would have required a large amount of code and deep learning framework expertise.
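The effect of temperature can be shown with the standard temperature-scaled softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T). Here the logits z are made-up numbers for illustration, and `softmax_with_temperature` is a hypothetical helper. This sketch requires T > 0; APIs that accept a temperature of 0 treat it as (near-)greedy decoding.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits into probabilities, sharpened (T < 1) or flattened (T > 1) by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

For logits `[2.0, 1.0, 0.1]`, a temperature of 0.5 puts more probability on the top token than a temperature of 1.5 does, while the least likely token gains probability as the temperature rises. This is the "more predictable" versus "more creative" trade-off described above.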

Creating a RAG Chatbot with Langflow and Astra DB was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Creating a RAG Chatbot with Langflow and Astra DB

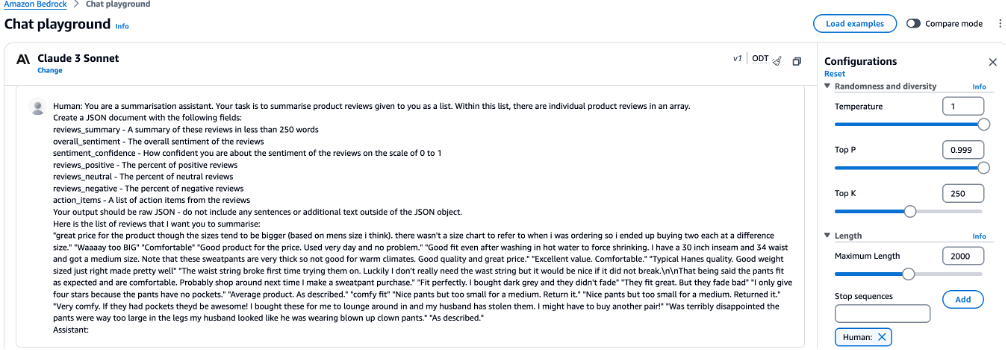

Analyze customer reviews using Amazon Bedrock

Accuracy evaluation framework for Amazon Q Business


Elevate healthcare interaction and documentation with Amazon Bedrock and Amazon Transcribe using Live Meeting Assistant


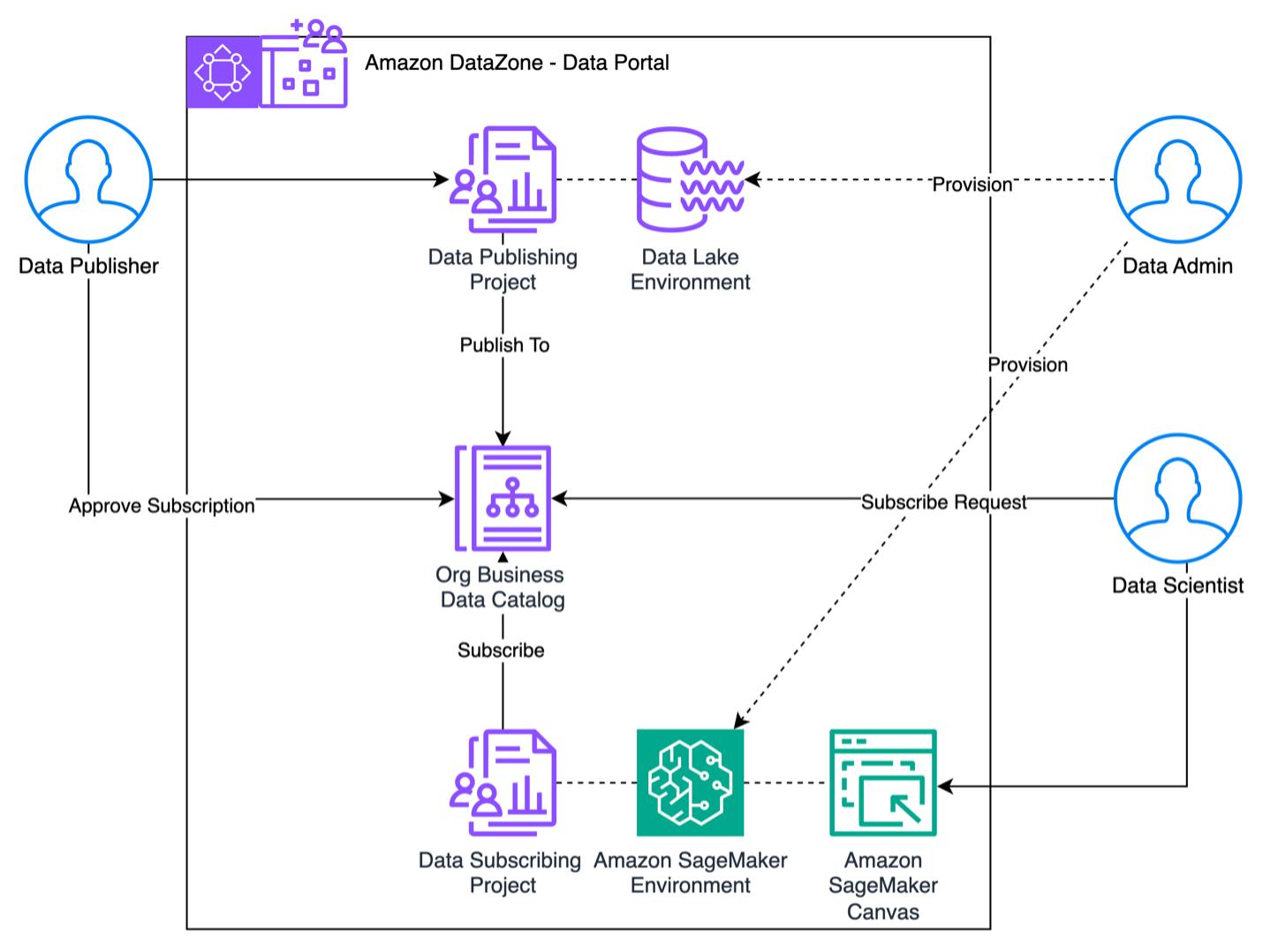

Unlock the power of data governance and no-code machine learning with Amazon SageMaker Canvas and Amazon DataZone