Statements of reasoning indicate types of argument

In a legal case at the trial level, the task of the trier of fact (whether judge or jury or administrative tribunal) is to assess the probative value of the evidence and to arrive at a conclusion about the facts. But what are a tribunal’s methods for performing that task? How many methods does a tribunal employ? At least three stages are typical of any fact-finding institution.

First, a trier of fact must determine which items of available evidence are relevant for deciding which issues of fact. An item of evidence is relevant to proving a factual proposition if it tends to make that proposition more or less probable than it would be without that evidence.

Second, for each issue and set of relevant evidence, a trier of fact must evaluate the trustworthiness of each item of evidence. A person might use various criteria to evaluate the credibility of a witness’s testimony, or the trustworthiness of a document’s contents, or the probative value of a piece of physical evidence. It would be useful to determine which factors a tribunal tends to use in evaluating the credibility or trustworthiness of a particular piece of evidence. Also, can we determine priorities among those factors?

Third, a trier of fact needs to weigh competing evidence. A person needs to balance inconsistent but credible evidence, and then determine the net probative value of all the relevant evidence. There might be different approaches to resolving conflicts between the testimonies of two different witnesses, or of the same witness over time. Or there may be different methods for deciding between statements within different documents, or between testimony and written statements. Can we determine patterns or “soft rules” for doing such comparisons?

A particular type of sentence found in legal decisions provides important clues about the answers to such questions. A well-written legal decision expressly states at least some of the decision maker’s chains of intermediate inferences. Of particular importance are the sentences that state its evidential reasoning — which I will call “reasoning sentences.”

In this article, I discuss the distinguishing characteristics and usefulness of such reasoning sentences. I also discuss the linguistic features that make it possible for machine-learning (ML) models to automatically label reasoning sentences within legal decision documents. I discuss why the adequacy of the performance of those models depends upon the use case, and why even basic ML models can be suitable for the task. I conclude by positioning reasoning sentences within the broader task of using generative AI and large language models to address the challenges of argument mining.

The Characteristics and Usefulness of Reasoning Sentences

In a fact-finding legal decision, a statement of the evidential reasoning explains how the evidence and legal rules support the findings of fact. A reasoning sentence, therefore, is a statement by the tribunal describing some part of the reasoning behind those findings of fact. An example is the following sentence from a fact-finding decision by the Board of Veterans’ Appeals (BVA) in a claim for benefits for a service-related disability:

Also, the clinician’s etiological opinions are credible based on their internal consistency and her duty to provide truthful opinions.

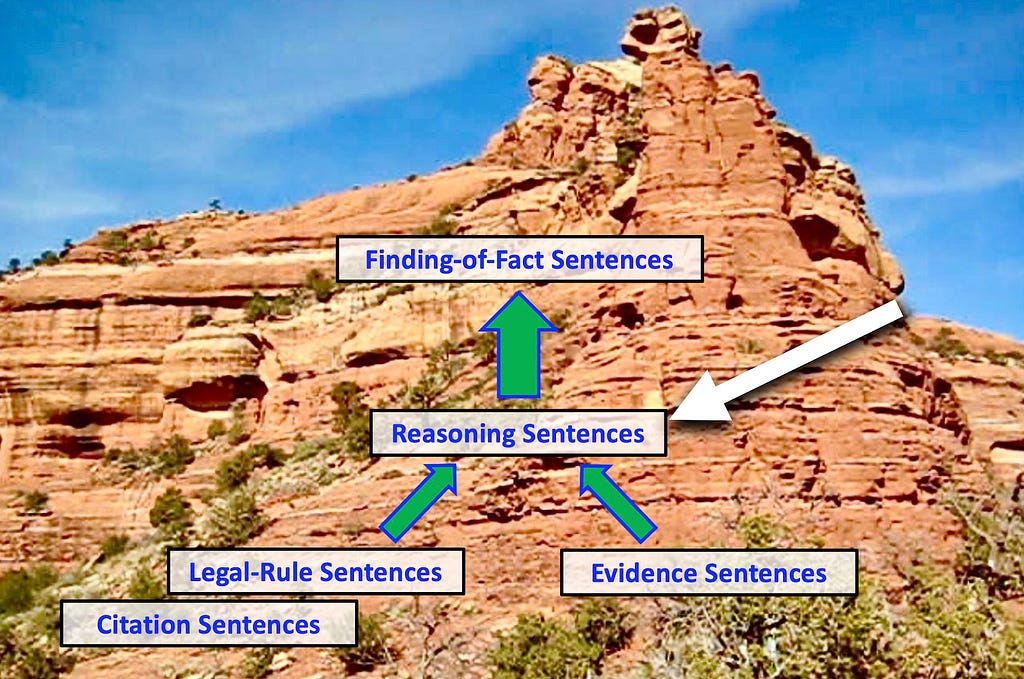

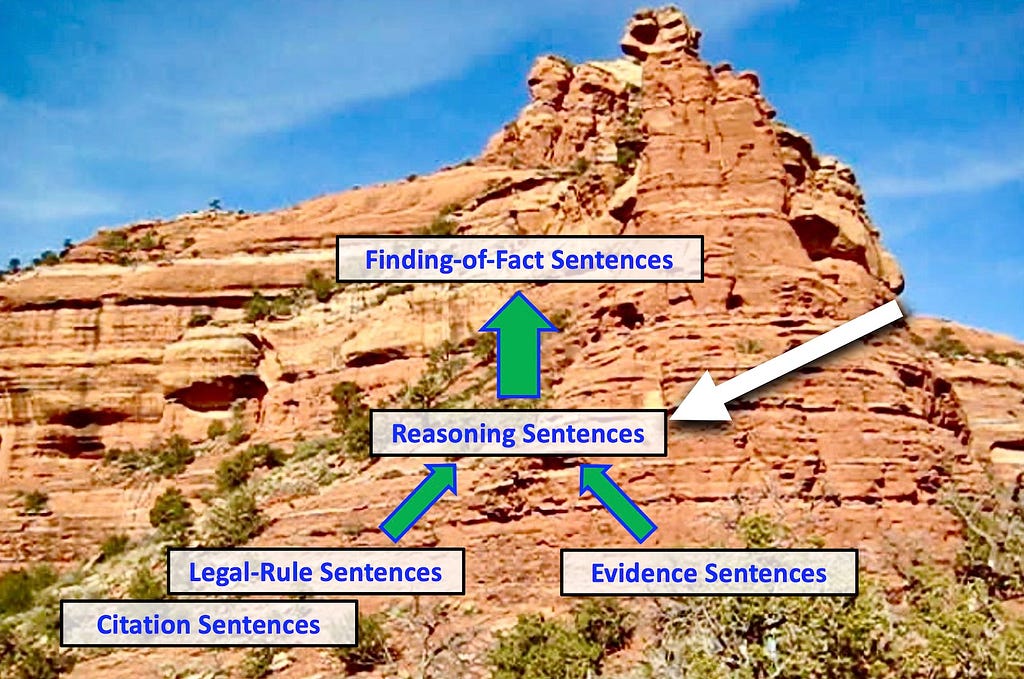

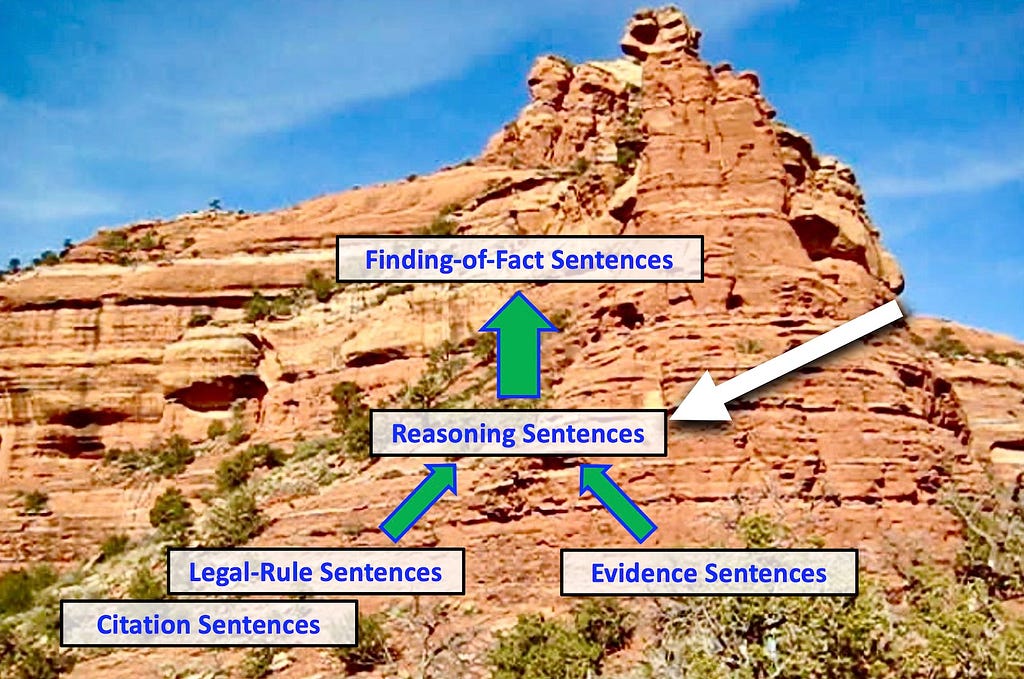

In other articles, I have discussed evidence sentences, legal-rule sentences, and finding sentences. In inferential terms, the evidence and legal rules function as premises, and the finding of fact functions as a conclusion. You can consider reasoning sentences as premises also, in the sense that they explain the probative value of the evidence.

For attorneys and parties involved in a case, reasoning sentences provide an official explanation of why a party’s argument based on the evidence was successful or not. The parties are entitled to hold the tribunal to its stated reasons. Attorneys for the parties can use those stated reasons to help develop arguments against the logic used by the tribunal, or to develop additional support for that logic. Such arguments can be made at the trial level or on appeal.

For attorneys not involved in the case, reasoning sentences can identify the methods of evidence assessment that a tribunal employed in a past case, even if those methods are not binding precedent for the tribunal. If attorneys can gather past cases that exhibit similar issues and evidence, then the reasoning used in those cases can provide possible lines of argument in new similar cases.

For those of us mining types of legal argument generally, we can classify cases by the types of reasoning or argument used. Moreover, if ML algorithms can learn to identify the sentences that state the reasoning of the tribunal, we may be able to automatically find similar cases within very large datasets.

For regulators or legislators, if a standard pattern of reasoning emerges from past cases, they may be able to codify it as a presumption in a regulation or statute, to make future fact-finding more efficient and uniform.

Legal researchers and commentators can at least recommend such patterns as “soft rules” to guide legal reasoning.

For all these reasons, an important focal point in argument mining from legal decisions is to identify, and learn how to use, sentences that state the decision’s reasoning.

Linguistic Features of Reasoning Sentences

In determining which sentences state the reasoning of the tribunal, lawyers consider a number of features.

First, a sentence is more likely to be a sentence about reasoning if the sentence does one or more of the following:

- Explicitly states what evidence is relevant to what issue of fact, or narrows the scope of evidence considered relevant to the issue;

- Contains an explicit statement about the credibility of a witness, or about the trustworthiness of an item of evidence;

- Contains a statement that two items of evidence are in conflict or are inconsistent;

- Compares the probative value of two items of evidence, or emphasizes which evidence is more important than other evidence; or

- States that evidence is lacking, insufficient, or non-existent.

Second, a reasoning sentence must state the reasoning of the trier of fact, not of someone else. That is, we must have a good basis to attribute the reasoning to the tribunal, as distinguished from it being merely the reasoning given by a witness, or an argument made by an attorney or party.

Many different linguistic features can warrant an attribution of the stated reasoning to the decision maker. Sometimes those features are within the contents of the sentence itself. For example, phrases that warrant attribution to the decision maker might be: the Board considers that, or the Board has taken into account that.

At other times, the location of the sentence within a paragraph or section of the decision is sufficient to warrant attribution to the trier of fact. For example, depending on the writing format of the tribunal, the decision might contain a section entitled “Reasons and Bases for the Decision,” or just “Discussion” or “Analysis.” Unqualified reasoning sentences within such sections are probably attributable to the tribunal, unless the sentence itself attributes the reasoning to a witness or party.
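The attribution cues described above can be sketched as a simple rule-based check. This is only an illustrative heuristic, not the method used in our experiments: the function name and the witness/party patterns are hypothetical, and only the two "the Board ..." phrases and the three section titles come from the examples in this article.

```python
import re

# Phrases that explicitly attribute reasoning to the tribunal
# (the two examples given in the article).
TRIBUNAL_CUES = [
    r"\bthe Board considers that\b",
    r"\bthe Board has taken into account that\b",
]

# Hypothetical phrases suggesting that the reasoning belongs to a
# witness or party rather than to the tribunal.
OTHER_SOURCE_CUES = [
    r"\bthe veteran (?:contends|argues|asserts) that\b",
    r"\bthe examiner (?:opined|concluded|stated) that\b",
]

# Section titles in which unqualified reasoning is probably the tribunal's.
REASONING_SECTIONS = {
    "reasons and bases for the decision",
    "discussion",
    "analysis",
}


def attributable_to_tribunal(sentence: str, section_title: str = "") -> bool:
    """Rough guess at whether stated reasoning can be attributed to the tribunal."""
    # 1. An explicit tribunal cue inside the sentence warrants attribution.
    if any(re.search(p, sentence, re.IGNORECASE) for p in TRIBUNAL_CUES):
        return True
    # 2. A sentence that attributes the reasoning to a witness or party does not.
    if any(re.search(p, sentence, re.IGNORECASE) for p in OTHER_SOURCE_CUES):
        return False
    # 3. Otherwise, fall back on the location of the sentence in the decision.
    return section_title.strip().lower() in REASONING_SECTIONS


print(attributable_to_tribunal("The Board considers that the opinion is credible."))
print(attributable_to_tribunal("The opinion is credible.", "Analysis"))
print(attributable_to_tribunal("The veteran contends that the opinion is flawed.", "Analysis"))
```

A check like this only addresses attribution. Whether the sentence states reasoning at all still depends on the content features listed earlier, and a trained model would weigh many more cues than these few patterns.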

Machine-Learning Results

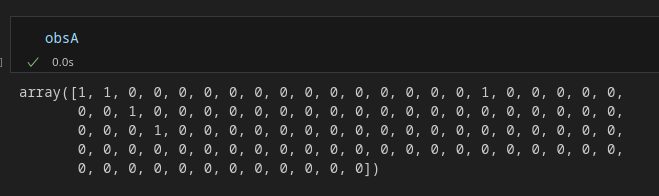

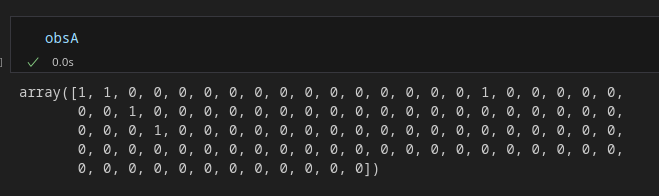

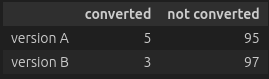

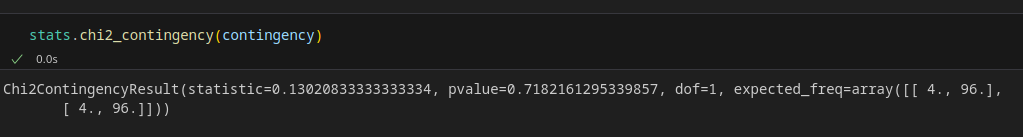

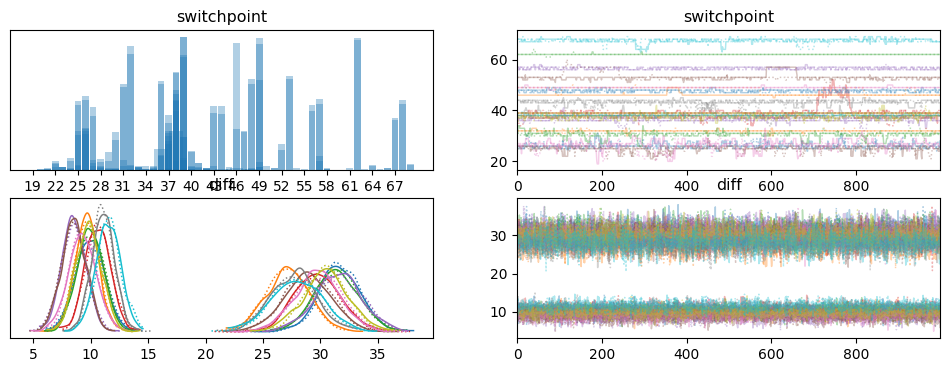

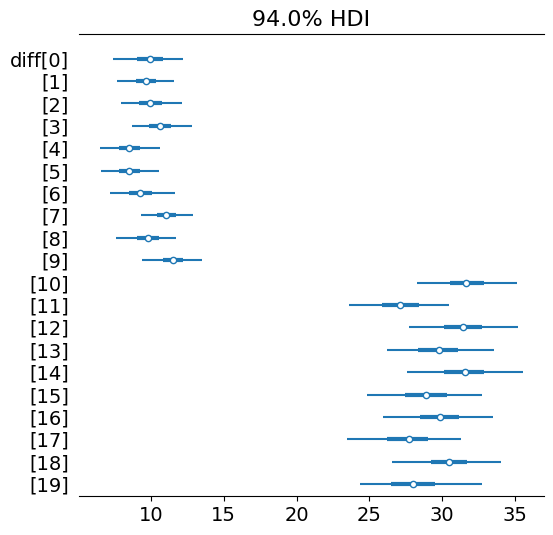

In our experiments, ML algorithms had more difficulty classifying reasoning sentences than any other sentence type. Nevertheless, trained models can still provide useful predictions about sentence type. We trained a Logistic Regression model on a dataset of 50 BVA decisions created by Hofstra Law’s Law, Logic & Technology Research Laboratory (LLT Lab). That dataset contains 5,797 manually labeled sentences after preprocessing, 710 of which are reasoning sentences. In a multi-class scenario, the model classified reasoning sentences with precision = 0.66 and recall = 0.52. We got comparable results with a neural network (“NN”) model that we later trained on the same BVA dataset and tested on 1,846 sentences. For reasoning sentences, the NN model’s precision was 0.66 and its recall was 0.51.

It is tempting to dismiss such ML performance as too low to be useful. Before doing so, it is important to investigate the nature of the errors made, and the practical cost of an error given a use case.

Practical Error Analysis

Of the 175 sentences that the NN model predicted to be reasoning sentences, 59 were misclassifications (precision = 0.66). Here the confusion was with several other types of sentences. Of the 59 sentences misclassified as reasoning sentences, 24 were actually evidence sentences, 15 were finding sentences, and 11 were legal-rule sentences; the remaining 9 belonged to other sentence types.
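The arithmetic behind these figures can be checked with a few lines of Python. The counts are the ones reported above; the variable names are only illustrative.

```python
# Counts reported for the neural network model on the test set.
predicted_reasoning = 175   # sentences the model labeled as reasoning
false_positives = 59        # of those, sentences that were not reasoning

true_positives = predicted_reasoning - false_positives
precision = true_positives / predicted_reasoning

# Actual types of the sentences wrongly labeled as reasoning.
fp_by_actual_type = {"evidence": 24, "finding": 15, "legal-rule": 11}
# False positives not covered by the three named types.
fp_other = false_positives - sum(fp_by_actual_type.values())

print(f"true positives: {true_positives}")
print(f"precision: {precision:.2f}")
for label, count in fp_by_actual_type.items():
    print(f"{label}: {count} ({count / false_positives:.0%} of false positives)")
print(f"other types: {fp_other}")
```

Evidence sentences account for the largest share of the false positives, which is consistent with the explanation that follows.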

Such confusion is understandable if the wording of a reasoning sentence closely tracks the evidence being evaluated, or the finding being supported, or the legal rule being applied. An evidence sentence might also use words or phrases that signify inference, but the inference reported in the sentence is not that of the trier of fact; it is part of the content of the evidence.

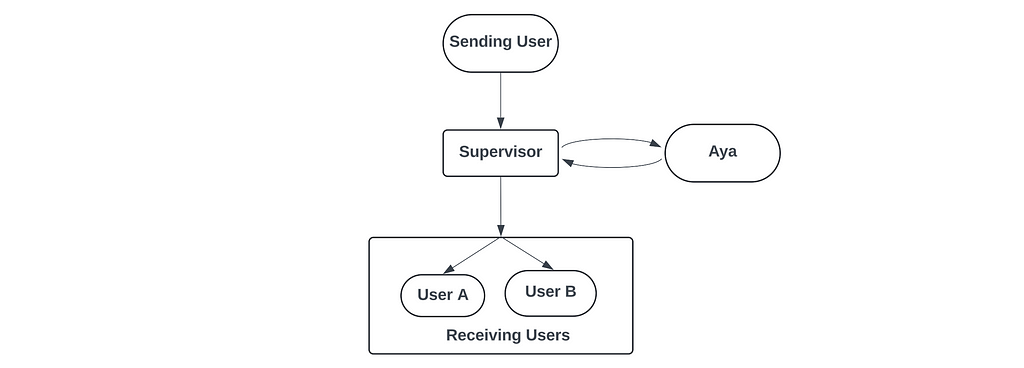

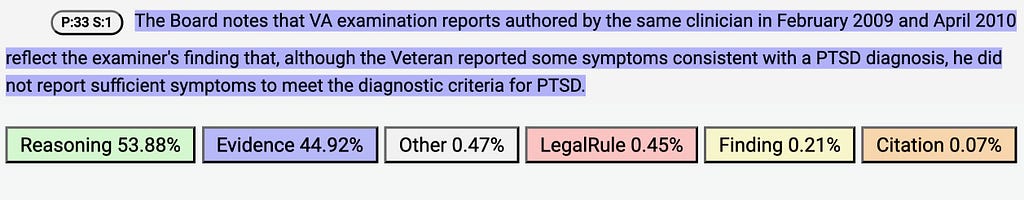

As an example of a false positive (or precision error), the trained NN model mistakenly predicted the following to be a reasoning sentence, when it is actually an evidence sentence. The model originally assigned a background color of green, which the expert reviewer manually changed to blue. The screenshot is taken from the software application LA-MPS, developed by Apprentice Systems.

While this is an evidence sentence that primarily recites the findings reflected in the reports of an examiner from the Department of Veterans Affairs (VA), the NN model classified the sentence as stating the reasoning of the tribunal itself, probably due in part to the occurrence of the words “The Board notes that.” The prediction scores of the model, however, indicate that the confusion was a reasonably close call (see below the sentence text): reasoning sentence (53.88%) vs. evidence sentence (44.92%).

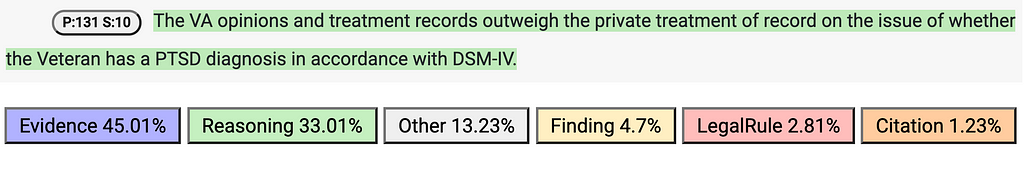

As an example of a false negative (or recall error), the NN model misclassified the following sentence as an evidence sentence, when clearly it is a reasoning sentence (the model originally assigned a background color of blue, which the expert reviewer manually changed to green):

This sentence refers to the evidence, but it does so in order to explain the tribunal’s reasoning that the probative value of the evidence from the VA outweighed that of the private treatment evidence. The prediction scores for the possible sentence roles (shown below the sentence text) show that the NN model erroneously predicted this to be an evidence sentence (score = 45.01%), although reasoning sentence also received a relatively high score (33.01%).
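Prediction scores like these suggest one way to surface close calls for human review: flag any sentence whose top two scores are near each other. The sketch below is an assumption on my part, not a feature of LA-MPS or of our models. The function names and the 15-point threshold are hypothetical, and only the two scores reported for each example are included.

```python
def top_two_margin(scores: dict[str, float]) -> tuple[str, str, float]:
    """Return the top label, the runner-up label, and the gap between their scores."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (top_label, top_score), (second_label, second_score) = ranked[0], ranked[1]
    return top_label, second_label, top_score - second_score


def needs_review(scores: dict[str, float], min_margin: float = 15.0) -> bool:
    """Flag a prediction when the top two scores are closer than min_margin points."""
    return top_two_margin(scores)[2] < min_margin


# Scores (in percent) reported for the two example sentences in this article.
false_positive_example = {"reasoning": 53.88, "evidence": 44.92}
false_negative_example = {"evidence": 45.01, "reasoning": 33.01}

for name, scores in [("false positive", false_positive_example),
                     ("false negative", false_negative_example)]:
    top, second, margin = top_two_margin(scores)
    print(f"{name}: predicted {top}, runner-up {second}, "
          f"margin {margin:.2f}, review: {needs_review(scores)}")
```

With this hypothetical threshold, both misclassified examples would be routed to a reviewer. A suitable threshold in practice would have to be tuned against labeled data.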

In fact, the wording of sentences can make their true classification highly ambiguous, even for lawyers. An example is whether to classify the following sentence as a legal-rule sentence or as a reasoning sentence:

No further development or corroborative evidence is required, provided that the claimed stressor is “consistent with the circumstances, conditions, or hardships of the veteran’s service.”

Given the immediate context within the decision, we manually labeled this sentence as stating a legal rule about when further development or corroborative evidence is required. But the sentence also contains wording consistent with a trier of fact’s reasoning within the specifics of a case. Based only on the sentence wording, therefore, even lawyers might reasonably classify this sentence in either category.

The cost of a classification error depends upon the use case and the type of error. For the purpose of extracting and presenting examples of legal reasoning, the precision and recall noted above might be acceptable to a user. A precision of 0.66 means that about 2 of every 3 sentences predicted to be reasoning sentences are correctly predicted, and a recall of 0.51 means that about half of the actual reasoning sentences are correctly detected. If high recall is not essential, and the goal is helpful illustration of past reasoning, such performance might be acceptable.

An error might be especially low-cost if it consists of confusing a reasoning sentence with an evidence sentence or legal-rule sentence that still contains insight about the reasoning at work in the case. If the user is interested in viewing different examples of possible arguments, then a sentence classified either as reasoning or evidence or legal rule might still be part of an illustrative argument pattern.

Such low precision and recall would be unacceptable, however, if the goal is to compile accurate statistics on the occurrence of arguments involving a particular kind of reasoning. Our confidence would be very low for descriptive or inferential statistics based on a sample drawn from a set of decisions in which the reasoning sentences were automatically labeled using such a model.
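A rough calculation illustrates the problem, using the NN model's reported figures (175 predicted reasoning sentences, 59 of them false positives, recall of 0.51). The number of actual reasoning sentences in the test set is not reported in this article, so the value below is only an estimate implied by the rounded recall.

```python
# Figures reported for the neural network model.
predicted_reasoning = 175
false_positives = 59
recall = 0.51  # rounded, so derived values are approximate

true_positives = predicted_reasoning - false_positives

# recall = true_positives / actual_reasoning, so the implied actual count is:
implied_actual_reasoning = true_positives / recall

# A naive count of predicted reasoning sentences misses part of the real total ...
undercount = 1 - predicted_reasoning / implied_actual_reasoning
# ... and part of what it does count is not reasoning at all.
contamination = false_positives / predicted_reasoning

print(f"implied actual reasoning sentences: about {implied_actual_reasoning:.0f}")
print(f"naive count understates the total by about {undercount:.0%}")
print(f"share of counted sentences that are not reasoning: {contamination:.0%}")
```

The two errors do not cancel out: the automatically labeled set is both too small and partly made up of other sentence types, so statistics computed from it inherit both distortions.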

Summary

In sum, reasoning sentences can contain extremely valuable information about the types of arguments and reasoning at work in a decision.

First, they signal patterns of reasoning recognized by triers of fact in past cases, and they can suggest possible patterns of argument in future cases. We can gather illustrative sets of similar cases, examine the use of evidence and legal rules in combination, and illustrate their success or failure as arguments.

Second, if we extract a set of reasoning sentences from a large dataset, we can survey them to develop a list of factors for evaluating individual items of evidence, and to develop soft rules for comparing conflicting items of evidence.

It is worth noting also that if our goal is automated argument mining at scale, then identifying and extracting whole arguments rests on more classifiers than merely those for reasoning sentences. I have suggested in other articles that automatic classifiers are adequate for certain use cases in labeling evidence sentences, legal-rule sentences, and finding sentences. Perhaps auto-labeling such sentence types in past decisions can help large language models to address the challenges in argument mining — that is, help them to summarize reasoning in past cases and to recommend arguments in new cases.

Reasoning as the Engine Driving Legal Arguments was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
