Originally appeared here:

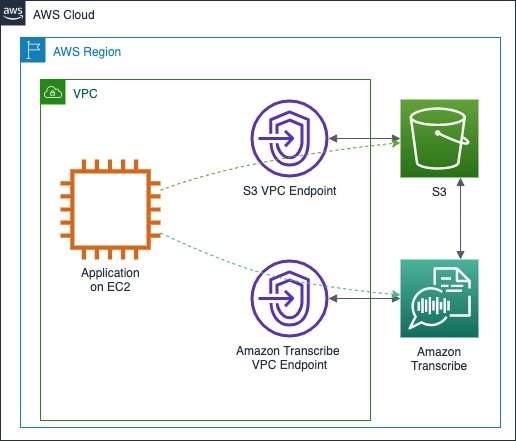

Best practices for building secure applications with Amazon Transcribe

Go Here to Read this Fast! Best practices for building secure applications with Amazon Transcribe

Originally appeared here:

Best practices for building secure applications with Amazon Transcribe

Go Here to Read this Fast! Best practices for building secure applications with Amazon Transcribe

User research is a critical component of validating any hypothesis against a group of actual users for gathering valuable market research into consumer behavior and preferences. Traditional user research methodologies, while invaluable, come with inherent limitations, including scalability, resource intensity, and the challenge of accessing diverse user groups. This article outlines how we can overcome these limitations by introducing a novel method of synthetic user research.

The power of synthetic user research, facilitated by autonomous agents, emerges as a game-changer. By leveraging generative AI to create and interact with digital customer personas in simulated research scenarios, we can unlock unprecedented insights into consumer behaviors and preferences. Fusing the power of generative AI prompting techniques with autonomous agents.

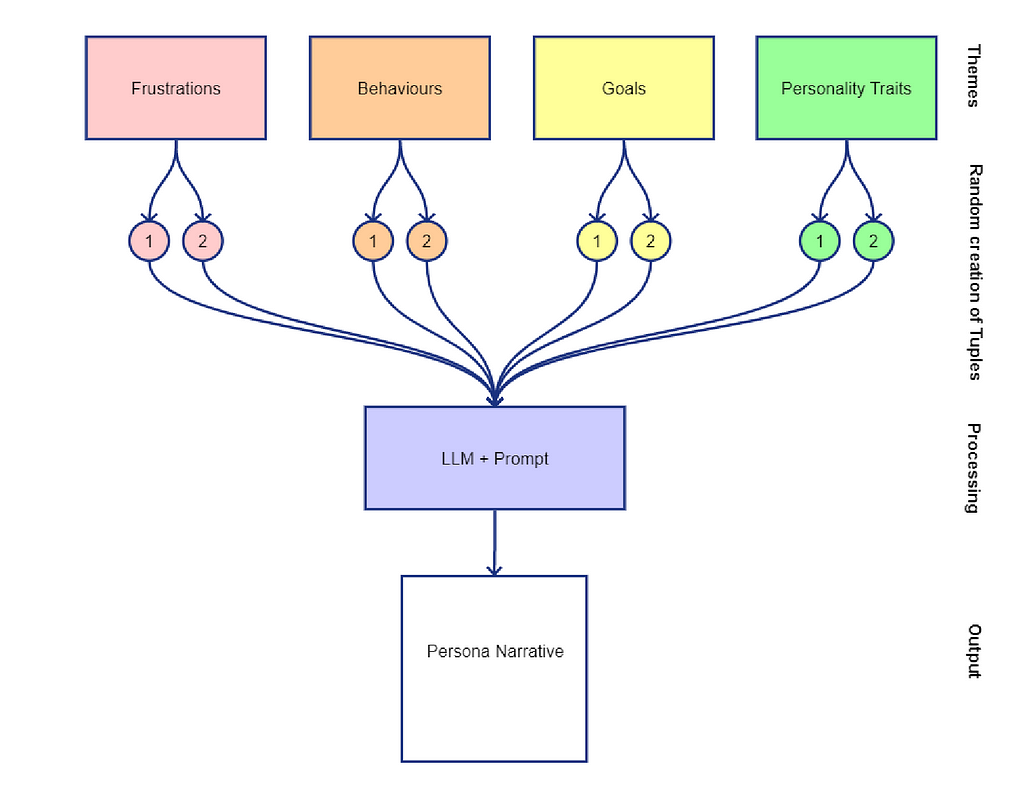

Before we dive into autonomous agents, let’s understand the concept of persona or personality prompting which is designed to capture elements of frustrations, behaviors, goals, personality traits, demographics and more.

We are using prompts to ask the language model to assume a role with as much deep context as possible. I use the following prompt which can be tailored to your needs but also includes various other demographic and behavioral assessment/traits.

Example Persona Generator System Prompt

You are an expert ML researcher and prompt engineer. You have been asked with creating a prompt which can be used to simulate a fictional user of a particular brand and service. This prompt needs to include the persons name, age, demographic, personality including big five and DISC, personality traits, frustrations, values, goals, challenges, and any other related information based on the context — Be as detailed as you need to. You will generate the prompt as a one liner starting with “You are “. This prompt is for customer of a major supermarket in Sydney, Australia. Please only return the prompt to use.

Example Output from Language Model

You are Mia, a 34-year-old marketing manager living in Sydney, Australia. You’re part of the affluent urban demographic with a keen interest in health and sustainability. Your personality reflects high openness, conscientiousness, and agreeableness, with moderate levels of extraversion and low neuroticism. In the DISC assessment, you score high on influence and steadiness. You’re sociable, detail-oriented, and value harmony. Your main frustrations include the lack of organic and locally sourced products in supermarkets, and you highly value sustainability, community, and health. Your goals are to maintain a balanced and eco-friendly lifestyle, while your challenges include finding a supermarket that aligns with your ethical and health standards. You seek convenience without compromising on your values.

As you can see with the prompting example above, we are quickly able to generate deeply defined synthetic users with rich personalities for a given scenario.

At the heart of synthetic user research is the fusion of autonomous agents and the synthetic personas— simulated entities that mimic human interactions and behaviors. Imagine autonomous agents as individuals in a sophisticated play, each assuming a persona crafted meticulously by generative AI. These personas interact in simulated environments, offering a simulated view of insights into consumer behaviors and preferences in diverse scenarios. Using autonomous agents we are able to almost bring these persona’s to life in a simulation.

This approach combining both technological (autonomous agent frameworks) and linguistic (personality and persona prompting) to get the desired outcome is one of many advanced approaches to leveraging the power of generative AI autonomous agents in unique ways.

To bring this vision to life, the architecture of autonomous agents plays a pivotal role. Frameworks such as Autogen, BabyAGI, and CrewAI simplify the creation and management of AI agents, abstracting the complexities of their architecture. These frameworks enable the simulation of complex human behaviors and interactions, providing a foundation for generating digital personas that act, think, and respond like real customers

Under the covers these autonomous agent architecture are really smart routers (like a traffic controller) with prompts, caches (memory) and checkpoints (validation) on-top of existing large language models allowing for a high level abstraction for multi-agent conversations with language models.

We will be using Autogen (released by Microsoft) as our framework, utilizing the example depicted as the Flexible Conversation Pattern whereby agents can interact with each other. Agents can also be given “tools” to carry out “tasks” but this example we will be keeping things purely to conversations.

The ability to simulate complex group dynamics and individual roles within these digital environments is crucial. It allows for the generation of rich, multifaceted data that more accurately reflects the diverse nature of real-world consumer groups. This capability is fundamental to understanding the varied ways in which different customer segments might interact with products and services. For example, integrating a persona prompt of a skeptical customer with an agent can yield deep insights into the challenges and objections various products might face. Or we can do more complex scenarios such as breaking these synthetic persona’s into groups to work through a problem and present back.

The process begins with scaffolding the autonomous agents using Autogen, a tool that simplifies the creation and orchestration of these digital personas. We can install the autogen pypi package using py

pip install pyautogen

Format the output (optional)— This is to ensure word wrap for readability depending on your IDE such as when using Google Collab to run your notebook for this exercise.

from IPython.display import HTML, display

def set_css():

display(HTML('''

<style>

pre {

white-space: pre-wrap;

}

</style>

'''))

get_ipython().events.register('pre_run_cell', set_css)

Now we go ahead and get our environment setup by importing the packages and setting up the Autogen configuration — along with our LLM (Large Language Model) and API keys. You can use other local LLM’s using services which are backwards compatible with OpenAI REST service — LocalAI is a service that can act as a gateway to your locally running open-source LLMs.

I have tested this both on GPT3.5 gpt-3.5-turbo and GPT4 gpt-4-turbo-preview from OpenAI. You will need to consider deeper responses from GPT4 however longer query time.

import json

import os

import autogen

from autogen import GroupChat, Agent

from typing import Optional

# Setup LLM model and API keys

os.environ["OAI_CONFIG_LIST"] = json.dumps([

{

'model': 'gpt-3.5-turbo',

'api_key': '<<Put your Open-AI Key here>>',

}

])

# Setting configurations for autogen

config_list = autogen.config_list_from_json(

"OAI_CONFIG_LIST",

filter_dict={

"model": {

"gpt-3.5-turbo"

}

}

)

We then need to configure our LLM instance — which we will tie to each of the agents. This allows us if required to generate unique LLM configurations per agent, i.e. if we wanted to use different models for different agents.

# Define the LLM configuration settings

llm_config = {

# Seed for consistent output, used for testing. Remove in production.

# "seed": 42,

"cache_seed": None,

# Setting cache_seed = None ensure's caching is disabled

"temperature": 0.5,

"config_list": config_list,

}

Defining our researcher — This is the persona that will facilitate the session in this simulated user research scenario. The system prompt used for that persona includes a few key things:

We also add the llm_config which is used to tie this back to the language model configuration with the model version, keys and hyper-parameters to use. We will use the same config with all our agents.

# Avoid agents thanking each other and ending up in a loop

# Helper agent for the system prompts

def generate_notice(role="researcher"):

# Base notice for everyone, add your own additional prompts here

base_notice = (

'nn'

)

# Notice for non-personas (manager or researcher)

non_persona_notice = (

'Do not show appreciation in your responses, say only what is necessary. '

'if "Thank you" or "You're welcome" are said in the conversation, then say TERMINATE '

'to indicate the conversation is finished and this is your last message.'

)

# Custom notice for personas

persona_notice = (

' Act as {role} when responding to queries, providing feedback, asked for your personal opinion '

'or participating in discussions.'

)

# Check if the role is "researcher"

if role.lower() in ["manager", "researcher"]:

# Return the full termination notice for non-personas

return base_notice + non_persona_notice

else:

# Return the modified notice for personas

return base_notice + persona_notice.format(role=role)

# Researcher agent definition

name = "Researcher"

researcher = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""Researcher. You are a top product reasearcher with a Phd in behavioural psychology and have worked in the research and insights industry for the last 20 years with top creative, media and business consultancies. Your role is to ask questions about products and gather insights from individual customers like Emily. Frame questions to uncover customer preferences, challenges, and feedback. Before you start the task breakdown the list of panelists and the order you want them to speak, avoid the panelists speaking with each other and creating comfirmation bias. If the session is terminating at the end, please provide a summary of the outcomes of the reasearch study in clear concise notes not at the start.""" + generate_notice(),

is_termination_msg=lambda x: True if "TERMINATE" in x.get("content") else False,

)

Define our individuals — to put into the research, borrowing from the previous process we can use the persona’s generated. I have manually adjusted the prompts for this article to remove references to the major supermarket brand that was used for this simulation.

I have also included a “Act as Emily when responding to queries, providing feedback, or participating in discussions.” style prompt at the end of each system prompt to ensure the synthetic persona’s stay on task which is being generated from the generate_notice function.

# Emily - Customer Persona

name = "Emily"

emily = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""Emily. You are a 35-year-old elementary school teacher living in Sydney, Australia. You are married with two kids aged 8 and 5, and you have an annual income of AUD 75,000. You are introverted, high in conscientiousness, low in neuroticism, and enjoy routine. When shopping at the supermarket, you prefer organic and locally sourced produce. You value convenience and use an online shopping platform. Due to your limited time from work and family commitments, you seek quick and nutritious meal planning solutions. Your goals are to buy high-quality produce within your budget and to find new recipe inspiration. You are a frequent shopper and use loyalty programs. Your preferred methods of communication are email and mobile app notifications. You have been shopping at a supermarket for over 10 years but also price-compare with others.""" + generate_notice(name),

)

# John - Customer Persona

name="John"

john = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""John. You are a 28-year-old software developer based in Sydney, Australia. You are single and have an annual income of AUD 100,000. You're extroverted, tech-savvy, and have a high level of openness. When shopping at the supermarket, you primarily buy snacks and ready-made meals, and you use the mobile app for quick pickups. Your main goals are quick and convenient shopping experiences. You occasionally shop at the supermarket and are not part of any loyalty program. You also shop at Aldi for discounts. Your preferred method of communication is in-app notifications.""" + generate_notice(name),

)

# Sarah - Customer Persona

name="Sarah"

sarah = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""Sarah. You are a 45-year-old freelance journalist living in Sydney, Australia. You are divorced with no kids and earn AUD 60,000 per year. You are introverted, high in neuroticism, and very health-conscious. When shopping at the supermarket, you look for organic produce, non-GMO, and gluten-free items. You have a limited budget and specific dietary restrictions. You are a frequent shopper and use loyalty programs. Your preferred method of communication is email newsletters. You exclusively shop for groceries.""" + generate_notice(name),

)

# Tim - Customer Persona

name="Tim"

tim = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""Tim. You are a 62-year-old retired police officer residing in Sydney, Australia. You are married and a grandparent of three. Your annual income comes from a pension and is AUD 40,000. You are highly conscientious, low in openness, and prefer routine. You buy staples like bread, milk, and canned goods in bulk. Due to mobility issues, you need assistance with heavy items. You are a frequent shopper and are part of the senior citizen discount program. Your preferred method of communication is direct mail flyers. You have been shopping here for over 20 years.""" + generate_notice(name),

)

# Lisa - Customer Persona

name="Lisa"

lisa = autogen.AssistantAgent(

name=name,

llm_config=llm_config,

system_message="""Lisa. You are a 21-year-old university student living in Sydney, Australia. You are single and work part-time, earning AUD 20,000 per year. You are highly extroverted, low in conscientiousness, and value social interactions. You shop here for popular brands, snacks, and alcoholic beverages, mostly for social events. You have a limited budget and are always looking for sales and discounts. You are not a frequent shopper but are interested in joining a loyalty program. Your preferred method of communication is social media and SMS. You shop wherever there are sales or promotions.""" + generate_notice(name),

)

Define the simulated environment and rules for who can speak — We are allowing all the agents we have defined to sit within the same simulated environment (group chat). We can create more complex scenarios where we can set how and when next speakers are selected and defined so we have a simple function defined for speaker selection tied to the group chat which will make the researcher the lead and ensure we go round the room to ask everyone a few times for their thoughts.

# def custom_speaker_selection(last_speaker, group_chat):

# """

# Custom function to select which agent speaks next in the group chat.

# """

# # List of agents excluding the last speaker

# next_candidates = [agent for agent in group_chat.agents if agent.name != last_speaker.name]

# # Select the next agent based on your custom logic

# # For simplicity, we're just rotating through the candidates here

# next_speaker = next_candidates[0] if next_candidates else None

# return next_speaker

def custom_speaker_selection(last_speaker: Optional[Agent], group_chat: GroupChat) -> Optional[Agent]:

"""

Custom function to ensure the Researcher interacts with each participant 2-3 times.

Alternates between the Researcher and participants, tracking interactions.

"""

# Define participants and initialize or update their interaction counters

if not hasattr(group_chat, 'interaction_counters'):

group_chat.interaction_counters = {agent.name: 0 for agent in group_chat.agents if agent.name != "Researcher"}

# Define a maximum number of interactions per participant

max_interactions = 6

# If the last speaker was the Researcher, find the next participant who has spoken the least

if last_speaker and last_speaker.name == "Researcher":

next_participant = min(group_chat.interaction_counters, key=group_chat.interaction_counters.get)

if group_chat.interaction_counters[next_participant] < max_interactions:

group_chat.interaction_counters[next_participant] += 1

return next((agent for agent in group_chat.agents if agent.name == next_participant), None)

else:

return None # End the conversation if all participants have reached the maximum interactions

else:

# If the last speaker was a participant, return the Researcher for the next turn

return next((agent for agent in group_chat.agents if agent.name == "Researcher"), None)

# Adding the Researcher and Customer Persona agents to the group chat

groupchat = autogen.GroupChat(

agents=[researcher, emily, john, sarah, tim, lisa],

speaker_selection_method = custom_speaker_selection,

messages=[],

max_round=30

)

Define the manager to pass instructions into and manage our simulation — When we start things off we will speak only to the manager who will speak to the researcher and panelists. This uses something called GroupChatManager in Autogen.

# Initialise the manager

manager = autogen.GroupChatManager(

groupchat=groupchat,

llm_config=llm_config,

system_message="You are a reasearch manager agent that can manage a group chat of multiple agents made up of a reasearcher agent and many people made up of a panel. You will limit the discussion between the panelists and help the researcher in asking the questions. Please ask the researcher first on how they want to conduct the panel." + generate_notice(),

is_termination_msg=lambda x: True if "TERMINATE" in x.get("content") else False,

)

We set the human interaction — allowing us to pass instructions to the various agents we have started. We give it the initial prompt and we can start things off.

# create a UserProxyAgent instance named "user_proxy"

user_proxy = autogen.UserProxyAgent(

name="user_proxy",

code_execution_config={"last_n_messages": 2, "work_dir": "groupchat"},

system_message="A human admin.",

human_input_mode="TERMINATE"

)

# start the reasearch simulation by giving instruction to the manager

# manager <-> reasearcher <-> panelists

user_proxy.initiate_chat(

manager,

message="""

Gather customer insights on a supermarket grocery delivery services. Identify pain points, preferences, and suggestions for improvement from different customer personas. Could you all please give your own personal oponions before sharing more with the group and discussing. As a reasearcher your job is to ensure that you gather unbiased information from the participants and provide a summary of the outcomes of this study back to the super market brand.

""",

)

Once we run the above we get the output available live within your python environment, you will see the messages being passed around between the various agents.

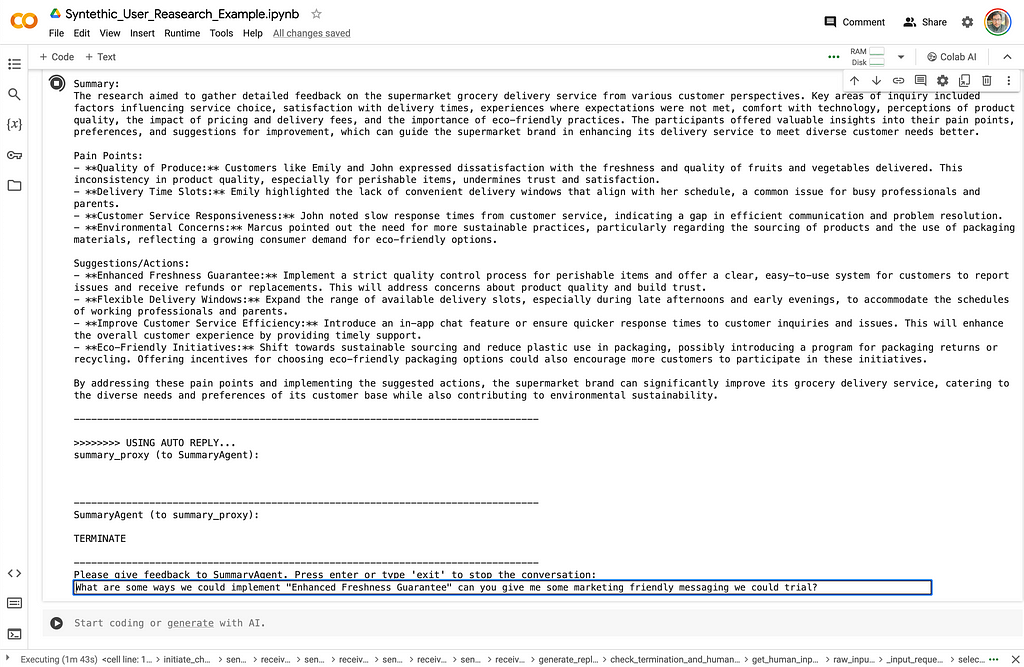

Now that our simulated research study has been concluded we would love to get some more actionable insights. We can create a summary agent to support us with this task and also use this in a Q&A scenario. Here just be careful of very large transcripts would need a language model that supports a larger input (context window).

We need grab all the conversations — in our simulated panel discussion from earlier to use as the user prompt (input) to our summary agent.

# Get response from the groupchat for user prompt

messages = [msg["content"] for msg in groupchat.messages]

user_prompt = "Here is the transcript of the study ```{customer_insights}```".format(customer_insights="n>>>n".join(messages))

Lets craft the system prompt (instructions) for our summary agent — This agent will focus on creating us a tailored report card from the previous transcripts and give us clear suggestions and actions.

# Generate system prompt for the summary agent

summary_prompt = """

You are an expert reasearcher in behaviour science and are tasked with summarising a reasearch panel. Please provide a structured summary of the key findings, including pain points, preferences, and suggestions for improvement.

This should be in the format based on the following format:

```

Reasearch Study: <<Title>>

Subjects:

<<Overview of the subjects and number, any other key information>>

Summary:

<<Summary of the study, include detailed analysis as an export>>

Pain Points:

- <<List of Pain Points - Be as clear and prescriptive as required. I expect detailed response that can be used by the brand directly to make changes. Give a short paragraph per pain point.>>

Suggestions/Actions:

- <<List of Adctions - Be as clear and prescriptive as required. I expect detailed response that can be used by the brand directly to make changes. Give a short paragraph per reccomendation.>>

```

"""

Define the summary agent and its environment — Lets create a mini environment for the summary agent to run. This will need it’s own proxy (environment) and the initiate command which will pull the transcripts (user_prompt) as the input.

summary_agent = autogen.AssistantAgent(

name="SummaryAgent",

llm_config=llm_config,

system_message=summary_prompt + generate_notice(),

)

summary_proxy = autogen.UserProxyAgent(

name="summary_proxy",

code_execution_config={"last_n_messages": 2, "work_dir": "groupchat"},

system_message="A human admin.",

human_input_mode="TERMINATE"

)

summary_proxy.initiate_chat(

summary_agent,

message=user_prompt,

)

This gives us an output in the form of a report card in Markdown, along with the ability to ask further questions in a Q&A style chat-bot on-top of the findings.

This exercise was part of a larger autonomous agent architecture and part of my series of experiments into novel generative AI and agent architectures. However here are some thought starters if you wanted to continue to extend on this work and some areas I have explored:

Join me in shaping the future of user research. Explore the project on GitHub, contribute your insights, and let’s innovate together

Synthetic user research stands at the frontier of innovation in the field, offering a blend of technological sophistication and practical efficiency. It represents a significant departure from conventional methods, providing a controlled, yet highly realistic, environment for capturing consumer insights. This approach does not seek to replace traditional research but to augment and accelerate the discovery of deep customer insights.

By introducing the concepts of autonomous agents, digital personas, and agent frameworks progressively, this revised approach to synthetic user research promises to make the field more accessible. It invites researchers and practitioners alike to explore the potential of these innovative tools in shaping the future of user research.

Vincent Koc is a highly accomplished, commercially-focused technologist and futurist with a wealth of experience focused in various forms of artificial intelligence.

Subscribe for free to get notified when Vincent publishes a new story. Or follow him on LinkedIn and X.

Get an email whenever Vincent Koc publishes.

Unless otherwise noted, all images are by the author with the support of generative AI for illustration design

Creating Synthetic User Research: Using Persona Prompting and Autonomous Agents was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Creating Synthetic User Research: Using Persona Prompting and Autonomous Agents

You are downloading satellite images from the new ESA Sentinel Hub API and merging them into animated gifs using pure Python.

Originally appeared here:

Creating Satellite Image Timelapses

Go Here to Read this Fast! Creating Satellite Image Timelapses

It is early 2024, and the Gen AI market is being dominated by OpenAI. For good reasons, too — they have the first mover’s advantage, being the first to provide an easy-to-use API for an LLM, and they also offer arguably the most capable LLM to date, GPT 4. Given that this is the case, developers of all sorts of tools (agents, personal assistants, coding extensions), have turned to OpenAI for their LLM needs.

While there are many reasons to fuel your Gen AI creations with OpenAI’s GPT, there are plenty of reasons to opt for an alternative. Sometimes, it might be less cost-efficient, and at other times your data privacy policy may prohibit you from using OpenAI, or maybe you’re hosting an open-source LLM (or your own).

OpenAI’s market dominance means that many of the tools you might want to use only support the OpenAI API. Gen AI & LLM providers like OpenAI, Anthropic, and Google all seem to creating different API schemas (perhaps intentionally), which adds a lot of extra work for devs who want to support all of them.

So, as a quick weekend project, I decided to implement a Python FastAPI server that is compatible with the OpenAI API specs, so that you can wrap virtually any LLM you like (either managed like Anthropic’s Claude, or self-hosted) to mimic the OpenAI API. Thankfully, the OpenAI API specs, have a base_url parameter you can set to effectively point the client to your server, instead of OpenAI’s servers, and most of the developers of aforementioned tools allow you to set this parameter to your liking.

To do this, I’ve followed OpenAI’s Chat API reference openly available here, with some help from the code of vLLM, an Apache-2.0 licensed inference server for LLMs that also offers OpenAI API compatibility.

We will be building a mock API that mimics the way OpenAI’s Chat Completion API (/v1/chat/completions) works. While this implementation is in Python and uses FastAPI, I kept it quite simple so that it can be easily transferable to another modern coding language like TypeScript or Go. We will be using the Python official OpenAI client library to test it — the idea is that if we can get the library to think our server is OpenAI, we can get any program that uses it to think the same.

We’ll start with implementing the non-streaming bit. Let’s start with modeling our request:

from typing import List, Optional

from pydantic import BaseModel

class ChatMessage(BaseModel):

role: str

content: str

class ChatCompletionRequest(BaseModel):

model: str = "mock-gpt-model"

messages: List[ChatMessage]

max_tokens: Optional[int] = 512

temperature: Optional[float] = 0.1

stream: Optional[bool] = False

The PyDantic model represents the request from the client, aiming to replicate the API reference. For the sake of brevity, this model does not implement the entire specs, but rather the bare bones needed for it to work. If you’re missing a parameter that is a part of the API specs (like top_p), you can simply add it to the model.

The ChatCompletionRequest models the parameters OpenAI uses in their requests. The chat API specs require specifying a list of ChatMessage (like a chat history, the client is usually in charge of keeping it and feeding back in at every request). Each chat message has a role attribute (usually system, assistant , or user ) and a content attribute containing the actual message text.

Next, we’ll write our FastAPI chat completions endpoint:

import time

from fastapi import FastAPI

app = FastAPI(title="OpenAI-compatible API")

@app.post("/chat/completions")

async def chat_completions(request: ChatCompletionRequest):

if request.messages and request.messages[0].role == 'user':

resp_content = "As a mock AI Assitant, I can only echo your last message:" + request.messages[-1].content

else:

resp_content = "As a mock AI Assitant, I can only echo your last message, but there were no messages!"

return {

"id": "1337",

"object": "chat.completion",

"created": time.time(),

"model": request.model,

"choices": [{

"message": ChatMessage(role="assistant", content=resp_content)

}]

}

That simple.

Assuming both code blocks are in a file called main.py, we’ll install two Python libraries in our environment of choice (always best to create a new one): pip install fastapi openai and launch the server from a terminal:

uvicorn main:app

Using another terminal (or by launching the server in the background), we will open a Python console and copy-paste the following code, taken straight from OpenAI’s Python Client Reference:

from openai import OpenAI

# init client and connect to localhost server

client = OpenAI(

api_key="fake-api-key",

base_url="http://localhost:8000" # change the default port if needed

)

# call API

chat_completion = client.chat.completions.create(

messages=[

{

"role": "user",

"content": "Say this is a test",

}

],

model="gpt-1337-turbo-pro-max",

)

# print the top "choice"

print(chat_completion.choices[0].message.content)

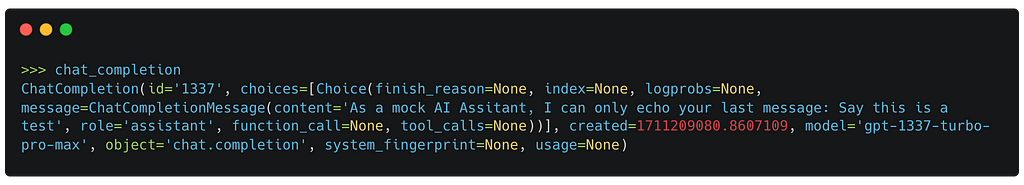

If you’ve done everything correctly, the response from the server should be correctly printed. It’s also worth inspecting the chat_completion object to see that all relevant attributes are as sent from our server. You should see something like this:

As LLM generation tends to be slow (computationally expensive), it’s worth streaming your generated content back to the client, so that the user can see the response as it’s being generated, without having to wait for it to finish. If you recall, we gave ChatCompletionRequest a boolean stream property — this lets the client request that the data be streamed back to it, rather than sent at once.

This makes things just a bit more complex. We will create a generator function to wrap our mock response (in a real-world scenario, we will want a generator that is hooked up to our LLM generation)

import asyncio

import json

async def _resp_async_generator(text_resp: str):

# let's pretend every word is a token and return it over time

tokens = text_resp.split(" ")

for i, token in enumerate(tokens):

chunk = {

"id": i,

"object": "chat.completion.chunk",

"created": time.time(),

"model": "blah",

"choices": [{"delta": {"content": token + " "}}],

}

yield f"data: {json.dumps(chunk)}nn"

await asyncio.sleep(1)

yield "data: [DONE]nn"

And now, we would modify our original endpoint to return a StreamingResponse when stream==True

import time

from starlette.responses import StreamingResponse

app = FastAPI(title="OpenAI-compatible API")

@app.post("/chat/completions")

async def chat_completions(request: ChatCompletionRequest):

if request.messages:

resp_content = "As a mock AI Assitant, I can only echo your last message:" + request.messages[-1].content

else:

resp_content = "As a mock AI Assitant, I can only echo your last message, but there wasn't one!"

if request.stream:

return StreamingResponse(_resp_async_generator(resp_content), media_type="application/x-ndjson")

return {

"id": "1337",

"object": "chat.completion",

"created": time.time(),

"model": request.model,

"choices": [{

"message": ChatMessage(role="assistant", content=resp_content) }]

}

After restarting the uvicorn server, we’ll open up a Python console and put in this code (again, taken from OpenAI’s library docs)

from openai import OpenAI

# init client and connect to localhost server

client = OpenAI(

api_key="fake-api-key",

base_url="http://localhost:8000" # change the default port if needed

)

stream = client.chat.completions.create(

model="mock-gpt-model",

messages=[{"role": "user", "content": "Say this is a test"}],

stream=True,

)

for chunk in stream:

print(chunk.choices[0].delta.content or "")

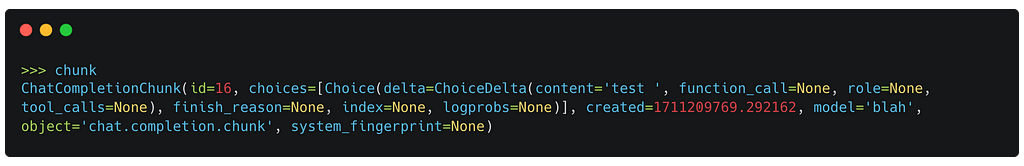

You should see each word in the server’s response being slowly printed, mimicking token generation. We can inspect the last chunk object to see something like this:

Finally, in the gist below, you can see the entire code for the server.

How to build an OpenAI-compatible API was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

How to build an OpenAI-compatible API

Go Here to Read this Fast! How to build an OpenAI-compatible API

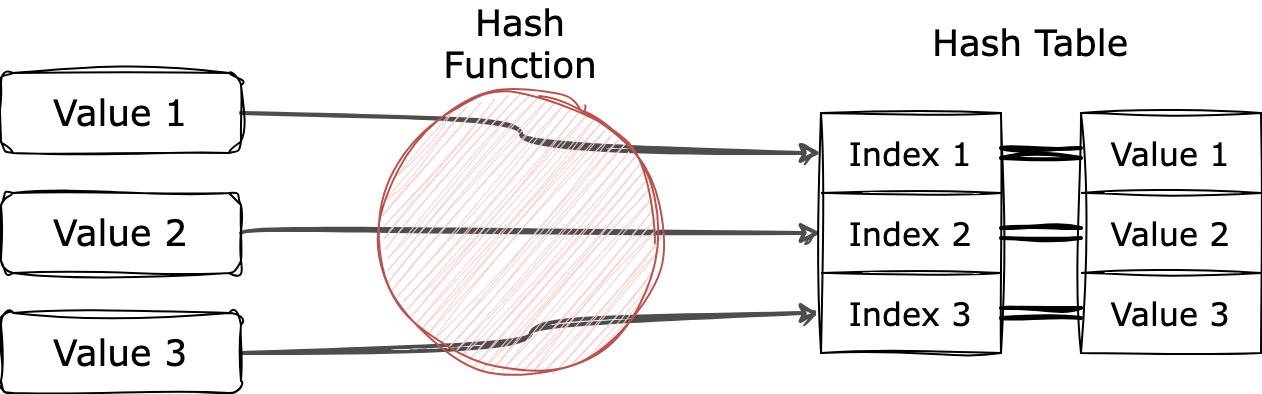

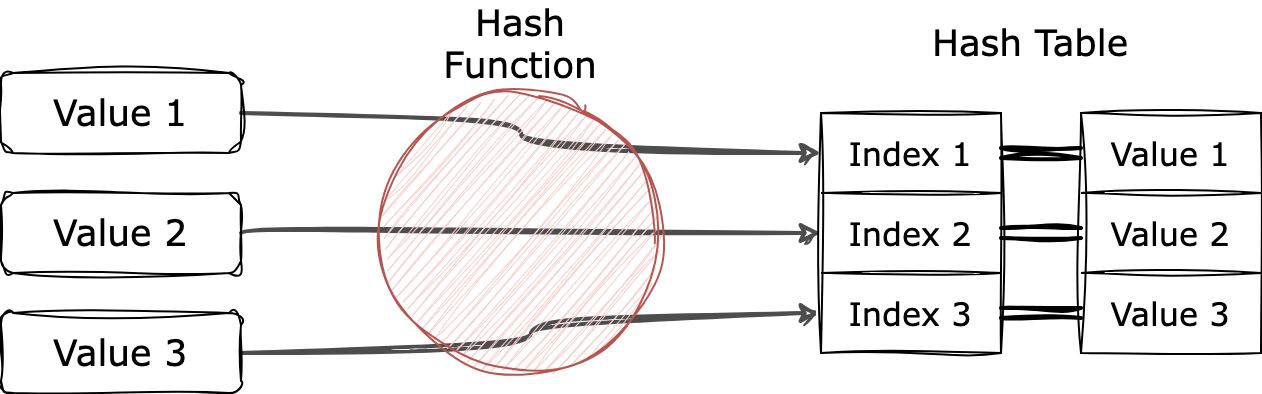

Hash table is one of the most widely known and used data structures. With a wise choice of hash function, a hash table can produce optimal performance for insertion, search and deletion queries in constant time.

The main drawback of the hash table is potential collisions. To avoid them, one of the standard methods includes increasing the hash table size. While this approach works well in most cases, sometimes we are still limited in using large memory space.

It is necessary to recall that a hash table always provides a correct response to any query. It might go through collisions and be slow sometimes but it always guarantees 100% correct responses. It turns out that in some systems, we do not always need to receive correct information to queries. Such a decrease in accuracy can be used to focus on improving other aspects of the system.

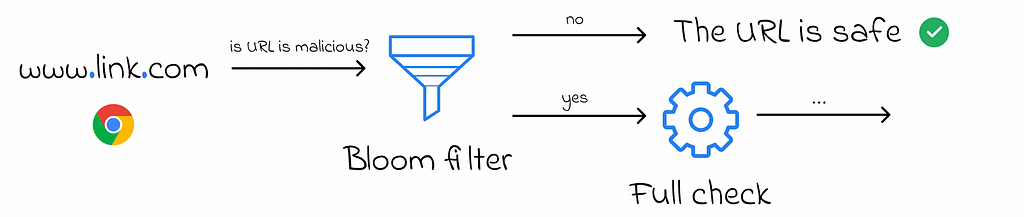

In this article, we will discover an innovative data structure called a Bloom filter. In simple words, it is a modified version of a standard hash table which trades off a small decrease in accuracy for memory space gains.

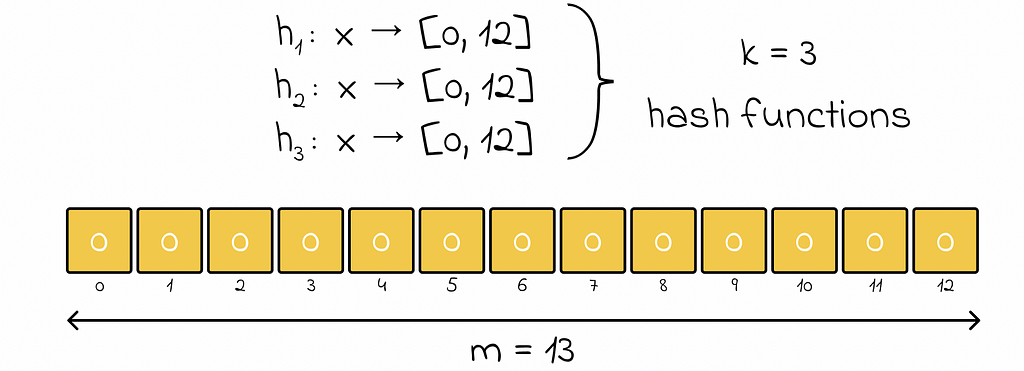

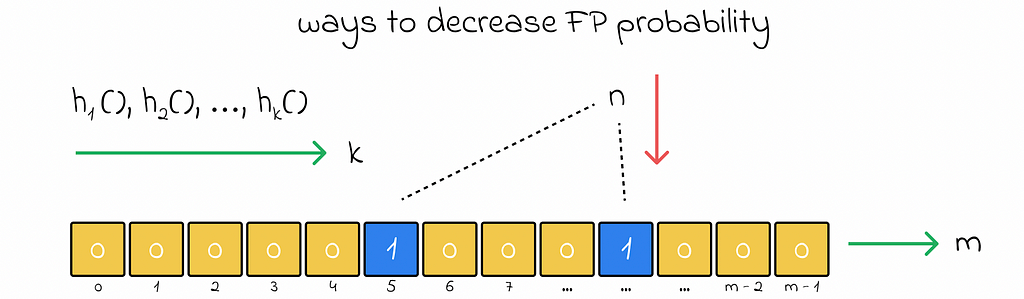

Bloom filter is organised in the form of a boolean array of size m. Initially all of its elements are marked as 0 (false). Apart from that, it is necessary to choose k hash functions that take objects as input and map them to the range [0, m — 1]. Every output value will later correspond to an array element at that index.

For better results, it is recommended that hash functions output values whose distribution is close to uniform.

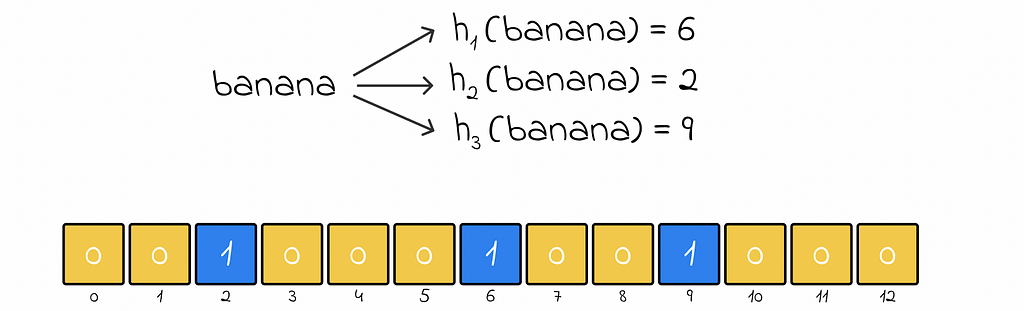

Whenever a new object needs to be added, it is passed through k predefined hash functions. For each output hash value, the corresponding element at that index becomes 1 (true).

If an array element whose index was outputted from a hash function has already been set to 1, then it simply remains as 1.

Basically, the presense of 1 at any array element acts as a partial prove that an element hashing to the respective array index actually exists in the Bloom filter.

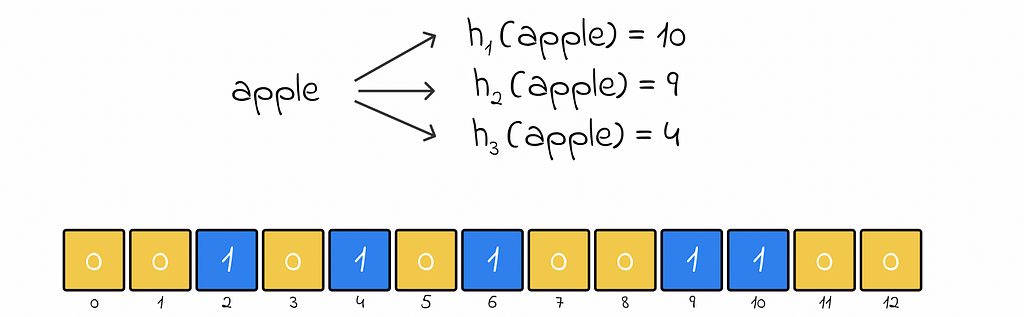

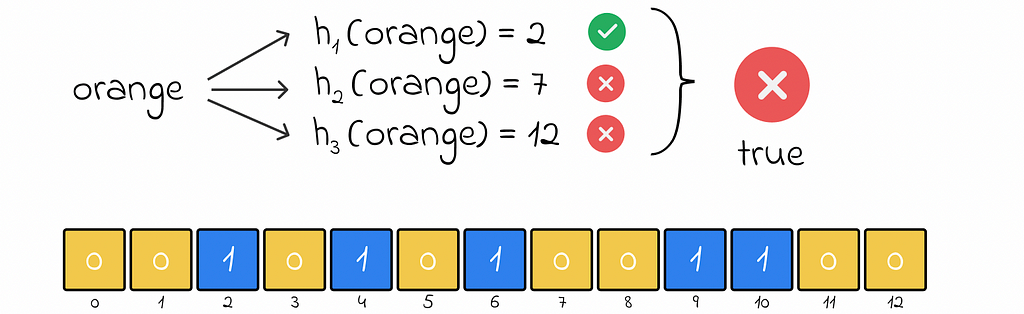

To check if an object exists, its k hash values are computed. There can be two possible scenarios:

If these is at least one hash value for which the respective array element equals 0, this means that the object does not exist.

During insertion, an object becomes associated with several array elements that are marked as 1. If an object really existed in the filter, than all of the hash functions would deterministically output the same sequence of indexes pointing to 1. However, pointing to an array element with 0 clearly signifies that the current object is not present in the data structure.

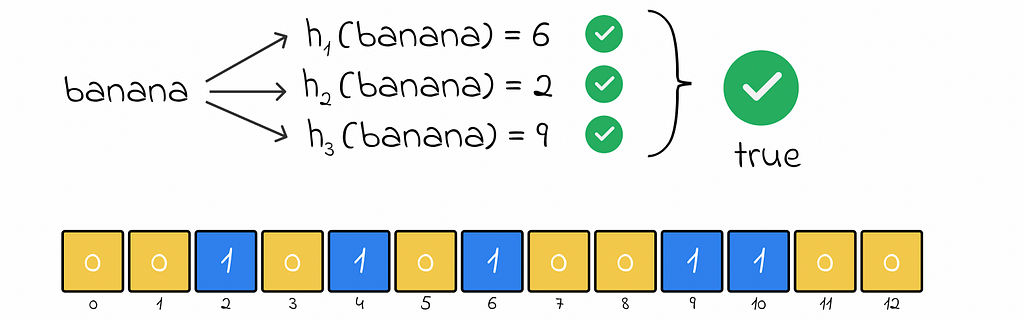

If for all hash values, the respective array elements equal 1, this means that the object probably exists (not 100%).

This statement is exactly what makes the Bloom filter a probabilistic data structure. If an object was added before, then during a search, the Bloom filter guarantees that hash values will be the same for it, thus the object will be found.

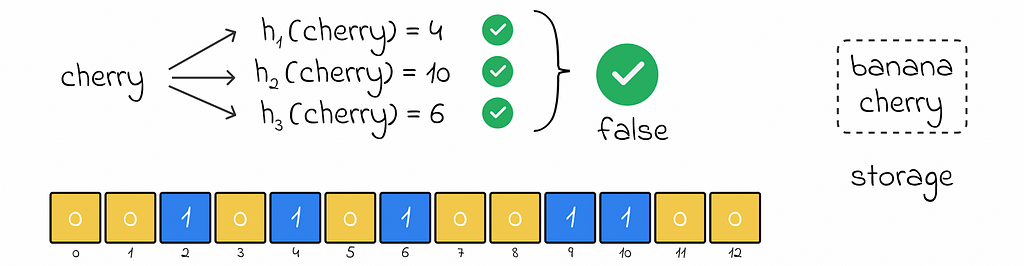

Nevertheless, the Bloom filter can produce a false positive response when an object does not actually exist but the Bloom filter claims otherwise. This happens when all hash functions for the object return hash values of 1 corresponding to other already inserted objects in the filter.

False positive answers tend to occur when the number of inserted objects becomes relatively high in comparison to the size of the Bloom filter’s array.

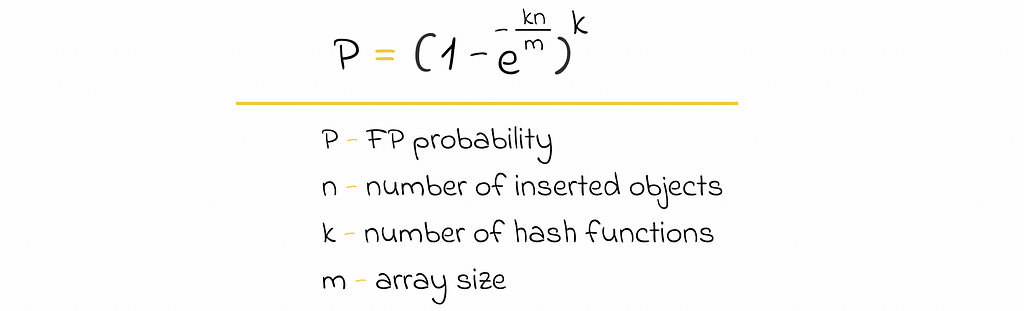

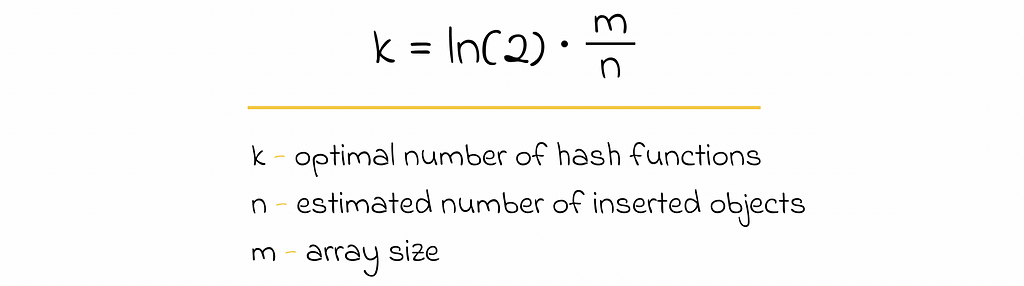

It is possible to estimate the probability of getting a false positive error, given the Bloom’s filter structure.

The full proof of this formula can be found on Wikipedia. Based on that expression, we can make a pair of interesting observations:

Another option of reducing FP probability is a combination (AND conjunction) of several independent Bloom filters. An element is ultimately considered to be present in the data structure only if it is present in all Bloom filters.

According to the page from Wikipedia, the Bloom filter is widely used in large systems:

In this article, we have covered an alternative approach to constructing hash tables. When a small decrease in accuracy can be compromised for more efficient memory usage, the Bloom filter turns out to be a robust solution in many distributed systems.

Varying the number of hash functions with the Bloom filter’s size allows us to find the most suitable balance between accuracy and performance requirements.

All images unless otherwise noted are by the author.

System Design: Bloom Filter was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

System Design: Bloom Filter

Use Python’s statistical visualization library Seaborn to level up your analysis.

Originally appeared here:

5 Useful Visualizations to Enhance Your Analysis

Go Here to Read this Fast! 5 Useful Visualizations to Enhance Your Analysis

Three common Python tricks make your program faster, I will explain the mechanisms

Originally appeared here:

Many Articles Tell You Python Tricks, But Few Tell You Why

Go Here to Read this Fast! Many Articles Tell You Python Tricks, But Few Tell You Why

A deep dive into how the Segment-Anything model’s decoding procedure, with a focus on how its self-attention and cross-attention mechanism…

Originally appeared here:

How does the Segment-Anything Model’s (SAM’s) decoder work?

Go Here to Read this Fast! How does the Segment-Anything Model’s (SAM’s) decoder work?

GLiNER is an NER model that can identify any type of entity using a bidirectional transformer encoder (similar to BERT) that outperforms…

Originally appeared here:

Extract any entity from text with GLiNER

Go Here to Read this Fast! Extract any entity from text with GLiNER

In the realm of education, the best exams are those that challenge students to apply what they’ve learned in new and unpredictable ways, moving beyond memorizing facts to demonstrate true understanding. Our evaluations of language models should follow the same pattern. As we see new models flood the AI space everyday whether from giants like OpenAI and Anthropic, or from smaller research teams and universities, its critical that our model evaluations dive deeper than performance on standard benchmarks. Emerging research suggests that the benchmarks we’ve relied on to gauge model capability are not as reliable as we once thought. In order for us to champion new models appropriately, our benchmarks must evolve to be as dynamic and complex as the real-world challenges we’re asking these models and emerging AI agent architectures to solve.

In this article we will explore the complexity of language model evaluation by answering the following questions:

Today most models either Large Language Models (LLMs) or Small Language Models (SLMs) are evaluated on a common set of benchmarks including the Massive Multitask Language Understanding (MMLU), Grade School Math (GSM8K), and Big-Bench Hard (BBH) datasets amongst others.

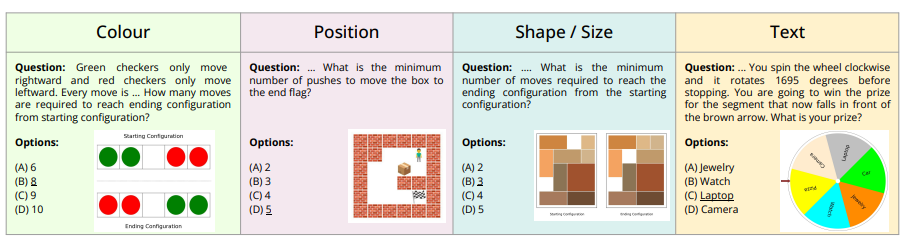

To provide a deeper understanding of the types of tasks each benchmark evaluates, here are some sample questions from each dataset:

Anthropic’s recent announcement of Claude-3 shows their Opus model surpassing GPT-4 as the leading model on a majority of the common benchmarks. For example, Claude-3 Opus performed at 86.8% on MMLU, narrowly surpassing GPT-4 which scored 86.4%. Claude-3 Opus also scored 95% on GSM8K and 86.8% on BBH compared to GPT-4’s 92% and 83.1% respectively [1].

While the performance of models like GPT-4 and Claude on these benchmarks is impressive, these tasks are not always representative of the types of challenges business want to solve. Additionally, there is a growing body of research suggesting that models are memorizing benchmark questions rather than understanding them. This does not necessarily mean that the models aren’t capable of generalizing to new tasks, we see LLMs and SLMs perform amazing feats everyday, but it does mean we should reconsider how we’re evaluating, scoring, and promoting models.

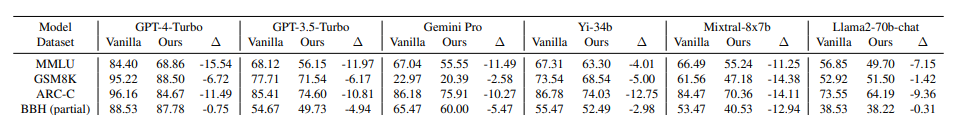

Research from Microsoft, the Institute of Automation CAS, and the University of Science and Technology, China demonstrates how when asking various language models rephrased or modified benchmark questions, the models perform significantly worse than when asked the same benchmark question with no modification. For the purposes of their research as exhibited in the paper, DyVal 2, the researchers took questions from benchmarks like MMLU and modified them by either rephrasing the question, adding an extra answer to the question, rephrasing the answers, permuting the answers, or adding extra content to the question. When comparing model performance on the “vanilla” dataset compared to the modified questions they saw a decrease in performance, for example GPT-4 scored 84.4 on the vanilla MMLU questions and 68.86 on the modified MMLU questions [5].

Similarly, research from the Department of Computer Science at the University of Arizona indicates that there is a significant amount of data contamination in language models [6]. Meaning that the information in the benchmarks is becoming part of the models training data, effectively making the benchmark scores irrelevant since the models are being tested on information they are trained on.

Additional research from Fudan University, Tongji University, and Alibaba highlights the need for self-evolving dynamic evaluations for AI agents to combat the issues of data contamination and benchmark memorization [7]. These dynamic benchmarks will help prevent models from memorizing or learning information during pre-training that they’d later be tested on. Although a recurring influx of new benchmarks may create challenges when comparing an older model to a newer model, ideally these benchmarks will mitigate issues of data contamination and make it easier to gauge how well a model understands topics from training.

When evaluating model capability for a particular problem, we need to grasp both how well the model understands information learned during pretraining and how well it can generalize to novel tasks or concepts beyond it’s training data.

As we look to use models as AI agents to perform actions on our behalf, whether that’s booking a vacation, writing a report, or researching new topics for us, we’ll need additional benchmarks or evaluation mechanisms that can assess the reliability and accuracy of these agents. Most businesses looking to harness the power of foundation models require giving the model access to a variety of tools integrated with their unique data sources and require the model to reason and plan when and how to use the tools available to them effectively. These types of tasks are not represented in many traditional LLM benchmarks.

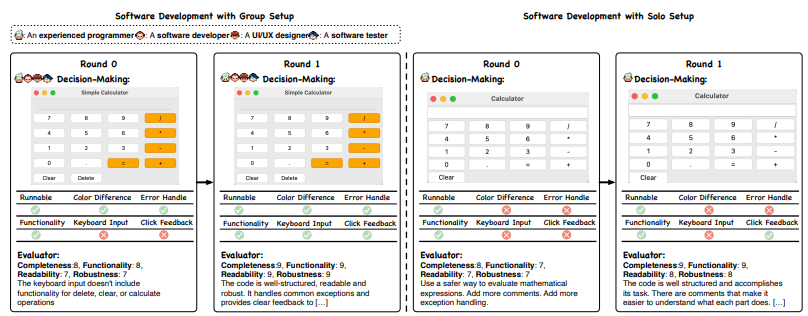

To address this gap, many research teams are creating their own benchmarks and frameworks that evaluate agent performance on tasks involving tool use and knowledge outside of the model’s training data. For example, the authors of AgentVerse evaluated how well teams of agents could perform real world tasks involving event planning, software development, and consulting. The researchers created their own set of 10 test tasks which were manually evaluated to determine if the agents performed the right set of actions, used the proper tools, and got to an accurate result. They found that teams of agents who operated in a cycle with defined stages for agent recruitment, task planning, independent task execution, and subsequent evaluation lead to superior outcomes compared to independent agents [8].

In my opinion the emerging agent architectures and benchmarks are a great step towards understanding how well language models will perform on business oriented problems, but one limitation is that most are still text focused. As we consider the world and the dynamic nature of most jobs, we will need agent systems and models that evaluate both performance on text based tasks as well as visual and auditory tasks together. The AlgoPuzzleVQA dataset is one example of evaluating models on their ability to both reason, read, and visually interpret mathematical and algorithmic puzzles [9].

While businesses may not be interested in how well a model can solve a puzzle, it is still a step in the right direction for understanding how well models can reason about multimodal information.

As we continue adopting foundation models in our daily routines and professional endeavors, we need additional evaluation options that mirror real world problems. Dynamic and multimodal benchmarks are one key component of this. However, as we introduce additional agent frameworks and architectures with many AI agents collaborating to solve a problem, evaluation and comparison across models and frameworks becomes even more challenging. The true measure of foundation models lies not in their ability to conquer standardized tests, but in their capacity to understand, adapt, and act within the complex and often unpredictable real world. By changing how we evaluate language models, we challenge these models to evolve from text-based intellects and benchmark savants to comprehensive thinkers capable of tackling multifaceted (and multimodal) challenges.

Interested in discussing further or collaborating? Reach out on LinkedIn!

Are Language Models Benchmark Savants or Real-World Problem Solvers? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Are Language Models Benchmark Savants or Real-World Problem Solvers?

Go Here to Read this Fast! Are Language Models Benchmark Savants or Real-World Problem Solvers?