Originally appeared here:

Open source observability for AWS Inferentia nodes within Amazon EKS clusters

Authors: Elahe Aghapour, Salar Rahili

With the rise of the transformer architecture and high-throughput compute, training foundational models has turned into a hot topic recently. This has led to promising efforts to either integrate or train foundational models to enhance the capabilities of reinforcement learning (RL) algorithms, signaling an exciting direction for the field. Here, we’re discussing how foundational models can give reinforcement learning a major boost.

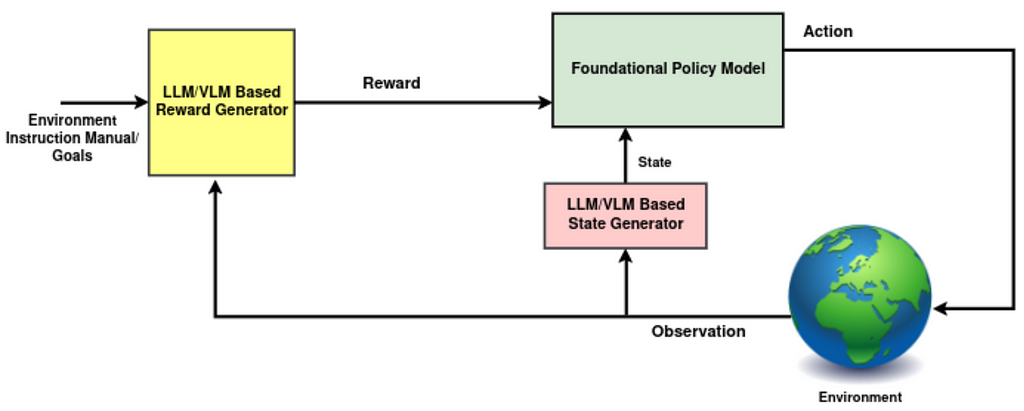

Before diving into the latest research on how foundational models can give reinforcement learning a major boost, let’s engage in a brainstorming session. Our goal is to pinpoint areas where pre-trained foundational models, particularly Large Language Models (LLMs) or Vision-Language Models (VLMs), could assist us, or how we might train a foundational model from scratch. A useful approach is to examine each element of the reinforcement learning training loop individually, to identify where there might be room for improvement:

1- Environment: Given that pre-trained foundational models understand the causal relationships between events, they can be utilized to forecast environmental changes resulting from current actions. Although this concept is intriguing, we’re not yet aware of any specific studies that focus on it. There are two primary reasons holding us back from exploring this idea further for now.

2- State (LLM/VLM Based State Generator): While experts often use the terms observation and state interchangeably, there are distinctions between them. A state is a comprehensive representation of the environment, while an observation may only provide partial information. In the standard RL framework, we don’t often discuss the specific transformations that extract and merge useful features from observations, past actions, and any internal knowledge of the environment to produce “state”, the policy input. Such a transformation could be significantly enhanced by employing LLMs/VLMs, which allow us to infuse the “state” with broader knowledge of the world, physics, and history (refer to Fig. 1, highlighted in pink).

3- Policy (Foundational Policy Model): Integrating foundational models into the policy, the central decision-making component in RL, can be highly beneficial. Although employing such models to generate high-level plans has proven successful, transforming the state into low-level actions has challenges we’ll delve into later. Fortunately, there has been some promising research in this area recently.

4- Reward (LLM/VLM Based Reward Generator): Leveraging foundational models to more accurately assess chosen actions within a trajectory has been a primary focus among researchers. This comes as no surprise, given that rewards have traditionally served as the communication channel between humans and agents, setting goals and guiding the agent towards what is desired.

1- Foundational models in reward

It is challenging to use foundational models to generate low-level control actions, because such actions depend heavily on the agent’s setting and are underrepresented in the models’ training data. Hence, foundation models are generally applied to high-level plans rather than low-level actions. Reward is where foundation models can bridge the gap between the high-level planner and the low-level actions. Researchers have adopted various methodologies for integrating foundation models into reward assignment. However, the core principle revolves around employing a VLM/LLM to effectively track the progress towards a subgoal or task.

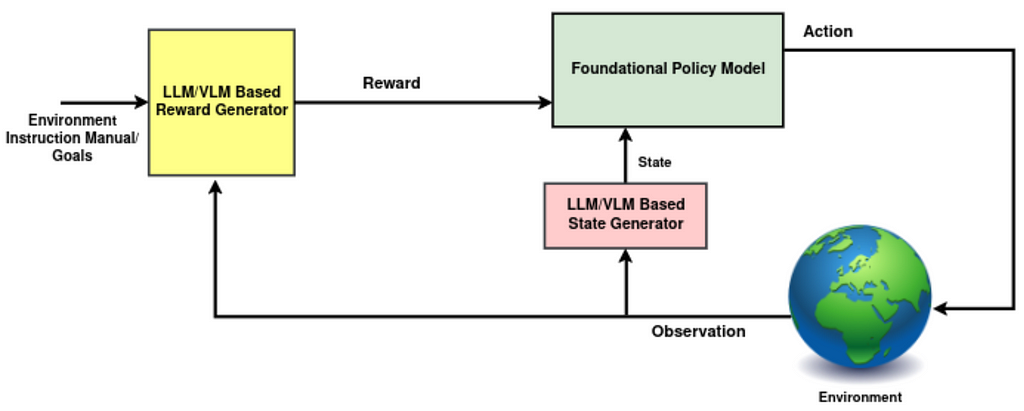

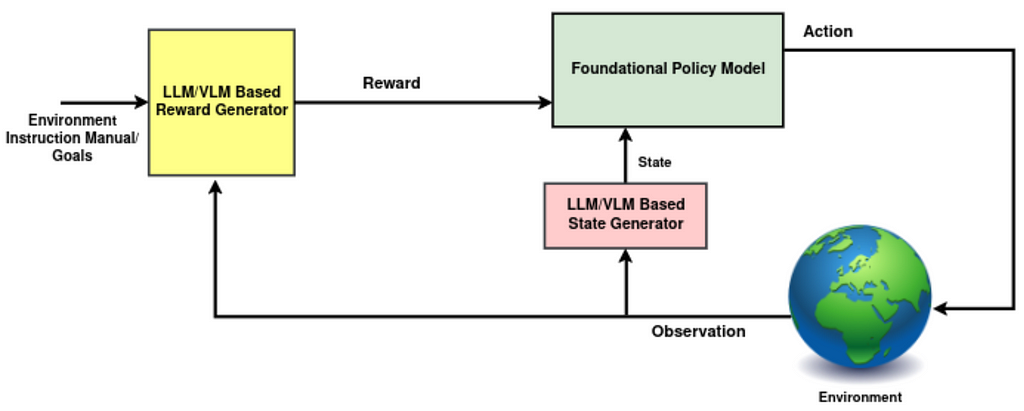

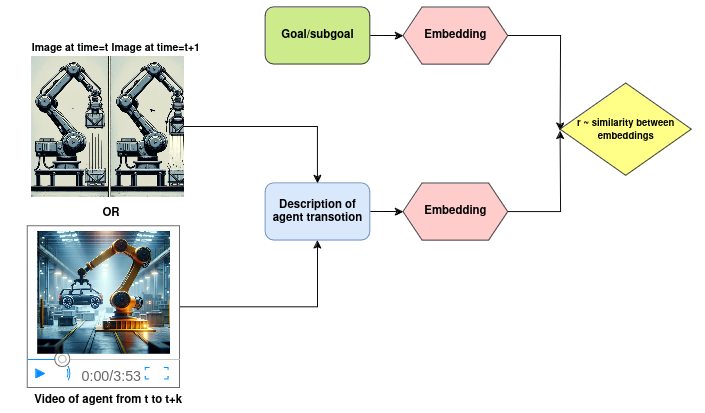

1.a Assigning reward values based on similarity

Consider the reward value as a signal that indicates whether the agent’s previous action was beneficial in moving towards the goal. A sensible method involves evaluating how closely the previous action aligns with the current objective. To put this approach into practice, as can be seen in Fig. 2, it’s essential to:

– Generate meaningful embeddings of these actions, which can be done through images, videos, or text descriptions of the most recent observation.

– Generate meaningful representations of the current objective.

– Assess the similarity between these representations.
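The three steps above can be sketched in a few lines. This is only a minimal illustration, assuming the embeddings are already available as plain lists of floats; the function names, the toy vectors, and the optional `threshold` parameter are hypothetical and not taken from any of the papers discussed.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def similarity_reward(transition_embedding, goal_embedding, threshold=0.0):
    """Return the similarity as reward, or 0.0 if it does not exceed the threshold.

    The threshold is an assumption of this sketch, used to suppress
    rewards for transitions that are unrelated to the goal.
    """
    sim = cosine_similarity(transition_embedding, goal_embedding)
    return sim if sim > threshold else 0.0

# Toy embeddings standing in for the output of a text or image encoder.
goal = [1.0, 0.0, 1.0]
aligned = [0.9, 0.1, 1.1]    # transition that roughly matches the goal
unrelated = [0.0, 1.0, 0.0]  # transition orthogonal to the goal

print(similarity_reward(aligned, goal))
print(similarity_reward(unrelated, goal))
```

In practice the embeddings would come from the same encoder for both the transition and the goal, so that the two live in a comparable space.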

Let’s explore the specific mechanics behind the leading research in this area.

Dense and well-shaped reward functions enhance the stability and training speed of the RL agent. When the task reward is sparse, intrinsic rewards help by rewarding the agent for exploring novel states. However, in large environments where most of the unseen states are irrelevant to the downstream task, this approach becomes less effective. ELLM uses the background knowledge of an LLM to shape exploration. It queries the LLM to generate a list of possible goals/subgoals, given a list of the agent’s available actions and a text description of the agent’s current observation generated by a state captioner. Then, at each time step, the reward is computed as the semantic similarity (cosine similarity) between the LLM-generated goal and the description of the agent’s transition.

LiFT has a similar framework but also leverages CLIP4Clip-style VLMs for reward assignment. CLIP4Clip is pre-trained to align videos and corresponding language descriptions through contrastive learning. In LiFT, the agent is rewarded based on the alignment score (cosine similarity) between the task instructions and videos of the agent’s corresponding behavior, both encoded by CLIP4Clip.

UAFM has a similar framework where the main focus is on robotic manipulation tasks, e.g., stacking a set of objects. For reward assignment, they measure the similarity between the agent state image and the task description, both embedded by CLIP. They finetune CLIP on a small amount of data from the simulated stacking domain to be more aligned in this use case.

1.b Assigning rewards through reasoning on auxiliary tasks:

In scenarios where the foundational model has the proper understanding of the environment, it becomes feasible to directly pass the observations within a trajectory to the model, LLM/VLM. This evaluation can be done either through straightforward QA sessions based on the observations or by verifying the model’s capability in predicting the goal only by looking at the observation trajectory.

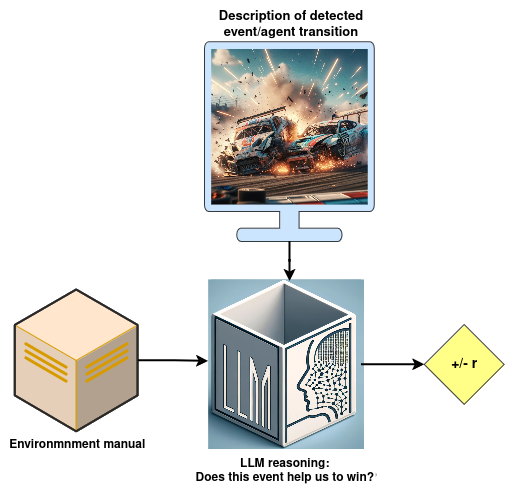

Read and Reward integrates the environment’s instruction manual into reward generation through two key components, as can be seen in Fig. 3:

EAGER introduces a unique method for creating intrinsic rewards through a specially designed auxiliary task. This approach presents a novel concept where the auxiliary task involves predicting the goal based on the current observation. If the model predicts accurately, this indicates a strong alignment with the intended goal, and thus, a larger intrinsic reward is given based on the prediction confidence level. To accomplish this, two modules are employed:

(P.S. Although this work does not utilize a foundational model, we’ve included it here due to its intriguing approach, which can be easily adapted to any pre-trained LLM)

1.c Generating reward function code

Up to this point, we’ve discussed generating reward values directly for the reinforcement learning algorithms. However, running a large model at every step of the RL loop can significantly slow down the speed of both training and inference. To bypass this bottleneck, one strategy involves utilizing our foundational model to generate the code for the reward function. This allows for the direct generation of reward values at each step, streamlining the process.

For the code generation schema to work effectively, two key components are required:

1- A code generator, LLM, which receives a detailed prompt containing all the necessary information to craft the code.

2- A refinement process that evaluates and enhances the code in collaboration with the code generator.
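The interplay between the two components can be sketched as a simple loop. This is a toy illustration under loud assumptions: `toy_generate` stands in for an LLM call, `toy_evaluate` stands in for whatever evaluation (RL training runs, human or automated critique) a real system would use, and all names are hypothetical. It does not reproduce any specific paper’s pipeline.

```python
def refine_reward_code(generate, evaluate, prompt, max_rounds=3):
    """Alternate between generating reward code and evaluating it.

    generate(prompt, feedback) -> source code string
    evaluate(code) -> (score, feedback string)
    Returns the best-scoring code seen and its score.
    """
    feedback = ""
    best_code, best_score = None, float("-inf")
    for _ in range(max_rounds):
        code = generate(prompt, feedback)
        score, feedback = evaluate(code)
        if score > best_score:
            best_code, best_score = code, score
    return best_code, best_score

def toy_generate(prompt, feedback):
    # Hypothetical stand-in for an LLM: it only "improves" once told about distance.
    if "distance" in feedback:
        return "def reward(state):\n    return -abs(state['goal'] - state['pos'])\n"
    return "def reward(state):\n    return 0.0\n"

def toy_evaluate(code):
    # Hypothetical evaluator: a useful reward should prefer states closer to the goal.
    namespace = {}
    exec(code, namespace)  # a real system should sandbox generated code
    reward = namespace["reward"]
    near = reward({"pos": 9, "goal": 10})
    far = reward({"pos": 0, "goal": 10})
    if near > far:
        return 1.0, "looks good"
    return 0.0, "reward ignores distance to goal"

code, score = refine_reward_code(toy_generate, toy_evaluate, "reach the goal position")
print(score)
print(code)
```

The point of the pattern is that the large model runs only a handful of times, during refinement, while the resulting reward function is cheap to call at every environment step.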

Let’s look at the key contributions for generating reward code:

R2R2S generates reward function code through two main components:

Text2Reward develops a method to generate dense reward functions as an executable code within iterative refinement. Given the subgoal of the task, it has two key components:

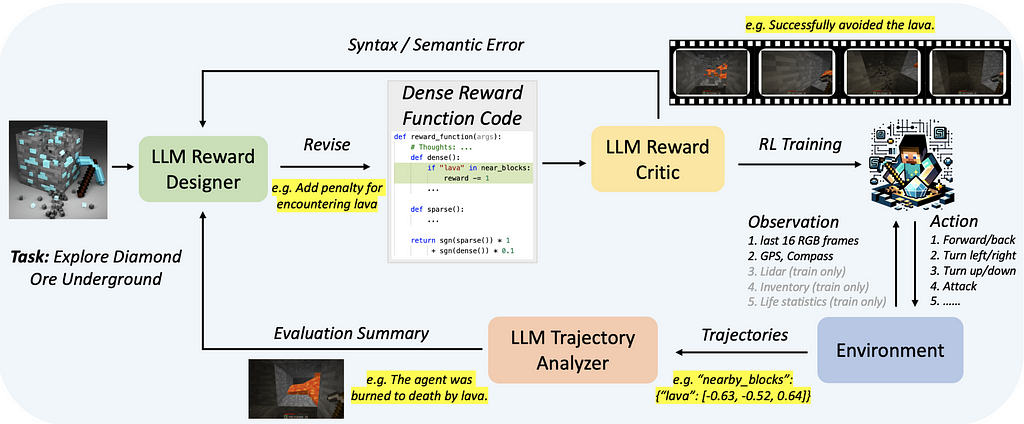

Auto MC-Reward has a similar algorithm to Text2Reward, to generate the reward function code, see Fig. 4. The main difference is in the refinement stage where it has two modules, both LLMs:

EUREKA generates reward code without the need for task-specific prompting, predefined reward templates, or predefined few-shot examples. To achieve this goal, it has two stages:

Within these two steps, EUREKA is able to generate reward functions that outperform expert human-engineered rewards without any task specific templates.

1.d. Train a reward model based on preferences (RLAIF)

An alternative method is to use a foundational model to generate data for training a reward function model. The significant successes of Reinforcement Learning from Human Feedback (RLHF) have recently drawn increased attention towards employing trained reward functions on a larger scale. The heart of such algorithms is the use of a preference dataset to train a reward model, which can subsequently be integrated into reinforcement learning algorithms. Given the high cost of generating preference data (e.g., action A is preferable to action B) through human feedback, there’s growing interest in constructing this dataset by obtaining feedback from an AI agent, i.e., a VLM/LLM. Training a reward function using AI-generated data and integrating it within a reinforcement learning algorithm is known as Reinforcement Learning from AI Feedback (RLAIF).

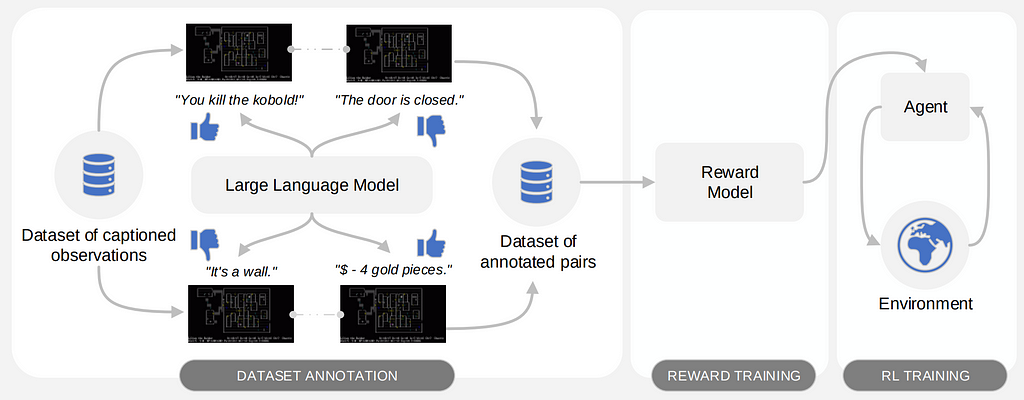

MOTIF requires access to a passive dataset of observations with sufficient coverage. Initially, an LLM is queried with a summary of desired behaviors within the environment and a text description of two randomly sampled observations. It then generates a preference, selecting 1, 2, or 0 (indicating no preference), as seen in Fig. 5. This process constructs a dataset of preferences between observation pairs. Subsequently, this dataset is used to train a reward model with preference-based RL techniques.
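To make the last step more concrete, here is a minimal sketch of a preference loss for a single labeled pair. It assumes a Bradley-Terry model, which is a common choice in preference-based RL; the text above does not state which exact loss MOTIF uses, so treat the formula and the function name as assumptions. `r1` and `r2` are the scalar rewards a reward model assigns to the two observations.

```python
import math

def preference_loss(r1, r2, label):
    """Cross-entropy loss for one preference pair under a Bradley-Terry model.

    label follows the convention described above:
    1 -> observation 1 preferred, 2 -> observation 2 preferred,
    0 -> no preference (treated here as a 50/50 target, an assumption).
    """
    p1 = 1.0 / (1.0 + math.exp(r2 - r1))  # modeled P(observation 1 is preferred)
    target = {1: 1.0, 2: 0.0, 0: 0.5}[label]
    return -(target * math.log(p1) + (1.0 - target) * math.log(1.0 - p1))

# The loss is small when the reward model agrees with the label...
print(preference_loss(2.0, 0.0, 1))
# ...and large when it disagrees.
print(preference_loss(0.0, 2.0, 1))
```

Minimizing this loss over the whole preference dataset pushes the reward model to score preferred observations higher, and the trained model can then supply rewards inside the RL loop.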

2- Foundation models as Policy

Achieving the capability to train a foundational policy that not only excels in tasks previously encountered but also possesses the ability to reason about and adapt to new tasks using past learning, is an ambition within the RL community. Such a policy would ideally generalize from past experiences to tackle novel situations and, through environmental feedback, achieve goals previously unseen with human-like adaptability.

However, several challenges stand in the way of training such agents. Among these challenges are:

Up to now, it’s mostly been teams with substantial resources and top-notch setups who’ve really pushed the envelope in this domain.

AdA paved the way for training an RL foundation model within the X.Land 2.0 3D environment. This model achieves human time-scale adaptation on held-out test tasks without any further training. The model’s success is founded on three ingredients:

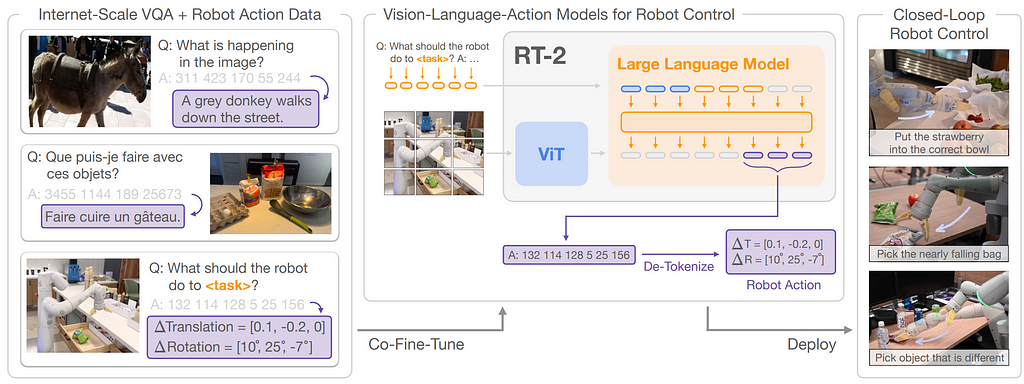

RT-2 introduces a method to co-finetune a VLM on both robotic trajectory data and vision-language tasks, resulting in a policy model called RT-2. To enable vision-language models to generate low-level actions, actions are discretized into 256 bins and represented as language tokens.

By representing actions as language tokens, RT-2 can directly utilize pre-existing VLM architectures without requiring substantial modifications. Hence, VLM input includes robot camera image and textual task description formatted similarly to Vision Question Answering tasks and the output is a series of language tokens that represent the robot’s low-level actions; see Fig. 6.
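The discretization idea can be illustrated with a short sketch. The 256 bins come from the description above; everything else (uniform bins, the `[low, high]` action range, and writing bin indices as space-separated integers) is a simplifying assumption and not necessarily how RT-2 maps bins to its tokenizer vocabulary.

```python
NUM_BINS = 256

def discretize(value, low, high, num_bins=NUM_BINS):
    """Map a continuous value in [low, high] to an integer bin in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)

def undiscretize(bin_index, low, high, num_bins=NUM_BINS):
    """Map a bin index back to the center of its bin."""
    return low + (bin_index + 0.5) * (high - low) / num_bins

def action_to_tokens(action, low, high):
    """Encode each action dimension as one integer 'token' in a string."""
    return " ".join(str(discretize(a, low, high)) for a in action)

def tokens_to_action(tokens, low, high):
    """Decode a token string back into approximate continuous values."""
    return [undiscretize(int(t), low, high) for t in tokens.split()]

tokens = action_to_tokens([-1.0, 0.0, 1.0], low=-1.0, high=1.0)
print(tokens)
print(tokens_to_action(tokens, low=-1.0, high=1.0))
```

The round trip loses at most half a bin width per dimension, which is the price paid for letting a language model emit actions as ordinary tokens.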

They noticed that co-finetuning on both types of data with the original web data leads to more generalizable policies. The co-finetuning process equips RT-2 with the ability to understand and execute commands that were not explicitly present in its training data, showcasing remarkable adaptability. This approach enabled them to leverage internet-scale pretraining of VLM to generalize to novel tasks through semantic reasoning.

3- Foundation Models as State Representation

In RL, a policy’s understanding of the environment at any given moment comes from its “state” which is essentially how it perceives its surroundings. Looking at the RL block diagram, a reasonable module to inject world knowledge into is the state. If we can enrich observations with general knowledge useful for completing tasks, the policy can pick up new tasks much faster compared to RL agents that begin learning from scratch.

PR2L introduces a novel approach to inject the background knowledge of VLMs from internet-scale data into RL. PR2L employs generative VLMs, which generate language in response to an image and a text input. As VLMs are proficient in understanding and responding to visual and textual inputs, they can provide a rich source of semantic features from observations to be linked to actions.

PR2L queries a VLM with a task-relevant prompt for each visual observation received by the agent, and receives both the generated textual response and the model’s intermediate representations. They discard the text and use some or all of the model’s intermediate representations, generated for the visual input, the text input, and the VLM’s generated textual response, as “promptable representations”. Due to the variable size of these representations, PR2L incorporates an encoder-decoder Transformer layer to compress all the information in the promptable representations into a fixed-size embedding. This embedding, combined with any available non-visual observation data, is then provided to the policy network, representing the state of the agent. This integration allows the RL agent to leverage the rich semantic understanding and background knowledge of VLMs, facilitating more rapid and informed learning of tasks.

Also Read Our Previous Post: Towards AGI: LLMs and Foundational Models’ Roles in the Lifelong Learning Revolution

References:

[1] ELLM: Du, Yuqing, et al. “Guiding pretraining in reinforcement learning with large language models.” 2023.

[2] Text2Reward: Xie, Tianbao, et al. “Text2reward: Automated dense reward function generation for reinforcement learning.” 2023.

[3] R2R2S: Yu, Wenhao, et al. “Language to rewards for robotic skill synthesis.” 2023.

[4] EUREKA: Ma, Yecheng Jason, et al. “Eureka: Human-level reward design via coding large language models.” 2023.

[5] MOTIF: Klissarov, Martin, et al. “Motif: Intrinsic motivation from artificial intelligence feedback.” 2023.

[6] Read and Reward: Wu, Yue, et al. “Read and reap the rewards: Learning to play atari with the help of instruction manuals.” 2024.

[7] Auto MC-Reward: Li, Hao, et al. “Auto MC-reward: Automated dense reward design with large language models for minecraft.” 2023.

[8] EAGER: Carta, Thomas, et al. “Eager: Asking and answering questions for automatic reward shaping in language-guided RL.” 2022.

[9] LiFT: Nam, Taewook, et al. “LiFT: Unsupervised Reinforcement Learning with Foundation Models as Teachers.” 2023.

[10] UAFM: Di Palo, Norman, et al. “Towards a unified agent with foundation models.” 2023.

[11] RT-2: Brohan, Anthony, et al. “Rt-2: Vision-language-action models transfer web knowledge to robotic control.” 2023.

[12] AdA: Team, Adaptive Agent, et al. “Human-timescale adaptation in an open-ended task space.” 2023.

[13] PR2L: Chen, William, et al. “Vision-Language Models Provide Promptable Representations for Reinforcement Learning.” 2024.

[14] Clip4Clip: Luo, Huaishao, et al. “Clip4clip: An empirical study of clip for end to end video clip retrieval and captioning.” 2022.

[15] Clip: Radford, Alec, et al. “Learning transferable visual models from natural language supervision.” 2021.

[16] RoBERTa: Liu, Yinhan, et al. “Roberta: A robustly optimized bert pretraining approach.” 2019.

[17] Preference based RL: Wirth, Christian, et al. “A survey of preference-based reinforcement learning methods.” 2017.

[18] Muesli: Hessel, Matteo, et al. “Muesli: Combining improvements in policy optimization.” 2021.

[19] Melo, Luckeciano C. “Transformers are meta-reinforcement learners.” 2022.

[20] RLHF: Ouyang, Long, et al. “Training language models to follow instructions with human feedback.” 2022.

Pushing RL Boundaries: Integrating Foundational Models, e.g. was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


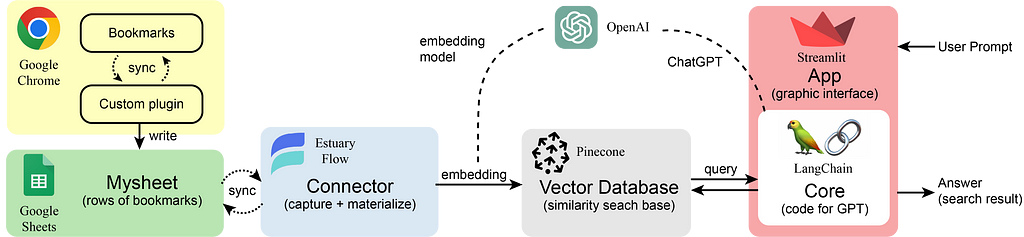

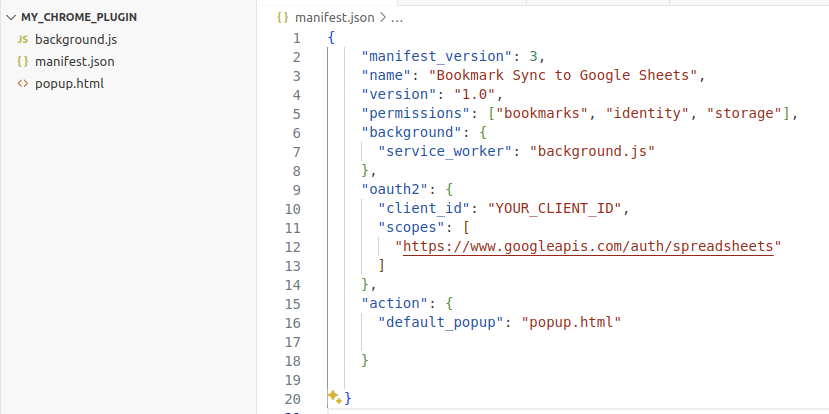

· Introduction

· Vector Store Creation

· Method: Naive Retriever

· Method: Parent Document Retriever

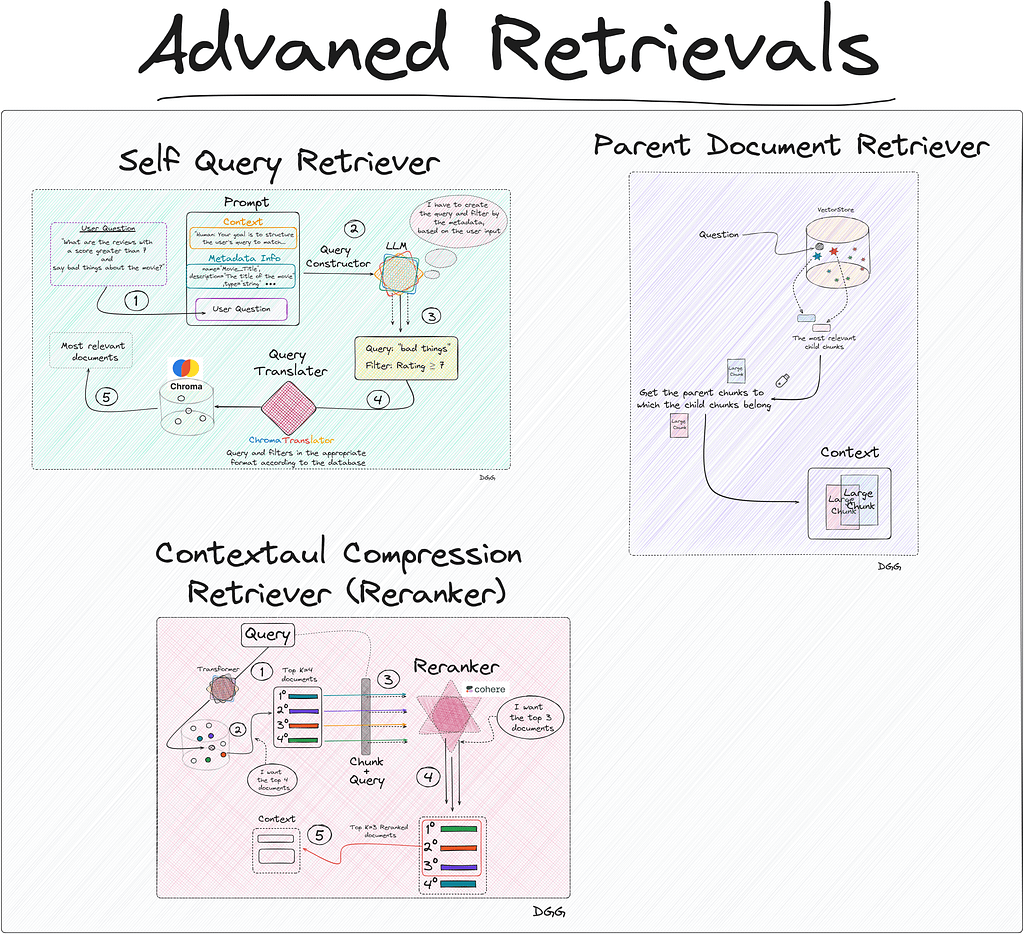

· Method: Self Query Retriever

∘ Query Constructor

∘ Query Translator

· Method: Contextual Compression Retriever (Reranking)

· Conclusion

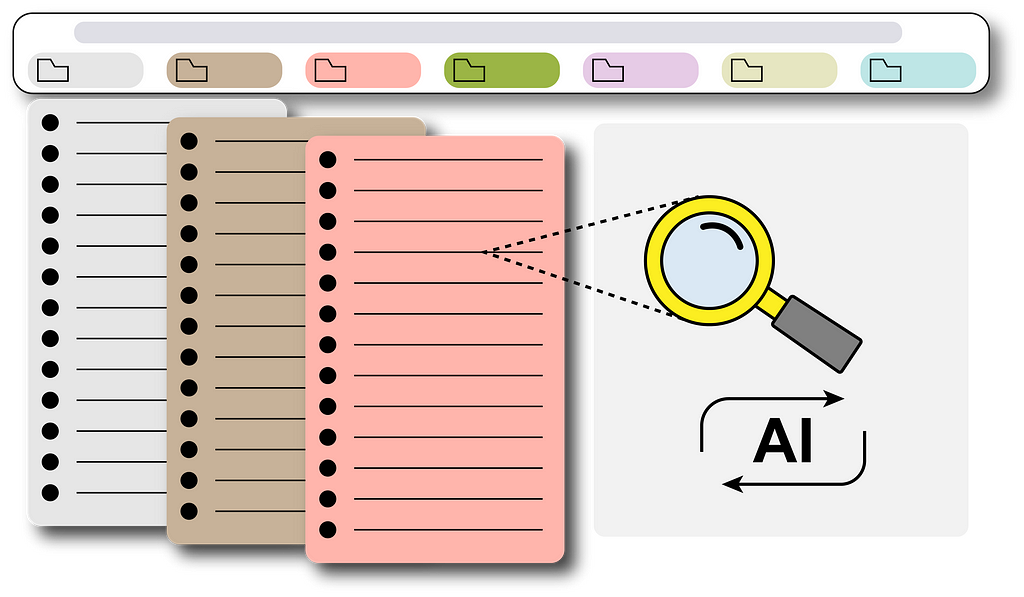

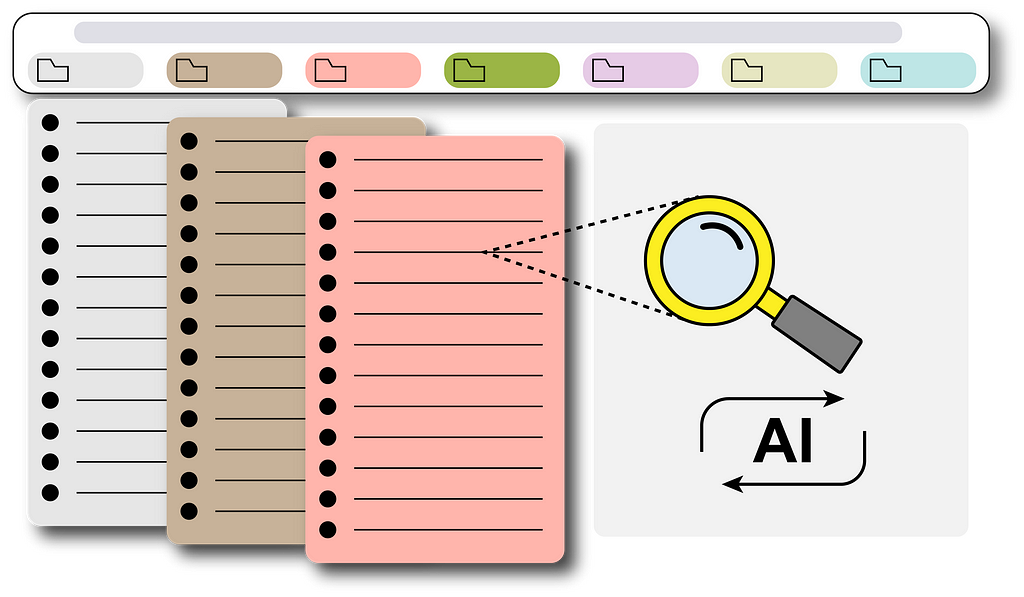

Let’s briefly recall what the three words that make up the acronym RAG mean:

As we can see, the quality of the response to a user’s query (if we apply RAG for Q&A) depends directly on how well we have built the “Augmented” part and, especially, the “Retrieval” part.

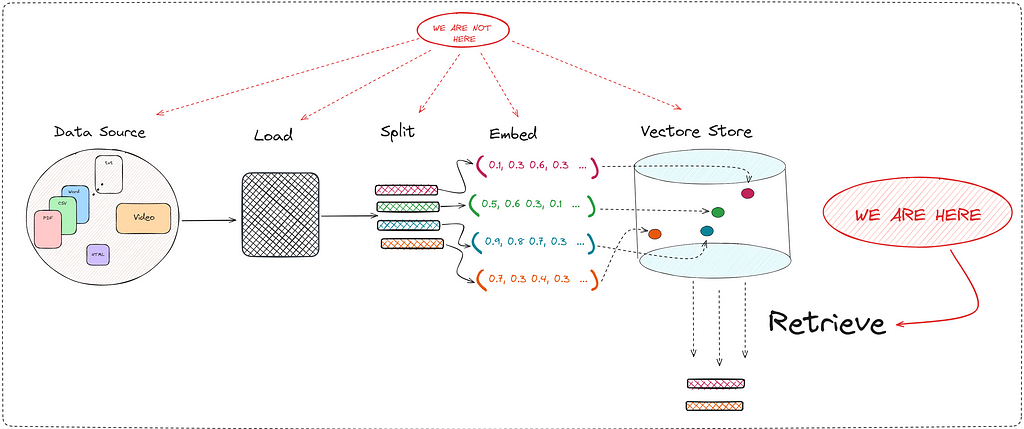

In this article we are going to focus exclusively on the “Retrieval” part. In this important process of returning the most relevant documents, the concept of vector store appears.

To create these retrievals, we will use the Langchain library.

The vector store is nothing more than a vector database, which stores documents in vector format. This vector representation is produced by transformer-based embedding models. None of this will be new to you.

It is clear that the more robust and complete this vector store is, the better retriever we can run. We already know that the creation of this database is an art in itself. Depending on the size of the chunks or the embedding model we use, our RAG will be better or worse.

I make a clarification here:

In this post we are NOT going to discuss how to create this vector store.

In this post we are going to discuss some of the techniques used to retrieve relevant documents.

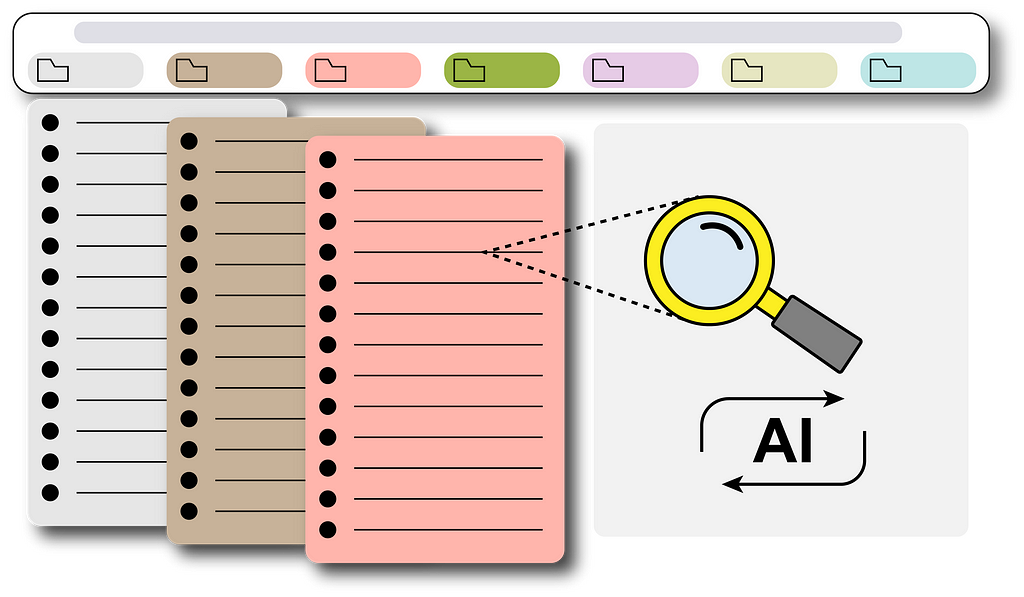

Since a picture is worth a thousand words, I suggest you take a look at the following:

Therefore, I reiterate that in this post we are going to deeply study one of the many important steps in creating a good RAG tool. The “Retrieve” step is key since it directly improves the context that the LLM has when generating a response.

The methods we will study are:

You can find the project with the notebooks here. And you can also take a look at my github:

damiangilgonzalez1995 – Overview

To present these methods, we will work through a practical use case that makes the explanation easier to follow. We are going to create a RAG about reviews of the John Wick movies.

So that the reader can follow each step of this post, they can access the repository that I have created. In it you will find the code for each of the methods, in addition to the documents used to create the vector store. The jupyter notebook in charge of this task can be found in the git repository, and is the file called “0_create_vectore_db.ipynb”.

The data source of our RAG consists of 4 CSV files, each containing the reviews for one of the films in the John Wick saga. The files contain the following information:

As you can see, the “Review” field will be the target of our retriever. The other fields are important to store as metadata:

To read and convert each row of our files into the “Document” format, we execute the following code:

    from langchain_community.document_loaders.csv_loader import CSVLoader
    from datetime import datetime, timedelta

    documents = []

    # range(1, 4) loads john_wick_1.csv to john_wick_3.csv; use range(1, 5) to include a fourth file.
    for i in range(1, 4):
        loader = CSVLoader(
            encoding="utf8",
            file_path=f"data/john_wick_{i}.csv",
            metadata_columns=["Review_Date", "Review_Title", "Review_Url", "Author", "Rating"]
        )
        movie_docs = loader.load()
        for doc in movie_docs:
            # Add metadata about the number of the movie
            doc.metadata["Movie_Title"] = f"John Wick {i}"
            # Convert "Rating" to an int; if no rating is provided, default to 5
            doc.metadata["Rating"] = int(doc.metadata["Rating"]) if doc.metadata["Rating"] else 5
        documents.extend(movie_docs)

We already have our documents in “Document” format:

    print(documents[0])

    Document(page_content=": 0\nReview: The best way I can describe John Wick is to picture Taken but instead of Liam Neeson it's Keanu Reeves and instead of his daughter it's his dog. That's essentially the plot of the movie. John Wick (Reeves) is out to seek revenge on the people who took something he loved from him. It's a beautifully simple premise for an action movie - when action movies get convoluted, they get bad i.e. A Good Day to Die Hard. John Wick gives the viewers what they want: Awesome action, stylish stunts, kinetic chaos, and a relatable hero to tie it all together. John Wick succeeds in its simplicity.", metadata={'source': 'data/john_wick_1.csv', 'row': 0, 'Review_Date': '6 May 2015', 'Review_Title': ' Kinetic, concise, and stylish; John Wick kicks ass.\n', 'Review_Url': '/review/rw3233896/?ref_=tt_urv', 'Author': 'lnvicta', 'Rating': 8, 'Movie_Title': 'John Wick 1', 'last_accessed_at': datetime.datetime(2024, 4, 8, 11, 49, 47, 92560)})

Now we only have to create a vector database (vector store) locally. For this, I have used Chroma. Also keep in mind that it is necessary to use an embedding model, which will transform our documents into vector format for storage. All of this can be seen in the following piece of code:

    from langchain_community.vectorstores import Chroma
    from langchain_openai import OpenAIEmbeddings
    import os
    from dotenv import load_dotenv

    load_dotenv()
    os.environ["OPENAI_API_KEY"] = os.getenv('OPENAI_KEY')

    embeddings = OpenAIEmbeddings(model="text-embedding-3-small")
    db = Chroma.from_documents(documents=documents, embedding=embeddings, collection_name="doc_jonhWick", persist_directory="./jonhWick_db")

This will create a local database in the “jonhWick_db” directory. This will be the database that our RAG will use and from which our retriever will obtain the most relevant documents for the user’s queries.

Now is the time to present the different methods for creating a retriever.

Code in 1_naive_retriever.ipynb file.

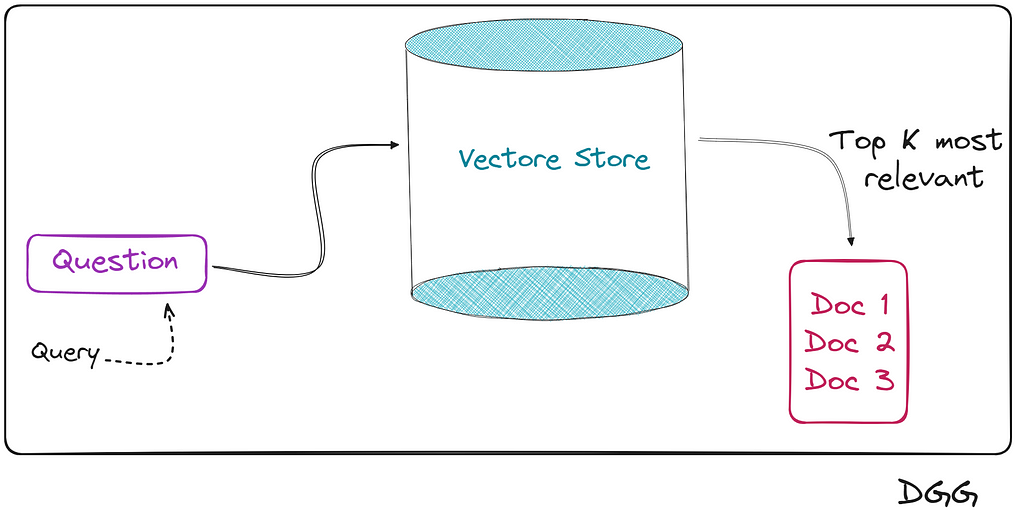

This method is the simplest, as its name indicates. We call it naive for the simple reason that, when we send the query to our database, we hope (naively) that it will return the most relevant documents/chunks.

Basically, we encode the user query with the same embedding model with which we created the vector store. Once we have its vector representation, we compute its similarity to the stored chunks using cosine similarity, a distance metric, etc.

And we collect the top K documents closest/similar to the query.
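As a rough illustration of what happens under the hood, here is a minimal sketch of top-K retrieval by cosine similarity. The two-dimensional vectors and the document ids are made up for the example; a real vector store uses high-dimensional embeddings and an optimized index rather than a full scan.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def top_k(query_vec, doc_vecs, k):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Toy "vector store": hypothetical document ids mapped to made-up embeddings.
doc_vecs = {
    "review_a": [1.0, 0.0],
    "review_b": [0.7, 0.7],
    "review_c": [0.0, 1.0],
}
query_vec = [1.0, 0.1]

print(top_k(query_vec, doc_vecs, k=2))
```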

The flow of this type of retriever can be seen in the following image:

Keeping the scheme in mind, let’s see how all this looks in the code. We read the database:

    from langchain_community.vectorstores import Chroma
    from langchain_openai import OpenAIEmbeddings
    import os
    from dotenv import load_dotenv

    load_dotenv()
    os.environ["OPENAI_API_KEY"] = os.getenv('OPENAI_KEY')

    embeddings = OpenAIEmbeddings(model="text-embedding-3-small")
    vectordb = Chroma(persist_directory="./jonhWick_db",
                      embedding_function=embeddings,
                      collection_name="doc_jonhWick")

And we create our retriever. We can configure the similarity calculation method, in addition to other parameters.

Retriever

    # Specifying top k
    naive_retriever = vectordb.as_retriever(search_kwargs={"k": 10})

    # Similarity score threshold retrieval
    # naive_retriever = vectordb.as_retriever(search_kwargs={"score_threshold": 0.8}, search_type="similarity_score_threshold")

    # Maximum marginal relevance retrieval
    # naive_retriever = vectordb.as_retriever(search_type="mmr")

We have now created our “Naive Retriever”, but to see how it works, we will build the complete RAG which, as we recalled earlier, is composed of the following components:

Augmented & Generation

    from langchain_core.prompts import ChatPromptTemplate
    from langchain_openai import ChatOpenAI

    # Augmented
    TEMPLATE = """
    You are happy assistant. Use the context provided below to answer the question.
    If you do not know the answer, or are unsure, say you don't know.

    Query:
    {question}

    Context:
    {context}
    """

    rag_prompt = ChatPromptTemplate.from_template(TEMPLATE)

    # Generation
    chat_model = ChatOpenAI()

We already have the 3 components of our RAG. All that remains is to assemble them, and for this we will use the langchain chains to create a RAG.

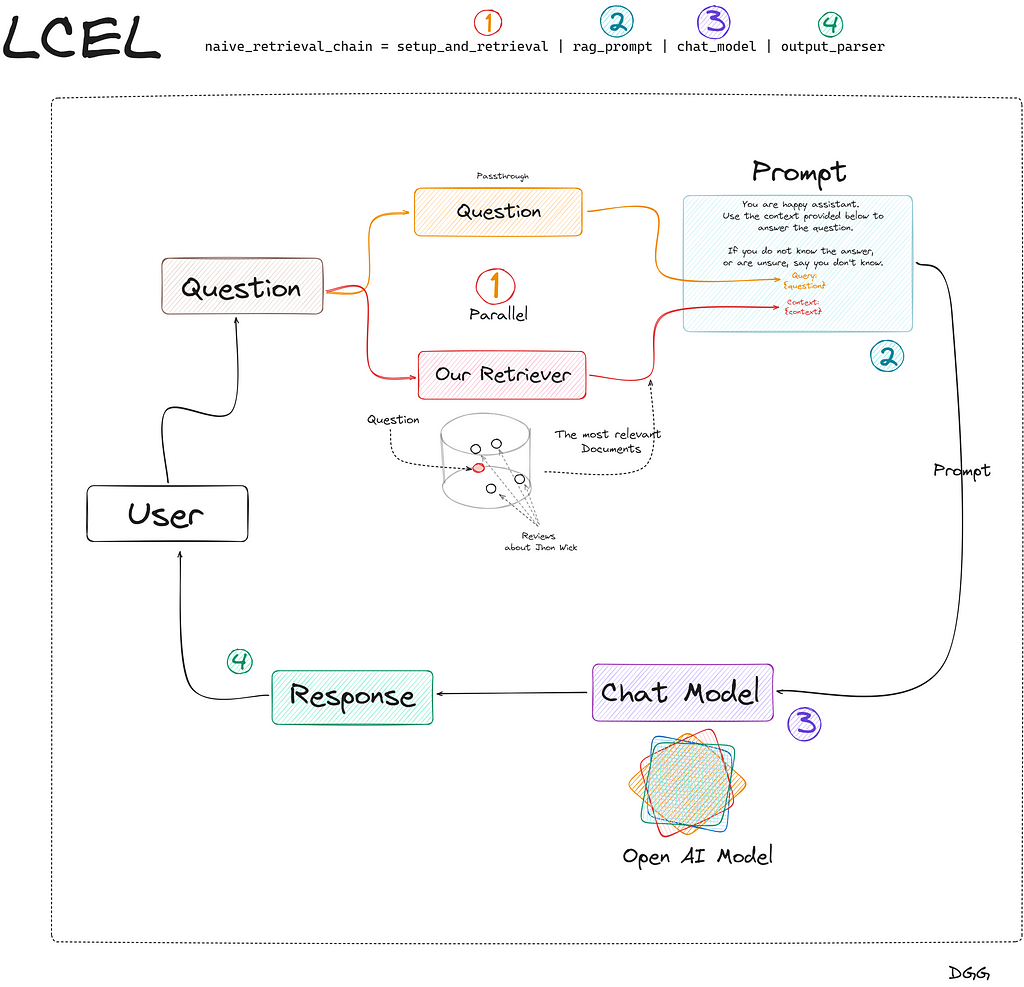

You may already know the language LangChain provides for building chains more efficiently. It is known as LCEL (LangChain Expression Language). If you are new to this way of creating chains in LangChain, I leave you a very good tutorial here:

Finally, we create our RAG using Langchain’s own chain creation language (LCEL):

    from langchain_core.runnables import RunnablePassthrough, RunnableParallel
    from operator import itemgetter
    from langchain_core.output_parsers import StrOutputParser

    setup_and_retrieval = RunnableParallel({"question": RunnablePassthrough(), "context": naive_retriever})
    output_parser = StrOutputParser()

    # Parentheses let the piped chain span several lines
    naive_retrieval_chain = (
        setup_and_retrieval
        | rag_prompt
        | chat_model
        | output_parser
    )

    naive_retrieval_chain.invoke("Did people generally like John Wick?")
    # response: 'Yes, people generally liked John Wick.'

This is the simplest way to create a chain for a RAG. In the Jupyter notebook you can find a more robust version of the same chain. Since I don’t want us to get lost on this topic now, I have only shown the simplest form. To help explain what is happening in the code above, I have also created this diagram:

Great, we’re done creating our Naive RAG. Let’s move on to the next method.

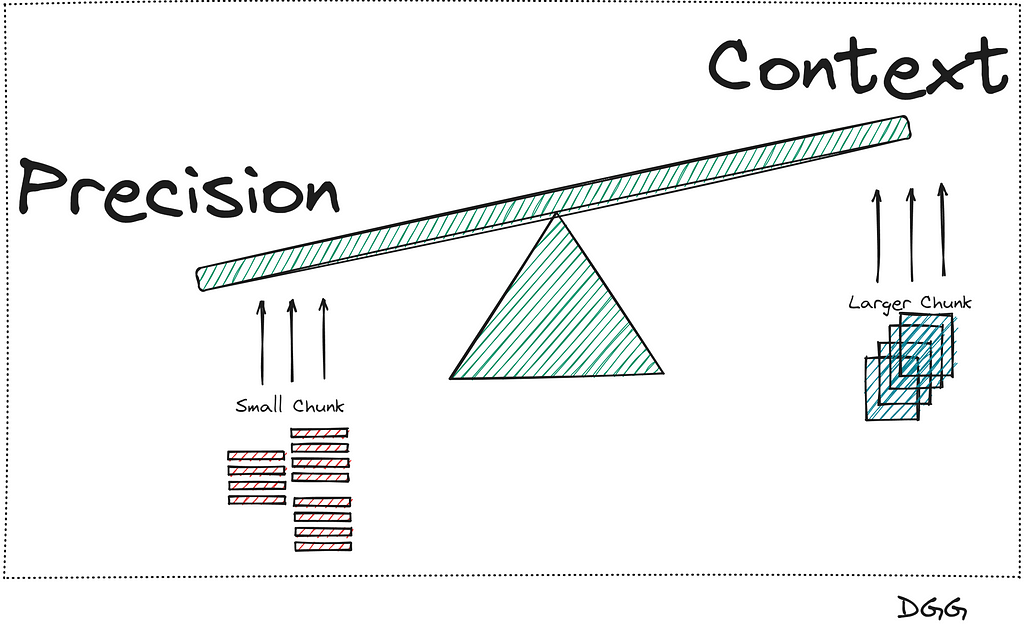

Code in 2_parent_document_retriever.ipynb file.

Imagine that we have created a RAG that identifies possible diseases from symptoms entered in the query. With a Naive RAG, we may retrieve a series of possible diseases that match on only one or two symptoms, which leaves our tool in a bit of a bad place.

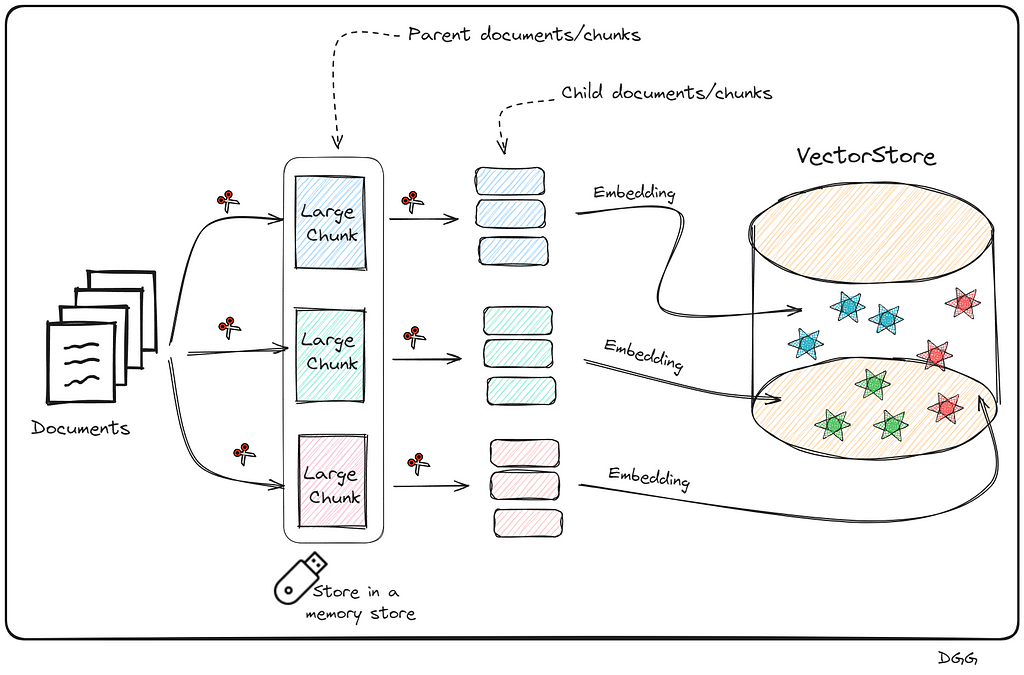

This is an ideal case for the Parent Document Retriever. The technique consists of cutting large chunks (parent chunks) into even smaller pieces (child chunks). Because the chunks are small, the information they contain is more concentrated, so its informative value is not diluted across paragraphs of text.

There is a small problem in all this:

What has been said can be seen in the following image:

It seems that there is no way out of the problem, since when we increase the precision, the context is reduced, and vice versa. This is where the Parent Document Retriever comes to the rescue.

The main idea is to further chop the large chunks (parent chunks/documents) into smaller chunks (child chunks/documents). Once this is done, we search for the top K most relevant documents among the child chunks, and return the parent chunks to which those top K child chunks belong.
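The idea can be sketched without any library. In this toy version, word overlap stands in for embedding similarity, chunks are measured in words, and the parent ids and texts are invented for illustration; it is not how LangChain implements the retriever internally.

```python
def split_into_children(text, chunk_size):
    """Split a parent text into child chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]

def overlap_score(query, chunk):
    """Toy relevance score: number of distinct words shared with the query."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))

def retrieve_parents(query, parents, chunk_size=5, k=2):
    """Search over child chunks, then return the parents of the top k children."""
    children = []  # (child text, id of the parent it came from)
    for parent_id, text in parents.items():
        for child in split_into_children(text, chunk_size):
            children.append((child, parent_id))
    ranked = sorted(children, key=lambda item: overlap_score(query, item[0]), reverse=True)
    parent_ids = []
    for _, parent_id in ranked[:k]:
        if parent_id not in parent_ids:  # several children may share one parent
            parent_ids.append(parent_id)
    return [parents[pid] for pid in parent_ids]

# Hypothetical parent documents.
parents = {
    "p1": "the plot is simple and direct keanu reeves delivers stylish action scenes",
    "p2": "the soundtrack is loud and the pacing drags in the second half",
}

print(retrieve_parents("stylish keanu action", parents))
```

The search runs on small, focused chunks, but the caller gets back the full parent text, which is what gives the LLM enough context.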

We already have the main idea, now let’s get it down to earth. The best way to explain it is step by step:

What has been said can be seen in the following image:

This may seem very complex to build, since we have to create a new database with the small chunks, keep the parent chunks in memory, and also track which parent chunk each child chunk belongs to. Thankfully, Langchain exists and makes it super simple to build.

As you have probably concluded, this method requires creating a new vector store. Furthermore, because our data source is a set of CSV files with reviews of the John Wick movies, it is not necessary to perform the first split (parent chunks). This is because we can consider each row of our CSV files to be a chunk in itself.

Overall, let’s visualize the following image that reflects how this method works:

In code, it looks as follows:

    from langchain.retrievers import ParentDocumentRetriever
    from langchain.storage import InMemoryStore
    from langchain_text_splitters import RecursiveCharacterTextSplitter
    from langchain_openai import OpenAIEmbeddings
    from langchain_community.vectorstores import Chroma

    # documents = Read csv files. Check jupyter notebook for more details
    parent_docs = documents

    # Embedding Model
    embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

    # Splitters
    child_splitter = RecursiveCharacterTextSplitter(chunk_size=200)
    # We don't need a parent splitter because the data comes from CSV files, and each row is a parent doc.
    # parent_splitter = RecursiveCharacterTextSplitter(chunk_size=800)

    # Stores
    store = InMemoryStore()
    vectorstore = Chroma(embedding_function=embeddings, collection_name="fullDoc", persist_directory="./JohnWick_db_parentsRD")

    parent_document_retriever = ParentDocumentRetriever(
        vectorstore=vectorstore,
        docstore=store,
        child_splitter=child_splitter,
        # parent_splitter=parent_splitter
    )

    # Assumed step, not shown in the original listing: index the documents so that
    # child chunks go to the vector store and parent docs go to the docstore.
    parent_document_retriever.add_documents(parent_docs, ids=None)

Something intuitive about what happens here is that the number of chunks in the vector store (number of child chunks) should be much higher than the number of documents stored in memory (parent chunks). With the following code we can check it:

    print(f"Number of parent chunks is: {len(list(store.yield_keys()))}")
    print(f"Number of child chunks is: {len(parent_document_retriever.vectorstore.get()['ids'])}")

    '''
    Number of parent chunks is: 75
    Number of child chunks is: 3701
    '''

Great, we now have our Parent Document Retriever. We just need to create our RAG based on this retriever, exactly as in the previous method. Below is the code for creating the chain in langchain. To see more details, take a look at the jupyter notebook.

    setup_and_retrieval = RunnableParallel({"question": RunnablePassthrough(), "context": parent_document_retriever})
    output_parser = StrOutputParser()

    parent_retrieval_chain = setup_and_retrieval | rag_prompt | chat_model | output_parser

Note that it is exactly the same as in the previous case, only with the small difference that in the “setup_and_retrieval” variable, we configure that we want to use our “parent_document_retriever”, instead of the “naive_retriever”.

Code in 3_self_query_retriever.ipynb file.

This is possibly one of the most effective methods for improving the efficiency of our retriever.

Its main feature is that it is capable of performing searches in the vector store, applying filters based on the metadata.

We know that when we apply a “Naive retrieval”, we are calculating the similarity of all the chunks of the vector database with the query. The more chunks the vector store has, the more similarity calculations will have to be done. Now, imagine being able to do a prior filter based on the metadata, and after selecting the chunks that meet the conditions imposed in relation to the metadata, calculate similarities. This can drastically reduce computational and time cost.

Let’s look at a use case to fully understand when to apply this type of retrieval.

Let’s imagine that we have stored in our vector database a large number of experiences and leisure offers (Ex: surf classes, zip line, gastronomic route, etc.). The description of the experience is what we have encoded, using our embedding model. Additionally, each offer has 3 key values or metadata: Date, price and place.

Let’s imagine that a user is looking for an experience of this style: An experience in nature, that is for the whole family and safe. Furthermore, the price must be less than $50 and the place is California.

Something is clear here:

WE DO NOT WANT TO RETURN ACTIVITIES/EXPERIENCES THAT DO NOT MEET THE PRICE OR PLACE THAT THE USER REQUESTS.

Therefore, it does not make sense to calculate similarities with chunks/experiences that do not comply with the metadata filter.

This case is ideal for applying Self Query Retriever. What this type of retriever allows us is to perform a first filter through the metadata, and then perform the similarity calculation between the chunks that meet the metadata requirements and the user input.
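The filter-then-compare idea can be sketched in a few lines. This is a minimal illustration with NumPy, not how the Self Query Retriever is implemented: the `experiences` catalogue, its 3-dimensional embeddings, and the `filtered_search` helper are all hypothetical names made up for the example.

```python
import numpy as np

# Hypothetical toy catalogue: each offer has an embedding plus metadata.
experiences = [
    {"name": "Family forest hike", "price": 30, "place": "California",
     "embedding": np.array([0.9, 0.1, 0.0])},
    {"name": "Helicopter tour", "price": 250, "place": "California",
     "embedding": np.array([0.8, 0.2, 0.1])},
    {"name": "Kayak with kids", "price": 45, "place": "Oregon",
     "embedding": np.array([0.85, 0.15, 0.0])},
    {"name": "Wine tasting", "price": 40, "place": "California",
     "embedding": np.array([0.1, 0.9, 0.2])},
]


def cosine_similarity(a, b):
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def filtered_search(query_embedding, items, max_price, place, k=2):
    # Step 1: cheap metadata filter, no similarity is computed yet.
    candidates = [it for it in items
                  if it["price"] < max_price and it["place"] == place]
    # Step 2: similarity is computed only against the surviving candidates.
    scored = [(cosine_similarity(query_embedding, it["embedding"]), it["name"])
              for it in candidates]
    scored.sort(reverse=True)
    return scored[:k], len(candidates)


# Stand-in vector for "an experience in nature, for the whole family and safe".
query_embedding = np.array([1.0, 0.0, 0.0])
results, n_compared = filtered_search(
    query_embedding, experiences, max_price=50, place="California"
)
print(results, n_compared)
```

Only the offers that pass the price and place conditions ever reach the similarity step, which is where the savings come from when the store holds many chunks.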

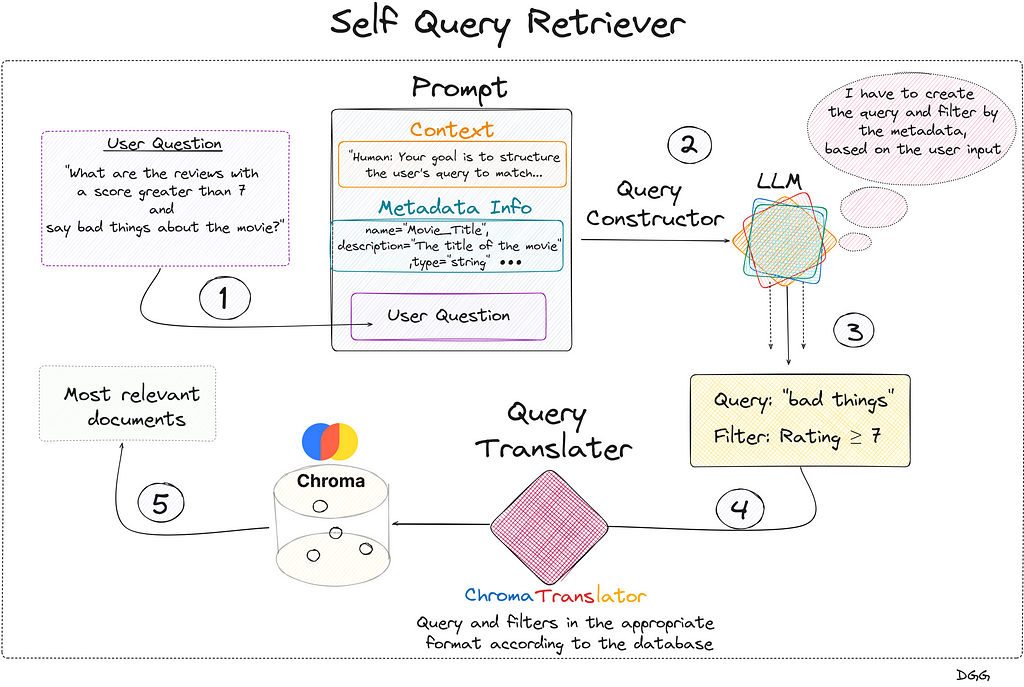

This technique can be summarized in two very specific steps: the Query Constructor and the Query Translator.

The objective of the step called “Query Constructor” is to create the appropriate query and filters according to the user input.

Who is in charge of applying the corresponding filters and how do you know what they are?

For this we are going to use an LLM. This LLM will have to be able to decide which filters to apply and when. We will also have to explain beforehand what the metadata is and what each of them means. In short, the prompt must contain 3 key points:

The output generated by the LLM cannot be directly entered into the database. Therefore, the so-called “Query Translator” is needed.

This is a module in charge of translating the output of the LLM (Query Constructor) into the appropriate format to perform the query. Which translator you need depends on the vector database you use. In my case I used Chroma DB, so I need a translator built for this database. Luckily, LangChain has specific translators for almost all vector databases.

As you may have already noticed, I am a big fan of diagrams. Let’s look at the following which provides quite a bit of clarity to the matter:

As the previous image shows, everything begins with the user’s query. We build the prompt containing the 3 key fields and pass it to the LLM, which generates a response with two key fields: “Query” and “Filter”. This is fed into the query translator, which translates these two fields into the format required by Chroma DB. The translated query is then executed against the vector store, returning the most relevant documents for the user’s initial question.

Something to emphasize is that the query entered by the user does not have to be the same as the one entered into the database. In the diagram shown, it can be seen that the LLM, taking into account the available metadata and the user’s question, detects that it can create a filter with the “Rating” metadata. It also creates a new query based on the user’s query.
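To make the translation step concrete, here is a minimal sketch of what a translator has to do. It is not LangChain’s `ChromaTranslator`: the `structured_query` dictionary and the `translate_for_chroma` helper are hypothetical, and the only thing assumed about Chroma is its metadata filter syntax with operators such as `$gt` and `$eq`.

```python
# Hypothetical structured output of the Query Constructor step.
structured_query = {
    "query": "John Wick 3 reviews",
    "filter": {"attribute": "Rating", "comparator": "gt", "value": 7},
}

# Chroma metadata filters use operators such as "$gt" and "$eq".
COMPARATOR_TO_CHROMA = {
    "eq": "$eq", "ne": "$ne",
    "gt": "$gt", "gte": "$gte",
    "lt": "$lt", "lte": "$lte",
}


def translate_for_chroma(structured):
    """Turn the generic filter into a Chroma-style `where` dictionary."""
    f = structured["filter"]
    operator = COMPARATOR_TO_CHROMA[f["comparator"]]
    where = {f["attribute"]: {operator: f["value"]}}
    return structured["query"], where


query_text, where_clause = translate_for_chroma(structured_query)
print(query_text, where_clause)
```

The rewritten query text is what gets embedded and compared, while the `where` clause restricts which chunks are eligible in the first place.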

Let’s look at all this in code. As I have explained, it is very important to provide the LLM with a detailed description of the metadata available in the vector store. This translates into the following piece of code:

from langchain.chains.query_constructor.base import AttributeInfo
from langchain.retrievers.self_query.base import SelfQueryRetriever
from langchain_openai import ChatOpenAI
from langchain.retrievers.self_query.chroma import ChromaTranslator

metadata_field_info = [
    AttributeInfo(
        name="Movie_Title",
        description="The title of the movie",
        type="string",
    ),
    AttributeInfo(
        name="Review_Date",
        description="The date of the review",
        type="string",
    ),
    AttributeInfo(
        name="Review_Title",
        description="The title of the review",
        type="string",
    ),
    AttributeInfo(
        name="Review_Url",
        description="The URL of the review",
        type="string",
    ),
    AttributeInfo(
        name="Author",
        description="The author of the review",
        type="string",
    ),
    AttributeInfo(
        name="Rating",
        description="A 1 to 10 rating for the movie",
        type="integer",
    ),
]

To define our retrieval we must define the following points:

Let’s see what it looks like in code:

document_content_description = "A review of the John Wick movie."

embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

chat_model = ChatOpenAI()

self_query_retriever = SelfQueryRetriever.from_llm(
    llm=ChatOpenAI(temperature=0),
    vectorstore=vectordb,
    document_contents=document_content_description,
    metadata_field_info=metadata_field_info,
    verbose=True,
    structured_query_translator=ChromaTranslator(),
)

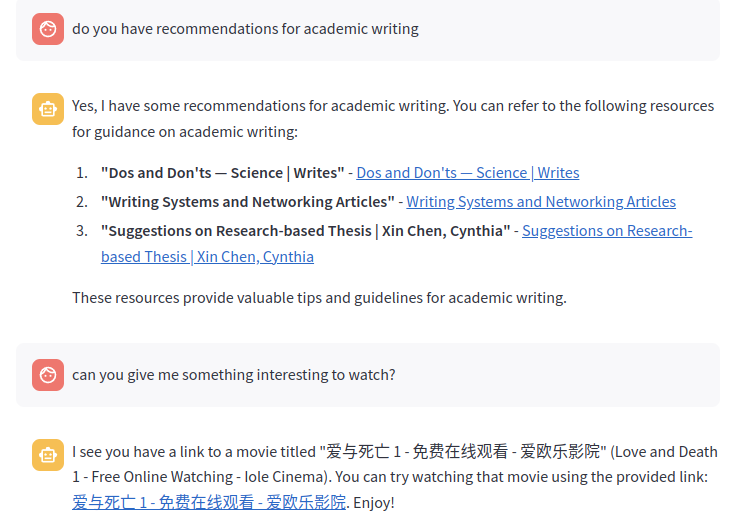

Let’s see with a very clear example how we have greatly improved our RAG by using this type of retriever. First we use a naive retriever and then a self query retriever.

Question = "Make a summary of the reviews that talk about John Wick 3 and have a score higher than 7"

response = naive_retrieval_chain.invoke(Question)

print(response)

'''

I don't know the answer.

'''

------------------------------------------------------------------------

response = self_retrieval_chain.invoke(Question)

print(response)

'''

John Wick: Chapter 3 - Parabellum is quite literally

about consequences, dealing with the fallout of John's...

'''

As we can see, there is a notable improvement.

Code in 4_contextual_compression_retriever(reranking).ipynb file.

There seems to be no solution for this problem. When we increase one of the metrics, the other seems destined to decrease. Are we sure about that?

This is where the contextual compression retriever comes in, here focused on the reranking technique. This technique consists of two very different steps:

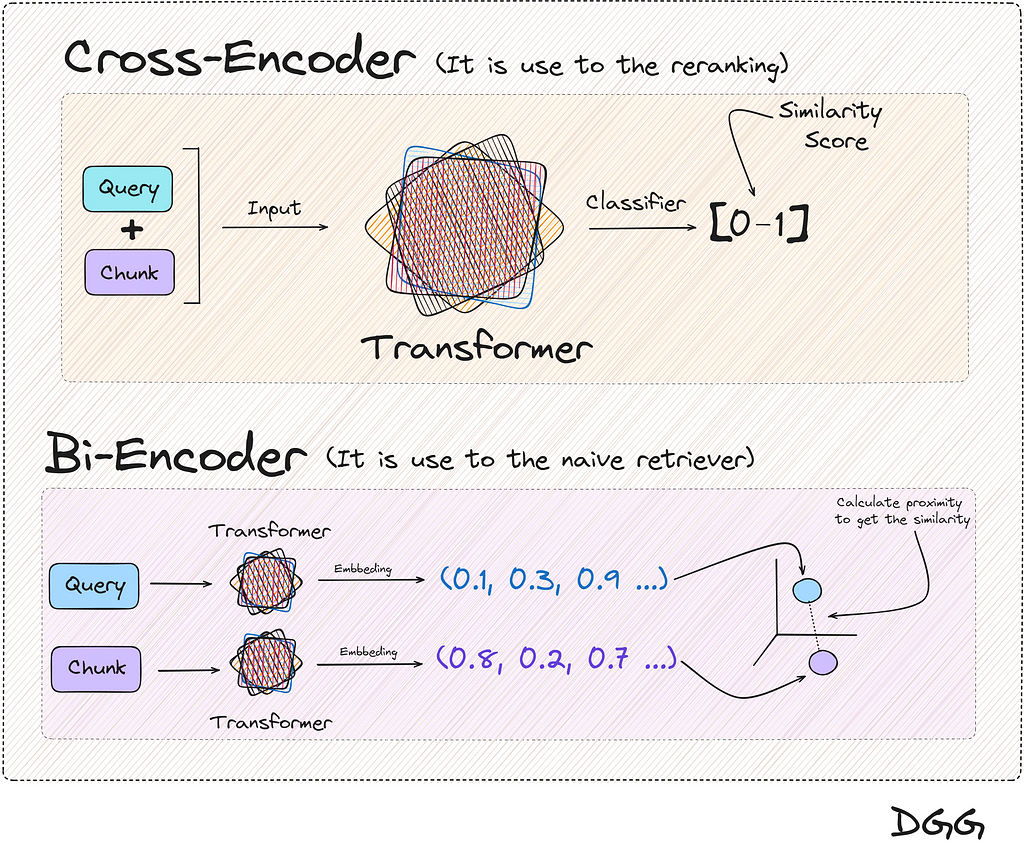

For the first step, what is known as a Bi-Encoder is used, which is nothing more than what we usually use to build a basic RAG: vectorize our documents, vectorize the query, and calculate the similarity with any metric of our choice.

The second step is something different from what we are used to seeing. This recalculation/reranking is executed by the reranking model or cross-encoder.

These models expect two documents/texts as input, returning a similarity score between the pair.

If one of these two inputs is the query and the other is a chunk, we can calculate the similarity between the two.

These two methods can be displayed as follows:

You will have realized that the two methods in the end provide the same result, a metric that reflects the similarity between two texts. And this is totally true, but there is a key feature:

The result returned by the cross-encoder is much more reliable than the one returned by the bi-encoder.

Okay, it works better, so why don’t we use it directly on all chunks instead of just the top K chunks? Because it would be terribly expensive in time and money/computation. For this reason, we first filter down to the chunks closest in similarity to the query, reducing the use of the reranking model to only K calls.

A good question would be where to find the Cross-Encoder models? We are lucky that there are open source models that we can find in HuggingFace, but for the practical case of this post we are going to use the model made available by the company Cohere.


To better understand the architecture of this method, let’s look at a visual example.

The image shows the steps:

Returning to computational cost and time: if cross-encoders were applied directly, every new query would require computing the similarity between the query and each of the documents, which is far from optimal.

On the other hand, using Bi-Encoders, the vector representation of the documents is the same for each new query.

We then have a much superior method that is expensive to execute, and on the other hand, another method that works well but does not have a large computational cost with each new query. All this ends with the conclusion of unifying these two methods for a better RAG. And this is known as the Contextual Compression with reranking method.
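The two stages can be sketched as follows. This is a toy illustration, not the Cohere reranker: the 2-dimensional document vectors are made up, and `toy_pair_scorer` is a hypothetical word-overlap function standing in for a real cross-encoder model.

```python
import numpy as np


def top_k_by_cosine(query_vec, doc_vecs, k):
    # Stage 1 (bi-encoder style): document vectors are computed once and
    # reused for every query, so this step is cheap.
    doc_norm = doc_vecs / np.linalg.norm(doc_vecs, axis=1, keepdims=True)
    q_norm = query_vec / np.linalg.norm(query_vec)
    scores = doc_norm @ q_norm
    return np.argsort(-scores)[:k]


def toy_pair_scorer(query, doc):
    # Hypothetical stand-in for a cross-encoder: fraction of query words
    # that also appear in the document.
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q)


def rerank(query, docs, candidate_ids, pair_scorer, top_n):
    # Stage 2 (cross-encoder style): each (query, document) pair is scored
    # jointly, but only for the K candidates from stage 1.
    scored = [(pair_scorer(query, docs[i]), int(i)) for i in candidate_ids]
    scored.sort(reverse=True)
    return [i for _, i in scored[:top_n]]


docs = [
    "john wick is a great action movie",
    "the dog in john wick 3 steals the show",
    "a slow romantic drama",
    "john wick 3 has great action",
]
doc_vecs = np.array([[0.9, 0.1], [0.7, 0.3], [0.0, 1.0], [0.8, 0.2]])
query, query_vec = "john wick 3 action", np.array([1.0, 0.0])

candidate_ids = top_k_by_cosine(query_vec, doc_vecs, k=3)
reranked = rerank(query, docs, candidate_ids, toy_pair_scorer, top_n=2)
print(candidate_ids, reranked)
```

The expensive pair scorer runs 3 times here instead of once per document in the store, and the final order can differ from the order produced by the first stage.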

Let’s move on to the code part. Let’s remember that this method uses a retriever, which in our case will be a Naive Retriever:

naive_retriever = vectordb.as_retriever(search_kwargs={ "k" : 10})

Thanks to Langchain and its integration with Cohere, we only have to import the module that will execute the call to the Cohere cross-encoder model:

import os

from langchain_cohere import CohereRerank

os.environ["COHERE_API_KEY"] = "YOUR API KEY FROM COHERE"

compressor = CohereRerank(top_n=3)

Finally, we create our Contextual Compression Retriever with Langchain:

from langchain.retrievers.contextual_compression import ContextualCompressionRetriever

compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor,
    base_retriever=naive_retriever,
)

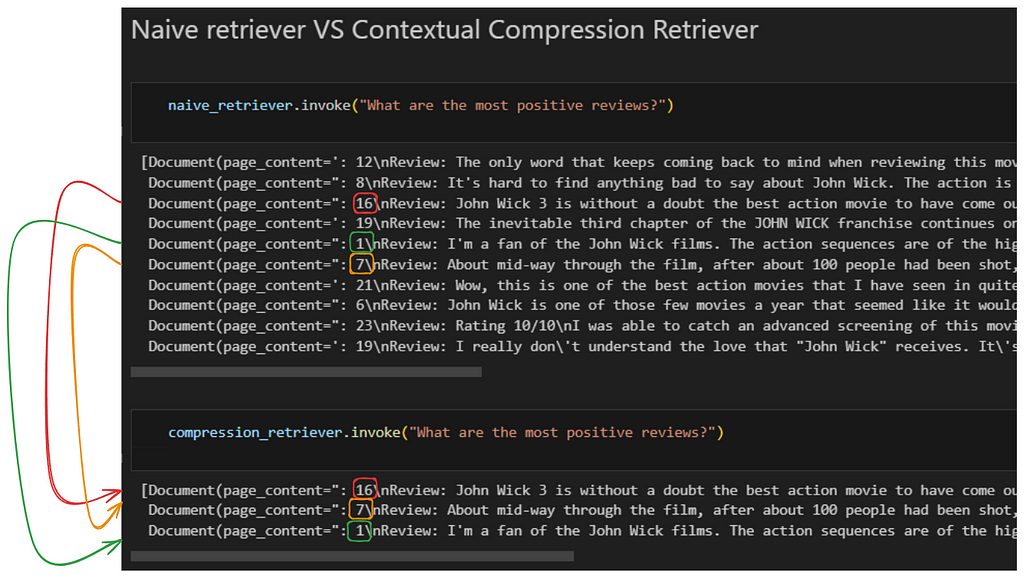

As simple as that. Let’s see a comparison between a Naive Retriever and a Reranking Retriever:

As we see, Naive returns us the top 10 chunks/documents. After performing the reranking and obtaining the 3 most relevant documents/chunks, there are noticeable changes. Notice how document number 16, which is in third position in relation to its relevance in the first retriever, becomes first position when performing the reranking.

We have seen that, depending on the characteristics of the case where we want to apply a RAG, we will want to use one method or another. There may also be cases in which it is not clear which retriever method to use. For this, there are different libraries to evaluate your RAGs.

There are several tools for this purpose. Some of those options that I personally recommend are the combination of RAGAS and LangSmith.

Evaluating RAG pipelines with Ragas + LangSmith

I highly recommend following, learning and watching the videos of these people who are really what inspired me to make this article.

Thank you for reading!


Advanced Retriever Techniques to Improve Your RAGs was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


Convolutional Neural Networks (CNNs) are a specialized kind of deep neural network designed primarily for processing structured array data such as images. CNNs operate by recognizing patterns directly from pixel data of images, eliminating the need for manual feature extraction. They are particularly powerful in understanding the spatial hierarchy in images, utilizing learnable filters that process data in patches and thus preserving the spatial relationships between pixels.

These networks are incredibly effective at tasks that involve large amounts of visual data and are widely used in applications ranging from image and video recognition to real-time object detection, playing pivotal roles in advancements like facial recognition technology and autonomous vehicles.

In this article, we’ll explore AlexNet, a groundbreaking CNN architecture that has significantly influenced the field of computer vision. Known for its robust performance on various visual recognition tasks, AlexNet utilizes deep learning to interpret complex imagery directly. We’ll break down the mathematics behind its operations and the coding framework that powers it.

Index

· 2: Overview of AlexNet Architecture

∘ 2.1: General Layer Structure

∘ 2.2: Output Layer and Softmax Classification

· 3: In-depth Analysis of AlexNet Components

∘ 3.1: ReLU Nonlinearity

∘ 3.2: Training on Multiple GPUs

∘ 3.3: Local Response Normalization

∘ 3.4: Overlapping Pooling

∘ 3.5: Fully Connected Layers and Dropout

∘ 3.6: Dropout

· 4: Training Process and Optimization

∘ 4.1: Stochastic Gradient Descent Parameters

∘ 4.2: Initialization

∘ 4.3: Strategy for Adjusting the Learning Rate

· 5: Building AlexNet in Python

∘ 5.1: AlexNet Class

∘ 5.2: Early Stopping Class

∘ 5.3: Trainer Class

∘ 5.4: Data Preprocessing

∘ 5.5: Model Training and Evaluation

AlexNet is a pioneering deep learning network that rose to prominence after winning the ImageNet Large Scale Visual Recognition Challenge in 2012. Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, AlexNet achieved a top-5 error rate of 15.3%, compared with 26.2% for the second-best entry, setting a new benchmark for the field. This achievement highlighted the effectiveness of CNNs that use ReLU activations, GPU acceleration, and dropout regularization to manage complex image classification tasks across large datasets.

The model comprises several layers that have become standard in most deep-learning CNNs today. These include convolutional layers, max-pooling, dropout, fully connected layers, and a softmax output layer. The model’s success demonstrated the practicality of deeper network architectures through creative approaches to design and training.

In this article, we will break down the sophisticated design and mathematical principles that underpin AlexNet. We’ll also review AlexNet’s training procedures and optimization techniques, and we will build it from scratch using PyTorch.

AlexNet’s architecture cleverly extracts features through a hierarchical layering system where each layer builds on the previous layers’ outputs to refine the feature extraction process. Here’s a detailed breakdown of its layers and functions:

Input Image

The model processes input images resized to 227×227 pixels. Each image has three channels (Red, Green, and Blue), reflecting standard RGB encoding.

Layer Configuration

It consists of eight primary layers that learn weights, five of which are convolutional, and the remaining three are fully connected. Between these layers, activation functions, normalization, pooling, and dropout are strategically applied to improve learning efficacy and combat overfitting.

Convolutional Layers

The initial layer uses 96 kernels (filters) sized 11x11x3, which convolve with the input image using a stride of 4 pixels. This large stride size helps reduce the output spatial volume size significantly, making the network computationally efficient right from the first layer.
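The effect of the large stride on the output size can be checked with the standard output-size formula for convolution and pooling. This is a small sketch assuming no padding in the first layer; `conv_output_size` is a helper name chosen for this example.

```python
def conv_output_size(input_size, kernel_size, stride, padding=0):
    """Spatial output size of a convolution or pooling window."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


# First convolutional layer: 227x227 input, 11x11 kernels, stride 4.
conv1 = conv_output_size(227, kernel_size=11, stride=4)

# Max-pooling that follows it: 3x3 window, stride 2.
pool1 = conv_output_size(conv1, kernel_size=3, stride=2)

print(conv1, pool1)  # 55 27
```

With a stride of 4, a 227×227 image is reduced to a 55×55 map per kernel after a single layer, which is what keeps the later layers affordable.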

Outputs from the first layer undergo normalization and max-pooling before reaching the second convolutional layer, which consists of 256 kernels, each of size 5x5x48. The depth of 48 reflects the two-GPU split: the first layer’s 96 feature maps are divided evenly between the GPUs, so each second-layer kernel sees only the 48 maps that reside on its own GPU.

The third convolutional layer is connected to the fourth without any intervening pooling or normalization, which helps preserve the richness of the feature maps derived from previous layers. It includes 384 kernels of size 3x3x256, connected to the normalized and pooled outputs of the second layer on both GPUs, enhancing the network’s ability to capture complex features.

The fourth convolutional layer mirrors the third layer’s configuration but uses 384 kernels of size 3x3x192, enhancing the depth of the network without altering the layer’s spatial dimensions.

The final convolutional layer employs 256 kernels of size 3x3x192 and is followed by a max-pooling layer, which helps to reduce dimensionality and provides a degree of translational invariance to the features being learned.

Fully Connected Layers

The first fully connected layer is a dense layer with 4096 neurons. It takes the flattened output from the preceding convolutional layers (transformed into a 1D vector) and projects it onto a high-dimensional space to learn non-linear combinations of the features.

The second fully connected layer also features 4096 neurons and includes dropout regularization. Dropout helps prevent overfitting by randomly setting a fraction of input units to zero during training, which encourages the network to learn more robust features that are not reliant on any small set of neurons.

The final fully connected layer comprises 1000 neurons, each corresponding to a class of the ImageNet challenge. This layer is essential for class prediction, and it typically utilizes a softmax function to derive the classification probabilities.

The final layer in AlexNet is a softmax regression layer which outputs a distribution over the 1000 class labels by applying the softmax function to the logits of the third fully connected layer.

The softmax function is given by:

$$\text{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j} e^{z_j}}$$

where $z_i$ are the logits or the raw prediction scores for each class from the final fully connected layer.

This layer essentially converts the scores into probabilities by comparing the exponentiated score of each class with the sum of exponentiated scores for all classes, highlighting the most probable class.

The softmax layer not only outputs these probabilities but also forms the basis for the cross-entropy loss during training, which measures the difference between the predicted probability distribution and the actual distribution (the true labels).
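As a quick illustration of how the softmax output feeds the cross-entropy loss, here is a minimal NumPy sketch for a single example. The three logits are made-up values, and subtracting the maximum logit is a common numerical-stability trick that does not change the result.

```python
import numpy as np


def softmax(logits):
    # Subtracting the max leaves the output unchanged but avoids overflow.
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / np.sum(exp)


def cross_entropy(probs, true_class):
    # Negative log-probability assigned to the correct class.
    return -np.log(probs[true_class])


logits = np.array([2.0, 1.0, 0.1])  # hypothetical scores for 3 classes
probs = softmax(logits)
loss = cross_entropy(probs, true_class=0)

print(probs, probs.sum(), loss)
```

The loss shrinks toward zero as the probability assigned to the true class approaches 1, and grows as that probability falls.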

The Rectified Linear Unit (ReLU) has become a standard activation function for deep neural networks, especially CNNs like AlexNet. Its simplicity allows models to train faster and converge more effectively compared to networks using sigmoid or tanh functions.

The mathematical representation of ReLU is straightforward:

$$f(x) = \max(0, x)$$

This function outputs x if x is positive; otherwise, it outputs zero.

Graphically, it looks like a ramp function that increases linearly for all positive inputs and is zero for negative inputs.

Advantages Over Sigmoid and Tanh

ReLU has several advantages over traditional activation functions such as sigmoid:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

and hyperbolic tangent:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

ReLU helps neural networks converge faster by addressing the vanishing gradient problem. This problem occurs with sigmoid and tanh functions where gradients become very small (approach zero) as inputs become large, in either positive or negative direction. This small gradient slows down the training significantly as it provides very little update to the weights during backpropagation. In contrast, the gradient of the ReLU function is either 0 (for negative inputs) or 1 (for positive inputs), which simplifies and speeds up gradient descent.
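The difference in gradients is easy to see numerically. The sketch below evaluates the derivative of each activation at a few sample inputs; the sample points are arbitrary.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2


def relu_grad(x):
    # 1 for positive inputs, 0 otherwise.
    return (x > 0).astype(float)


xs = np.array([-10.0, -1.0, 1.0, 10.0])
for name, grad in [("sigmoid", sigmoid_grad), ("tanh", tanh_grad), ("relu", relu_grad)]:
    print(name, grad(xs))
```

For inputs of large magnitude, the sigmoid and tanh gradients are close to zero, while the ReLU gradient stays at 1 for any positive input.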

It promotes sparsity of the activation. Since it outputs zero for half of its input domain, it inherently produces sparse data representations. Sparse representations seem to be more beneficial than dense representations (as typically produced by sigmoid or tanh functions), particularly in large-scale image recognition tasks where the inherent data dimensionality is very high but the informative part is relatively low.

Moreover, ReLU involves simpler mathematical operations. For any input value, this activation function requires a single max operation, whereas sigmoid and tanh involve more complex exponential functions, which are computationally more expensive. This simplicity of ReLU leads to much faster computational performance, especially beneficial when training deep neural networks on large datasets.

Because ReLU does not saturate for positive inputs, it avoids the flat regions found at both extremes of the sigmoid and tanh functions, where the output barely changes as the input grows. This characteristic allows the network to model the data more cleanly and avoid potential pitfalls in training dynamics.

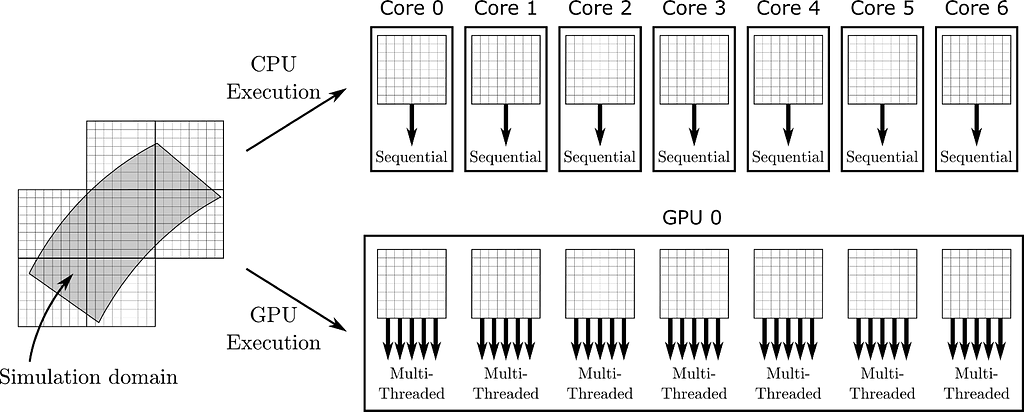

AlexNet was one of the pioneering convolutional neural networks to leverage parallel GPU training, managing its deep and computation-heavy architecture. The network operates on two GPUs simultaneously, a core part of its design that greatly improves its performance and practicality.

Layer-wise Distribution

AlexNet’s layers are distributed between two GPUs. Each GPU processes half of the neuron activations (kernels) in the convolutional layers. Specifically, the kernels in the third layer receive inputs from all kernel maps of the second layer, whereas the fourth and fifth layers only receive inputs from kernel maps located on the same GPU.

Communication Across GPUs

The GPUs need to communicate at specific layers crucial for combining their outputs for further processing. This inter-GPU communication is essential for integrating the results of parallel computations.

Selective Connectivity

Not every layer in AlexNet is connected across both GPUs. This selective connectivity reduces the amount of data transferred between GPUs, cutting down on communication overhead and enhancing computation efficiency.

This strategy of dividing not just the dataset but also the network model across two GPUs enables AlexNet to handle more parameters and larger input sizes than if it were running on a single GPU. The extra processing power allows AlexNet to handle its 60 million parameters and the extensive computations required for training deep networks on large-scale image classification tasks efficiently.
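The figure of roughly 60 million parameters can be reproduced from the layer sizes given in this article. This is a back-of-the-envelope sketch: it assumes one bias per kernel or neuron and a 256×6×6 input to the first fully connected layer, and the per-kernel depths of 48 and 192 reflect the two-GPU split.

```python
# (number of kernels, kernel height, kernel width, input depth seen by each kernel)
conv_layers = {
    "conv1": (96, 11, 11, 3),
    "conv2": (256, 5, 5, 48),    # each kernel sees only its own GPU's 48 maps
    "conv3": (384, 3, 3, 256),   # connected across both GPUs
    "conv4": (384, 3, 3, 192),
    "conv5": (256, 3, 3, 192),
}

# (input features, output features)
fc_layers = {
    "fc1": (256 * 6 * 6, 4096),
    "fc2": (4096, 4096),
    "fc3": (4096, 1000),
}


def count_parameters():
    total = 0
    for n_kernels, kh, kw, depth in conv_layers.values():
        total += n_kernels * kh * kw * depth + n_kernels  # weights + biases
    for n_in, n_out in fc_layers.values():
        total += n_in * n_out + n_out                     # weights + biases
    return total


total_params = count_parameters()
print(f"{total_params:,}")  # 60,965,224
```

Most of the parameters sit in the fully connected layers, with the first one alone accounting for well over half of the total.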

Training with larger batch sizes is more feasible with multiple GPUs. Larger batches provide more stable gradient estimates during training, which is vital for efficiently training deep networks. While not directly a result of using multiple GPUs, the ability to train with larger batch sizes and more rapid iteration times helps combat overfitting. The network experiences a more diverse set of data in a shorter amount of time, which enhances its ability to generalize from the training data to unseen data.

Local Response Normalization (LRN) in AlexNet is a normalization strategy that plays a crucial role in the network’s ability to perform well in image classification tasks. This technique is applied to the output of the ReLU non-linearity activation function.

LRN aims to encourage lateral inhibition, a biological process where activated neurons suppress the activity of neighboring neurons in the same layer. This mechanism works under the “winner-takes-all” principle, where neurons showing relatively high activity suppress the less active neurons around them. This dynamic allows the most significant features relative to their local neighborhood to be enhanced while suppressing the lesser ones.

The LRN layer computes a normalized output for each neuron by performing a sort of lateral inhibition by damping the responses of neurons when their locally adjacent neurons exhibit high activity.

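Given a neuron’s activity $a^{i}_{x,y}$ at position (x, y) in the feature map i, the response-normalized activity $b^{i}_{x,y}$ is given by:

$$b^{i}_{x,y} = \frac{a^{i}_{x,y}}{\left(k + \alpha \sum_{j=\max(0,\, i-n/2)}^{\min(N-1,\, i+n/2)} \left(a^{j}_{x,y}\right)^{2}\right)^{\beta}}$$

where N is the total number of kernels in the layer, n is the number of adjacent kernel maps included in the sum, and k, α, and β are hyperparameters. AlexNet uses k = 2, n = 5, α = 10⁻⁴, and β = 0.75.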

Local Response Normalization (LRN) serves to implement a form of local inhibition among adjacent neurons, which is inspired by the concept of lateral inhibition found in biological neurons. This inhibition plays a vital role in several key areas:

Activity Regulation

LRN prevents any single feature map from overwhelming the response of the network by penalizing larger activations that lack support from their surroundings. This squaring and summing of neighboring activations ensures no single feature disproportionately influences the output, enhancing the model’s ability to generalize across various inputs.

Contrast Normalization

By emphasizing patterns that stand out relative to their neighbors, LRN functions similarly to contrast normalization in visual processing. This feature highlights critical local features in an image more effectively, aiding in the visual differentiation process.

Error Rate Reduction

In the original experiments, incorporating LRN reduced AlexNet’s top-1 and top-5 error rates on ImageNet by 1.4% and 1.2%, respectively. It manages the high activity levels of neurons, thereby improving the overall robustness of the network.
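To see the normalization in action, here is a minimal NumPy sketch of LRN across channels, using the hyperparameters from the AlexNet paper (k = 2, n = 5, α = 10⁻⁴, β = 0.75) as defaults. The function name and the all-ones input are illustrative choices, and the explicit loop favors readability over speed.

```python
import numpy as np


def local_response_norm(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize activations of shape (channels, height, width) across channels."""
    num_channels = a.shape[0]
    squared = a ** 2
    b = np.empty_like(a)
    for i in range(num_channels):
        # Window of up to n neighboring channels, clipped at the edges.
        lo = max(0, i - n // 2)
        hi = min(num_channels - 1, i + n // 2)
        denom = (k + alpha * squared[lo:hi + 1].sum(axis=0)) ** beta
        b[i] = a[i] / denom
    return b


activations = np.ones((8, 4, 4))  # hypothetical post-ReLU activations
normalized = local_response_norm(activations)
print(normalized[0, 0, 0], normalized[4, 0, 0])
```

A channel in the middle of the stack has more neighbors in its window than one at the edge, so its denominator is slightly larger and its output slightly smaller.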

Overlapping pooling is a technique used in convolutional neural networks (CNNs) to reduce the spatial dimensions of the input data, simplify the computations, and help control overfitting. It modifies the standard non-overlapping (traditional) max-pooling by allowing the pooling windows to overlap.

Traditional Max Pooling

In traditional max pooling, the input image or feature map is divided into distinct, non-overlapping regions, each corresponding to the size of the pooling filter, often 2×2. For each of these regions, the maximum pixel value is determined and output to the next layer. This process reduces the data dimensions by selecting the most prominent features from non-overlapping neighborhoods.

For example, assuming a pooling size (z) of 2×2 and a stride (s) of 2 pixels, the filter moves 2 pixels across and 2 pixels down the input field. The stride of 2 ensures there is no overlap between the regions processed by the filter.

Overlapping Pooling in AlexNet

Overlapping pooling, used by AlexNet, involves setting the stride smaller than the pool size. This approach allows the pooling regions to overlap, meaning the same pixel may be included in multiple pooling operations. It increases the density of the feature mapping and helps retain more information through the layers.

For example, AlexNet uses a pooling size of 3×3 and a stride of 2 pixels. This configuration means that while the pooling filter is larger (3×3), it moves by only 2 pixels each time it slides over the image or feature map. As a result, adjacent pooling regions share a column or row of pixels that gets processed multiple times, enhancing feature integration.
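The difference between the two pooling schemes can be checked on a small input. The following is a simple NumPy sketch with a hypothetical 7×7 feature map; `max_pool2d` is a plain loop-based helper written for this example.

```python
import numpy as np


def max_pool2d(x, size, stride):
    """Max-pool a 2D array with a square window and the given stride."""
    h, w = x.shape
    out_h = (h - size) // stride + 1
    out_w = (w - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()
    return out


feature_map = np.arange(49).reshape(7, 7)

traditional = max_pool2d(feature_map, size=2, stride=2)  # windows do not overlap
overlapping = max_pool2d(feature_map, size=3, stride=2)  # windows share a row/column

print(traditional)
print(overlapping)
```

Both variants move in steps of 2, but with a 3×3 window each output also looks at the row and column shared with its neighbors.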

In the architecture of AlexNet, after several stages of convolutional and pooling layers, the high-level reasoning in the network is done by fully connected layers. Fully connected layers play a crucial role in transitioning from the extraction of feature maps in the convolutional layers to the final classification.

A fully connected (FC) layer takes all neurons in the previous layer (whether they are the output of another fully connected layer, or a flattened output from a pooling or convolutional layer) and connects each of these neurons to every neuron it contains. In AlexNet, there are three fully connected layers following the convolutional and pooling layers.

The first two fully connected layers in AlexNet have 4096 neurons each. These layers are instrumental in integrating the localized, filtered features that the prior layers have identified into global, high-level patterns that can represent complex dependencies in the inputs. The final fully connected layer effectively acts as a classifier: with one neuron for each class label (1000 for ImageNet), it outputs the network’s prediction for the input image’s category.

Each neuron in these layers applies a ReLU (Rectified Linear Unit) activation function except for the output layer, which uses a softmax function to map the output logits (the raw prediction scores for each class) to a probabilistic distribution over the classes.

The output from the final pooling or convolutional layer typically undergoes flattening before being fed into the fully connected layers. This process transforms the 2D feature maps into 1D feature vectors, making them suitable for processing via traditional neural network techniques. The final layer’s softmax function then classifies the input image by assigning probabilities to each class label based on the feature combinations learned through the network.

Dropout is a regularization technique used to prevent overfitting in neural networks, particularly effective in large networks like AlexNet. Overfitting occurs when a model learns patterns specific to the training data, but which do not generalize to new data.

In AlexNet, dropout is applied to the outputs of the first two fully connected layers. Each neuron in these layers has a probability p (commonly set to 0.5, i.e., 50%) of being “dropped,” meaning it is temporarily removed from the network along with all its incoming and outgoing connections.
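A minimal NumPy sketch of this scheme is shown below. It follows the approach described in the AlexNet paper, where units are zeroed during training and outputs are scaled by the keep probability at test time. Many modern frameworks instead use “inverted” dropout, which rescales during training. The function names are chosen for this example.

```python
import numpy as np


def dropout_train(activations, p, rng):
    # Each unit is dropped (set to zero) with probability p.
    keep_mask = rng.random(activations.shape) >= p
    return activations * keep_mask


def dropout_test(activations, p):
    # At test time all units are used, scaled by the keep probability.
    return activations * (1.0 - p)


rng = np.random.default_rng(0)
activations = np.ones(10_000)  # hypothetical fully connected layer outputs

train_out = dropout_train(activations, p=0.5, rng=rng)
test_out = dropout_test(activations, p=0.5)

print(train_out.mean(), test_out.mean())
```

On average the training-time and test-time outputs have the same scale, which is the point of the test-time multiplication.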

If you want to dive deep into Dropout’s math and code, I highly recommend you take a look at section 3.4 of my previous article:

The Math Behind Fine-Tuning Deep Neural Networks

In AlexNet, Stochastic Gradient Descent (SGD) is employed to optimize the network during training. This method updates the network’s weights based on the error gradient of the loss function, where the effective tuning of parameters such as batch size, momentum, and weight decay is critical for the model’s performance and convergence. In today’s article, we will use a PyTorch implementation of SGD, and we will cover a high-level view of this popular optimization technique. If you are interested in a low-level view that works through its math and builds the optimizer from scratch, take a look at this article:

Stochastic Gradient Descent: Math and Python Code

Let’s cover now the main components of SGD and the settings used in AlexNet:

Batch Size

The batch size, which is the number of training examples used to calculate the loss function’s gradient for one update of the model’s weights, is set to 128 in AlexNet. This size strikes a balance between computational efficiency — since larger batches require more memory and computation — and the accuracy of error estimates, which benefit from averaging across more examples.

The choice of a batch size of 128 helps stabilize the gradient estimates, making the updates smoother and more reliable. While larger batches provide a clearer signal for each update by reducing noise in the gradient calculations, they also require more computational resources and may sometimes generalize less effectively from training data to new situations.

Momentum

Momentum in SGD helps accelerate the updates in the correct direction and smoothens the path taken by the optimizer. It modifies the update rule by incorporating a fraction of the previous update vector. In AlexNet, the momentum value is 0.9, implying that 90% of the previous update vector contributes to the current update. This high level of momentum speeds up convergence towards the loss function’s minimum, which is particularly useful when dealing with small but consistent gradients.

Using momentum ensures that updates not only move in the right direction but also build up speed along surfaces of the loss function’s topology that have consistent gradients. This aspect is crucial for escaping from any potential shallow local minima or saddle points more effectively.

Weight Decay

Weight decay acts as a regularization term that penalizes large weights by adding a penalty proportional to their squared magnitude to the loss function, which shrinks each weight slightly at every update. AlexNet sets this parameter at 0.0005 to keep the weights from becoming too large, which could lead to overfitting given the network’s large number of parameters.

Weight decay is essential in complex models like AlexNet, which are prone to overfitting due to their high capacity. By penalizing the magnitude of the weights, weight decay ensures that the model does not rely too heavily on a small number of high-weight features, promoting a more generalized model.

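The update rule for AlexNet’s weights can be described as follows:

$$v_{i+1} = 0.9\, v_i - 0.0005\, \epsilon\, w_i - \epsilon \left\langle \frac{\partial L}{\partial w}\Big|_{w_i} \right\rangle_{D_i}$$

$$w_{i+1} = w_i + v_{i+1}$$

Here, i is the iteration index, v is the momentum variable, ε is the learning rate, and the last term of the first equation is the average over the i-th batch $D_i$ of the derivative of the loss with respect to w, evaluated at $w_i$.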

These settings help ensure that the network not only learns efficiently but also achieves robust performance on both seen and unseen data, optimizing the speed and accuracy of training while maintaining the ability to generalize well.
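The update with momentum 0.9 and weight decay 0.0005 can be written out as a single step in NumPy. This is a sketch of the rule as stated in the AlexNet paper, with an assumed learning rate of 0.01 and made-up weights and gradients. Note that library optimizers may arrange the same terms slightly differently.

```python
import numpy as np


def sgd_momentum_step(w, v, grad, lr=0.01, momentum=0.9, weight_decay=0.0005):
    """One update: v <- momentum*v - weight_decay*lr*w - lr*grad, then w <- w + v."""
    v_new = momentum * v - weight_decay * lr * w - lr * grad
    w_new = w + v_new
    return w_new, v_new


w = np.array([1.0, -2.0])      # hypothetical weights
v = np.zeros_like(w)           # momentum starts at zero
grad = np.array([0.5, -0.5])   # hypothetical batch-averaged gradient

w, v = sgd_momentum_step(w, v, grad)
print(w, v)
```

On later steps, 90% of the previous `v` carries over, so consistent gradients build up speed while the weight decay term keeps pulling every weight gently toward zero.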

Proper initialization of weights and biases and the careful adjustment of the learning rate are critical to training deep neural networks. These factors influence the rate at which the network converges and its overall performance on both training and validation datasets.

Weights Initialization

In AlexNet, the weights for the convolutional layers are initialized from a zero-mean Gaussian distribution with a standard deviation of 0.01. This narrow standard deviation prevents any single neuron from initially overwhelming the output, ensuring a uniform scale of weight initialization.

Similarly, weights in the fully connected layers are initialized from a Gaussian distribution. Special attention is given to the variance of this distribution to keep the output variance consistent across layers, which is crucial for maintaining the stability of deeper networks.

To get a better understanding of this process let’s build the initialization for AlexNet from scratch in Python:

import numpy as np

def initialize_weights(layer_shapes):
    weights = []
    for shape in layer_shapes:
        if len(shape) == 4:  # This is a conv layer: (out_channels, in_channels, filter_height, filter_width)
            std_dev = 0.01  # Standard deviation for conv layers
            fan_in = np.prod(shape[1:])  # product of in_channels, filter_height, filter_width
        elif len(shape) == 2:  # This is a fully connected layer: (out_features, in_features)
            # He initialization: std_dev = sqrt(2. / fan_in)
            fan_in = shape[1]  # number of input features
            std_dev = np.sqrt(2. / fan_in)  # Recommended to maintain variance for ReLU
        else:
            raise ValueError("Invalid layer shape: must be 4D (conv) or 2D (fc)")

        # Gaussian initialization
        weight = np.random.normal(loc=0, scale=std_dev, size=shape)
        weights.append(weight)
    return weights

# Example usage:
layer_shapes = [
    (96, 3, 11, 11),   # Conv1 Layer: 96 filters, 3 input channels, 11x11 filter size
    (256, 96, 5, 5),   # Conv2 Layer: 256 filters, 96 input channels, 5x5 filter size
    (384, 256, 3, 3),  # Conv3 Layer: 384 filters, 256 input channels, 3x3 filter size
    (384, 384, 3, 3),  # Conv4 Layer: 384 filters, 384 input channels, 3x3 filter size
    (256, 384, 3, 3),  # Conv5 Layer: 256 filters, 384 input channels, 3x3 filter size
    (4096, 256*6*6),   # FC1 Layer: 4096 output features, (256*6*6) input features
    (4096, 4096),      # FC2 Layer: 4096 output features, 4096 input features
    (1000, 4096)       # FC3 (output) Layer: 1000 classes, 4096 input features
]

initialized_weights = initialize_weights(layer_shapes)

for idx, weight in enumerate(initialized_weights):
    print(f"Layer {idx+1} weights shape: {weight.shape} mean: {np.mean(weight):.5f} std dev: {np.std(weight):.5f}")

The initialize_weights function takes a list of tuples describing the dimensions of each layer’s weights. Convolutional layers expect four dimensions (number of filters, input channels, filter height, filter width), while fully connected layers expect two dimensions (output features, input features).

In the convolutional layers, the standard deviation is fixed at 0.01, in line with the original AlexNet configuration, to prevent any single neuron from overwhelming the output.

Fully connected layers use He initialization (good practice for layers using ReLU activation), where the standard deviation is set to sqrt(2/fan_in) to keep the output variance consistent, promoting stable learning in deep networks. Note that this is a modern substitution: the original AlexNet paper used the same standard deviation of 0.01 for every layer.

For each layer defined in layer_shapes, weights are initialized from a Gaussian (normal) distribution centered at zero with the standard deviation calculated for that layer type.

Biases Initialization

In the original AlexNet, biases in the second, fourth, and fifth convolutional layers are set to 1. This initialization gives the ReLUs positive inputs, so the neurons are active from the beginning of training and the early stages of learning are faster. Biases in the remaining layers are initialized at 0 to start from a neutral output.

As in those convolutional layers, biases in the fully connected hidden layers are also set to 1. This strategy helps to prevent dead neurons at the start of training by ensuring that neurons are initially in the positive phase of activation.
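The bias scheme can be sketched in the same spirit as the weight initialization. The snippet below is only an illustration: the helper name initialize_biases and the layer labels are assumptions, the choice of which layers get a bias of 1 follows the description in the original AlexNet paper, and the layer widths are taken from the layer_shapes list used earlier.

```python
# Output width of each layer, matching the first dimension of each entry in layer_shapes.
LAYER_OUT_FEATURES = {
    "conv1": 96, "conv2": 256, "conv3": 384, "conv4": 384, "conv5": 256,
    "fc1": 4096, "fc2": 4096, "fc3": 1000,
}

# Layers whose biases start at 1 (per the original paper); all others start at 0.
BIAS_ONE_LAYERS = {"conv2", "conv4", "conv5", "fc1", "fc2"}


def initialize_biases(layer_out_features, ones_layers):
    """Return one bias vector per layer, filled with 1.0 or 0.0."""
    biases = {}
    for name, n_out in layer_out_features.items():
        value = 1.0 if name in ones_layers else 0.0
        biases[name] = [value] * n_out
    return biases


biases = initialize_biases(LAYER_OUT_FEATURES, BIAS_ONE_LAYERS)
for name, bias in biases.items():
    print(f"{name}: {len(bias)} biases initialized to {bias[0]}")
```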

AlexNet begins with an initial learning rate of 0.01. This rate is high enough to allow significant updates to the weights, facilitating rapid initial progress without being so high as to risk the divergence of the learning process.

The learning rate is decreased by a factor of 10 at several points during training, a form of “step decay.” In AlexNet, these points are not fixed in advance: the rate is lowered whenever the validation error rate stops improving at the current learning rate, which in the original paper happened three times before training ended. Reducing the learning rate at these points helps refine the weight adjustments, promoting better convergence.

Starting with a higher learning rate helps the model overcome potential local minima more effectively. As the network begins to stabilize, reducing the learning rate helps it settle into broad, flat minima that are generally better for generalization to new data.

As training progresses, lowering the learning rate allows for finer weight adjustments. This gradual refinement helps the model to not only fit the training data better but also improves its performance on validation data, ensuring the model is not just memorizing the training examples but genuinely learning to generalize from them.
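The “divide by 10 when validation stalls” heuristic can be sketched in a few lines of plain Python. The class name PlateauStepDecay, the patience of 2 epochs, and the validation errors below are all made up for illustration. In practice, PyTorch’s ReduceLROnPlateau scheduler, imported later in this article, automates the same idea.

```python
class PlateauStepDecay:
    """Multiply the learning rate by `factor` when validation error stalls."""

    def __init__(self, initial_lr=0.01, factor=0.1, patience=2):
        self.lr = initial_lr
        self.factor = factor
        self.patience = patience          # stalled epochs tolerated before a cut
        self.best_error = float("inf")
        self.stale_epochs = 0

    def step(self, val_error):
        if val_error < self.best_error:
            # Improvement: remember it and reset the stall counter.
            self.best_error = val_error
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr *= self.factor
                self.stale_epochs = 0
        return self.lr


# Hypothetical validation errors, one per epoch.
val_errors = [0.60, 0.50, 0.45, 0.45, 0.46, 0.40, 0.41, 0.41]
schedule = PlateauStepDecay()
lrs = [schedule.step(err) for err in val_errors]

for epoch, (err, lr) in enumerate(zip(val_errors, lrs), start=1):
    print(f"epoch {epoch}: val error {err:.2f}, learning rate {lr:.5f}")
```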

In this section, we detail the step-by-step process to recreate AlexNet in Python using PyTorch, providing insights into the class architecture, its initial setup, training procedures, and evaluation techniques.

I suggest you keep this Jupyter Notebook open and accessible, as it contains all the code we will be covering today:

Let’s start with building the AlexNet main class:

# PyTorch for creating and training the neural network
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data.dataset import random_split

# platform for getting the operating system
import platform

# torchvision for loading and transforming the dataset
import torchvision
import torchvision.transforms as transforms

# ReduceLROnPlateau for adjusting the learning rate
from torch.optim.lr_scheduler import ReduceLROnPlateau

# numpy for numerical operations
import numpy as np

# matplotlib for plotting
import matplotlib.pyplot as plt

class AlexNet(nn.Module):
    def __init__(self, num_classes=1000):
        super(AlexNet, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
        self.classifier = nn.Sequential(
            nn.Dropout(),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.classifier(x)
        return x

Initialization

class AlexNet(nn.Module):
    def __init__(self, num_classes=1000):
        super(AlexNet, self).__init__()

The AlexNet class inherits from nn.Module, a base class for all neural network modules in PyTorch. Any new network architecture in PyTorch is created by subclassing nn.Module.

The initialization method defines how the AlexNet object should be constructed when instantiated. It optionally takes a parameter num_classes to allow for flexibility in the number of output classes, defaulting to 1000, which is typical for ImageNet tasks.

Feature Layers

self.features = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(64, 192, kernel_size=5, padding=2),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(192, 384, kernel_size=3, padding=1),
    nn.ReLU(inplace=True),
    nn.Conv2d(384, 256, kernel_size=3, padding=1),
    nn.ReLU(inplace=True),
    nn.Conv2d(256, 256, kernel_size=3, padding=1),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(kernel_size=3, stride=2),
)

Here is where the convolutional layers of AlexNet are defined. The nn.Sequential container wraps a sequence of layers, and data passes through these layers in the order they are added.

nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2)

The first layer is a 2D convolutional layer (nn.Conv2d) with 3 input channels (RGB image), and 64 output channels (feature maps), with a kernel size of 11×11, a stride of 4, and padding of 2 on each side. This layer processes the input image and begins the feature extraction.

nn.ReLU(inplace=True)

Then, we apply the ReLU activation function, which introduces non-linearity and allows the model to learn complex patterns. The inplace=True parameter saves memory by overwriting the input tensor instead of allocating a new one.

nn.MaxPool2d(kernel_size=3, stride=2)

The max-pooling layer reduces the spatial dimensions of the input feature maps, making the model more robust to the position of features in the input images. It uses a window of size 3×3 and a stride of 2.

Additional nn.Conv2d and nn.MaxPool2d layers follow, which further refine and compact the feature representation. The number of feature maps grows from 64 to 192 to 384 before settling at 256, while the pooling layers shrink the spatial dimensions. This pattern helps the network move from raw spatial input to features that carry progressively more semantic information.
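To see how the spatial size shrinks through the feature layers, we can apply the standard output-size formula for convolution and pooling, floor((n + 2·padding − kernel) / stride) + 1, to each layer in turn. The sketch below is plain Python and does not need PyTorch. The 224×224 input size is an assumption (the usual ImageNet crop), and the names out_size, FEATURE_LAYERS, and trace_sizes are illustrative.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer for a square input of side n."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel_size, stride, padding) for each conv/pool layer in self.features.
# ReLU layers are omitted because they do not change the spatial size.
FEATURE_LAYERS = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def trace_sizes(input_size=224):
    """Return the spatial size after each layer, in order."""
    sizes = []
    n = input_size
    for name, kernel, stride, padding in FEATURE_LAYERS:
        n = out_size(n, kernel, stride, padding)
        sizes.append((name, n))
    return sizes


sizes = trace_sizes(224)
for name, n in sizes:
    print(f"{name}: {n}x{n}")
```

For a 224×224 input the sizes come out as 55, 27, 27, 13, 13, 13, 13, and finally 6, which is why the first fully connected layer expects 256 * 6 * 6 inputs.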

Adaptive Pooling and Classifier

self.avgpool = nn.AdaptiveAvgPool2d((6, 6))

self.avgpool adaptively pools the feature maps to a fixed size of 6×6, which is necessary for matching the input size requirement of the fully connected layers, allowing the network to handle various input dimensions.

self.classifier = nn.Sequential(
    nn.Dropout(),
    nn.Linear(256 * 6 * 6, 4096),
    nn.ReLU(inplace=True),
    nn.Dropout(),
    nn.Linear(4096, 4096),
    nn.ReLU(inplace=True),
    nn.Linear(4096, num_classes),
)

Here, we define another sequential container named classifier, which contains the fully connected layers of the network. These layers are responsible for making the final classification based on the abstract features extracted by the convolutional layers.

nn.Dropout() randomly zeroes some of the elements of the input tensor with a probability of 0.5 for each forward call, which helps prevent overfitting.

nn.Linear(256 * 6 * 6, 4096) maps the flattened feature maps from the adaptive pooling layer (a vector of 256 × 6 × 6 = 9216 values) to a vector of size 4096. It connects every input to every output with learned weights.

Finally, nn.ReLU and nn.Dropout calls further refine the learning pathway, providing non-linear activation points and regularization respectively. The final nn.Linear layer reduces the dimension from 4096 to num_classes, outputting the raw scores for each class.
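A quick way to appreciate how much of the model sits in the classifier is to count parameters by hand. The sketch below uses the layer shapes from the class definition above; the helper names conv_params and linear_params are illustrative, and the count assumes the default num_classes=1000.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output channel for a square-kernel Conv2d."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


def linear_params(in_features, out_features):
    """Weights plus one bias per output feature for a Linear layer."""
    return in_features * out_features + out_features


feature_params = sum([
    conv_params(3, 64, 11),
    conv_params(64, 192, 5),
    conv_params(192, 384, 3),
    conv_params(384, 256, 3),
    conv_params(256, 256, 3),
])

classifier_params = sum([
    linear_params(256 * 6 * 6, 4096),
    linear_params(4096, 4096),
    linear_params(4096, 1000),
])

total_params = feature_params + classifier_params
print(f"feature layers:    {feature_params:,}")
print(f"classifier layers: {classifier_params:,}")
print(f"total:             {total_params:,}")
print(f"classifier share:  {classifier_params / total_params:.1%}")
```

The fully connected layers account for the vast majority of the roughly 61 million parameters, which is one reason dropout is applied there rather than in the convolutional layers.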

Forward Method

def forward(self, x):
    x = self.features(x)
    x = self.avgpool(x)
    x = torch.flatten(x, 1)
    x = self.classifier(x)
    return x

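The forward method dictates the execution of the forward pass of the network:

x = self.features(x) passes the input through the convolutional, ReLU, and max-pooling layers to extract feature maps.

x = self.avgpool(x) pools those feature maps to a fixed 6×6 spatial size.

x = torch.flatten(x, 1) flattens every dimension except the batch dimension, giving one vector of 256 × 6 × 6 = 9216 values per image.

x = self.classifier(x) feeds that vector through the fully connected layers and returns the raw class scores.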

The EarlyStopping class is used during the training of machine learning models to halt the training process when the validation loss ceases to improve. This approach is instrumental in preventing overfitting and conserving computational resources by stopping the training at the optimal time.

class EarlyStopping:
    """
    Early stopping to stop the training when the loss does not improve after
    a given number of epochs (the patience).

    Args:
    -----
        patience (int): Number of epochs to wait before stopping the training.
        verbose (bool): If True, prints a message for each epoch where the loss
                        does not improve (stored here, but not used by this version).
        delta (float): Minimum change in the monitored quantity to qualify as an improvement.
    """
    def __init__(self, patience=7, verbose=False, delta=0):
        self.patience = patience
        self.verbose = verbose
        self.counter = 0
        self.best_score = None
        self.early_stop = False
        self.delta = delta

    def __call__(self, val_loss):
        """
        Args:
        -----
            val_loss (float): The validation loss to check if the model performance improved.

        Returns:
        --------
            None. The result is exposed through self.early_stop, which becomes True
            once the loss has failed to improve for `patience` consecutive calls.
        """
        score = -val_loss

        if self.best_score is None:
            self.best_score = score
        elif score < self.best_score + self.delta:
            self.counter += 1
            if self.counter >= self.patience:
                self.early_stop = True
        else:
            self.best_score = score
            self.counter = 0

Initialization

def __init__(self, patience=7, verbose=False, delta=0):
    self.patience = patience
    self.verbose = verbose
    self.counter = 0
    self.best_score = None
    self.early_stop = False
    self.delta = delta

The EarlyStopping class is initialized with several parameters that configure its operation:

patience determines the number of epochs to wait for an improvement in the validation loss before stopping the training. It is set by default to 7, allowing some leeway for the model to overcome potential plateaus in the loss landscape.

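verbose is meant to control the output of the class: when set to True, it should print a message for each epoch where the loss does not improve, providing clear feedback during training. Note that the code shown here stores the flag but never reads it, so a print statement would have to be added to __call__ for it to take effect.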

delta sets the threshold for what constitutes an improvement in the loss, aiding in fine-tuning the sensitivity of the early stopping mechanism.
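To see how patience plays out in practice, here is a small self-contained sketch that repeats the class in compact form and drives it with a made-up sequence of validation losses. The loss values and the patience of 3 are assumptions chosen only to make the behavior visible.

```python
class EarlyStopping:
    """Compact copy of the class above, without docstrings."""

    def __init__(self, patience=7, verbose=False, delta=0):
        self.patience = patience
        self.verbose = verbose
        self.counter = 0
        self.best_score = None
        self.early_stop = False
        self.delta = delta

    def __call__(self, val_loss):
        score = -val_loss
        if self.best_score is None:
            self.best_score = score
        elif score < self.best_score + self.delta:
            self.counter += 1
            if self.counter >= self.patience:
                self.early_stop = True
        else:
            self.best_score = score
            self.counter = 0


# Hypothetical validation losses: improvement for three epochs, then a slow rise.
val_losses = [1.00, 0.90, 0.85, 0.86, 0.87, 0.88, 0.50]

stopper = EarlyStopping(patience=3)
stopped_epoch = None
for epoch, loss in enumerate(val_losses):
    stopper(loss)
    print(f"epoch {epoch}: loss {loss:.2f}, counter {stopper.counter}")
    if stopper.early_stop:
        stopped_epoch = epoch
        break

print(f"stopped at epoch index: {stopped_epoch}")
```

With these numbers the counter reaches 3 after three consecutive epochs without improvement, so the loop exits before it ever sees the last, much better loss. That is the trade-off a small patience value makes.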

Callable Method

def __call__(self, val_loss):
    score = -val_loss

    if self.best_score is None:
        self.best_score = score
    elif score < self.best_score + self.delta:
        self.counter += 1
        if self.counter >= self.patience:
            self.early_stop = True
    else:
        self.best_score = score
        self.counter = 0