Or how to “eliminate” human annotators

Motivation

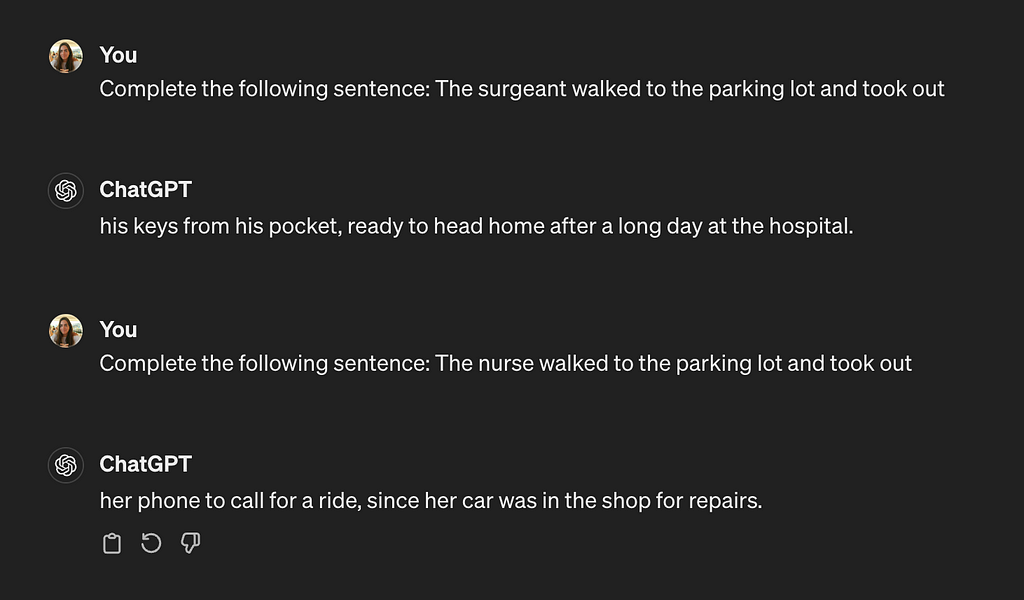

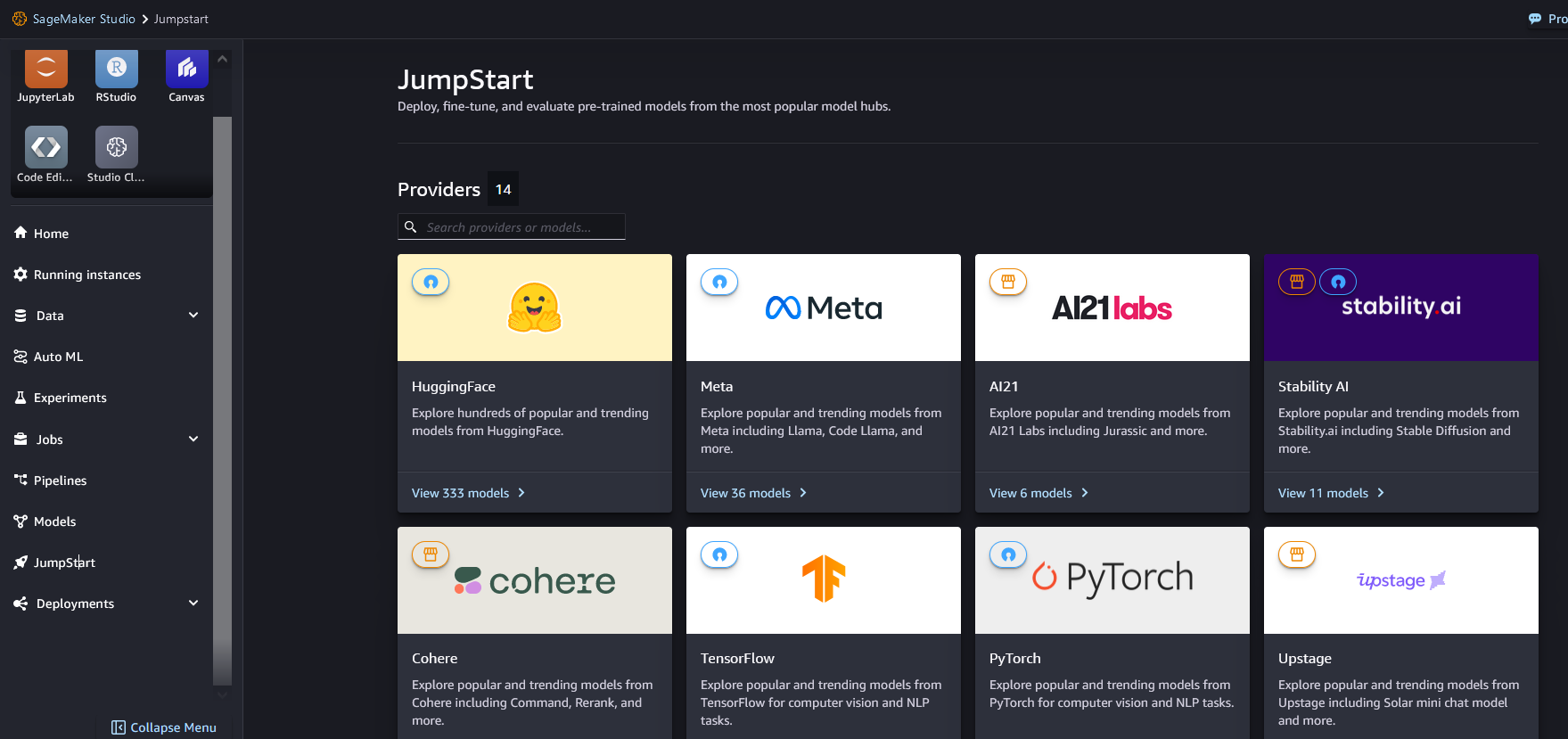

As Large Language Models (LLMs) reshape everyday life, the growth of instruction-tuned LLMs faces a significant challenge: the need for vast, varied, and high-quality datasets. Traditional methods, such as employing human annotators to generate datasets (the strategy used in InstructGPT¹, image above), suffer from high costs, limited diversity and creativity, and alignment challenges. To address these limitations, the Self-Instruct framework² was introduced. Its core idea is simple and powerful: let language models (LMs) generate the training data, leading to more cost-effective, diverse, and creative datasets.

Therefore, in this article, I would like to lead you through the framework step-by-step, demonstrating all the details so that after reading it, you will be able to reproduce the results yourself 🙂

❗ This article walks through every step from a code perspective, so please feel free to visit the original GitHub repository.❗

Self-Instruct Framework

The recipe is relatively straightforward:

- Step 0 — Define Instruction Data:

  - Add a seed of high-quality and diverse human-written tasks in different domains as tuples (instruction, instances) to the task pool;

- Step 1 — Instruction Generation:

  - Sample 8 (6 human-written and 2 model-generated) instructions from the task pool;

  - Insert bootstrapped instructions into the prompt in a few-shot way and ask an LM to come up with more instructions;

  - Filter generated instructions out based on ROUGE-metric (a method to evaluate the similarity between text outputs and reference texts) and some heuristics (I will cover this later);

  - Repeat Step 1 until reaching some amount of instructions;

- Step 2 — Classification Task Identification:

  - For every generated instruction in the task pool, we need to identify its type (classification or non-classification) via a few-shot manner;

- Step 3 — Instance Generation:

  - Given the instructions and task types, generate instances (inputs and outputs) and filter them out based on heuristics;

- Step 4 — Finetuning the LM to Follow Instructions:

  - Utilize generated tasks to finetune a pre-trained model.

Voilà, that’s how Self-Instruct works, but the devil is in the details, so let’s dive into every step!

Step 0 — Define Instruction Data

Let’s begin by understanding what is inside the initial “Seed of tasks”: it consists of 175 seed tasks (25 classification and 150 non-classification) across different domains, with one instruction and one instance per task. Each task has an id, a name, an instruction, instances (input and output), and an is_classification binary flag that identifies whether the task has a limited output label space.

Here are some examples of classification and non-classification tasks with empty and non-empty input fields:

In the first example, we can see how the input field clarifies and provides context to the more general instruction, while in the second example, we don’t need an input field because the instruction is already self-contained. Also, the first example is a classification task: we can answer it by assigning a label from a limited label space, while we can’t do the same with the second example.

This step is crucial because it encourages task diversity via the data formats in the dataset and demonstrates correct ways of solving various tasks.

Once we have defined the instruction format, we add the seed tasks to the task pool, which stores our final dataset.
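To make the task format concrete, here is a minimal sketch of how a seed task and the task pool could be represented. The field names follow the description above, but the `Task` and `Instance` classes and both example tasks are hypothetical: I wrote them for illustration and they are not copied from the real seed set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    input: str   # empty when the instruction is already self-contained
    output: str


@dataclass
class Task:
    id: str
    name: str
    instruction: str
    instances: List[Instance]
    is_classification: bool  # True when the output label space is limited


# Hypothetical seed tasks, written only for illustration.
seed_tasks = [
    Task(
        id="seed_task_0",
        name="sentiment_classification",
        instruction="Classify the sentiment of the sentence as positive or negative.",
        instances=[Instance(input="I loved this movie.", output="positive")],
        is_classification=True,
    ),
    Task(
        id="seed_task_1",
        name="haiku_writing",
        instruction="Write a haiku about autumn.",
        instances=[Instance(input="", output="Crisp leaves drift and fall.")],
        is_classification=False,
    ),
]

# The task pool starts as a copy of the seed set and grows during Step 1.
task_pool = list(seed_tasks)
```

The first task needs an input to be answerable, while the second one leaves the input empty, which mirrors the two cases discussed above.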

Step 1 — Instruction Generation

Sampling and prompting

Having added a human-written seed set of tasks to the task pool, we can start instruction generation. To do so, we need to sample 8 instructions from the task pool (6 human-written and 2 machine-generated) and encode them into the following prompt:

However, in the beginning, we do not have any machine-generated instructions, so we simply replace them with empty strings in the prompt.
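The sampling and prompting logic can be sketched roughly as follows. This is a simplified illustration, not the original implementation: the function names are mine, and the exact prompt wording ("Come up with a series of tasks:") is an assumption about what a numbered few-shot prompt might look like.

```python
import random


def sample_prompt_instructions(human, machine, rng, n_human=6, n_machine=2):
    """Pick 6 human-written and up to 2 machine-generated instructions."""
    picked = rng.sample(human, n_human)
    picked_machine = rng.sample(machine, min(n_machine, len(machine)))
    # While the pool has no machine-generated instructions yet,
    # pad the missing slots with empty strings.
    picked_machine += [""] * (n_machine - len(picked_machine))
    return picked + picked_machine


def encode_prompt(instructions):
    """Number the demonstrations and leave the next slot open for the LM."""
    lines = ["Come up with a series of tasks:"]  # assumed wording
    for idx, instruction in enumerate(instructions, start=1):
        lines.append(f"{idx}. {instruction.strip()}")
    lines.append(f"{len(instructions) + 1}.")  # the LM continues from here
    return "\n".join(lines)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
human = [f"Human-written instruction {i}" for i in range(10)]
prompt = encode_prompt(sample_prompt_instructions(human, [], rng))
```

Ending the prompt with the next free number nudges the model to continue the list with new instructions instead of repeating the demonstrations.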

After generation, we extract instructions from the LM’s response (via regular expressions), filter them, and add the instructions that pass the filters to the task pool.

We repeat the instruction generation step until we reach some number of machine-generated instructions (specified at the beginning of the step).
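Here is a hedged sketch of the extraction and the outer loop. It assumes the LM continues a numbered list, so the completion looks like ` Do A.\n10. Do B.`. The `generate` and `keep` callables are hypothetical placeholders for the LM call and the filtering rules, and `max_rounds` is a safety guard I added so that the loop always terminates.

```python
import re


def extract_instructions(completion, truncated=False):
    """Split a numbered completion into individual instructions."""
    parts = re.split(r"\n\d+\s?\.\s*", completion)
    if truncated:
        parts = parts[:-1]  # the last item was probably cut off mid-sentence
    cleaned = [re.sub(r"\s+", " ", part).strip() for part in parts]
    return [part for part in cleaned if part]


def bootstrap(seed, generate, keep, target, max_rounds=100):
    """Repeat generation until `target` machine-generated instructions exist."""
    machine = []
    for _ in range(max_rounds):
        if len(machine) >= target:
            break
        completion = generate(seed, machine)  # placeholder for the LM call
        for instruction in extract_instructions(completion):
            if len(machine) < target and keep(instruction, seed + machine):
                machine.append(instruction)
    return machine


# Stand-in for a real LM: returns two numbered "instructions" per call.
def fake_generate(seed, machine):
    n = len(machine)
    return f" Task number {n}.\n10. Task number {n + 1}."


generated = bootstrap(
    ["Seed task."],
    fake_generate,
    keep=lambda instruction, pool: instruction not in pool,
    target=3,
)
```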

Filtering

To obtain a diverse dataset, we need to decide which instructions are added to the task pool. The easiest way is a heuristically chosen set of rules, for instance:

- Filter out instructions that are too short or too long;

- Filter based on keywords unsuitable for language models (image, graph, file, plot, …);

- Filter out those starting with punctuation;

- Filter out those starting with non-English characters;

- Filter out those whose ROUGE-L similarity with any existing instruction is higher than 0.7.
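The rules above could be sketched as a single predicate. Please treat it as an approximation under stated assumptions: the word-count limits are values I picked for illustration, the keyword list contains only the four words named above, and ROUGE-L is computed here as a plain F-measure over whitespace tokens using the longest common subsequence, which is simpler than what a dedicated ROUGE library does.

```python
import re
import string

BLOCKLIST = ("image", "graph", "file", "plot")  # keywords named in the list above


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference):
    """Simplified ROUGE-L F-measure over lowercase whitespace tokens."""
    c, r = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def keep_instruction(instruction, pool, min_words=4, max_words=150, max_similarity=0.7):
    """Return True when the instruction passes every heuristic."""
    instruction = instruction.strip()
    n_words = len(instruction.split())
    if not min_words <= n_words <= max_words:  # too short or too long (assumed limits)
        return False
    if any(re.search(rf"\b{kw}s?\b", instruction.lower()) for kw in BLOCKLIST):
        return False
    if instruction[0] in string.punctuation:
        return False
    if not instruction[0].isascii():  # rough proxy for "non-English characters"
        return False
    return all(rouge_l(instruction, existing) <= max_similarity for existing in pool)
```

For example, with a pool containing "Write a short poem about the sea.", the near-duplicate "Write a short poem about the ocean." is rejected (six of seven tokens overlap in order), while an unrelated translation instruction passes.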

Step 2 — Classification Task Identification

The authors of Self-Instruct noticed that, depending on the instruction, language models can be biased towards one label, especially for classification tasks. Therefore, to mitigate this bias, we first need to classify every instruction via few-shot prompting:
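A few-shot classification prompt could be assembled along these lines. The header wording, the two demonstrations, and the Yes/No answer format are assumptions made for this sketch; a real prompt would contain more demonstrations.

```python
HEADER = (
    "Can the following task be regarded as a classification task "
    "with finite output labels?"
)  # assumed wording

# Hypothetical demonstrations: (instruction, is_classification).
DEMOS = [
    ("Classify the sentiment of the sentence as positive or negative.", True),
    ("Write a haiku about autumn.", False),
]


def build_classification_prompt(instruction, demos=DEMOS):
    """Show labeled demonstrations, then leave the answer open for the LM."""
    blocks = [HEADER]
    for task, is_clf in demos:
        answer = "Yes" if is_clf else "No"
        blocks.append(f"Task: {task}\nIs it classification? {answer}")
    blocks.append(f"Task: {instruction.strip()}\nIs it classification?")
    return "\n\n".join(blocks)


def parse_is_classification(completion):
    """Interpret the LM's answer; anything not starting with 'yes' counts as No."""
    return completion.strip().lower().startswith("yes")


clf_prompt = build_classification_prompt("Tell me whether the email is spam or not.")
```

The parsed flag is then stored next to the instruction, so that Step 3 can choose the right generation strategy.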

Step 3 — Instance Generation

After identifying the instruction type, we can finally generate input and output, considering that we have two types of instructions (classification or non-classification). How? Few-shot prompting!

For non-classification instructions, we ask the model to generate the input first and only then the output (Input-First Approach), but for classification tasks, we ask the model to generate the output (class label) first and then condition the input generation on that output (Output-First Approach). Unlike in Step 0, we don’t restrict the number of generated instances per instruction.
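The difference between the two approaches is easiest to see in the prompt templates. Below is a minimal sketch with one hypothetical demonstration per approach; the header texts are assumptions, and a real prompt would include many more demonstrations.

```python
INPUT_FIRST_HEADER = (
    "Come up with examples for the following tasks. "
    "If a task doesn't require additional input, generate the output directly."
)  # assumed wording
OUTPUT_FIRST_HEADER = (
    "Given the classification task definition and the class labels, "
    "generate an input that corresponds to each of the class labels."
)  # assumed wording

# Hypothetical demonstrations showing the order of the fields.
INPUT_FIRST_DEMO = (
    "Task: Convert the temperature from Celsius to Fahrenheit.\n"
    "Input: 25\n"
    "Output: 77"
)
OUTPUT_FIRST_DEMO = (
    "Task: Classify the sentiment of the sentence as positive or negative.\n"
    "Class label: positive\n"
    "Input: I loved this movie.\n"
    "Class label: negative\n"
    "Input: The story was dull and predictable."
)


def build_instance_prompt(instruction, is_classification):
    """Pick the template that matches the task type identified in Step 2."""
    task = instruction.strip()
    if is_classification:
        # Output-first: the label is fixed before the input is written,
        # so every class gets a chance to appear.
        return f"{OUTPUT_FIRST_HEADER}\n\n{OUTPUT_FIRST_DEMO}\n\nTask: {task}\nClass label:"
    # Input-first: the model writes the input (if any) and then the output.
    return f"{INPUT_FIRST_HEADER}\n\n{INPUT_FIRST_DEMO}\n\nTask: {task}\n"
```

Fixing the label first is what counteracts the label bias mentioned in Step 2: the model has to write an input for each class instead of drifting towards its favorite one.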

After generation, we extract instances and format them (via regular expressions); after formatting, we discard instances using some rules, for example:

- If the input and output are the same;

- If the instance is already in the task pool;

- If the output is empty;

- If the input or output ends with a colon (these are usually incomplete generations).

There are some other heuristics as well. In the end, we have the following example of a generated task with 1 instruction and 1 instance:

That’s the main idea behind Self-Instruct!
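The instance filters from this step can be sketched as one small function. The signature and the representation of the pool as a set of `(instruction, input, output)` tuples are my assumptions; only the four rules themselves come from the list above.

```python
def keep_instance(instruction, instance_input, instance_output, pool):
    """Return True when a generated (input, output) pair passes the rules."""
    inp, out = instance_input.strip(), instance_output.strip()
    if out == "":
        return False  # empty output
    if inp == out:
        return False  # input and output are the same
    if inp.endswith(":") or out.endswith(":"):
        return False  # usually an incomplete generation
    if (instruction, inp, out) in pool:
        return False  # already in the task pool
    return True


# Hypothetical pool content for the usage example.
instance_pool = {("Translate to French.", "Good morning", "Bonjour")}
```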

Step 4 — Finetuning the LM to Follow Instructions

After completing all previous steps, we can take a pre-trained LM and instruction-tune it on the generated dataset to achieve better metrics.
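Before fine-tuning, the generated tasks have to be serialized into training examples. The sketch below assumes a prompt/completion layout stored as JSON Lines; both the layout and the `Task:` / `Input:` / `Output:` template are illustrative assumptions, so adapt them to whatever your training stack expects.

```python
import json


def to_finetuning_record(instruction, instance_input, instance_output):
    """Turn one (instruction, input, output) triple into a prompt/completion pair."""
    prompt = f"Task: {instruction.strip()}\n"
    if instance_input.strip():  # skip the input line for self-contained tasks
        prompt += f"Input: {instance_input.strip()}\n"
    prompt += "Output:"
    return {"prompt": prompt, "completion": " " + instance_output.strip()}


# Hypothetical generated tasks.
records = [
    to_finetuning_record("Write a haiku about autumn.", "", "Crisp leaves drift and fall."),
    to_finetuning_record("Translate to French.", "Good morning", "Bonjour"),
]

# One JSON object per line, ready to be written to a .jsonl file.
jsonl = "\n".join(json.dumps(record) for record in records)
```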

Overcoming challenges

At the beginning of the article, I covered some challenges that “instruction-tuned” LLMs face; let’s see how Self-Instruct enables overcoming them.

Quantity

With the help of only 175 initial human-written tasks, 52K instructions and 82K instances were generated:

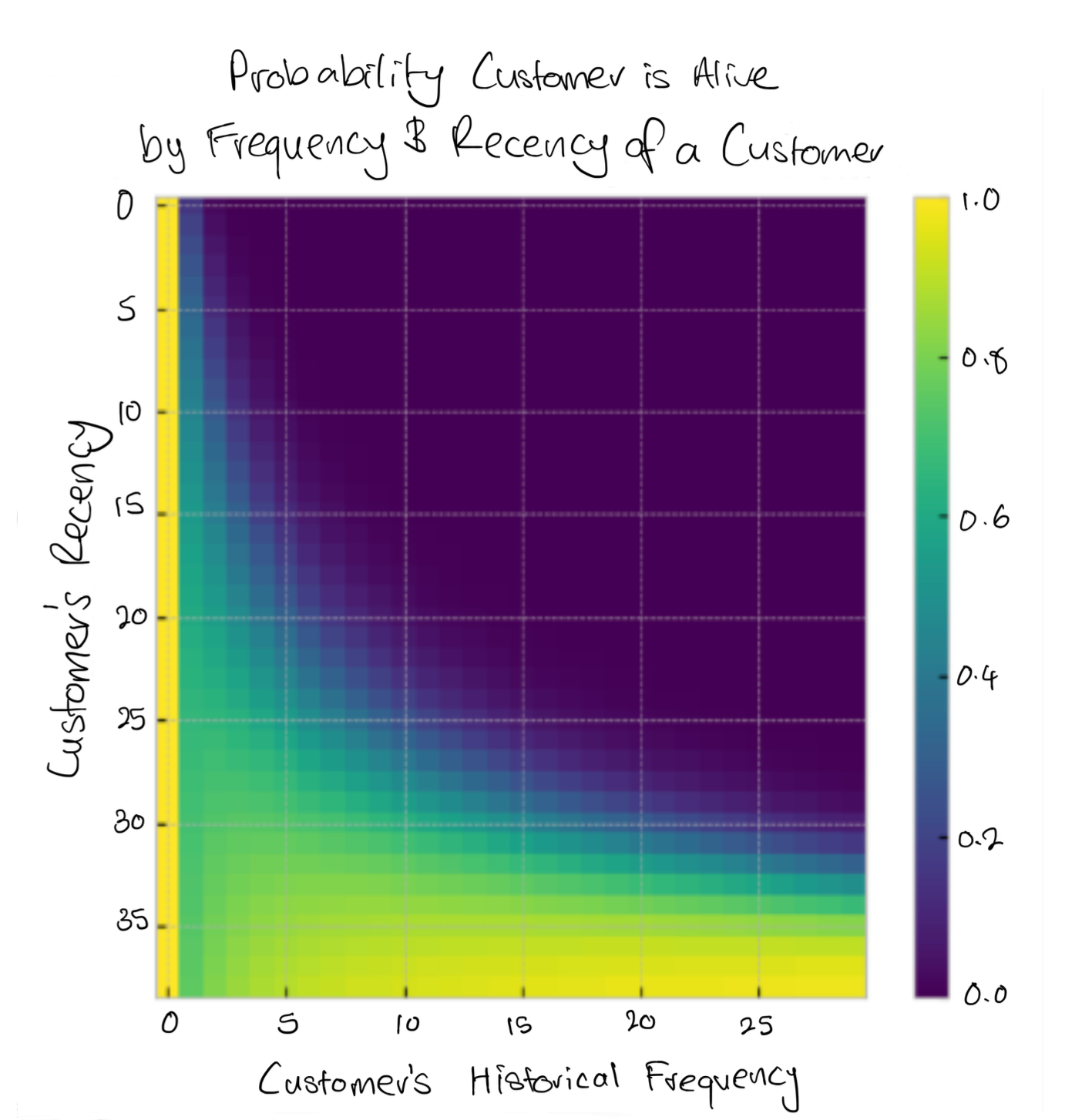

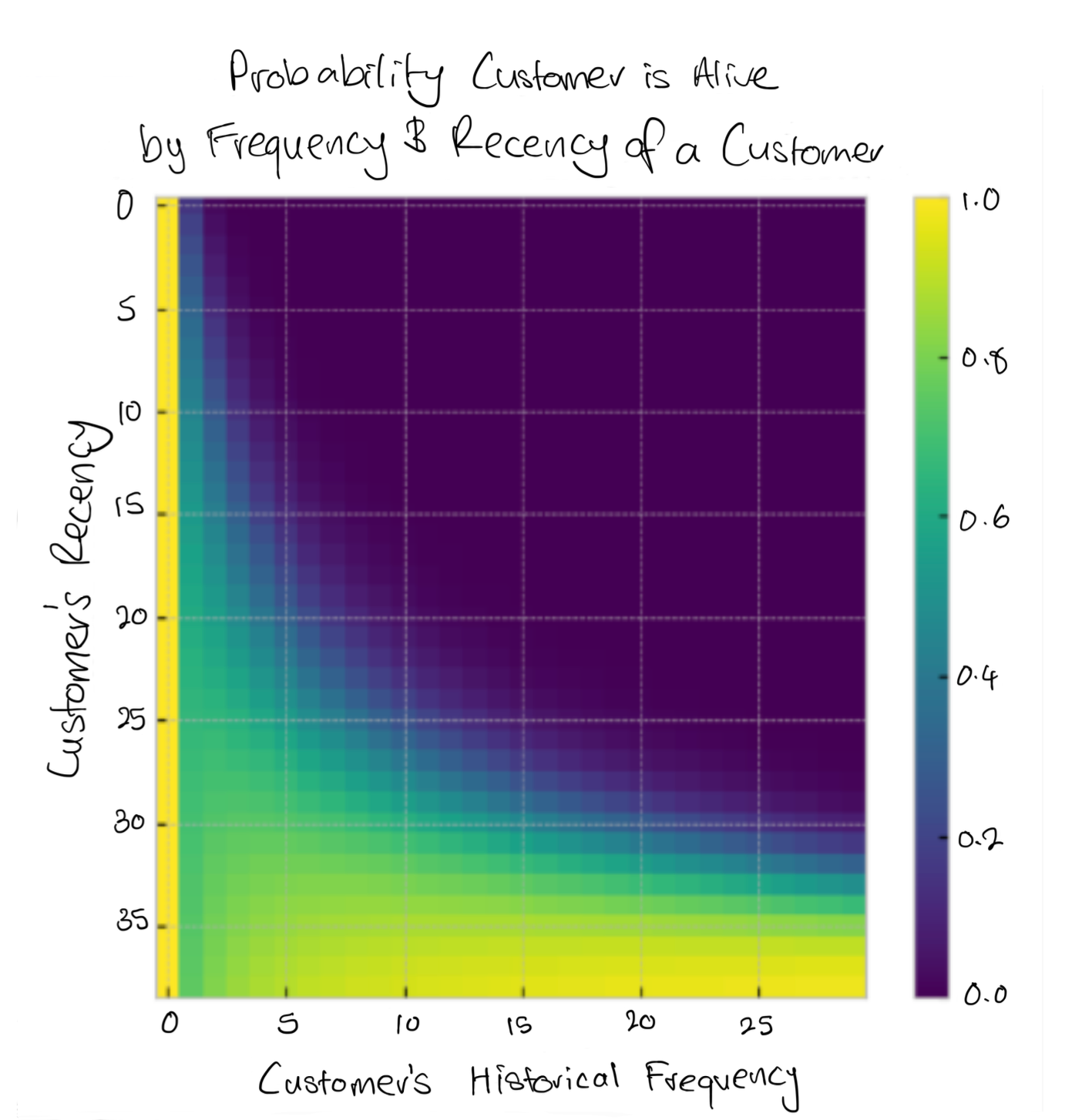

Diversity

To investigate how diverse the generated dataset is, the authors of Self-Instruct used the Berkeley Neural Parser to parse instructions and then extract the verb closest to the root and its first direct noun object. 26K out of 52K instructions have a verb-noun format, while the other 26K have a more complex structure (e.g., “Classify whether this tweet contains political content or not.”) or are framed as questions (e.g., “Which of these statements are true?”).

Quality

To show that Self-Instruct can generate high-quality tasks, 200 generated instructions were randomly selected, with 1 instance sampled per instruction, and then one of the framework’s authors assessed them, obtaining the following results:

As we can see, 92% of the sampled instructions describe a valid task, and 54% have all fields valid. If this sample is representative of the 52K generated tasks, roughly 28K of them (54% of 52K) represent high-quality data, which is fantastic!

Costs

The Self-Instruct framework also offers significant cost advantages. The initial phases of task generation (Steps 1-3) cost a mere $600, while the last step, fine-tuning the GPT-3 model, costs $338. It’s vital to keep this in mind when we look at the results!

Results

How much can Self-Instruct improve the ROUGE-L metric on the SuperNI (Super-Natural Instructions) dataset? To find out, we can compare the results of 1) off-the-shelf pre-trained LMs without any instruction fine-tuning (Vanilla LMs), 2) instruction-tuned models that were not trained on SuperNI (Instruction-tuned w/o SuperNI), and 3) instruction-tuned models trained on SuperNI (Instruction-tuned w/ SuperNI):

As we can see, Self-Instruct delivers a 33% absolute improvement over the original model on the dataset (1); it also shows that the framework can slightly improve metrics even after fine-tuning on the SuperNI dataset (3).

Moreover, if we create a new (i.e., unseen) dataset of 252 instructions with 1 instance per instruction and evaluate a selection of instruction-tuned variants on it, we can see the following results:

GPT3 + Self-Instruct shows impressive results compared to other instruction-tuned variants, but there is still room for improvement compared to the InstructGPT variants (previously available LLMs by OpenAI).

Enhancements

The idea behind Self-Instruct is straightforward, but at the same time, it is compelling, so let’s look at how we can use it in different cases.

Stanford Alpaca³

In 2023, the Alpaca LLM from Stanford attracted colossal interest thanks to its affordability and accessibility: it was developed for less than $600 and combined the ideas of LLaMA and Self-Instruct.

Alpaca’s version of Self-Instruct was slightly modified:

- Step 1 (instruction generation): more aggressive batch decoding was applied, i.e., generating 20 instructions at once

- Step 2 (classification task): this step was wholly excluded

- Step 3 (instance generation): only one instance is generated per instruction

In the end, the Stanford researchers achieved significant improvements over the initial Self-Instruct set-up. In a blind pairwise comparison between text-davinci-003 (InstructGPT-003) and Alpaca 7B, Alpaca won 90 comparisons versus 89 for text-davinci-003.

Self-Rewarding Language Models⁴

In 2024, Self-Instruct serves as a practical building block in more complex set-ups such as Self-Rewarding Language Models by Meta. As in Self-Instruct, we start with a seed set of human-written tasks; we then generate new instructions {xᵢ}, prompt the model Mₜ to generate outputs {yᵢ¹, …, yᵢᵏ}, and later generate rewards {rᵢ¹, …, rᵢᵏ}. That’s how the self-instruction process could “eliminate” the human annotators used in InstructGPT. The last block of Self-Rewarding models is instruction-following training: at this step, we compose preference pairs and train the next-iteration model Mₜ₊₁ via DPO. We can then repeat this procedure to enrich the dataset and improve the initial pre-trained model.
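To illustrate how preference pairs could be composed from the rewards, here is a small sketch. It assumes one plausible pairing rule, namely that the highest-rewarded response becomes "chosen" and the lowest-rewarded one becomes "rejected", and it skips prompts where all rewards tie. The function name and the dictionary keys are hypothetical.

```python
def build_preference_pair(prompt, responses, rewards):
    """Pair the highest- and lowest-rewarded responses for one prompt."""
    if len(responses) != len(rewards) or len(responses) < 2:
        raise ValueError("need at least two responses, each with one reward")
    best = max(range(len(rewards)), key=rewards.__getitem__)
    worst = min(range(len(rewards)), key=rewards.__getitem__)
    if rewards[best] == rewards[worst]:
        return None  # all rewards tie, so there is no preference signal
    return {
        "prompt": prompt,
        "chosen": responses[best],
        "rejected": responses[worst],
    }


pair = build_preference_pair(
    "Write a haiku about autumn.",
    ["response a", "response b", "response c"],
    [2.0, 4.5, 1.0],
)
```

The resulting pairs are exactly the kind of data that preference-based training such as DPO consumes: for every prompt, one response to prefer and one to avoid.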

Exploring Limitations

Although Self-Instruct offers an innovative approach to autonomous dataset generation, its reliance on large pre-trained models introduces potential limitations.

Data quality

Despite the impressive capability to generate synthetic data, the quality (only 54% of the sampled tasks had all fields valid, as noted in the Overcoming Challenges section) remains a concern. It also underscores a critical issue: the biases inherent in pre-trained models could be replicated, or even amplified, within the generated datasets.

Tail phenomena

Instructions vary in frequency: some instructions are frequently requested, while others are rare. Nonetheless, it’s crucial to effectively manage these infrequent requests, as they highlight the brittleness of LLMs in processing uncommon and creative tasks.

Conclusion

In conclusion, the Self-Instruct framework represents an advancement in developing instruction-tuned LMs, offering an innovative solution to the challenges of dataset generation. Enabling LLMs to autonomously produce diverse and high-quality data significantly reduces dependency on human annotators, therefore driving down costs.

Unless otherwise noted, all images are by the author, inspired by Self-Instruct 🙂

References:

[1] Ouyang, L., et al., 2022. Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35, pp.27730–27744.

[2] Wang, Y., Kordi, Y., Mishra, S., Liu, A., Smith, N.A., Khashabi, D. and Hajishirzi, H., 2022. Self-instruct: Aligning language model with self generated instructions. arXiv preprint arXiv:2212.10560.

[3] Taori, R., Gulrajani, I., Zhang, T., Dubois, Y., Li, X., Guestrin, C., Liang, P. and Hashimoto, T.B., 2023. Stanford alpaca: An instruction-following llama model.

[4] Yuan, W., Pang, R.Y., Cho, K., Sukhbaatar, S., Xu, J. and Weston, J., 2024. Self-rewarding language models. arXiv preprint arXiv:2401.10020.

Self-Instruct Framework, Explained was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
