How to build a process with strong communication and expectation-setting practices

Starting an exploratory data analysis can be daunting. How do you know what to look at? How do you know when you’re done? What if you miss something important? In my experience, you can alleviate some of these worries with communication and expectation-setting. I’m sharing my process for exploratory data analysis here as a resource for folks just getting started in data, and for more experienced analysts and data scientists seeking to hone their own processes.

1. Talk to stakeholders about their objectives

One of the first things you should do when starting an exploratory analysis is to talk to the product manager/ leadership/ stakeholder(s) responsible for making decisions using the output of the analysis. Develop a solid understanding of the decisions they need to make, or the types of changes/ interventions they need to make calls on.

If you’re supporting product iterations, it may also be helpful to speak with UX researchers, designers, or customer service representatives who interact with customers or receive end-user feedback. You can add a lot of value by understanding whether a customer request is viable, or identifying patterns in user behavior that indicate the need for a specific feature.

2. Summarize analysis goals and get alignment

These conversations will help you determine the analysis goals, i.e., whether you should focus on identifying patterns and relationships, understanding distributions, etc. Summarize your understanding of the goal(s), specify an analysis period and population, and make sure all relevant stakeholders are aligned. At this point, I also like to communicate non-goals of the analysis — things stakeholders should not expect to see as part of my deliverable(s).

Make sure you understand the kinds of decisions to be made based on the results of your analysis. Get alignment from all stakeholders on the goals of the analysis before you start.

3. Develop a list of research questions

Create a series of questions related to the analysis goals you would like to answer, and note the dimensions you’re interested in exploring within, i.e., specific time periods, new users, users in a certain age bracket or geographical area, etc.

Example: for an analysis on user engagement, a product manager may want to know how many times new users typically visit your website in their first versus second month.

4. Identify your knowns and unknowns

Collect any previous research, organizational lore, and widely accepted assumptions related to the analysis topic. Review what’s been previously researched or analyzed to understand what is already known in this arena.

Make note of whether there are historical answers to any of your analysis questions. Note: when you’re determining how relevant those answers are, consider the amount of time since any previous analysis, and whether there have been significant changes in the analysis population or product/ service since then.

Example: Keeping to the new user activity idea, maybe someone did an analysis two years ago that identified that users’ activity tapered off and plateaued 5 weeks after account creation. If the company introduced a new 6-week drip campaign for new users a year ago, this insight may not be relevant any longer.

5. Understand what is possible with the data you have

Once you’ve synthesized your goals and key questions, you can identify what relevant data is easily available, and what supplemental data is potentially accessible. Verify your permissions to each data source, and request access from data/ process owners for any supplemental datasets. Spend some time familiarizing yourself with the datasets, and rule out any questions on your list it’s not possible to answer with the data you have.

6. Set expectations for what constitutes one analysis

Do a prioritization exercise with the key stakeholder(s), for example, a product manager, to understand which questions they believe are most important. It’s a good idea to T-shirt size (S, M, L) the complexity of the questions on your list before this conversation to illustrate the level of effort to answer them. If the questions on your list are more work than is feasible in a single analysis, use those prioritizations to determine how to stagger them into multiple analyses.

T-shirt size the level of effort to answer the analysis questions on your list. If it adds up to more work than is feasible in a single analysis, work with stakeholders to prioritize them into multiple analyses.

7. Transform and clean the data as necessary

If data pipelines are in place and data is already in the format you want, evaluate the need for data cleaning (looking for outliers, missingness/ sparse data, duplicates, etc.), and perform any necessary cleaning steps. If not, create data pipelines to handle any required relocation or transformations before data cleaning.

8. Use summary statistics to understand the “shape” of data

Start the analysis with high-level statistical exploration to understand distributions of features and correlations between them. You may notice data sparsity or quality issues that impact your ability to answer questions from your analysis planning exercise. It’s important to communicate early to stakeholders about questions you cannot address, or that will have “noisy” answers less valuable for decision-making.

9. Answer your analysis questions

At this stage, you’ll move into answering the specific questions you developed for the analysis. I like to visualize as I go, as this can make it easier to spot patterns, trends, and anomalies, and I can drop interesting visuals right into my write-up draft.

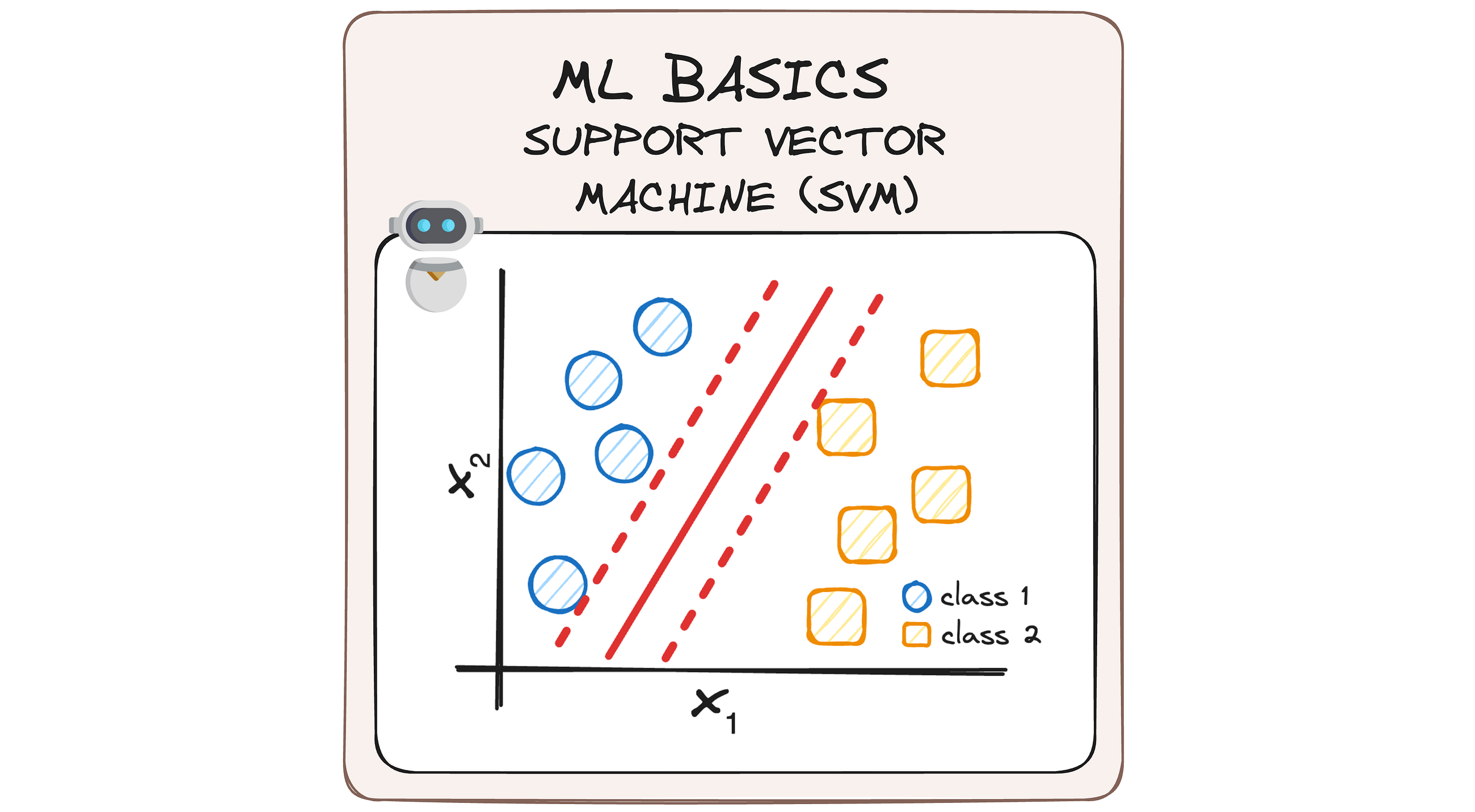

Depending on the type of analysis, you may want to generate some additional features (ex: bucket ranges for a numeric feature, indicators for whether a specific action was taken within a given period or more times than a given threshold) to explore correlations further, and look for less intuitive relationships between features using machine learning.

Visualize and document your findings as you go to minimize re-work and develop an idea of the “storyline” or theme for the analysis.

10. Document your findings

I like the question framework for analyses because it makes it easy to document my findings as I go. As you conduct your analysis, note answers you find under each question. Highlight findings you think are interesting, and make notes on any trains of thought a finding sparked.

This decreases the work that you need to do at the end of your analysis, and you can focus on fleshing out your findings with the “so what?” that tells the audience why they should care about a finding, and the “what next?” recommendations that make your insights actionable. When that’s in place, reorganize questions as necessary to create a consistent “storyline” for the analysis and key findings. At the end, you can include any next steps or additional lines of inquiry you recommend the team look into based on your findings.

If you’re working in a team environment, you may want to have one or more teammates review your code and/ or write-up. Iterate on your draft based on their feedback.

11. Share your findings

When your analysis is ready to share with the original stakeholders, be thoughtful about the format you choose. Depending on the audience, they may respond best to a Slack post, a presentation, a walkthrough of the analysis document, or some combination of the above. Finally, promote your analysis in internal channels, just in case your findings are useful to teams you weren’t working with.

Congrats, you’re done with your analysis!

Exploratory Data Analysis in 11 Steps was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Exploratory Data Analysis in 11 Steps

Go Here to Read this Fast! Exploratory Data Analysis in 11 Steps