Smartly transforming a hash table to a probabilistic data structure to trade accuracy for large memory gains

Introduction

Hash table is one of the most widely known and used data structures. With a wise choice of hash function, a hash table can produce optimal performance for insertion, search and deletion queries in constant time.

The main drawback of the hash table is potential collisions. To avoid them, one of the standard methods includes increasing the hash table size. While this approach works well in most cases, sometimes we are still limited in using large memory space.

It is necessary to recall that a hash table always provides a correct response to any query. It might go through collisions and be slow sometimes but it always guarantees 100% correct responses. It turns out that in some systems, we do not always need to receive correct information to queries. Such a decrease in accuracy can be used to focus on improving other aspects of the system.

In this article, we will discover an innovative data structure called a Bloom filter. In simple words, it is a modified version of a standard hash table which trades off a small decrease in accuracy for memory space gains.

Bloom filter

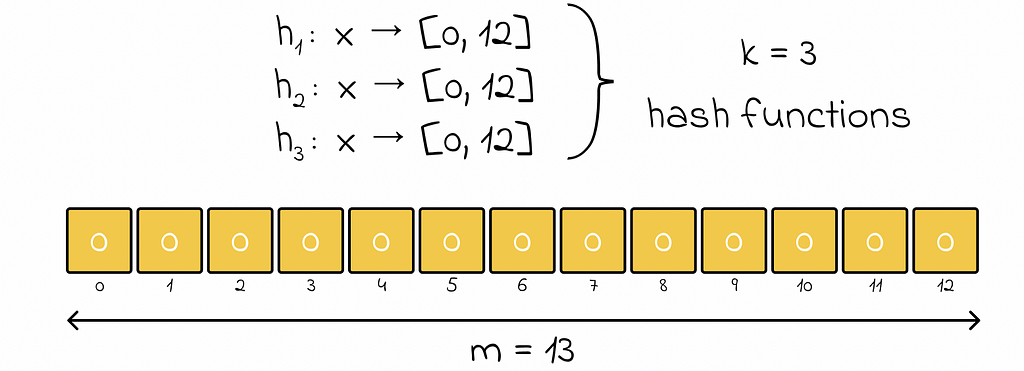

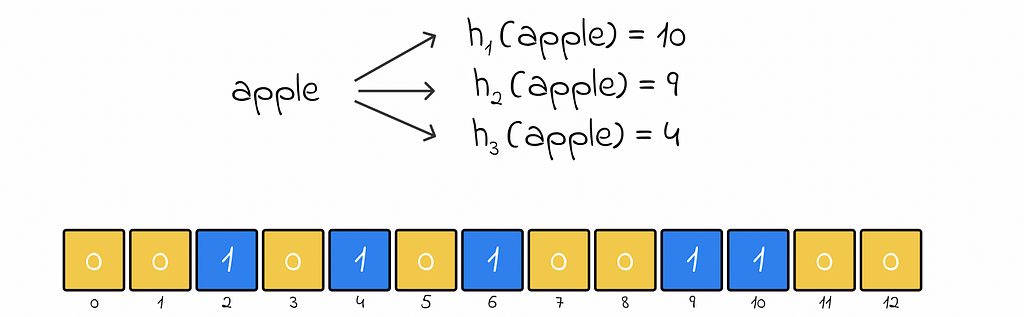

Bloom filter is organised in the form of a boolean array of size m. Initially all of its elements are marked as 0 (false). Apart from that, it is necessary to choose k hash functions that take objects as input and map them to the range [0, m — 1]. Every output value will later correspond to an array element at that index.

For better results, it is recommended that hash functions output values whose distribution is close to uniform.

Insertion

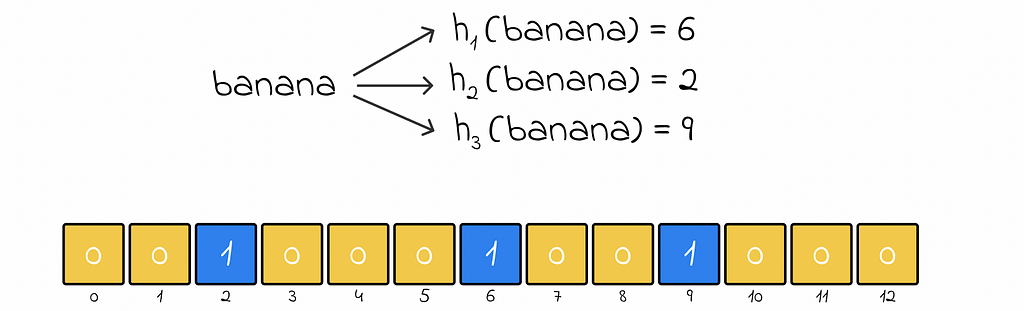

Whenever a new object needs to be added, it is passed through k predefined hash functions. For each output hash value, the corresponding element at that index becomes 1 (true).

If an array element whose index was outputted from a hash function has already been set to 1, then it simply remains as 1.

Basically, the presense of 1 at any array element acts as a partial prove that an element hashing to the respective array index actually exists in the Bloom filter.

Search

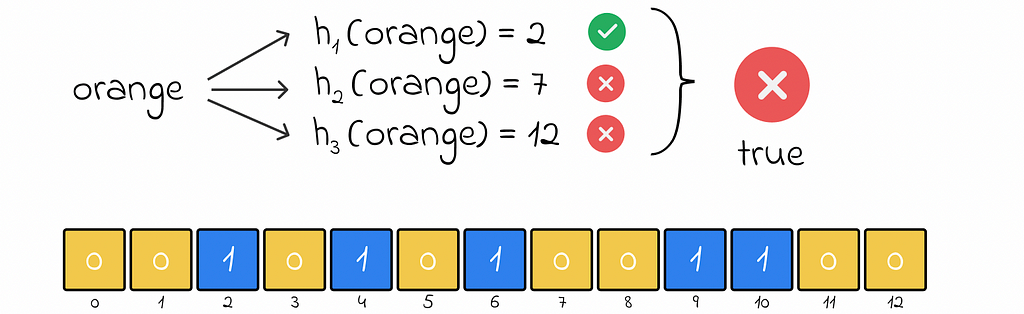

To check if an object exists, its k hash values are computed. There can be two possible scenarios:

If these is at least one hash value for which the respective array element equals 0, this means that the object does not exist.

During insertion, an object becomes associated with several array elements that are marked as 1. If an object really existed in the filter, than all of the hash functions would deterministically output the same sequence of indexes pointing to 1. However, pointing to an array element with 0 clearly signifies that the current object is not present in the data structure.

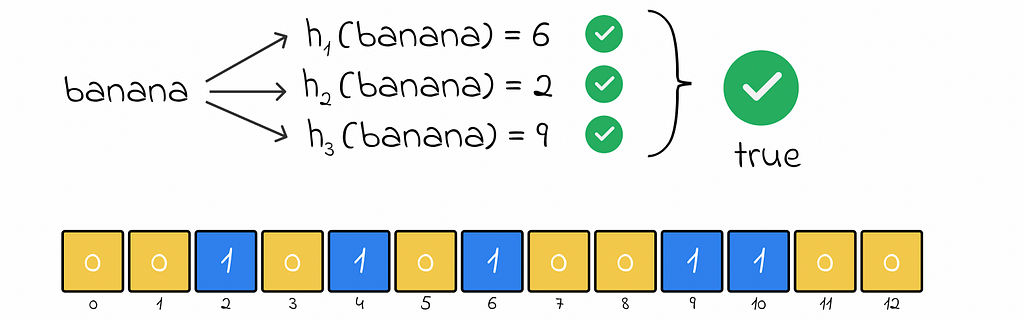

If for all hash values, the respective array elements equal 1, this means that the object probably exists (not 100%).

This statement is exactly what makes the Bloom filter a probabilistic data structure. If an object was added before, then during a search, the Bloom filter guarantees that hash values will be the same for it, thus the object will be found.

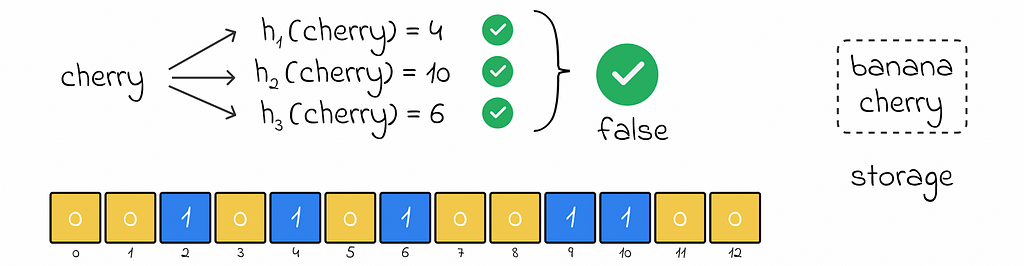

Nevertheless, the Bloom filter can produce a false positive response when an object does not actually exist but the Bloom filter claims otherwise. This happens when all hash functions for the object return hash values of 1 corresponding to other already inserted objects in the filter.

False positive answers tend to occur when the number of inserted objects becomes relatively high in comparison to the size of the Bloom filter’s array.

Estimation of false positive errors

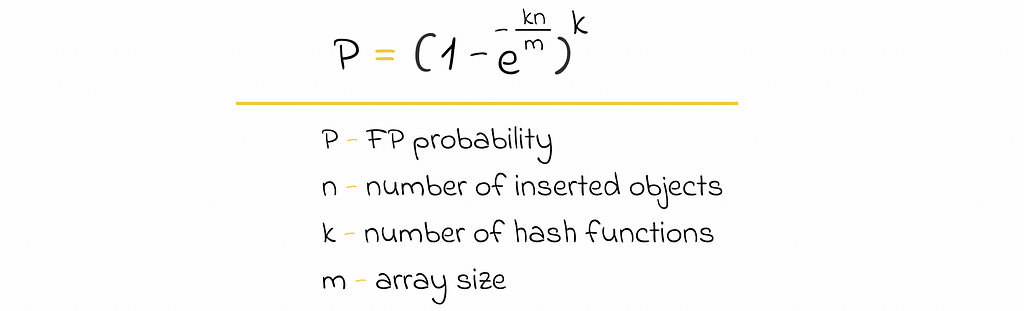

It is possible to estimate the probability of getting a false positive error, given the Bloom’s filter structure.

The full proof of this formula can be found on Wikipedia. Based on that expression, we can make a pair of interesting observations:

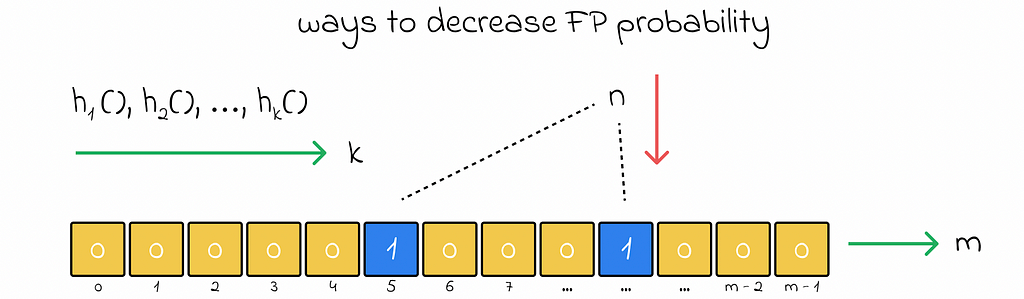

- The FP probability decreases with the increase in the number of hash hash functions k, increase in the array size m, and decrease in the number of inserted objects n.

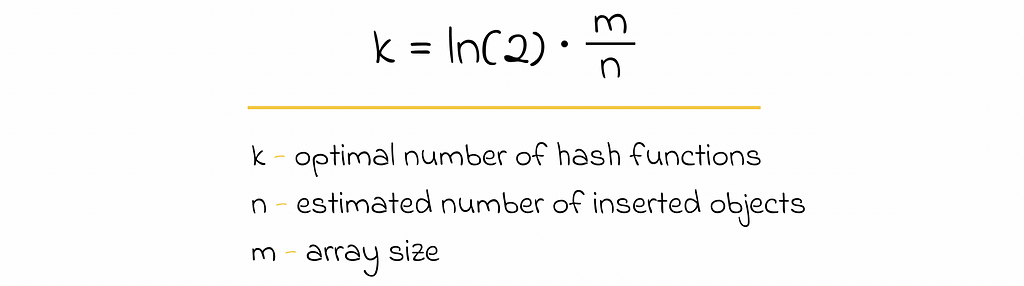

- Before inserting objects into the Bloom filter, we can find the optimal number of required hash functions k that will minimize the FP probability if we know the array size m and can estimate the number of objects n that will be inserted in the future.

Another option of reducing FP probability is a combination (AND conjunction) of several independent Bloom filters. An element is ultimately considered to be present in the data structure only if it is present in all Bloom filters.

Constraints

- Contrary to hash tables, the standard implementation of a Bloom filter does not support deletion.

- The chosen number of hash functions k and array size m at the beginning cannot be changed later. If there is such a need, the only way to do it is to build another Bloom filter with new settings by inserting all the previously stored objects.

Applications

According to the page from Wikipedia, the Bloom filter is widely used in large systems:

- Databases like Apache HBase, Apache Cassandra and PostgreSQL use the Bloom filter to check non-existing rows or columns. This approach is considerably faster than using disk lookups.

- Medium uses the Bloom filter to filter out pages that have already been recommended to a user.

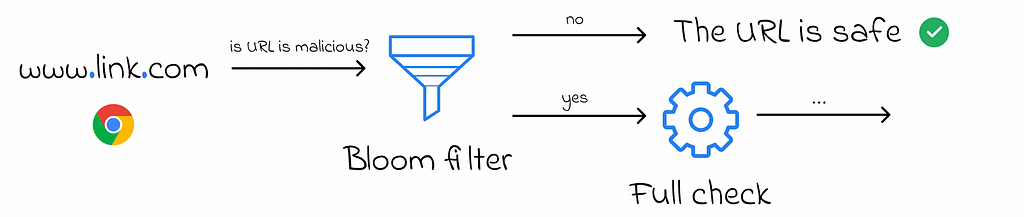

- Google Chrome used the Bloom filter in the past to identify malicious URLs. A URL was considered safe if the Bloom filter returned a negative response. Otherwise, the full check was performed.

Conclusion

In this article, we have covered an alternative approach to constructing hash tables. When a small decrease in accuracy can be compromised for more efficient memory usage, the Bloom filter turns out to be a robust solution in many distributed systems.

Varying the number of hash functions with the Bloom filter’s size allows us to find the most suitable balance between accuracy and performance requirements.

Resources

All images unless otherwise noted are by the author.

System Design: Bloom Filter was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

System Design: Bloom Filter