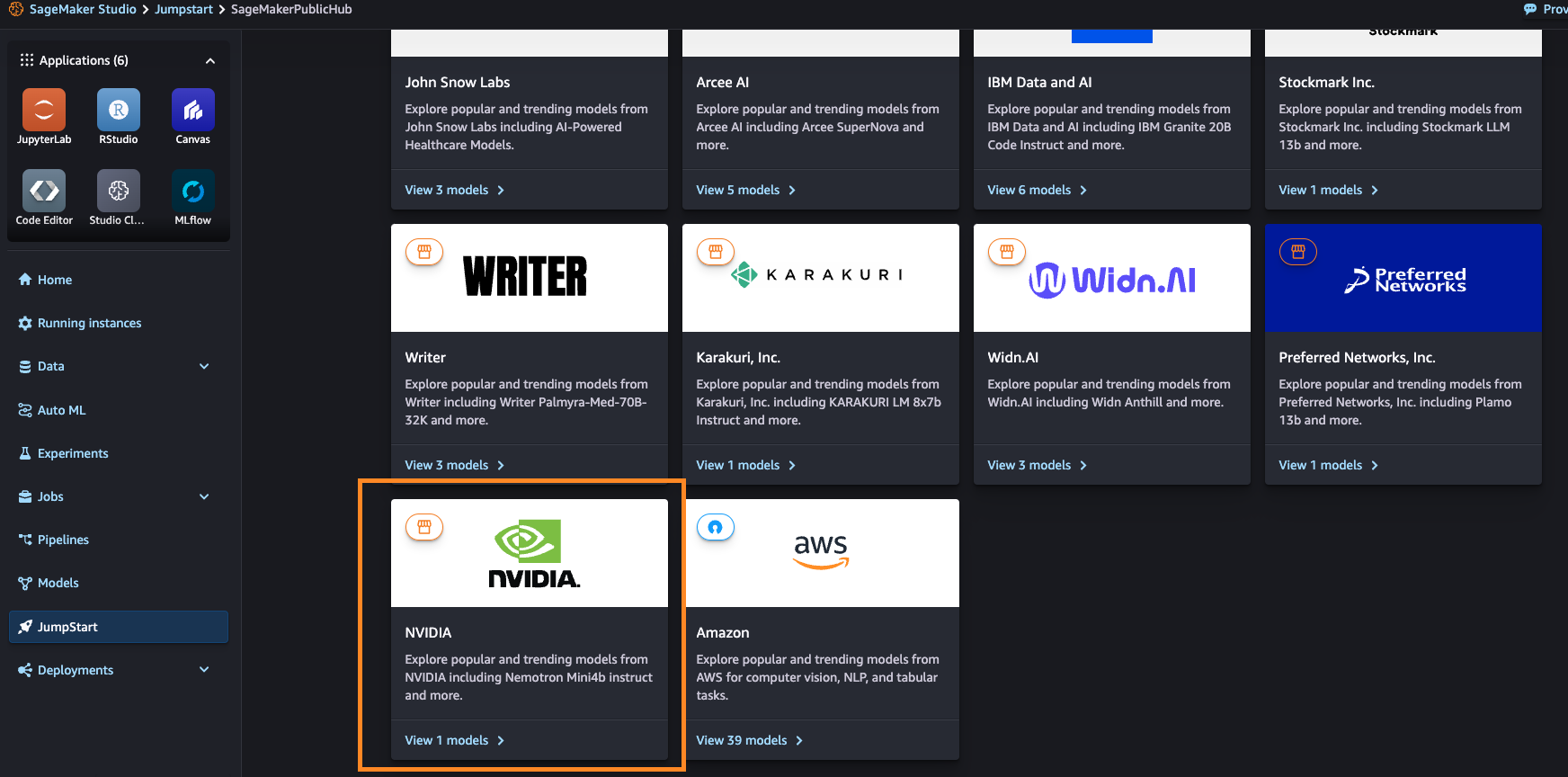

At re:Invent 2024, we are excited to announce new capabilities to speed up your AI inference workloads with NVIDIA accelerated computing and software offerings on Amazon SageMaker. In this post, we will explore how you can use these new capabilities to enhance your AI inference on Amazon SageMaker. We’ll walk through the process of deploying NVIDIA NIM microservices from AWS Marketplace for SageMaker Inference. We’ll then dive into NVIDIA’s model offerings on SageMaker JumpStart, showcasing how to access and deploy the Nemotron-4 model directly in the JumpStart interface. This will include step-by-step instructions on how to find the Nemotron-4 model in the JumpStart catalog, select it for your use case, and deploy it with a few clicks.

Originally appeared here:

Speed up your AI inference workloads with new NVIDIA-powered capabilities in Amazon SageMaker