A guide to the most popular techniques when randomized A/B testing is not possible

Randomized controlled trials (RCTs) are the classical form of product A/B testing. In tech, companies widely use A/B testing to measure the effect of an algorithmic change on user behavior or the impact of a new user interface on user engagement.

Randomizing the units ensures that treatment assignment is uncorrelated with the characteristics of the units, both observed and unobserved. This eliminates selection bias and lets us rely on the assumptions of statistical theory to draw conclusions from what is observed.
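A small simulation can make the selection-bias point concrete. The sketch below is illustrative only: the `engagement` variable, the effect size of 1.0 and the opt-in rule are made-up assumptions, not data from a real experiment. It compares the plain difference in means under a coin-flip assignment with the same difference when more engaged users opt in on their own.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000
true_effect = 1.0  # assumed effect of the feature on the outcome

# Hypothetical latent trait that drives the outcome regardless of treatment.
engagement = rng.normal(size=n)

def naive_difference(treated):
    """Difference in mean outcome between treated and untreated users."""
    outcome = 2.0 * engagement + true_effect * treated + rng.normal(size=n)
    return outcome[treated == 1].mean() - outcome[treated == 0].mean()

# Randomized assignment: a coin flip, independent of engagement.
randomized = rng.integers(0, 2, size=n)

# Self-selection: more engaged users are more likely to opt in.
opt_in_prob = 1.0 / (1.0 + np.exp(-engagement))
self_selected = (rng.random(n) < opt_in_prob).astype(int)

est_randomized = naive_difference(randomized)
est_self_selected = naive_difference(self_selected)

print(f"true effect:            {true_effect:.2f}")
print(f"randomized estimate:    {est_randomized:.2f}")
print(f"self-selected estimate: {est_self_selected:.2f}")
```

Under these assumptions the randomized estimate should land close to the true effect, while the self-selected one is inflated because opted-in users were more engaged to begin with.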

However, random assignment is not always possible; that is, the subjects of an experiment cannot always be randomly assigned to the control and treatment groups. In some cases, targeting individual users is impractical because of spillover effects, or unethical, so the experiment has to run at the city or country level. In others, you cannot force a user into the treatment group, as when testing a software update. In these cases, other statistical techniques are needed, since the basic assumptions of statistical theory no longer hold once randomization is violated.

Let’s see some of the most commonly used techniques, how they work in simple terms and when they are applied.

Statistical Techniques

Difference in Differences (DiD)

This method is usually used when the subject of the experiment is aggregated at the group level, most commonly a city or a country. For example, a company tests a new feature by launching it only in a specific city or country (treatment group) and then compares the outcome to the rest of the cities/countries (control group). Note that in that case, cities or countries are often selected based on their product market fit rather than being randomly assigned. Selecting markets this way helps keep the test results relevant and generalizable to the target market.

DiD measures how the difference in the average outcome between the control and treatment groups changes from the pre-intervention period to the post-intervention period. If the treatment has no effect on the subjects, you would expect to see a constant difference between the treatment and control groups over time. This means that the trends in both groups would be similar, with no significant changes or deviations after the intervention.
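The arithmetic behind this can be sketched in a few lines. The numbers below are hypothetical averages chosen only for illustration, and `did_estimate` is a helper name introduced here, not part of any library.

```python
def did_estimate(treat_pre, treat_post, control_pre, control_post):
    """Difference in differences of four group averages."""
    treat_change = treat_post - treat_pre
    control_change = control_post - control_pre
    return treat_change - control_change

# Hypothetical average outcomes (e.g. orders per user) before and after a launch.
effect = did_estimate(treat_pre=10.0, treat_post=13.0,
                      control_pre=8.0, control_post=9.5)
print(effect)  # 1.5
```

The treatment group moved by 3.0 and the control group by 1.5, so the part of the change attributed to the treatment is 1.5. Had both groups moved by the same amount, the estimate would be zero.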

DiD therefore compares the change in the average outcome of the treatment group with that of the control group and tests the difference for statistical significance. The null hypothesis (H0) is that the two groups, which are assumed to have had parallel trends before the treatment, keep parallel trends after it. If the treatment has no impact, the treatment and control groups will show similar patterns over time. However, if the treatment is effective, the patterns will diverge after the intervention, with the treatment group showing a significant change in direction, slope, or level compared to the control group.

If the assumption of parallel trends is met, DiD can provide a credible estimate of the treatment effect. However, if the trends are not parallel, the results may be biased, and alternative methods (such as the Synthetic Control methods discussed below) or adjustments may be necessary to obtain a reliable estimate of the treatment effect.
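One informal way to probe the parallel-trends assumption is to look at the gap between the two groups in the pre-intervention periods only and check whether it drifts. The sketch below uses hypothetical weekly averages, and `pre_trend_slope` is an illustrative helper introduced here. A flat pre-period gap supports the assumption but does not prove it.

```python
import numpy as np

def pre_trend_slope(treat_pre, control_pre):
    """Slope of the treatment-minus-control gap across pre-intervention periods."""
    gap = np.asarray(treat_pre, dtype=float) - np.asarray(control_pre, dtype=float)
    periods = np.arange(len(gap))
    slope, _intercept = np.polyfit(periods, gap, deg=1)
    return slope

# Hypothetical weekly averages for six pre-intervention weeks.
control = [8.0, 8.4, 8.9, 9.1, 9.6, 10.0]
parallel = [c + 2.0 for c in control]                            # constant gap
diverging = [c + 2.0 + 0.5 * k for k, c in enumerate(control)]   # widening gap

print(round(pre_trend_slope(parallel, control), 3))
print(round(pre_trend_slope(diverging, control), 3))
```

A slope near zero, as in the first case, is what parallel pre-trends look like. A clearly non-zero slope, as in the second, suggests the groups were already drifting apart before the intervention.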

DiD Application

Let’s see how DiD is applied in practice by looking at the Card and Krueger study (1993), which used the DiD approach to analyze the impact of a minimum wage increase on employment. The study analyzed 410 fast-food restaurants in New Jersey and Pennsylvania following the increase in New Jersey’s minimum wage from $4.25 to $5.05 per hour. Full-time equivalent employment in New Jersey was compared against Pennsylvania’s before and after the rise of the minimum wage. In this natural experiment, New Jersey is the treatment group and Pennsylvania the control group.

Using the dataset from the study, I tried to replicate the DiD analysis.

import pandas as pd

import statsmodels.formula.api as smf

df = pd.read_csv('njmin3.csv')

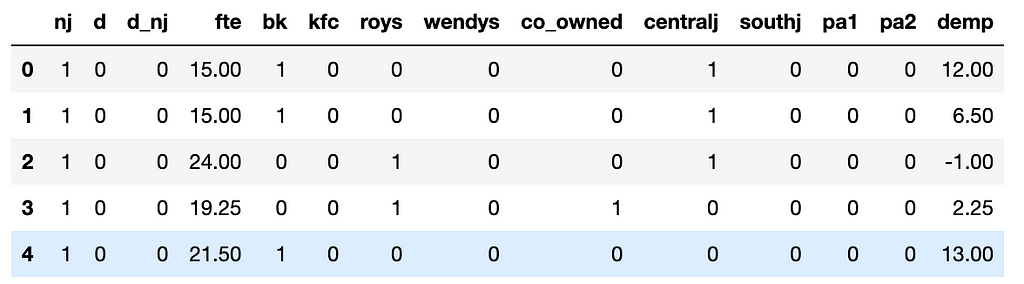

df.head()

In the data, column “nj” is 1 if it is New Jersey, column “d” is 1 if it is after the NJ min wage increase and column “d_nj” is the nj × d interaction.

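Following the basic DiD regression equation, fte (i.e. full-time equivalent employment) is modeled as

fte_it = α + β * nj_it + γ * d_t + δ * (nj_it × d_t) + ϵ_it

where α is the intercept, β captures the baseline difference between New Jersey and Pennsylvania, γ captures the change over time common to both states, δ is the DiD estimate of the treatment effect, and ϵ_it is the error term.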
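To see why δ is the coefficient of interest, it may help to write out what the equation above implies for each of the four group averages. This is a short derivation that assumes the error term has mean zero within each group.

```latex
\begin{aligned}
E[\mathrm{fte} \mid nj = 0,\ d = 0] &= \alpha \\
E[\mathrm{fte} \mid nj = 1,\ d = 0] &= \alpha + \beta \\
E[\mathrm{fte} \mid nj = 0,\ d = 1] &= \alpha + \gamma \\
E[\mathrm{fte} \mid nj = 1,\ d = 1] &= \alpha + \beta + \gamma + \delta \\[6pt]
\underbrace{\big[(\alpha + \beta + \gamma + \delta) - (\alpha + \beta)\big]}_{\text{change in New Jersey}}
 - \underbrace{\big[(\alpha + \gamma) - \alpha\big]}_{\text{change in Pennsylvania}}
 &= (\gamma + \delta) - \gamma = \delta
\end{aligned}
```

In other words, the coefficient on the interaction term is exactly the difference between the two before-and-after changes.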

model = smf.ols(formula = "fte ~ d_nj + d + nj", data = df).fit()

print(model.summary())

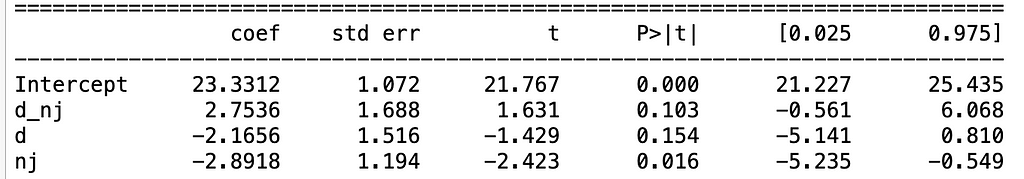

The key parameter of interest is the coefficient on the nj × d interaction (i.e. “d_nj”), which estimates the average treatment effect of the intervention. The regression shows that “d_nj” is not statistically significant (the p-value is 0.103 > 0.05), meaning we cannot reject the null hypothesis that the minimum wage increase had no effect on employment. Note that this is not the same as showing that the effect is zero.

Synthetic Controls

Synthetic control methods compare the unit of interest (the city/country in treatment) to a weighted average of the unaffected units (cities/countries in control), where the weights are chosen so that the synthetic control unit best matches the treated unit’s pre-treatment behavior.

The post-treatment outcome of the treated unit is then compared to that of the synthetic unit, which serves as a counterfactual estimate of what would have happened if the treated unit had not received the treatment. By using a weighted average of control units, synthetic control methods can create a more accurate counterfactual tailored to the treated unit, reducing bias and improving the estimate of the treatment effect.
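The weight selection can be sketched as a small constrained least-squares problem: find non-negative weights that sum to one and minimize the pre-treatment gap between the treated unit and the weighted donors. The example below is a minimal sketch on made-up numbers. The function names are introduced here for illustration, and a plain projected-gradient loop is used only to keep the sketch dependent on NumPy alone; in practice a constrained optimizer or a dedicated package would be the more usual choice.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1}."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    k = np.arange(1, len(v) + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]
    theta = css[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)

def synthetic_control_weights(donors_pre, treated_pre, n_iter=5000):
    """Weights minimizing the squared pre-treatment gap (projected gradient descent).

    donors_pre:  array of shape (periods, donors)
    treated_pre: array of shape (periods,)
    """
    n_donors = donors_pre.shape[1]
    w = np.full(n_donors, 1.0 / n_donors)
    # Step size 1/L, where L is the Lipschitz constant of the gradient.
    step = 1.0 / (2.0 * np.linalg.norm(donors_pre, ord=2) ** 2)
    for _ in range(n_iter):
        grad = 2.0 * donors_pre.T @ (donors_pre @ w - treated_pre)
        w = project_to_simplex(w - step * grad)
    return w

# Hypothetical pre-treatment outcomes: three donor cities (columns), six periods (rows).
donors_pre = np.array([
    [1.0, 0.5, 1.8],
    [1.2, 0.4, 1.7],
    [1.1, 0.7, 1.9],
    [1.4, 0.6, 1.6],
    [1.5, 0.9, 1.8],
    [1.7, 0.8, 2.0],
])
# The treated city is constructed as 60% of donor 0 plus 40% of donor 1.
treated_pre = donors_pre @ np.array([0.6, 0.4, 0.0])

weights = synthetic_control_weights(donors_pre, treated_pre)
pre_rmse = np.sqrt(np.mean((donors_pre @ weights - treated_pre) ** 2))

# Hypothetical post-treatment outcomes: the gap to the synthetic unit is the effect estimate.
donors_post = np.array([[1.8, 1.0, 2.1], [1.9, 1.1, 2.0]])
treated_post = np.array([2.0, 2.1])
estimated_effect = treated_post - donors_post @ weights

print(weights.round(3), round(pre_rmse, 4), estimated_effect.round(3))
```

A small pre-treatment error indicates that the synthetic unit tracks the treated unit well before the intervention, which is what makes the post-treatment gap interpretable as an effect.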

For a more detailed explanation of how Synthetic Control methods work, illustrated with an example, I found the article “Understanding the Synthetic Control Methods” particularly helpful.

Propensity Score Matching (PSM)

Suppose you want to evaluate the impact of a Prime subscription on revenue per customer. There is no way to randomly assign users to subscribe or not. Instead, you can use propensity score matching to find non-Prime users (control group) who are similar to Prime users (treatment group) based on characteristics like age, demographics, and behavior.

The propensity score used in the matching is the probability of a unit receiving the treatment given a set of observed characteristics, and it is estimated using logistic regression or other statistical methods. Once the propensity scores are estimated, units in the treatment and control groups are matched on these scores, creating a matched control group that is statistically similar to the treatment group. This way, you get a comparable control group for estimating the effect of the Prime subscription.
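The two steps, estimating the score and then matching on it, can be sketched as follows. This is a minimal illustration on simulated data, not an analysis of real subscription data. The covariate `x`, the assumed effect of 2.0 and the helper `fit_propensity` are all introduced here for the example. The logistic regression is fitted with a few Newton steps to keep the sketch dependent on NumPy alone, and matching is one-to-one nearest neighbor with replacement.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 2000
true_effect = 2.0  # assumed effect of subscribing on the outcome

# Hypothetical observed covariate (e.g. standardized past spend) that drives
# both the decision to subscribe and the outcome.
x = rng.normal(size=n)
treated = (rng.random(n) < 1.0 / (1.0 + np.exp(-x))).astype(int)
outcome = true_effect * treated + 3.0 * x + rng.normal(size=n)

def fit_propensity(covariates, treatment, n_iter=25):
    """Logistic regression via Newton's method; returns P(treated | covariates)."""
    X = np.column_stack([np.ones(len(covariates)), covariates])
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        gradient = X.T @ (treatment - p)
        hessian = (X * (p * (1.0 - p))[:, None]).T @ X
        beta += np.linalg.solve(hessian, gradient)
    return 1.0 / (1.0 + np.exp(-X @ beta))

score = fit_propensity(x, treated)

# 1-nearest-neighbor matching with replacement on the propensity score.
t_idx = np.flatnonzero(treated == 1)
c_idx = np.flatnonzero(treated == 0)
distance = np.abs(score[t_idx][:, None] - score[c_idx][None, :])
matched_controls = c_idx[distance.argmin(axis=1)]

naive = outcome[t_idx].mean() - outcome[c_idx].mean()
att = (outcome[t_idx] - outcome[matched_controls]).mean()
print(f"naive difference: {naive:.2f}, matched estimate: {att:.2f}")
```

In this setup the naive difference is inflated because subscribers have higher values of `x`, while the matched estimate should sit much closer to the assumed effect. The correction only works for confounders that are observed and included in the score.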

Similarly, when studying the effect of a new feature or intervention on teenagers and parents, you can use PSM to create a control group that resembles the treatment group, giving a more accurate estimate of the treatment effect. These methods help mitigate bias from observed confounding variables, allowing for a more reliable evaluation of the treatment effect in non-randomized settings.

Takeaway

When standard A/B testing and randomization of the units are not possible, we can no longer rely on the usual assumptions of statistical theory to draw conclusions from what is observed. Techniques such as DiD, Synthetic Controls and PSM are needed once randomization is violated.

Beyond the ones discussed here, other popular techniques include Instrumental Variables (IV), Bayesian Structural Time Series (BSTS) and Regression Discontinuity Design (RDD), which are used to estimate the treatment effect when randomization is not possible or when there is no control group at all.

Product Quasi-Experimentation: Statistical Techniques When Standard A/B Testing Is Not Possible was originally published in Towards Data Science on Medium.
