Input and output (I/O) operations refer to the transfer of data between a computer’s main memory and various peripherals. Storage peripherals such as HDDs and SSDs have particular performance characteristics in terms of latency, throughput, and rate, which influence the performance of the computer systems they power. By extension, the performance and design of distributed and cloud-based data storage depend on those of the underlying medium. This article is intended to be a bridge between Data Science and Storage Systems: 1/ I am sharing a few datasets from various sources and of various sizes, which I hope will be novel for Data Scientists, and 2/ I am bringing up the potential for advanced analytics in Distributed Systems.

Storage access traces are “a treasure trove of information for optimizing cloud workloads.” They’re crucial for capacity planning, data placement, or system design and evaluation, suited for modern applications. Diverse and up-to-date datasets are particularly needed in academic research to study novel and unintuitive access patterns, help the design of new hardware architectures, new caching algorithms, or hardware simulations.

Storage traces are notoriously difficult to find. The SNIA website is the best known “repository for storage-related I/O trace files, associated tools, and other related information” but many traces don’t comply with their licensing or upload format. Finding traces becomes a tedious process of scanning the academic literature or attempting to generate one’s own.

Popular traces which are easier to find tend to be outdated and overused. Traces older than 10 years should not be used in modern research and development due to changes in application workloads and hardware capabilities. Also, an over-use of specific traces can bias the understanding of real workloads so it’s recommended to use traces from multiple independent sources when possible.

This post is an organized collection of recent public traces I found and used. In the first part I categorize them by the level of abstraction they represent in the IO stack. In the second part I list and discuss some relevant datasets. The last part is a summary of all with a personal view on the gaps in storage tracing datasets.

I distinguish between three types of traces based on data representation and access model. Let me explain. A user, at the application layer, sees data stored in files or objects, which are accessed through a large range of abstract operations such as open or append. Closer to the media, the data is stored in a contiguous address space and accessed as fixed-size blocks which may only be read or written. At a higher abstraction level, within the application layer, there may also be a data presentation layer that logs access to data presentation units, for example, rows composing tables and databases, or articles and paragraphs composing news feeds. The access may be create table, or post article.

While traces can be taken anywhere in the IO stack and contain information from multiple layers, I am choosing to structure the following classification based on the Linux IO stack depicted below.

The data in these traces is representative of the operations at the block layer. In Linux, this data is typically collected with blktrace (and rendered readable with blkparse), iostat, or dtrace. The traces contain information about the operation, the device, CPU, process, and storage location accessed. The first trace listed is an example of blktrace output.

The typical information generated by tracing programs may be too detailed for analysis and publication purposes, so it is often simplified. Typical public traces contain operation, offset, size, and sometimes timing. At this layer the operations are only read and write. Each operation accesses the address starting at offset and applies to a contiguous region whose size is specified in number of blocks (4 KiB in NTFS). For example, a trace entry for a read operation contains the address where the read starts (offset), and the number of blocks read (size). The timing information may contain the time the request was issued (start time), the time it was completed (end time), the processing time in between (latency), and the time the request waited (queuing time).
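To make the simplified entry format concrete, here is a minimal sketch of one trace record and the quantities derived from it. The class and field names are illustrative rather than taken from any particular dataset, and it assumes that the offset is expressed in 4 KiB blocks and that times are in microseconds.

```python
from dataclasses import dataclass

BLOCK_SIZE = 4096  # bytes per block; an assumption for this sketch


@dataclass(frozen=True)
class BlockRequest:
    """One simplified block-trace entry. Field names are illustrative."""
    op: str        # "R" for read, "W" for write
    offset: int    # index of the first block accessed (assumed to be in blocks)
    size: int      # number of contiguous blocks accessed
    start_us: int  # time the request was issued, in microseconds
    end_us: int    # time the request completed, in microseconds


def latency_us(req: BlockRequest) -> int:
    """Elapsed time between issue and completion."""
    return req.end_us - req.start_us


def byte_range(req: BlockRequest) -> tuple[int, int]:
    """Half-open byte range [first, last) touched by the request."""
    first = req.offset * BLOCK_SIZE
    return first, first + req.size * BLOCK_SIZE


read = BlockRequest(op="R", offset=100, size=8, start_us=1_000, end_us=14_000)
print(latency_us(read), byte_range(read))
```

A trace that records only operation, offset, and size would simply drop the two time fields, which is enough for replay but not for latency analysis.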

Available traces sport different features, have wildly different sizes, and are the output of a variety of workloads. Selecting the right one depends on what one is looking for. For example, trace replay only needs the order of operations and their size, while performance analysis also needs timing information.

At the application layer, data is located in files and objects which may be created, opened, appended, or closed, and then discovered via a tree structure. From a user’s point of view, the storage media is decoupled, hiding fragmentation and allowing random byte access.

I’ll group together file and object traces despite a subtle difference between the two. Files follow the file system’s naming convention which is structured (typically hierarchical). Often the extension suggests the content type and usage of the file. On the other hand, objects are used in large scale storage systems dealing with vast amounts of diverse data. In object storage systems the structure is not intrinsic, instead it is defined externally, by the user, with specific metadata files managed by their workload.

Being generated within the application space, typically the result of an application logging mechanism, object traces are more diverse in terms of format and content. The information recorded may be more specific, for example, operations can also be delete, copy, or append. Objects typically have variable size and even the same object’s size may vary in time after appends and overwrites. The object identifier can be a string of variable size. It may encode extra information, for example, an extension that tells the content type. Other meta-information may come from the range accessed, which may tell us, for example, whether the header, the footer or the body of an image, parquet, or CSV file was accessed.

Object storage traces are better suited for understanding user access patterns. In terms of block access, a video stream and a sequential read of an entire file generate the same pattern: multiple sequential IOs at regular time intervals. But these trace entries should be treated differently if we are to replay them. Video streaming blocks need to be accessed with the same time delta between them, regardless of the latency of each individual block, while an entire file should be read as fast as possible.
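The replay distinction can be sketched as a small scheduling function. This is only an illustration: the function name, the two mode labels, and the millisecond timestamps are assumptions made for the example, not part of any replay tool.

```python
def issue_gaps(timestamps_ms, mode):
    """Return the wait (in ms) to apply before issuing each request on replay.

    mode="stream": keep the original spacing between consecutive requests.
    mode="bulk":   add no wait, so each request is issued as soon as possible.
    """
    if mode not in ("stream", "bulk"):
        raise ValueError(f"unknown replay mode: {mode}")
    gaps = []
    previous = None
    for ts in timestamps_ms:
        if mode == "bulk" or previous is None:
            gaps.append(0)
        else:
            gaps.append(ts - previous)
        previous = ts
    return gaps


recorded = [0, 40, 80, 120]            # same block-level pattern in both cases
print(issue_gaps(recorded, "stream"))  # original deltas are preserved
print(issue_gaps(recorded, "bulk"))    # no artificial waiting
```

The block-level input is identical in both calls; only knowledge of the application (stream or bulk read) tells the replayer which schedule is appropriate.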

Specific to each application, data may be abstracted further. Data units may be instances of a class, records in a database, or ranges in a file. A single data access may not even generate a file open or a disk IO if caching is involved. I choose to include such traces because they may be used to understand and optimize storage access, and in particular cloud storage. For example, the access traces from Twitter’s Memcache are useful in understanding popularity distributions and therefore may be useful for data formatting and placement decisions. Often they’re not storage traces per se, but they can be useful in the context of cache simulation, IO reduction, or data layout (indexing).
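As a rough illustration of how such a trace informs placement decisions, the sketch below measures how concentrated accesses are on the most popular keys. The function name and the toy key list are made up for the example.

```python
from collections import Counter


def top_k_share(keys, k):
    """Fraction of all requests that go to the k most frequently accessed keys."""
    if not keys:
        return 0.0
    counts = Counter(keys)
    top_requests = sum(count for _, count in counts.most_common(k))
    return top_requests / len(keys)


# Toy access sequence: key "a" is requested far more often than the others.
accesses = ["a", "a", "a", "b", "b", "c", "d", "a"]
print(top_k_share(accesses, 1))
print(top_k_share(accesses, 2))
```

A high share for a small k suggests a skewed popularity distribution, where a small fast tier or cache could absorb a large part of the traffic.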

The data format in these traces can be even more diverse because of the extra layer of abstraction; for example, entries in the Memcached traces are identified by tweet identifiers.

Let’s look at a few traces in each of the categories above. The list details some of the newer traces — no older than 10 years — and it is by no means exhaustive.

YCSB RocksDB SSD 2020

These are SSD traces collected on a 28-core, 128 GB host with two 512 GB NVMe SSD Drives, running Ubuntu. The dataset is a result of running the YCSB-0.15.0 benchmark with RocksDB.

The first SSD stores all blktrace output, while the second hosts YCSB and RocksDB. YCSB Workload A consists of 50% reads and 50% updates of 1B operations on 250M records. Runtime is 9.7 hours, which generates over 352M block I/O requests at the file system level writing a total of 6.8 TB to the disk, with a read throughput of 90 MBps and a write throughput of 196 MBps.
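A quick back-of-the-envelope check relates these figures to each other. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and that the rates are averages over the whole run; the variable names are only for illustration.

```python
RUNTIME_SECONDS = 9.7 * 3600     # 9.7 hours
TOTAL_WRITTEN_BYTES = 6.8e12     # 6.8 TB, assuming decimal terabytes
TOTAL_REQUESTS = 352e6           # "over 352M" block I/O requests

avg_write_mb_per_s = TOTAL_WRITTEN_BYTES / RUNTIME_SECONDS / 1e6
avg_requests_per_s = TOTAL_REQUESTS / RUNTIME_SECONDS

print(f"average write throughput: {avg_write_mb_per_s:.1f} MB/s")
print(f"average request rate:     {avg_requests_per_s:.0f} requests/s")
```

Under these assumptions the average write rate comes out just under 195 MB/s, in line with the reported 196 MBps once rounding of the 6.8 TB figure is taken into account.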

The dataset is small compared to all others in the list, and limited in terms of workload, but a great place to start due to its manageable size. Another benefit is reproducibility: it uses open source tracing tools and benchmarking beds atop a relatively inexpensive hardware setup.

Format: These are SSD traces taken with blktrace and have the typical format after parsing with blkparse: [Device Major Number,Device Minor Number] [CPU Core ID] [Record ID] [Timestamp (in seconds, with nanosecond resolution)] [ProcessID] [Trace Action] [OperationType] [SectorNumber + I/O Size] [ProcessName]

259,2 0 1 0.000000000 4020 Q R 282624 + 8 [java]

259,2 0 2 0.000001581 4020 G R 282624 + 8 [java]

259,2 0 3 0.000003650 4020 U N [java] 1

259,2 0 4 0.000003858 4020 I RS 282624 + 8 [java]

259,2 0 5 0.000005462 4020 D RS 282624 + 8 [java]

259,2 0 6 0.013163464 0 C RS 282624 + 8 [0]

259,2 0 7 0.013359202 4020 Q R 286720 + 128 [java]
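Lines in this format can be parsed with a regular expression. The sketch below is one possible parser, not a tool shipped with the dataset: the function names are hypothetical, it assumes 512-byte sectors, and it skips records without a sector range (such as the unplug line above). It also pairs each queue event (Q) with the completion event (C) on the same sector to estimate per-request latency.

```python
import re

SECTOR_BYTES = 512  # assumed sector size for converting sectors to bytes

BLKPARSE_RE = re.compile(
    r"^(?P<major>\d+),(?P<minor>\d+)\s+"
    r"(?P<cpu>\d+)\s+(?P<seq>\d+)\s+"
    r"(?P<time>\d+\.\d+)\s+(?P<pid>\d+)\s+"
    r"(?P<action>\S+)\s+(?P<rwbs>\S+)\s+"
    r"(?P<sector>\d+) \+ (?P<nsectors>\d+)\s+\[(?P<process>[^\]]*)\]"
)


def parse_blkparse_line(line):
    """Return a dict for lines with a 'sector + size' part, else None."""
    m = BLKPARSE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "device": (int(m["major"]), int(m["minor"])),
        "cpu": int(m["cpu"]),
        "sequence": int(m["seq"]),
        "time": float(m["time"]),
        "pid": int(m["pid"]),
        "action": m["action"],
        "rwbs": m["rwbs"],
        "sector": int(m["sector"]),
        "nsectors": int(m["nsectors"]),
        "process": m["process"],
    }


def queue_to_complete(lines):
    """Map starting sector -> seconds between its Q and C events."""
    queued, latencies = {}, {}
    for line in lines:
        rec = parse_blkparse_line(line)
        if rec is None:
            continue
        if rec["action"] == "Q":
            queued[rec["sector"]] = rec["time"]
        elif rec["action"] == "C" and rec["sector"] in queued:
            latencies[rec["sector"]] = rec["time"] - queued.pop(rec["sector"])
    return latencies


SAMPLE = [
    "259,2 0 1 0.000000000 4020 Q R 282624 + 8 [java]",
    "259,2 0 3 0.000003650 4020 U N [java] 1",
    "259,2 0 5 0.000005462 4020 D RS 282624 + 8 [java]",
    "259,2 0 6 0.013163464 0 C RS 282624 + 8 [0]",
]
print(queue_to_complete(SAMPLE))
```

Matching Q and C events by starting sector alone is a simplification; a more careful pairing would also account for merged or split requests.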

Where to find it: http://iotta.snia.org/traces/block-io/28568

License: SNIA Trace Data Files Download License

Alibaba Block Traces 2020

The dataset consists of “block-level I/O requests collected from 1,000 volumes, where each has a raw capacity from 40 GiB to 5 TiB. The workloads span diverse types of cloud applications. Each collected I/O request specifies the volume number, request type, request offset, request size, and timestamp.”

Limitations (from the academic paper)

A drawback of this dataset is its size. When uncompressed it results in a 751GB file which is difficult to store and manage.

Format: device_id,opcode,offset,length,timestamp

419,W,8792731648,16384,1577808144360767

725,R,59110326272,360448,1577808144360813

12,R,350868463616,8192,1577808144360852

725,R,59110686720,466944,1577808144360891

736,R,72323657728,516096,1577808144360996

12,R,348404277248,8192,1577808144361031

Additionally, there is an extra file containing each virtual device’s id device_id with its total capacity.
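Since the format is plain CSV, a first pass over it needs only the standard library. The sketch below sums the requested bytes per operation type and measures the time span of the sample rows shown above. It assumes that length is in bytes and that timestamps are integers in a single consistent unit; the function name is illustrative.

```python
import csv
import io
from collections import defaultdict

SAMPLE = """\
419,W,8792731648,16384,1577808144360767
725,R,59110326272,360448,1577808144360813
12,R,350868463616,8192,1577808144360852
725,R,59110686720,466944,1577808144360891
736,R,72323657728,516096,1577808144360996
12,R,348404277248,8192,1577808144361031
"""


def summarize(rows):
    """Return (bytes requested per opcode, timestamp span) for the given rows."""
    bytes_by_op = defaultdict(int)
    first_ts = last_ts = None
    for _device_id, opcode, _offset, length, timestamp in rows:
        bytes_by_op[opcode] += int(length)
        ts = int(timestamp)
        first_ts = ts if first_ts is None else min(first_ts, ts)
        last_ts = ts if last_ts is None else max(last_ts, ts)
    span = 0 if first_ts is None else last_ts - first_ts
    return dict(bytes_by_op), span


bytes_by_op, span = summarize(csv.reader(io.StringIO(SAMPLE)))
print(bytes_by_op, span)
```

Because the function consumes rows one at a time, the same loop can be pointed at a file handle and run over the full trace without loading it into memory, which matters given the uncompressed size.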

Where to find it: https://github.com/alibaba/block-traces

License: CC-4.0.

Tencent Block Storage 2018

This dataset consists of “216 I/O traces from a warehouse (also called a failure domain) of a production cloud block storage system (CBS). The traces are I/O requests from 5584 cloud virtual volumes (CVVs) for ten days (from Oct. 1st to Oct. 10th, 2018). The I/O requests from the CVVs are mapped and redirected to a storage cluster consisting of 40 storage nodes (i.e., disks).”

Limitations:

Format: Timestamp,Offset,Size,IOType,VolumeID

1538323200,12910952,128,0,1063

1538323200,6338688,8,1,1627

1538323200,1904106400,384,0,1360

1538323200,342884064,256,0,1360

1538323200,15114104,8,0,3607

1538323200,140441472,32,0,1360

1538323200,15361816,520,1,1371

1538323200,23803384,8,0,2363

1538323200,5331600,4,1,3171

Where to find it: http://iotta.snia.org/traces/parallel/27917

License: SNIA Trace Data Files Download License

K5cloud Traces 2018

This dataset contains traces from virtual cloud storage in the FUJITSU K5 cloud service. The data was gathered over a week, but not continuously, because “one day’s IO access logs often consumed the storage capacity of the capture system.” There are 24 billion records from 3088 virtual storage nodes.

The data was captured in the TCP/IP network between servers running on a hypervisor and storage systems in a K5 data center in Japan. It is split into three datasets by virtual storage volume id. A volume id is unique within a dataset, but not across the different datasets.

Limitations:

The fields in the IO access log are: ID,Timestamp,Type,Offset,Length

1157,3.828359000,W,7155568640,4096

1157,3.833921000,W,7132311552,8192

1157,3.841602000,W,15264690176,28672

1157,3.842341000,W,28121042944,4096

1157,3.857702000,W,15264718848,4096

1157,9.752752000,W,7155568640,4096
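One simple analysis this format supports is measuring how soon the same location is accessed again, which is relevant for caching and write coalescing. The sketch below is illustrative only: the function name is made up, and it assumes the Timestamp field is in seconds. It uses Decimal so the fractional timestamps are subtracted exactly.

```python
import csv
import io
from decimal import Decimal

SAMPLE = """\
1157,3.828359000,W,7155568640,4096
1157,3.833921000,W,7132311552,8192
1157,3.841602000,W,15264690176,28672
1157,3.842341000,W,28121042944,4096
1157,3.857702000,W,15264718848,4096
1157,9.752752000,W,7155568640,4096
"""


def reaccess_intervals(rows):
    """Return ((volume id, offset), elapsed time) for each repeated access."""
    last_seen = {}
    intervals = []
    for volume_id, timestamp, _io_type, offset, _length in rows:
        key = (volume_id, int(offset))
        now = Decimal(timestamp)
        if key in last_seen:
            intervals.append((key, now - last_seen[key]))
        last_seen[key] = now
    return intervals


intervals = reaccess_intervals(csv.reader(io.StringIO(SAMPLE)))
print(intervals)
```

In the sample rows, only offset 7155568640 of volume 1157 is written twice. Keying on the exact starting offset ignores partially overlapping requests, which a fuller analysis would need to handle.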

Where to find it: http://iotta.snia.org/traces/parallel/27917

License: CC-4.0.

Server-side I/O request arrival traces 2019

This repository contains two datasets for IO block traces with additional file identifiers: 1/ parallel file systems (PFS) and 2/ I/O nodes.

Notes:

Format: The format is slightly different for the two datasets, an artifact of different file systems. For IO nodes, it consists of multiple files, each with tab-separated values Timestamp FileHandle RequestType Offset Size. A peculiarity is that reads and writes are in separate files named accordingly.

265277355663 00000000fbffffffffffff0f729db77200000000000000000000000000000000 W 2952790016 32768

265277587575 00000000fbffffffffffff0f729db77200000000000000000000000000000000 W 1946157056 32768

265277671107 00000000fbffffffffffff0f729db77200000000000000000000000000000000 W 973078528 32768

265277913090 00000000fbffffffffffff0f729db77200000000000000000000000000000000 W 4026531840 32768

265277985008 00000000fbffffffffffff0f729db77200000000000000000000000000000000 W 805306368 32768

The PFS scenario has two concurrent applications, “app1” and “app2”, and its traces are inside a folder named accordingly. Each row entry has the following format: [<Timestamp>] REQ SCHED SCHEDULING, handle:<FileHandle>, queue_element: <QueueElement>, type: <RequestType>, offset: <Offset>, len: <Size> Different from the above are:

[D 01:11:03.153625] REQ SCHED SCHEDULING, handle: 5764607523034233445, queue_element: 0x12986c0, type: 1, offset: 369098752, len: 1048576

[D 01:11:03.153638] REQ SCHED SCHEDULING, handle: 5764607523034233445, queue_element: 0x1298e30, type: 1, offset: 268435456, len: 1048576

[D 01:11:03.153651] REQ SCHED SCHEDULING, handle: 5764607523034233445, queue_element: 0x1188b80, type: 1, offset: 0, len: 1048576

[D 01:11:03.153664] REQ SCHED SCHEDULING, handle: 5764607523034233445, queue_element: 0xf26340, type: 1, offset: 603979776, len: 1048576

[D 01:11:03.153676] REQ SCHED SCHEDULING, handle: 5764607523034233445, queue_element: 0x102d6e0, type: 1, offset: 637534208, len: 1048576

Where to find it: https://zenodo.org/records/3340631#.XUNa-uhKg2x

License: CC-4.0.

IBM Cloud Object Store 2019

These are anonymized traces from the IBM Cloud Object Storage service collected with the primary goal to study data flows to the object store.

The dataset is composed of 98 traces containing around 1.6 billion requests for 342 million unique objects. The traces themselves are about 88 GB in size. Each trace contains the REST operations issued against a single bucket in IBM Cloud Object Storage, and all traces were collected during the same week in 2019. Each trace contains between 22,000 and 187,000,000 object requests. The traces contain all data access requests issued over a week by a single tenant of the service. Object names are anonymized.

Some characteristics of the workload have been published in this paper, although the dataset used was larger:

Format: <time stamp of request> <request type> <object ID> <optional: size of object> <optional: beginning offset> <optional: ending offset> The timestamp is the number of milliseconds from the point where we began collecting the traces.

1219008 REST.PUT.OBJECT 8d4fcda3d675bac9 1056

1221974 REST.HEAD.OBJECT 39d177fb735ac5df 528

1232437 REST.HEAD.OBJECT 3b8255e0609a700d 1456

1232488 REST.GET.OBJECT 95d363d3fbdc0b03 1168 0 1167

1234545 REST.GET.OBJECT bfc07f9981aa6a5a 528 0 527

1256364 REST.HEAD.OBJECT c27efddbeef2b638 12752

1256491 REST.HEAD.OBJECT 13943e909692962f 9760
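Because the last three fields are optional, a parser has to tolerate lines of different lengths. The following is a minimal sketch with hypothetical function and key names; it assumes fields are whitespace-separated and that the beginning and ending offsets are inclusive, which the sample GET lines suggest (size 1168 with offsets 0 to 1167).

```python
def parse_request(line):
    """Parse one trace line into a dict; missing optional fields become None."""
    parts = line.split()
    extras = [int(value) for value in parts[3:6]]
    extras += [None] * (3 - len(extras))
    size, start, end = extras
    return {
        "timestamp_ms": int(parts[0]),
        "operation": parts[1],
        "object_id": parts[2],
        "size": size,
        "start": start,
        "end": end,
    }


def requested_bytes(request):
    """Bytes covered by the requested range, or None if no range was logged."""
    if request["start"] is None or request["end"] is None:
        return None
    return request["end"] - request["start"] + 1  # assumes inclusive offsets


put = parse_request("1219008 REST.PUT.OBJECT 8d4fcda3d675bac9 1056")
get = parse_request("1232488 REST.GET.OBJECT 95d363d3fbdc0b03 1168 0 1167")
print(put["size"], requested_bytes(put))
print(get["size"], requested_bytes(get))
```

Comparing the requested range with the object size is one way to tell full-object reads from partial reads.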

Where to find it: http://iotta.snia.org/traces/key-value/36305

License: SNIA Trace Data Files Download License

Wiki Analytics Datasets 2019

The wiki dataset contains data for 1/ upload (image) web requests of Wikimedia and 2/ text (HTML pageview) web requests from one CDN cache server of Wikipedia. The most recent dataset, from 2019, contains 21 upload data files and 21 text data files.

Format: Each upload data file, denoted cache-u, contains exactly 24 hours of consecutive data. These files are each roughly 1.5GB in size and hold roughly 4GB of decompressed data each.

This dataset is the result of a single type of workload, which may limit the applicability, but it is large and complete, which makes a good testbed.

Each decompressed upload data file has the following format: relative_unix hashed_path_query image_type response_size time_firstbyte

0 833946053 jpeg 9665 1.85E-4

0 -1679404160 png 17635 2.09E-4

0 -374822678 png 3333 2.18E-4

0 -1125242883 jpeg 4733 1.57E-4

Each text data file, denoted cache-t, contains exactly 24 hours of consecutive data. These files are each roughly 100MB in size and hold roughly 300MB of decompressed data each.

Each decompressed text data file has the following format: relative_unix hashed_host_path_query response_size time_firstbyte

4619 540675535 57724 1.92E-4

4619 1389231206 31730 2.29E-4

4619 -176296145 20286 1.85E-4

4619 74293765 14154 2.92E-4

Where to find it: https://wikitech.wikimedia.org/wiki/Analytics/Data_Lake/Traffic/Caching

License: CC-4.0.

This dataset, Anonymized Cache Request Traces from Twitter Production, contains one-week-long traces from Twitter’s in-memory caching (Twemcache / Pelikan) clusters. The data comes from the 54 largest clusters in March 2020.

Format: Each trace file is a csv with the format: timestamp,anonymized key,key size,value size,client id,operation,TTL

0,q:q:1:8WTfjZU14ee,17,213,4,get,0

0,yDqF:3q:1AJrrJ1nnCJKKrnGx1A,27,27,5,get,0

0,q:q:1:8WTw2gCuJe8,17,720,6,get,0

0,yDqF:vS:1AJr9JnArxCJGxn919K,27,27,7,get,0

0,yDqF:vS:1AJrrKG1CAnr1C19KxC,27,27,8,get,0
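A typical use of such a trace is cache simulation. The sketch below parses one row and replays a sequence of keys through a small LRU cache to estimate the hit ratio. It is a simplified illustration, not the method used in any published study: the function names are made up, capacity is counted in objects rather than bytes, every request is treated as a lookup regardless of its operation, and TTLs are ignored.

```python
from collections import OrderedDict


def parse_cache_row(line):
    """Split one CSV row into named fields (assumes keys contain no commas)."""
    timestamp, key, key_size, value_size, client_id, operation, ttl = (
        line.strip().split(",")
    )
    return {
        "timestamp": int(timestamp),
        "key": key,
        "key_size": int(key_size),
        "value_size": int(value_size),
        "client_id": int(client_id),
        "operation": operation,
        "ttl": int(ttl),
    }


def lru_hit_ratio(keys, capacity):
    """Replay keys through an LRU cache holding at most `capacity` objects."""
    cache = OrderedDict()
    hits = total = 0
    for key in keys:
        total += 1
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # mark as most recently used
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / total if total else 0.0


row = parse_cache_row("0,q:q:1:8WTfjZU14ee,17,213,4,get,0")
print(row["key"], row["operation"])
print(lru_hit_ratio(["a", "b", "a", "c", "b", "a"], capacity=2))
```

Running the same replay with several capacities gives a hit-ratio curve, a common starting point for cache sizing.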

License: CC-4.0.

If you’re still here and haven’t gone diving into one of the traces linked above it may be because you haven’t found what you’re looking for. There are a few gaps that current storage traces have yet to fill:

Exploring Public Storage Traces was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.


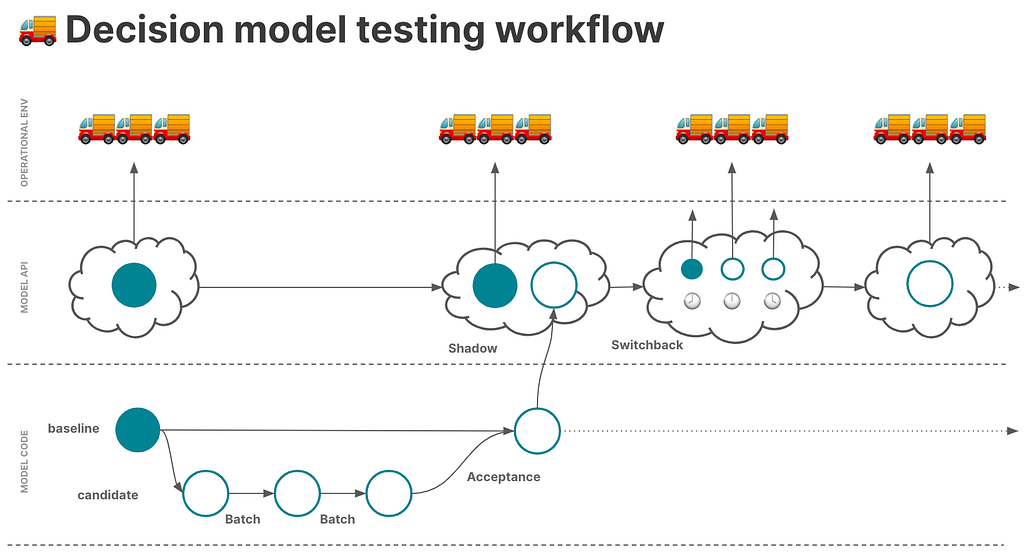

Switchback testing for decision models allows algorithm teams to compare a candidate model to a baseline model in a true production environment, where both models are making real-world decisions for the operation. With this form of testing, teams can randomize which model is applied to units of time and/or location in order to mitigate confounding effects (like holidays, major events, etc.) that can impact results when doing a pre/post rollout test.

Switchback tests can go by several names (e.g., time split experiments), and they are often referred to as A/B tests. While this is a helpful comparison for orientation, it’s important to acknowledge that switchback and A/B tests are similar but not the same. Decision models can’t be A/B tested the same way webpages can be due to network effects. Switchback tests allow you to account for these network effects, whereas A/B tests do not.

For example, when you A/B test a webpage by serving up different content to users, the experience a user has with Page A does not affect the experience another user has with Page B. However, if you tried to A/B test delivery assignments to drivers — you simply can’t. You can’t assign the same order to two different drivers as a test for comparison. There isn’t a way to isolate treatment and control within a single unit of time or location using traditional A/B testing. That’s where switchback testing comes in.

Let’s explore this type of testing a bit further.

Imagine you work at a farm share company that delivers fresh produce (carrots, onions, beets, apples) and dairy items (cheese, ice cream, milk) from local farms to customers’ homes. Your company recently invested in upgrading the entire vehicle fleet to be cold-chain ready. Since all vehicles are capable of handling temperature-sensitive items, the business is ready to remove business logic that was relevant to the previous hybrid fleet.

Before the fleet upgrade, your farm share handled temperature-sensitive items last-in-first-out (LIFO). This meant that if a cold item such as ice cream was picked up, a driver had to immediately drop the ice cream off to avoid a sad melty mess. This LIFO logic helped with product integrity and customer satisfaction, but it also introduced inefficiencies with route changes and backtracking.

After the fleet upgrade, the team wants to remove this constraint since all vehicles are capable of transporting cold items for longer with refrigeration. Previous tests using historical inputs, such as batch experiments (ad-hoc tests used to compare one or more models against offline or historical inputs [1]) and acceptance tests (tests with pre-defined pass/fail metrics used to compare the current model with a candidate model against offline or historical inputs before ‘accepting’ the new model [2]), have indicated that vehicle time on road and unassigned stops decrease for the candidate model compared to the production model that has the LIFO constraint. You’ve run a shadow test (an online test in which one or more candidate models is run in parallel to the current model in production but “in the shadows”, not impacting decisions [3]) to ensure model stability under production conditions. Now you want to let your candidate model have a go at making decisions for your production systems and compare the results to your production model.

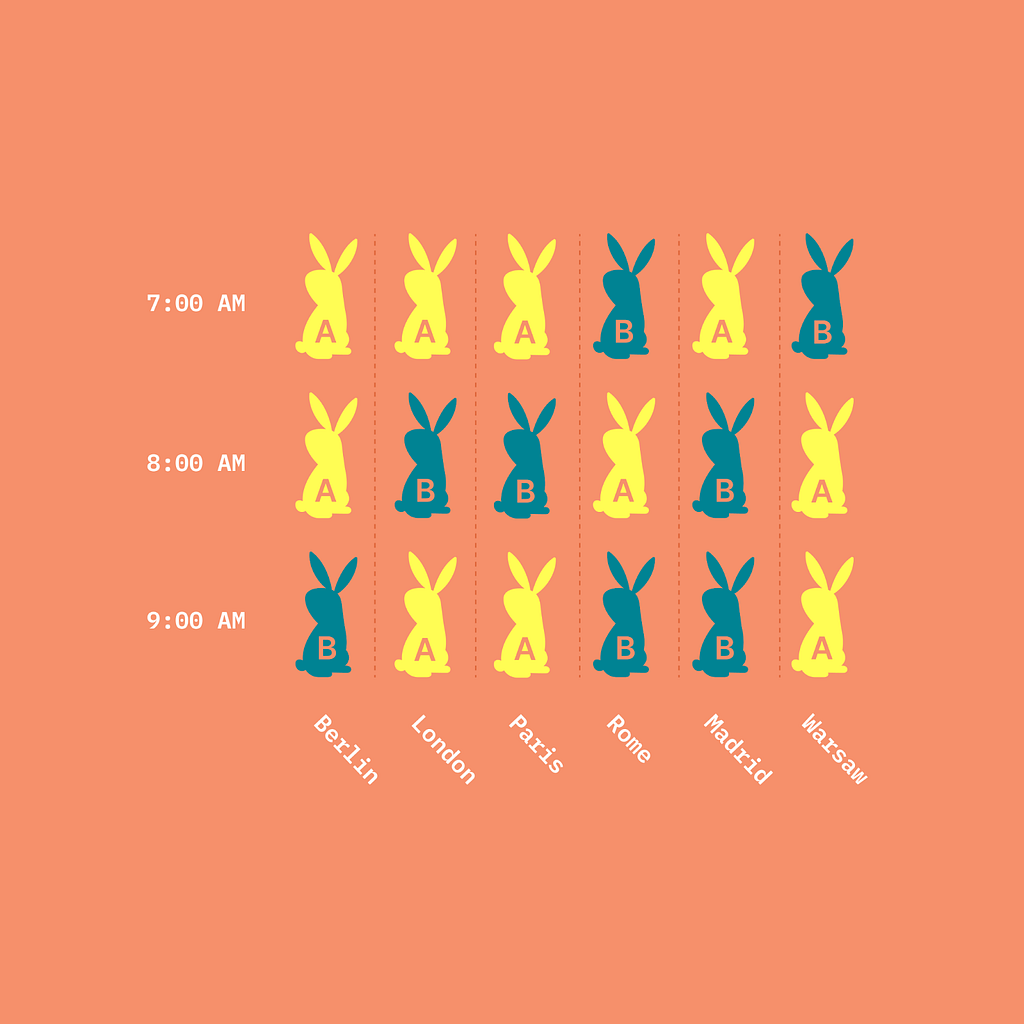

For this test, you decide to randomize based on time (every 1 hour) in two cities: Denver and New York City. Here’s an example of the experimental units for one city and which treatment was applied to them.
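A randomization like this could be sketched as follows. This is a simplified illustration and not Nextmv’s implementation: the function name, the treatment labels, the start date, and the choice of an independent coin flip per city-hour unit are all assumptions made for the example.

```python
import random
from datetime import datetime, timedelta

TREATMENTS = ("production", "candidate")  # illustrative labels


def assign_units(cities, start, hours, seed):
    """Randomly assign a treatment to every (city, hour) experimental unit."""
    rng = random.Random(seed)  # seeded so the plan can be reproduced
    assignments = {}
    for city in cities:
        for hour in range(hours):
            unit_start = start + timedelta(hours=hour)
            assignments[(city, unit_start)] = rng.choice(TREATMENTS)
    return assignments


plan = assign_units(
    cities=["Denver", "New York City"],
    start=datetime(2024, 1, 1, 0, 0),  # hypothetical start of the test
    hours=24,
    seed=7,
)
for (city, unit_start), treatment in list(plan.items())[:3]:
    print(city, unit_start.isoformat(), treatment)
```

Storing the plan keyed by city and window start makes it straightforward to look up later which model was responsible for a given decision.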

After 4 weeks of testing, you find that your candidate model outperforms the production model by consistently having lower time on road, fewer unassigned stops, and happier drivers because they weren’t zigzagging across town to accommodate the LIFO constraint. With these results, you work with the team to fully roll out the new model (without the LIFO constraint) to both regions.

Switchback tests build understanding and confidence in the behavioral impacts of model changes when there are network effects in play. Because they use online data and production conditions in a statistically sound way, switchback tests give insight into how a new model’s decision making impacts the real world in a measured way rather than just “shipping it” wholesale to prod and hoping for the best. Switchback testing is the most robust form of testing to understand how a candidate model will perform in the real world.

This type of understanding is something you can’t get from shadow tests. For example, if you run a candidate model that changes an objective function in shadow mode, all of your KPIs might look good. But if you run that same model as a switchback test, you might see that delivery drivers reject orders at a higher rate compared to the baseline model. There are just behaviors and outcomes you can’t always anticipate without running a candidate model in production in a way that lets you observe the model making operational decisions.

Additionally, switchback tests are especially relevant for supply and demand problems in the routing space, such as last-mile delivery and dispatch. As described earlier, standard A/B testing techniques simply aren’t appropriate under these conditions because of network effects they can’t account for.

There’s a quote from the Principles of Chaos Engineering, “Chaos strongly prefers to experiment directly on production traffic” [4]. Switchback testing (and shadow testing) are made for facing this type of chaos. As mentioned in the section before: there comes a point when it’s time to see how a candidate model makes decisions that impact real-world operations. That’s when you need switchback testing.

That said, it doesn’t make sense for the first round of tests on a candidate model to be switchback tests. You’ll want to run a series of historical tests such as batch, scenario, and acceptance tests, and then progress to shadow testing on production data. Switchback testing is often a final gate before committing to fully deploying a candidate model in place of an existing production model.

To perform switchback tests, teams often build out the infra, randomization framework, and analysis tooling from scratch. While the benefits of switchback testing are great, the cost to implement and maintain it can be high and often requires dedicated data science and data engineering involvement. As a result, this type of testing is not as common in the decision science space.

Once the infra is in place and switchback tests are live, it becomes a data wrangling exercise to weave together the information to understand what treatment was applied at what time and reconcile all of that data to do a more formal analysis of the results.
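The reconciliation step can be sketched as a lookup from each observation back to the treatment active in its time window. The example below is a bare-bones illustration with made-up names and numbers; it assumes hourly units keyed by city and window start, and it only computes per-treatment means, leaving out the statistical analysis a real switchback test would require.

```python
from collections import defaultdict
from datetime import datetime


def mean_by_treatment(assignments, observations):
    """Average a metric per treatment by mapping observations to hourly units."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for city, timestamp, value in observations:
        unit_start = timestamp.replace(minute=0, second=0, microsecond=0)
        treatment = assignments.get((city, unit_start))
        if treatment is None:
            continue  # observation falls outside the experiment
        totals[treatment] += value
        counts[treatment] += 1
    return {treatment: totals[treatment] / counts[treatment] for treatment in totals}


# Hypothetical assignment plan and time-on-road observations (in minutes).
assignments = {
    ("Denver", datetime(2024, 1, 1, 9)): "production",
    ("Denver", datetime(2024, 1, 1, 10)): "candidate",
}
observations = [
    ("Denver", datetime(2024, 1, 1, 9, 15), 40.0),
    ("Denver", datetime(2024, 1, 1, 9, 50), 50.0),
    ("Denver", datetime(2024, 1, 1, 10, 5), 30.0),
    ("Denver", datetime(2024, 1, 1, 11, 30), 99.0),  # no unit assigned
]
print(mean_by_treatment(assignments, observations))
```

Observations that straddle a window boundary, such as a route that starts under one model and ends under the other, need an explicit attribution rule that this sketch does not cover.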

A few good points of reference to dive into include blog posts on the topic from DoorDash like this one (they write about it quite a bit) [5], in addition to this Towards Data Science post from a Databricks solutions engineer [6], which references a useful research paper out of MIT and Harvard [7] that’s worth a read as well.

Switchback testing for decision models is similar to A/B testing, but allows teams to account for network effects. Switchback testing is a critical piece of the DecisionOps workflow because it runs a candidate model using production data with real-world effects. We’re continuing to build out the testing experience at Nextmv — and we’d like your input.

If you’re interested in more content on decision model testing and other DecisionOps topics, subscribe to the Nextmv blog.

The author works for Nextmv as Head of Product.

[1] R. Gough, What are batch experiments for optimization models? (2023), Nextmv

[2] T. Bogich, What’s next for acceptance testing? (2023), Nextmv

[3] T. Bogich, What is shadow testing for optimization models and decision algorithms? (2023), Nextmv

[4] Principles of Chaos Engineering (2019), Principles of Chaos

[5] C. Sneider, Y. Tang, Experiment Rigor for Switchback Experiment Analysis (2019), DoorDash Engineering

[6] M. Berk, How to Optimize your Switchback A/B Test Configuration (2021), Towards Data Science

[7] I. Bojinov, D. Simchi-Levi, J. Zhao, Design and Analysis of Switchback Experiments (2020), arXiv

What is switchback testing for decision models? was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Background

As a recent Grinnell College alum, I’ve closely observed and been impacted by significant shifts in the academic landscape. When I graduated, the acceptance rate at Grinnell had plummeted by 15% from the time I entered, paralleled by a sharp rise in tuition fees. This pattern wasn’t unique to my alma mater; friends from various colleges echoed similar experiences.

This got me thinking: Is this a widespread trend across U.S. colleges? My theory was twofold: firstly, the advent of online applications might have simplified the process of applying to multiple colleges, thereby increasing the applicant pool and reducing acceptance rates. Secondly, an article from the Migration Policy Institute highlighted a doubling in the number of international students in the U.S. from 2000 to 2020 (from 500k to 1 million), potentially intensifying competition. Alongside, I was curious about the tuition fee trends from 2001 to 2022. My aim here is to unravel these patterns through data visualization. For the following analysis, all images, unless otherwise noted, are by the author!

Dataset

The dataset I utilized encompasses a range of data about U.S. colleges from 2001 to 2022, covering aspects like institution type, yearly acceptance rates, state location, and tuition fees. Sourced from the College Scorecard, the original dataset was vast, with over 3,000 columns and 10,000 rows. I meticulously selected pertinent columns for a focused analysis, resulting in a refined dataset available on Kaggle. To ensure relevance and completeness, I concentrated on 4-year colleges featured in the U.S. News college rankings, drawing the list from here.

Change in Acceptance Rates Over the Years

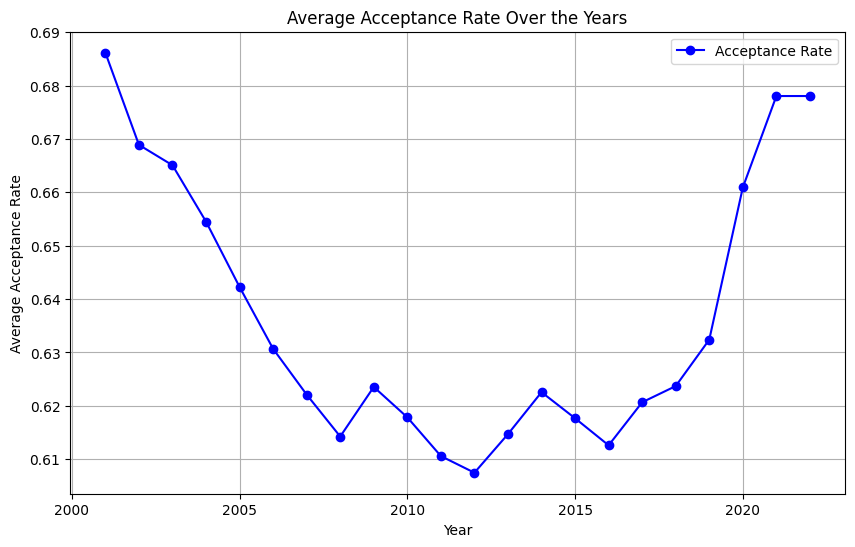

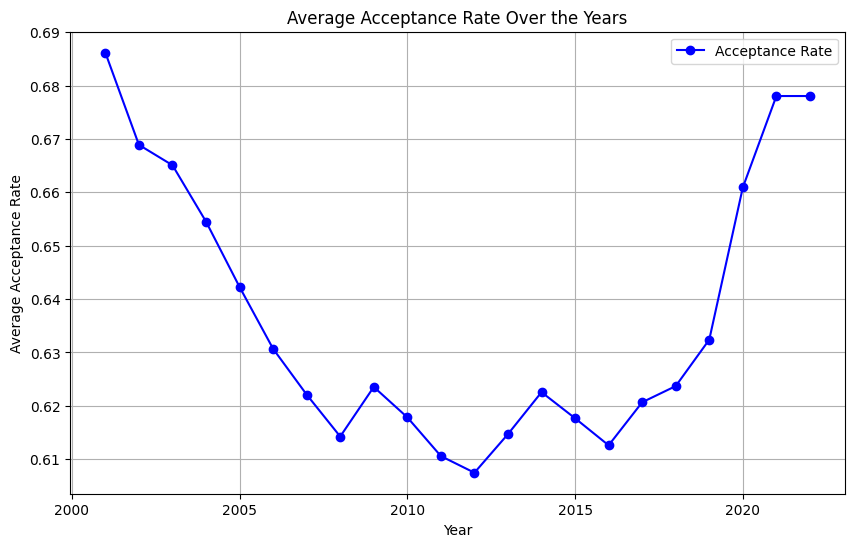

Let’s dive into the evolution of college acceptance rates over the past two decades. Initially, I suspected that I would observe a steady decline. Figure 1 illustrates this trajectory from 2001 to 2022. A consistent drop is evident until 2008, followed by fluctuations leading up to a notable increase around 2020–2021, likely a repercussion of the COVID-19 pandemic influencing gap year decisions and enrollment strategies.

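import matplotlib.pyplot as plt

# Assumes df_ranked is a pandas DataFrame of the ranked colleges, loaded earlier

# Average acceptance rate across all ranked colleges, per year
avg_acp_ranked = df_ranked.groupby("year")["ADM_RATE_ALL"].mean().reset_index()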

plt.figure(figsize=(10, 6)) # Set the figure size

plt.plot(avg_acp_ranked['year'], avg_acp_ranked['ADM_RATE_ALL'], marker='o', linestyle='-', color='b', label='Acceptance Rate')

plt.title('Average Acceptance Rate Over the Years') # Set the title

plt.xlabel('Year') # Label for the x-axis

plt.ylabel('Average Acceptance Rate') # Label for the y-axis

plt.grid(True) # Show grid

# Show a legend

plt.legend()

# Display the plot

plt.show()

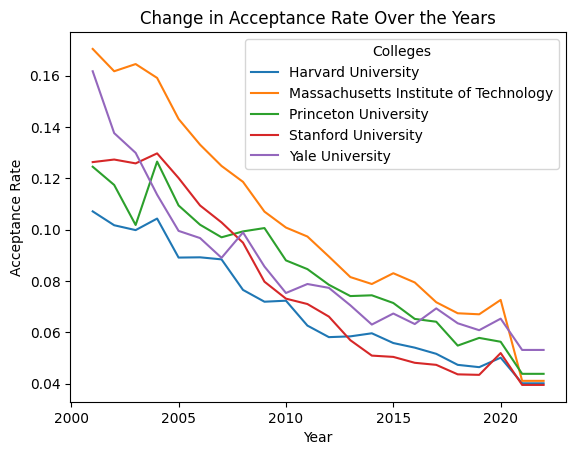

However, the overall drop wasn’t as steep as my experience at Grinnell suggested. In contrast, when we zoom into the acceptance rates of more prestigious universities (Figure 2), a steady decline becomes apparent. This led me to categorize colleges into three groups based on their 2022 admission rates (Top 10% competitive, top 50%, and others) and analyze the trends within these segments.

pres_colleges = ["Princeton University", "Massachusetts Institute of Technology", "Yale University", "Harvard University", "Stanford University"]

pres_df = df[df['INSTNM'].isin(pres_colleges)]

pivot_pres = pres_df.pivot_table(index="INSTNM", columns="year", values="ADM_RATE_ALL")

pivot_pres.T.plot(linestyle='-')

plt.title('Change in Acceptance Rate Over the Years')

plt.xlabel('Year')

plt.ylabel('Acceptance Rate')

plt.legend(title='Colleges')

plt.show()

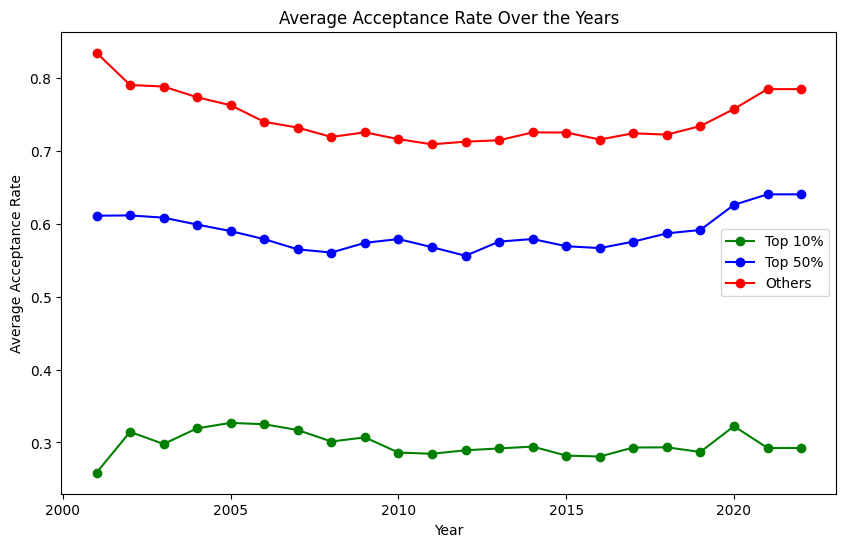

Figure 3 unveils some surprising insights. Except for the least competitive 50%, colleges have generally seen an increase in acceptance rates since 2001. The fluctuations post-2008 across all but the top 10% of colleges could be attributed to economic factors like the recession. Notably, competitive colleges didn’t experience the pandemic-induced spike in acceptance rates seen elsewhere.

top_10_threshold_ranked = df_ranked[df_ranked["year"] == 2001]["ADM_RATE_ALL"].quantile(0.1)

top_50_threshold_ranked = df_ranked[df_ranked["year"] == 2001]["ADM_RATE_ALL"].quantile(0.5)

top_10 = df_ranked[(df_ranked["year"]==2001) & (df_ranked["ADM_RATE_ALL"] <= top_10_threshold_ranked)]["UNITID"]

top_50 = df_ranked[(df_ranked["year"]==2001) & (df_ranked["ADM_RATE_ALL"] > top_10_threshold_ranked) & (df_ranked["ADM_RATE_ALL"] <= top_50_threshold_ranked)]["UNITID"]

others = df_ranked[(df_ranked["year"]==2001) & (df_ranked["ADM_RATE_ALL"] > top_50_threshold_ranked)]["UNITID"]

top_10_df = df_ranked[df_ranked["UNITID"].isin(top_10)]

top50_df = df_ranked[df_ranked["UNITID"].isin(top_50)]

others_df = df_ranked[df_ranked["UNITID"].isin(others)]

avg_acp_top10 = top_10_df.groupby("year")["ADM_RATE_ALL"].mean().reset_index()

avg_acp_others = others_df.groupby("year")["ADM_RATE_ALL"].mean().reset_index()

avg_acp_top50 = top50_df.groupby("year")["ADM_RATE_ALL"].mean().reset_index()

plt.figure(figsize=(10, 6)) # Set the figure size

plt.plot(avg_acp_top10['year'], avg_acp_top10['ADM_RATE_ALL'], marker='o', linestyle='-', color='g', label='Top 10%')

plt.plot(avg_acp_top50['year'], avg_acp_top50['ADM_RATE_ALL'], marker='o', linestyle='-', color='b', label='Top 50%')

plt.plot(avg_acp_others['year'], avg_acp_others['ADM_RATE_ALL'], marker='o', linestyle='-', color='r', label='Others')

plt.title('Average Acceptance Rate Over the Years') # Set the title

plt.xlabel('Year') # Label for the x-axis

plt.ylabel('Average Acceptance Rate') # Label for the y-axis

# Show a legend

plt.legend()

# Display the plot

plt.show()

One finding particularly intrigued me: when considering the top 10% of colleges, their acceptance rates hadn’t decreased notably over the years. This led me to question whether the shift in competitiveness was widespread or if it was a case of some colleges becoming significantly harder or easier to get into. The steady decrease in acceptance rates at prestigious institutions (shown in Figure 2) hinted at the latter.

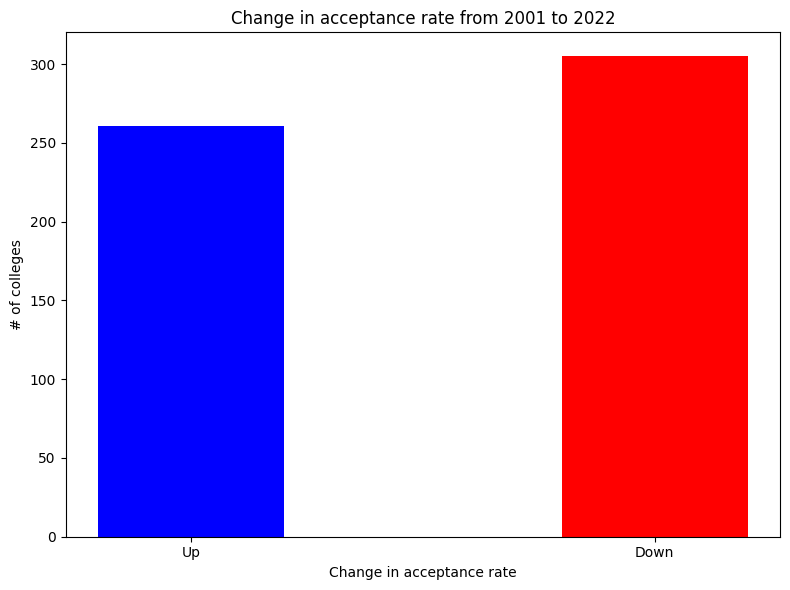

To get a clearer picture, I visualized the changes in college competitiveness from 2001 to 2022. Figure 4 reveals a surprising trend: about half of the colleges actually became less competitive, contrary to my initial expectations.

pivot_pres_ranked = df_ranked.pivot_table(index="INSTNM", columns="year", values="ADM_RATE_ALL")

pivot_pres_ranked_down = pivot_pres_ranked[pivot_pres_ranked[2001] >= pivot_pres_ranked[2022]]

len(pivot_pres_ranked_down)

pivot_pres_ranked_up = pivot_pres_ranked[pivot_pres_ranked[2001] < pivot_pres_ranked[2022]]

len(pivot_pres_ranked_up)

categories = ["Up", "Down"]

values = [len(pivot_pres_ranked_up), len(pivot_pres_ranked_down)]

plt.figure(figsize=(8, 6))

plt.bar(categories, values, width=0.4, align='center', color=["blue", "red"])

plt.xlabel('Change in acceptance rate')

plt.ylabel('# of colleges')

plt.title('Change in acceptance rate from 2001 to 2022')

# Show the chart

plt.tight_layout()

plt.show()

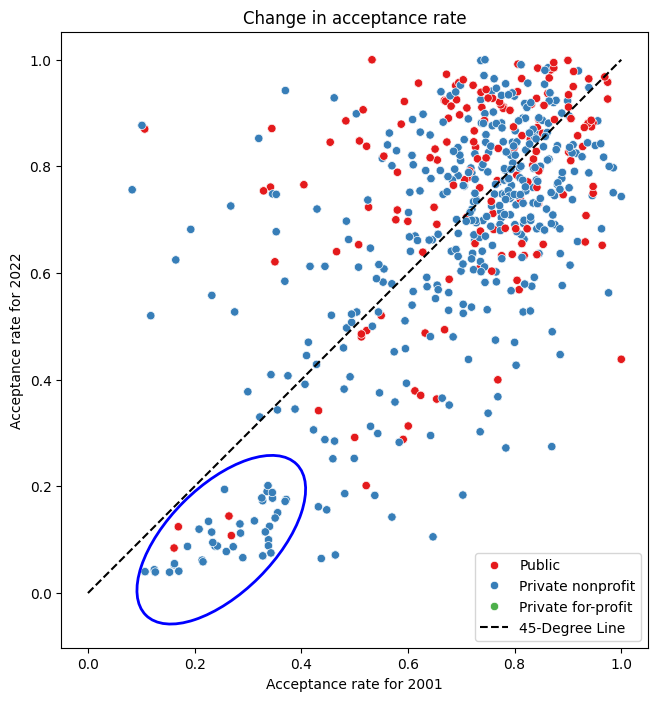

This prompted me to explore possible factors influencing these shifts. My hypothesis, reinforced by Figure 2, was that already selective colleges became even more so over time. Figure 5 compares acceptance rates in 2001 and 2022.

The 45-degree line delineates colleges that became more or less competitive. Those below the line saw reduced acceptance rates. A noticeable cluster in the lower-left quadrant represents selective colleges that became increasingly exclusive. This trend is underscored by the observation that colleges with initially low acceptance rates (left side of the plot) tend to fall below this dividing line, while those on the right are more evenly distributed.
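One way to put a number on that visual impression is to compute, for each side of the plot, the share of colleges that sit below the 45-degree line. The sketch below is a minimal, dependency-free illustration: the `(rate_2001, rate_2022)` pairs and the 0.5 split point are invented assumptions, not values from the dataset.

```python
def share_below_line(points):
    """Fraction of (rate_2001, rate_2022) points that lie under the line y = x."""
    if not points:
        raise ValueError("need at least one point")
    below = sum(1 for rate_2001, rate_2022 in points if rate_2022 < rate_2001)
    return below / len(points)


# Hypothetical (rate_2001, rate_2022) pairs, invented for illustration only
points = [
    (0.15, 0.05), (0.20, 0.08), (0.30, 0.12), (0.40, 0.45),  # left side of the plot
    (0.60, 0.70), (0.70, 0.55), (0.80, 0.85), (0.90, 0.80),  # right side of the plot
]
SPLIT = 0.5  # assumed boundary between the "left" and "right" of the plot

left = [p for p in points if p[0] < SPLIT]
right = [p for p in points if p[0] >= SPLIT]

print(f"left of {SPLIT}: {share_below_line(left):.0%} below the line")
print(f"right of {SPLIT}: {share_below_line(right):.0%} below the line")
```

A markedly higher share on the left than on the right would be consistent with selective colleges becoming more exclusive, though the split point is a judgment call.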

Furthermore, it’s interesting to note that since 2001, the most selective colleges have been predominantly private. To test whether the changes in acceptance rates differed significantly between colleges in the top and bottom halves by selectivity, I conducted an independent two-sample t-test (null hypothesis: θ_top = θ_bottom). The results showed a statistically significant difference.
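For readers who want to see what such a test computes, here is a minimal sketch that derives the pooled-variance t statistic by hand with only the standard library. It assumes equal variances in both groups (which is also the default of `scipy.stats.ttest_ind`), and the two samples are invented numbers rather than the data behind the result above.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for a two-sample t-test with pooled variance."""
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two observations")
    dof = n_a + n_b - 2
    # Weighted average of the two sample variances (equal-variance assumption)
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / dof
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / std_err, dof


# Hypothetical changes in acceptance rate (2022 minus 2001), invented for illustration
top_half_change = [-0.20, -0.15, -0.25, -0.10, -0.30]
bottom_half_change = [0.05, -0.02, 0.10, 0.00, 0.07]

t_stat, dof = pooled_t_statistic(top_half_change, bottom_half_change)
print(f"t = {t_stat:.2f}, degrees of freedom = {dof}")
```

Turning the statistic into a p-value requires the t distribution with `dof` degrees of freedom, which a statistics library such as SciPy provides.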

import seaborn as sns

from matplotlib.patches import Ellipse

pivot_region = pd.merge(pivot_pres_ranked[[2001, 2022]], df_ranked[["REGION","INSTNM", "UNIVERSITY", "CONTROL"]], on="INSTNM", how="right")

plt.figure(figsize=(8, 8))

sns.scatterplot(data=pivot_region, x=2001, y=2022, hue='CONTROL', palette='Set1', legend='full')

plt.xlabel('Acceptance rate for 2001')

plt.ylabel('Acceptance rate for 2022')

plt.title('Change in acceptance rate')

x_line = np.linspace(0, max(pivot_region[2001]), 100) # X-values for the line

y_line = x_line # Y-values for the line (slope = 1)

plt.plot(x_line, y_line, label='45-Degree Line', color='black', linestyle='--')

# Define ellipse parameters (center, width, height, angle)

ellipse_center = (0.25, 0.1) # Center of the ellipse

ellipse_width = 0.4 # Width of the ellipse

ellipse_height = 0.2 # Height of the ellipse

ellipse_angle = 45 # Rotation angle in degrees

# Create an Ellipse patch

ellipse = Ellipse(

xy=ellipse_center,

width=ellipse_width,

height=ellipse_height,

angle=ellipse_angle,

edgecolor='b', # Edge color of the ellipse

facecolor='none', # No fill color (transparent)

linewidth=2 # Line width of the ellipse border

)

# Add the ellipse to the current axes

plt.gca().add_patch(ellipse)

plt.legend()

plt.gca().set_aspect('equal')

plt.show()

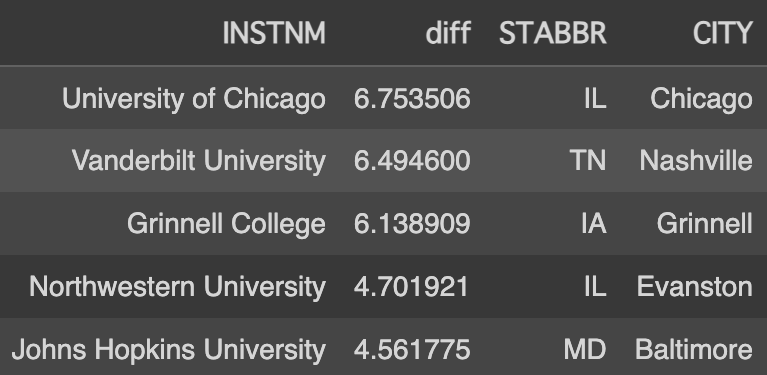

Another aspect that piqued my curiosity was regional differences. Figure 6 lists the five colleges with the largest decreases in acceptance rates (measured as the 2001 acceptance rate divided by the 2022 rate, so a higher ratio means a steeper drop).

It was astonishing to see how high the acceptance rate for the University of Chicago was two decades ago — half of the applicants were admitted then!

This also helped me understand my initial bias towards a general decrease in acceptance rates; notably, Grinnell College, my alma mater, is among these top 5 with a significant drop in acceptance rate.

Interestingly, three of the top five colleges are located in the Midwest. My theory is that with the advent of the internet, these institutions, not as historically renowned as those on the West and East Coasts, have gained more visibility both domestically and internationally.

pivot_pres_ranked["diff"] = pivot_pres_ranked[2001] / pivot_pres_ranked[2022]

tmp = pivot_pres_ranked.reset_index()

tmp = tmp.merge(df_ranked[df_ranked["year"]==2022][["INSTNM", "STABBR", "CITY"]],on="INSTNM")

tmp.sort_values(by="diff",ascending=False)[["INSTNM", "diff", "STABBR", "CITY"]].head(5)

In the following sections, we’ll explore tuition trends and their correlation with these acceptance rate changes, delving deeper into the dynamics shaping modern U.S. higher education.

Change in Tuition Over the Years

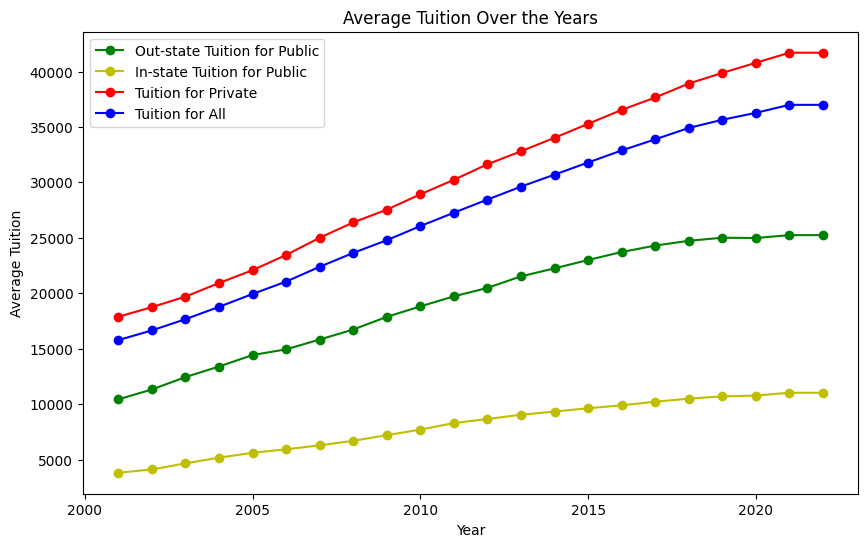

Analyzing tuition trends over the past two decades reveals some eye-opening patterns. Figure 7 presents the average tuition over the years across different categories: private, public in-state, public out-of-state, and overall. A steady climb in tuition fees is evident in all categories.

Notably, private universities exhibit a higher increase compared to public ones, and the rise in public in-state tuition appears relatively modest. However, it’s striking that the overall average tuition has more than doubled since 2001, soaring from $15k to $35k.
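To put the jump from $15k to $35k in annual terms, the following back-of-the-envelope sketch computes the compound annual growth rate implied by those two rounded figures over the 21 years between 2001 and 2022. The helper name `compound_annual_growth` is made up for illustration, and the result is only as precise as the rounded inputs.

```python
def compound_annual_growth(start_value, end_value, periods):
    """Constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Rounded averages quoted in the text: $15k in 2001 and $35k in 2022
growth = compound_annual_growth(15_000, 35_000, 2022 - 2001)
print(f"Implied average growth per year: {growth:.1%}")
```

With these inputs the implied growth works out to roughly 4% per year, compounded.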

avg_tuition = df_ranked.groupby('year')["TUITIONFEE_OUT"].mean().reset_index()

avg_tuition_private = df_ranked[df_ranked['CONTROL'] != "Public"].groupby('year')["TUITIONFEE_OUT"].mean().reset_index()

avg_tuition_public_out = df_ranked[df_ranked['CONTROL'] == "Public"].groupby('year')["TUITIONFEE_OUT"].mean().reset_index()

avg_tuition_public_in = df_ranked[df_ranked['CONTROL'] == "Public"].groupby('year')["TUITIONFEE_IN"].mean().reset_index()

plt.figure(figsize=(10, 6)) # Set the figure size (optional)

plt.plot(avg_tuition_public_out['year'], avg_tuition_public_out['TUITIONFEE_OUT'], marker='o', linestyle='-', color='g', label='Out-state Tuition for Public')

plt.plot(avg_tuition_public_in['year'], avg_tuition_public_in['TUITIONFEE_IN'], marker='o', linestyle='-', color='y', label='In-state Tuition for Public')

plt.plot(avg_tuition_private['year'], avg_tuition_private['TUITIONFEE_OUT'], marker='o', linestyle='-', color='r', label='Tuition for Private')

plt.plot(avg_tuition['year'], avg_tuition['TUITIONFEE_OUT'], marker='o', linestyle='-', color='b', label='Tuition for All')

plt.title('Average Tuition Over the Years') # Set the title

plt.xlabel('Year') # Label for the x-axis

plt.ylabel('Average Tuition') # Label for the y-axis

# Show a legend

plt.legend()

# Display the plot

plt.show()

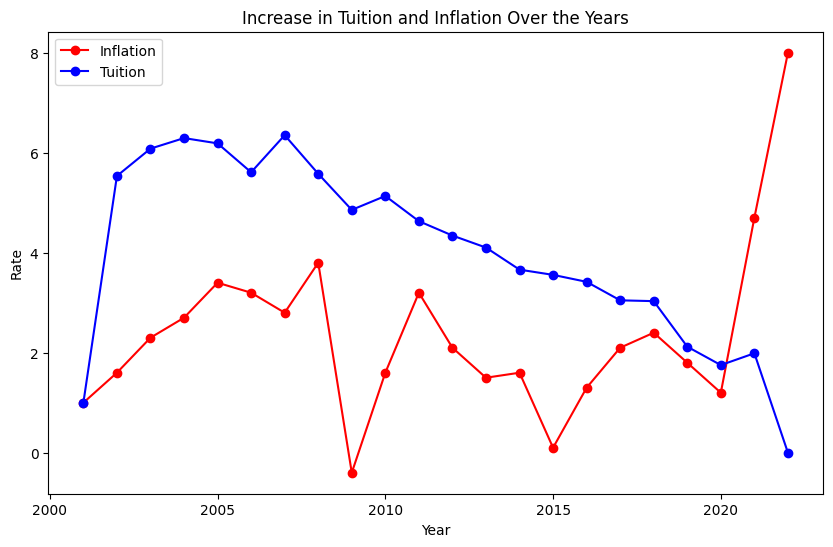

One might argue that this increase is in line with general economic inflation, but a comparison with inflation rates paints a different picture (Figure 8). Except for the last two years, when inflation spiked due to the pandemic, tuition hikes consistently outpaced inflation.

Although the pattern of tuition increases mirrors that of inflation, it’s important to note that unlike inflation, which dipped into negative territory in 2009, tuition increases never fell below zero. Though the rate of increase has been slowing, the hope is for it to eventually stabilize and halt the upward trajectory of tuition costs.
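The comparison described above boils down to two steps: compute the year-over-year percentage change in tuition, then check it against the inflation rate for the same year. Below is a small, dependency-free sketch of that logic. All numbers are invented for illustration and do not come from the dataset or from official inflation figures.

```python
def pct_changes(values):
    """Year-over-year percentage change; the first year has no predecessor."""
    return [100 * (curr - prev) / prev for prev, curr in zip(values, values[1:])]


# Hypothetical average tuition (USD) and inflation rates (%), invented for illustration
years = [2019, 2020, 2021, 2022]
tuition = [20000, 21000, 21840, 22495.2]
inflation = {2020: 1.0, 2021: 5.0, 2022: 8.0}

tuition_growth = dict(zip(years[1:], pct_changes(tuition)))

# Years in which tuition grew faster than prices in general
outpaced = [year for year in years[1:] if tuition_growth[year] > inflation[year]]
# Whether tuition growth ever dipped below zero
never_negative = min(tuition_growth.values()) > 0

print(outpaced, never_negative)
```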

avg_tuition['Inflation tuition'] = avg_tuition['TUITIONFEE_OUT'].pct_change() * 100

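# pct_change() leaves the first year as NaN (there is no prior year); fill it with a placeholder of 1

avg_tuition.iloc[0,2] = 1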

avg_tuition

plt.figure(figsize=(10, 6)) # Set the figure size

plt.plot(df_inflation['year'], df_inflation['Inflation rate'], marker='o', linestyle='-', color='r', label='Inflation')

plt.plot(avg_tuition['year'],avg_tuition['Inflation tuition'], marker='o', linestyle='-', color='b', label='Tuition')

plt.title('Increase in Tuition and Inflation Over the Years') # Set the title

plt.xlabel('Year') # Label for the x-axis

plt.ylabel('Rate') # Label for the y-axis

# Show a legend

plt.legend()

# Display the plot

plt.show()

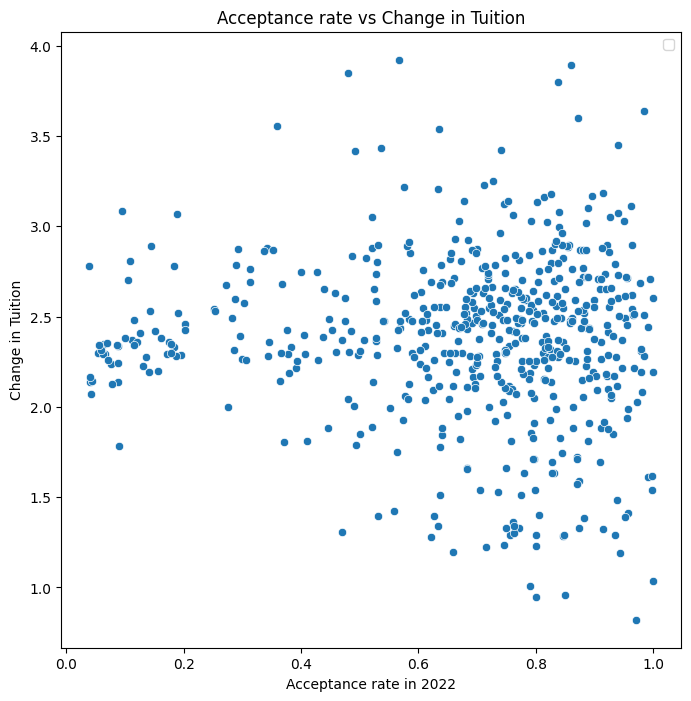

In exploring the characteristics of colleges that have raised tuition fees more significantly, I hypothesized that more selective colleges might exhibit higher increases due to greater demand. Figure 9 investigates this theory. Contrary to expectations, the data does not show a clear trend correlating selectivity with tuition increase. The change in tuition seems to hover around an average of 2.2 times across various acceptance rates. However, it’s noteworthy that tuition at almost all selective universities has more than doubled, whereas the distribution for other universities is more varied. This indicates a lower standard deviation in tuition changes at selective schools compared to their less selective counterparts.
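The claim about spread can be checked directly by comparing the standard deviation of the tuition ratio within each selectivity group. The sketch below shows one way to do that with the standard library. The 0.25 acceptance-rate cutoff and every data point are assumptions made up for illustration, not values taken from Figure 9.

```python
from statistics import mean, stdev

# Hypothetical (acceptance_rate_2022, tuition_2022 / tuition_2001) pairs, invented
colleges = [
    (0.05, 2.2), (0.08, 2.3), (0.12, 2.1), (0.15, 2.2),  # selective
    (0.55, 1.4), (0.70, 3.0), (0.80, 2.0), (0.90, 2.6),  # less selective
]
SELECTIVE_CUTOFF = 0.25  # assumed threshold for calling a college "selective"


def spread_by_group(rows, cutoff):
    """Return {group: (mean ratio, standard deviation of ratio)}."""
    selective = [ratio for rate, ratio in rows if rate <= cutoff]
    other = [ratio for rate, ratio in rows if rate > cutoff]
    return {
        "selective": (mean(selective), stdev(selective)),
        "other": (mean(other), stdev(other)),
    }


summary = spread_by_group(colleges, SELECTIVE_CUTOFF)
for group, (avg, sd) in summary.items():
    print(f"{group}: mean ratio {avg:.2f}, std dev {sd:.2f}")
```

In this toy data both groups have a similar mean, but the selective group is far more tightly clustered, which is the pattern described in the paragraph above.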

tuition_pivot = df_ranked.pivot_table(index="INSTNM", columns="year", values="TUITIONFEE_OUT")

tuition_pivot["TUI_CHANGE"] = tuition_pivot[2022]/tuition_pivot[2001]

tuition_pivot = tuition_pivot[tuition_pivot["TUI_CHANGE"] < 200]

print(tuition_pivot["TUI_CHANGE"].isnull().sum())

tmp = pd.merge(tuition_pivot["TUI_CHANGE"], df_ranked[df_ranked["year"]==2022][["ADM_RATE_ALL", "INSTNM", "REGION", "STABBR", "CONTROL"]], on="INSTNM", how="right")

plt.figure(figsize=(8, 8))

# No hue variable is mapped, so no palette or legend is needed

sns.scatterplot(data=tmp, x="ADM_RATE_ALL", y="TUI_CHANGE")

plt.xlabel('Acceptance rate in 2022')

plt.ylabel('Change in Tuition')

plt.title('Acceptance rate vs Change in Tuition')

plt.show()

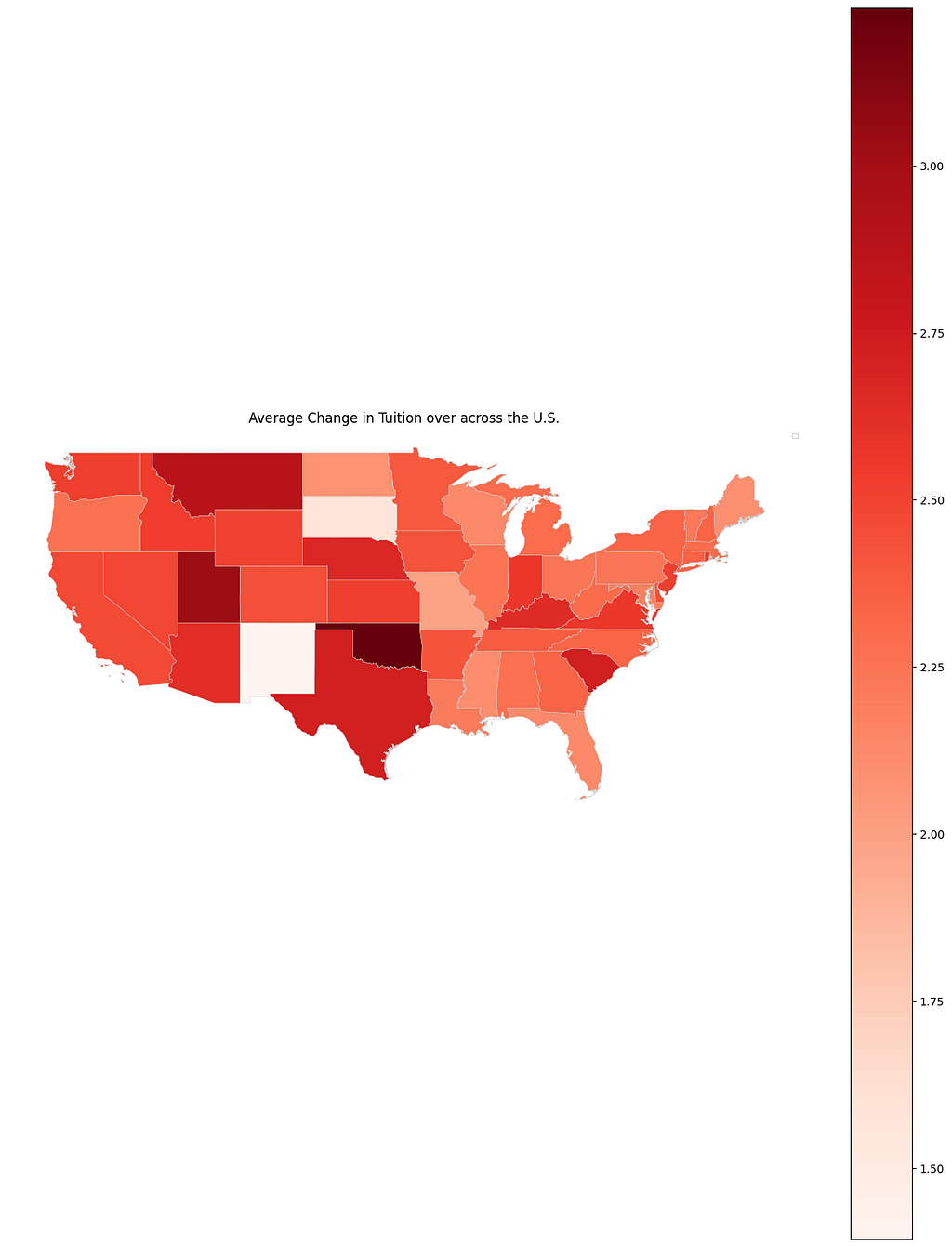

After examining the relationship between acceptance rates and tuition hikes, I turned my attention to regional factors. I hypothesized that schools on the West Coast, influenced by the economic surge of tech companies, might have experienced significant tuition increases. To test this, I visualized the tuition growth for each state in Figure 10.

Contrary to my expectations, the West Coast wasn’t the region with the highest rise in tuition. Instead, states like Oklahoma and Utah saw substantial increases, while South Dakota and New Mexico had the smallest hikes. While there are exceptions, the overall trend suggests that tuition increases in the western states generally outpace those in the eastern states.

import geopandas as gpd

sta_tui = tmp.groupby("STABBR")["TUI_CHANGE"].mean()

sta_tui = sta_tui.reset_index()

shapefile_path = "path_to_shape_file"

gdf = gpd.read_file(shapefile_path)

sta_tui["STUSPS"] = sta_tui["STABBR"]

merged_data = gdf.merge(sta_tui, on="STUSPS", how="left")

final = merged_data.drop([42, 44, 45, 38, 13])

# Plot the choropleth map

fig, ax = plt.subplots(1, 1, figsize=(16, 20))

final.plot(column='TUI_CHANGE', cmap="Reds", ax=ax, linewidth=0.3, edgecolor='0.8', legend=True)

ax.set_title('Average Change in Tuition across the U.S.')

plt.axis('off') # Turn off axis

plt.legend(fontsize=6)

plt.show()

Future Directions and Limitations

While this analysis provides insights based on single-year comparisons for changes in acceptance rates and tuition, a more comprehensive view could be obtained from a 5-year average comparison. In my preliminary analysis using this approach, the conclusions were similar.
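As a rough idea of what a 5-year average comparison could look like, the sketch below averages the first and last five years of a series before taking the ratio, so that a single unusual year has less influence. The function names and the acceptance rates are hypothetical, and the ratio follows the same early-over-late convention as the single-year code earlier in the article.

```python
from statistics import mean


def window_mean(rate_by_year, start, end):
    """Mean of the rates recorded for years start..end (inclusive), skipping missing years."""
    values = [rate_by_year[year] for year in range(start, end + 1) if year in rate_by_year]
    if not values:
        raise ValueError(f"no data between {start} and {end}")
    return mean(values)


def windowed_ratio(rate_by_year, first_year=2001, last_year=2022, window=5):
    """Ratio of the early-window average to the late-window average."""
    early = window_mean(rate_by_year, first_year, first_year + window - 1)
    late = window_mean(rate_by_year, last_year - window + 1, last_year)
    return early / late


# Hypothetical acceptance rates for one college, invented for illustration only
rates = {
    2001: 0.60, 2002: 0.48, 2003: 0.47, 2004: 0.45, 2005: 0.50,
    2018: 0.12, 2019: 0.10, 2020: 0.10, 2021: 0.10, 2022: 0.08,
}

single_year = rates[2001] / rates[2022]
five_year = windowed_ratio(rates)
print(f"single-year ratio: {single_year:.2f}, five-year ratio: {five_year:.2f}")
```

In this made-up series the endpoints are both outliers, so the single-year ratio overstates the change relative to the windowed one.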

The dataset used also contains many other attributes like racial proportions, mean SAT scores, and median household income. However, I didn’t utilize these due to missing values in older data. By focusing on more recent years, these additional factors could offer deeper insights. For those interested in further exploration, the dataset is available on Kaggle.

It’s important to note that this analysis is based on colleges ranked by U.S. News, which introduces a certain degree of bias. The trends observed may differ from the overall U.S. college landscape.

For data enthusiasts, my code and methodology are accessible for further exploration. I invite you to delve into it and perhaps uncover new perspectives or validate these findings. Thank you for joining me on this data-driven journey through the changing landscape of U.S. higher education!

[1] Emma Israel and Jeanne Batalova. “International Students in the United States” (January 14, 2021). https://www.migrationpolicy.org/article/international-students-united-states

[2] U.S. Department of Education College Scorecard (last updated October 10, 2023). Public Domain, https://will-stanton.com/creating-a-great-data-science-resume/

[3] Andrew G. Reiter, “U.S. News & World Report Historical Liberal Arts College and University Rankings” http://andyreiter.com/datasets/

Exploring a Two-Decade Trend: College Acceptance Rates and Tuition in the U.S. was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
