China’s new rules force banks to flag transactions with crypto: report

Originally appeared here:

Ethereum price will hit $10k as ETH scarcity narrative ‘strong in practice,’ 1confirmation’s Tomaino says

Originally appeared here:

2 crypto coins set to crush Cardano for gains in Q1 2025

X (formerly known as Twitter) aims to chart a new course this year by blending finance, artificial intelligence, and social media into its ecosystem. On Dec. 31, CEO Linda Yaccarino teased upcoming features to provide users with broader capabilities beyond traditional social media interactions. According to her, 2025 will be a year when the platform’s […]

The post X aims for super app status in 2025 with X Money, X TV and deeper AI integration appeared first on CryptoSlate.

Originally appeared here:

X aims for super app status in 2025 with X Money, X TV and deeper AI integration

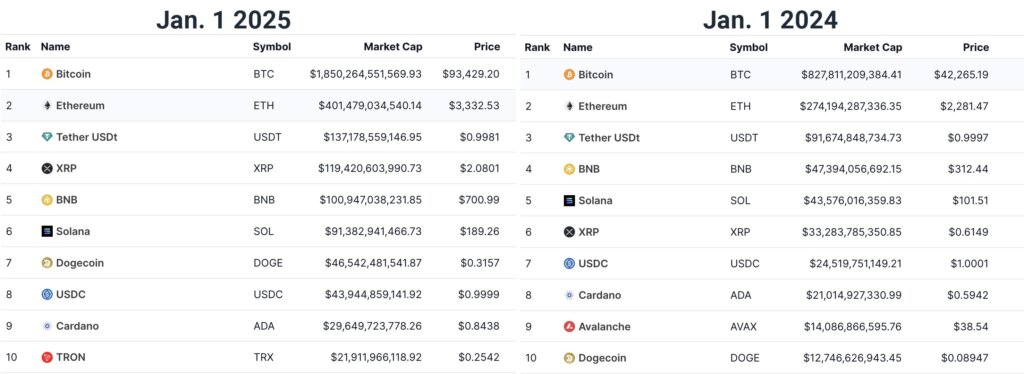

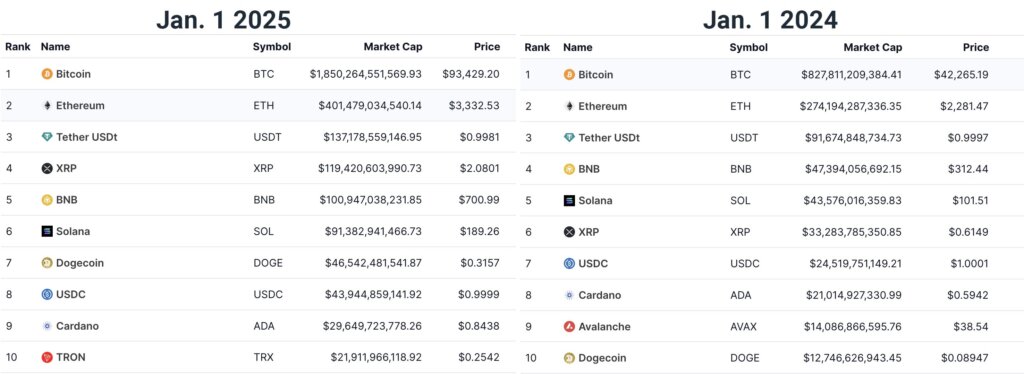

The crypto market ended the year with a combined valuation of $3.26 trillion. 2024 featured shifting momentum across Bitcoin, Ethereum, and other assets, influenced by Bitcoin spot ETF approval, the US election, and evolving crypto regulation. The top 10 coins and tokens on Jan. 1, 2025, include the majority of the same ones present a […]

The post Top 10 cryptocurrency rankings on January 1 2025 vs 2024 sees Avalanche replaced by Tron appeared first on CryptoSlate.

Originally appeared here:

Top 10 cryptocurrency rankings on January 1 2025 vs 2024 sees Avalanche replaced by Tron

The United Kingdom faces mounting challenges in regulating crypto advertising as the Financial Conduct Authority (FCA) grapples with widespread violations, the Financial Times reported on Jan. 1. According to the report, the FCA issued 1,702 alerts against potentially misleading crypto ads between October 2023 and October 2024, yet only 54% resulted in content removal. Despite […]

The post FCA struggles with crypto ads as UK looks to encourage crypto compliance appeared first on CryptoSlate.

Originally appeared here:

FCA struggles with crypto ads as UK looks to encourage crypto compliance

At press time, traders were over-leveraged at $0.339 on the lower side and $0.361 on the upper side.

XLM’s Open Interest (OI) had surged by 18% in the past 24 hours.

Stellar [XLM] seemed t

The post Can Stellar [XLM] hit $0.60? Assessing key levels appeared first on AMBCrypto.

Originally appeared here:

Can Stellar [XLM] hit $0.60? Assessing key levels

The XRP price action leaned bearishly.

The defense of the $2 support has been stout and the selling volume was weak.

Based on the price charts and the liquidation heatmap, Ripple [XRP] has a

The post XRP price prediction: Bulls and bears clash over THIS support appeared first on AMBCrypto.

Originally appeared here:

XRP price prediction: Bulls and bears clash over THIS support

A recent report has revealed an interesting connection between global inflation and the crypto market cap.

Is the recent ‘dip’ in the crypto market cap just a false alarm, or is volatility loom

The post After crypto market cap rises with ‘Trump pump’, mapping what Q1 2025 holds appeared first on AMBCrypto.

Nowhere has the proliferation of generative AI tooling been more aggressive than in the world of software development. It began with GitHub Copilot’s supercharged autocomplete, then exploded into direct code-along integrated tools like Aider and Cursor that allow software engineers to dictate instructions and have the generated changes applied live, in-editor. Now tools like Devin.ai aim to build autonomous software generating platforms which can independently consume feature requests or bug tickets and produce ready-to-review code.

The grand aspiration of these AI tools is, in actuality, no different from the aspiration of all the software that has ever been written by humans: to automate human work. When you scheduled that daily CSV parsing script for your employer back in 2005, you were offloading a tiny bit of the labor owned by our species to some combination of silicon and electricity. Where generative AI tools differ is that they aim to automate the work of automation. Setting this goal as our north star enables more abstract thinking about the inherent challenges and possible solutions of generative AI software development.

⭐ Our North Star: Automate the process of automation

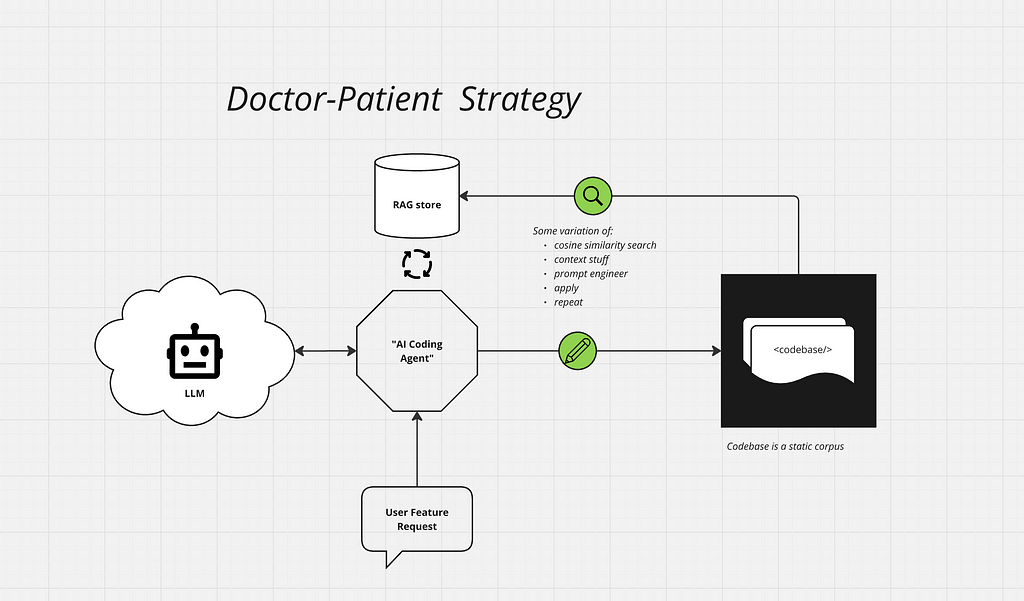

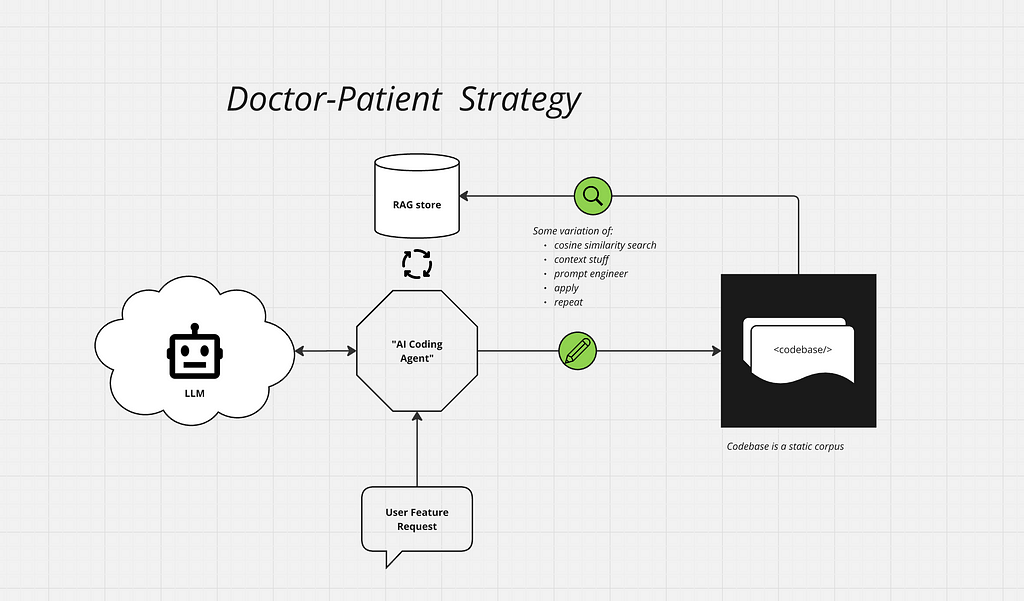

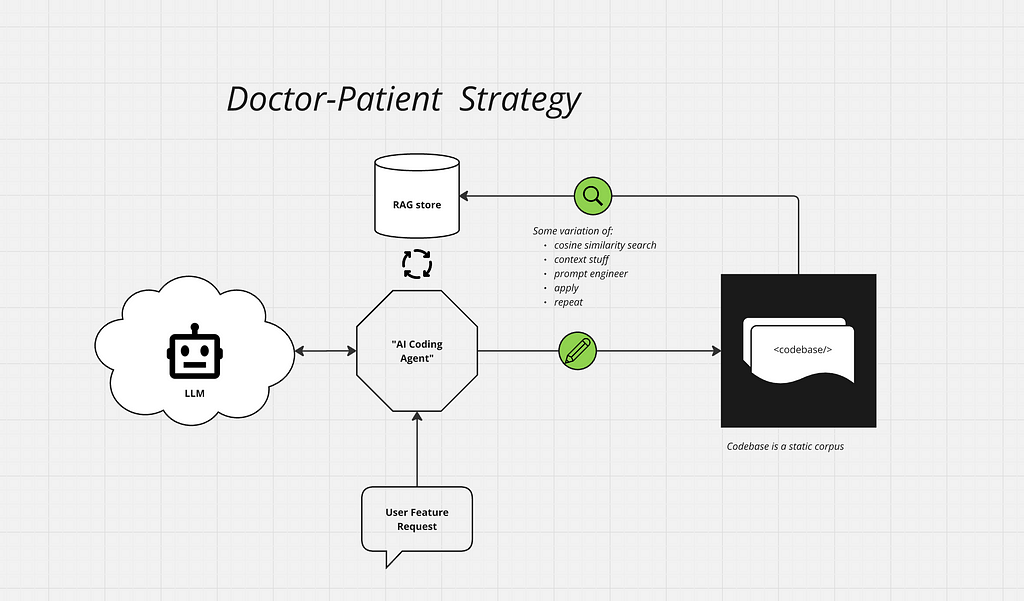

Most contemporary tools approach our automation goal by building stand-alone “coding bots.” The evolution of these bots represents an increasing success at converting natural language instructions into subject codebase modifications. Under the hood, these bots are platforms with agentic mechanics (mostly search, RAG, and prompt chains). As such, evolution focuses on improving the agentic elements — refining RAG chunking, prompt tuning etc.

This strategy establishes the GenAI tool and the subject codebase as two distinct entities, with a unidirectional relationship between them. This relationship is similar to how a doctor operates on a patient, but never the other way around — hence the Doctor-Patient strategy.

A few reasons come to mind that explain why this Doctor-Patient strategy has been the first (and seemingly only) approach towards automating software automation via GenAI:

The independent and unidirectional relationship between agentic platform/tool and codebase that defines the Doctor-Patient strategy is also the greatest limiting factor of this strategy, and the severity of this limitation has begun to present itself as a dead end. Two years of agentic tool use in the software development space have surfaced antipatterns that are increasingly recognizable as “bot rot” — indications of poorly applied and problematic generated code.

Bot rot stems from agentic tools’ inability to account for, and interact with, the macro architectural design of a project. These tools pepper prompts with lines of context from semantically similar code snippets, which are utterly useless in conveying architecture without a high-level abstraction. Just as a chatbot can manifest a sensible paragraph in a new mystery novel but is unable to thread accurate clues as to “who did it”, isolated code generations pepper the codebase with duplicated business logic and cluttered namespaces. With each generation, bot rot reduces RAG effectiveness and increases the need for human intervention.

Because bot-rotted code requires a greater cognitive load to modify, developers tend to double down on agentic assistance when working with it, and in turn rapidly accelerate additional bot rotting. The codebase balloons, and bot rot becomes obvious: duplicated and often conflicting business logic; colliding, generic, and non-descriptive names for modules, objects, and variables; swamps of dead code and boilerplate commentary; and a littering of conflicting singleton elements like loggers, settings objects, and configurations. Ironically, sure signs of bot rot are an upward trend in cycle time and an increased need for human direction or intervention in agentic coding.

This example uses Python to illustrate the concept of bot rot; however, a similar example could be made in any programming language. Agentic platforms operate on all programming languages in largely the same way and should demonstrate similar results.

In this example, an application processes TPS reports. Currently, the TPS ID value is parsed by several different methods, in different modules, to extract different elements:

    # src/ingestion/report_consumer.py

    def parse_department_code(self, report_id: str) -> int:
        """returns the parsed department code from the TPS report id"""
        # the department id is the third-from-last dash-separated segment
        dep_id = report_id.split("-")[-3]
        return get_dep_codes()[dep_id]

    # src/reporter/tps.py
    import datetime

    def get_reporting_date(report_id: str) -> datetime.datetime:
        """converts the encoded date from the tps report id"""
        # the timestamp sits between "ts=" and the next "&"
        stamp = int(report_id.split("ts=")[1].split("&")[0])
        return datetime.datetime.fromtimestamp(stamp)

A new feature requires parsing the same department code in a different part of the codebase, as well as parsing several new elements from the TPS ID in other locations. A skilled human developer would recognize that TPS ID parsing was becoming cluttered, and abstract all references to the TPS ID into a first-class object:

    # src/ingestion/report_consumer.py
    from models.tps_report import TPSReport

    def parse_department_code(self, report_id: str) -> int:
        """Deprecated: just access the code on the TPS object in the future"""
        report = TPSReport(report_id)
        return report.department_code
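The article does not show the `TPSReport` model itself, so the following is only a minimal sketch of what such a first-class object might look like. It reuses the two parsing rules from the earlier snippets; the `DEP_CODES` table and the sample report id are assumptions made up for illustration, standing in for `get_dep_codes()` and a real TPS ID.

```python
import datetime

# Hypothetical lookup table; stands in for the article's get_dep_codes().
DEP_CODES = {"ENG": 10, "FIN": 20}


class TPSReport:
    """First-class wrapper around a raw TPS report id (illustrative only)."""

    def __init__(self, report_id: str) -> None:
        self.report_id = report_id

    @property
    def department_code(self) -> int:
        # Same rule as the original parse_department_code helper.
        dep_id = self.report_id.split("-")[-3]
        return DEP_CODES[dep_id]

    @property
    def reporting_date(self) -> datetime.datetime:
        # Same rule as the original get_reporting_date helper.
        stamp = int(self.report_id.split("ts=")[1].split("&")[0])
        return datetime.datetime.fromtimestamp(stamp)


# The id format below is invented so that both parsing rules apply.
report = TPSReport("tps-ENG-0042-a?ts=1700000000&rev=2")
print(report.department_code)  # 10
```

With every parsing rule living on one object, a later format change touches a single module rather than every call site.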

This abstraction DRYs out the codebase, reducing duplication and shrinking cognitive load. Not surprisingly, what makes code easier for humans to work with also makes it more “GenAI-able” by consolidating the context into an abstracted model. This reduces noise in RAG, improving the quality of resources available for the next generation.

An agentic tool must complete this same task without architectural insight, or the agency required to implement the above refactor. Given the same task, a code bot will generate additional, duplicated parsing methods or, worse, generate a partial abstraction within one module and not propagate that abstraction. The pattern created is one of a poorer quality codebase, which in turn elicits poorer quality future generations from the tool. Frequency distortion from the repetitive code further damages the effectiveness of RAG. This bot rot spiral will continue until a human hopefully intervenes with a git reset before the codebase devolves into complete anarchy.
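One symptom described above, duplicated parsing logic, can be surfaced mechanically. The sketch below is an assumption about how that might be done, not a feature of any existing agentic tool: it fingerprints function bodies with Python's standard `ast` module and groups the ones that are structurally identical. The file paths and sources are invented for the example.

```python
import ast
from collections import defaultdict


def find_duplicate_functions(sources: dict) -> list:
    """Group functions whose bodies are structurally identical.

    `sources` maps a (hypothetical) file path to its source text.
    """
    groups = defaultdict(list)
    for path, text in sources.items():
        for node in ast.walk(ast.parse(text)):
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                body = node.body
                # Skip a leading docstring so only logic is compared.
                if ast.get_docstring(node) is not None:
                    body = body[1:]
                if not body:
                    continue
                # ast.dump omits line numbers by default, so equal
                # structure yields an equal fingerprint.
                fingerprint = "\n".join(ast.dump(stmt) for stmt in body)
                groups[fingerprint].append(f"{path}::{node.name}")
    return [names for names in groups.values() if len(names) > 1]


sources = {
    "src/a.py": 'def dep(report_id):\n    return report_id.split("-")[-3]\n',
    "src/b.py": 'def department(report_id):\n    """Doc."""\n    return report_id.split("-")[-3]\n',
    "src/c.py": 'def stamp(report_id):\n    return report_id.split("ts=")[1]\n',
}
print(find_duplicate_functions(sources))
```

This only catches exact structural copies; near-duplicates with renamed variables would need a fuzzier comparison.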

The fundamental flaw in the Doctor-Patient strategy is that it approaches the codebase as a single-layer corpus, serialized documentation from which to generate completions. In reality, software is non-linear and multidimensional: less like a research paper and more like our aforementioned mystery novel. No matter how large the context window or how effective the embedding model, agentic tools disconnected from the architectural design of a codebase will always devolve into bot rot.

How can GenAI powered workflows be equipped with the context and agency required to automate the process of automation? The answer stems from ideas found in two well-established concepts in software engineering.

Test Driven Development is a cornerstone of the modern software engineering process. More than just a mandate to “write the tests first,” TDD is a mindset manifested into a process. For our purposes, the pillars of TDD look something like this:

TDD implicitly requires that application code be written in a way that is highly testable. Overly complex, nested business logic must be broken into units that can be directly accessed by test methods. Hooks need to be baked into object signatures, dependencies must be injected, all to facilitate the ability of test code to assure functionality in the application. Herein is the first part of our answer: for agentic processes to be more successful at automating our codebase, we need to write code that is highly GenAI-able.
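As a small illustration of "dependencies must be injected", the department-code parser from the earlier example could take its lookup loader as a parameter instead of calling a module-level function. This is a sketch under that assumption; the `load_dep_codes` parameter and the stub are hypothetical names, not part of the original codebase.

```python
from typing import Callable, Mapping


def parse_department_code(
    report_id: str,
    load_dep_codes: Callable[[], Mapping[str, int]],
) -> int:
    """Return the department code, using an injected lookup loader."""
    dep_id = report_id.split("-")[-3]
    return load_dep_codes()[dep_id]


# In a test (or an agentic run), a stub replaces the real loader.
def fake_dep_codes() -> Mapping[str, int]:
    return {"ENG": 10}


result = parse_department_code("tps-ENG-0042-a", fake_dep_codes)
print(result)  # 10
```

The function no longer reaches for hidden global state, so a test can exercise it in isolation, and a generative process sees everything the function depends on in its signature.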

Another important element of TDD in this context is that testing must be an implicit part of the software we build. In TDD, there is no option to scratch out a pile of application code with no tests, then apply a third party bot to “test it.” This is the second part of our answer: Codebase automation must be an element of the software itself, not an external function of a ‘code bot’.

The earlier Python TPS report example demonstrates a code refactor, one of the most important higher-level functions in healthy software evolution. Kent Beck describes the process of refactoring as

“for each desired change, make the change easy (warning: this may be hard), then make the easy change.” ~ Kent Beck

This is how a codebase improves for human needs over time, reducing cognitive load and, as a result, cycle times. Refactoring is also exactly how a codebase is continually optimized for GenAI automation! Refactoring means removing duplication, decoupling and creating semantic “distance” between domains, and simplifying the logical flow of a program — all things that will have a huge positive impact on both RAG and generative processes. The final part of our answer is that codebase architecture (and subsequently, refactoring) must be a first class citizen as part of any codebase automation process.

Given these borrowed pillars:

An alternative strategy to the unidirectional Doctor-Patient takes shape. This strategy, where application code development itself is driven by the goal of generative self-automation, could be called Generative Driven Development, or GDD(1).

GDD is an evolution that moves optimization for agentic self-improvement to center stage, in much the same way that TDD promoted testing in the development process. In fact, TDD becomes a subset of GDD, in that highly GenAI-able code is both highly testable and, as part of GDD evolution, well tested.

To dissect what a GDD workflow could look like, we can start with a closer look at those pillars:

In a highly GenAI-able codebase, it is easy to build highly effective embeddings and assemble low-noise context, side effects and coupling are rare, and abstraction is clear and consistent. When it comes to understanding a codebase, the needs of a human developer and those of an agentic process have significant overlap. In fact, many elements of highly GenAI-able code will look familiar in practice to a human-focused code refactor. However, the driver behind these principles is to improve the ability of agentic processes to correctly generate code iterations. Some of these principles include:

Every commercially viable programming language has at least one accompanying test framework: Python has pytest, Ruby has RSpec, Java has JUnit, and so on. In comparison, many other aspects of the SDLC evolved into stand-alone tools, like feature management done in Jira or Linear, or monitoring via Datadog. Why, then, is test code part of the codebase, and why are testing tools part of the development dependencies?

Tests are an integral part of the software circuit, tightly coupled to the application code they cover. Tests require the ability to account for, and interact with, the macro architectural design of a project (sound familiar?) and must evolve in sync with the whole of the codebase.

For effective GDD, we will need to see similar purpose-built packages that can support an evolved, generative-first development process. At the core will be a system for building and maintaining an intentional meta-catalog of semantic project architecture. This might be something that is parsed and evolved via the AST, or driven by a UML-like data structure that both humans and code modify over time, similar to a .pytest.ini or plugin configs in a pom.xml file in TDD.
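To make the AST-driven option a little more concrete, here is one possible starting point, offered as a sketch rather than a design: a function that summarizes a module's top-level classes and functions from their docstrings using Python's standard `ast` module. The catalog shape (`module`, `purpose`, `entries`) is an assumption invented for this example.

```python
import ast


def catalog_module(path: str, source: str) -> dict:
    """Summarize one module's top-level classes and functions."""
    tree = ast.parse(source)
    entries = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            entries.append(
                {
                    "name": node.name,
                    "kind": "class" if isinstance(node, ast.ClassDef) else "function",
                    "summary": ast.get_docstring(node) or "",
                }
            )
    return {
        "module": path,
        "purpose": ast.get_docstring(tree) or "",
        "entries": entries,
    }


source = '''"""Reporting helpers for TPS reports."""

def get_reporting_date(report_id):
    """converts the encoded date from the tps report id"""
'''
catalog = catalog_module("src/reporter/tps.py", source)
print(catalog["purpose"])
```

A real meta-catalog would also need to record relationships between modules and carry human-authored intent, which docstrings alone cannot supply.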

This semantic structure will enable our package to run stepped processes that account for macro architecture, in a way that is both bespoke to and evolving with the project itself. Architectural rules for the application such as naming conventions, responsibilities of different classes, modules, services etc. will compile applicable semantics into agentic pipeline executions, and guide generations to meet them.

Similar to the current crop of test frameworks, GDD tooling will abstract boilerplate generative functionality while offering a heavily customizable API for developers (and the agentic processes) to fine-tune. Like your test specs, generative specs could define architectural directives and external context — like the sunsetting of a service, or a team pivot to a new design pattern — and inform the agentic generations.
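No such generative spec format exists yet, so the following is purely a hypothetical sketch of the idea: directives are scoped to a path prefix, and the tooling selects the ones that apply to the file being modified. The `GenerativeSpec` class, the `directives_for` helper, and the `src/legacy_billing/` service are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class GenerativeSpec:
    """Hypothetical spec: one directive scoped to part of the codebase."""

    scope: str      # path prefix the directive applies to
    directive: str  # instruction surfaced to the generation step


def directives_for(path: str, specs: list) -> list:
    """Return the directives whose scope covers the file being modified."""
    return [spec.directive for spec in specs if path.startswith(spec.scope)]


specs = [
    GenerativeSpec("src/", "Parse TPS ids only through models.tps_report.TPSReport."),
    GenerativeSpec("src/legacy_billing/", "Service is being sunset; do not add features."),
]
print(directives_for("src/ingestion/report_consumer.py", specs))
```

Scoping by path prefix is the simplest possible rule; a real tool would more likely resolve scope through the semantic catalog described above.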

GDD linting will look for patterns that make code less GenAI-able (see Writing code that is highly GenAI-able), correct them when possible, and raise them to human attention when not.
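One lint rule of this kind could flag import aliases that depart from community convention, since unusual names make both retrieval and generation less reliable. The check below is a minimal sketch using the standard `ast` module; the `STANDARD_ALIASES` table is an assumption and deliberately tiny.

```python
import ast

# Conventional aliases; this small table is an assumption for the example.
STANDARD_ALIASES = {"pandas": "pd", "numpy": "np"}


def find_nonstandard_aliases(source: str) -> list:
    """Return (module, alias, line) for imports that break convention."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                expected = STANDARD_ALIASES.get(alias.name)
                if expected and alias.asname and alias.asname != expected:
                    findings.append((alias.name, alias.asname, node.lineno))
    return findings


findings = find_nonstandard_aliases("import numpy as np\nimport pandas as pand\n")
print(findings)  # [('pandas', 'pand', 2)]
```

The source is only parsed, never executed, so the check runs without the flagged libraries being installed.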

Consider the problem of bot rot through the lens of a TDD iteration. Traditional TDD operates in three steps: red, green, and refactor.

With bot rot, only the “green” step is present. Unless explicitly instructed, agentic frameworks will not write a failing test first, and without an understanding of the macro architectural design they cannot effectively refactor a codebase to accommodate the generated code. This is why codebases subject to the current crop of agentic tools degrade rather quickly: the executed TDD cycles are incomplete. By elevating these missing “bookends” of the TDD cycle in the agentic process and integrating a semantic map of the codebase architecture to make refactoring possible, bot rot will be effectively alleviated. Over time, a GDD codebase will become increasingly easy to traverse for both human and bot, cycle times will decrease, error rates will fall, and the application will become increasingly self-automating.
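A complete cycle can be expressed as a small control loop. The sketch below is an assumption about how a GDD tool might enforce all three steps, not a description of any existing framework: each step is passed in as a callable, and the generation step gets a bounded number of attempts before the run is escalated to a human. All names are hypothetical.

```python
from typing import Callable


def run_cycle(
    write_failing_test: Callable[[], None],
    tests_pass: Callable[[], bool],
    generate_change: Callable[[], None],
    refactor: Callable[[], None],
    max_attempts: int = 2,
) -> str:
    """Run one red-green-refactor iteration; return the step reached."""
    # Red: the new test must fail before any code is generated.
    write_failing_test()
    if tests_pass():
        return "red-failed"
    # Green: generate until the suite passes or attempts run out.
    for _ in range(max_attempts):
        generate_change()
        if tests_pass():
            break
    else:
        return "green-failed"  # escalate to a human
    # Refactor: restructure, then confirm the suite still passes.
    refactor()
    return "done" if tests_pass() else "refactor-failed"


# Toy stand-ins for the real steps, tracked in a dictionary.
state = {"implemented": False}
result = run_cycle(
    write_failing_test=lambda: None,
    tests_pass=lambda: state["implemented"],
    generate_change=lambda: state.update(implemented=True),
    refactor=lambda: None,
)
print(result)  # done
```

Returning a named step rather than raising makes it straightforward for the tool to report exactly where a cycle stalled.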

What could GDD development look like?

A GDD Engineer opens their laptop to start the day, cds into our infamous TPS report repo and opens a terminal. Let’s say the Python GDD equivalent of pytest is a (currently fictional) package named py-gdd.

First, they need to pick some work from the backlog. Scanning over the tickets in Jira they decide on “TPS-122: account for underscores in the new TPS ID format.” They start work in the terminal with:

    >> git checkout -b feature/TPS-122/id-underscores && py-gdd begin TPS-122

A terminal spinner appears while py-gdd processes. What is py-gdd doing?

py-gdd responds with a developer-peer level statement about the execution plan, something to the effect of:

“I am going to parameterize all the tests that use TPS IDs with both dashes and underscores, I don’t think we need a stand-alone test for this then. And then I will abstract all the TPS ID parsing to a single TPS model.”

Notice how this wasn’t an unreadable wall of code + unimportant context + comment noise?

The Engineer scans the plan, which consists of more granular steps:

On confirmation, the terminal spinner appears again. Text appears in red:

Problem in step 3 - I am unable to get all tests to pass after 2 attempts

The Engineer looks at the test code and sees that the database test factory is hard-coded to the old ID type and cannot accept overrides — this explains why the py-gdd process cannot get the updates to pass. The Engineer explains this via in-terminal chat to py-gdd, and a new sub-plan is created:

In addition, back in the main plan the step “update semantics for database test factory” is added. The Engineer confirms again, and this time the plan completes successfully, and a Pull Request is created in GitHub.
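The test change py-gdd proposed in its plan, covering both ID formats with the same cases rather than a stand-alone test, might look roughly like the sketch below. The `department_id` helper and both sample ids are assumptions; the new underscore format is never specified in the story. With pytest, the same table would typically feed `pytest.mark.parametrize`; a plain loop is used here to keep the example dependency-free.

```python
import re


def department_id(report_id: str) -> str:
    """Extract the department segment from either TPS id format."""
    head = report_id.split("?")[0]      # drop the query-style suffix
    return re.split(r"[-_]", head)[-3]  # accept dashes or underscores


# One table of cases covers both formats instead of a stand-alone test.
CASES = [
    ("tps-ENG-0042-a?ts=1700000000", "ENG"),
    ("tps_ENG_0042_a?ts=1700000000", "ENG"),
]

for report_id, expected in CASES:
    assert department_id(report_id) == expected, report_id
```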

py-gdd follows up with a list of concerns it developed during the plan execution:

concerns:

- there are several lower-level modules simply named “server” and this is semantically confusing.

- the tps_loader module aliases pandas as “pand” which is non-standard and may hinder generation.

- there are no test hooks in the highly complex “persist_schema” method of the InnatechDB class. This makes both testing and generation difficult.

...

The Engineer instructs py-gdd to create tickets for each concern. On to the next ticket!

In this vision, an Engineer is still very heavily involved in the mechanical processes of GDD. But it is reasonable to assume that as a codebase grows and evolves to become increasingly GenAI-able due to GDD practice, less human interaction will become necessary. In the ultimate expression of Continuous Delivery, GDD could be primarily practiced via a perpetual “GDD server.” Work will be sourced from project management tools like Jira and GitHub Issues, error logs from Datadog and CloudWatch needing investigation, and most importantly generated by the GDD tooling itself. Hundreds of PRs could be opened, reviewed, and merged every day, with experienced human engineers guiding the architectural development of the project over time. In this way, GDD can become a realization of the goal to automate automation.

Originally published on pirate.baby, my tech and tech-adjacent blog

GDD: Generative Driven Design was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

GDD: Generative Driven Design