Originally appeared here:

ZachXBT exposes Murad Mahmudov’s 11 meme coin wallets

Originally appeared here:

Taiwan regulators mull piloting crypto custody services with local banks: report

U.S. political developments to play a role in defining the crypto market trajectory in Q4 and beyond.

SOL and other unproven cryptocurrencies will likely lag ETH and BTC under Harris’s presiden

The post Solana: How U.S. elections could shape SOL’s trajectory in Q4 appeared first on AMBCrypto.

BNB bulls demonstrate dominance by pushing higher as most top coins face sell pressure.

Binance smart chain yield farming demand could be one of the key reasons behind BNB’s upside.

Binanc

The post BNB eyes $600 amid Scroll’s SCR crypto launch on Binance appeared first on AMBCrypto.

Render price remains in a stable range near $5.50 after a period of consolidation and rising whale activity.

The long-short ratio decline and OI-weighted funding rate indicate potential shifts

The post Render’s 3-month triangle pattern: A prelude to price breakout? appeared first on AMBCrypto.

Bitcoin Dogs has set a firm date for the release of its Telegram game, and analysts expect its release to fuel a wild Q4. October 30th is the day all 0DOG holders have marked in their calendars, and w

The post Bitcoin Dogs’ Telegram game ready to fuel massive Q4 surge appeared first on AMBCrypto.

Originally appeared here:

Bitcoin Dogs’ Telegram game ready to fuel massive Q4 surge

Cryptocurrency exchange Crypto.com announced Tuesday that it had filed a lawsuit against the United States Securities and Exchange Commission (SEC).

Originally appeared here:

Crypto.Com Takes Legal Action Against SEC, Chair Gary Gensler To ‘Protect Future Of Crypto In US’

Coded Estate has achieved a significant milestone by closing an oversubscribed angel funding round, attracting investments from notable players such as Mozaik Capital, Hyperion Ventures, Black Dragon, Dutch Crypto Investors, and others. Coded Estate has officially unveiled its “Pre-Season Mainnet Campaign,” a strategic initiative designed to inject early liquidity into the platform and pave the […]

Originally appeared here:

Coded Estate Successfully Closes Oversubscribed Angel Funding Round, Fueling Launch of Real Estate Hub on Nibiru Chain

Azure Data Factory (ADF) is a popular tool for moving data at scale, particularly in Enterprise Data Lakes. It is commonly used to ingest and transform data, often starting by copying data from on-premises to Azure Storage. From there, data is moved through different zones following a medallion architecture. ADF is also essential for creating and restoring backups in case of disasters like data corruption, malware, or account deletion.

This implies that ADF is used to move large amounts of data: terabytes and sometimes even petabytes. It is therefore important to optimize copy performance and keep copy times short. A common way to improve ADF performance is to parallelize copy activities. However, the parallelization should happen where most of the data is, and this can be challenging when the data lake is skewed.

In this blog post, different ADF parallelization strategies for data lakes are discussed and a project is deployed. The ADF solution project can be found here: https://github.com/rebremer/data-factory-copy-skewed-data-lake.

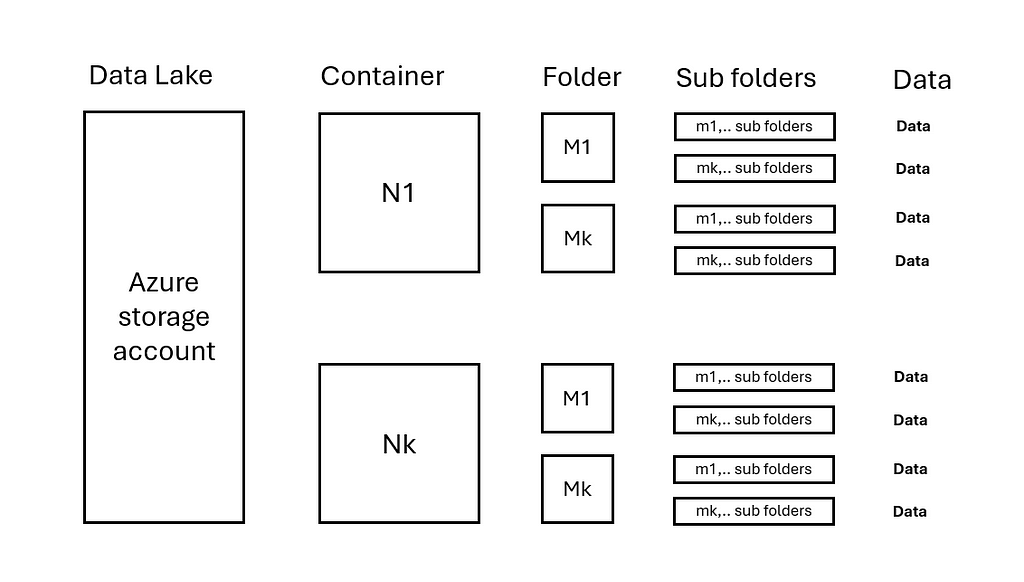

Data lakes come in all shapes and sizes. It is important to understand the data distribution within a data lake to improve copy performance. Consider the following situation:

See also image below:

In this situation, copy activities can be parallelized on each container N. For larger data volumes, performance can be further enhanced by parallelizing on the folders M within each container N. Subsequently, the number of Data Integration Units (DIU) and the degree of copy parallelization can be configured per copy activity.
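As a minimal sketch of this fan-out, the snippet below enumerates one copy task per container/folder combination. The container and folder names, and the helper `plan_copy_tasks`, are hypothetical and only illustrate the N*M shape of the plan; they are not taken from the project.

```python
from itertools import product


def plan_copy_tasks(containers, folders):
    """Return one copy task per (container, folder) combination."""
    return [{"container": c, "folder": f} for c, f in product(containers, folders)]


# Hypothetical layout: N = 3 containers, each holding the same M = 4 folders.
containers = [f"container{i}" for i in range(1, 4)]
folders = [f"folder{j}" for j in range(1, 5)]

tasks = plan_copy_tasks(containers, folders)
print(len(tasks), "copy tasks, first one:", tasks[0])
```

Each task would map to one copy activity, on which DIU and copy parallelization can then be set individually.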

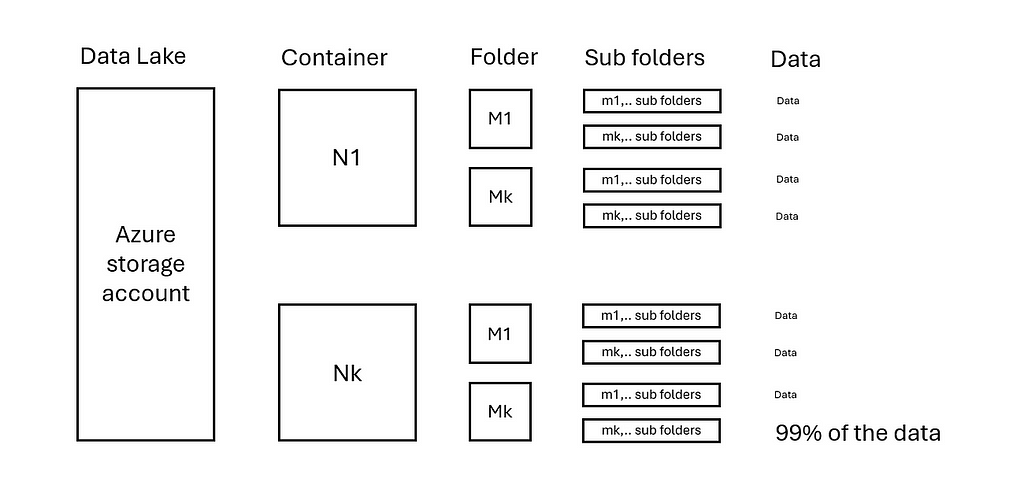

Now consider the extreme situation in which the last folder Mk in the last container Nk holds 99% of the data (see image below):

This implies that parallelization should be done on the subfolders in Nk/Mk, where the data is. More advanced logic is then needed to pinpoint the exact data locations. An Azure Function, integrated within ADF, can be used to achieve this. In the next chapter, a project is deployed and the parallelization options are discussed in more detail.
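The logic for pinpointing data-heavy locations could look roughly like the sketch below. It is an illustration under assumptions, not the code of the project's Azure Function: it works on a plain dictionary of blob paths and sizes (in a real function these would come from a storage listing), and the function name `heavy_prefixes`, the threshold `max_size`, and the sample paths are all hypothetical.

```python
from collections import defaultdict


def heavy_prefixes(blob_sizes, max_size, depth=1):
    """Group blobs by folder prefix and split any prefix that exceeds
    max_size one level deeper, so that copy work lands where the data is."""
    groups = defaultdict(dict)
    for path, size in blob_sizes.items():
        parts = path.split("/")
        # Use the first `depth` segments as the prefix; a blob that sits
        # at or above this depth is kept as its own unit.
        key = "/".join(parts[:depth]) if len(parts) > depth else path
        groups[key][path] = size

    result = {}
    for key, members in groups.items():
        total = sum(members.values())
        is_single_blob = len(members) == 1 and key in members
        if total > max_size and not is_single_blob:
            # Too much data under this prefix: descend one folder level.
            result.update(heavy_prefixes(members, max_size, depth + 1))
        else:
            result[key] = total
    return result


# Hypothetical skewed lake: almost all data sits under nk/mk (sizes in GB).
blob_sizes = {
    "n1/m1/a.parquet": 5,
    "n1/m2/b.parquet": 5,
    "nk/mk/s1/c.parquet": 400,
    "nk/mk/s2/d.parquet": 350,
    "nk/mk/s3/e.parquet": 240,
}
copy_units = heavy_prefixes(blob_sizes, max_size=500)
print(copy_units)
```

With these sample sizes, the small container `n1` stays a single copy unit, while `nk/mk` is split into its three subfolders. The threshold controls how fine-grained the split becomes and would need tuning against the real data distribution.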

In this part, the project is deployed and a copy test is run and discussed. The entire project can be found here: https://github.com/rebremer/data-factory-copy-skewed-data-lake.

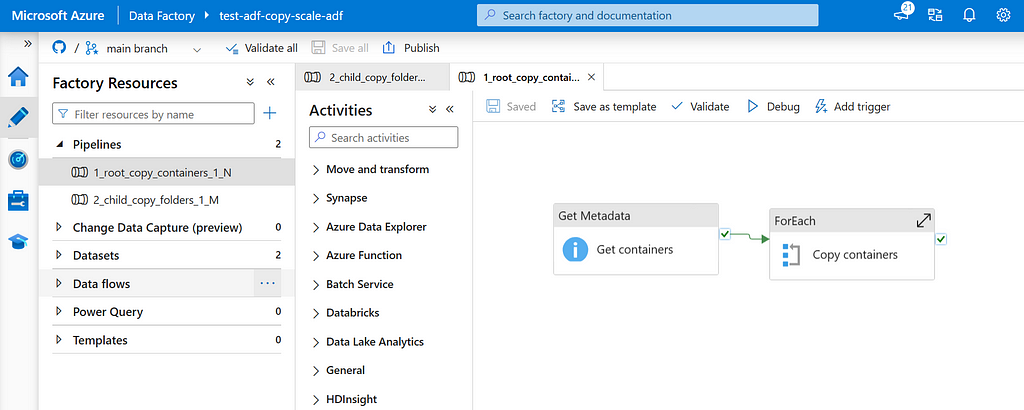

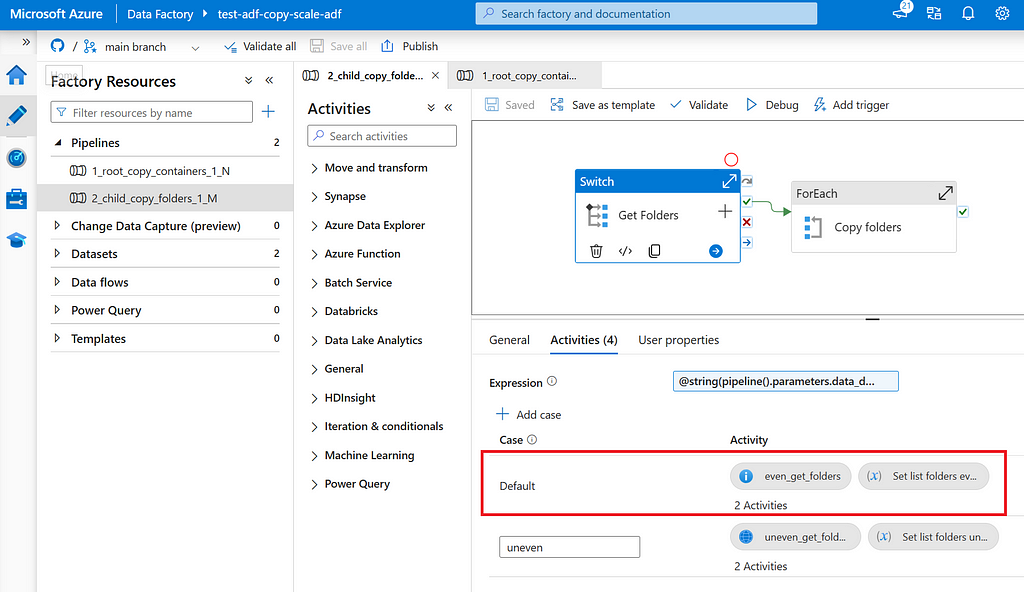

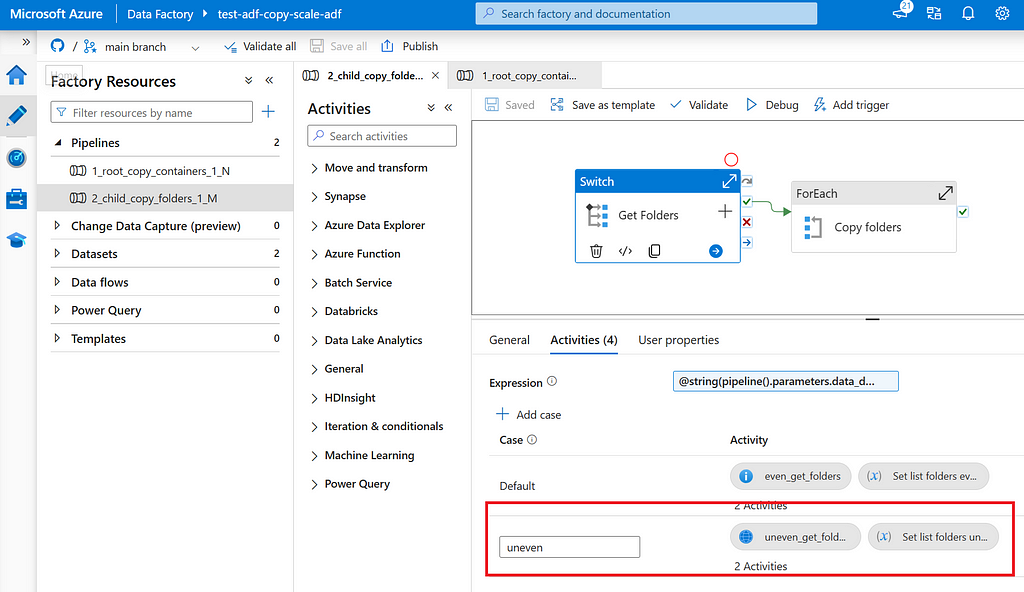

Run the script deploy_adf.ps1. If ADF is successfully deployed, two pipelines are deployed:

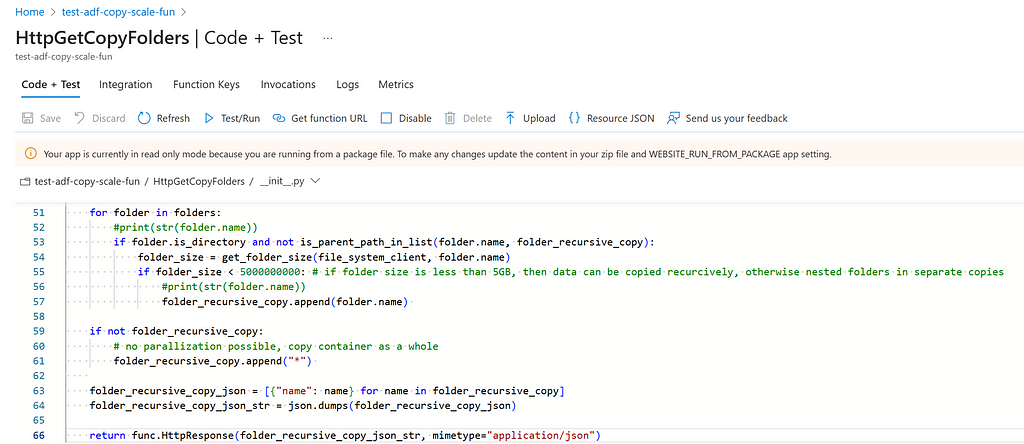

Subsequently, run the script deploy_azurefunction.ps1. If the Azure Function is successfully deployed, the following code is deployed.

Finally, to run the project, make sure that the system-assigned managed identities of the Azure Function and the Data Factory can access the storage accounts that the data is copied from and to.

After the project is deployed, the following tooling is available to improve performance using parallelization.

In the uniformly distributed data lake, data is evenly distributed over N containers and M folders. In this situation, copy activities can simply be parallelized on each folder M. This can be done using a Get Metadata activity to list the folders M, a For Each activity to iterate over the folders, and a copy activity per folder. See also image below.

With this strategy, each copy activity copies an equal amount of data. A total of N*M copy activities will be run.
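To make the Get Metadata, For Each, and copy chain more concrete, the sketch below builds a pipeline definition as a Python dictionary and prints it as JSON. It is a simplified illustration, not the pipeline from the project: the pipeline, activity, and dataset names are hypothetical, the DIU and parallel copy values are placeholders, and the copy activity's input and output datasets are left out for brevity.

```python
import json


def build_foreach_copy_pipeline(batch_count=20):
    """Sketch of a Get Metadata -> For Each -> Copy pipeline definition."""
    get_metadata = {
        "name": "GetFolders",  # hypothetical activity name
        "type": "GetMetadata",
        "typeProperties": {
            # "SourceContainer" is a hypothetical dataset pointing at one container.
            "dataset": {"referenceName": "SourceContainer", "type": "DatasetReference"},
            "fieldList": ["childItems"],  # returns the folders in the container
        },
    }
    copy_folder = {
        "name": "CopyFolder",  # hypothetical; inputs/outputs omitted for brevity
        "type": "Copy",
        "typeProperties": {
            "source": {"type": "BinarySource"},
            "sink": {"type": "BinarySink"},
            "dataIntegrationUnits": 128,  # placeholder value
            "parallelCopies": 32,  # placeholder value
        },
    }
    for_each = {
        "name": "ForEachFolder",
        "type": "ForEach",
        "dependsOn": [{"activity": "GetFolders", "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            "isSequential": False,  # run the iterations in parallel
            "batchCount": batch_count,  # upper bound on concurrent iterations
            "items": {
                "value": "@activity('GetFolders').output.childItems",
                "type": "Expression",
            },
            "activities": [copy_folder],
        },
    }
    return {
        "name": "CopyUniformDataLake",  # hypothetical pipeline name
        "properties": {"activities": [get_metadata, for_each]},
    }


pipeline = build_foreach_copy_pipeline()
print(json.dumps(pipeline, indent=2))
```

Setting `isSequential` to false is what makes the For Each run its copy activities in parallel; `batchCount` caps how many run at the same time.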

In the skewed data lake, data is not evenly distributed over N containers and M folders. In this situation, the copy activities should be determined dynamically. This can be done using an Azure Function to list the data-heavy folders, then a For Each activity to iterate over the folders and a copy activity per folder. See also image below.

With this strategy, copy activities are dynamically scaled to the parts of the data lake where the data resides and where parallelization is thus needed most. Although this solution is more complex than the previous one, since it requires an Azure Function, it allows for copying skewed data.

To compare the performance of different parallelization options, a simple test is set up as follows:

Test A: Copy 1 container with 1 copy activity using 32 DIU and 16 threads in copy activity (both set to auto) => 0.72 TB of data is copied, 12m27s copy time, average throughput is 0.99 GB/s

Test B: Copy 1 container with 1 copy activity using 128 DIU and 32 threads in copy activity => 0.72 TB of data is copied, 06m19s copy time, average throughput is 1.95 GB/s.

Test C: Copy 1 container with 1 copy activity using 200 DIU and 50 threads (max) => test aborted due to throttling, no performance gain compared to test B.

Test D: Copy 2 containers with 2 copy activities in parallel using 128 DIU and 32 threads for each copy activity => 1.44 TB of data is copied, 07m00s copy time, average throughput is 3.53 GB/s.

Test E: Copy 3 containers with 3 copy activities in parallel using 128 DIU and 32 threads for each copy activity => 2.17 TB of data is copied, 08m07s copy time, average throughput is 4.56 GB/s. See also screenshot below.
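The reported throughput figures can be checked with a few lines of arithmetic. The sketch below assumes 1 TB = 1024 GB and uses the rounded sizes and durations listed above; the helper name `throughput_gb_per_s` is only for illustration.

```python
def throughput_gb_per_s(size_tb, minutes, seconds):
    """Average throughput in GB/s, assuming 1 TB = 1024 GB."""
    return size_tb * 1024 / (minutes * 60 + seconds)


# (size in TB, minutes, seconds) as reported for each completed test.
tests = {
    "A": (0.72, 12, 27),
    "B": (0.72, 6, 19),
    "D": (1.44, 7, 0),
    "E": (2.17, 8, 7),
}

throughputs = {name: round(throughput_gb_per_s(*args), 2) for name, args in tests.items()}
for name, value in throughputs.items():
    print(f"Test {name}: {value} GB/s")
```

Under these assumptions, tests A, B, and E reproduce the reported 0.99, 1.95, and 4.56 GB/s. Test D comes out at about 3.51 GB/s rather than the reported 3.53 GB/s, presumably because the data size is rounded to two decimals in the text.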

Note that ADF does not start copying immediately, since there is a startup time. For an Azure IR this is ~10 seconds. This startup time is fixed and its impact on throughput is negligible for large copies. Also, the maximum ingress of a storage account is 60 Gbps (= 7.5 GB/s). Throughput cannot scale beyond this number unless additional capacity is requested on the storage account.
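Both remarks can be put into numbers. The sketch below converts the ingress limit from Gbps to GB/s, shows how much of it test E used, and estimates the share of the ~10 second startup in the duration of test A. It assumes the startup time is included in the reported durations, which the text does not state explicitly; the helper names are hypothetical.

```python
INGRESS_LIMIT_GBPS = 60  # storage account ingress limit in gigabits per second
ingress_limit_gb_per_s = INGRESS_LIMIT_GBPS / 8  # 8 bits per byte


def ingress_utilization(throughput_gb_per_s):
    """Fraction of the storage account ingress limit that is used."""
    return throughput_gb_per_s / ingress_limit_gb_per_s


def startup_share(startup_seconds, total_seconds):
    """Fraction of a copy run that is spent on the fixed startup time."""
    return startup_seconds / total_seconds


# Test E reached 4.56 GB/s; test A took 12m27s = 747 seconds.
print(f"Ingress limit: {ingress_limit_gb_per_s} GB/s")
print(f"Test E utilization: {ingress_utilization(4.56):.1%}")
print(f"Startup share in test A: {startup_share(10, 747):.1%}")
```

So three parallel copy activities used roughly 60% of the default ingress limit, and a 10 second startup accounts for little more than 1% of even the shortest-throughput run in the test.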

The following takeaways can be drawn from the test:

Azure Data Factory (ADF) is a popular tool for moving data at scale. It is widely used for ingesting, transforming, backing up, and restoring data in Enterprise Data Lakes. Given its role in moving large volumes of data, optimizing copy performance is crucial to keep copy times short.

In this blog post, we discussed the following parallelization strategies to enhance the performance of data copying to and from Azure Storage.

Unfortunately, there is no silver bullet: finding the best strategy to improve copy performance for Enterprise Data Lakes always requires analysis and testing. This article aimed to give guidance in choosing that strategy.

How to Parallelize Copy Activities in Azure Data Factory was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

How to Parallelize Copy Activities in Azure Data Factory

Finding the right trade-off between memory efficiency, accuracy, and speed

Originally appeared here:

Fine-Tuning LLMs with 32-bit, 8-bit, and Paged AdamW Optimizers
