Autonomous Robotics in the Era of Large Multimodal Models

In my recent work on Multiformer, I explored the power of lightweight hierarchical vision transformers to efficiently perform simultaneous learning and inference on multiple computer vision tasks essential for robotic perception. This “shared trunk” concept of a common backbone feeding features to multiple task heads has become a popular approach in multi-task learning, particularly in autonomous robotics, because it has repeatedly been demonstrated that learning a feature space that is useful for multiple tasks not only produces a single model which can perform multiple tasks given a single input, but also performs better at each individual task by leveraging the complementary knowledge learned from other tasks.

Traditionally, autonomous vehicle (AV) perception stacks form an understanding of their surroundings by performing simultaneous inference on multiple computer vision tasks. Thus, multi-task learning with a common backbone is a natural choice, providing a best-of-both-worlds solution for parameter efficiency and individual task performance. However, the rise of large multimodal models (LMMs) challenges this efficient multi-task paradigm. World models created using LMMs possess the profound ability to understand sensor data at both a descriptive and anticipatory level, moving beyond task-specific processing to holistic understanding of the environment and its future states (albeit with a far higher parameter count).

In this new paradigm, which has been dubbed AV2.0, tasks like semantic segmentation and depth estimation become emergent capabilities of models possessing a much deeper understanding of the data, and for which performing such tasks becomes superfluous for any reason other than relaying this knowledge to humans. In fact, the entire point of performing these intermediary tasks in a perception stack was to send those predictions into further layers of perception, planning, and control algorithms, which would then finally describe the relationship of the ego with its surroundings and the correct actions to take. By contrast, if a larger model is able to describe the full nature of a driving scenario, all the way up to and including the correct driving action to take given the same inputs, there’s no need for lossy intermediary representations of knowledge, and the network can learn to respond directly to the data. In this framework, the divide between perception, planning, and control is eliminated, creating a unified architecture that can be optimized end-to-end.

While it is still a burgeoning school of thought, end-to-end autonomous driving using generative world models built with LMMs is a plausible long-term winner. It continues a trend of simplifying previously complex solutions to challenging problems through sequence modeling formulations, which started in natural language processing (NLP), quickly extended into computer vision, and now seems to have taken a firm hold in Reinforcement Learning (RL). Further, these formerly distinct areas of research are becoming unified under this common framework, and mutually accelerating as a result. For AV research, accepting this paradigm shift also means catching the wave of rapid acceleration in infrastructure and methodology for the training, fine-tuning, and deployment of large transformer models, as researchers from multiple disciplines continue to climb aboard and add momentum to the apparent “intelligence is a sequence modeling problem” phenomenon.

But what does this mean for traditional modular AV stacks? Are multi-task computer vision models like Multiformer bound for obsolescence? It seems clear that for simple problems, such as an application requiring basic image classification over a known set of classes, a large model is overkill. However, for complex applications like autonomous robotics, the answer is far less obvious at this stage. Large models come with serious drawbacks, particularly in their memory requirements and resource-intensive nature. Not only do they incur large financial (and environmental) costs to train, but deployment possibilities are restricted as well: the larger the model, the larger the embedded system (robot) must be. Development of large models thus has a real barrier to entry, which is bound to discourage adoption by smaller outfits. Nevertheless, the allure of large model capabilities has generated global momentum in the development of accessible methods for their training and deployment, and this trend is bound to continue.

In 2019, Rich Sutton remarked on “The Bitter Lesson” in AI research, observing that time and again, across disciplines from natural language to computer vision, complex approaches incorporating handcrafted elements based on human knowledge ultimately become time-wasting dead ends that are superseded substantially by more general methods that leverage raw computation. Currently, the advent of large transformers and the skillful shoehorning of various problems into self-supervised sequence modeling tasks are the major fuel burning out the dead wood of disjoint and bespoke problem formulations. Now, longstanding approaches in RL and Time Series Analysis, including vetted heroes like the Recurrent Neural Network (RNN), must defend their usefulness, or join SIFT and rule-based language models in retirement. When it comes to AV stack development, should we opt to break the cycle of ensnaring traditions and make the switch to large world modeling sooner rather than later, or can the accessibility and interpretability of traditional modular driving stacks withstand the surge of large models?

This article tells the story of an intriguing confluence of research trends that will guide us toward an educated answer to this question. First, we review traditional modular AV stack development, and how multi-task learning leads to improvements by leveraging generalized knowledge in a shared parameter space. Next, we journey through the meteoric rise of large language models (LLMs) and their expansion into multimodality with LMMs, setting the stage for their impact in robotics. Then, we learn about the history of world modeling in RL, and how the advent of LMMs stands to ignite a powerful revolution by bestowing these world models with the level of reasoning and semantic understanding seen in today’s large models. We then compare the strengths and weaknesses of this large world modeling approach against traditional AV stack development, showing that large models offer great advantages in simplified architecture, end-to-end optimization in a high-dimensional space, and extraordinary predictive power, but they do so at the cost of far higher parameter counts that pose multiple engineering challenges. With this in mind, we review several promising techniques for overcoming these engineering challenges in order to make the development and deployment of these large models feasible. Finally, we reflect on our findings to conclude that while large world models are favorably situated to become the long-term winner, the lessons learned from traditional methods will still be relevant in maximizing their success. We close with a discussion highlighting some promising directions for future work in this exciting domain.

Multi-task Learning in Computer Vision and AVs

Multi-task learning (MTL) is an area that has seen substantial research focus, often described as a major step towards human-like reasoning in artificial intelligence (AI). As outlined in Michael Crawshaw’s comprehensive survey on the subject, MTL involves training a model on multiple tasks simultaneously, allowing it to leverage shared information across these tasks. This approach is not only beneficial in terms of computational efficiency but also leads to improved task performance due to the complementary nature of the learned features. Crawshaw’s survey emphasizes that MTL models often outperform their single-task counterparts by learning more robust and generalized representations.
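To make the shared-trunk idea concrete, here is a minimal sketch in plain Python using tiny hand-rolled linear layers. The layer sizes, task names, and the weighted-sum loss are illustrative assumptions, not the architecture of Multiformer or of any model covered in Crawshaw's survey. It shows trunk features being computed once and reused by every head, and compares the parameter count against training one full network per task.

```python
import random

def linear(n_in, n_out, rng):
    """Weight matrix (n_out rows of n_in weights) for a bias-free linear layer."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]

def matvec(weights, x):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(v):
    return [max(0.0, a) for a in v]

def n_params(weights):
    return sum(len(row) for row in weights)

rng = random.Random(0)
IN_DIM, FEAT_DIM = 16, 8  # hypothetical sizes

# One shared trunk plus one small head per task (task names are hypothetical).
trunk = linear(IN_DIM, FEAT_DIM, rng)
heads = {
    "segmentation": linear(FEAT_DIM, 4, rng),
    "depth": linear(FEAT_DIM, 1, rng),
    "detection": linear(FEAT_DIM, 6, rng),
}

def forward(x):
    """Compute trunk features once, then reuse them for every task head."""
    features = relu(matvec(trunk, x))
    return {task: matvec(head, features) for task, head in heads.items()}

def multitask_loss(per_task_losses, weights):
    """Weighted sum of task losses: a common, though not the only, way to combine them."""
    return sum(weights[t] * per_task_losses[t] for t in per_task_losses)

x = [rng.uniform(-1, 1) for _ in range(IN_DIM)]
outputs = forward(x)

# Shared trunk: trunk counted once. Separate models: trunk duplicated per task.
shared_params = n_params(trunk) + sum(n_params(h) for h in heads.values())
separate_params = sum(n_params(trunk) + n_params(h) for h in heads.values())
print(shared_params, separate_params)
```

In a real perception model the trunk dominates the parameter budget far more than in this toy, so the savings from sharing it grow accordingly.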

We believe that MTL reflects the learning process of human beings more accurately than single-task learning in that integrating knowledge across domains is a central tenet of human intelligence. When a newborn baby learns to walk or use its hands, it accumulates general motor skills which rely on abstract notions of balance and intuitive physics. Once these motor skills and abstract concepts are learned, they can be reused and augmented for more complex tasks later in life, such as riding a bike or tightrope walking.

The benefits of MTL are particularly relevant in the context of AVs, which require real-time inference of multiple related vision tasks to make safe navigation decisions. MultiNet is a prime example of an MTL model designed for AVs, combining tasks like road segmentation, object detection, and classification within a unified architecture. The integration of MTL in AVs brings notable advantages like higher framerate and reduced memory footprint, crucial for the varying scales of autonomous robotics.

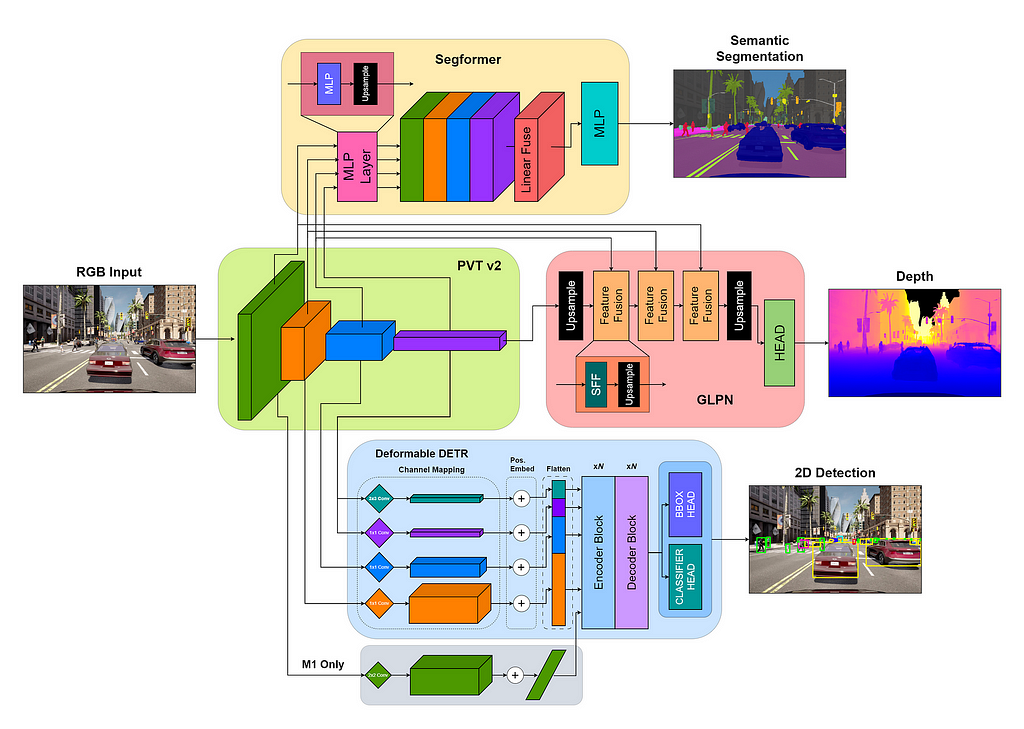

Transformer-based networks such as Vision Transformer (ViT) and its derivatives have shown incredible descriptive capacity in computer vision, and the fusion of transformers with convolutional architectures in the form of hierarchical transformers like the Pyramid Vision Transformer v2 (PVTv2) has proven particularly potent and easy to train, consistently outperforming ResNet backbones with fewer parameters in recent models like Segformer, GLPN, and Panoptic Segformer. Motivated by the desire for a powerful yet lightweight perception module, Multiformer combines the complementary strengths offered by MTL and the descriptive power of hierarchical transformers to achieve adept simultaneous performance on semantic segmentation, depth estimation, and 2D object detection with just over 8M (million) parameters, and is readily extensible to panoptic segmentation.

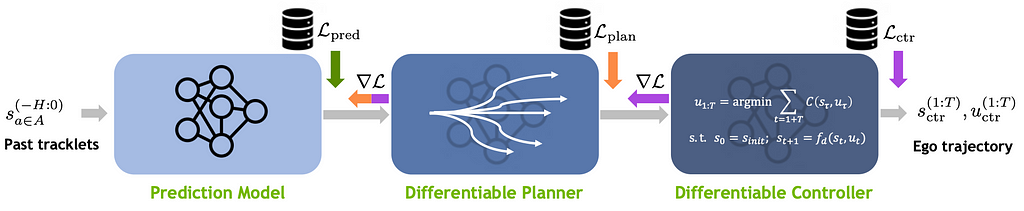

Building a full autonomy stack, however, requires more than just a perception module. We also need to plan and execute actions, so we need to add a planning and control module which can use the outputs of the perception stack to accurately track and predict the states of the ego and its environment in order to send commands that represent safe driving actions. One promising option for this is Nvidia’s DiffStack, which offers a trainable yet interpretable combination of trajectory forecasting, path planning, and control modeling. However, this module requires 3D agent poses as an input, which means our perception stack must generate them. Fortunately, there are algorithms available for 3D object detection, particularly when accurate depth information is available, but our object tracking is going to be extremely sensitive to our accuracy and temporal consistency on this difficult task, and any errors will propagate and diminish the quality of the downstream motion planning and control.

Indeed, the traditional modular paradigm of autonomy stacks, with its distinct stages from sensor input through perception, planning, and control, is inherently susceptible to compounding errors. Each stage in the sequence is reliant on the accuracy of the preceding one, which makes the system vulnerable to a cascade of errors, and impedes end-to-end error correction through crystallization of intermediary information. On the other hand, the modular approach is more interpretable than an end-to-end system since the intermediary representations can be understood and diagnosed. It is for this reason that end-to-end systems have often been avoided, seen as “black box” solutions with an unacceptable lack of interpretability for a safety-critical application of AI like autonomous navigation. But what if the interpretability issue could be overcome? What if these black boxes could explain the decisions they made in plain English, or any other natural language? Enter the era of LMMs in autonomous robotics, where this vision is not some distant dream, but a tangible reality.
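A quick back-of-the-envelope calculation illustrates why serial pipelines compound error. The sketch below assumes each stage independently passes a usable result downstream with some probability; the per-stage numbers are invented for illustration, and real failures are rarely independent.

```python
from math import prod

def chain_reliability(stage_reliabilities):
    """Probability that every stage in a serial pipeline succeeds,
    under the simplifying assumption that stage failures are independent."""
    return prod(stage_reliabilities)

# Hypothetical per-stage reliabilities, for illustration only.
stages = {"perception": 0.95, "tracking": 0.95, "planning": 0.95, "control": 0.95}
overall = chain_reliability(stages.values())
print(f"end-to-end reliability: {overall:.3f}")
```

Even with every stage at 95%, the chain as a whole lands at roughly 81% under these assumptions, which is the intuition behind the cascade described above.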

Autoregressive Transformers and The Rise of LLMs

In what turned out to be one of the most impactful research papers of our time, Vaswani et al. introduced the transformer architecture in 2017 with “Attention is All You Need,” revolutionizing sequence-to-sequence (seq2seq) modeling with their proposed attention mechanisms. These innovative modules overcame the weaknesses of the previously favored RNNs by effectively capturing long-range dependencies in sequences and allowing more parallelization during computation, leading to substantial improvements in various seq2seq tasks. A year later, Google’s Bidirectional Encoder Representations from Transformers (BERT) strengthened transformer capabilities in NLP by introducing a bidirectional pretraining objective using masked language modeling (MLM) to fuse both the left and right contexts, encoding a more nuanced contextual understanding of each token, and empowering a variety of language tasks like sentiment analysis, question answering, machine translation, text summarization, and more.
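The attention mechanism at the heart of the transformer can be sketched in a few lines. What follows is a minimal single-head version of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, written in plain Python for readability rather than speed; it omits the learned projections and multi-head structure of the full architecture. The optional `causal` flag is an addition for illustration, showing the masking used by decoder-only language models.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors, one per sequence position.
    With causal=True, position i may only attend to positions <= i.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs

# Self-attention over a toy 3-token sequence of 2-d embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
full = attention(x, x, x)
masked = attention(x, x, x, causal=True)
```

Because every position attends to every other position in one step, nothing has to be carried through a recurrent state, which is where the long-range modeling and parallelization benefits come from.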

In mid-2018, researchers at OpenAI demonstrated training a causal decoder-only transformer to work on byte pair encoded (BPE) text tokens with the Generative Pretrained Transformer (GPT). They found that pretraining on a self-supervised autoregressive language modeling task using large corpora of unlabeled text data, followed by task-specific fine-tuning with task-aware input transformations (and architectural modifications when necessary), produced models which significantly improved on the state of the art across a variety of language tasks.

While the task-aware input transformations in the token space used by GPT-1 can be considered an early form of “prompt engineering,” the term most widely refers to the strategic structuring of text to elicit multi-task behavior from language models demonstrated by researchers from Salesforce in 2018 with their influential Multitask Question Answering Network (MQAN). By framing tasks as strings of text with distinctive formatting, the authors trained a single model with no task-specific modules or parameters to perform well at a set of ten NLP tasks which they called the “Natural Language Decathlon” (decaNLP).

In 2019, OpenAI found that by adopting this form of prompt engineering at inference time, GPT-2 elicited promising zero-shot multi-task performance that scaled log-linearly with the size of the model and dataset. While these task prompt structures were not explicitly included in the training data the way they were for MQAN, the model was able to generalize knowledge from structured language that it had seen before to complete the task at hand. The model demonstrated impressive unsupervised multi-task learning with 1.5B parameters (up from 117M in GPT), indicating that this form of language modeling posed a promising path toward generalizable AI, and raising ethical concerns for the future.

Google Research open-sourced the text-to-text transfer transformer (T5) in late 2019, with model sizes ranging up to 11B parameters. While also built with an autoregressive transformer, T5 represents natural language problems in a unified text-to-text framework using the full transformer architecture (complete with the encoder), differing from the next token prediction task of GPT-style models. While this text-to-text framework is a strong choice for applications requiring more control over task training and expected outputs, the next token prediction scheme of GPT-style models became favored for its task-agnostic training and freeform generation of long coherent responses to user inputs.

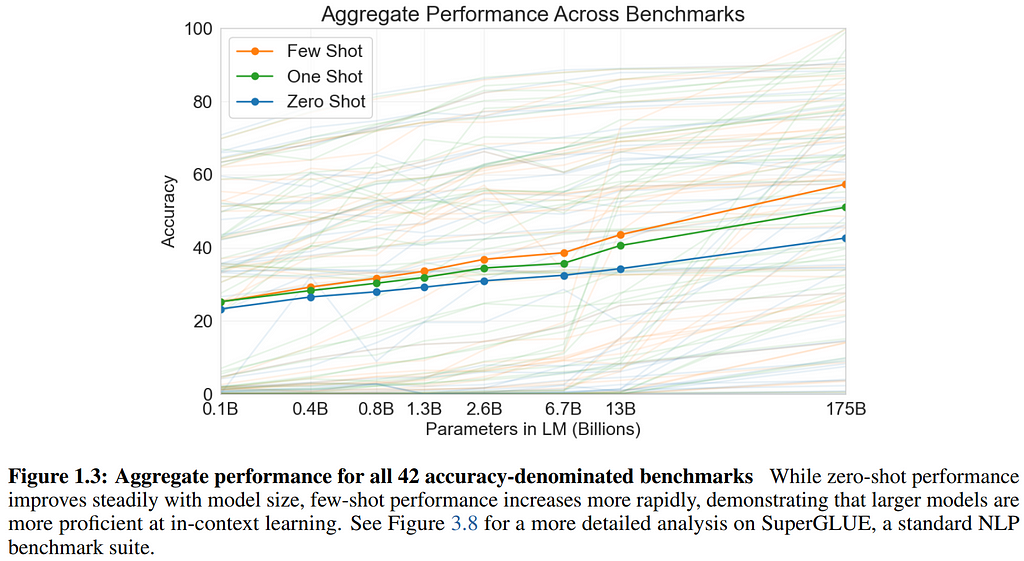

Then in 2020, OpenAI took model and data scaling to unprecedented heights with GPT-3, and the rest is history. In their paper titled “Language Models are Few-Shot Learners,” the authors define a “few-shot” transfer paradigm where they provide whatever number of examples for an unseen task (formulated as natural language) will fit into the model’s context before the final open-ended prompt of this task for the model to complete. They contrast this with “one-shot,” where one example is provided in context, and “zero-shot,” where no examples are provided at all. The team found that performance on all three evaluation methods continued to scale all the way to 175B parameters, a historic step change in published model sizes. This behemoth achieved generalist few-shot learning and text generation abilities approaching the level of humans, prompting mainstream attention, and spurring concerns for the future implications of this trend in AI research. Those concerned could find temporary solace in the fact that at these scales, training and fine-tuning of these models had been placed out of reach of all but the largest outfits, but this would surely change.
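The difference between zero-, one-, and few-shot prompting comes down to how many solved examples precede the open-ended query in the model's context. The helper below is a small sketch of that idea; the function name and the "Q:/A:" layout are illustrative conventions, not the exact formatting used in the GPT-3 paper.

```python
def build_prompt(task_description, examples, query, k):
    """Assemble a k-shot prompt: k solved examples followed by an open-ended query.

    k=0 gives a zero-shot prompt, k=1 one-shot, and larger k few-shot.
    """
    lines = [task_description]
    for question, answer in examples[:k]:
        lines.append(f"Q: {question}\nA: {answer}")
    lines.append(f"Q: {query}\nA:")  # left open for the model to complete
    return "\n\n".join(lines)

examples = [("cheese", "fromage"), ("dog", "chien"), ("house", "maison")]
task = "Translate English to French."

zero_shot = build_prompt(task, examples, "cat", k=0)
one_shot = build_prompt(task, examples, "cat", k=1)
few_shot = build_prompt(task, examples, "cat", k=3)
print(few_shot)
```

No weights are updated in any of the three settings; the examples only condition the model's next-token predictions.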

Groundbreaking on many fronts, GPT-3 also marked the end of OpenAI’s openness, the first of its closed-source models. Fortunately for the research community, the wave of open-source LLM research had already begun. EleutherAI released a popular series of large open-source GPT-3-style models starting with GPT-Neo 2.7B and GPT-J 6B in 2021, and continuing on to GPT-NeoX 20B in 2022, with the latter giving GPT-3.5 DaVinci a run for its money in the benchmarks (all are available in huggingface/transformers).

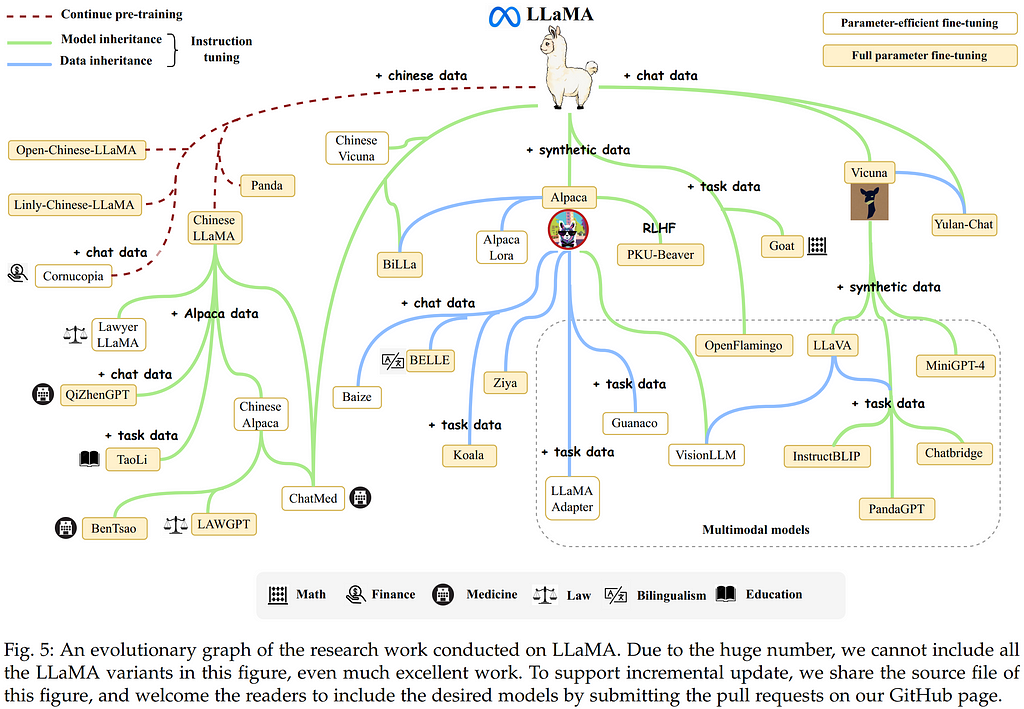

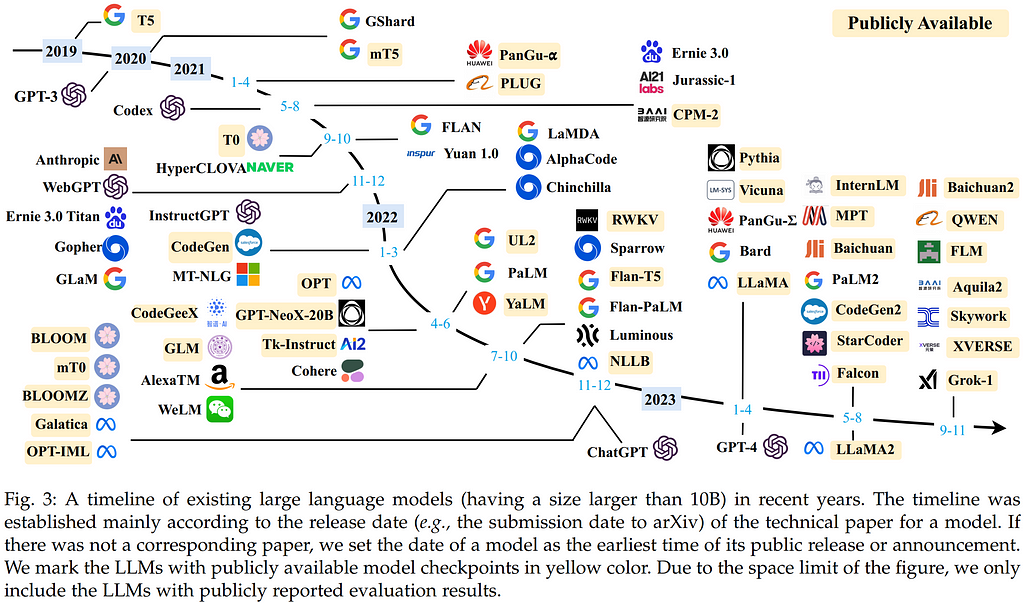

The following years marked a Cambrian Explosion of transformer-based LLMs. A supernova of research interest has produced a breathtaking list of publications for which a full review is well outside the scope of this article, but I refer the reader to Zhao et al. 2023 for a comprehensive survey. A few key developments deserving mention are, of course, OpenAI’s release of GPT-4, along with Meta AI’s open-source release of the fecund LLaMA, the potent Mistral 7B model, and its mixture-of-experts (MoE) version, Mixtral 8x7B, all in 2023. It is widely believed that GPT-4 is an MoE system, and the power demonstrated by Mixtral 8x7B (outperforming LLaMA 2 70B on most benchmarks with 6x faster inference) provides compelling evidence.

For a concise visual summary of the LLM Big Bang over the past years, it is helpful to borrow once more from the powerful Zhao et al. 2023 survey. Keep in mind this chart only includes models over 10B parameters, so it misses some important smaller models like Mistral 7B. Still, it provides a useful visual anchor for recent developments, as well as a testament to the amount of research momentum that formed after T5 and GPT-3.

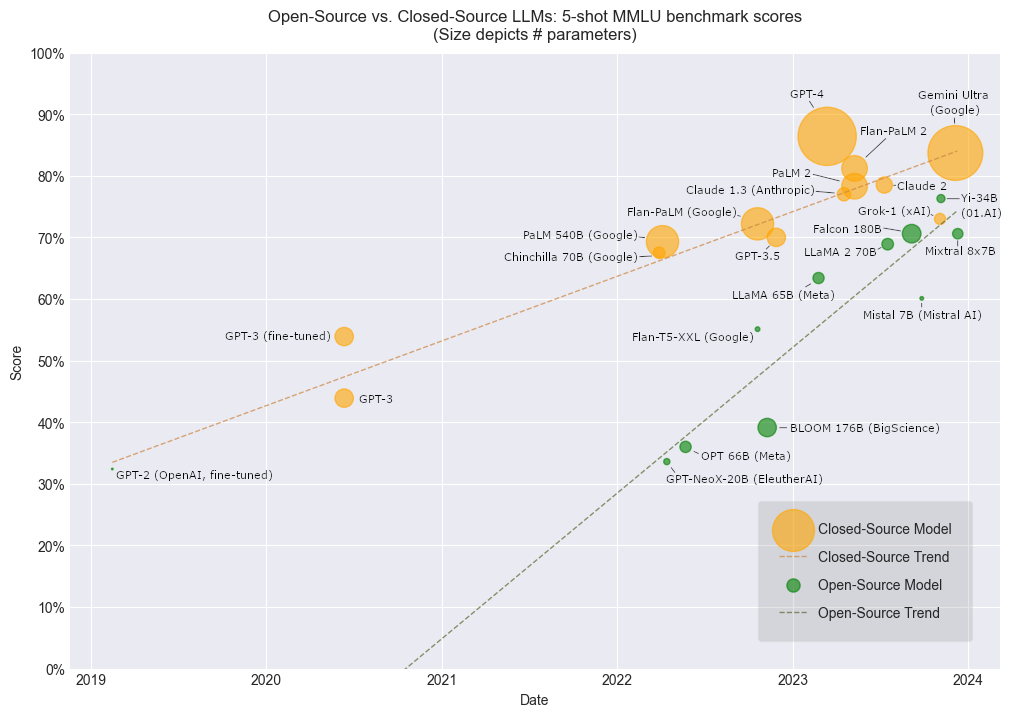

It is worth noting that while open-source LLMs have understandably lagged behind private models in terms of performance, that gap is narrowing over time, and open models seem poised to catch up in the near future. It would appear there’s no time like the present to become familiarized with the integration of LLMs into our work.

The Era of Large Multimodal Models

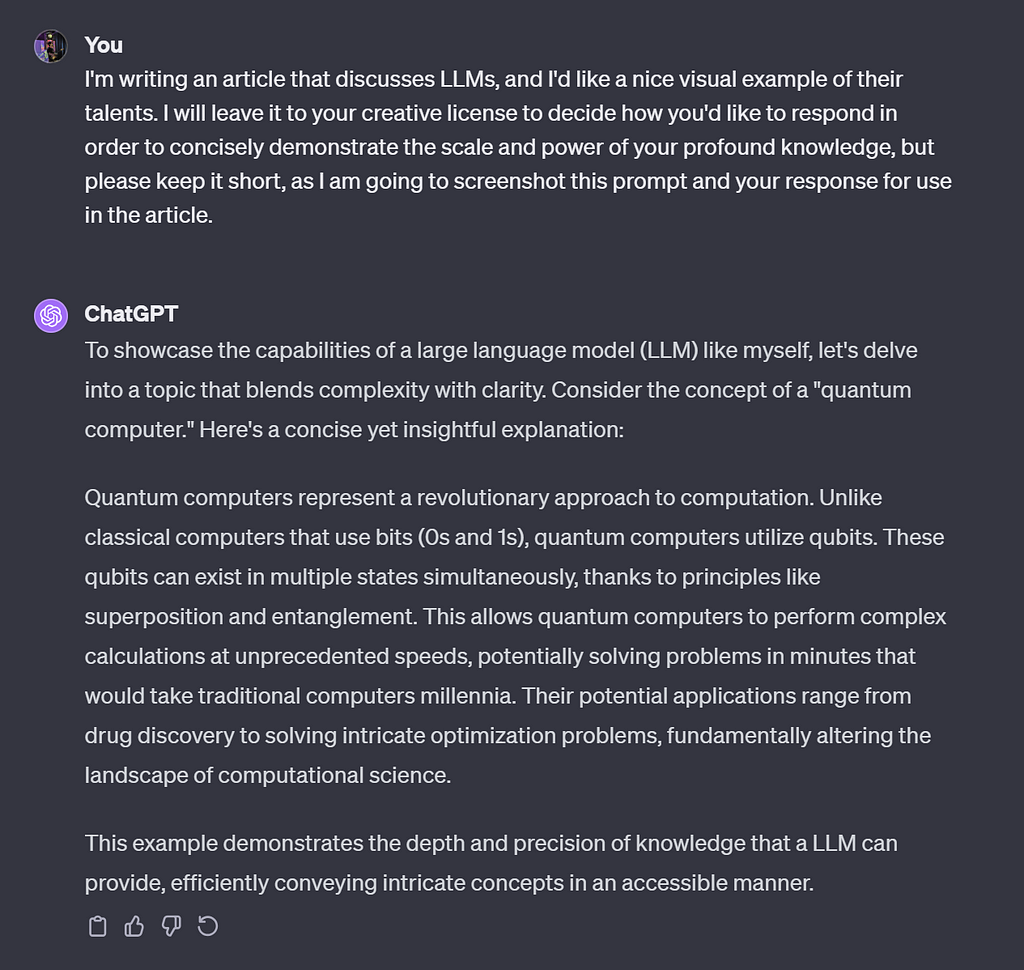

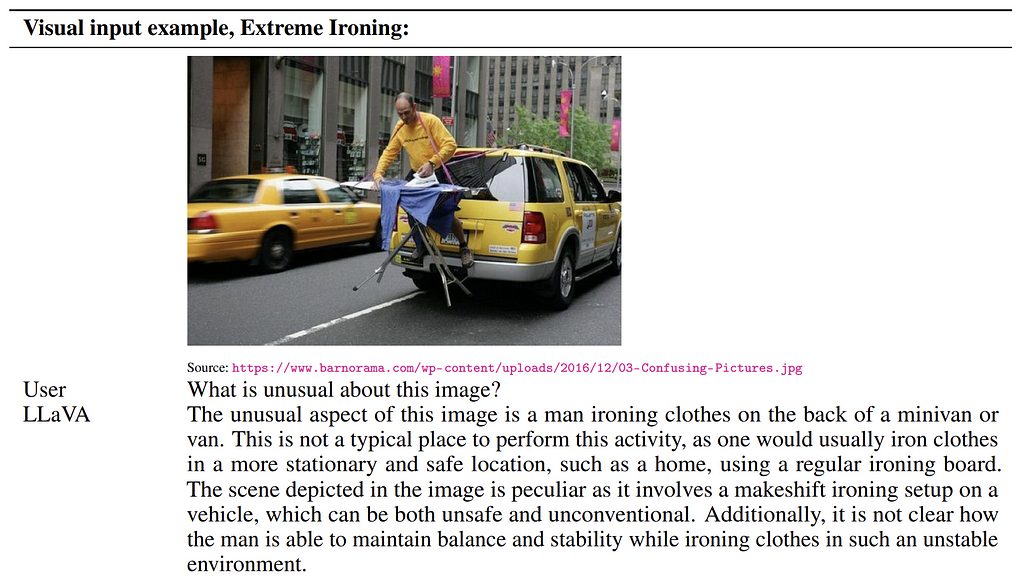

Expanding on the resounding success of LLMs, the most recent era in artificial intelligence has seen the advent of LMMs, representing a paradigm shift in how machines understand and interact with the world. These large models can take multiple modalities of data as input, return multiple modalities of data as output, or both, by learning a shared embedding space across these data modalities and sequence modeling that space using LLMs. This allows LMMs to perform groundbreaking feats like visual question answering using natural language, as shown in this demonstration of the Large Language and Vision Assistant (LLaVA):

A significant stride in visual-language pretraining (VLP), OpenAI’s Contrastive Language-Image Pre-training (CLIP) unlocked a new level of possibilities in 2021 when it established a contrastive method for learning a shared visual and language embedding space, allowing images and text to be represented in a mutual numeric space and matched based on cosine similarity scores. CLIP set off a revolution in computer vision when it was able to beat the state-of-the-art on several image classification benchmarks in a zero-shot fashion, surpassing expert models that were trained using supervision, and creating a surge of research interest in zero-shot classification. While it stopped short of capabilities like visual question answering, training CLIP produces an image encoder that can be removed and paired with an LLM to create an LMM. For example, the LLaVA model (seen demonstrated above) encodes images into the multimodal embedding space using a pretrained and frozen CLIP image encoder, and DeepMind’s Flamingo similarly relies on a frozen vision encoder pretrained with a CLIP-style contrastive objective.
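Zero-shot classification in a shared embedding space reduces to a nearest-neighbor lookup by cosine similarity. The sketch below uses hand-picked 3-d vectors as stand-ins for encoder outputs; the labels and values are invented for illustration, and real CLIP embeddings are much higher-dimensional and come from trained image and text encoders.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_embedding, text_embeddings):
    """Return the label whose text embedding is most similar to the image embedding."""
    scores = {label: cosine_similarity(image_embedding, emb)
              for label, emb in text_embeddings.items()}
    return max(scores, key=scores.get), scores

# Toy vectors standing in for text-encoder outputs of candidate captions.
text_embeddings = {
    "a photo of a car": [0.9, 0.1, 0.0],
    "a photo of a pedestrian": [0.1, 0.9, 0.1],
    "a photo of a traffic light": [0.0, 0.2, 0.9],
}
# Toy vector standing in for the image-encoder output of a query image.
image_embedding = [0.8, 0.3, 0.1]

label, scores = zero_shot_classify(image_embedding, text_embeddings)
print(label)
```

Since the candidate classes are just text, the label set can be changed at inference time without retraining, which is what makes the approach zero-shot.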

*Note* — terminology for LMMs is not entirely consistent. Although “LMM” seems to have become the most popular, these models are referred to elsewhere as MLLMs, or even MM-LLMs.

Image embeddings generated by these pretrained CLIP encoders can be interleaved with text embeddings in an autoregressive transformer language model. AudioCLIP added audio as a third modality to the CLIP framework to beat the state-of-the-art in the Environmental Sound Classification (ESC) task. Meta AI’s influential ImageBind presents a framework for learning to encode joint embeddings across six data modalities: image, text, audio, depth, thermal, and Inertial Measurement Unit (IMU) data, but shows that emergent alignment across all modalities occurs by aligning each of them with the images only, underscoring the rich semantic content of images (a picture really is worth a thousand words). PandaGPT combined the multimodal encoding scheme of ImageBind with the Vicuna LLM to create an LMM which understands data input in these six modalities, but like the other models mentioned so far, is limited to text output only.

Image is perhaps the most versatile format for model inputs, as it can be used to represent text, tabular data, audio, and to some extent, videos. There’s also so much more visual data than text data. We have phones/webcams that constantly take pictures and videos today.

Text is a much more powerful mode for model outputs. A model that can generate images can only be used for image generation, whereas a model that can generate text can be used for many tasks: summarization, translation, reasoning, question answering, etc.

— Keen summary of data modality strengths from Huyen’s “Multimodality and Large Multimodal Models (LMMs)” (2023).

In fact, the majority of research in LMMs has only offered unimodal language output, with the development of models returning data in multiple modalities lagging by comparison. Those works which have sought to provide multimodal output have predominantly guided the generation in the other modalities using decoded text from the LLM (e.g. when prompted for an image, GPT-4 will generate a specialized prompt in natural language and pass this to DALL-E 3, which then creates the image for the user), and this inherently introduces risk for cascading error and prevents end-to-end tuning. NExT-GPT seeks to address this issue, designing an all-to-all LMM that can be trained end-to-end. On the encoder side, NExT-GPT uses the ImageBind framework mentioned above. For guiding decoding across the 6 modalities, the LMM is fine-tuned on a custom-made modality-switching instruction tuning dataset called MosIT, learning to generate special modality signal tokens which serve as instructions to the decoding process. This allows for the handling of data output modality switching to be learned end-to-end.

GATO, developed by DeepMind in 2022, is a generalist agent that epitomizes the remarkable versatility of LMMs. This singular system demonstrated an unprecedented ability to perform a wide array of 604 distinct tasks, ranging from Atari games to complex control tasks like stacking blocks with a real robot arm, all within a unified learning framework. The success of GATO is a testament to the potential of LMMs to emulate human-like adaptability across diverse environments and tasks, inching closer to the elusive goal of artificial general intelligence (AGI).

World Models in the Era of LMMs

Deep Reinforcement Learning (RL) is a popular and well-studied approach to solving complex problems in robotics, first demonstrating superhuman capability in Atari games, then later beating the world’s top players of Go (a famously challenging game requiring long-term strategy). Traditional deep RL algorithms are generally classified as either a model-free or model-based approach, although recent work blurs this line through framing RL as a large sequence modeling problem using large transformer models, following the successful trend in NLP and computer vision.

While demonstrably effective and easier to design and implement than model-based approaches, model-free RL approaches are notoriously more sample inefficient, requiring far more interactions with an environment to learn a task than humans do. Model-based RL approaches require fewer interactions by learning to model how the environment changes given previous states and actions. These models can be used to anticipate future states of the environment, but this adds a failure mode to RL systems, since they must depend on the accuracy and feasibility of this modeling. There is a long history of using neural networks to learn dynamics models for training RL policies, dating back to the 1980s using feed-forward networks (FFNs), and to the 1990s with RNNs, with the latter becoming the dominant approach thanks to their ability to model and predict over multi-step time horizons.

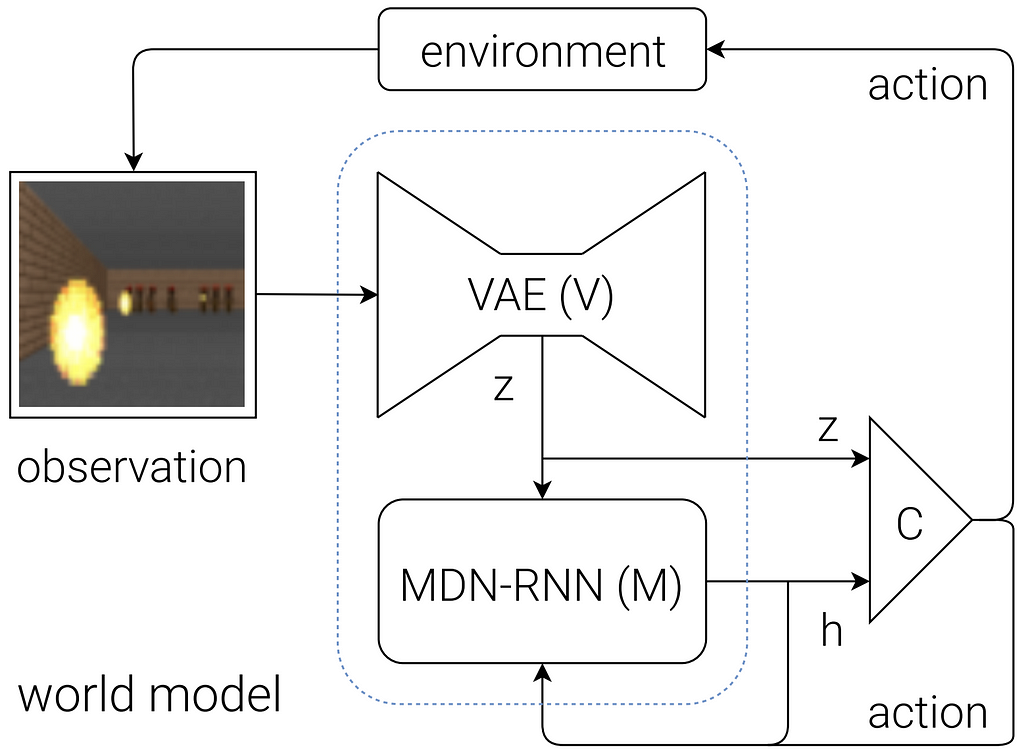

In 2018, Ha & Schmidhuber released a pivotal piece of research called “Recurrent World Models Facilitate Policy Evolution,” in which they demonstrated the power of expanding environment modeling past mere dynamics, instead modeling a compressed spatiotemporal latent representation of the environment itself using the combination of a convolutional variational autoencoder (CVAE) and a large RNN, together forming the so-called “world model.” The policy is trained completely within the representations of this world model, and since it is never exposed to the true environment, a reliable world model can be sampled from to simulate imaginary rollouts from its learned understanding of the world, supplying effective synthetic examples for further training of the policy. This makes policy training far more data efficient, which is a huge advantage for practical applications of RL in real world domains for which data collection and labeling is resource-intensive.

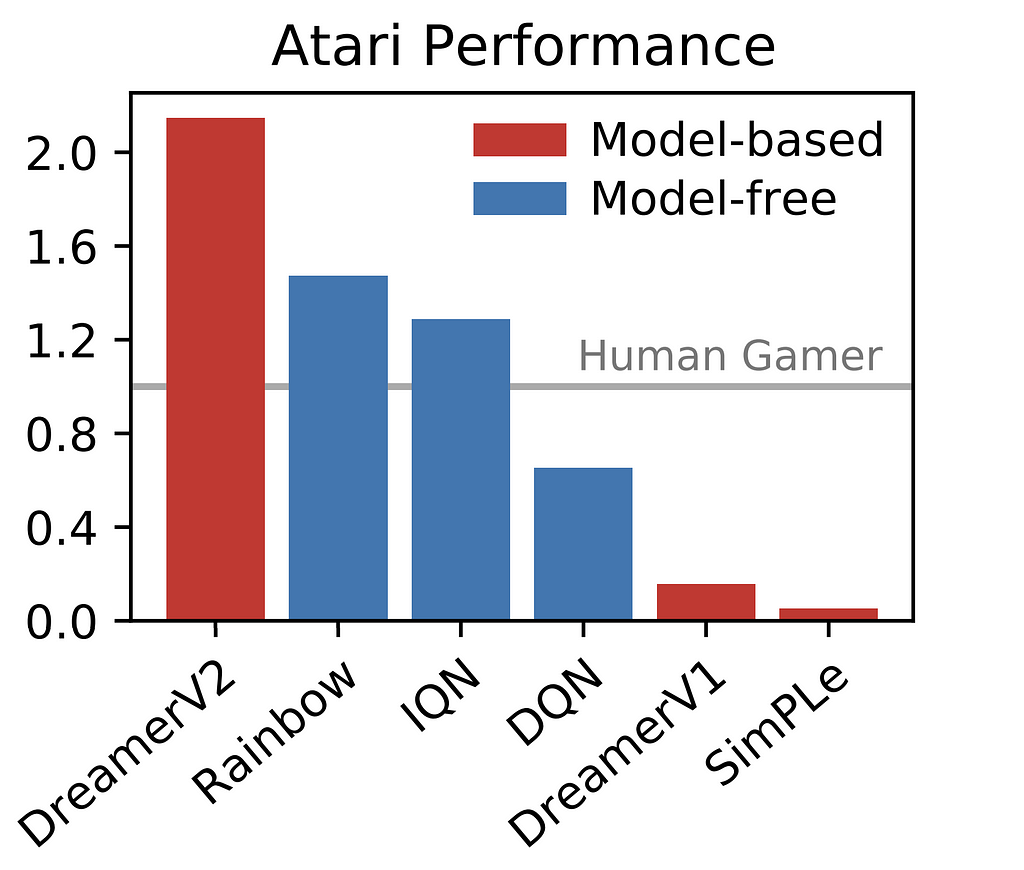

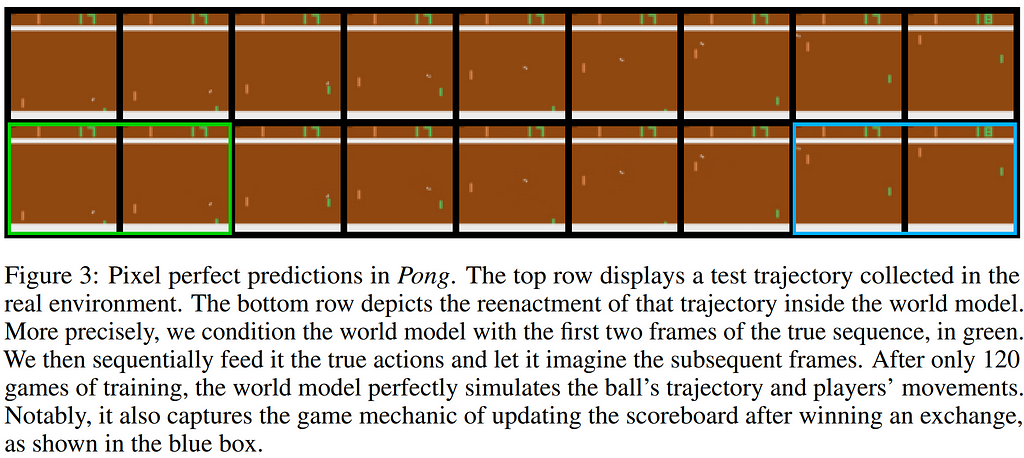
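The idea of training a policy inside a world model's "imagination" can be sketched as a rollout loop in which the real environment supplies only the first observation. The three functions below are deliberately trivial stand-ins (a scalar latent, a hand-written dynamics rule, a proportional controller), not the VAE, RNN, or evolved controller of Ha & Schmidhuber; every name and constant is an assumption made for illustration.

```python
import random

def encode(observation):
    """Stand-in for the VAE encoder: compress an observation to a scalar latent z."""
    return sum(observation) / len(observation)

def dynamics(z, h, action, rng):
    """Stand-in for the recurrent model: predict next latent, hidden state, and reward.
    A learned world model would sample z from a predicted distribution instead."""
    h_next = 0.9 * h + 0.1 * z
    z_next = z + action + rng.gauss(0.0, 0.01)
    reward = -abs(z_next)  # toy reward: keep the latent near zero
    return z_next, h_next, reward

def policy(z, h, gain):
    """Stand-in controller acting only on model state (h is unused in this toy)."""
    return -gain * z

def imagine_rollout(first_observation, gain, horizon, seed=0):
    """Roll the policy forward entirely inside the model; the real
    environment is consulted only for the initial observation."""
    rng = random.Random(seed)
    z, h, total = encode(first_observation), 0.0, 0.0
    for _ in range(horizon):
        action = policy(z, h, gain)
        z, h, reward = dynamics(z, h, action, rng)
        total += reward
    return total

# Compare two candidate policies using imagined experience only.
active = imagine_rollout([1.0, 1.0], gain=0.5, horizon=10)
passive = imagine_rollout([1.0, 1.0], gain=0.0, horizon=10)
print(active, passive)
```

Because candidate policies are scored without touching the real environment, the quality of the resulting policy is bounded by how faithful the model is, which is the failure mode noted earlier for model-based approaches.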

This enticing concept of learning in the imagination of world models has since caught on. Simulated Policy Learning (SimPLe) took advantage of this paradigm to train a PPO policy inside a video prediction model to achieve state of the art in Atari games using only two hours of real-time gameplay experience. DreamerV2 (an improvement on Dreamer) became the first example of an agent learned in imagination to achieve superhuman performance on the Atari 50M benchmark (although requiring months of gameplay experience). The Dreamer algorithm also proved to be effective for online learning of real robotics control in the form of DayDreamer.

Although they initially proved challenging to train in RL settings, the alluring qualities of transformers invited their disruptive effects into yet another research field. There are a number of benefits to framing RL as a sequence modeling problem, namely the simplification of architecture and problem formulation, and the scalability of the data and model size offered by transformers. Trajectory Transformer is trained to predict future states, rewards, and actions, but is limited to low-dimensional states, while Decision Transformer can handle image inputs but only predicts actions.

Posing reinforcement learning, and more broadly data-driven control, as a sequence modeling problem handles many of the considerations that typically require distinct solutions: actor-critic algorithms…estimation of the behavior policy…dynamics models…value functions. All of these problems can be unified under a single sequence model, which treats states, actions, and rewards as simply a stream of data. The advantage of this perspective is that high-capacity sequence model architectures can be brought to bear on the problem, resulting in a more streamlined approach that could benefit from the same scalability underlying large-scale unsupervised learning results.

— Motivation provided in the introduction to Trajectory Transformer
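Treating a trajectory as "simply a stream of data" can be shown with a few lines of preprocessing. The sketch below interleaves return-to-go, state, and action per timestep, following the input ordering used by Decision Transformer; the string placeholders and tuple tagging are illustrative assumptions, and a real model would embed each element as tokens.

```python
def returns_to_go(rewards):
    """Sum of rewards from each timestep to the end of the episode."""
    out, running = [], 0.0
    for r in reversed(rewards):
        running += r
        out.append(running)
    return out[::-1]

def flatten_trajectory(states, actions, rewards):
    """Interleave (return-to-go, state, action) per timestep into one sequence."""
    sequence = []
    for rtg, s, a in zip(returns_to_go(rewards), states, actions):
        sequence += [("return_to_go", rtg), ("state", s), ("action", a)]
    return sequence

seq = flatten_trajectory(
    states=["s0", "s1", "s2"],
    actions=["a0", "a1", "a2"],
    rewards=[1.0, 0.0, 2.0],
)
for item in seq:
    print(item)
```

Once the trajectory is in this form, predicting the next action is the same next-element prediction problem that language models solve, conditioned here on the return the agent is asked to achieve.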

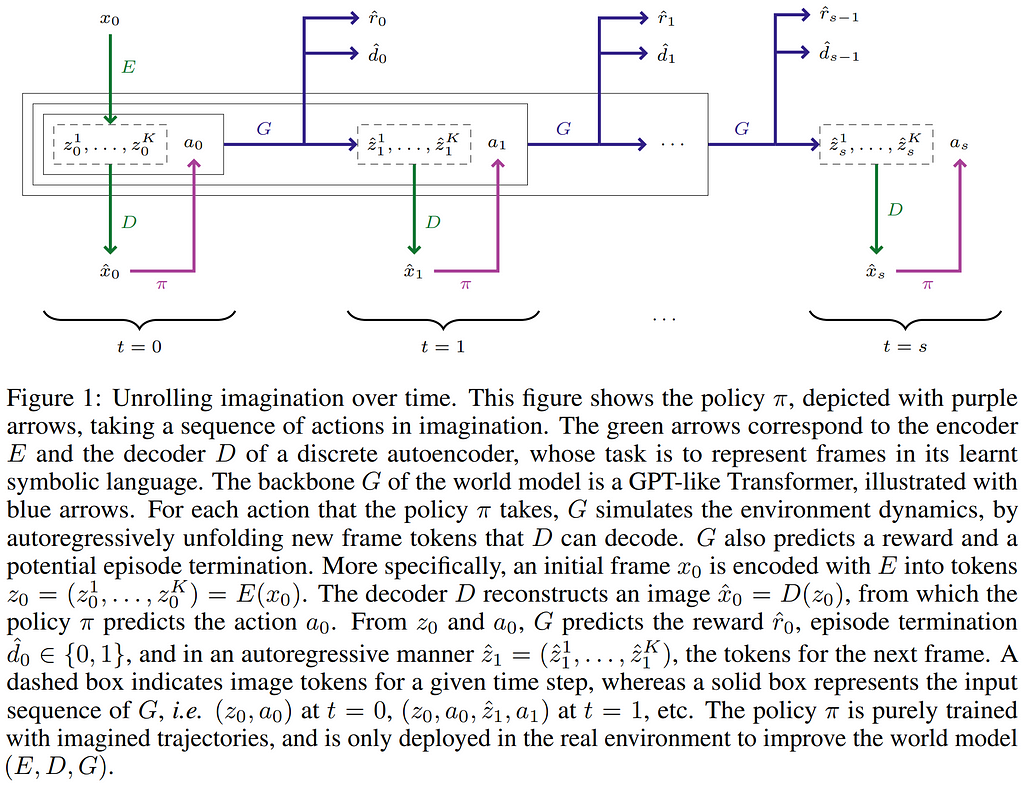

IRIS (Imagination with auto-Regression over an Inner Speech) is a recent open-source project which builds a generative world model that is similar in structure to VQGAN and DALL-E, combining a discrete autoencoder with a GPT-style autoregressive transformer. IRIS learns behavior by simulating millions of trajectories, using encoded image tokens and policy actions as inputs to the transformer to predict the next set of image tokens, rewards, and episode termination status. The predicted image tokens are decoded into an image which is passed to the policy to generate the next action, although the authors concede that training the policy on the latent space may result in better performance.

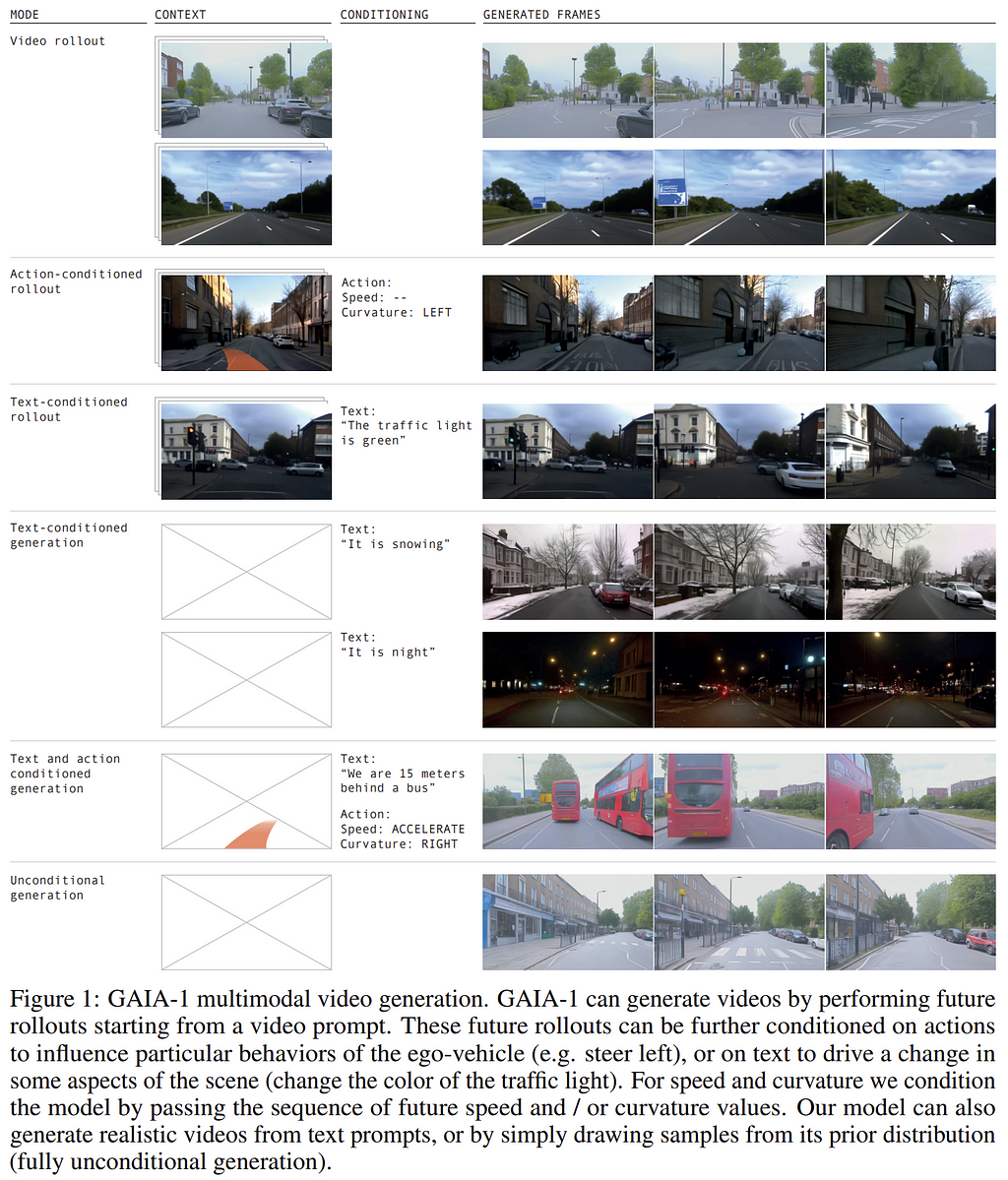

GAIA-1 by Wayve takes the autoregressive transformer world modeling approach to the next level by incorporating image and video generation using a diffusion decoder, as well as adding text conditioning as an input modality. This enables natural language guidance of the video generation at inference time, allowing for prompting specific scenarios like the presence of weather or agent behaviors such as the car straying from its lane. However, GAIA-1 is limited to image and video output, and future work should investigate multimodality in the output so that the model can explain what it sees and the actions it is taking, which has the potential to invalidate criticisms that end-to-end driving stacks are uninterpretable. Additionally, GAIA-1 generates action tokens in the latent space, but these are not decoded. Decoding these actions from the latent space would allow using the model for robotic control and improve interpretability. Further, the principles of ImageBind could be applied to expand the input data modalities (i.e. including depth) to potentially develop a more general internal world representation and better downstream generation.

In the context of these developments in world models, it’s important to acknowledge the potential disruptive impact of generative models like GAIA-1 on the field of synthetic data generation. As these advanced models become more adept at creating realistic, diverse datasets, they will revolutionize the way synthetic data is produced. Currently, the dominant approach to automotive synthetic data generation is to use simulation and physically-based rendering, typically within a game engine, to generate scenes with full control over the weather, map, and agents. Synscapes is a seminal work in this type of synthetic dataset generation, where the authors explore the benefits of engineering the data generation process to match the target domain as closely as possible in combating the deleterious effects of the synthetic-to-real domain gap on knowledge transfer.

While progress has been made in numerous ways to address it, this synthetic-to-real domain gap is an artifact of the synthetic data generation process and presents an ongoing challenge in the transferability of knowledge between domains, blocking the full potential of learning from simulation. Sampling synthetic data from a world model, however, is a fundamentally different approach and compelling alternative. Any gains in the model’s descriptive capacity and environmental knowledge will mutually benefit the quality of synthetic data produced by the model. This synthetic data is sampled directly from the model’s learned distribution, reducing any concerns over distribution alignment to be between the model and the domain being modeled, rather than involving a third domain that is affected by a completely different set of forces. As generative models continue to improve, it is conceivable that this type of synthetic data generation will supersede the complex and fundamentally disjoint generation process of today.

Navigating the Future: Multi-Task vs. Large World Models in Autonomous Systems

The landscape of autonomous navigation is witnessing an intriguing evolution in approaches to scene understanding, shaped by developments in both multi-task vision models and large world models. My own work, along with that of others in the field, has successfully leveraged multi-task models in perception modules, demonstrating their efficacy and efficiency. Concurrently, companies like Wayve are pioneering the use of large world models in autonomy, signaling a potential paradigm shift.

The compactness and data efficiency of multi-task vision models make them a natural choice for use in perception modules. By handling multiple vision tasks simultaneously, they offer a pragmatic solution within the traditional modular autonomy stack. However, in this design paradigm, such perception modules must be combined with downstream planning and control modules to achieve autonomous operation. This creates a series of complex components performing highly specialized problem formulations, a structure which is naturally vulnerable to compounding error. The ability of each module to perform well depends on the quality of information it receives from the previous link in this daisy-chained design, and errors appearing early in this pipeline are likely to get amplified.

While works like Nvidia’s DiffStack build towards differentiable loss formulations capable of backprop through distinct task modules to offer a best-of-both-worlds solution that is both learnable and interpretable by humans, the periodic crystallization of intermediary, human-interpretable data representations between modules is inherently a form of lossy compression that creates information bottlenecks. Further, chaining together multiple models accumulates their respective limitations in representing the world.

On the other hand, the use of LMMs as world models, illustrated by Wayve’s AV2.0 initiative, suggests a different trajectory. These models, characterized by their vast parameter spaces, propose an end-to-end framework for autonomy, encompassing perception, planning, and control. While their immense size poses challenges for training and deployment, recent advancements are mitigating these issues and making the use of large models more accessible.

As we look toward the future, it’s evident that the barriers to training and deploying large models are steadily diminishing. This ongoing progress in the field of AI is subtly yet significantly altering the dynamics between traditional task-specific models and their larger counterparts. While multi-task vision models currently hold an advantage in certain aspects like size and deployability, the continual advancements in large model training techniques and computational efficiency are gradually leveling the playing field. As these barriers continue to be lowered, we may witness a shift in preference towards more comprehensive and integrated models.

Bringing Fire to Mankind: Democratizing Large Models

Despite their impressive capabilities, large models pose significant challenges. The computational resources required for training are immense, raising concerns about environmental impact and accessibility, and creating a barrier to entry for research and development. Fortunately, there are several tools which can help us to bring the power of large foundation models (LFMs) down to earth: pruning, quantization, knowledge distillation, adapter modules, low-rank adaptation, sparse attention, gradient checkpointing, mixed precision training, and open-source components. This toolbox provides us with a promising recipe for concentrating the power obtained from large model training down to manageable scales.

One intuitive approach is to train a large model to convergence, remove the parameters which have minimal contribution to performance, then fine-tune the remaining network. This approach to network minimization via removal of unimportant weights to reduce the size and inference cost of neural networks is known as “pruning,” and goes back to the 1980s (see “Optimal Brain Damage” by LeCun et al., 1989). In 2017, researchers at Nvidia presented an influential method for network pruning which uses a Taylor expansion to estimate the change in loss function caused by removing a given neuron, providing a metric for its importance, and thus helping to identify which neurons can be pruned with the least impact on network performance. The pruning process is iterative, with a round of fine-tuning performed between each reduction in parameters, and repeated until the desired trade-off of accuracy and efficiency is reached.
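As a rough illustration of the Taylor-expansion idea, the sketch below scores each neuron by the absolute value of its batch-averaged activation-times-gradient product, which is a first-order estimate of how much the loss would change if that neuron's output were zeroed. The function names, the toy numbers, and the exact averaging order are assumptions made for this example; they are not taken from the Nvidia paper or its code.

```python
def taylor_importance(activations, gradients):
    """First-order Taylor score per neuron.

    activations[s][i]: output of neuron i on sample s.
    gradients[s][i]:   d(loss)/d(activation) for neuron i on sample s.
    Both inputs are hypothetical; in practice they come from a forward
    and backward pass over a batch.
    """
    n_samples = len(activations)
    n_neurons = len(activations[0])
    sums = [0.0] * n_neurons
    for acts, grads in zip(activations, gradients):
        for i, (a, g) in enumerate(zip(acts, grads)):
            sums[i] += a * g
    # Absolute value of the batch-averaged product.
    return [abs(s / n_samples) for s in sums]


def neurons_to_prune(scores, n_prune):
    """Indices of the n_prune lowest-scoring neurons."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(order[:n_prune])


# Toy batch: 2 samples, 3 neurons.
activations = [[1.0, 0.0, 2.0], [1.0, 0.1, -2.0]]
gradients = [[0.5, 3.0, 0.01], [0.5, -1.0, -0.01]]
scores = taylor_importance(activations, gradients)
pruned = neurons_to_prune(scores, n_prune=1)
```

In an iterative schedule, a step like this would alternate with a round of fine-tuning, as described above.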

Concurrently in 2017, researchers from Google released a seminal work in network quantization, providing an orthogonal method for shrinking the size of large pretrained models. The authors presented an influential 8-bit quantization scheme for both weights and activations (complete with training and inference frameworks) that was aimed at increasing inference speed on mobile CPUs by using integer-arithmetic-only inference. This form of quantization has been applied to LLMs to allow them to fit and perform inference on smaller hardware (see the plethora of quantized models offered by TheBloke on the Hugging Face hub).
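To make the idea concrete, here is a minimal sketch of affine 8-bit quantization, in which a real value r is approximated as scale * (q - zero_point) for an integer q in [0, 255]. It only shows the mapping between floats and integers, not the integer-arithmetic-only inference kernels, and the helper names and sample weights are assumptions for illustration.

```python
def choose_qparams(values, num_bits=8):
    """Pick a scale and zero point covering the value range.

    The range is widened to include 0.0 so that zero maps to an
    integer exactly.
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = int(round(qmin - lo / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, num_bits=8):
    """Map floats to unsigned integers, clamping to the valid range."""
    qmax = 2 ** num_bits - 1
    return [max(0, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Recover approximate floats from the integer codes."""
    return [scale * (q - zero_point) for q in q_values]


weights = [-1.0, -0.25, 0.0, 0.5, 1.5]  # hypothetical weights
scale, zero_point = choose_qparams(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

For values inside the covered range, the round-trip error is bounded by roughly half of one quantization step (scale / 2), which is the price paid for storing each weight in a single byte.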

Another method for condensing the capabilities of large, cumbersome models is knowledge distillation. It was in 2006 that researchers at Cornell University introduced the concept that would later come to be known as knowledge distillation in a work they called “Model Compression.” This work successfully explored the concept of training small and compact models to approximate the functions learned by large cumbersome experts (particularly large ensembles). The authors use these large experts to produce labels for large unlabeled datasets in various domains, and demonstrate that smaller models trained on the resulting labeled dataset performed better than equivalent models trained on the original training set for the task at hand. Moreover, they train the small model to target the raw logits produced by the large model, since their relative values contain much more information than either the hard class labels or the softmax probabilities, the latter of which compress details and gradients at the low end of the probability range.

Hinton et al. expanded on this concept and coined the term “distillation” in 2015 with “Distilling the Knowledge in a Neural Network,” training the small model to target the probabilities produced by the large expert rather than the raw logits, but increasing the temperature parameter in the final softmax layer to produce “a suitably soft set of targets.” The authors establish that this parameter provides an adjustable level of amplification for the fine-grained information at the low end of the probability range, and find that models with less capacity work better with lower temperatures to filter out some of the detail at the far low end of the logit values to focus the model’s limited capacity on higher-level interactions. They further demonstrate that using their approach with the original training set rather than a new large transfer dataset still worked well.
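The effect of temperature is easy to see in a few lines of code. The sketch below, with assumed function names and made-up logits, applies a temperature-scaled softmax to a teacher's logits and computes a soft-target cross-entropy between teacher and student. The T² factor follows the scaling Hinton et al. recommend when soft and hard targets are combined; the rest is a simplified illustration rather than their full training recipe.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature):
    """Cross-entropy between softened teacher and student distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    cross_entropy = -sum(p * math.log(q) for p, q in zip(p_teacher, p_student))
    # Scale by T^2 so gradient magnitudes stay comparable across temperatures.
    return cross_entropy * temperature ** 2


teacher_logits = [8.0, 2.0, 0.5]  # hypothetical teacher outputs
hard = softmax(teacher_logits, temperature=1.0)
soft = softmax(teacher_logits, temperature=4.0)
loss_matched = distillation_loss(teacher_logits, teacher_logits, 4.0)
loss_uniform = distillation_loss([0.0, 0.0, 0.0], teacher_logits, 4.0)
```

At T = 1 nearly all of the probability mass sits on the top class, while at T = 4 the relative ordering of the remaining classes becomes visible to the student.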

Fine-tuning large models on data generated by other large models is also a form of knowledge distillation. Self-Instruct proposed a data pipeline for using an LLM to generate instruction tuning data, and while the original paper demonstrated fine-tuning GPT-3 on its own outputs, Alpaca used this approach to fine-tune LLaMA using outputs from GPT-3.5. WizardLM expanded on the Self-Instruct approach by introducing a method to control the complexity level of the generated instructions called Evol-Instruct. Vicuna and Koala used real human/ChatGPT interactions sourced from ShareGPT for instruction tuning. In Orca, Microsoft Research warned that while smaller models trained to imitate the outputs of LFMs may learn to mimic the writing style of those models, they often fail to capture the reasoning skills that generated the responses. Fortunately, their team found that using system instructions (e.g. “think step-by-step and justify your response”) when generating examples in order to coax the teacher into explaining its reasoning as part of the responses provides the smaller model with an effective window into the mind of the LFM. Orca 2 then introduced prompt erasure to compel the smaller models to learn the appropriate reasoning strategy for a given instruction.

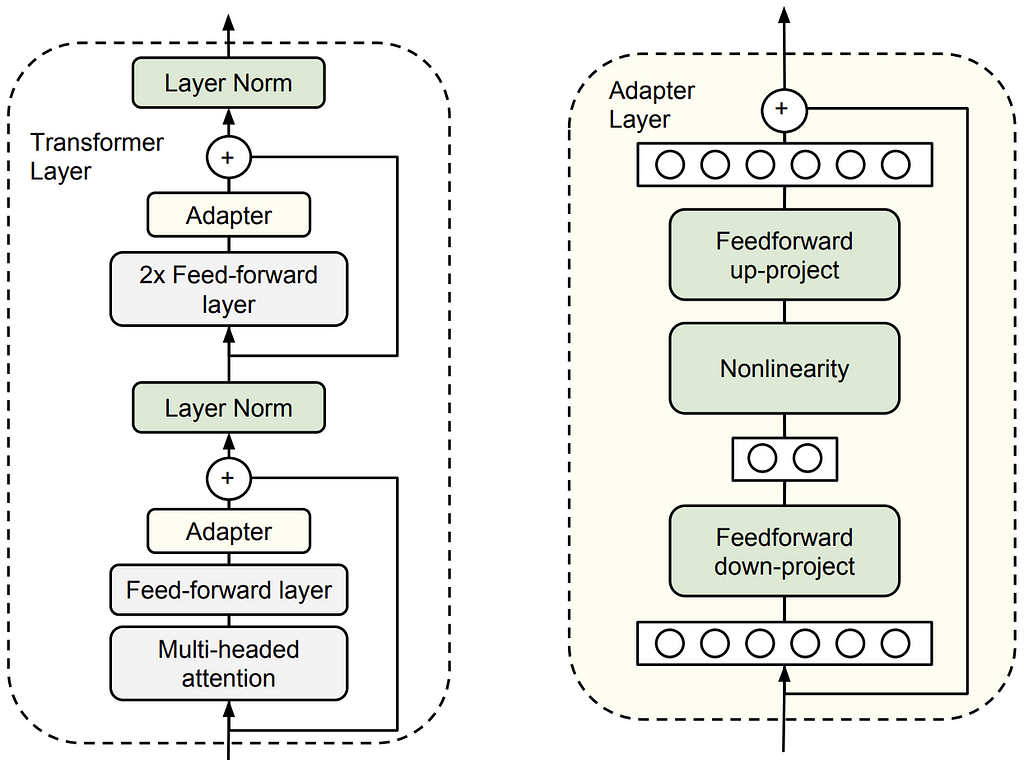

The methods described above all focus on condensing the power of large pretrained models down to manageable scales, but what about the accessible fine-tuning of these large models? In 2017, Rebuffi et al. introduced the power of adapter modules for model fine-tuning. These are small trainable matrices that can be inserted into pretrained and frozen computer vision models to adapt them to new tasks and domains quickly with few examples. Two years later, Houlsby et al. demonstrated the use of these adapters in NLP to transfer a pretrained BERT model to 26 diverse natural language classification tasks, achieving near state-of-the-art performance. Adapters enable the parameter-efficient fine-tuning of LFMs, and can be easily interchanged to switch between the resulting experts, rather than needing an entirely different model for each task, which would be prohibitively expensive to train and deploy.

In 2021, Microsoft Research improved on this concept, introducing a groundbreaking approach for training a new form of adapter with Low-Rank Adaptation (LoRA). Rather than insert adapter matrices into the model like credit cards, which slows down the model’s inference speed, this method learns weight delta matrices which can be combined with the frozen weights at inference time, providing a lightweight adapter for switching a base model between fine-tuned tasks without any added inference latency. They reduce the number of trainable parameters by representing the weight delta matrix with a low-rank decomposition into two smaller matrices A and B (whose product has the shape of the original weight matrix), motivated by their hypothesis (inspired by Aghajanyan et al., 2020) that the updates to the weights during fine-tuning have a low intrinsic rank.
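A small sketch may help show why merging the update removes the extra inference cost. It uses plain Python lists for a single linear layer with assumed toy dimensions, and an alpha / r scaling on the update as in the LoRA paper. B is filled with random values here purely to stand in for a trained adapter (at initialization it would be all zeros), and the helper names are hypothetical.

```python
import random


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def add_scaled(X, Y, scale):
    """Return X + scale * Y elementwise."""
    return [[x + scale * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def lora_forward(x, W0, A, B, alpha, r):
    """Adapter kept separate: h = W0 x + (alpha / r) * B (A x)."""
    return add_scaled(matmul(W0, x), matmul(B, matmul(A, x)), alpha / r)


def merge(W0, A, B, alpha, r):
    """Fold the low-rank update into the weights: W = W0 + (alpha / r) * B A."""
    return add_scaled(W0, matmul(B, A), alpha / r)


# Toy sizes, chosen only for illustration.
d, k, r, alpha = 8, 8, 2, 4.0
rng = random.Random(0)
W0 = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]  # frozen weights (d x k)
A = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(r)]   # trainable (r x k)
B = [[rng.gauss(0, 1) for _ in range(r)] for _ in range(d)]   # trainable (d x r)
x = [[rng.gauss(0, 1)] for _ in range(k)]                     # input column (k x 1)

h_adapter = lora_forward(x, W0, A, B, alpha, r)
h_merged = matmul(merge(W0, A, B, alpha, r), x)
trainable, full = r * (d + k), d * k  # adapter parameters vs. full weight matrix
```

The two outputs agree up to floating-point error, so a deployed model can use the merged matrix and pay nothing extra per forward pass, while the adapter itself stores only r * (d + k) values instead of d * k.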

Sparse Transformer further explores increasing the computational efficiency of transformers through two types of factorized self-attention. Notably, the authors also employ gradient checkpointing, a memory-saving method for training large networks by re-computing activations during backpropagation rather than storing them in memory. This method is especially effective for transformers modeling long sequences, since this scenario has a relatively large memory footprint given its cost to compute. This offers an attractive trade: a tolerable decrease in iteration speed for a substantial reduction in GPU footprint during training, allowing for training more transformer layers on longer sequence lengths than would otherwise be possible given any level of hardware constraints. To increase efficiency further, Sparse Transformer also uses mixed precision training, where the network weights are stored as single-precision floats, but the activations and gradients are computed in half-precision. This further reduces the memory footprint during training, and increases the trainable model size on a given hardware budget.
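The compute-for-memory trade behind gradient checkpointing can be illustrated with a toy chain of scalar layers. In the sketch below, the full version stores every layer input for the backward pass, while the checkpointed version keeps only the input to each segment and recomputes the rest when it is needed. The layer function, segment length, and activation-counting scheme are all assumptions made for this example and are not drawn from the Sparse Transformer code.

```python
import math


def layer(w, x):
    """Toy scalar layer."""
    return math.tanh(w * x)


def layer_grad(w, x):
    """Derivative of tanh(w * x) with respect to x."""
    return w * (1.0 - math.tanh(w * x) ** 2)


def grad_full(weights, x0):
    """Backprop while storing every activation from the forward pass."""
    xs = [x0]
    for w in weights:
        xs.append(layer(w, xs[-1]))
    grad = 1.0
    for w, x in zip(reversed(weights), reversed(xs[:-1])):
        grad *= layer_grad(w, x)
    return grad, len(xs)  # gradient, number of stored activations


def grad_checkpointed(weights, x0, segment):
    """Backprop storing only segment inputs, recomputing inside each segment."""
    checkpoints = {}
    x = x0
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = x  # keep only the input to each segment
        x = layer(w, x)
    grad, peak = 1.0, len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        seg_weights = weights[start:start + segment]
        xs = [checkpoints[start]]
        for w in seg_weights[:-1]:  # recompute the inputs inside this segment
            xs.append(layer(w, xs[-1]))
        peak = max(peak, len(checkpoints) + len(xs))
        for w, x in zip(reversed(seg_weights), reversed(xs)):
            grad *= layer_grad(w, x)
    return grad, peak


weights = [0.5 + 0.1 * i for i in range(8)]  # hypothetical 8-layer chain
g_full, stored_full = grad_full(weights, 0.3)
g_ckpt, stored_ckpt = grad_checkpointed(weights, 0.3, segment=4)
```

Both routes produce the same gradient, but the checkpointed version holds fewer activations at its peak in exchange for roughly one extra forward pass through each segment.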

Finally, a major (and perhaps somewhat obvious) tool for democratizing the development and application of large models is the release and utilization of pretrained open-source components. CLIP, the ubiquitous workhorse from OpenAI, is open-source with a commercially permissible license, as is LLaMA 2, the groundbreaking LFM release from Meta. Pretrained, open-source components like these consolidate most of the heavy lifting involved in developing LMMs, since these models generalize quickly to new tasks with fine-tuning, which we know is feasible thanks to the contributions listed above. Notably, NExT-GPT constructed their all-to-all LMM using nothing but available pretrained components and clever alignment learning techniques that only required training projections on the inputs and outputs of the transformer (1% of the total model weights). As long as the largest outfits maintain their commitments to open-source philosophy, smaller teams will continue to be able to efficiently make profound contributions.

As we’ve seen, despite the grand scale of the large models, there are a number of complementary approaches that can be utilized for their accessible fine-tuning and deployment. We can compress these models by distilling their knowledge into smaller models and quantizing their weights into integers. We can efficiently fine-tune them using adapters, gradient checkpointing, and mixed precision training. Open-source contributions from large research outfits continue at a respectable pace, and appear to be closing the gap with closed-source capabilities. In this climate, making the shift from traditional problem formulations into the world of large sequence modeling is far from a risky bet. A recent and illustrative success story in this regard is LaVIN, which converted a frozen LLaMA into an LMM using lightweight adapters with only 3.8M parameters trained for 1.4 hours, challenging the performance of LLaVA without requiring any end-to-end fine-tuning.

Synergizing Diverse AI Approaches: Combining Multi-Task and Large World Models

While LMMs offer unified solutions for autonomous navigation and threaten the dominant paradigm of modular AV stacks, they are also fundamentally modular under the hood, and the legacy of MTL can be seen cited in LMM research since the start. The spirit is essentially the same: capture a deep and general knowledge in a central network, and use task-specific components to extract the relevant knowledge for a specific task. In many ways, LMM research is an evolution of MTL. It shares the same visionary goal of developing generally capable models, and marks the next major stride towards AGI. Unsurprisingly then, the fingerprints of MTL are found throughout LMM design.

In modern LMMs, input data modalities are individually encoded into the joint embedding space before being passed through the language model, so there is flexibility in experimenting with these encoders. For example, the CLIP image encoders used in many LMMs are typically made with ViT-L (307M parameters), and little work has been done to experiment with other options. One contender could be the PVTv2-B5, which has only 82M parameters and scores just 1.5% lower on the ImageNet benchmark than the ViT-L. It is quite possible that hierarchical transformers like PVTv2 could yield language-aligned image encoders that are effective with far fewer parameters, reducing the overall size of LMMs substantially.

Similarly, there is room for applying the lessons of MTL in decoder designs for output data modalities offered by the LMM. For instance, the decoders used in Multiformer are very lightweight, but able to extract accurate depth, semantic segmentation, and object detection from the joint feature space. Applying their design principles to the decoding side of an LMM could yield output in these modalities, which may be supervised to build a deeper and more generalized knowledge in the central embedding space.

On the other hand, NExT-GPT showed the feasibility and strengths of adding data modalities like depth on the input side of LMMs, so encoding accurate multi-task inference from a model like Multiformer into the LMM inputs is an interesting direction for future research. It is possible that a well-trained and generalizable expert could generate quality pseudo-labels for these additional modalities, avoiding the need for labeled data when training the LMM, but still allowing the model to align the embedding space with reliable representations of the modalities.

In any case, the transition into LMMs in autonomous navigation is far from a hostile takeover. The lessons learned from decades of MTL and RL research have been given an exciting new playground at the forefront of AI research. AV companies have spent vast amounts on labeling their raw data, and many are likely sitting on vast troves of sequential, unlabeled data perfect for the self-supervised world modeling task. Given the revelations discussed in this article, I hope they’re looking into it.

Conclusion

In this article, we’ve seen the dawn of a paradigm shift in AV development that, by virtue of its benefits, could threaten to displace modular driving stacks as the dominant approach in the field. This new approach of AV2.0 employs LMMs in a sequential world modeling task, predicting future states conditioned on previous sensor data and control actions, as well as other modalities like text, thereby providing a synthesis of perception, planning, and control in a simplified problem statement and unified architecture. Previously, end-to-end approaches were seen by many to be too much of a black box for safety-critical deployments, as their inner states and decision making processes were uninterpretable. However, with LMMs making driving decisions based on sensor data, there is potential for the model to explain what it is perceiving and the reasoning behind its actions in natural language if prompted to do so. Such a model can also learn from synthetic examples sampled from its own imagination, reducing the need for real world data collection.

While the potential in this approach is alluring, it requires very large models to be effective, and thus inherits their limitations and challenges. Very few outfits have the resources to train or fine-tune the full weight matrix of a multi-billion parameter LLM, and large models come with a lot of efficiency concerns from the cost of compute to the size of embedded hardware. However, we’ve seen that there are a number of powerful open-source tools and LFMs licensed for commercial use, a variety of methods for parameter-efficient fine-tuning that make customization feasible, and compression techniques that make deployment at manageable scales possible. In light of these things, shying away from the adoption of large models for solving complex problems like autonomous robotics hardly seems justifiable, and would ignore the value in futureproofing systems with a growing technology with plenty of developmental headroom, rather than clinging to approaches which may have already peaked.

Still, small multi-task models have a great advantage in their comparatively minuscule scale, which grants accessibility and ease of experimentation, while simplifying a number of engineering and budgeting decisions. However, the limitations of task-specific models create a different set of challenges, because such models must be arranged in complex modular architectures in order to fulfill all of the necessary functions in an autonomy stack. This design results in a sequential flow of information through perception, prediction, planning, and then finally to control stacks, creating a high risk for compounding error through all of this sequential componentry, and hindering end-to-end optimization. Further, while the overall parameter count may be far lower in this paradigm, the stack complexity is undeniably far higher, as the numerous components each involve specialized problem formulations from their respective fields of research, requiring a large team of highly skilled engineers from diverse disciplines to maintain and develop.

Large models have shown profound ability to reason about information and generalize to new tasks and domains in multiple modalities, something that has eluded the field of deep learning for a long time. It has long been known that models trained to perform tasks through supervised learning are extremely brittle when introduced to examples from outside of their training distributions, and that their ability to perform a single (or even multiple) tasks really well barely deserves the title “intelligence.” Now, after a few short years of explosive development that makes 2020 seem like the bronze age, it would appear that the great white buffalo of AI research has made an appearance, emerging first as a property of gargantuan chat bots, and now casually being bestowed with the gifts of sight and hearing. This technology, along with the revolution in robotics that it has begun, seems poised to deliver nimble robotic control in a matter of years, if not sooner, and AVs will be one of the first fields to demonstrate that power to the world.

Future Work

As mentioned above, the CLIP encoder driving many LMMs is typically made from a ViT-L, and we are past due for experimenting with more recent architectures. Hierarchical transformers like the PVTv2 nearly match the performance of ViT-L on ImageNet with a fraction of the parameters, so they are likely candidates for serving as language-aligned image encoders in compact LMMs.

IRIS and GAIA-1 serve as blueprints for the path forward in building world models with LMMs. However, the output modalities for both models are limited. Both models use autoregressive transformers to predict future frames and rewards, but while GAIA-1 does allow for text prompting, neither of them is designed to generate text, which would be a huge step in evaluating reasoning skills and interpreting failure modes.

At this stage, the field would greatly benefit from the release of an open-source generative world model like GAIA-1, but with an all-to-all modality scheme that provides natural language and actions in the output. This could be achieved through the addition of adapters, encoders, decoders, and a revised problem statement. It is likely that the pretrained components required to assemble such an architecture already exist, and that they could be aligned using a reasonable number of trainable parameters, so this is an open lane for research.

Further, as demonstrated with Mixtral 8x7B, MoE configurations of small models can top the performance of larger single models, and future work should explore MoE configurations for LMM-based world models. Moreover, distilling a large MoE into a single model has proven to be an effective method of model compression, and could likely boost large world model performance to the next level, so this provides additional motivation for creating an MoE LMM world model.

Finally, fine-tuning of open-source models using synthetic data with commercially-permissible licenses should become standard practice. Because Vicuna, WizardLM, and Orca are trained using outputs from ChatGPT, those pretrained weights are inherently licensed for research purposes only, so while these releases offer powerful methodology for fine-tuning LLMs, they don’t fully “democratize” this power since anyone seeking to use models created with those methods for commercial purposes must expend the natural and financial resources necessary to gather a new dataset and repeat the experiment. There should be an initiative to generate synthetic instruction tuning datasets with methods like Evol-Instruct using commercially-permissible open-source models rather than ChatGPT so that weights trained using those datasets are fully democratized, helping to elevate those with fewer resources.

Navigating the Future was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
