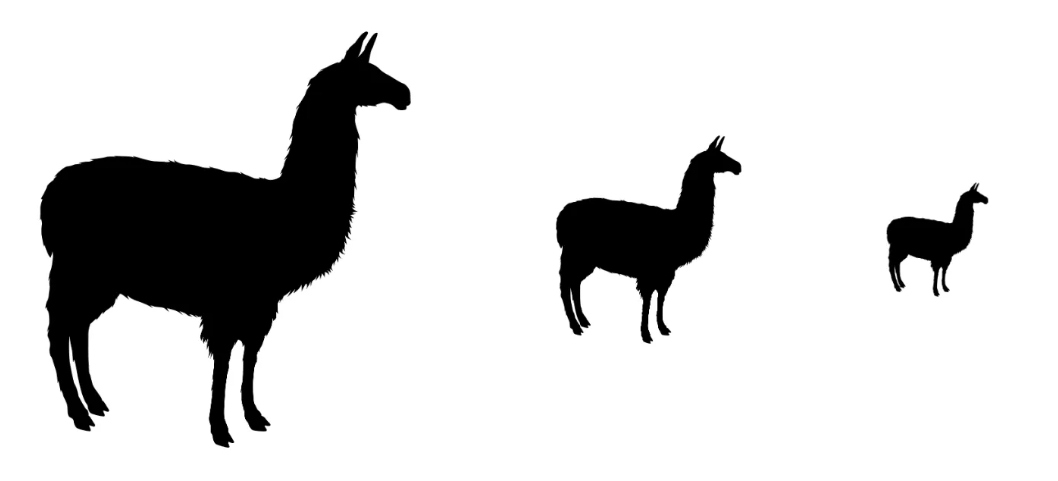

How pruning, knowledge distillation, and 4-bit quantization can make advanced AI models more accessible and cost-effective

Originally appeared here: Mistral-NeMo: 4.1x Smaller with Quantized Minitron
