Efficiently scaling GPT from large to titanic magnitudes within the meta-learning framework

Introduction

GPT is a family of language models that has been recently gaining a lot of popularity. The attention of the Data Science community was rapidly captured by the release of GPT-3 in 2020. After the appearance of GPT-2, almost nobody could even assume that nearly in a year there would appear a titanic version of GPT containing 175B of parameters! This is by two orders of magnitude more, compared to its predecessor.

The enormous capacity of GPT-3 made it possible to use it in various everyday scenarios: code completion, article writing, content creation, virtual assistants, etc. While the quality of these tasks is not always perfect, the overall progress achieved by GPT-3 is absolutely astonishing!

In this article, we will have a detailed look at the main details of GPT-3 and useful ideas inspired by GPT-2 creators. Throughout the exploration, we will be referring to the official GPT-3 paper. It is worth noting that most of the GPT-3 settings including data collection, architecture choice and pre-training process are directly derived from GPT-2. That is why most of the time we will be focusing on novel aspects of GPT-3.

Note. For a better understanding, this article assumes that you are already familiar with the first two GPT versions. If not, please navigate to the articles below comprehensively explaining it:

- Large Language Models, GPT-1 — Generative Pre-Trained Transformer

- Large Language Models, GPT-2 — Language Models are Unsupervised Multitask Learners

Meta-learning framework

GPT-3 creators were highly interested in the training approach used in GPT-2: instead of using a common pre-training + fine-tuning framework, the authors collected a large and diverse dataset and incorporated the task objective in the text input. This methodology was convenient for several reasons:

- By eliminating the fine-tuning phase, we do not need several large labelled datasets for individual downstream tasks anymore.

- For different tasks, a single version of the model can be used instead of many.

- The model operates in a more similar way that humans do. Most of the time humans need no or only a few language examples to fully understand a given task. During inference, the model can receive those examples in the form of text. As a result, this aspect provides better perspectives for developing AI applications that interact with humans.

- The model is trained only once on a single dataset. Contrary to the pre-training + fine-tuning paradigm, the model had to be trained on two different datasets which could have had completely dissimilar data distributions leading to potential generalization problems.

Formally, the described framework is called meta-learning. The paper provides an official definition:

“Meta-learning in the context of language models means the model develops a broad set of skills and pattern recognition abilities at training time, and then uses those abilities at inference time to rapidly adapt to or recognize the desired task”

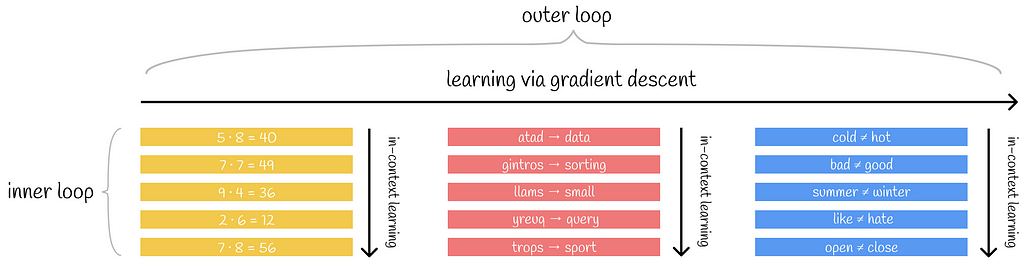

To further describe the learning paradigm, inner and outer loop terms are introduced. Basically, an inner loop is an equivalent of a single forward pass during training while an outer loop designates a set of all inner loops.

Throughout the training process, a model can receive similar tasks on different text examples. For example, the model can see the following examples across different batches:

- Good is a synonym for excellent.

- Computer is a synonym for laptop.

- House is a synonym for building.

In this case, these examples help the model to understand what a synonym is that can be useful during inference when it is asked to find synonyms for a certain word. A combination of examples focused on helping the model capture similar linguistic knowledge within a paritcular task is called “in-context learning”.

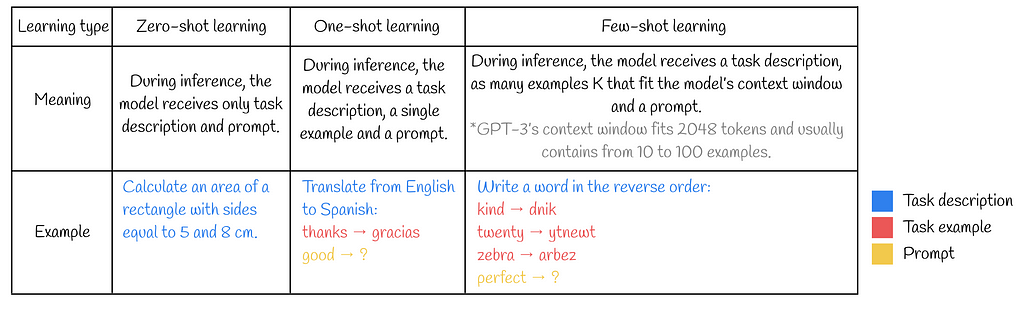

n-shot learning

A query performed for the model during inference can additionally contain task examples. It turns out that task demonstration plays an important role in helping the model to better understand the objective of a query. Based on the number of provided task examples (shots), there exist three types of learning which are summarized in the table below:

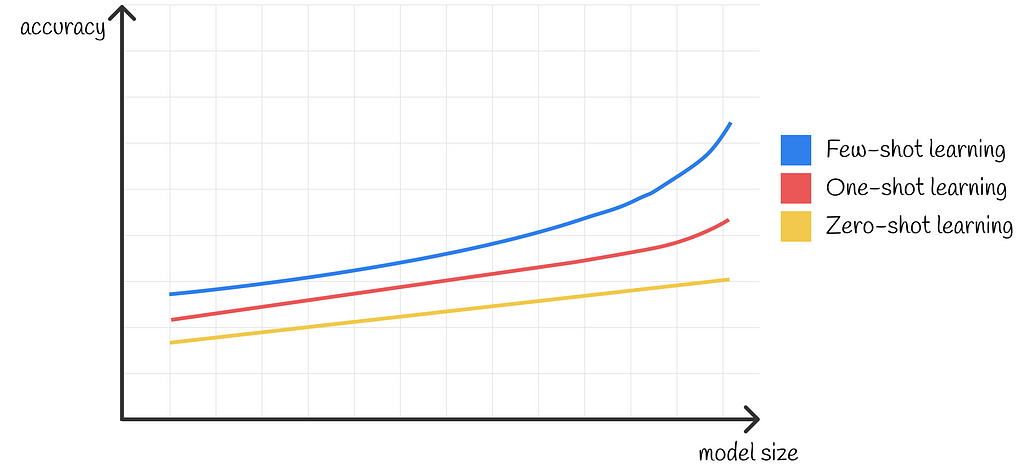

In the majority of cases (but not always) the number of provided examples positively correlates with the model’s ability to provide a correct answer. The authors have completed research in which they used models of different sizes in one of three n-shot settings. The results show that with capacity growth, models become more proficient at in-context learning. This is demonstrated in the lineplot below where the performance gap between few-, one- and zero-shot settings gets larger with the model’s size.

Architecture

The paper precisely describes architecture settings in GPT-3:

“We use the same model and architecture as GPT-2, including the modified initialization, pre-normalization, and reversible tokenization described therein, with the exception that we use alternating dense and locally banded sparse attention patterns in the layers of the transformer, similar to the Sparse Transformer”.

Dataset

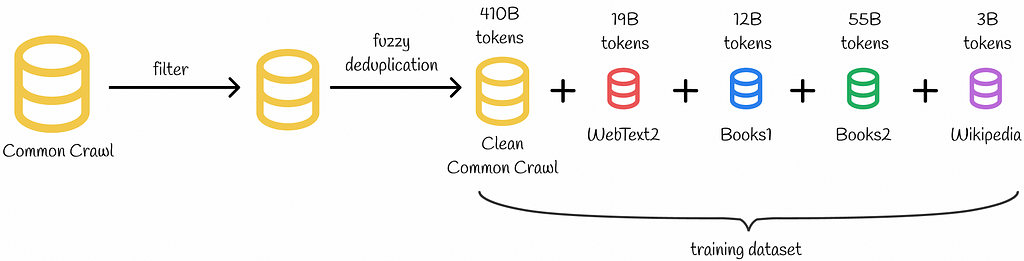

Initially, the authors wanted to use the Common Crawl dataset for training GPT-3. This extremely large dataset captures a diverse set of topics. The raw dataset version had issues with data quality, which is why it was initially filtered and deduplicated. To make the final dataset even more diverse, it was concatenated with four other smaller datasets demonstrated in the diagram below:

The dataset used for training GPT-3 is two magnitudes larger than the one used for GPT-2.

Training details

- Optimizer: Adam (β₁ = 0.9, β₂ = 0.999, ε = 1e-6).

- Gradient clipping at 1.0 is used to prevent the problem of exploding gradients.

- A combination of cosine decay and linear warmup is used for learning rate adjustment.

- Batch size is gradually increased from 32K to 3.2M tokens during training.

- Weight decay of 0.1 is used as a regularizer.

- For better computation efficiency, the length of all sequences is set to 2048. Different documents within a single sequence are separated by a delimiter token.

Beam search

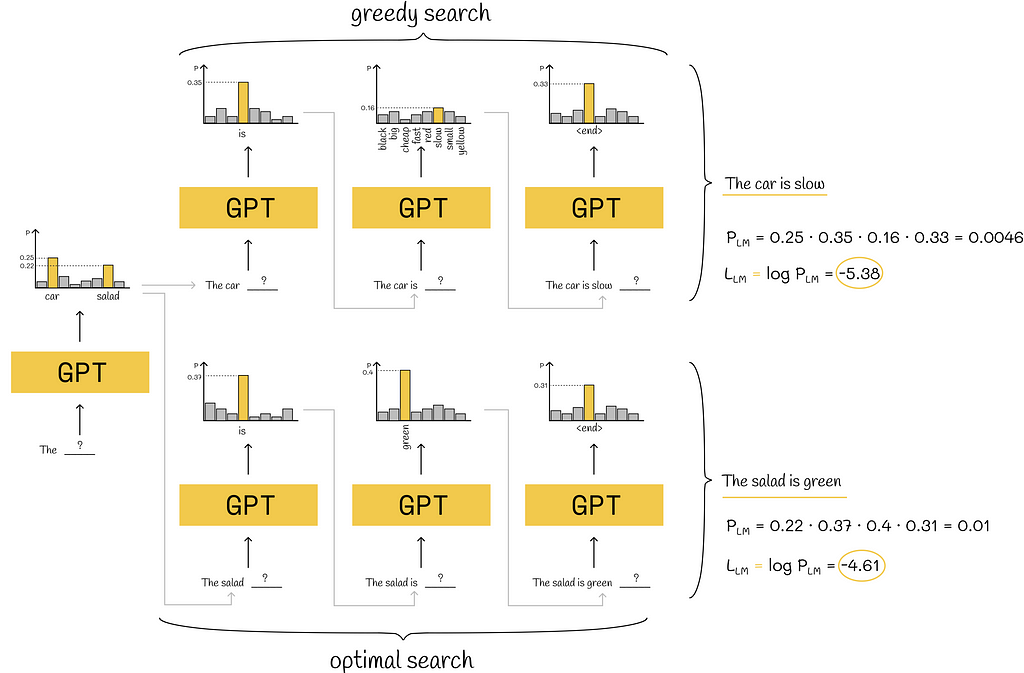

GPT-3 is an autoregressive model which means that it uses information about predicted words in the past as input to predict the next word in the future.

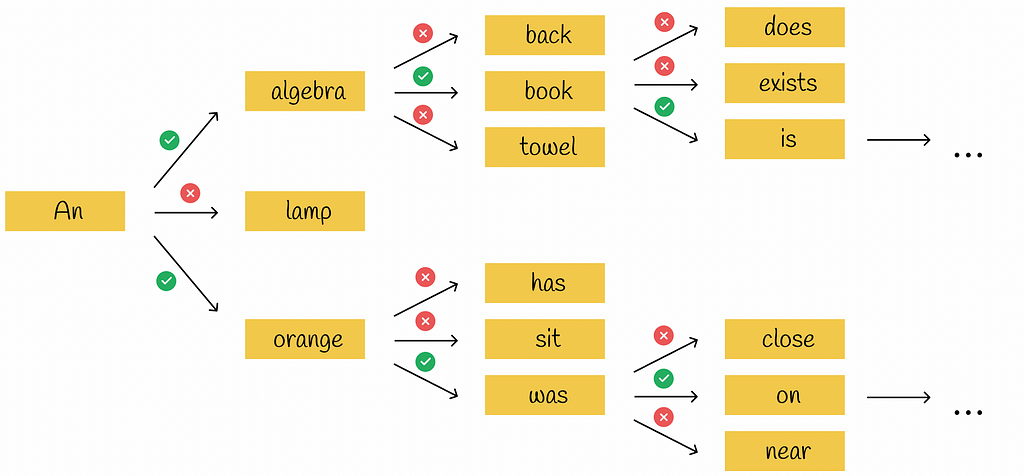

The greedy approach is the most naive method of constructing text sequences in autoregressive models. Basically, at each iteration, it forces the model to choose the most probable word and use it as input for the next word. However, it turns out that choosing the most probable word at the current iteration is not optimal for log-likelihood optimization!

There might be a situation when choosing a current word with a lower probability could then lead to higher probabilities of the rest of the predicted words. In contrast, choosing a local word with the highest probability does not guarantee that the next words will also correspond to high probabilities. An example showing when the greedy strategy does not work optimally is demonstrated in the diagram below:

A possible solution would consist of finding the most probable sequence among all possible options. However, this approach is extremely inefficient since there exist innumerable combinations of possible sequences.

Beam search is a good trade-off between greedy search and exploration of all possible combinations. At each iteration, it chooses the several most probable tokens and maintains a set of the current most probable sequences. Whenever a new more probable sequence is formed, it replaces the least probable one from the set. At the end of the algorithm, the most probable sequence from the set is returned.

Beam search does not guarantee the best search strategy but in practice, its approximations work very well. For that reason, it is used in GPT-3.

Drawbacks

Despite GPT-3 amazing capabilities to generate human-like long pieces of text, it has several drawbacks:

- Decisions made by GPT-3 during text generation are usually not interpretable making it difficult to analyse.

- GPT-3 can be used in harmful ways which cannot always be prevented by the model.

- GPT-3 contains biases in the training dataset making it vulnerable in some cases to fairness aspects, especially when it comes to highly sensitive domains like gender equality, religion or race.

- Compared to its previous predecessor GPT-2, GPT-3 required hundreds times more energy (thousands petaflops / day) to be trained which is not eco-friendly. At the same, the GPT-3 developers justify this aspect by the fact that their model is extremely efficient during inference, thus the average consumption is still low.

Conclusion

GPT-3 gained huge popularity due to its unimaginable 175B trainable parameters which have strongly bet all the previous models on several top benchmarks! At that time, the GPT-3 results were so good that sometimes it was difficult to distinguish whether a text was generated by a human or GPT-3.

Despite several disadvantages and limitations of GPT-3, it has opened doors to researchers for new explorations and potential improvements in the future.

Resources

All images unless otherwise noted are by the author

Large Language Models, GPT-3: Language Models are Few-Shot Learners was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

Large Language Models, GPT-3: Language Models are Few-Shot Learners

Go Here to Read this Fast! Large Language Models, GPT-3: Language Models are Few-Shot Learners