Comparing Autogen and LangGraph from a developer standpoint

GenAI models are good at a handful of tasks such as text summarization, question answering, and code generation. If you have a business process that can be broken down into a set of steps, and one or more of those steps involve one of these GenAI superpowers, then you will be able to partially automate your business process using GenAI. We call the software application that automates such a step an agent.

Although agents use LLMs only to process text and generate responses, this basic capability can produce quite advanced behavior, such as invoking backend services autonomously.

Current weather at a location

Let’s say that you want to build an agent that is able to answer questions such as “Is it raining in Chicago?”. You cannot answer a question like this using just an LLM because it is not a task that can be performed by memorizing patterns from large volumes of text. Instead, to answer this question, you’ll need to reach out to real-time sources of weather information.

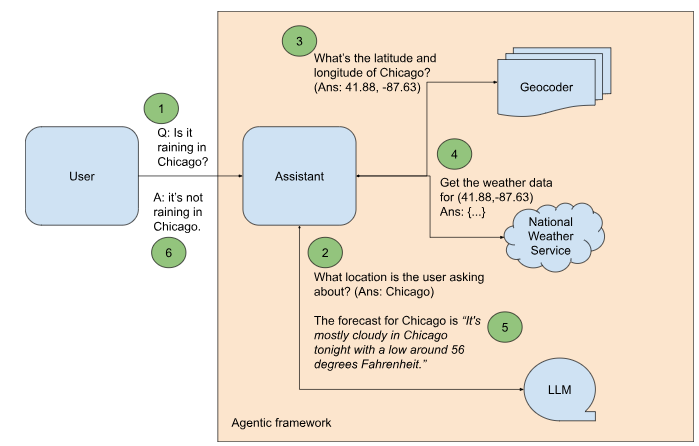

There is an open and free API from the US National Weather Service (NWS) that provides the short-term weather forecast for a location. However, using this API to answer a question like “Is it raining in Chicago?” requires several additional steps (see Figure 1):

- We will need to set up an agentic framework to coordinate the rest of these steps.

- What location is the user interested in? The answer in our example sentence is “Chicago”. It is not as simple as just extracting the last word of the sentence — if the user were to ask “Is Orca Island hot today?”, the location of interest would be “Orca Island”. Because extracting the location from a question requires being able to understand natural language, you can prompt an LLM to identify the location the user is interested in.

- The NWS API operates on latitudes and longitudes. If you want the weather in Chicago, you’ll have to convert the string “Chicago” into a latitude and longitude and then invoke the API. This is called geocoding. Google Maps has a Geocoding API that, given a place name such as “Chicago”, will respond with the latitude and longitude. Tell the agent to use this tool to get the coordinates of the location.

- Send the location coordinates to the NWS weather API. You’ll get back a JSON object containing weather data.

- Tell the LLM to extract the forecast for the time the user is asking about (for example, now, tonight, or next Monday) and add it to the context of the question.

- Based on this enriched context, the agent is able to finally answer the user’s question.
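The steps above can be sketched as a plain Python pipeline before any agent framework gets involved. This is a minimal sketch, not the author's code. The functions extract_location, geocode, fetch_forecast, and answer_from_forecast are hypothetical stand-ins, stubbed with canned values so that the data flow runs without an LLM, Google Maps, or the NWS.

```python
# Hypothetical stand-ins for the real LLM, Google Maps, and NWS calls.
def extract_location(question: str) -> str:
    # A real agent would prompt an LLM; this stub only handles "... in <place>?".
    return question.rstrip("?").split(" in ")[-1]

def geocode(location: str) -> tuple[float, float]:
    # A real agent would call a geocoding API; canned coordinates for illustration.
    known = {"Chicago": (41.8781, -87.6298)}
    return known[location]

def fetch_forecast(lat: float, lon: float) -> dict:
    # A real agent would call the NWS API; this is a made-up, NWS-shaped payload.
    return {"periods": [{"name": "Tonight",
                         "detailedForecast": "Showers and thunderstorms likely."}]}

def answer_from_forecast(question: str, forecast: dict) -> str:
    # A real agent would ask the LLM to pick the relevant period and answer.
    period = forecast["periods"][0]
    return f"{period['name']}: {period['detailedForecast']}"

def weather_pipeline(question: str) -> str:
    location = extract_location(question)              # find the location
    lat, lon = geocode(location)                       # geocode it
    forecast = fetch_forecast(lat, lon)                # call the weather API
    return answer_from_forecast(question, forecast)    # answer from the enriched context

print(weather_pipeline("Is it raining in Chicago?"))
```

The naive extract_location stub returns the whole question for “Is Orca Island hot today?”, which is exactly why the text hands that step to an LLM.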

Let’s go through these steps one by one.

Step 1: Setting up Autogen

First, we will use Autogen, an open-source agentic framework created by Microsoft. To follow along, clone my Git repository and get API keys by following the directions provided by Google Cloud and OpenAI. Then switch to the genai_agents folder and update the keys.env file with your keys:

```
GOOGLE_API_KEY=AI…
OPENAI_API_KEY=sk-…
```
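If you would rather not add a dependency just to read keys.env, a small standard-library loader is enough for simple KEY=VALUE files. This is an illustrative sketch: parse_env_text and load_env_file are hypothetical names, and the repository may load the file differently (for example, with the python-dotenv package).

```python
import os
from pathlib import Path

def parse_env_text(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    pairs = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding whitespace and optional quotes around the value.
        pairs[key.strip()] = value.strip().strip('"').strip("'")
    return pairs

def load_env_file(path: str) -> dict[str, str]:
    """Copy the file's keys into os.environ without overriding values already set."""
    pairs = parse_env_text(Path(path).read_text())
    for key, value in pairs.items():
        os.environ.setdefault(key, value)
    return pairs
```

Calling load_env_file("keys.env") once at startup makes os.environ.get("OPENAI_API_KEY") work in the configuration code that follows.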

Next, install the required Python modules using pip:

```
pip install -r requirements.txt
```

This will install the autogen module and client libraries for Google Maps and OpenAI.

Follow the discussion below by looking at ag_weather_agent.py.

Autogen treats agentic tasks as a conversation between agents. So, the first step in Autogen is to create the agents that will perform the individual steps. One will be the proxy for the end-user. It will initiate chats with the AI agent that we will refer to as the Assistant:

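```python
import autogen
from autogen import UserProxyAgent

user_proxy = UserProxyAgent(
    "user_proxy",
    code_execution_config={"work_dir": "coding", "use_docker": False},
    # Stop as soon as the Assistant's reply contains the word TERMINATE.
    is_termination_msg=lambda x: autogen.code_utils.content_str(x.get("content")).find("TERMINATE") >= 0,
    human_input_mode="NEVER",
)
```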

There are three things to note about the user proxy above:

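- If the Assistant responds with code, the user proxy can execute that code in the coding working directory. Because use_docker is False, the code runs directly on your machine rather than in a Docker container, so this is not a true sandbox.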

- The user proxy terminates the conversation if the Assistant response contains the word TERMINATE. This is how the LLM tells us that the user question has been fully answered. Making the LLM do this is part of the hidden system prompt that Autogen sends to the LLM.

- The user proxy never asks the end-user any follow-up questions. If there were follow-ups, we’d specify the condition under which the human is asked for more input.
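The termination rule is just a string check on the message content. The sketch below reproduces it without Autogen. content_as_text is a hypothetical helper that approximates what autogen.code_utils.content_str does (content may be None, a string, or a list of text parts). It is not that function's actual implementation.

```python
def content_as_text(content) -> str:
    """Flatten message content (None, a string, or a list of text parts) into one string."""
    if content is None:
        return ""
    if isinstance(content, str):
        return content
    # OpenAI-style multimodal content: [{"type": "text", "text": "..."}, ...]
    return " ".join(part.get("text", "") for part in content if part.get("type") == "text")

def is_termination_msg(message: dict) -> bool:
    # Same rule as the lambda above: stop once "TERMINATE" appears anywhere in the reply.
    return "TERMINATE" in content_as_text(message.get("content"))
```

A substring check also fires if the model merely mentions the word mid-reply. Checking that the stripped reply ends with TERMINATE is a stricter alternative you may prefer.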

Even though Autogen is from Microsoft, it is not limited to Azure OpenAI. The AI assistant can use OpenAI:

```python
import os

openai_config = {
    "config_list": [
        {
            "model": "gpt-4",
            "api_key": os.environ.get("OPENAI_API_KEY"),
        }
    ]
}
```

or Gemini:

```python
gemini_config = {
    "config_list": [
        {
            "model": "gemini-1.5-flash",
            "api_key": os.environ.get("GOOGLE_API_KEY"),
            "api_type": "google",
        }
    ],
}
```

Anthropic and Ollama are supported as well.
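Because the Assistant only sees an llm_config dictionary, one way to switch providers without editing code is to build that dictionary from an environment variable. The following sketch is an assumption, not part of the repository. The LLM_PROVIDER variable and the make_llm_config helper are hypothetical, and the sketch covers only the two configurations shown above.

```python
import os
from typing import Optional

# Model names and api_type values mirror the two configs shown above.
PROVIDERS = {
    "openai": {"model": "gpt-4", "key_env": "OPENAI_API_KEY", "api_type": None},
    "google": {"model": "gemini-1.5-flash", "key_env": "GOOGLE_API_KEY", "api_type": "google"},
}

def make_llm_config(provider: Optional[str] = None) -> dict:
    """Build an Autogen-style llm_config for the chosen provider (hypothetical helper)."""
    provider = provider or os.environ.get("LLM_PROVIDER", "openai")
    spec = PROVIDERS[provider]
    entry = {"model": spec["model"], "api_key": os.environ.get(spec["key_env"])}
    if spec["api_type"]:
        # Non-OpenAI clients are selected through api_type.
        entry["api_type"] = spec["api_type"]
    return {"config_list": [entry]}
```

You would then pass llm_config=make_llm_config() when creating the Assistant.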

Supply the appropriate LLM configuration to create the Assistant:

```python
from autogen import AssistantAgent

assistant = AssistantAgent(
    "Assistant",
    llm_config=gemini_config,
    max_consecutive_auto_reply=3,
)
```

Before we wire up the rest of the agentic framework, let’s ask the Assistant to answer our sample query.

```python
response = user_proxy.initiate_chat(
    assistant, message="Is it raining in Chicago?"
)
print(response)
```

The Assistant responds with this code, which reaches out to an existing Google web service and scrapes the response:

```python
# filename: weather.py
import requests
from bs4 import BeautifulSoup

url = "https://www.google.com/search?q=weather+chicago"
response = requests.get(url)
soup = BeautifulSoup(response.text, 'html.parser')
weather_info = soup.find('div', {'id': 'wob_tm'})
print(weather_info.text)
```

This gets at the power of an agentic framework backed by a frontier foundation model: the Assistant has autonomously figured out a web service that provides the desired functionality and is using its code generation and execution capability to provide something akin to it! However, it’s not quite what we wanted. We asked whether it was raining, and we got back the full website instead of the desired answer.

Secondly, the autonomous capability doesn’t really meet our pedagogical needs. We are using this example as illustrative of enterprise use cases, and it is unlikely that the LLM will know about your internal APIs and tools to be able to use them autonomously. So, let’s proceed to build out the framework shown in Figure 1 to invoke the specific APIs we want to use.

Step 2: Extracting the location

Because extracting the location from the question is just text processing, you can simply prompt the LLM. Let’s do this with a one-shot example:

SYSTEM_MESSAGE_1 = """

In the question below, what location is the user asking about?

Example:

Question: What's the weather in Kalamazoo, Michigan?

Answer: Kalamazoo, Michigan.

Question:

"""

Now, when we initiate the chat by asking whether it is raining in Chicago:

```python
response1 = user_proxy.initiate_chat(
    assistant, message=f"{SYSTEM_MESSAGE_1} Is it raining in Chicago?"
)
print(response1)
```

we get back:

```
Answer: Chicago.

TERMINATE
```

So, step 2 of Figure 1 is complete.
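If a later step needs the location as a plain string, the reply must be parsed. Here is a minimal sketch, assuming the reply follows the “Answer: <location>.” pattern from the one-shot example. The helper name parse_answer is hypothetical.

```python
import re
from typing import Optional

def parse_answer(reply: str) -> Optional[str]:
    """Return the text after 'Answer:' on its own line, minus a trailing period, or None."""
    # [ \t]* (not \s*) keeps the match from spilling onto the TERMINATE line.
    match = re.search(r"^Answer:[ \t]*(.+?)\.?[ \t]*$", reply, flags=re.MULTILINE)
    return match.group(1) if match else None

print(parse_answer("Answer: Chicago.\n\nTERMINATE"))
```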

Step 3: Geocoding the location

Step 3 is to get the latitude and longitude coordinates of the location that the user is interested in. Write a Python function that calls the Google Maps API and extracts the required coordinates:

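```python
import os
import googlemaps

# Assumes GOOGLE_API_KEY is enabled for the Geocoding API.
gmaps = googlemaps.Client(key=os.environ["GOOGLE_API_KEY"])

def geocoder(location: str) -> tuple[float, float]:
    """Return the (latitude, longitude) of a place name, rounded to 4 decimals."""
    geocode_result = gmaps.geocode(location)
    return (round(geocode_result[0]['geometry']['location']['lat'], 4),
            round(geocode_result[0]['geometry']['location']['lng'], 4))
```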
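To see what the indexing in geocoder does without a Google Maps key, the following sketch runs the same extraction on a hand-written dictionary shaped like one element of a Geocoding API result. The sample values are illustrative only, chosen to match the Chicago coordinates used later in this article, and extract_latlon is a hypothetical helper. It also guards against an empty result list, which the client returns when a place name has no match.

```python
from typing import Optional

def extract_latlon(geocode_result: list) -> Optional[tuple[float, float]]:
    """Pull (lat, lng) from the first geocoding match, rounded to 4 decimals."""
    if not geocode_result:
        # No match: indexing [0] as in geocoder would raise IndexError here.
        return None
    location = geocode_result[0]["geometry"]["location"]
    return (round(location["lat"], 4), round(location["lng"], 4))

# Illustrative sample only, trimmed to the fields the function reads.
sample = [{"formatted_address": "Chicago, IL, USA",
           "geometry": {"location": {"lat": 41.8781136, "lng": -87.6297982}}}]
print(extract_latlon(sample))
```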

Next, register this function as a tool so that the Assistant can suggest calls to it and the user proxy can execute those calls:

```python
autogen.register_function(
    geocoder,
    caller=assistant,  # The assistant agent can suggest calls to the geocoder.
    executor=user_proxy,  # The user proxy agent can execute the geocoder calls.
    name="geocoder",  # By default, the function name is used as the tool name.
    description="Finds the latitude and longitude of a location or landmark",  # A description of the tool.
)
```

Note that, at the time of writing, function calling is supported by Autogen only for GPT-4 models.
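Function calling works because the tool's name, parameter types, and description are turned into a JSON-schema description that the LLM can read. The standard-library sketch below illustrates that idea. describe_tool is a hypothetical, simplified helper, not Autogen's actual implementation, which handles many more types.

```python
import inspect
import json

# Map a few Python annotations to JSON-schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def describe_tool(func, name: str, description: str) -> dict:
    """Build an OpenAI-style function description from a Python signature."""
    params = inspect.signature(func).parameters
    properties = {p: {"type": JSON_TYPES.get(v.annotation, "string")} for p, v in params.items()}
    # Parameters without defaults must be supplied by the LLM.
    required = [p for p, v in params.items() if v.default is inspect.Parameter.empty]
    return {
        "name": name,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

def geocoder(location: str) -> tuple[float, float]:
    ...  # body omitted; only the signature matters here

print(json.dumps(describe_tool(geocoder, "geocoder",
                               "Finds the latitude and longitude of a location or landmark"), indent=2))
```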

We now expand the example in the prompt to include the geocoding step:

SYSTEM_MESSAGE_2 = """

In the question below, what latitude and longitude is the user asking about?

Example:

Question: What's the weather in Kalamazoo, Michigan?

Step 1: The user is asking about Kalamazoo, Michigan.

Step 2: Use the geocoder tool to get the latitude and longitude of Kalmazoo, Michigan.

Answer: (42.2917, -85.5872)

Question:

"""

Now, when we initiate the chat by asking whether it is raining in Chicago:

```python
response2 = user_proxy.initiate_chat(
    assistant, message=f"{SYSTEM_MESSAGE_2} Is it raining in Chicago?"
)
print(response2)
```

we get back:

```
Answer: (41.8781, -87.6298)

TERMINATE
```

Steps 4–6: Obtaining the final answer

Now that we have the latitude and longitude coordinates, we are ready to invoke the NWS API to get the weather data. Step 4, to get the weather data, is similar to geocoding, except that we are invoking a different API and extracting a different object from the web service response. Please look at the code on GitHub to see the full details.
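For readers who don't want to open the repository, here is a hedged sketch of the two-request pattern the public NWS API uses. First, look up the grid point at https://api.weather.gov/points/{lat},{lon}. Then follow the forecast URL in that response to a list of named forecast periods. This is not the repository's get_weather_from_nws. The helper names and the User-Agent string are placeholders (the NWS asks clients to identify themselves with a User-Agent header).

```python
import json
import urllib.request
from typing import Optional

NWS_BASE = "https://api.weather.gov"
USER_AGENT = "weather-agent-demo (you@example.com)"  # placeholder; use your own contact info

def points_url(lat: float, lon: float) -> str:
    """URL of the NWS points endpoint for a coordinate."""
    return f"{NWS_BASE}/points/{lat},{lon}"

def get_json(url: str) -> dict:
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)

def get_forecast_periods(lat: float, lon: float) -> list:
    # Request 1: the points endpoint says which forecast URL covers this location.
    forecast_url = get_json(points_url(lat, lon))["properties"]["forecast"]
    # Request 2: the forecast itself, as a list of named periods.
    return get_json(forecast_url)["properties"]["periods"]

def find_period(periods: list, name: str) -> Optional[dict]:
    """Return the first forecast period whose name matches, e.g. 'Tonight'."""
    return next((p for p in periods if p["name"].lower() == name.lower()), None)
```

With network access, find_period(get_forecast_periods(41.8781, -87.6298), "Tonight") may return the period whose detailedForecast text the LLM summarizes. The exact period names depend on the time of day.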

The upshot is that the system prompt expands to encompass all the steps in the agentic application:

SYSTEM_MESSAGE_3 = """

Follow the steps in the example below to retrieve the weather information requested.

Example:

Question: What's the weather in Kalamazoo, Michigan?

Step 1: The user is asking about Kalamazoo, Michigan.

Step 2: Use the geocoder tool to get the latitude and longitude of Kalmazoo, Michigan.

Step 3: latitude, longitude is (42.2917, -85.5872)

Step 4: Use the get_weather_from_nws tool to get the weather from the National Weather Service at the latitude, longitude

Step 5: The detailed forecast for tonight reads 'Showers and thunderstorms before 8pm, then showers and thunderstorms likely. Some of the storms could produce heavy rain. Mostly cloudy. Low around 68, with temperatures rising to around 70 overnight. West southwest wind 5 to 8 mph. Chance of precipitation is 80%. New rainfall amounts between 1 and 2 inches possible.'

Answer: It will rain tonight. Temperature is around 70F.

Question:

"""

Based on this prompt, the response to the question about Chicago weather extracts the right information and answers the question correctly.

In this example, we allowed Autogen to select the next agent in the conversation autonomously. We can also specify a different next speaker selection strategy: in particular, setting this to be “manual” inserts a human in the loop, and allows the human to select the next agent in the workflow.
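To make the difference between selection strategies concrete, here is a toy, framework-free selector. It is only an analogy. In Autogen, the strategy is chosen with the speaker_selection_method argument of GroupChat (values include "auto", "manual", "round_robin", and "random"). The select_next function and its ask callback are hypothetical.

```python
import random
from typing import Callable, Optional

def select_next(strategy: str, agents: list[str], last: str,
                ask: Optional[Callable[[list[str]], str]] = None) -> str:
    """Pick the next speaker in a toy multi-agent conversation."""
    if strategy == "round_robin":
        # Whoever follows the last speaker in the fixed order.
        return agents[(agents.index(last) + 1) % len(agents)]
    if strategy == "random":
        # In this toy, never pick the agent that just spoke.
        return random.choice([a for a in agents if a != last])
    if strategy == "manual":
        # Human in the loop: a callback (for example, one wrapping input()) decides.
        if ask is None:
            raise ValueError("manual selection needs an ask callback")
        choice = ask(agents)
        if choice not in agents:
            raise ValueError(f"unknown agent: {choice}")
        return choice
    # "auto" would ask the LLM to choose; that needs a model, so it is out of scope here.
    raise ValueError(f"unsupported strategy: {strategy}")
```

For an interactive run, pass ask=lambda options: input(f"Next speaker {options}: ").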

Agentic workflow in LangGraph

Where Autogen treats agentic workflows as conversations, LangGraph is an open-source framework that lets you build agents by treating a workflow as a graph. This is inspired by the long history of representing data processing pipelines as directed acyclic graphs (DAGs), although, unlike a DAG, a LangGraph workflow can contain cycles, such as the loop between the Assistant and its tools in our weather agent.

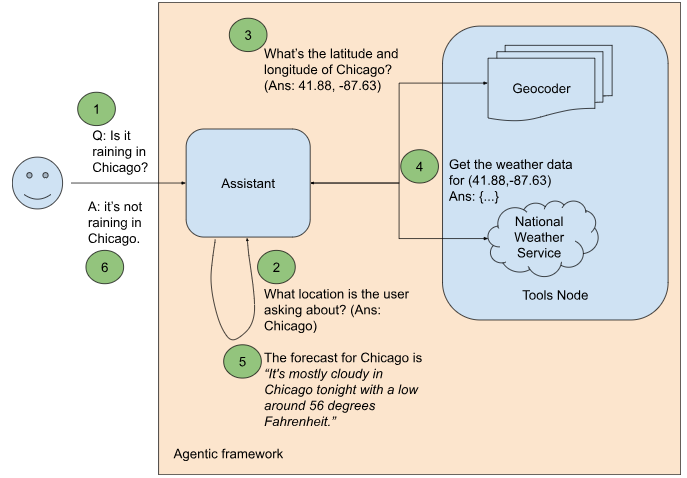

In the graph paradigm, our weather agent would look as shown in Figure 2.

There are a few key differences between Figures 1 (Autogen) and 2 (LangGraph):

- In Autogen, each of the agents is a conversational agent. Workflows are treated as conversations between agents that talk to each other. Agents jump into the conversation when they believe it is “their turn”. In LangGraph, workflows are treated as a graph whose nodes the workflow cycles through based on rules that we specify.

- In Autogen, the AI assistant is not capable of executing code; instead, the Assistant generates code, and it is the user proxy that executes it. In LangGraph, a special ToolNode holds the capabilities (tools) made available to the Assistant.

You can follow along with this section by referring to the file lg_weather_agent.py in my GitHub repository.

We set up LangGraph by creating the workflow graph. Our graph consists of two nodes: the Assistant node and a ToolNode. Communication within the workflow happens via a shared state.

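```python
from langgraph.graph import StateGraph, MessagesState
from langgraph.prebuilt import ToolNode

# call_model and tools are defined below.
workflow = StateGraph(MessagesState)
workflow.add_node("assistant", call_model)
workflow.add_node("tools", ToolNode(tools))
```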

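The tools are Python functions decorated with @tool; each docstring becomes the description the LLM uses to decide when to call the tool: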

```python
from langchain_core.tools import tool

@tool
def latlon_geocoder(location: str) -> tuple[float, float]:
    """Converts a place name such as "Kalamazoo, Michigan" to latitude and longitude coordinates"""
    geocode_result = gmaps.geocode(location)  # gmaps: a googlemaps.Client, as in the Autogen version
    return (round(geocode_result[0]['geometry']['location']['lat'], 4),
            round(geocode_result[0]['geometry']['location']['lng'], 4))

tools = [latlon_geocoder, get_weather_from_nws]  # get_weather_from_nws is defined in the repository
```

The Assistant calls the language model:

```python
from langchain_openai import ChatOpenAI

model = ChatOpenAI(model='gpt-3.5-turbo', temperature=0).bind_tools(tools)

def call_model(state: MessagesState):
    messages = state['messages']
    response = model.invoke(messages)
    # This message will get appended to the existing list
    return {"messages": [response]}
```

LangGraph uses langchain, and so changing the model provider is straightforward. To use Gemini, you can create the model using:

```python
from langchain_google_genai import ChatGoogleGenerativeAI

model = ChatGoogleGenerativeAI(model='gemini-1.5-flash',
                               temperature=0).bind_tools(tools)
```

Next, we define the graph’s edges:

workflow.set_entry_point("assistant")

workflow.add_conditional_edges("assistant", assistant_next_node)

workflow.add_edge("tools", "assistant")

The first and last lines above are self-explanatory: the workflow starts with a question being sent to the Assistant, and any time a tool is called, the next node in the workflow is the Assistant, which will use the result of the tool. The middle line sets up a conditional edge, because the next node after the Assistant is not fixed. Instead, the Assistant either calls a tool or ends the workflow, based on the contents of the last message:

```python
from typing import Literal
from langgraph.graph import END

def assistant_next_node(state: MessagesState) -> Literal["tools", END]:
    messages = state['messages']
    last_message = messages[-1]
    # If the LLM makes a tool call, then we route to the "tools" node
    if last_message.tool_calls:
        return "tools"
    # Otherwise, we stop (reply to the user)
    return END
```
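The assistant/tools loop defined by these edges can be imitated without LangGraph to make the control flow visible. In this minimal sketch, which is not how LangGraph executes graphs internally, fake_model is a hypothetical stand-in for the LLM that requests each tool once and then answers. Messages are plain dicts, the tool argument names are assumptions, and the tools return canned values.

```python
END = "__end__"  # LangGraph's END constant has this value; here it is just a local string

def fake_model(messages: list) -> dict:
    """Hypothetical LLM stand-in: request the geocoder, then the weather tool, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"role": "ai", "tool_calls": [{"name": "latlon_geocoder", "args": {"location": "Chicago"}}]}
    if len(tool_results) == 1:
        lat, lon = tool_results[0]["content"]
        return {"role": "ai", "tool_calls": [{"name": "get_weather_from_nws",
                                              "args": {"latitude": lat, "longitude": lon}}]}
    return {"role": "ai", "tool_calls": [], "content": f"Forecast: {tool_results[1]['content']}"}

# Canned tool implementations keyed by tool name.
TOOLS = {
    "latlon_geocoder": lambda location: (41.8781, -87.6298),
    "get_weather_from_nws": lambda latitude, longitude: "Showers likely tonight.",
}

def next_node(messages: list) -> str:
    # Same rule as assistant_next_node: route to tools if the last message requested any.
    return "tools" if messages[-1].get("tool_calls") else END

def run(question: str, max_steps: int = 10) -> list:
    messages = [{"role": "human", "content": question}]
    node = "assistant"  # entry point
    for _ in range(max_steps):
        if node == "assistant":
            messages.append(fake_model(messages))
            node = next_node(messages)  # conditional edge
        elif node == "tools":
            for call in messages[-1]["tool_calls"]:
                messages.append({"role": "tool", "content": TOOLS[call["name"]](**call["args"])})
            node = "assistant"  # fixed edge: tools -> assistant
        else:
            break  # reached END
    return messages

print(run("Is it raining in Chicago?")[-1]["content"])
```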

Once the workflow has been created, compile the graph and then run it by passing in questions:

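```python
from langchain_core.messages import HumanMessage

app = workflow.compile()
final_state = app.invoke(
    {"messages": [HumanMessage(content=f"{system_message} {question}")]}
)
# The last message holds the Assistant's final answer.
print(final_state["messages"][-1].content)
```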

The system message and question are the same as those we employed in Autogen, except that the geocoding tool is now called latlon_geocoder:

system_message = """

Follow the steps in the example below to retrieve the weather information requested.

Example:

Question: What's the weather in Kalamazoo, Michigan?

Step 1: The user is asking about Kalamazoo, Michigan.

Step 2: Use the latlon_geocoder tool to get the latitude and longitude of Kalmazoo, Michigan.

Step 3: latitude, longitude is (42.2917, -85.5872)

Step 4: Use the get_weather_from_nws tool to get the weather from the National Weather Service at the latitude, longitude

Step 5: The detailed forecast for tonight reads 'Showers and thunderstorms before 8pm, then showers and thunderstorms likely. Some of the storms could produce heavy rain. Mostly cloudy. Low around 68, with temperatures rising to around 70 overnight. West southwest wind 5 to 8 mph. Chance of precipitation is 80%. New rainfall amounts between 1 and 2 inches possible.'

Answer: It will rain tonight. Temperature is around 70F.

Question:

"""

question="Is it raining in Chicago?"

The result is that the agent framework uses the steps to come up with an answer to our question:

```
Step 1: The user is asking about Chicago.
Step 2: Use the latlon_geocoder tool to get the latitude and longitude of Chicago.
[41.8781, -87.6298]
[{"number": 1, "name": "This Afternoon", "startTime": "2024-07-30T14:00:00-05:00", "endTime": "2024-07-30T18:00:00-05:00", "isDaytime": true, …]
There is a chance of showers and thunderstorms after 8pm tonight. The low will be around 73 degrees.
```

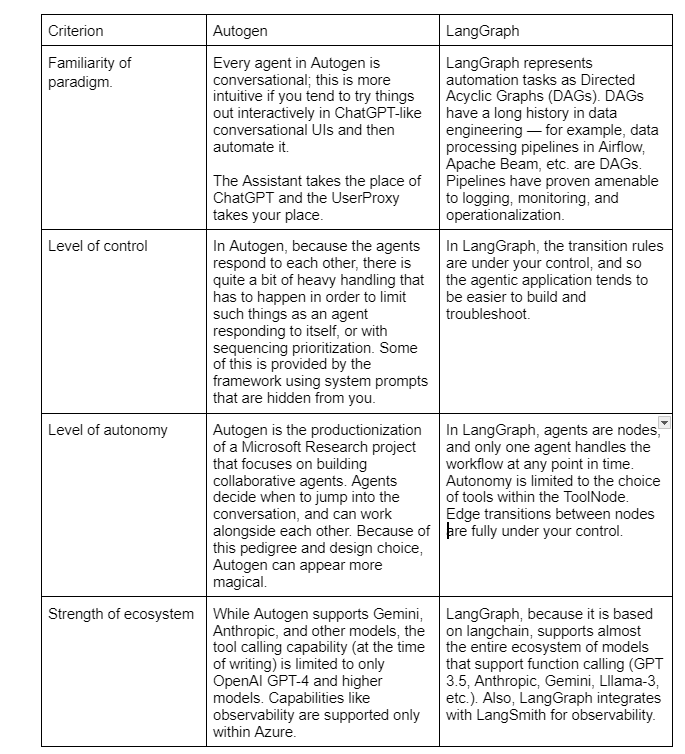

Choosing between Autogen and LangGraph

Between Autogen and LangGraph, which one should you choose? A few considerations:

Of course, the level of Autogen support for non-OpenAI models and other tooling could improve by the time you are reading this. LangGraph could add autonomous capabilities, and Autogen could give you more fine-grained control. The agent space is moving fast!

Resources

- ag_weather_agent.py: https://github.com/lakshmanok/lakblogs/blob/main/genai_agents/ag_weather_agent.py

- lg_weather_agent.py: https://github.com/lakshmanok/lakblogs/blob/main/genai_agents/lg_weather_agent.py

This article is an excerpt from a forthcoming O’Reilly book “Visualizing Generative AI” that I’m writing with Priyanka Vergadia. All diagrams in this post were created by the author.

How to Implement a GenAI Agent using Autogen or LangGraph was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
