Put a real-world object into fully AI-generated 4D scenes with minimal effort, so that it can star in your videos.

Progress in generative AI (GenAI) is astonishingly fast. It’s becoming more mature in various text-driven tasks, going from typical natural language processing (NLP) to independent AI agents, capable of performing high-level tasks by themselves. However, it’s still in its infancy for image, audio, and video creation. While these domains are still new, hard to control, and occasionally gimmicky, they are getting better month by month. To illustrate, the video below shows how video generation evolved over the past year, using the infamous “spaghetti eating benchmark” as an example.

In this article, I focus on video generation and show you how you can generate your own videos featuring yourself or actual real-world objects — as shown by the “GenAI Christmas” video below. This article will answer the following questions:

- How good is video generation nowadays?

- Is it possible to generate videos centered around a specific object?

- How can I create one myself?

- What level of quality can I expect?

Let’s dive right in!

Types of GenAI video creation

Video generation through AI comes in various forms, each with unique capabilities and challenges. Most often, you can classify a GenAI video into one of three categories:

- Videos featuring known concepts and celebrities

- Image-based videos starting from fine-tuned image-generation models

- Image-based videos starting from edited content

Let’s break down each in more detail!

Videos featuring known concepts and celebrities

This type of video generation relies solely on text prompts to produce content using concepts that Large Vision Models (LVMs) already know. These are often generic concepts (“A low-angle shot captures a flock of pink flamingos gracefully wading in a lush, tranquil lagoon.” ~ Veo 2 demo shown below) mixed together to create a convincing video that aligns well with the input prompt.

However, a single picture is worth a thousand words, and prompts are never that long (nor would the video generation model follow them if they were). This makes it nearly impossible for this approach to create consistent follow-up shots that fit together in a longer video. Look, for example, at Coca-Cola’s 2024 fully AI-generated advertisement and the lack of consistency in the featured trucks (they change every frame!).

Learning: It’s nearly impossible to create consistent follow-up shots with text-to-video models.

One exception to this limitation, and probably the best-known one, is celebrities. Due to their extensive media presence, LVMs usually have enough training data to generate images or videos of these celebrities that follow the text prompt. Add some explicit content to it and you have a chance to go viral, as shown by the music video below from The Dor Brothers. Notice, though, how they still struggled to maintain consistency: the clothes change in every single shot.

The democratization of GenAI tools has made it easier than ever for people to create their own content. This is great since it acts as a creative enabler, but it also increases the chances of misuse, which in turn raises important ethical and legal questions, especially around consent and misrepresentation. Without proper rules in place, there’s a high risk of harmful or misleading content flooding digital platforms, making it even harder to trust what we see online. Luckily, many tools, like Runway, have systems in place to flag questionable or inappropriate content, helping to keep things in check.

Learning: Celebrities can be generated consistently due to the abundance of (visual) data on them, which, rightfully, raises ethical and legal concerns. Luckily, most generation engines help to monitor misuse by flagging such requests.

Image-based videos starting from fine-tuned image-generation models

Another popular approach to generating videos is to start from a generated image, which serves as the first frame of the video. This frame can be completely generated — as shown in the first example below — or based on a real image that’s slightly manipulated to provide better control. You can, for example, modify the image either manually or by using an image-to-image model. One way of doing so is through inpainting, as shown in the second example below.

Learnings:

- Using images as specific frames in the generated video provides greater control, helping you anchor the video to specific views.

- Frames can be created from scratch using image generation models.

- You can use image-to-image models to change existing images so that they fit the storyline better.

Other, more sophisticated approaches include completely changing the style of your photos using style transfer models or making models learn a specific concept or person to then generate variations, as is done in DreamBooth. This, however, is very tough to pull off since fine-tuning isn’t trivial and requires a lot of trial and error to get right. Also, the final results will always be “as good as it can get”, with an output quality that’s nearly impossible to predict at the start of the tuning process. Nevertheless, when done right, the results look amazing, as shown in this “realistic Simpsons” video:

Image-based videos starting from edited content

A last option — which is what I mostly used to generate the video shown in this article’s introduction — is to manually edit images before feeding them into an image-to-video generative model. These manually edited images then serve as the starting frames of the generated video, or even as intermediate and final frames. This approach offers significant control, as you’re only bound by your own editing skills and the interpretative freedom of the video generation model between the anchoring frames. The following figure shows how I used Sora to create a segue between two consecutive anchor frames.

Learning: Most video generation tools (Runway, Sora, …) allow you to specify starting, intermediate, and/or ending frames, providing great control in the video generation process.

The great thing is that the quality of the edits doesn’t even need to be high, as long as the video generation model understands what you’re trying to do. The example below shows the initial edit — a simple copy-paste of a robot onto a generated background scene — and how this is transformed into the same robot walking through the forest.

Learning: Low-quality edits can still lead to high-quality video generation.

Since the generated video is anchored by the self-edited images, it becomes significantly easier to control the flow of the video and thus ensure that successive shots fit better together. In the next section, I dive into the details of how exactly this can be done.

Learning: Manually editing specific frames to anchor the generated video allows you to create consistent follow-up shots.

Make your own video!

OK, long intro aside, how can you now start to actually make a video?

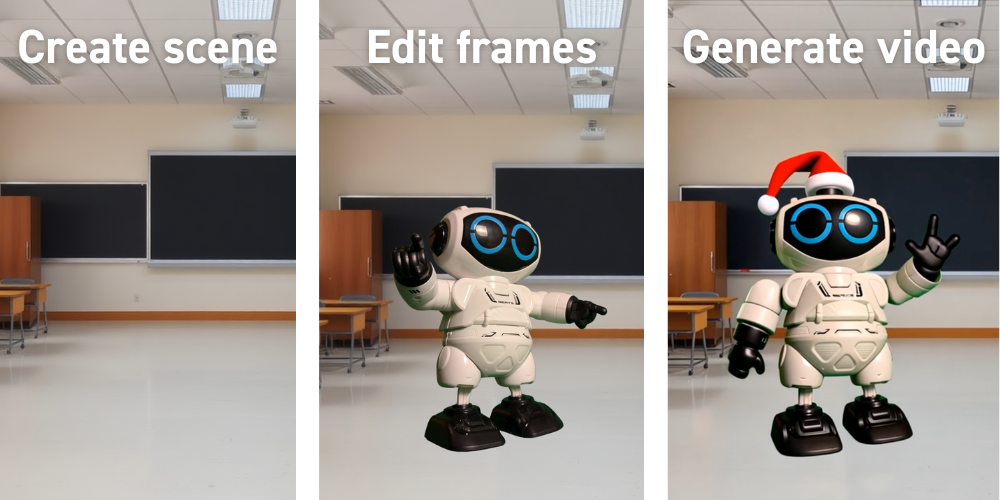

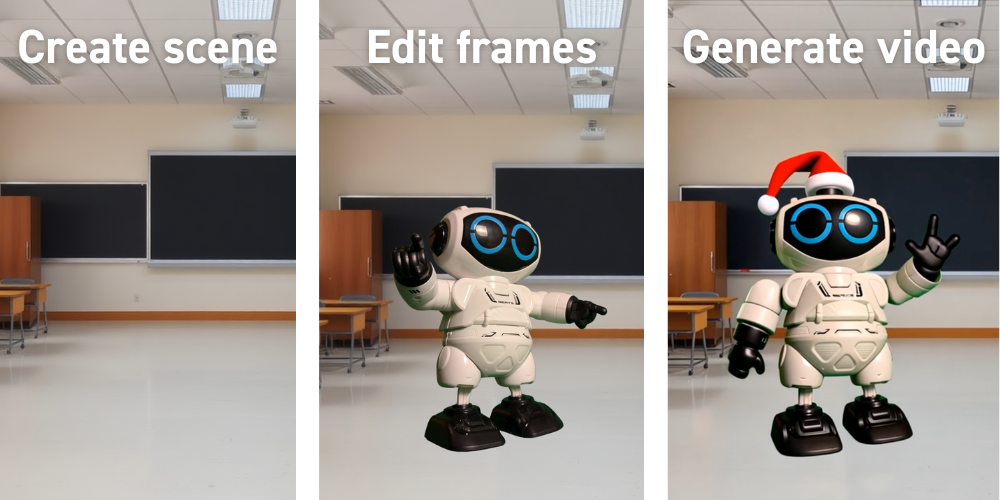

The next three sections explain step by step how I made most of the shots from the video you saw at the beginning of this article. In a nutshell, they almost always follow the same approach:

- Step 1: generate a scene through image generation

- Step 2: make edits to your scenes — even bad ones allowed!

- Step 3: turn your images into a generated video

Let’s get our hands dirty!

Step 1: generate a scene through image generation

First, let’s generate the setting of a specific scene. In the music video I created, increasingly smart agents are mentioned, so I decided a classroom setting would work well. To generate this scene, I used Flux 1.1 Schnell. Personally, I find the results from Black Forest Labs’ Flux models more satisfactory than those from OpenAI’s DALL-E 3, Midjourney’s models, or Stability AI’s Stable Diffusion models.

Learning: At the time of writing, Black Forest Labs’ Flux models provide the best text-to-image and inpainting results.

Step 2: make edits to your scenes — even bad ones allowed!

Next, I wanted to include a toy robot, the subject of the video, in the scene. To do so, I took a photo of the robot. For easier background removal, I used a green screen, though this is not a necessity: nowadays, AI models like Daniel Gatis’ rembg or Meta’s Segment Anything Model (SAM) are great at removing backgrounds. If you don’t want to worry about the local setup of these models, you can always use online solutions like remove.bg, too.

Once you have removed the subject’s background (and optionally that of other components, like dumbbells), you can paste the cut-outs into the original scene. The better the edit, the higher the quality of the generated video. Getting the lighting right was a challenge I didn’t manage to overcome. Nonetheless, it’s surprising how good the video generation can be, even when starting from very badly edited images. For editing, I recommend looking into Canva: it’s an easy-to-use online tool with a gentle learning curve.

Learning: Canva is great for editing images.
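To make the cut-and-paste idea from this step concrete, here is a minimal sketch in plain Python. It is not what rembg, SAM, or Canva do internally; it is a naive green-screen (chroma key) cut-out followed by a paste, working on images stored as rows of RGB tuples. The function names, the `dominance` threshold, and the tiny example images are all assumptions made for illustration.

```python
# Naive green-screen cut-out and paste on images stored as rows of RGB tuples.
# All names and the threshold value are illustrative assumptions.

def chroma_key(pixels, dominance=60):
    """Return RGBA rows; pixels whose green clearly dominates become transparent."""
    result = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            is_green = g - max(r, b) > dominance
            out_row.append((r, g, b, 0 if is_green else 255))
        result.append(out_row)
    return result


def paste(scene, subject_rgba, left, top):
    """Copy the opaque subject pixels onto a copy of the scene at (left, top)."""
    composed = [list(row) for row in scene]
    for y, row in enumerate(subject_rgba):
        for x, (r, g, b, a) in enumerate(row):
            ty, tx = top + y, left + x
            inside = 0 <= ty < len(composed) and 0 <= tx < len(composed[ty])
            if a > 0 and inside:
                composed[ty][tx] = (r, g, b)
    return composed


GREEN, GREY, BLUE = (0, 255, 0), (128, 128, 128), (0, 0, 255)
robot_photo = [[GREEN, GREY], [GREY, GREEN]]  # 2x2 "photo" taken on a green screen
classroom = [[BLUE] * 3 for _ in range(3)]    # 3x3 plain "scene"

cutout = chroma_key(robot_photo)
edited = paste(classroom, cutout, left=1, top=1)
```

In the result, only the grey "robot" pixels land on the scene, while the green-screen pixels are skipped. A hard cut like this leaves rough edges, which fits the point made above: the edit does not have to be clean for the video model to understand it.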

Step 3: turn your images into a generated video

Once you have your anchor frames, you can turn these into a video using a video generation model of choice and a well-crafted prompt. For this, I experimented with Runway’s video generation models and OpenAI’s Sora (no access to Google’s Veo 2 yet, unfortunately). In my experiments, Runway usually gave better results. Interestingly, Runway Gen-3 Alpha Turbo had the highest success rate, not its bigger sibling Gen-3 Alpha. That’s good news, since Turbo is cheaper, and generation credits for video models are expensive and scarce. Based on the videos I see passing around online, Google’s Veo 2 seems to be yet another big jump in generation capability. I hope it’ll be generally available soon!

Learnings:

- Runway’s Gen-3 Alpha Turbo had a higher success rate than Runway’s other models (Gen-2 and Gen-3 Alpha) and OpenAI’s Sora.

- Generation credits are expensive and scarce on all platforms. You don’t get much for your money, especially considering the high dependency on ‘luck’ during generation.

Generating videos is unfortunately still more often a miss than a hit. While it is rather trivial to pan the camera around in the scene, asking for specific movement of the video’s subject remains very tough. Instructions like “raise right hand” are nearly impossible, so don’t even think of trying to direct how the subject’s right hand should be raised. To illustrate, below is a failed generation of the same transition between a starting and an ending frame discussed in the previous section. For this generation, the instruction was to zoom in on a snowy road with a robot walking on it. For more hilariously uncanny video generations, see the section “Be aware! Expect failure …” further below.

Learning: Generating videos is more a miss than a hit. Directed movements, in particular, remain challenging to almost impossible.
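To get a rough feel for what “more a miss than a hit” means for your credit budget, the sketch below treats every generation as an independent attempt with a fixed success rate. That independence is a simplifying assumption, and the success rate, credit price, and shot count in the example are made-up numbers, not measurements from my experiments or prices from any platform.

```python
# Back-of-the-envelope budgeting, assuming independent attempts with a fixed
# success rate (a simplification). All example numbers are hypothetical.

def expected_attempts(success_rate):
    """Average number of generations needed until one is usable (1 / p)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


def chance_of_usable_clip(success_rate, attempts):
    """Probability that at least one of `attempts` generations is usable."""
    return 1 - (1 - success_rate) ** attempts


def expected_credits(success_rate, credits_per_attempt, shots):
    """Expected total credits for a video made of `shots` usable clips."""
    return shots * credits_per_attempt * expected_attempts(success_rate)


# Hypothetical example: 1 in 4 generations is usable, 50 credits per attempt,
# and the video needs 10 shots.
attempts_needed = expected_attempts(0.25)
budget = expected_credits(0.25, credits_per_attempt=50, shots=10)
odds_after_four = chance_of_usable_clip(0.25, attempts=4)
```

With these made-up numbers you would need four attempts per shot on average, and even after four attempts there is still roughly a one-in-three chance that a given shot has no usable clip yet.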

Repeat …

Once you get satisfactory results, you can repeat this process to get consecutive shots that fit together. This can be done in various ways, for example by creating a new starting frame (see first example below), or by continuing the video generation from the last frame of the previous generation but with a different prompt to change the subject’s behavior (see second example below).
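The second option, continuing from the last frame of the previous generation, can be written down as a simple shot plan. The sketch below only does the bookkeeping; it does not call Runway, Sora, or any other video API. The `Shot` class, the `final_frame_of` naming scheme, and the file names and prompts are hypothetical.

```python
# Bookkeeping sketch for chained shots. Nothing here talks to a video
# generation service; all class, function, and file names are hypothetical.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Shot:
    prompt: str
    start_frame: str                  # anchor image the shot starts from
    end_frame: Optional[str] = None   # optional anchor image the shot ends on


def final_frame_of(shot_number: int) -> str:
    """Assumed file name under which you export a shot's last frame."""
    return f"shot_{shot_number:02d}_last.png"


def plan_continuation(start_frame: str, prompts: List[str]) -> List[Shot]:
    """Chain shots so each one starts where the previous one ended."""
    shots = []
    frame = start_frame
    for number, prompt in enumerate(prompts, start=1):
        shots.append(Shot(prompt=prompt, start_frame=frame))
        frame = final_frame_of(number)
    return shots


plan = plan_continuation(
    "classroom_edit.png",
    ["robot lifts the dumbbells", "robot waves at the camera"],
)
```

Here the second shot starts from `shot_01_last.png`, the exported last frame of the first shot, so only the prompt changes between the two generations.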

Be aware! Expect failure …

As said earlier, generating videos is tough, so keep your expectations low. Do you want to generate a specific shot or movement? No chance. Do you want to make a good-looking shot of anything, but you don’t care about what exactly? Yeah, that could work! Is the generated result good, but do you want to change something minor in it? No chance again …

To give you a bit of a feel for this process, here’s a compilation of a few of the best failures I generated during the process of creating my video.

From regular video to music video — turn your story into a song

The cherry on the cake is a fully AI-generated song to complement the story depicted in the video. Of course, this was actually the foundation of the cake, since the music was generated before the video, but that’s not the point. The point is … how great has music generation become?!

The song used in the music video in the introduction of this article was created using Suno, the AI application that has had the biggest “wow!” factor for me so far. The ease and speed of generating music that’s actually quite good is amazing. To illustrate, the song for the music video was generated within five minutes of work, including the time the models took to process!

Learning: Suno is awesome!

My ideal music-generation workflow is as follows:

- Brainstorm about a story with ChatGPT (simple 4o is fine, o1 did not add much extra) and extract good parts.

- Converge the good parts and ideas to complete lyrics by providing ChatGPT with feedback and manual edits.

- Use Suno (v4) to generate songs and play around with different styles. Rewrite specific words differently if they sound off (instead of “GenAI” write “Gen-AI” to prevent a spoken “genaj”).

- Remaster the song in Suno (v4). This improves the quality and range of the song, which is almost always an improvement over the original.
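The respelling trick from the third step is easy to automate once you know which words get mispronounced. Below is a minimal sketch that rewrites lyrics before you paste them into Suno; it does not use any Suno API. The `RESPELLINGS` table and the `respell` function are hypothetical names, and the only entry is the “GenAI” example mentioned above.

```python
# Rewrite words that a music model tends to mispronounce before pasting the
# lyrics into the tool. The table and function names are illustrative.
import re

RESPELLINGS = {"GenAI": "Gen-AI"}


def respell(lyrics, respellings=RESPELLINGS):
    """Replace whole-word matches with their easier-to-sing spelling."""
    for word, spoken in respellings.items():
        pattern = r"\b" + re.escape(word) + r"\b"
        # A function replacement keeps `spoken` literal (no backslash escapes).
        lyrics = re.sub(pattern, lambda _match, s=spoken: s, lyrics)
    return lyrics


fixed = respell("GenAI Christmas, oh GenAI")
```

Because the pattern matches whole words only, lyrics that already contain “Gen-AI” are left unchanged, so running the function twice is harmless.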

All learnings in a nutshell

To summarize, here are all the lessons I learned while making my own music video and writing this article:

- It’s nearly impossible to create consistent follow-up shots with text-to-video models.

- Celebrities can be generated consistently due to the abundance of (visual) data on them, which, rightfully, raises ethical and legal concerns. Luckily, most generation engines help to monitor misuse by flagging such requests.

- Using images as specific frames in the generated video provides greater control, helping you anchor the video to specific views.

- Frames can be created from scratch using image generation models.

- You can use image-to-image models to change existing images so that they fit the storyline better.

- Most video generation tools (Runway, Sora, …) allow you to specify starting, intermediate, and/or ending frames, providing great control in the video generation process.

- Low-quality edits can still lead to high-quality video generation.

- Manually editing specific frames to anchor the generated video allows you to create consistent follow-up shots.

- At the time of writing, Black Forest Labs’ Flux models provide the best text-to-image and inpainting results.

- Canva is great for editing images.

- Runway’s Gen-3 Alpha Turbo had a higher success rate than Runway’s other models (Gen-2 and Gen-3 Alpha) and OpenAI’s Sora.

- Generation credits are expensive and scarce on all platforms. You don’t get much for your money, especially considering the high dependency on ‘luck’ during generation.

- Generating videos is more a miss than a hit. Directed movements, in particular, remain challenging to almost impossible.

- Suno is awesome!

Did you like this content? Feel free to follow me on LinkedIn to see my next explorations, or follow me on Medium!

How to Create a Customized GenAI Video in 3 Simple Steps was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
