Create a server to replicate OpenAI’s Chat Completions API, enabling any LLM to integrate with tools written for the OpenAI API

It is early 2024, and the Gen AI market is dominated by OpenAI, for good reasons: they have the first-mover advantage, having been the first to provide an easy-to-use API for an LLM, and they also offer arguably the most capable LLM to date, GPT-4. Given this, developers of all sorts of tools (agents, personal assistants, coding extensions) have turned to OpenAI for their LLM needs.

While there are many reasons to fuel your Gen AI creations with OpenAI’s GPT, there are plenty of reasons to opt for an alternative. Sometimes OpenAI might be less cost-efficient, at other times your data privacy policy may prohibit you from using it, or maybe you’re hosting an open-source LLM (or your own).

OpenAI’s market dominance means that many of the tools you might want to use only support the OpenAI API. Gen AI and LLM providers like OpenAI, Anthropic, and Google all seem to be creating different API schemas (perhaps intentionally), which adds a lot of extra work for devs who want to support all of them.

So, as a quick weekend project, I decided to implement a Python FastAPI server that is compatible with the OpenAI API specs, so that you can wrap virtually any LLM you like (either managed, like Anthropic’s Claude, or self-hosted) to mimic the OpenAI API. Thankfully, OpenAI’s client libraries have a base_url parameter you can set to point the client at your server instead of OpenAI’s servers, and most developers of the aforementioned tools let you set this parameter to your liking.

To do this, I followed OpenAI’s openly available Chat API reference, with some help from the code of vLLM, an Apache-2.0 licensed inference server for LLMs that also offers OpenAI API compatibility.

Game Plan

We will be building a mock API that mimics the way OpenAI’s Chat Completions API (/v1/chat/completions) works. While this implementation is in Python and uses FastAPI, I kept it quite simple so that it can easily be ported to another modern programming language like TypeScript or Go. We will use the official OpenAI Python client library to test it: the idea is that if we can get the library to think our server is OpenAI, we can get any program that uses it to think the same.
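Concretely, the official Python client builds each request URL by appending the endpoint path (/chat/completions) to its base_url and sends the API key as a bearer token, so pointing base_url at our server is all the redirection needed. The sketch below assembles an equivalent request with only the standard library, which can also be handy for poking at the server without the SDK. build_chat_request is a hypothetical helper, and nothing is sent until the request is passed to urllib.request.urlopen.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, messages: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request against any base URL."""
    # The endpoint path is appended to base_url, mirroring what the official client does.
    url = base_url.rstrip("/") + "/chat/completions"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # A mock server can ignore the key; OpenAI-style servers expect this header.
            "Authorization": f"Bearer {api_key}",
        },
    )


req = build_chat_request(
    "http://localhost:8000",
    "fake-api-key",
    "mock-gpt-model",
    [{"role": "user", "content": "Say this is a test"}],
)
print(req.full_url)  # http://localhost:8000/chat/completions
```

Once the server is running, json.load(urllib.request.urlopen(req)) would return the parsed response body.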

First step — chat completions API, no streaming

We’ll begin with the non-streaming case, starting by modeling our request:

```python
from typing import List, Optional

from pydantic import BaseModel


class ChatMessage(BaseModel):
    role: str
    content: str


class ChatCompletionRequest(BaseModel):
    model: str = "mock-gpt-model"
    messages: List[ChatMessage]
    max_tokens: Optional[int] = 512
    temperature: Optional[float] = 0.1
    stream: Optional[bool] = False
```

This Pydantic model represents the request from the client, aiming to replicate the API reference. For the sake of brevity, it does not implement the entire spec, only the bare bones needed for it to work. If you’re missing a parameter that is part of the API spec (like top_p), you can simply add it to the model.

The ChatCompletionRequest models the parameters OpenAI uses in its requests. The chat API spec requires a list of ChatMessage objects, which acts as the chat history: the client is usually in charge of keeping it and sending it back with every request. Each chat message has a role attribute (usually system, assistant, or user) and a content attribute containing the actual message text.
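Because each request carries the full history, the client has to do the bookkeeping. A minimal sketch of that loop, using plain dicts in the same role/content shape; chat_turn and echo_backend are hypothetical names, and send stands in for whatever actually calls the API:

```python
from typing import Callable

Message = dict[str, str]


def chat_turn(history: list[Message], user_text: str, send: Callable[[list[Message]], str]) -> str:
    """Append the user's message, send the whole history, and record the reply."""
    history.append({"role": "user", "content": user_text})
    # The server only sees what we send, so the entire list goes out every time.
    reply = send(history)
    history.append({"role": "assistant", "content": reply})
    return reply


# A fake backend that echoes the last message, like the mock server in this article.
def echo_backend(messages: list[Message]) -> str:
    return f"echo: {messages[-1]['content']} ({len(messages)} messages received)"


history: list[Message] = [{"role": "system", "content": "You are a helpful assistant."}]
print(chat_turn(history, "Hi", echo_backend))            # echo: Hi (2 messages received)
print(chat_turn(history, "Still there?", echo_backend))  # echo: Still there? (4 messages received)
```

The growing message count is the point: a stateless server like ours never remembers earlier turns on its own.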

Next, we’ll write our FastAPI chat completions endpoint:

```python
import time

from fastapi import FastAPI

app = FastAPI(title="OpenAI-compatible API")


@app.post("/chat/completions")
async def chat_completions(request: ChatCompletionRequest):
    # Echo the last message back, or explain that there was nothing to echo.
    if request.messages:
        resp_content = "As a mock AI Assistant, I can only echo your last message: " + request.messages[-1].content
    else:
        resp_content = "As a mock AI Assistant, I can only echo your last message, but there were no messages!"

    return {
        "id": "1337",
        "object": "chat.completion",
        "created": int(time.time()),  # OpenAI uses integer Unix timestamps
        "model": request.model,
        "choices": [{
            "index": 0,
            "message": ChatMessage(role="assistant", content=resp_content),
            "finish_reason": "stop",
        }],
    }
```

That simple.

Testing our implementation

Assuming both code blocks are in a file called main.py, we’ll install three Python libraries in our environment of choice (it’s always best to create a new one), pip install fastapi uvicorn openai, and launch the server from a terminal:

```shell
uvicorn main:app
```

Using another terminal (or by launching the server in the background), we will open a Python console and copy-paste the following code, taken straight from OpenAI’s Python Client Reference:

```python
from openai import OpenAI

# init client and connect to localhost server
client = OpenAI(
    api_key="fake-api-key",
    base_url="http://localhost:8000"  # change the default port if needed
)

# call API
chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Say this is a test",
        }
    ],
    model="gpt-1337-turbo-pro-max",
)

# print the top "choice"
print(chat_completion.choices[0].message.content)
```

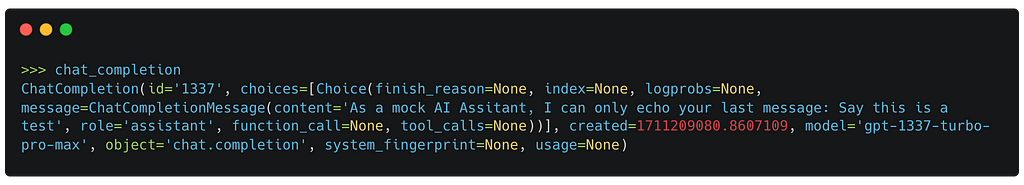

If you’ve done everything correctly, the response from the server should be correctly printed. It’s also worth inspecting the chat_completion object to see that all relevant attributes are as sent from our server. You should see something like this:

Leveling up — supporting streaming

As LLM generation tends to be slow (computationally expensive), it’s worth streaming your generated content back to the client, so that the user can see the response as it’s being generated, without having to wait for it to finish. If you recall, we gave ChatCompletionRequest a boolean stream property — this lets the client request that the data be streamed back to it, rather than sent at once.

This makes things a bit more complex. We will create a generator function to wrap our mock response (in a real-world scenario, we would want a generator hooked up to our LLM’s output):

```python
import asyncio
import json
import time


async def _resp_async_generator(text_resp: str):
    # let's pretend every word is a token and return it over time
    tokens = text_resp.split(" ")

    for token in tokens:
        chunk = {
            "id": "1337",  # all chunks of one completion share the same id
            "object": "chat.completion.chunk",
            "created": int(time.time()),
            "model": "mock-gpt-model",
            "choices": [{"index": 0, "delta": {"content": token + " "}}],
        }
        # Server-Sent Events: each event is a "data:" line followed by a blank line
        yield f"data: {json.dumps(chunk)}\n\n"
        await asyncio.sleep(1)

    yield "data: [DONE]\n\n"
```
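The framing is what the client cares about: each event is a data: line holding one JSON chunk, followed by a blank line, and the stream ends with data: [DONE]. In a real deployment the text pieces would come from your model rather than from str.split. A sketch that wraps any iterable of strings, assuming the model exposes its output that way (sse_chat_chunks is a hypothetical name); following OpenAI’s chunk shape, the first delta carries the role and the last one carries finish_reason:

```python
import json
import time
from typing import Iterable, Iterator, Optional


def sse_chat_chunks(pieces: Iterable[str], model: str, completion_id: str = "chatcmpl-mock") -> Iterator[str]:
    """Frame text pieces as Server-Sent Events in the chat.completion.chunk format."""
    created = int(time.time())

    def event(delta: dict, finish_reason: Optional[str] = None) -> str:
        chunk = {
            "id": completion_id,  # every chunk of one completion shares the same id
            "object": "chat.completion.chunk",
            "created": created,
            "model": model,
            "choices": [{"index": 0, "delta": delta, "finish_reason": finish_reason}],
        }
        return f"data: {json.dumps(chunk)}\n\n"

    yield event({"role": "assistant", "content": ""})  # opening chunk announces the role
    for piece in pieces:
        yield event({"content": piece})
    yield event({}, finish_reason="stop")  # closing chunk has an empty delta
    yield "data: [DONE]\n\n"


events = list(sse_chat_chunks(["Hello", " world"], model="mock-gpt-model"))
print(len(events))  # 5
```

Starlette’s StreamingResponse accepts a plain synchronous iterator as well as an async one (it iterates sync ones in a threadpool), so StreamingResponse(sse_chat_chunks(pieces, request.model), media_type="text/event-stream") would slot into the endpoint even if the model call blocks.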

Now we modify our original endpoint to return a StreamingResponse when stream is True:

```python
import time

from fastapi import FastAPI
from starlette.responses import StreamingResponse

app = FastAPI(title="OpenAI-compatible API")


# replaces the earlier version of the endpoint in main.py
@app.post("/chat/completions")
async def chat_completions(request: ChatCompletionRequest):
    if request.messages:
        resp_content = "As a mock AI Assistant, I can only echo your last message: " + request.messages[-1].content
    else:
        resp_content = "As a mock AI Assistant, I can only echo your last message, but there wasn't one!"

    if request.stream:
        # OpenAI streams Server-Sent Events, so use the SSE media type
        return StreamingResponse(_resp_async_generator(resp_content), media_type="text/event-stream")

    return {
        "id": "1337",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": request.model,
        "choices": [{
            "index": 0,
            "message": ChatMessage(role="assistant", content=resp_content),
            "finish_reason": "stop",
        }],
    }
```

Testing the streaming implementation

After restarting the uvicorn server, we’ll open up a Python console and enter this code (again, adapted from OpenAI’s library docs):

```python
from openai import OpenAI

# init client and connect to localhost server
client = OpenAI(
    api_key="fake-api-key",
    base_url="http://localhost:8000"  # change the default port if needed
)

stream = client.chat.completions.create(
    model="mock-gpt-model",
    messages=[{"role": "user", "content": "Say this is a test"}],
    stream=True,
)

for chunk in stream:
    print(chunk.choices[0].delta.content or "")
```

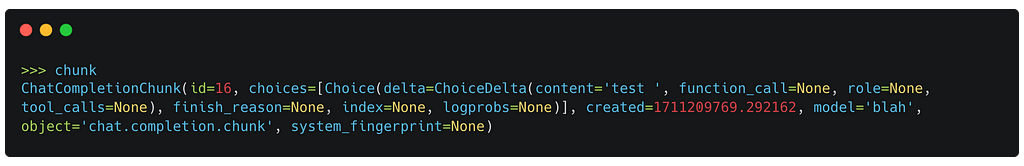

You should see each word in the server’s response being slowly printed, mimicking token generation. We can inspect the last chunk object to see something like this:
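Under the hood, the client library reads the data: events, stops at [DONE], and parses each JSON chunk. A standard-library sketch of that consumer, which reassembles the full reply from the deltas, can help debug a server without the SDK. collect_stream is a hypothetical helper; it assumes single-line data: fields and a single choice (n=1), which is what our generator emits.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[str]) -> str:
    """Rebuild the assistant's reply from raw SSE lines of a chat completion stream."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if choices:
            # Deltas may omit "content" (e.g. role-only or final chunks).
            parts.append(choices[0].get("delta", {}).get("content") or "")
    return "".join(parts)


raw = (
    'data: {"choices": [{"delta": {"content": "Say "}}]}\n\n'
    'data: {"choices": [{"delta": {"content": "this "}}]}\n\n'
    "data: [DONE]\n\n"
)
print(repr(collect_stream(raw.splitlines())))  # 'Say this '
```

Against the running server, you could pass it the decoded lines of a streamed HTTP response, e.g. collect_stream(line.decode() for line in urllib.request.urlopen(req)), where req is a urllib.request.Request that POSTs a JSON body with "stream": true to /chat/completions.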

Putting it all together

Finally, in the gist below, you can see the entire code for the server.

Final Notes

- There are many other interesting things we can do here, like supporting other request parameters and other OpenAI abstractions such as function calling and the Assistants API.

- The lack of standardization in LLM APIs makes it difficult to switch providers, for both companies and developers of LLM-wrapping packages. In the absence of any standard, the approach I’ve taken here is to abstract the LLM behind the specs of the biggest and most mature API.

How to build an OpenAI-compatible API was originally published in Towards Data Science on Medium.
