Use LangChain and OpenAI tools to extract structured information from images of receipts stored in Google Drive

This article details how we can use open source Python packages such as LangChain, pytesseract and PyPDF, along with gpt-4-vision and gpt-3.5-turbo, to identify and extract key information from images of receipts. The resulting dataset could be used for a “chat to receipts” application. Check out the full code here.

Paper receipts come in all sorts of styles and formats and represent an interesting target for automated information extraction. They also provide a wealth of itemized costs that, if aggregated into a database, could be very useful for anyone interested in tracking their spend at a more detailed level than offered by bank statements.

Wouldn’t it be cool if you could take a photo of a receipt, upload it to some application, then have its information extracted and appended to your personal database of expenses, which you could then query in natural language? You could then ask questions of the data like “what did I buy when I last visited IKEA?” or “what items do I spend the most money on at Safeway?”. Such a system might also naturally extend to corporate finance and expense tracking. In this article, we’ll build a simple application that deals with the first part of this process: extracting information from receipts so that it is ready to be stored in a database. Our system will monitor a Google Drive folder for new receipts, process them and append the results to a .csv file.

1. Background and motivation

Technically, we’ll be doing a type of automated information extraction called template filling. We have a pre-defined schema of fields that we want to extract from our receipts, and the task will be to fill these out, or leave them blank where appropriate. One major issue here is that the information contained in images or scans of receipts is unstructured. Although Optical Character Recognition (OCR) or PDF text extraction libraries might do a decent job at finding the text, they are not good at preserving the relative positions of words in a document, which can make it difficult to match an item’s name to its price, for example.

Traditionally, this issue is solved by template matching, where a pre-defined geometric template of the document is created and then extraction is only run in the areas known to contain important information. A great description of this can be found here. However, this system is inflexible. What if a new format of receipt is added?
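To make the contrast with the LLM approach concrete, here is a minimal sketch of geometric template matching. It assumes OCR has already produced words with bounding-box coordinates; the `Word` tuple, the `TEMPLATE` regions and the sample words are all hypothetical, hand-picked values for one imaginary receipt layout. Extraction simply keeps the words whose box centre falls inside each predefined region.

```python
from typing import Dict, List, NamedTuple, Tuple


class Word(NamedTuple):
    """An OCR'd word with its bounding box in pixels (hypothetical format)."""
    text: str
    left: int
    top: int
    width: int
    height: int


# Hypothetical template for ONE receipt layout: field -> (x0, y0, x1, y1)
TEMPLATE: Dict[str, Tuple[int, int, int, int]] = {
    "vendor_name": (0, 0, 400, 60),
    "total_after_tax": (250, 700, 400, 740),
}


def extract_with_template(
    words: List[Word], template: Dict[str, Tuple[int, int, int, int]]
) -> Dict[str, str]:
    """Return the text found inside each template region, or "N/A"."""
    result = {}
    for field, (x0, y0, x1, y1) in template.items():
        inside = [
            w.text
            for w in words
            # A word belongs to a region if the centre of its box lies inside it
            if x0 <= w.left + w.width / 2 <= x1 and y0 <= w.top + w.height / 2 <= y1
        ]
        result[field] = " ".join(inside) if inside else "N/A"
    return result


words = [
    Word("SAFEWAY", 120, 10, 160, 30),
    Word("TOTAL", 40, 710, 80, 20),
    Word("23.47", 300, 710, 60, 20),
]
print(extract_with_template(words, TEMPLATE))
# {'vendor_name': 'SAFEWAY', 'total_after_tax': '23.47'}
```

A receipt from a different store, with its total printed somewhere else on the page, would silently come back as "N/A", which is exactly the inflexibility described above.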

To get around this, more advanced services like AWS Textract and AWS Rekognition use a combination of pre-trained deep learning models for object detection, bounding box generation and named entity recognition (NER). I haven’t actually tried out these services on the problem at hand, but it would be really interesting to do so in order to compare the results against what we build with OpenAI’s LLMs.

Large Language Models (LLMs) such as gpt-3.5-turbo are also great at information extraction and template filling from unstructured text, especially after being given a few examples in their prompt. This makes them much more flexible than template matching or fine-tuning, since adding a few examples of a new receipt format is much faster and cheaper than re-training a model or building a new geometric template.

If we are to use gpt-3.5-turbo on text extracted from receipts, the question then becomes: how can we build the examples from which it can learn? We could of course do this manually, but that wouldn’t scale well. Here we will explore the option of using gpt-4-vision for this. This version of gpt-4 can handle conversations that include images, and appears particularly good at describing the content of images. Given an image of a receipt and a description of the key information we want to extract, gpt-4-vision should therefore be able to do the job in one shot, provided that the image is sufficiently clear.

Why wouldn’t we just use gpt-4-vision alone for this task and abandon gpt-3.5-turbo or other smaller LLMs? Technically we could, and the result might even be more accurate. But gpt-4-vision is very expensive and its API calls are rate-limited, so this system also won’t scale. Perhaps in the not-too-distant future, though, vision LLMs will become a standard tool in this field of information extraction from documents.

Another motivation for this article is about exploring how we can build this system using Langchain, a popular open source LLM orchestration library. In order to force an LLM to return structured output, prompt engineering is required and Langchain has some excellent tools for this. We will also try to ensure that our system is built in a way that is extensible, because this is just the first part of what could become a larger “chat to receipts” project.

With a brief background out of the way, let’s get started with the code! I will be using Python 3.9 and LangChain 0.1.14 here, and full details can be found in the repo.

2. Connect to Google Drive

We need a convenient place to store our raw receipt data. Google Drive is one choice, and it provides a Python API that is relatively easy to use. To capture the receipts I use the GeniusScan app, which can upload .pdf, .jpeg or other file types from the phone directly to a Google Drive folder. The app also does some useful pre-processing such as automatic document cropping, which helps with the extraction process.

To set up API access to Google Drive, you’ll need to create service account credentials which can be generated by following the instructions here. For reference, I created a folder in my drive called “receiptchat” and set up a key pair that enables reading of data from that folder.

The following code can be used to set up a Drive service object, which gives you access to various methods for querying Google Drive:

```python
import os

from googleapiclient.discovery import build
from oauth2client.service_account import ServiceAccountCredentials


class GoogleDriveService:
    SCOPES = ["https://www.googleapis.com/auth/drive"]

    def __init__(self):
        # The directory where your credentials are stored
        base_path = os.path.dirname(os.path.dirname(os.path.dirname(__file__)))
        # The name of the file containing your credentials
        credential_path = os.path.join(base_path, "gdrive_credential.json")
        os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = credential_path

    def build(self):
        # Get credentials into the desired format
        creds = ServiceAccountCredentials.from_json_keyfile_name(
            os.getenv("GOOGLE_APPLICATION_CREDENTIALS"), self.SCOPES
        )
        # Set up the Drive service object. Inside this method, the bare name
        # `build` still refers to googleapiclient.discovery.build
        service = build("drive", "v3", credentials=creds, cache_discovery=False)
        return service
```

In our simple application, we only really need to do two things: list all the files in the Drive folder and download a selection of them. The following class handles this:

```python
import io
from typing import List, Optional

import googleapiclient.discovery
from googleapiclient.errors import HttpError
from googleapiclient.http import MediaIoBaseDownload


class GoogleDriveLoader:
    # These are the types of files we want to download
    VALID_EXTENSIONS = [".pdf", ".jpeg"]

    def __init__(self, service: googleapiclient.discovery.Resource):
        self.service = service

    def search_for_files(self) -> Optional[List[dict]]:
        """
        See https://developers.google.com/drive/api/guides/search-files#python
        """
        # This query searches for objects that are not folders and
        # contain the valid extensions
        query = "mimeType != 'application/vnd.google-apps.folder' and ("
        for i, ext in enumerate(self.VALID_EXTENSIONS):
            if i == 0:
                query += "name contains '{}' ".format(ext)
            else:
                query += "or name contains '{}' ".format(ext)
        query = query.rstrip()
        query += ")"

        files = []
        page_token = None
        try:
            # Page through the results until there is no nextPageToken
            while True:
                response = (
                    self.service.files()
                    .list(
                        q=query,
                        spaces="drive",
                        fields="nextPageToken, files(id, name)",
                        pageToken=page_token,
                    )
                    .execute()
                )
                for file in response.get("files", []):
                    print(f'Found file: {file.get("name")}, {file.get("id")}')
                    files.append({"id": file.get("id"), "name": file.get("name")})
                page_token = response.get("nextPageToken", None)
                if page_token is None:
                    break
        except HttpError as error:
            print(f"An error occurred: {error}")
            files = None
        return files

    def download_file(self, real_file_id: str) -> Optional[bytes]:
        """
        Downloads a single file and returns its contents as bytes
        """
        try:
            request = self.service.files().get_media(fileId=real_file_id)
            file = io.BytesIO()
            downloader = MediaIoBaseDownload(file, request)
            done = False
            while done is False:
                status, done = downloader.next_chunk()
                print(f"Download {int(status.progress() * 100)}%.")
        except HttpError as error:
            print(f"An error occurred: {error}")
            # Return None rather than calling getvalue() on a missing buffer
            return None
        return file.getvalue()
```

The two classes can then be used together like this:

```python
service = GoogleDriveService().build()
loader = GoogleDriveLoader(service)

# Returns a list of dicts holding the unique id and name of each file
all_files = loader.search_for_files()

# Returns the raw bytes of one of those files (here, the first one found)
pdf_bytes = loader.download_file(all_files[0]["id"])
```
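The loop that assembles the search query in `search_for_files` can be expressed more compactly with `str.join`. The sketch below uses a hypothetical standalone function, `build_drive_query`, that produces the same query string for the `VALID_EXTENSIONS` list, which makes the query easy to unit-test without a Drive connection. The optional `folder_id` argument is an illustrative addition, not part of the original code: it appends Drive's `'<id>' in parents` clause to restrict the search to a single folder, such as the “receiptchat” folder.

```python
from typing import Optional, Sequence

FOLDER_MIME_TYPE = "application/vnd.google-apps.folder"


def build_drive_query(extensions: Sequence[str], folder_id: Optional[str] = None) -> str:
    """Build a Drive v3 search string for non-folder files matching any extension."""
    name_clauses = " or ".join(f"name contains '{ext}'" for ext in extensions)
    query = f"mimeType != '{FOLDER_MIME_TYPE}' and ({name_clauses})"
    if folder_id is not None:
        # Optionally limit results to files directly inside one folder
        query += f" and '{folder_id}' in parents"
    return query


print(build_drive_query([".pdf", ".jpeg"]))
# mimeType != 'application/vnd.google-apps.folder' and (name contains '.pdf' or name contains '.jpeg')
```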

Great! So now we can connect to Google Drive and bring image or pdf data onto our local machine. Next, we must process it and extract text.

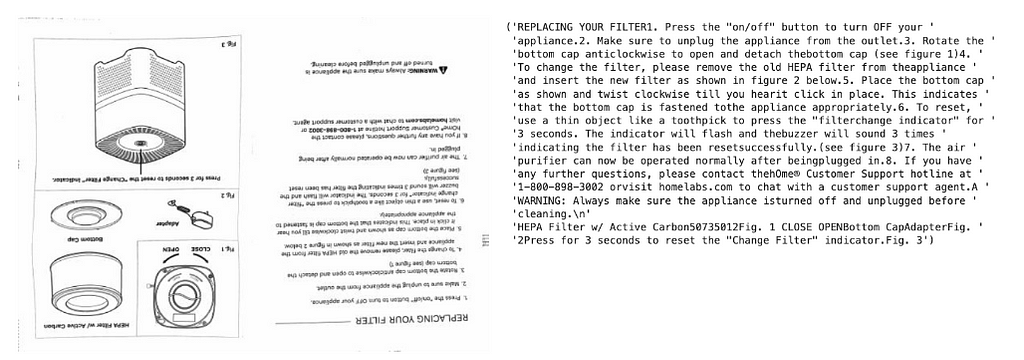

3. Extract raw text from .pdfs and images

Multiple well-documented open-source libraries exist to extract raw text from PDFs and images. For PDFs we will use PyPDF here (the code imports it under its older package name, PyPDF2), although for a more comprehensive view of similar packages I recommend this article. For images in JPEG format, we will make use of pytesseract, which is a wrapper for the Tesseract OCR engine. Installation instructions for that can be found here. Finally, we also want to be able to convert PDFs into JPEG format, which can be done with the pdf2image package.

Both PyPDF and pytesseract provide high-level methods for extracting text from documents, and both have options for tuning this. pytesseract, for example, can extract both text and bounding boxes (see here), which may be useful in the future if we want to feed the LLM more information about the format of the receipt whose text it’s processing. pdf2image provides a method to convert PDF bytes to a JPEG image, which is exactly what we want to do here. To convert JPEG bytes to an image that can be visualized, we’ll use the PIL package.

```python
import io
from abc import ABC, abstractmethod

import numpy as np
import pytesseract
from pdf2image import convert_from_bytes
from PIL import Image
from PyPDF2 import PdfReader

DEFAULT_DPI = 50


class FileBytesToImage(ABC):
    @staticmethod
    @abstractmethod
    def convert_bytes_to_jpeg(file_bytes):
        raise NotImplementedError

    @staticmethod
    @abstractmethod
    def convert_bytes_to_text(file_bytes):
        raise NotImplementedError


class PDFBytesToImage(FileBytesToImage):
    @staticmethod
    def convert_bytes_to_jpeg(file_bytes, dpi=DEFAULT_DPI, return_array=False):
        # Render the first page of the PDF as a PIL image
        jpeg_data = convert_from_bytes(file_bytes, fmt="jpeg", dpi=dpi)[0]
        if return_array:
            jpeg_data = np.asarray(jpeg_data)
        return jpeg_data

    @staticmethod
    def convert_bytes_to_text(file_bytes):
        pdf_data = PdfReader(stream=io.BytesIO(initial_bytes=file_bytes))
        # Receipt data should only have one page
        page = pdf_data.pages[0]
        return page.extract_text()


class JpegBytesToImage(FileBytesToImage):
    @staticmethod
    def convert_bytes_to_jpeg(file_bytes, dpi=DEFAULT_DPI, return_array=False):
        # dpi is unused here; it keeps the signature consistent with the PDF class
        jpeg_data = Image.open(io.BytesIO(file_bytes))
        if return_array:
            jpeg_data = np.array(jpeg_data)
        return jpeg_data

    @staticmethod
    def convert_bytes_to_text(file_bytes):
        jpeg_data = Image.open(io.BytesIO(file_bytes))
        # Run Tesseract OCR on the image
        text_data = pytesseract.image_to_string(image=jpeg_data, nice=1)
        return text_data
```

The code above uses abstract base classes to improve extensibility. Let’s say we want to add support for another file type in the future. If we write the associated class and inherit from FileBytesToImage, we are forced to write convert_bytes_to_jpeg and convert_bytes_to_text methods in it. This makes it less likely that our classes will introduce errors downstream in a large application.
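A small, self-contained illustration of that guarantee follows. `Converter` mirrors the structure of `FileBytesToImage`, and `IncompletePngConverter`, `CompletePngConverter` and `can_instantiate` are hypothetical names made up for this sketch. Python raises `TypeError` when you try to instantiate a subclass that leaves an abstract method unimplemented. Note that the check only fires on instantiation, so calling a static method directly on the class would not trigger it.

```python
from abc import ABC, abstractmethod


class Converter(ABC):
    """Stand-in for FileBytesToImage with the same two abstract methods."""

    @staticmethod
    @abstractmethod
    def convert_bytes_to_jpeg(file_bytes):
        raise NotImplementedError

    @staticmethod
    @abstractmethod
    def convert_bytes_to_text(file_bytes):
        raise NotImplementedError


class IncompletePngConverter(Converter):
    # Hypothetical subclass that forgets to implement convert_bytes_to_text
    @staticmethod
    def convert_bytes_to_jpeg(file_bytes):
        return file_bytes


class CompletePngConverter(IncompletePngConverter):
    # Supplying the missing method makes the class concrete
    @staticmethod
    def convert_bytes_to_text(file_bytes):
        return ""


def can_instantiate(cls) -> bool:
    """Return True if Python allows creating an instance of cls."""
    try:
        cls()
    except TypeError:
        return False
    return True


print(can_instantiate(IncompletePngConverter))  # False
print(can_instantiate(CompletePngConverter))  # True
```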

The code can be used as follows:

```python
bytes_to_image = PDFBytesToImage()
image = bytes_to_image.convert_bytes_to_jpeg(pdf_bytes)
text = bytes_to_image.convert_bytes_to_text(pdf_bytes)
```
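On the bounding-box point mentioned earlier in this section: `pytesseract.image_to_data(image, output_type=pytesseract.Output.DICT)` returns a dictionary of parallel lists, including `text`, `left`, `top`, `block_num`, `par_num` and `line_num`. The sketch below is not part of this article’s pipeline; it uses a hypothetical helper, `group_words_into_lines`, to rebuild each printed line left to right from that dictionary, which is one way to keep an item’s name next to its price. It runs on a small hand-made dictionary, so Tesseract does not need to be installed.

```python
from collections import defaultdict
from typing import Dict, List


def group_words_into_lines(data: Dict[str, list]) -> List[str]:
    """Rebuild text lines from a pytesseract image_to_data(..., Output.DICT) result."""
    lines = defaultdict(list)
    for i, word in enumerate(data["text"]):
        if not word.strip():
            continue  # skip empty entries (block, paragraph and line level rows)
        key = (data["block_num"][i], data["par_num"][i], data["line_num"][i])
        lines[key].append((data["left"][i], word))
    # Order lines by Tesseract's block/paragraph/line numbers, words by x position
    return [
        " ".join(word for _, word in sorted(words))
        for _, words in sorted(lines.items())
    ]


# Hand-made stand-in for real image_to_data output
sample = {
    "text": ["", "Milk", "3.49", "", "Bread", "2.99"],
    "left": [0, 10, 200, 0, 10, 200],
    "block_num": [1, 1, 1, 1, 1, 1],
    "par_num": [1, 1, 1, 1, 1, 1],
    "line_num": [1, 1, 1, 2, 2, 2],
}
print(group_words_into_lines(sample))  # ['Milk 3.49', 'Bread 2.99']
```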

4. Information extraction with gpt-4-vision

Now let’s use Langchain to prompt gpt-4-vision to extract some information from our receipts. We can start by using Langchain’s support for Pydantic to create a model for the output.

```python
from typing import List

from langchain_core.pydantic_v1 import BaseModel, Field


class ReceiptItem(BaseModel):
    """Information about a single item on a receipt"""

    # Pass the text as `description`; a bare positional argument to Field()
    # would be treated as the field's default value instead
    item_name: str = Field(description="The name of the purchased item")
    item_cost: str = Field(description="The cost of the item")


class ReceiptInformation(BaseModel):
    """Information extracted from a receipt"""

    vendor_name: str = Field(
        description="The name of the company who issued the receipt"
    )
    vendor_address: str = Field(
        description="The street address of the company who issued the receipt"
    )
    datetime: str = Field(
        description="The date and time that the receipt was printed in MM/DD/YY HH:MM format"
    )
    items_purchased: List[ReceiptItem] = Field(description="List of purchased items")
    subtotal: str = Field(description="The total cost before tax was applied")
    tax_rate: str = Field(description="The tax rate applied")
    total_after_tax: str = Field(description="The total cost after tax")
```

This is very powerful because LangChain can use this Pydantic model to construct format instructions for the LLM, which can be included in the prompt to instruct it to produce JSON output with the specified fields. Adding new fields is as straightforward as updating the model class.
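To make the “JSON with the specified fields” idea concrete, here is a standard-library-only sketch of what has to happen on the way back: strip the optional Markdown code fence that chat models often wrap around JSON, parse the reply and check that every expected field is present. This is a simplified stand-in written for illustration, not LangChain’s `JsonOutputParser` implementation; `parse_receipt_reply` is a hypothetical name, and `EXPECTED_FIELDS` just lists the fields of `ReceiptInformation`.

```python
import json
import re
from typing import Any, Dict

# Field names of the ReceiptInformation model defined above
EXPECTED_FIELDS = {
    "vendor_name", "vendor_address", "datetime", "items_purchased",
    "subtotal", "tax_rate", "total_after_tax",
}

TICKS = "`" * 3  # three backticks, i.e. a Markdown code fence marker
# Captures the payload inside an optional fence, with or without a "json" tag
FENCE_RE = re.compile(rf"^{TICKS}(?:json)?\s*(.*?)\s*{TICKS}$", re.DOTALL)


def parse_receipt_reply(reply: str) -> Dict[str, Any]:
    """Parse a model reply as JSON and check that all expected fields exist."""
    text = reply.strip()
    match = FENCE_RE.match(text)
    if match:
        text = match.group(1)  # drop the surrounding fence
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = EXPECTED_FIELDS - set(data)
    if missing:
        raise ValueError(f"Reply is missing fields: {sorted(missing)}")
    return data


blank = {name: "N/A" for name in EXPECTED_FIELDS}
blank["items_purchased"] = []
fenced_reply = TICKS + "json\n" + json.dumps(blank) + "\n" + TICKS
print(parse_receipt_reply(fenced_reply) == blank)  # True
```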

Next, let’s build the prompt, which will just be static:

```python
from dataclasses import dataclass


@dataclass
class VisionReceiptExtractionPrompt:
    template: str = """
You are an expert at information extraction from images of receipts.

Given this image of a receipt, extract the following information:
- The name and address of the vendor
- The names and costs of each of the items that were purchased
- The date and time that the receipt was issued. This must be formatted like 'MM/DD/YY HH:MM'
- The subtotal (i.e. the total cost before tax)
- The tax rate
- The total cost after tax

Do not guess. If some information is missing just return "N/A" in the relevant field.
If you determine that the image is not of a receipt, just set all the fields in the formatting instructions to "N/A".
You must obey the output format under all circumstances. Please follow the formatting instructions exactly.
Do not return any additional comments or explanation.
"""
```

Now, we need to build a class that will take in an image and send it to the LLM along with the prompt and format instructions.

```python
import base64

from langchain.callbacks import get_openai_callback
from langchain.chains import TransformChain
from langchain_core.messages import HumanMessage
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.runnables import chain


class VisionReceiptExtractionChain:
    def __init__(self, llm):
        self.llm = llm
        self.chain = self.set_up_chain()

    @staticmethod
    def load_image(path: dict) -> dict:
        """Load image and encode it as base64."""

        def encode_image(path):
            with open(path, "rb") as image_file:
                return base64.b64encode(image_file.read()).decode("utf-8")

        image_base64 = encode_image(path["image_path"])
        return {"image": image_base64}

    def set_up_chain(self):
        extraction_model = self.llm
        prompt = VisionReceiptExtractionPrompt()
        parser = JsonOutputParser(pydantic_object=ReceiptInformation)

        load_image_chain = TransformChain(
            input_variables=["image_path"],
            output_variables=["image"],
            transform=self.load_image,
        )

        # Build a custom chain that sends the prompt, the format
        # instructions and the image in a single message
        @chain
        def receipt_model_chain(inputs: dict) -> str:
            """Invoke model"""
            msg = extraction_model.invoke(
                [
                    HumanMessage(
                        content=[
                            {"type": "text", "text": prompt.template},
                            {"type": "text", "text": parser.get_format_instructions()},
                            {
                                "type": "image_url",
                                "image_url": {
                                    "url": f"data:image/jpeg;base64,{inputs['image']}"
                                },
                            },
                        ]
                    )
                ]
            )
            return msg.content

        return load_image_chain | receipt_model_chain | parser

    def run_and_count_tokens(self, input_dict: dict):
        # Track token usage and cost for this call
        with get_openai_callback() as cb:
            result = self.chain.invoke(input_dict)
        return result, cb
```

The main method to understand here is set_up_chain, which we will walk through step by step. These steps were inspired by this blog post.

- Initialize the prompt, which in this case is just a block of text with some general instructions

- Create a JsonOutputParser from the Pydantic model we made above. This converts the model into a set of formatting instructions that can be added to the prompt

- Make a TransformChain that allows us to incorporate custom functions (in this case the load_image function) into the overall chain. Note that the chain will take in a variable called image_path and output a variable called image, which is a base64-encoded string representing the image. This is one of the formats accepted by gpt-4-vision.

- To the best of my knowledge, ChatOpenAI doesn’t yet natively support sending both text and images. Therefore, we need to make a custom chain that invokes the instance of ChatOpenAI we made with the encoded image, prompt and formatting instructions.
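The image-handling half of that chain is plain Python. This sketch, using the hypothetical helper names `encode_jpeg_bytes` and `build_vision_content`, base64-encodes raw JPEG bytes and wraps them in the same message-content list that `receipt_model_chain` passes to `HumanMessage`: two text parts followed by an `image_url` part carrying a `data:image/jpeg;base64,...` URL. It works from bytes in memory rather than a file path, which is an assumption of the sketch rather than how the chain above is wired.

```python
import base64
from typing import Dict, List


def encode_jpeg_bytes(image_bytes: bytes) -> str:
    """Base64-encode raw JPEG bytes as an ASCII string."""
    return base64.b64encode(image_bytes).decode("utf-8")


def build_vision_content(
    prompt: str, format_instructions: str, image_bytes: bytes
) -> List[Dict]:
    """Assemble the multimodal content list sent in a single HumanMessage."""
    data_url = f"data:image/jpeg;base64,{encode_jpeg_bytes(image_bytes)}"
    return [
        {"type": "text", "text": prompt},
        {"type": "text", "text": format_instructions},
        {"type": "image_url", "image_url": {"url": data_url}},
    ]


# b"\xff\xd8\xff" is the start-of-image marker that begins every JPEG file
content = build_vision_content("Extract the receipt fields.", "Return JSON.", b"\xff\xd8\xff")
print(content[2]["image_url"]["url"])  # data:image/jpeg;base64,/9j/
```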

Note that we’re also making use of OpenAI callbacks to count the tokens and spend associated with each call.

To run this, we can do the following:

```python
import os
from tempfile import NamedTemporaryFile

from langchain_openai import ChatOpenAI

model = ChatOpenAI(
    api_key=os.environ["OPENAI_API_KEY"],  # your OpenAI API key
    temperature=0,
    model="gpt-4-vision-preview",
    max_tokens=1024,
)

extractor = VisionReceiptExtractionChain(model)

# `image` is the PIL image returned by PDFBytesToImage.convert_bytes_to_jpeg()
prepared_data = {"image": image}

# The chain expects a file path, so write the image to a temporary .jpeg file
with NamedTemporaryFile(suffix=".jpeg") as temp_file:
    prepared_data["image"].save(temp_file.name)
    res, cb = extractor.run_and_count_tokens({"image_path": temp_file.name})
```

Given our random document above, the result looks like this:

```
{'vendor_name': 'N/A',
 'vendor_address': 'N/A',
 'datetime': 'N/A',
 'items_purchased': [],
 'subtotal': 'N/A',
 'tax_rate': 'N/A',
 'total_after_tax': 'N/A'}
```

Not too exciting, but at least it’s structured in the correct way! When a valid receipt is provided, these fields are filled out, and my assessment from running a few tests on different receipts is that it’s very accurate.
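Because the prompt asks for every field to be “N/A” when the image is not a receipt, a simple check on the parsed output can flag such documents before they are written to the dataset. A minimal sketch, assuming the output is a dictionary shaped like the one above; `looks_like_receipt` is a hypothetical helper name:

```python
from typing import Any, Dict


def looks_like_receipt(result: Dict[str, Any]) -> bool:
    """Return False when every field is "N/A" and no items were extracted."""
    for key, value in result.items():
        if key == "items_purchased":
            if value:  # at least one extracted item
                return True
        elif value != "N/A":
            return True
    return False


blank_result = {
    "vendor_name": "N/A", "vendor_address": "N/A", "datetime": "N/A",
    "items_purchased": [], "subtotal": "N/A", "tax_rate": "N/A",
    "total_after_tax": "N/A",
}
print(looks_like_receipt(blank_result))  # False
```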

Our callbacks look like this:

```
Tokens Used: 1170
Prompt Tokens: 1104
Completion Tokens: 66
Successful Requests: 1
Total Cost (USD): $0.01302
```

This is essential for tracking costs, which can quickly grow during testing of a model like gpt-4.
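The reported cost can be reproduced by hand. The sketch below assumes the per-token prices OpenAI listed for gpt-4-vision-preview at the time of writing ($0.01 per 1K prompt tokens and $0.03 per 1K completion tokens); check the current pricing page before relying on these numbers. With those rates the callback figures above break down as $0.01104 for the prompt plus $0.00198 for the completion. The function name `estimate_cost` is hypothetical.

```python
# Assumed gpt-4-vision-preview prices in USD per 1,000 tokens (may have changed)
PROMPT_PRICE_PER_1K = 0.01
COMPLETION_PRICE_PER_1K = 0.03


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate the USD cost of one call from its token counts."""
    return (
        prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
        + completion_tokens / 1000 * COMPLETION_PRICE_PER_1K
    )


cost = estimate_cost(prompt_tokens=1104, completion_tokens=66)
print(f"${cost:.5f}")  # $0.01302
```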

5. Information extraction with gpt-3.5-turbo

Let’s assume that we’ve used the steps in section 4 to generate some examples and saved them as a JSON file. Each example consists of some extracted text and the corresponding key information as defined by our ReceiptInformation Pydantic model. Now, we want to inject these examples into a call to gpt-3.5-turbo, in the hope that it can generalize what it learns from them to a new receipt. Few-shot learning is a powerful tool in prompt engineering and, if it works, would be great for this use case: whenever a new receipt format is detected, we can generate one example using gpt-4-vision and append it to the list of examples used to prompt gpt-3.5-turbo. Then, when a similarly formatted receipt comes along, gpt-3.5-turbo can be used to extract its content. In a way this is like template matching, but without the need to manually define the template.

There are many ways to encourage text-based LLMs to extract structured information from a block of text. One of the newest and most powerful that I’ve found is here in the LangChain documentation. The idea is to create a prompt that contains a placeholder for some examples, then inject the examples into the prompt as if they were being returned by some function that the LLM had called. This is done with the model.with_structured_output() functionality, which you can read about here. Note that this is currently in beta and so might change!
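Stripped of LangChain’s classes, a single injected example is just three chat messages: the user’s text, an assistant turn that “calls” a function whose arguments are the JSON answer, and a tool turn acknowledging the call. The sketch below builds that sequence as plain dictionaries in the OpenAI Chat Completions message format. It illustrates the pattern under that assumption; it is not the exact payload LangChain sends, and `example_to_openai_messages` is a hypothetical name.

```python
import json
import uuid
from typing import Any, Dict, List


def example_to_openai_messages(
    text: str, answer: Dict[str, Any], tool_name: str = "ReceiptInformation"
) -> List[Dict[str, Any]]:
    """Turn one (input text, extracted answer) pair into few-shot chat messages."""
    call_id = str(uuid.uuid4())
    return [
        # 1) The text we want information extracted from
        {"role": "user", "content": text},
        # 2) The "ideal" assistant reply: a function call carrying the answer
        {
            "role": "assistant",
            "content": None,
            "tool_calls": [
                {
                    "id": call_id,
                    "type": "function",
                    "function": {"name": tool_name, "arguments": json.dumps(answer)},
                }
            ],
        },
        # 3) A tool message confirming the call, linked by its id
        {
            "role": "tool",
            "tool_call_id": call_id,
            "content": "You have correctly called this tool.",
        },
    ]


messages = example_to_openai_messages("IKEA TOTAL 23.47", {"vendor_name": "IKEA"})
print([m["role"] for m in messages])  # ['user', 'assistant', 'tool']
```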

Let’s look at the code to see how this is achieved. We’ll first write the prompt.

```python
from dataclasses import dataclass, field

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder


@dataclass
class TextReceiptExtractionPrompt:
    system: str = """
You are an expert at information extraction from images of receipts.

Given this image of a receipt, extract the following information:
- The name and address of the vendor
- The names and costs of each of the items that were purchased
- The date and time that the receipt was issued. This must be formatted like 'MM/DD/YY HH:MM'
- The subtotal (i.e. the total cost before tax)
- The tax rate
- The total cost after tax

Do not guess. If some information is missing just return "N/A" in the relevant field.
If you determine that the image is not of a receipt, just set all the fields in the formatting instructions to "N/A".
You must obey the output format under all circumstances. Please follow the formatting instructions exactly.
Do not return any additional comments or explanation.
"""
    # Built in __post_init__ from `system`; a ChatPromptTemplate class-level
    # default may be rejected by dataclasses on newer Python versions
    prompt: ChatPromptTemplate = field(init=False)

    def __post_init__(self):
        self.prompt = ChatPromptTemplate.from_messages(
            [
                ("system", self.system),
                MessagesPlaceholder("examples"),
                ("human", "{input}"),
            ]
        )
```

The prompt text is exactly the same as it was in section 4, only we now have a MessagesPlaceholder to hold the examples that we’re going to insert.

```python
import uuid
from typing import List, TypedDict

from langchain.callbacks import get_openai_callback
from langchain_core.messages import AIMessage, BaseMessage, HumanMessage, ToolMessage
from langchain_core.pydantic_v1 import BaseModel


class Example(TypedDict):
    """A representation of an example consisting of text input and expected tool calls.

    For extraction, the tool calls are represented as instances of pydantic model.
    """

    input: str
    tool_calls: List[BaseModel]


class TextReceiptExtractionChain:
    def __init__(self, llm, examples: List):
        self.llm = llm
        self.raw_examples = examples
        self.prompt = TextReceiptExtractionPrompt()
        self.chain, self.examples = self.set_up_chain()

    @staticmethod
    def tool_example_to_messages(example: Example) -> List[BaseMessage]:
        """Convert an example into a list of messages that can be fed into an LLM.

        This code is an adapter that converts our example to a list of messages
        that can be fed into a chat model.

        The list of messages per example corresponds to:

        1) HumanMessage: contains the content from which content should be extracted.
        2) AIMessage: contains the extracted information from the model
        3) ToolMessage: contains confirmation to the model that the model requested a tool correctly.

        The ToolMessage is required because some of the chat models are hyper-optimized for agents
        rather than for an extraction use case.
        """
        messages: List[BaseMessage] = [HumanMessage(content=example["input"])]
        openai_tool_calls = []
        for tool_call in example["tool_calls"]:
            openai_tool_calls.append(
                {
                    "id": str(uuid.uuid4()),
                    "type": "function",
                    "function": {
                        # The name of the function right now corresponds
                        # to the name of the pydantic model
                        # This is implicit in the API right now,
                        # and will be improved over time.
                        "name": tool_call.__class__.__name__,
                        "arguments": tool_call.json(),
                    },
                }
            )
        messages.append(
            AIMessage(content="", additional_kwargs={"tool_calls": openai_tool_calls})
        )
        tool_outputs = example.get("tool_outputs") or [
            "You have correctly called this tool."
        ] * len(openai_tool_calls)
        for output, tool_call in zip(tool_outputs, openai_tool_calls):
            messages.append(ToolMessage(content=output, tool_call_id=tool_call["id"]))
        return messages

    def set_up_examples(self):
        # Turn each raw example into an (input text, ReceiptInformation) pair
        examples = [
            (
                example["input"],
                ReceiptInformation(
                    vendor_name=example["output"]["vendor_name"],
                    vendor_address=example["output"]["vendor_address"],
                    datetime=example["output"]["datetime"],
                    items_purchased=[
                        ReceiptItem(item_name=item["item_name"], item_cost=item["item_cost"])
                        for item in example["output"]["items_purchased"]
                    ],
                    subtotal=example["output"]["subtotal"],
                    tax_rate=example["output"]["tax_rate"],
                    total_after_tax=example["output"]["total_after_tax"],
                ),
            )
            for example in self.raw_examples
        ]
        messages = []
        for text, tool_call in examples:
            messages.extend(
                self.tool_example_to_messages({"input": text, "tool_calls": [tool_call]})
            )
        return messages

    def set_up_chain(self):
        extraction_model = self.llm
        prompt = self.prompt.prompt
        examples = self.set_up_examples()
        # Bind ReceiptInformation as a function so the model returns it directly
        runnable = prompt | extraction_model.with_structured_output(
            schema=ReceiptInformation,
            method="function_calling",
            include_raw=False,
        )
        return runnable, examples

    def run_and_count_tokens(self, input_dict: dict):
        # Inject the examples without mutating the caller's dictionary
        input_dict = {**input_dict, "examples": self.examples}
        with get_openai_callback() as cb:
            result = self.chain.invoke(input_dict)
        return result, cb
```

TextReceiptExtractionChain takes in a list of examples, each of which has input and output keys (note how these are used in the set_up_examples method). For each example, we make a ReceiptInformation object. Then we format the result into a list of messages that can be passed into the prompt. All the work in tool_example_to_messages is there just to convert between different LangChain formats.

Running this looks very similar to what we did with the vision model:

```python
import json
import os

from langchain_openai import ChatOpenAI

# Load the examples
EXAMPLES_PATH = "receiptchat/datasets/example_extractions.json"
with open(EXAMPLES_PATH) as f:
    loaded_examples = json.load(f)

loaded_examples = [
    {"input": x["file_details"]["extracted_text"], "output": x}
    for x in loaded_examples
]

# Set up the LLM caller
llm = ChatOpenAI(
    api_key=os.environ["OPENAI_API_KEY"],
    temperature=0,
    model="gpt-3.5-turbo",
)

extractor = TextReceiptExtractionChain(llm, loaded_examples)

# Convert a PDF file from Google Drive into text; `downloaded_data` holds the
# bytes returned by GoogleDriveLoader.download_file()
text = PDFBytesToImage.convert_bytes_to_text(downloaded_data)

extracted_information, cb = extractor.run_and_count_tokens({"input": text})
```

Even with 10 examples, this call costs less than half as much as the gpt-4-vision call and returns a lot faster. As more examples are added, you may need to switch to gpt-3.5-turbo-16k to avoid exceeding the context window.
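One way to postpone that switch is to cap how many examples are injected. The sketch below greedily keeps examples, in list order, until an approximate token budget is spent. It assumes the rough rule of thumb of about four characters per token for English text; for exact counts you would swap in a real tokenizer such as OpenAI’s tiktoken through the `count_tokens` argument. The function names and the budget value are hypothetical.

```python
from typing import Callable, Dict, List


def approx_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (heuristic)."""
    return max(1, len(text) // 4)


def select_examples(
    examples: List[Dict],
    budget: int,
    count_tokens: Callable[[str], int] = approx_tokens,
) -> List[Dict]:
    """Keep examples in order until adding the next one would exceed `budget`."""
    selected, used = [], 0
    for example in examples:
        cost = count_tokens(example["input"]) + count_tokens(str(example["output"]))
        if used + cost > budget:
            break
        selected.append(example)
        used += cost
    return selected


# Each example here costs 100 + 5 = 105 estimated tokens
examples = [{"input": "a" * 400, "output": {"vendor_name": "X"}}] * 3
print(len(select_examples(examples, budget=250)))  # 2
```

Because selection is order-preserving, whichever examples sit at the front of the list are the ones that survive when the budget is tight.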

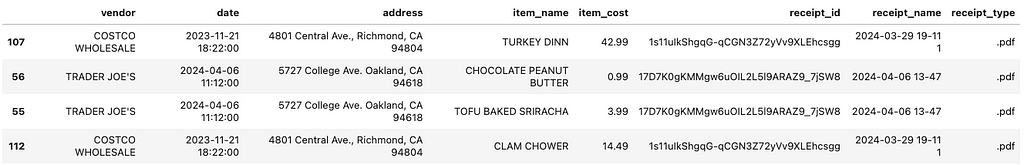

The output dataset

Having collected some receipts, you can run the extraction methods described in sections 4 and 5 and collect the result in a dataframe. This then gets stored and can be appended to whenever a new receipt appears in the Google Drive.
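The “append whenever a new receipt appears” step can be sketched as follows. This version uses the standard-library `csv` module rather than a dataframe, stores `items_purchased` as a JSON string in a single column, and assumes each result row carries the Google Drive file `id` so that already-processed files can be skipped. The column layout and the helper names `processed_ids` and `append_results` are hypothetical, not taken from the repository.

```python
import csv
import json
import os
from typing import Dict, List, Set

# Hypothetical column layout: the Drive file id plus the ReceiptInformation fields
COLUMNS = [
    "id", "vendor_name", "vendor_address", "datetime",
    "items_purchased", "subtotal", "tax_rate", "total_after_tax",
]


def processed_ids(csv_path: str) -> Set[str]:
    """Return the Drive file ids already stored in the CSV (empty if none)."""
    if not os.path.exists(csv_path):
        return set()
    with open(csv_path, newline="") as f:
        return {row["id"] for row in csv.DictReader(f)}


def append_results(csv_path: str, results: List[Dict]) -> int:
    """Append rows for file ids not yet in the CSV; return how many were written."""
    seen = processed_ids(csv_path)
    new_rows = [r for r in results if r["id"] not in seen]
    needs_header = not os.path.exists(csv_path) or os.path.getsize(csv_path) == 0
    with open(csv_path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS, extrasaction="ignore")
        if needs_header:
            writer.writeheader()
        for row in new_rows:
            # Store the nested item list as a JSON string in a single cell
            writer.writerow({**row, "items_purchased": json.dumps(row["items_purchased"])})
    return len(new_rows)
```

In a full pipeline you would call `processed_ids` before downloading anything, so that only new Drive files are sent to the LLMs.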

Once my database of extracted receipt information grows a bit larger, I plan to explore LLM-based question answering on top of it, so look out for that article soon! I’m also curious about exploring a more formal evaluation method for this project and comparing the results to what can be obtained via AWS Textract or similar products.

Thanks for making it to the end! Please feel free to explore the full codebase at https://github.com/rmartinshort/receiptchat. Any suggestions for improvement or extensions to the functionality would be much appreciated!

How to Build a Generative AI Tool for Information Extraction from Receipts was originally published in Towards Data Science on Medium.
