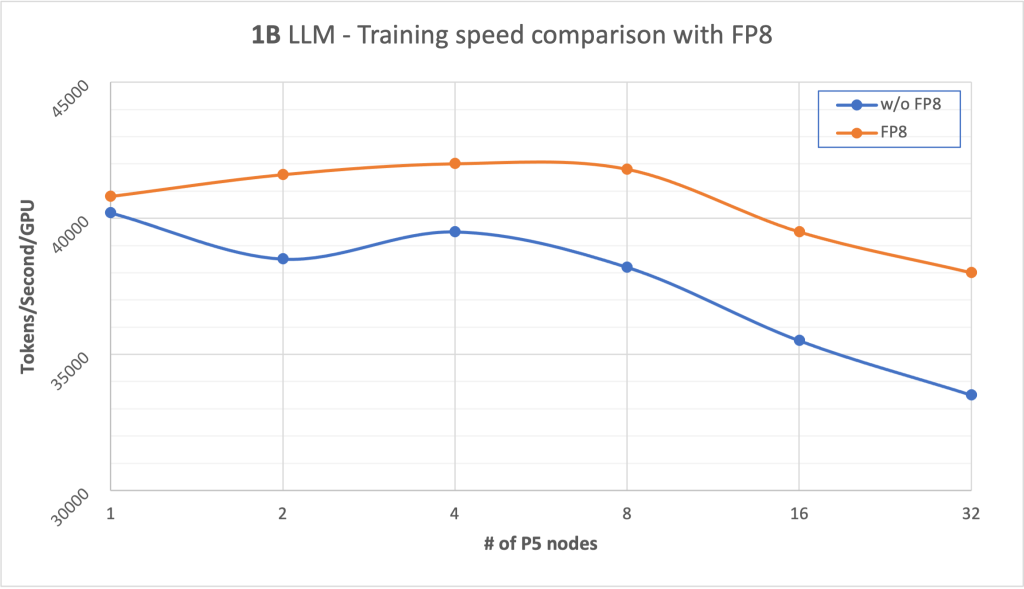

Large language model (LLM) training has advanced rapidly in recent years, with organizations pushing the limits of model size, performance, and efficiency. In this post, we explore how FP8 optimization can significantly speed up large model training on Amazon SageMaker P5 instances.

Originally appeared here:

How FP8 boosts LLM training by 18% on Amazon SageMaker P5 instances
