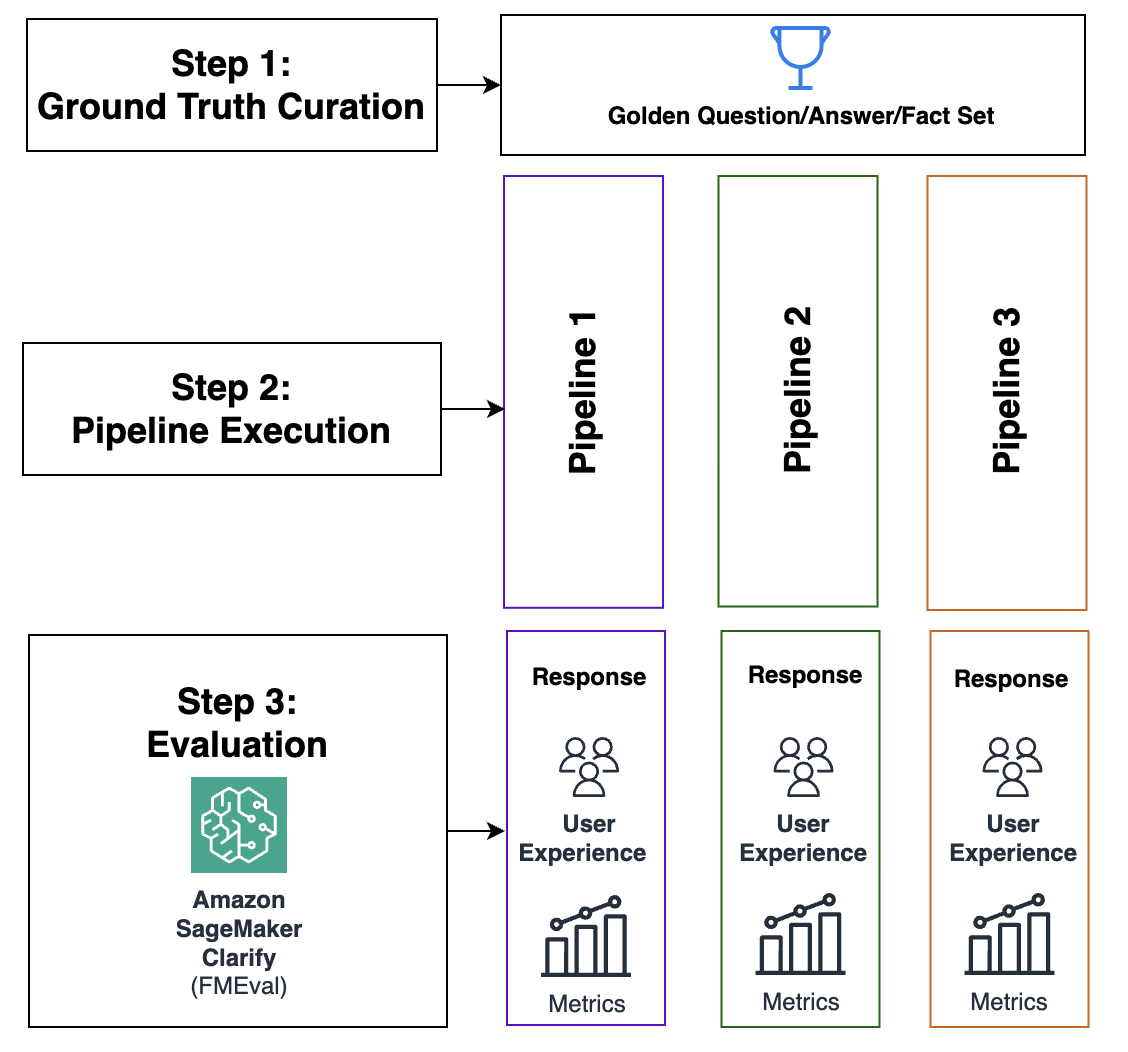

In this post, we discuss best practices for using the Foundation Model Evaluations Library (FMEval) to curate ground truth and interpret metrics when evaluating question answering applications for factual knowledge and answer quality.

Originally appeared as: *Ground truth curation and metric interpretation best practices for evaluating generative AI question answering using FMEval*