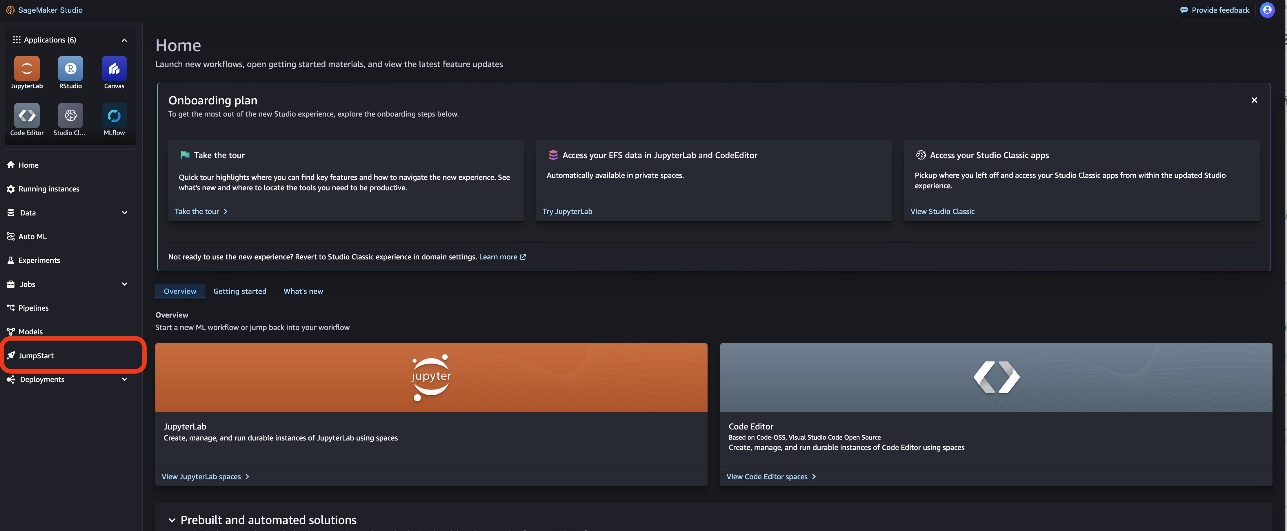

In this post, we show how to fine-tune a multimodal text and vision model, such as Meta Llama 3.2, to perform better on visual question answering tasks. The Meta Llama 3.2 Vision Instruct models have demonstrated strong performance on the challenging DocVQA benchmark for visual question answering. Using Amazon SageMaker JumpStart, we walk through the process of adapting these generative AI models to understand and respond to natural language questions about images.

Originally appeared here: Fine-tune multimodal models for vision and text use cases on Amazon SageMaker JumpStart