Nikola Milosevic (Data Warrior)

Why the hallucination detection task is wrongly named

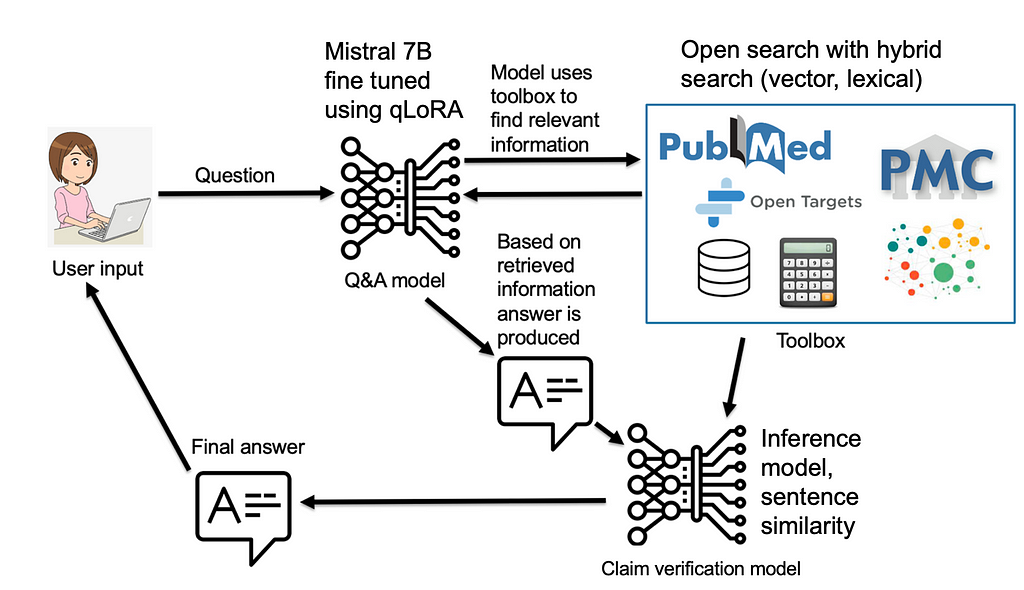

During the past year, I have been working on two projects dealing with hallucination detection in large language models and with verifying the claims they produce. As with any research, especially research on claim verification, this led to quite a bit of literature review, in which I learned that many authors call the task of verifying whether a claim is based on evidence from a reputable source (e.g. a previous scientific publication, an encyclopedia article, etc.) fact-checking (examples of such publications come from Google DeepMind, University of Pennsylvania, University of Washington, Allen Institute for AI, OpenAI and others). Even datasets, such as SciFact, have factuality in the name.

I assume that calling a metric for large language models factuality goes back to the LaMDA paper by Google, which was published in February 2022 and is, to the best of my knowledge, the first mention of such a metric for LLMs. Before that, one could find occasional instances of fact-checking, for example in the SciFact paper (from 2020), but LaMDA was the first mention related to LLMs. In the LaMDA paper, this metric was called factual grounding, which is a far better name than the later simplified versions, such as “factuality” or “faithfulness”. In this article, I would like to discuss why the name of the metric should be claim verification, and why I think names like faithfulness, factuality, and fact-checking are wrong from both practical and philosophical standpoints.

Let’s examine the basis of the task. Given a claim produced by a large language model, we check whether it is grounded in evidence from some source. This source can be an article from the literature, but it can also be a less formal source, such as an encyclopedia, the internet, or any other kind of retrieved information. Quite often, this task comes down to natural language entailment, or natural language inference, where we determine whether the claim can be derived from the evidence text. However, there are other approaches that use textual similarity, or other large language models with various kinds of prompts. The task is always to determine whether the generated claim is grounded in the evidence or in the knowledge we have of the world today. It can be viewed as similar to writing the literature review part of an article or thesis and verifying whether the referenced articles support the author’s claims. Of course, we are talking here about automating this task.
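To make the shape of the task concrete, here is a minimal sketch of the textual-similarity approach mentioned above. It is not taken from any of the cited papers: the function names, the token-overlap score, and the 0.7 threshold are all assumptions chosen for illustration, and a real system would more likely use a natural language inference model.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(claim: str, evidence: str) -> float:
    """Fraction of the claim's tokens that also occur in the evidence."""
    claim_tokens = tokenize(claim)
    if not claim_tokens:
        return 0.0
    return len(claim_tokens & tokenize(evidence)) / len(claim_tokens)


def verify_claim(claim: str, evidence: str, threshold: float = 0.7) -> str:
    """Label a claim against one evidence passage.

    The threshold is an arbitrary, hypothetical cut-off, not a tuned value.
    """
    if support_score(claim, evidence) >= threshold:
        return "supported"
    return "unsupported"


evidence = (
    "Copernicus proposed the heliocentric model, in which the Earth "
    "and the other planets orbit the sun."
)
print(verify_claim("The Earth and the other planets orbit the sun.", evidence))
print(verify_claim("The Great Wall of China can be seen from space.", evidence))
```

Note what the output says: the claim is supported or unsupported by this particular piece of evidence, not that it is true or false. Token overlap is also a crude signal that ignores negation and paraphrase, which is one reason entailment models are the more common choice.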

Now, what is the problem with naming this task fact-checking or measuring the factuality of the model?

From a philosophical standpoint, it is hard for us to know what a fact is. With the best intentions, in their pursuit of truth, scientists often write things in their publications that may not be factual and that still easily pass peer review. I want to emphasize that people do their best, especially in scientific publishing, to be as factual as possible. However, that often fails. Publications may contain distorted, exaggerated, or misinterpreted information due to various factors, such as cultural biases, political agendas, or a lack of reliable evidence. Often, science is just slowly and naturally moving toward facts by producing new evidence and information.

There have been quite a few events in history in which the consensus in a field was firmly established, only to be shaken to its foundations. Think, for example, of Copernicus. Before Copernicus, most people believed that the Earth was the center of the universe and that the sun, the moon, and the planets revolved around it. This was the geocentric model, which was supported by the teachings of the Catholic Church and the ancient Greek philosopher Aristotle. However, Copernicus, a Polish astronomer and mathematician, proposed a radical alternative: the heliocentric model, which stated that the Earth and the other planets orbited the sun. He based his theory on mathematical calculations and observations of the celestial motions. His work was published in his book On the Revolutions of the Heavenly Spheres in 1543, shortly before his death. Although his theory faced strong opposition and criticism from the religious authorities and some of his contemporaries, it gradually gained acceptance and influence among other scientists, such as Galileo, Kepler, and Newton. The heliocentric model paved the way for the development of modern astronomy and physics and changed the perception of the Earth’s place in the cosmos.

Something similar happened with Darwin. Before Darwin, most people believed that living species were created by God and had remained unchanged since their origin. This was the creationist view, which was based on the biblical account of Genesis and the natural theology of the British naturalist John Ray. However, Darwin, an English naturalist and geologist, proposed a radical alternative: the theory of evolution by natural selection, which stated that living species descended from common ancestors and changed over time due to environmental pressures and the survival of the fittest. There are several more examples, such as Einstein’s relativity, gravity, Kuhn’s theory of scientific revolutions, and many others.

These events in history are called paradigm shifts: the base paradigm of a field was significantly shifted. Paradigm shifts may be fairly rare, but there are also many common beliefs and myths that a lot of people hold, such as that the Great Wall of China can be seen from space, that Napoleon was short, or that Columbus discovered America. These can be found even in scientific articles or books written on the topics, despite being untrue. People keep citing and referencing works containing this information, so it continues to propagate. Therefore, checking whether the evidence in the referenced literature supports the claim is not a good enough proxy for factuality.

Providing references to the evidence we have for a claim is our best method for supporting it. Checking supporting evidence often also requires examining whether the reference is reputable, whether it is peer-reviewed and published in a reputable journal, when it was published, and so on. Despite all these checks, the information may still fall victim to a paradigm shift, or to a new hypothesis and the evidence for it, and therefore be incomplete or obsolete. But it is our best tool, and we should keep using it. The examples above illustrate that verification of sources is not always fact-checking, but rather a way of approaching and evaluating claims based on the best available evidence and the most reasonable arguments at a given time and place. However, verification of sources does not imply that all claims are equally valid or that truth is relative or subjective. Verification of sources is a way of seeking and approximating the truth, not denying or relativizing it. Verification of sources acknowledges that truth is complex, multifaceted, and provisional, but also that truth is real, meaningful, and attainable.

Therefore, instead of using the term fact-checking, which suggests a binary and definitive judgment of true or false, we should use the term claim verification, which reflects a more nuanced and tentative assessment of supported or unsupported, credible or dubious, consistent or contradictory. Claim verification is not a final verdict, but a continuous inquiry that invites us to question, challenge, and revise our beliefs and assumptions in light of new evidence, new sources, and new perspectives.

The right term for the task, in my opinion, is claim verification, because that is what we are doing: verifying whether the claim is grounded in the evidence from the referenced article, document, or source. Some published papers already name the task claim verification (e.g. check this paper). So, I would like to call on authors working in this area to avoid naming their metrics factuality or fact-checking, and to call them verifiability, claim verification, etc. instead. I assume that fact-checking looks better from a marketing perspective, but it is a bad name that does not give proper treatment and credit to the pursuit of facts and truth in science, which is a much more complex task.

There is a big risk in that name from a practical point of view as well. In a situation where we “absolutely trust” some source to be “absolutely factual”, we lose the ability to critically examine its claims further. No one would have the courage or ability to do so. The core of science and critical thinking is that we examine everything in the pursuit of truth. On top of that, if AI in its current form measured factuality and checked facts only against current knowledge and consensus, we would run the risk of halting progress and becoming especially averse to future paradigm shifts.

However, this risk is not confined to the sciences. Arguing over what counts as fact, and excluding critical thinking even from whole educational systems, is a common characteristic of authoritarian regimes. If we assess less critically what is served to us as fact, we may fall victim to future authoritarians who would exploit that and integrate their biases into what is considered “fact”. Therefore, let’s be careful about what we call a fact, as in most cases it is a claim. A claim may or may not be true based on our current understanding of the world and the universe. Whether a claim is correct may also change as new evidence and new information are discovered. One of the big challenges for AI systems, and especially for knowledge representation, in my opinion, will be how to represent knowledge that reflects our current understanding of the universe and stays up to date over time.

Unless otherwise noted, all images are by the author.

Fact-checking vs claim verification was originally published in Towards Data Science on Medium.
