Setting up a Voice Agent using Twilio and the OpenAI Realtime API

Introduction

At the recent OpenAI Dev Day on October 1st, 2024, OpenAI’s biggest release was the reveal of their Realtime API:

“Today, we’re introducing a public beta of the Realtime API, enabling all paid developers to build low-latency, multimodal experiences in their apps.

Similar to ChatGPT’s Advanced Voice Mode, the Realtime API supports natural speech-to-speech conversations using the six preset voices already supported in the API.”

(source: OpenAI website)

As the announcement notes, its key benefits include low latency and speech to speech capability. Let’s see how that plays out in practice when building voice AI agents.

It also handles interruptions: the realtime stream stops sending audio when it detects that you are trying to speak over it, which is a very useful feature when building voice agents.
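As a rough illustration of what interruption handling can involve on the phone side, the sketch below reacts to the Realtime API's `input_audio_buffer.speech_started` server event (sent when server-side voice activity detection is enabled) by building a Twilio Media Streams `clear` message, which asks Twilio to drop audio it has buffered but not yet played. The helper name `interruptionMessages` and the wiring are assumptions for illustration only; they are not taken from the Twilio sample reviewed later.

```javascript
// Hypothetical helper: given one parsed Realtime API server event, return the
// messages we would want to send to the Twilio Media Stream in response.
function interruptionMessages(serverEvent, streamSid) {
  // The caller started talking: ask Twilio to discard any queued agent audio.
  if (serverEvent.type === 'input_audio_buffer.speech_started' && streamSid) {
    return [{ event: 'clear', streamSid }];
  }
  // Any other event needs no interruption handling here.
  return [];
}

const messages = interruptionMessages(
  { type: 'input_audio_buffer.speech_started' },
  'MZ123' // placeholder stream id
);
console.log(JSON.stringify(messages));
// prints [{"event":"clear","streamSid":"MZ123"}]
```

In a relay server, each returned message would be serialized with `JSON.stringify` and sent over the Twilio websocket connection.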

Contents

In this article we will:

- Compare what a phone voice agent flow might have looked like before the Realtime API, and what it looks like now,

- Review a GitHub project from Twilio that sets up a voice agent using the new Realtime API, so we can see what the implementation looks like in practice, and get an idea of how the websockets and connections are set up for such an application,

- Quickly review the React demo project from OpenAI that uses the Realtime API,

- Compare the pricing of these various options.

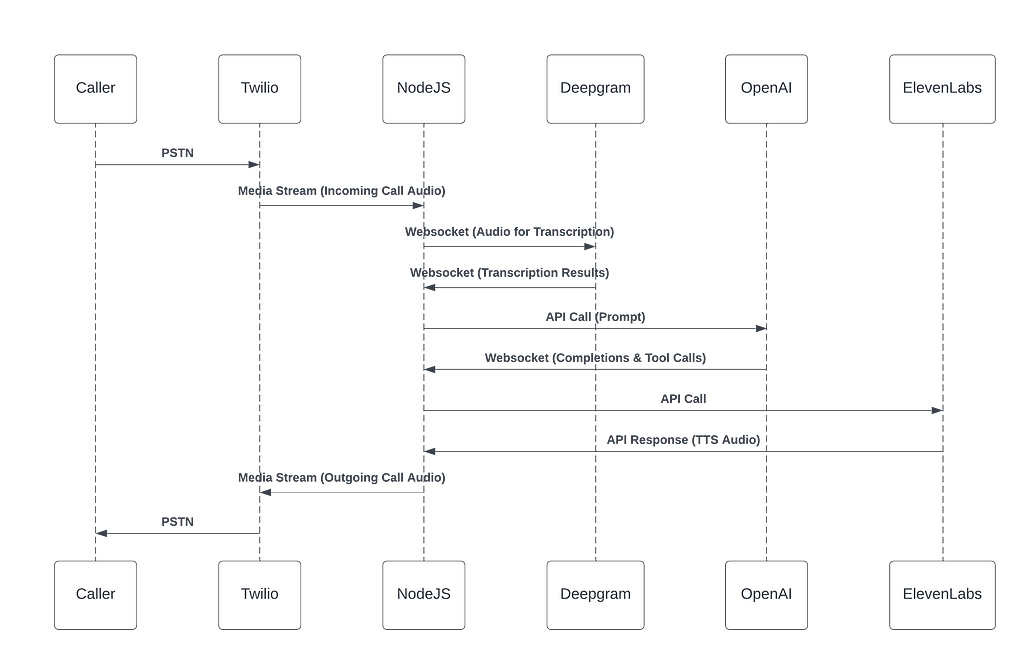

Voice Agent Flows

Before the OpenAI Realtime API

To get a phone voice agent service working, there are some key services we require:

- Speech to Text (e.g. Deepgram),

- LLM/Agent (e.g. OpenAI),

- Text to Speech (e.g. ElevenLabs).

These services are illustrated in the diagram below.

That of course means integrating with a number of services, and making separate API requests for each part.

The new OpenAI Realtime API allows us to bundle all of those together into a single request, hence the term, speech to speech.

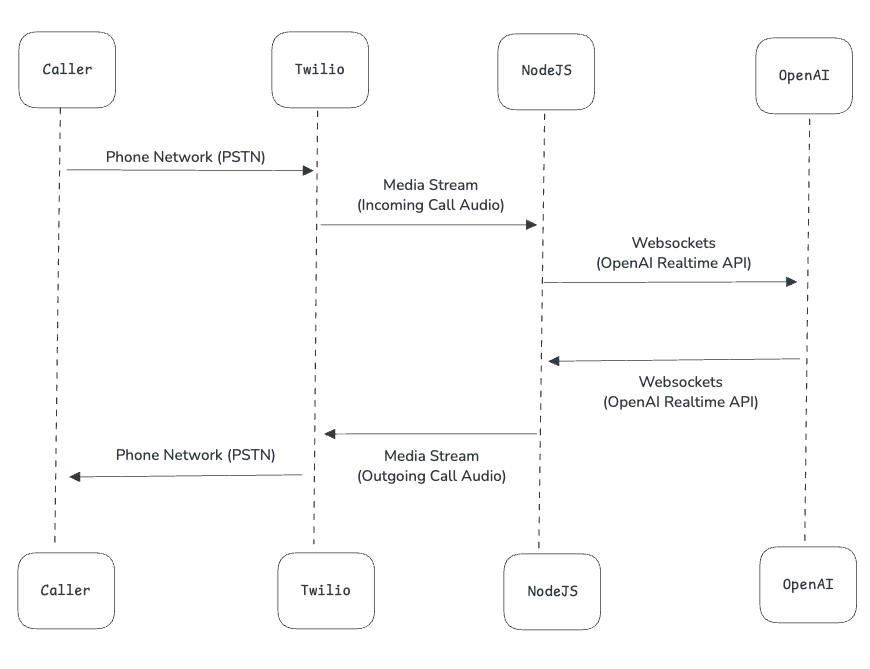
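To make the cost of the older flow concrete, here is a minimal sketch of one conversational turn in a cascaded pipeline. The three service functions (`speechToText`, `generateReply`, `textToSpeech`) are hypothetical stand-ins that return canned values; in a real system each would be a network call to a different provider, so their latencies add up on every turn.

```javascript
// Hypothetical stand-ins for the three services. Real versions would each
// make a network request to a different provider.
async function speechToText(audioChunk) {
  return `transcript of ${audioChunk.length} bytes`;
}

async function generateReply(transcript) {
  return `reply to: ${transcript}`;
}

async function textToSpeech(text) {
  return Buffer.from(text, 'utf8'); // pretend this is synthesized audio
}

// One conversational turn: three sequential hops before the caller hears anything.
async function handleTurnCascaded(callerAudio) {
  const transcript = await speechToText(callerAudio);
  const replyText = await generateReply(transcript);
  return textToSpeech(replyText);
}

handleTurnCascaded(Buffer.alloc(160)).then((audio) => {
  console.log(audio.toString('utf8'));
  // prints "reply to: transcript of 160 bytes"
});
```

With a speech to speech API, these three awaits collapse into a single streaming connection.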

After the OpenAI Realtime API

This is what the flow diagram would look like for a similar new flow using the new OpenAI Realtime API.

This is obviously a much simpler flow. We simply pass the speech/audio from the phone call directly to the OpenAI Realtime API, with no need for an intermediary speech to text service.

On the response side, the Realtime API again provides an audio stream, which we can send right back to Twilio (i.e. to the caller on the phone). So again, there is no need for an extra text to speech service, as it is all taken care of by the OpenAI Realtime API.

Source code review for a Twilio and Realtime API voice agent

Let’s look at some code samples for this. Twilio has provided a great example GitHub repository for setting up this Twilio and OpenAI Realtime API flow. You can find it here:

GitHub – twilio-samples/speech-assistant-openai-realtime-api-node

Here are some excerpts from key parts of the code related to setting up:

- the websockets connection from Twilio to our application, so that we can receive audio from the caller, and send audio back,

- and the websockets connection to the OpenAI Realtime API from our application.

I have added some comments in the source code below to try and explain what is going on, especially regarding the websocket connection between Twilio and our application, and the websocket connection from our application to OpenAI. The triple dots (…) refer to sections of the source code that have been removed for brevity, since they are not critical to understanding the core features of how the flow works.

// On receiving a phone call, Twilio forwards the incoming call request to
// a webhook we specify, which is this endpoint here. This allows us to
// create programmatic voice applications, for example using an AI agent
// to handle the phone call
//
// So, here we are providing an initial response to the call, and creating
// a websocket (called a MediaStream in Twilio, more on that below) to receive
// any future audio that comes into the call
fastify.all('/incoming', async (request, reply) => {
  const twimlResponse = `<?xml version="1.0" encoding="UTF-8"?>
    <Response>
      <Say>Please wait while we connect your call to the A. I. voice assistant, powered by Twilio and the Open-A.I. Realtime API</Say>
      <Pause length="1"/>
      <Say>O.K. you can start talking!</Say>
      <Connect>
        <Stream url="wss://${request.headers.host}/media-stream" />
      </Connect>
    </Response>`;

  reply.type('text/xml').send(twimlResponse);
});

fastify.register(async (fastify) => {
  // Here we are connecting our application to the websocket media stream we
  // set up above. That means all audio that comes through the phone will come
  // to this websocket connection we have set up here
  fastify.get('/media-stream', { websocket: true }, (connection, req) => {
    console.log('Client connected');

    // Now, we are creating a websocket connection to the OpenAI Realtime API
    // This is the second leg of the flow diagram above
    const openAiWs = new WebSocket('wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2024-10-01', {
      headers: {
        Authorization: `Bearer ${OPENAI_API_KEY}`,
        "OpenAI-Beta": "realtime=v1"
      }
    });

    ...

    // Here we are setting up the listener on the OpenAI Realtime API
    // websockets connection. We are specifying how we would like it to
    // handle any incoming audio streams that have come back from the
    // Realtime API.
    openAiWs.on('message', (data) => {
      try {
        const response = JSON.parse(data);

        ...

        // This response type indicates an LLM response from the Realtime API
        // So we want to forward this response back to the Twilio Media Stream
        // websockets connection, which the caller will hear as a response
        // on the phone
        if (response.type === 'response.audio.delta' && response.delta) {
          const audioDelta = {
            event: 'media',
            streamSid: streamSid,
            media: { payload: Buffer.from(response.delta, 'base64').toString('base64') }
          };

          // This is the part where we actually send it back to the Twilio
          // MediaStream websockets connection. Notice how we are sending the
          // response back directly. No need for text to speech conversion from
          // the OpenAI response. The OpenAI Realtime API already provides the
          // response as an audio stream (i.e. speech to speech)
          connection.send(JSON.stringify(audioDelta));
        }
      } catch (error) {
        console.error('Error processing OpenAI message:', error, 'Raw message:', data);
      }
    });

    // This part specifies how we handle incoming messages to the Twilio
    // MediaStream websockets connection, i.e. how we handle audio that comes
    // into the phone from the caller
    connection.on('message', (message) => {
      try {
        const data = JSON.parse(message);

        switch (data.event) {
          // This case ('media') is the state for when there is audio data
          // available on the Twilio MediaStream from the caller
          case 'media':
            // we first check our OpenAI Realtime API websockets
            // connection is open
            if (openAiWs.readyState === WebSocket.OPEN) {
              const audioAppend = {
                type: 'input_audio_buffer.append',
                audio: data.media.payload
              };

              // and then forward the audio stream data to the
              // Realtime API. Again, notice how we are sending the
              // audio stream directly, with no speech to text conversion
              // as would have been required previously
              openAiWs.send(JSON.stringify(audioAppend));
            }
            break;

          ...
        }
      } catch (error) {
        console.error('Error parsing message:', error, 'Message:', message);
      }
    });

...

fastify.listen({ port: PORT }, (err) => {
  if (err) {
    console.error(err);
    process.exit(1);
  }
  console.log(`Server is listening on port ${PORT}`);
});

So, that is how the new OpenAI Realtime API flow plays out in practice.
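One detail the excerpt leaves out is session configuration. Relaying audio untouched in both directions only works if both sides agree on the audio format: Twilio Media Streams carry base64-encoded 8 kHz mu-law audio, and the Realtime API can be asked to use the matching `g711_ulaw` format via a `session.update` event. The sketch below shows what such a message might look like. The helper name `buildSessionUpdate`, the voice, and the instructions are illustrative assumptions, not a copy of the sample's actual configuration.

```javascript
// Hypothetical helper that builds a session.update event for a phone relay.
// The default voice and instructions are placeholder values.
function buildSessionUpdate({
  voice = 'alloy',
  instructions = 'You are a helpful phone assistant.',
} = {}) {
  return {
    type: 'session.update',
    session: {
      // Let the server detect when the caller starts and stops speaking.
      turn_detection: { type: 'server_vad' },
      // Match the mu-law audio used on the phone leg, so no transcoding is needed.
      input_audio_format: 'g711_ulaw',
      output_audio_format: 'g711_ulaw',
      voice,
      instructions,
      modalities: ['text', 'audio'],
    },
  };
}

console.log(JSON.stringify(buildSessionUpdate(), null, 2));

// In the relay, this would typically be sent once the OpenAI socket opens, e.g.:
// openAiWs.on('open', () => openAiWs.send(JSON.stringify(buildSessionUpdate())));
```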

Regarding the Twilio MediaStreams, you can read more about them here. They are a way to set up a websockets connection between a call to a Twilio phone number and your application. This allows streaming of audio from the call to and from your application, allowing you to build programmable voice applications over the phone.
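A Media Stream connection delivers a small set of JSON messages, distinguished by their `event` field: `start` (which carries the `streamSid` needed when sending audio back), `media` (a base64 audio payload), and `stop`, among others. As a rough sketch of how a relay might track that state, the function below handles each message as a pure state transition. The name `handleTwilioMessage` and the shape of its return value are assumptions made for this example.

```javascript
// Hypothetical reducer: takes the current relay state and one raw Twilio
// message, and returns the next state plus an optional message for OpenAI.
function handleTwilioMessage(state, raw) {
  const msg = JSON.parse(raw);

  switch (msg.event) {
    case 'start':
      // Remember the stream id; it is required on every message sent back.
      return { state: { ...state, streamSid: msg.start.streamSid }, toOpenAi: null };
    case 'media':
      // Forward caller audio as-is to the Realtime API input buffer.
      return {
        state,
        toOpenAi: { type: 'input_audio_buffer.append', audio: msg.media.payload },
      };
    case 'stop':
      return { state: { ...state, streamSid: null }, toOpenAi: null };
    default:
      // Other events (e.g. 'connected', 'mark') are ignored in this sketch.
      return { state, toOpenAi: null };
  }
}

let relayState = { streamSid: null };
const incoming = [
  JSON.stringify({ event: 'start', start: { streamSid: 'MZ123' } }),
  JSON.stringify({ event: 'media', media: { payload: 'AAAA' } }),
];

for (const raw of incoming) {
  const result = handleTwilioMessage(relayState, raw);
  relayState = result.state;
  if (result.toOpenAi) console.log(JSON.stringify(result.toOpenAi));
}
console.log(relayState.streamSid); // prints MZ123
```

Keeping the handler free of socket calls makes it straightforward to test without a live phone call.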

To get the code above running, you will also need to set up a Twilio number and ngrok. You can check out my other article over here for help setting those up.

AI Voice Agent with Twilio, Express and OpenAI

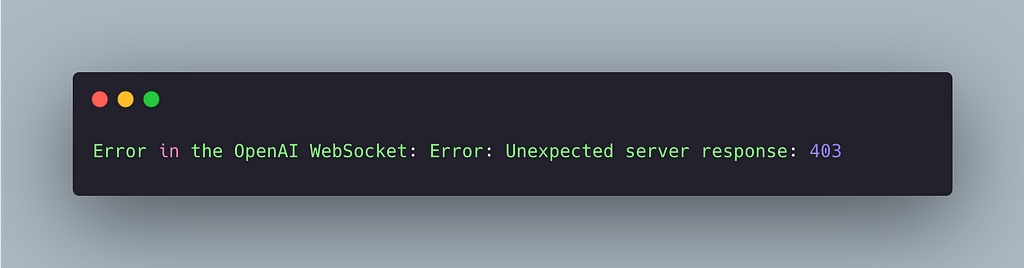

Since access to the OpenAI Realtime API has only just been rolled out, not everyone may have access yet. I initially was not able to access it. Running the application worked, but as soon as it tried to connect to the OpenAI Realtime API I got a 403 error. So if you see the same issue, it could be because you do not have access yet either.
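To make a failure like this easier to diagnose, the relay can log the HTTP status returned during the websocket handshake. The sketch below maps a few common status codes to hints. The helper name `describeHandshakeFailure` and the wording of the hints are assumptions based on standard HTTP semantics, and the wiring shown in the trailing comment assumes the Node `ws` package and its `unexpected-response` event.

```javascript
// Hypothetical helper: turn a handshake HTTP status into a human-readable hint.
function describeHandshakeFailure(statusCode) {
  switch (statusCode) {
    case 401:
      return 'Unauthorized: check that OPENAI_API_KEY is set and valid.';
    case 403:
      return 'Forbidden: this key may not have Realtime API access yet.';
    case 429:
      return 'Too many requests: check rate limits, usage and billing.';
    default:
      return `Unexpected HTTP ${statusCode} during the WebSocket handshake.`;
  }
}

console.log(describeHandshakeFailure(403));
// prints "Forbidden: this key may not have Realtime API access yet."

// Possible wiring, assuming the `ws` package:
// openAiWs.on('unexpected-response', (req, res) => {
//   console.error(describeHandshakeFailure(res.statusCode));
// });
```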

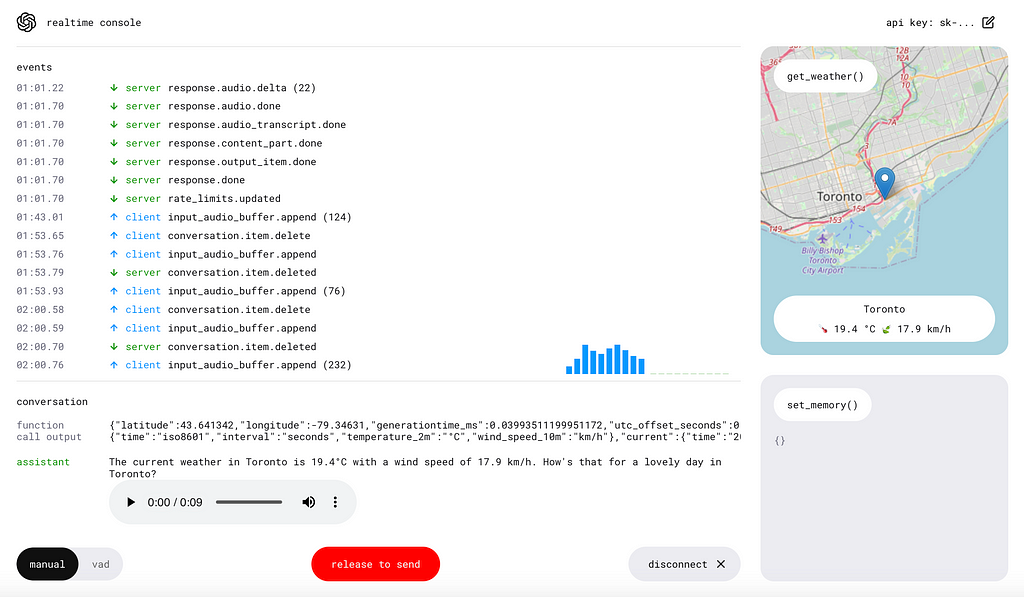

React OpenAI Realtime API Demo

OpenAI has also provided a great demo for testing out the Realtime API in the browser using a React app. I tested it myself, and was very impressed with the speed of the voice agent's responses: they felt near-instant, with no noticeable latency, which makes for a great user experience.

The source code is linked here. It has instructions in the README.md for how to get set up.

This is a picture of what the application looks like once you get it running locally.

Pricing

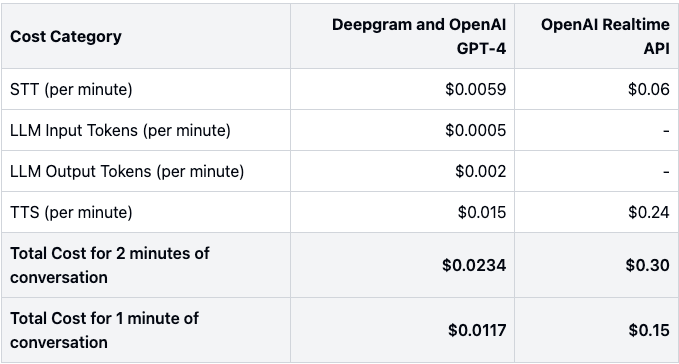

Let’s compare the cost of using the OpenAI Realtime API versus a more conventional approach using Deepgram for speech to text (STT) and text to speech (TTS), and OpenAI GPT-4o for the LLM part.

A comparison using the prices from their websites shows that for a 1 minute conversation, with the caller speaking half the time and the AI agent speaking the other half, the cost using Deepgram and GPT-4o would be $0.0117/minute, whereas using the OpenAI Realtime API would cost $0.15/minute.

That means using the OpenAI Realtime API would be roughly 13x the price per minute (0.15 / 0.0117 ≈ 12.8).

That does sound like a fair amount more expensive, though we should balance it against some of the benefits the OpenAI Realtime API could provide, including:

- reduced latencies, crucial for having a good voice experience,

- ease of setup due to fewer moving parts,

- conversation interruption handling provided out of the box.

Also, please be aware that prices can change over time, so the prices you find at the time of reading this article may not be the same as those reflected above.
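The per-minute comparison above can be reproduced with a few lines of arithmetic. The sketch below assumes the per-minute audio rates OpenAI quoted when the Realtime API launched (about $0.06 per minute of audio input and $0.24 per minute of audio output); treat these as assumptions and check the current pricing page. The $0.0117 figure for the Deepgram and GPT-4o pipeline is taken directly from the comparison above rather than derived here.

```javascript
// Assumed launch-time list prices, in USD per minute of audio.
const REALTIME_AUDIO_IN_PER_MIN = 0.06;
const REALTIME_AUDIO_OUT_PER_MIN = 0.24;

// Figure quoted above for the Deepgram + GPT-4o pipeline, in USD per minute.
const CASCADED_PER_MIN = 0.0117;

// callerShare is the fraction of the minute during which the caller speaks;
// the agent is assumed to speak for the remainder.
function realtimeCostPerMinute(callerShare) {
  const agentShare = 1 - callerShare;
  return callerShare * REALTIME_AUDIO_IN_PER_MIN + agentShare * REALTIME_AUDIO_OUT_PER_MIN;
}

const realtime = realtimeCostPerMinute(0.5);
console.log(realtime.toFixed(2)); // prints 0.15
console.log((realtime / CASCADED_PER_MIN).toFixed(1)); // prints 12.8
```

Because output audio is assumed to cost four times as much as input audio, the Realtime figure is sensitive to how much of the call the agent spends talking.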

Conclusion

Hope that was helpful! What do you think of the new OpenAI Realtime API? Think you will be using it in any upcoming projects?

While we are here, are there any other tutorials or articles around voice agents and voice AI you would be interested in? I am deep diving into that field a bit just now, so would be happy to look into anything people find interesting.

Happy hacking!

All images are by the author, unless stated otherwise.

Exploring How the New OpenAI Realtime API Simplifies Voice Agent Flows was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
