An open-source, model-agnostic agentic framework that supports dependency injection

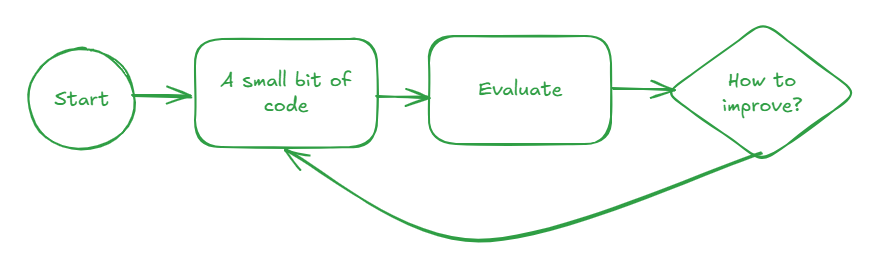

Ideally, you can evaluate agentic applications even as you are developing them, instead of evaluation being an afterthought. For this to work, though, you need to be able to mock both internal and external dependencies of the agent you are developing. I am extremely excited by PydanticAI because it supports dependency injection from the ground up. It is the first framework that has allowed me to build agentic applications in an evaluation-driven manner.

In this article, I’ll talk about the core challenges and demonstrate developing a simple agent in an evaluation-driven way using PydanticAI.

Challenges when developing GenAI applications

Like many GenAI developers, I’ve been waiting for an agentic framework that supports the full development lifecycle. Each time a new framework comes along, I try it out hoping that this will be the One (see, for example, my articles about DSPy, LangChain, LangGraph, and AutoGen).

I find that there are core challenges that a software developer faces when developing an LLM-based application. These challenges are typically not blockers if you are building a simple PoC with GenAI, but they will come back to bite you if you are building LLM-powered applications in production.

What challenges?

(1) Non-determinism: Unlike most software APIs, calls to an LLM with the exact same input could return different outputs each time. How do you even begin to test such an application?

(2) LLM limitations: Foundational models like GPT-4, Claude, and Gemini are limited in their training data (e.g., no access to enterprise confidential information), in their capabilities (e.g., they cannot invoke enterprise APIs and databases), and in their ability to plan and reason.

(3) LLM flexibility: Even if you decide to stick to LLMs from a single provider such as Anthropic, you may find that you need a different LLM for each step — perhaps one step of your workflow needs a low-latency small language model (Haiku), another requires great code-generation capability (Sonnet), and a third step requires excellent contextual awareness (Opus).

(4) Rate of Change: GenAI technologies are moving fast. Recently, many of the improvements have come about in foundational model capabilities. No longer are the foundational models just generating text based on user prompts. They are now multimodal, can generate structured outputs, and can have memory. Yet, if you try to build in an LLM-agnostic way, you often lose the low-level API access that will turn on these features.

To help address the first problem, of non-determinism, your software testing needs to incorporate an evaluation framework. You will never have software that works 100%; instead, you will need to be able to design around software that is x% correct, build guardrails and human oversight to catch the exceptions, and monitor the system in real-time to catch regressions. Key to this capability is evaluation-driven development (my term), an extension of test-driven development in software.
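As a rough sketch of what designing around software that is "x% correct" can look like in a test suite: instead of asserting on a single output, run the application over a set of cases, score each one, and check the average against a threshold. Everything here (`run_eval`, `contains_reference`, `fake_app`, and the 0.5 threshold) is an illustrative assumption, not part of any framework.

```python
from typing import Callable, List, Tuple

def run_eval(app: Callable[[str], str],
             cases: List[Tuple[str, str]],
             scorer: Callable[[str, str], float]) -> float:
    """Return the average score of `app` over (question, reference) cases."""
    scores = [scorer(app(question), reference) for question, reference in cases]
    return sum(scores) / len(scores)

def contains_reference(answer: str, reference: str) -> float:
    """Score 1.0 if the reference string appears in the answer, else 0.0."""
    return 1.0 if reference.lower() in answer.lower() else 0.0

# Hypothetical stand-in for an LLM-backed application. It is deterministic
# here and deliberately gets the second question wrong.
def fake_app(question: str) -> str:
    canned = {
        "What is the capital of France?": "Paris is the capital of France.",
        "What is the capital of Australia?": "Sydney.",
    }
    return canned.get(question, "I don't know.")

cases = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Australia?", "Canberra"),
]

avg = run_eval(fake_app, cases, contains_reference)
THRESHOLD = 0.5  # assumed acceptance bar; choose one that suits your application
print(f"average score = {avg:.2f}, passes = {avg >= THRESHOLD}")
```

The point of the design is that the test passes or fails on an aggregate score, so a single flaky answer does not break the build, while a drop in the average still shows up as a regression.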

The current workaround for all the LLM limitations in Challenge #2 is to use agentic architectures like RAG, provide the LLM access to tools, and employ patterns like Reflection, ReAct and Chain of Thought. So, your framework will need to have the ability to orchestrate agents. However, evaluating agents that can call external tools is hard. You need to be able to inject proxies for these external dependencies so that you can test them individually, and evaluate as you build.

To handle challenge #3, an agent needs to be able to invoke the capabilities of different types of foundational models. Your agent framework needs to be LLM-agnostic at the granularity of a single step of an agentic workflow. To address the rate of change consideration (challenge #4), you want to retain the ability to make low-level access to the foundational model APIs and to strip out sections of your codebase that are no longer necessary.

Is there a framework that meets all these criteria? For the longest time, the answer was no. The closest I could get was to use LangChain, pytest’s dependency injection, and deepeval with something like this (full example is here):

from unittest.mock import patch, Mock

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCase, LLMTestCaseParams

import lg_weather_agent

# `app` and `chicago_weather` are defined in the full example.

llm_as_judge = GEval(
    name="Correctness",
    criteria="Determine whether the actual output is factually correct based on the expected output.",
    evaluation_params=[
        LLMTestCaseParams.INPUT,
        LLMTestCaseParams.ACTUAL_OUTPUT,
        LLMTestCaseParams.EXPECTED_OUTPUT,  # the criteria refer to the expected output
    ],
    model='gpt-3.5-turbo'
)

# Replace the external weather lookup with a Mock that returns canned data
@patch('lg_weather_agent.retrieve_weather_data', Mock(return_value=chicago_weather))
def eval_query_rain_today():
    input_query = "Is it raining in Chicago?"
    expected_output = "No, it is not raining in Chicago right now."
    result = lg_weather_agent.run_query(app, input_query)
    actual_output = result[-1]
    print(f"Actual: {actual_output} Expected: {expected_output}")
    test_case = LLMTestCase(
        input=input_query,
        actual_output=actual_output,
        expected_output=expected_output
    )
    # Score the answer with the LLM-as-judge metric
    llm_as_judge.measure(test_case)
    print(llm_as_judge.score)

Essentially, I’d construct a hardcoded object (chicago_weather in the above example) for every external call and patch that call (retrieve_weather_data in the above example) with a Mock returning the hardcoded object whenever I needed to mock that part of the agentic workflow. With this approach, the dependency injection is all over the place, you need a bunch of hardcoded objects, and the calling workflow becomes extremely hard to follow. Note that without dependency injection, there is no way to test a function like this: the external service will return the current weather, so there is no way to know in advance what the correct answer is to a question such as whether it is raining right now.
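To make the contrast concrete, here is a framework-free sketch of explicit dependency injection: the weather lookup is passed in as a parameter, so a test can hand in a fake without patching module internals. `WeatherService`, `FakeWeatherService`, and `is_raining` are hypothetical names for illustration; they are not taken from the weather agent example.

```python
from dataclasses import dataclass
from typing import Protocol

class WeatherService(Protocol):
    """Anything that can report current conditions for a city."""
    def current_conditions(self, city: str) -> str: ...

@dataclass
class FakeWeatherService:
    """Returns a fixed answer so the expected output is known in advance."""
    conditions: str

    def current_conditions(self, city: str) -> str:
        return self.conditions

def is_raining(city: str, weather: WeatherService) -> str:
    # The dependency arrives as an argument instead of being looked up globally.
    conditions = weather.current_conditions(city)
    if "rain" in conditions.lower():
        return f"Yes, it is raining in {city} right now."
    return f"No, it is not raining in {city} right now."

print(is_raining("Chicago", FakeWeatherService("clear skies")))
```

Because the fake is an ordinary argument, the call site shows exactly which dependency is in play, which is what the patch-based approach obscures.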

So … is there an agent framework that supports dependency injection, is Pythonic, provides low-level access to LLMs, is model-agnostic, supports building it one eval-at-a-time, and is easy to use and follow?

Almost. PydanticAI addresses the first three challenges listed earlier; the fourth requirement (low-level LLM access) is not yet possible, but the design does not preclude it. In the rest of this article, I’ll show you how to use it to develop an agentic application in an evaluation-driven way.

1. Your first PydanticAI Application

Let’s start out by building a simple PydanticAI application. This will use an LLM to answer questions about mountains:

agent = llm_utils.agent()
question = "What is the tallest mountain in British Columbia?"
print(">> ", question)
answer = agent.run_sync(question)
print(answer.data)

In the code above, I’m creating an agent (I’ll show you how, shortly) and then calling run_sync passing in the user prompt, and getting back the LLM’s response. run_sync is a way to have the agent invoke the LLM and wait for the response. Other ways are to run the query asynchronously, or to stream its response. (Full code is here if you want to follow along).

Run the code above, and you will get something like:

>> What is the tallest mountain in British Columbia?

The tallest mountain in British Columbia is **Mount Robson**, at 3,954 metres (12,972 feet).

To create the agent, create a model and then tell the agent to use that model for all its steps:

import os

import pydantic_ai
from pydantic_ai.models.gemini import GeminiModel

def default_model() -> pydantic_ai.models.Model:
    # Gemini Flash: a relatively inexpensive but fast default
    model = GeminiModel('gemini-1.5-flash', api_key=os.getenv('GOOGLE_API_KEY'))
    return model

def agent() -> pydantic_ai.Agent:
    return pydantic_ai.Agent(default_model())

The idea behind default_model() is to use a relatively inexpensive but fast model like Gemini Flash as the default. You can then change the model used in specific steps as necessary by passing in a different model to run_sync().

PydanticAI model support looks sparse, but the most commonly used models — the current frontier ones from OpenAI, Groq, Gemini, Mistral, Ollama, and Anthropic — are all supported. Through Ollama, you can get access to Llama3, Starcoder2, Gemma2, and Phi3. Nothing significant seems to be missing.

2. PydanticAI with structured outputs

The example in the previous section returned free-form text. In most agentic workflows, you’ll want the LLM to return structured data so that you can use it directly in programs.

Considering that this API is from Pydantic, returning structured output is quite straightforward. Just define the desired output as a dataclass (full code is here):

from dataclasses import dataclass

@dataclass
class Mountain:
    name: str
    location: str
    height: float
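Conceptually, a structured result means the model’s reply has to be parsed and checked against the declared type. The following is only a standard-library sketch of that idea, not how PydanticAI implements it; `parse_mountain` is a hypothetical helper and the JSON string stands in for a model reply.

```python
import json
from dataclasses import dataclass, fields

@dataclass
class Mountain:
    name: str
    location: str
    height: float

def parse_mountain(raw_json: str) -> Mountain:
    """Parse a JSON reply into a Mountain, rejecting missing or extra keys."""
    payload = json.loads(raw_json)
    expected = {f.name for f in fields(Mountain)}
    if set(payload) != expected:
        raise ValueError(f"expected keys {sorted(expected)}, got {sorted(payload)}")
    return Mountain(
        name=str(payload["name"]),
        location=str(payload["location"]),
        height=float(payload["height"]),  # coerce ints such as 3429 to float
    )

# Stand-in for a model reply (illustrative only).
reply = '{"name": "Mt. Hood", "location": "60 km east of Portland", "height": 3429}'
print(parse_mountain(reply))
```

A reply that omits a field or adds an unexpected one raises a ValueError instead of silently producing a partial object, which is the property that makes structured outputs safe to use directly in programs.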

When you create the Agent, tell it the desired output type:

from pydantic_ai import Agent

agent = Agent(llm_utils.default_model(),
              result_type=Mountain,
              system_prompt=(
                  "You are a mountaineering guide, who provides accurate information to the general public.",
                  "Provide all distances and heights in meters",
                  "Provide location as distance and direction from nearest big city",
              ))

Note also the use of the system prompt to specify units etc.

Running this on three questions, we get:

>> Tell me about the tallest mountain in British Columbia?

Mountain(name='Mount Robson', location='130km North of Vancouver', height=3999.0)

>> Is Mt. Hood easy to climb?

Mountain(name='Mt. Hood', location='60 km east of Portland', height=3429.0)

>> What's the tallest peak in the Enchantments?

Mountain(name='Mount Stuart', location='100 km east of Seattle', height=3000.0)

But how good is this agent? Is the height of Mt. Robson correct? Is Mt. Stuart really the tallest peak in the Enchantments? All of this information could have been hallucinated!

There is no way for you to know how good an agentic application is unless you evaluate the agent against reference answers. You can not just “eyeball it”. Unfortunately, this is where a lot of LLM frameworks fall short — they make it really hard to evaluate as you develop the LLM application.

3. Evaluate against reference answers

It is when you start to evaluate against reference answers that PydanticAI starts to show its strengths. Everything is quite Pythonic, so you can build custom evaluation metrics quite simply.

For example, this is how we will evaluate a returned Mountain object on three criteria and create a composite score (full code is here):

from typing import Tuple

def evaluate(answer: Mountain, reference_answer: Mountain) -> Tuple[float, str]:
    score = 0
    reason = []
    if reference_answer.name in answer.name:
        # Right mountain: half the total credit
        score += 0.5
        reason.append("Correct mountain identified")
        if reference_answer.location in answer.location:
            score += 0.25
            reason.append("Correct city identified")
        # Up to 0.25 for height, falling linearly to zero at 10 m of error
        height_error = abs(reference_answer.height - answer.height)
        if height_error < 10:
            score += 0.25 * (10 - height_error)/10.0
        reason.append(f"Height was {height_error}m off. Correct answer is {reference_answer.height}")
    else:
        reason.append(f"Wrong mountain identified. Correct answer is {reference_answer.name}")
    return score, ';'.join(reason)

Now, we can run this on a dataset of questions and reference answers:

questions = [
    "Tell me about the tallest mountain in British Columbia?",
    "Is Mt. Hood easy to climb?",
    "What's the tallest peak in the Enchantments?"
]

reference_answers = [
    Mountain("Robson", "Vancouver", 3954),
    Mountain("Hood", "Portland", 3429),
    Mountain("Dragontail", "Seattle", 2690)
]

total_score = 0
for l_question, l_reference_answer in zip(questions, reference_answers):
    print(">> ", l_question)
    l_answer = agent.run_sync(l_question)
    print(l_answer.data)
    l_score, l_reason = evaluate(l_answer.data, l_reference_answer)
    print(l_score, ":", l_reason)
    total_score += l_score

avg_score = total_score / len(questions)
print(f"Average score: {avg_score:.2f}")

Running this, we get:

>> Tell me about the tallest mountain in British Columbia?

Mountain(name='Mount Robson', location='130 km North-East of Vancouver', height=3999.0)

0.75 : Correct mountain identified;Correct city identified;Height was 45.0m off. Correct answer is 3954

>> Is Mt. Hood easy to climb?

Mountain(name='Mt. Hood', location='60 km east of Portland, OR', height=3429.0)

1.0 : Correct mountain identified;Correct city identified;Height was 0.0m off. Correct answer is 3429

>> What's the tallest peak in the Enchantments?

Mountain(name='Dragontail Peak', location='14 km east of Leavenworth, WA', height=3008.0)

0.5 : Correct mountain identified;Height was 318.0m off. Correct answer is 2690

Average score: 0.75
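These numbers follow directly from the rubric in evaluate(): 0.5 for the right mountain, 0.25 for the right city, and up to 0.25 for the height. As a worked check, the sketch below recomputes the three scores from the outputs above; `height_credit` and `composite` are helper names introduced here for illustration.

```python
def height_credit(height_error: float) -> float:
    """Partial credit for height: 0.25 at zero error, falling linearly to 0 at 10 m."""
    if height_error < 10:
        return 0.25 * (10 - height_error) / 10.0
    return 0.0

def composite(name_ok: bool, city_ok: bool, height_error: float) -> float:
    """Same rubric as evaluate(): nothing is awarded unless the mountain is right."""
    if not name_ok:
        return 0.0
    return 0.5 + (0.25 if city_ok else 0.0) + height_credit(height_error)

scores = [
    composite(True, True, 45.0),    # Mount Robson: name and city right, height 45 m off
    composite(True, True, 0.0),     # Mt. Hood: everything right
    composite(True, False, 318.0),  # Dragontail Peak: name right, city and height wrong
]
print(scores, sum(scores) / len(scores))
```

Note that a height error of 10 m or more earns no height credit at all, which is why Mount Robson is capped at 0.75 despite the right mountain and city.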

Mt. Robson’s height is 45m off; Dragontail Peak’s height is 318m off. How would you fix this?

That’s right. You’d use a RAG architecture or arm the agent with a tool that provides the correct height information. Let’s use the latter approach and see how to do it with PydanticAI.

Note how evaluation-driven development shows us the path forward to improve our agentic application.

4a. Using a tool

PydanticAI supports several ways to provide tools to an agent. Here, I annotate a function so that the agent calls it whenever it needs the height of a mountain (full code here):

from pydantic_ai import Agent, RunContext

agent = Agent(llm_utils.default_model(),
              result_type=Mountain,
              system_prompt=(
                  "You are a mountaineering guide, who provides accurate information to the general public.",
                  "Use the provided tool to look up the elevation of many mountains.",
                  "Provide all distances and heights in meters",
                  "Provide location as distance and direction from nearest big city",
              ))

# Registered as a tool; the agent calls it when it needs a mountain's height
@agent.tool
def get_height_of_mountain(ctx: RunContext[Tools], mountain_name: str) -> str:
    return ctx.deps.elev_wiki.snippet(mountain_name)

The function, though, does something strange. It pulls an object called elev_wiki out of the run-time context of the agent. This object is passed in when we call run_sync:

class Tools:
    elev_wiki: wikipedia_tool.WikipediaContent

    def __init__(self):
        self.elev_wiki = OnlineWikipediaContent("List of mountains by elevation")

tools = Tools()  # Tools or FakeTools
l_answer = agent.run_sync(l_question, deps=tools)  # note how we are able to inject

Because the runtime context can be passed into every agent invocation or tool call, we can use it to do dependency injection in PydanticAI. You’ll see this in the next section.
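The mechanism can be sketched without the library: a context object carries whatever dependencies were supplied for the run, and the tool reads them from the context rather than importing a concrete service. `Context`, `ElevationDeps`, and `run` below are simplified stand-ins written for this illustration; they are not PydanticAI’s classes.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

DepsT = TypeVar("DepsT")

@dataclass
class Context(Generic[DepsT]):
    """Simplified stand-in for a run context that carries injected dependencies."""
    deps: DepsT

@dataclass
class ElevationDeps:
    lookup: Callable[[str], str]  # can be a real lookup or a canned one

def get_height_tool(ctx: Context[ElevationDeps], mountain_name: str) -> str:
    # The tool uses whatever lookup was injected for this run.
    return ctx.deps.lookup(mountain_name)

def run(mountain_name: str, deps: ElevationDeps) -> str:
    """Mimics a single run: wrap the deps in a context and hand it to the tool."""
    ctx = Context(deps=deps)
    return get_height_tool(ctx, mountain_name)

# Inject a canned lookup, as one would during development or testing.
fake_deps = ElevationDeps(lookup=lambda name: f"{name}: 3954 m (canned)")
print(run("Mount Robson", fake_deps))
```

Swapping the canned lookup for a real one changes only the object passed to run(); the tool function stays the same.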

The wiki object itself just queries Wikipedia online (code here), extracts the contents of the page, and passes the appropriate mountain information to the agent:

import wikipedia

class OnlineWikipediaContent(WikipediaContent):
    def __init__(self, topic: str):
        print(f"Will query online Wikipedia for information on {topic}")
        self.page = wikipedia.page(topic)

    def url(self) -> str:
        return self.page.url

    def html(self) -> str:
        return self.page.html()

Indeed, when we run it, we get correct heights now:

Will query online Wikipedia for information on List of mountains by elevation

>> Tell me about the tallest mountain in British Columbia?

Mountain(name='Mount Robson', location='100 km west of Jasper', height=3954.0)

0.75 : Correct mountain identified;Height was 0.0m off. Correct answer is 3954

>> Is Mt. Hood easy to climb?

Mountain(name='Mt. Hood', location='50 km ESE of Portland, OR', height=3429.0)

1.0 : Correct mountain identified;Correct city identified;Height was 0.0m off. Correct answer is 3429

>> What's the tallest peak in the Enchantments?

Mountain(name='Mount Stuart', location='Cascades, Washington, US', height=2869.0)

0 : Wrong mountain identified. Correct answer is Dragontail

Average score: 0.58

4b. Dependency injecting a mock service

Waiting for the API call to Wikipedia each time during development or testing is a bad idea. Instead, we will want to mock the Wikipedia response so that we can develop quickly and know in advance what result we are going to get.

Doing that is very simple. We create a Fake counterpart to the Wikipedia service:

class FakeWikipediaContent(WikipediaContent):
    def __init__(self, topic: str):
        if topic == "List of mountains by elevation":
            print(f"Will used cached Wikipedia information on {topic}")
            self.url_ = "https://en.wikipedia.org/wiki/List_of_mountains_by_elevation"
            with open("mountains.html", "rb") as ifp:
                self.html_ = ifp.read().decode("utf-8")

    def url(self) -> str:
        return self.url_

    def html(self) -> str:
        return self.html_

Then, inject this fake object into the runtime context of the agent during development:

class FakeTools:
    elev_wiki: wikipedia_tool.WikipediaContent

    def __init__(self):
        self.elev_wiki = FakeWikipediaContent("List of mountains by elevation")

tools = FakeTools()  # Tools or FakeTools
l_answer = agent.run_sync(l_question, deps=tools)  # note how we are able to inject

This time when we run, the evaluation uses the cached Wikipedia content:

Will used cached Wikipedia information on List of mountains by elevation

>> Tell me about the tallest mountain in British Columbia?

Mountain(name='Mount Robson', location='100 km west of Jasper', height=3954.0)

0.75 : Correct mountain identified;Height was 0.0m off. Correct answer is 3954

>> Is Mt. Hood easy to climb?

Mountain(name='Mt. Hood', location='50 km ESE of Portland, OR', height=3429.0)

1.0 : Correct mountain identified;Correct city identified;Height was 0.0m off. Correct answer is 3429

>> What's the tallest peak in the Enchantments?

Mountain(name='Mount Stuart', location='Cascades, Washington, US', height=2869.0)

0 : Wrong mountain identified. Correct answer is Dragontail

Average score: 0.58

Look carefully at the above output: the errors are different from those in the zero-shot example. In Section 3, the LLM picked Vancouver as the closest city to Mt. Robson and Dragontail as the tallest peak in the Enchantments. Those answers happened to be correct. Now, it picks Jasper and Mt. Stuart. We need to do more work to fix these errors, but evaluation-driven development at least gives us a direction of travel.
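One lightweight way to keep track of this direction of travel is to compare per-question scores between runs and flag the ones that dropped. The sketch below uses the scores reported in Sections 3 and 4; the `regressions` helper and the short question labels are assumptions made for illustration.

```python
from typing import Dict, List

def regressions(before: Dict[str, float], after: Dict[str, float]) -> List[str]:
    """Return the questions whose score dropped between two evaluation runs."""
    return [q for q in before if after.get(q, 0.0) < before[q]]

# Per-question scores from the zero-shot run and the run with the tool.
zero_shot = {"British Columbia": 0.75, "Mt. Hood": 1.0, "Enchantments": 0.5}
with_tool = {"British Columbia": 0.75, "Mt. Hood": 1.0, "Enchantments": 0.0}

print(regressions(zero_shot, with_tool))
```

The British Columbia score is unchanged at 0.75 even though the reasons differ (the height is now right, the city is now wrong), so it is worth inspecting the reason strings as well as the totals.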

Current Limitations

PydanticAI is very new. There are a few places where it could be improved:

- There is no low-level access to the model itself. For example, different foundational models support context caching, prompt caching, etc. The model abstraction in PydanticAI doesn’t provide a way to set these on the model. Ideally, there would be a kwargs-style way to pass such settings through.

- The need to create two versions of agent dependencies, one real and one fake, is quite common. It would be good if we were able to annotate a tool or have a simple way to switch between the two types of services across the board.

- During development, you don’t need logging as much. But when you go to run the agent, you will usually want to log the prompts and responses. Sometimes, you will want to log the intermediate responses. The way to do this seems to be a commercial product called Logfire. An OSS, cloud-agnostic logging framework that integrates with the PydanticAI library would be ideal.

It is possible that these already exist and I missed them, or perhaps they will have been implemented by the time you are reading this article. In either case, leave a comment for future readers.
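Until something like that exists, one possible workaround for the second limitation is a small factory that picks the real or fake dependencies from a single setting. This is a sketch under assumptions: the `AGENT_DEPS` environment variable and `make_tools` are hypothetical, and the two classes are trimmed stand-ins for the Tools and FakeTools classes shown earlier.

```python
import os
from typing import Callable, Dict, Optional

class Tools:
    """Stand-in for the real dependencies (would query Wikipedia online)."""
    source = "online"

class FakeTools:
    """Stand-in for the fake dependencies (would read cached HTML)."""
    source = "cached"

# Hypothetical registry: one place that maps a mode name to a deps class.
_DEPS_FACTORIES: Dict[str, Callable[[], object]] = {
    "real": Tools,
    "fake": FakeTools,
}

def make_tools(mode: Optional[str] = None):
    """Build dependencies for `mode`, defaulting to $AGENT_DEPS, then 'fake'."""
    mode = mode or os.getenv("AGENT_DEPS", "fake")
    try:
        return _DEPS_FACTORIES[mode]()
    except KeyError:
        raise ValueError(f"unknown deps mode: {mode!r}") from None

tools = make_tools("fake")
print(type(tools).__name__, tools.source)
```

With this in place, the evaluation loop would call make_tools() once and pass the result as deps, so switching between online and cached content becomes a configuration change rather than a code edit.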

Overall, I like PydanticAI — it offers a very clean and Pythonic way to build agentic applications in an evaluation-driven manner.

Suggested next steps:

- This is one of those blog posts where you will benefit from actually running the examples because it describes a process of development as well as a new library. This GitHub repo contains the PydanticAI example I walked through in this post: https://github.com/lakshmanok/lakblogs/tree/main/pydantic_ai_mountains Follow the instructions in the README to try it out.

- Pydantic AI documentation: https://ai.pydantic.dev/

- Patching a Langchain workflow with Mock objects. My “before” solution: https://github.com/lakshmanok/lakblogs/blob/main/genai_agents/eval_weather_agent.py

Evaluation-Driven Development for agentic applications using PydanticAI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
