Framework to meet practical real-world requirements

Abstract

Ever since OpenAI’s ChatGPT took the world by storm in November 2022, Large Language Models (LLMs) have revolutionized various applications across industries, from natural language understanding to text generation. However, their performance needs rigorous and multidimensional evaluation metrics to ensure they meet the practical, real-world requirements of accuracy, efficiency, scalability, and ethical considerations. This article outlines a broad set of metrics and methods to measure the performance of LLM-based applications, providing insights into evaluation frameworks that balance technical performance with user experience and business needs.

This is not meant to be a comprehensive guide to every metric for measuring the performance of LLM applications. Instead, it offers a view into the key dimensions to look at, along with some example metrics. This will help you understand how to build your evaluation criteria; the final choice will depend on your actual use case.

Even though this article focuses on LLM-based applications, the same approach could be extrapolated to other modalities as well.

1. Introduction

1.1. LLM-Based Applications: Definition and Scope

There is no dearth of Large Language Models (LLMs) today. LLMs such as GPT-4, Meta’s LLaMA, Anthropic’s Claude 3.5 Sonnet, or Amazon’s Titan Text Premier are capable of understanding and generating human-like text, making them apt for multiple downstream applications such as customer-facing chatbots, creative content generation, and language translation.

1.2. Importance of Performance Evaluation

Unlike traditional ML models, which have fairly standardized evaluation criteria and datasets, LLMs are non-trivial to evaluate. The black-box nature of LLMs, together with the multiplicity of downstream use cases, warrants performance measurement across multiple considerations. Inadequate evaluation can lead to cost overruns, poor user experience, or risks for the organization deploying them.

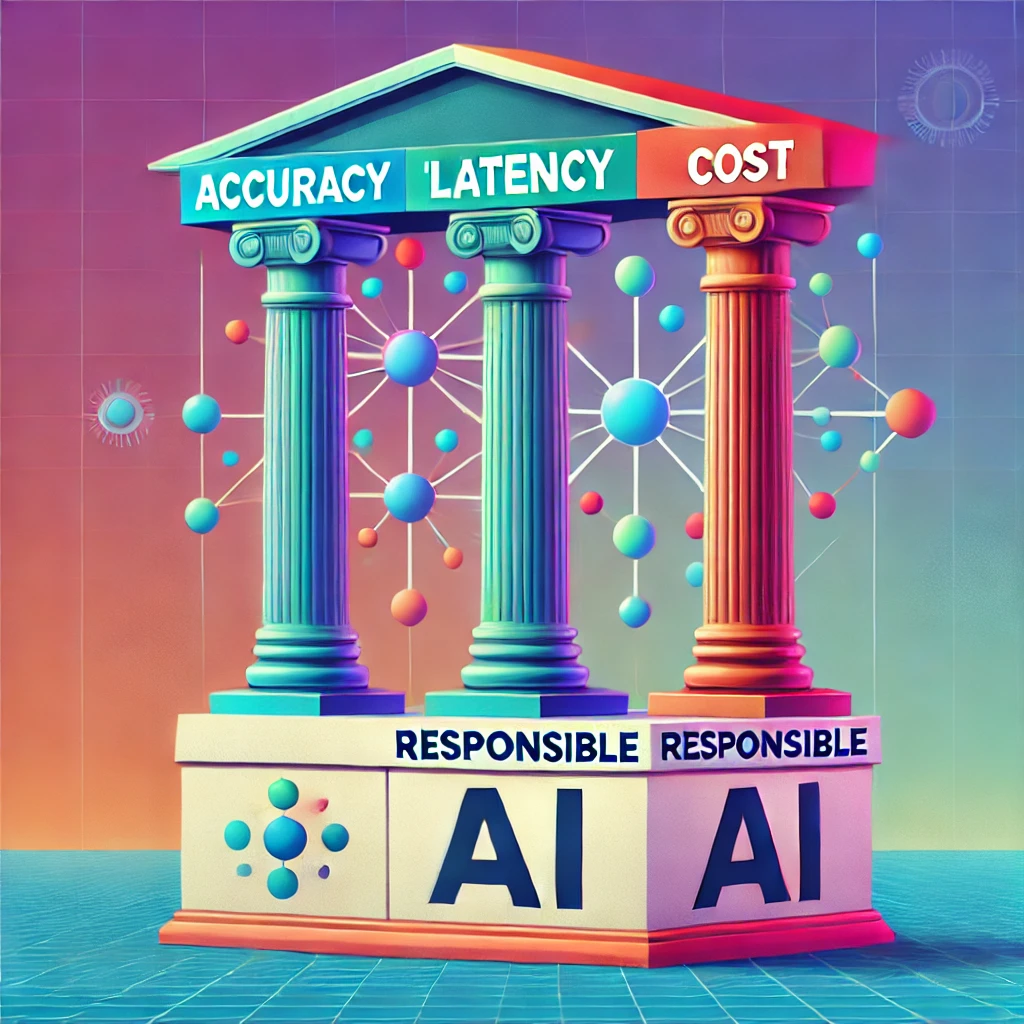

2. The four key dimensions of LLM Performance

There are three key ways to look at the performance of LLM-based applications: accuracy, cost, and latency. In addition, it is critical to have a set of criteria for Responsible AI to ensure the application is not harmful. Together, these make up the four dimensions.

Just like the bias vs. variance tradeoff in classical Machine Learning applications, for LLMs we have to consider the tradeoff between accuracy on one side and cost plus latency on the other. In general, it is a balancing act to create an application that is “accurate” (we will define what this means in a bit) while being fast enough and cost-effective. The choice of LLM, as well as the supporting application architecture, will depend heavily on the end user experience we aim to achieve.
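One way to make this balancing act concrete is to treat latency and cost as budgets and pick the most accurate option that fits inside them. The sketch below is only an illustration of that idea: the candidate names, quality scores, latencies, and costs are all made-up placeholders, and `pick_model` is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    quality: float               # task-specific score in [0, 1], higher is better
    p95_latency_s: float         # 95th percentile response time in seconds
    cost_per_1k_requests: float  # in whatever currency the budget uses


def pick_model(candidates, max_p95_latency_s, max_cost_per_1k_requests) -> Optional[Candidate]:
    """Return the highest-quality candidate that fits both budgets, or None."""
    feasible = [
        c for c in candidates
        if c.p95_latency_s <= max_p95_latency_s
        and c.cost_per_1k_requests <= max_cost_per_1k_requests
    ]
    return max(feasible, key=lambda c: c.quality, default=None)


# Placeholder numbers, for illustration only.
candidates = [
    Candidate("large-model", quality=0.92, p95_latency_s=4.0, cost_per_1k_requests=30.0),
    Candidate("medium-model", quality=0.88, p95_latency_s=1.5, cost_per_1k_requests=8.0),
    Candidate("small-model", quality=0.80, p95_latency_s=0.4, cost_per_1k_requests=1.0),
]

best = pick_model(candidates, max_p95_latency_s=2.0, max_cost_per_1k_requests=10.0)
print(best.name if best else "no candidate fits the budgets")
```

With these placeholder budgets, the most accurate candidate is excluded for being too slow and too expensive, which is the tradeoff in miniature. Tightening the budgets further pushes the choice towards the smaller model.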

2.1. Accuracy

I use the term “accuracy” here rather loosely. It has a very specific mathematical meaning, but it gets the point across when read as a plain English word.

Accuracy of the application depends on the actual use case: whether the application is doing a classification task, generating a blob of text, or being used for specialized tasks like Named Entity Recognition (NER) or Retrieval Augmented Generation (RAG).

2.1.1. Classification use cases

For classification tasks like sentiment analysis (positive/negative/neutral), topic modelling, and Named Entity Recognition, classical ML evaluation metrics are appropriate. They measure accuracy along various dimensions of the confusion matrix. Typical measures include Precision, Recall, and F1-Score.
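As a minimal sketch of how these measures fall out of the confusion matrix, the snippet below computes per-class precision, recall, and F1 from two lists of labels. The labels are invented for illustration, and `per_class_prf` is a hypothetical helper written for this example; in practice a library such as scikit-learn would typically be used instead.

```python
from collections import Counter


def per_class_prf(y_true, y_pred):
    """Return {label: (precision, recall, f1)} computed from raw label lists."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this label, but it was wrong
            fn[truth] += 1  # the true label was missed

    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores


# Made-up sentiment labels: ground truth vs. what the LLM returned.
y_true = ["positive", "negative", "neutral", "positive", "negative", "positive"]
y_pred = ["positive", "negative", "positive", "positive", "neutral", "negative"]

scores = per_class_prf(y_true, y_pred)
macro_f1 = sum(f1 for _, _, f1 in scores.values()) / len(scores)

for label, (p, r, f1) in scores.items():
    print(f"{label:>8}: precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
print(f"macro F1 = {macro_f1:.2f}")
```

Reporting the scores per class, rather than a single overall accuracy, makes it easier to spot a class the application systematically gets wrong.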

2.1.2. Text generation use cases — including summarization and creative content

BLEU, ROUGE, and METEOR scores are common metrics used to evaluate text generation tasks, particularly translation and summarization. To simplify, people also combine BLEU (which is precision-oriented) and ROUGE (which is recall-oriented) into an F1 score. There are additional metrics like Perplexity, which are particularly useful for evaluating LLMs themselves but less useful for measuring the performance of full-blown applications. The biggest challenge with all of the above metrics is that they focus on surface-level text similarity and not semantic similarity. Depending on the use case, text similarity may not be enough, and one should also use measures of semantic proximity like SemScore.
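The limitation of overlap-based metrics is easy to see with a toy example. The sketch below computes a simplified unigram-overlap score in the spirit of ROUGE-1. It is not the official ROUGE implementation (there is no stemming, and tokenization is a plain whitespace split), and the sentences are invented for illustration.

```python
from collections import Counter


def unigram_overlap(reference, candidate):
    """Return (precision, recall, f1) over clipped unigram matches."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count per token (clipped matches).
    overlap = sum((ref_counts & cand_counts).values())
    cand_total = sum(cand_counts.values())
    ref_total = sum(ref_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


reference = "the cat sat on the mat"
near_copy = "the cat sat on a mat"        # almost identical wording
paraphrase = "a feline rested on a rug"   # same meaning, different words

print("near copy :", unigram_overlap(reference, near_copy))
print("paraphrase:", unigram_overlap(reference, paraphrase))
```

The paraphrase conveys the same meaning as the reference but shares only one word with it, so it scores far lower than the near copy. This is the gap that embedding-based measures of semantic proximity aim to close.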

2.1.3. RAG use cases

In RAG-based applications, evaluation requires metrics that capture performance across both the retrieval and the generation steps. For retrieval, one may use recall and precision to compare the retrieved documents against the relevant ones. For generation, one may use additional metrics like Perplexity, Hallucination Rate, Factual Accuracy, or Semantic Coherence. This Article describes the key metrics that one might want to include in their evaluation.
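For the retrieval step, precision and recall are usually computed over the top k retrieved documents. The following is a minimal sketch, assuming each document has an ID and that a set of relevant IDs has been labelled for the query. The IDs and the helper name `precision_recall_at_k` are made up for this example.

```python
def precision_recall_at_k(retrieved_ids, relevant_ids, k):
    """Precision and recall over the top-k retrieved document IDs."""
    top_k = retrieved_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


# Ranked retriever output and hand-labelled relevant documents (illustrative).
retrieved = ["doc7", "doc2", "doc9", "doc4", "doc1"]
relevant = {"doc2", "doc4", "doc5"}

for k in (3, 5):
    p, r = precision_recall_at_k(retrieved, relevant, k)
    print(f"k={k}: precision={p:.2f} recall={r:.2f}")
```

Increasing k generally raises recall at the expense of precision, and it also lengthens the prompt passed to the generation step, which feeds back into latency and cost.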

2.2. Latency (and Throughput)

In many situations, the latency and throughput of an application determine its end usability, or user experience. In today’s era of lightning-fast internet, users do not want to be stuck waiting for a response, especially when executing critical jobs.

The lower the latency, the better the user experience in user-facing applications that require a real-time response. This may not be as important for workloads that execute in batches, e.g. transcription of customer service calls for later use. In general, both latency and throughput can be improved by horizontal or vertical scaling, but latency may still fundamentally depend on the way the overall application is architected, including the choice of LLM. A nice benchmark for comparing the speed of different LLM APIs is Artificial Analysis. It complements other leaderboards, like LMSYS Chatbot Arena, the Hugging Face Open LLM Leaderboard, and Stanford’s HELM, which focus more on the quality of the outputs.
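Latency is usually reported as percentiles rather than an average, since a few slow responses can dominate the user experience. Below is a minimal measurement sketch. `fake_llm_call` is a hypothetical stand-in that just sleeps for a few milliseconds; in a real test it would be replaced by the actual application call. Requests are sent one at a time, so the throughput figure reflects sequential use only, not behaviour under concurrent load.

```python
import statistics
import time


def fake_llm_call(prompt):
    """Hypothetical stand-in for a real model call; sleeps briefly instead."""
    time.sleep(0.001 * (1 + len(prompt) % 5))
    return "response to: " + prompt


def measure(call, prompts):
    """Time each call and summarize latency percentiles and throughput."""
    latencies = []
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start

    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "throughput_rps": len(prompts) / elapsed,
    }


stats = measure(fake_llm_call, [f"question {i}" for i in range(40)])
print(stats)
```

For streaming chat interfaces, it can also be worth timing the arrival of the first token separately, since that is often what the user perceives as responsiveness.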

Latency is a key factor that will continue to push us towards Small Language Models for applications that require fast response time, where deployment on edge devices might be a necessity.

2.3. Cost

We are building LLM applications to solve business problems and create more efficiencies, with the hope of solving customer problems, as well as creating bottom line impact for our businesses. All of this comes at a cost, which could add up quickly for generative AI applications.

In my experience, when people think of the cost of LLM applications, there is a lot of discussion about the cost of inference (which is based on the number of tokens), the cost of fine-tuning, or even the cost of pre-training an LLM. There is, however, limited discussion of the total cost of ownership, including infrastructure and personnel costs.

The cost can vary based on the type of deployment (cloud, on-prem, hybrid), the scale of usage, and the architecture. It also varies a lot depending on the stage of the application’s development lifecycle.

- Infrastructure costs: inference and tuning costs, and potentially pre-training costs, as well as the memory, compute, networking, and storage costs associated with the application. Depending on where one is building the application, these costs may need to be managed separately, or may be bundled into one if one is using managed services like Amazon Bedrock.

- Team and personnel costs: we may sometimes need an army of people to build, monitor, and improve these applications. This includes the engineers who build them (Data Scientists and ML Engineers, DevOps and MLOps engineers) as well as the cross-functional teams of product/project managers, HR, Legal, and Risk personnel who are involved in the design and development. We may also have annotation and labelling teams to provide us with high-quality data.

- Other costs: these may include the cost of data acquisition and management, customer interviews, software and licensing, operations (MLOps/LLMOps), security, and compliance.
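A back-of-the-envelope calculation shows why token-based inference cost is only one part of the picture. Every number in the sketch below is a placeholder chosen for illustration. None of them is a real vendor price or salary figure, and the function name is hypothetical.

```python
def monthly_inference_cost(requests_per_month, input_tokens, output_tokens,
                           price_in_per_1k, price_out_per_1k):
    """Token-based inference cost; input and output tokens are priced separately."""
    per_request = (input_tokens / 1000) * price_in_per_1k \
        + (output_tokens / 1000) * price_out_per_1k
    return requests_per_month * per_request


# Placeholder assumptions, not real prices.
inference = monthly_inference_cost(
    requests_per_month=100_000,
    input_tokens=1_500,      # prompt plus retrieved context per request
    output_tokens=300,       # generated answer per request
    price_in_per_1k=0.003,
    price_out_per_1k=0.015,
)

monthly_costs = {
    "inference": inference,
    "infrastructure": 2_000.0,   # storage, networking, vector database, ...
    "personnel": 25_000.0,       # share of engineering and review time
    "other": 1_500.0,            # licences, data, compliance
}

total = sum(monthly_costs.values())
for item, cost in monthly_costs.items():
    print(f"{item:>14}: {cost:>10.2f}  ({cost / total:.1%} of total)")
print(f"{'total':>14}: {total:>10.2f}")
```

Under these assumed numbers, inference is a small fraction of the monthly total. The split will look very different at other scales, which is the reason to model the total cost of ownership explicitly instead of looking at token prices alone.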

2.4. Ethical and Responsible AI Metrics

LLM-based applications are still novel, with many being mere proofs of concept. At the same time, they are becoming mainstream: I see AI integrated into so many applications I use daily, including Google, LinkedIn, the Amazon shopping app, WhatsApp, Instacart, etc. As the lines between human and AI interaction become blurrier, it becomes more essential that we adhere to responsible AI standards. The bigger problem is that these standards don’t exist today. Regulations around this are still being developed across the world (including the Executive Order from the White House). Hence, it’s crucial that application creators use their best judgment. Below are some of the key dimensions to keep in mind:

- Fairness and Bias: Measures whether the model’s outputs are fair and free from bias with respect to race, gender, ethnicity, and other dimensions.

- Toxicity: Measures the degree to which the model generates or amplifies harmful, offensive, or derogatory content.

- Explainability: Assesses how explainable the model’s decisions are.

- Hallucinations/Factual Consistency: Ensures the model generates factually correct responses, especially in critical industries like healthcare and finance.

- Privacy: Measures the model’s ability to handle PII/PHI/other sensitive data responsibly, and its compliance with regulations like GDPR.
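Some of these dimensions can be tracked with simple automated checks that flag outputs for closer review. As one illustration, the sketch below estimates a PII leak rate over a batch of outputs using two deliberately naive regular expressions (email addresses and US-style Social Security numbers). The patterns, the sample outputs, and the `pii_leak_rate` helper are assumptions made for this example. A production system would need a far more thorough detector and human review.

```python
import re

# Deliberately naive patterns, for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def pii_leak_rate(outputs):
    """Return (share of outputs with a match, list of (text, matched kinds))."""
    flagged = []
    for text in outputs:
        kinds = [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]
        if kinds:
            flagged.append((text, kinds))
    rate = len(flagged) / len(outputs) if outputs else 0.0
    return rate, flagged


# Made-up model outputs.
outputs = [
    "Your order has shipped and should arrive on Friday.",
    "You can reach the account owner at jane.doe@example.com.",
    "The SSN on file is 123-45-6789.",
    "Please contact support for further help.",
]

rate, flagged = pii_leak_rate(outputs)
print(f"PII leak rate: {rate:.0%}")
for text, kinds in flagged:
    print(kinds, "->", text)
```

The same pattern of scoring a batch of outputs and tracking the flagged share over time can be applied to toxicity or factual consistency, with the regular expressions swapped for an appropriate classifier or checker.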

3. So are these metrics enough?

Well… not really! While the four dimensions and metrics we discussed are essential and a good starting point, they are not always enough to capture the context, or unique user preferences. Given that humans are typically end consumers of the outputs, they are best positioned to evaluate the performance of LLM based applications, especially in complex or unknown scenarios. There are two ways to take human input:

- Direct via human-in-the-loop: Human evaluators provide qualitative feedback on the outputs of LLMs, focusing on fluency, coherence, and alignment with human expectations. This feedback is crucial for improving the human-like behaviour of models.

- Indirect via secondary metrics: A/B testing with end users can compare secondary metrics like user engagement and satisfaction. For example, we can evaluate hyper-personalized marketing that uses generative AI by comparing click-through rates and conversion rates against a baseline.
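When comparing click-through rates from an A/B test, it helps to check whether the observed difference could plausibly be noise. One common choice is a two-proportion z-test, sketched below using only the standard library. The click and user counts are invented, and this is just one of several reasonable tests. It assumes large, independent samples and that at least some, but not all, users clicked.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (rate_a, rate_b, z, two-sided p-value) for variant B vs. variant A."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Made-up counts: A = existing campaign, B = generative AI personalization.
rate_a, rate_b, z, p_value = two_proportion_z_test(
    clicks_a=200, users_a=10_000,
    clicks_b=260, users_b=10_000,
)
print(f"CTR A = {rate_a:.2%}, CTR B = {rate_b:.2%}, z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference in click-through rate is unlikely to be due to chance alone. It does not say whether the uplift is large enough to justify the extra cost and latency of the new variant, which remains a business judgment.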

4. Conclusion

As a consultant, I find that the answer to most questions is “It depends.” This is true for evaluation criteria for LLM applications too. Depending on the use case, industry, or function, one has to find the right balance of metrics across accuracy, latency, cost, and responsible AI. This should always be complemented by human evaluation to make sure that we test the application in a real-world scenario. For example, medical and financial use cases will value accuracy and safety as well as attribution to credible sources, whereas entertainment applications value creativity and user engagement. Cost will remain a critical factor while building the business case for an application, though the fast-dropping cost of LLM inference might lower barriers to entry soon. Latency is usually a limiting factor, and will require the right model selection as well as infrastructure optimization to maintain performance.

All views in this article are the Author’s and don’t represent an endorsement of any products or services.

Evaluating performance of LLM-based Applications was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
