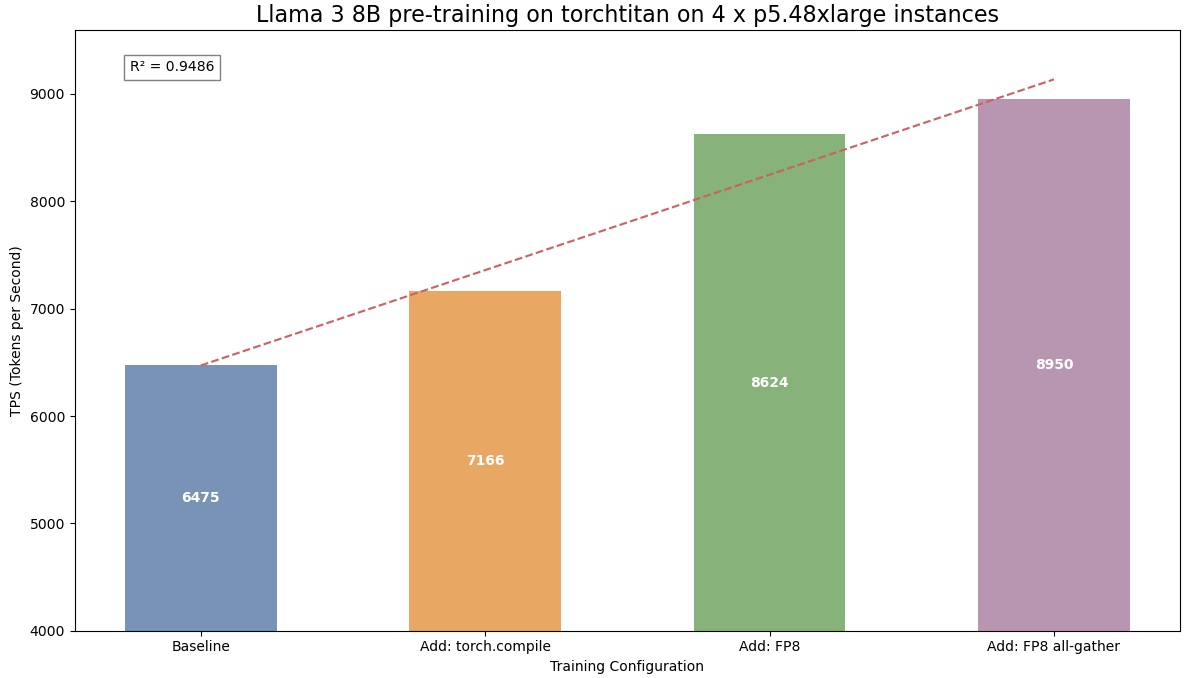

In this post, we collaborate with the PyTorch team at Meta to show how the torchtitan library accelerates and simplifies the pre-training of Meta Llama 3-like model architectures. We highlight the key torchtitan features that improve training efficiency, including FSDP2, torch.compile integration, and FP8 support.

Originally appeared here: Efficient Pre-training of Llama 3-like model architectures using torchtitan on Amazon SageMaker