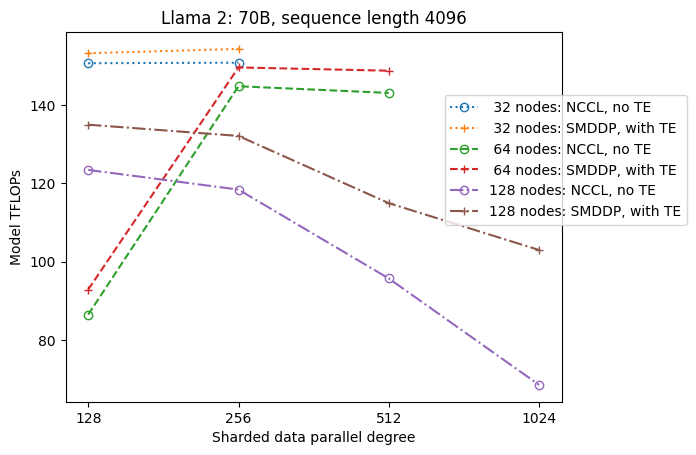

In this post, we explore the performance benefits of Amazon SageMaker (including SMP and SMDDP), and how you can use the library to train large models efficiently on SageMaker. We demonstrate the performance of SageMaker with benchmarks on ml.p4d.24xlarge clusters up to 128 instances, and FSDP mixed precision with bfloat16 for the Llama 2 model.

Originally appeared here:

Distributed training and efficient scaling with the Amazon SageMaker Model Parallel and Data Parallel Libraries