Designing the Relationship Between LLMs and User Experience

How to make your LLM do the right things, and do them right

A while ago, I wrote the article Choosing the right language model for your NLP use case on Medium [1]. It focused on the nuts and bolts of LLMs and, while rather popular, by now I realize it doesn’t actually say much about selecting LLMs. I wrote it at the beginning of my LLM journey and somehow figured that the technical details about LLMs, namely their inner workings and training history, would speak for themselves, allowing AI product builders to confidently select LLMs for specific scenarios.

Since then, I have integrated LLMs into multiple AI products. This allowed me to discover how exactly the technical makeup of an LLM determines the final experience of a product. It also strengthened my belief that product managers and designers need to have a solid understanding of how an LLM works “under the hood.” LLM interfaces are different from traditional graphical interfaces. The latter provide users with a (hopefully clear) mental model by displaying the functionality of a product in a rather explicit way, through buttons, menus, and forms. By contrast, LLM interfaces use free text as the main interaction format, offering much more flexibility. At the same time, they also “hide” the capabilities and the limitations of the underlying model, leaving it to the user to explore and discover them. Thus, a simple text field or chat window invites an infinite number of intents and inputs and can produce just as many different outputs.

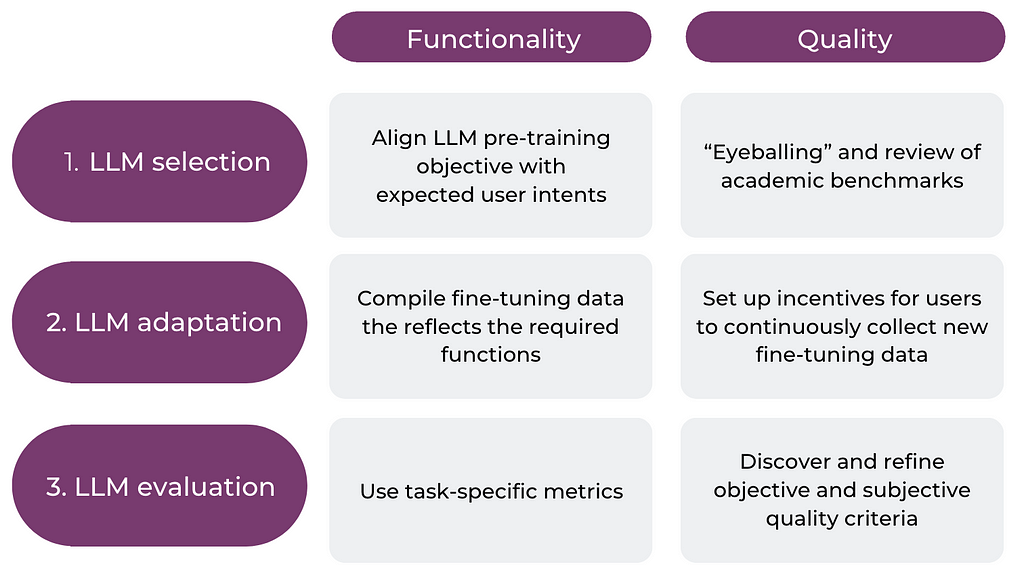

The responsibility for the success of these interactions is not (only) on the engineering side — rather, a big part of it should be assumed by whoever manages and designs the product. In this article, we will flesh out the relationship between LLMs and user experience, working with two universal ingredients that you can use to improve the experience of your product:

- Functionality, i.e., the tasks that are performed by an LLM, such as conversation, question answering, and sentiment analysis

- Quality with which an LLM performs the task, including objective criteria such as correctness and coherence, but also subjective criteria such as an appropriate tone and style

(Note: These two ingredients are part of any LLM application. Beyond these, most applications will also have a range of more individual criteria to be fulfilled, such as latency, privacy, and safety, which will not be addressed here.)

Thus, in Peter Drucker’s words, it’s about “doing the right things” (functionality) and “doing them right” (quality). Now, as we know, LLMs will never be 100% right. As a builder, you can approximate the ideal experience from two directions:

- On the one hand, you need to strive for engineering excellence and make the right choices when selecting, fine-tuning, and evaluating your LLM.

- On the other hand, you need to work with your users by nudging them towards intents covered by the LLM, managing their expectations, and having routines that fire off when things go wrong.

In this article, we will focus on the engineering part. The design of the ideal partnership with human users will be covered in a future article. First, I will briefly introduce the steps in the engineering process — LLM selection, adaptation, and evaluation — which directly determine the final experience. Then, we will look at the two ingredients — functionality and quality — and provide some guidelines to steer your work with LLMs to optimize the product’s performance along these dimensions.

A note on scope: In this article, we will consider the use of stand-alone LLMs. Many of the principles and guidelines also apply to LLMs used in RAG (Retrieval-Augmented Generation) and agent systems. For a more detailed consideration of the user experience in these extended LLM scenarios, please refer to my book The Art of AI Product Development [5].

The LLM engineering process

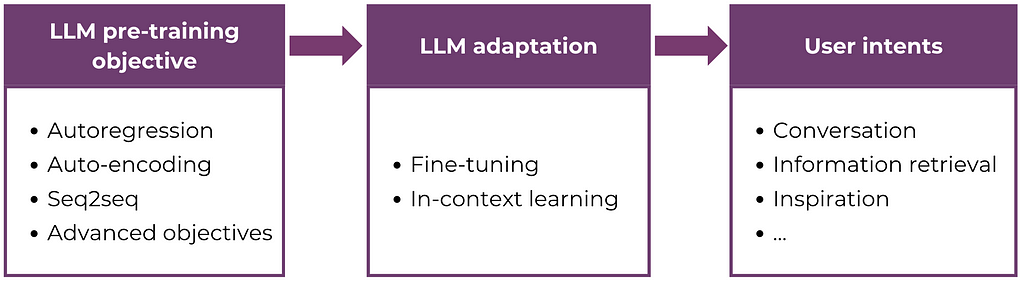

In the following, we will focus on the three steps of LLM selection, adaptation, and evaluation. Let’s consider each of these steps:

- LLM selection involves scoping your deployment options (in particular, open-source vs. commercial LLMs) and selecting an LLM whose training data and pre-training objective align with your target functionality. In addition, the more powerful the model you can select in terms of parameter size and training data quantity, the better its chances of achieving high quality.

- LLM adaptation via in-context learning or fine-tuning gives you the chance to close the gap between your users’ intents and the model’s original pre-training objective. Additionally, you can tune the model’s quality by incorporating the style and tone you would like your model to assume into the fine-tuning data.

- LLM evaluation means assessing the model continuously across its lifecycle. As such, it is not a final step at the end of a process but an ongoing activity that evolves and becomes more specific as you collect more insights and data on the model.
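To make in-context learning more tangible, here is a minimal Python sketch of how a few-shot prompt might be assembled: an instruction, a handful of demonstration pairs, and then the new user input. The names `Example` and `build_few_shot_prompt` are hypothetical, and the “Input:/Output:” layout is just one common convention; the sketch does not call any particular model API.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One demonstration pair shown to the model inside the prompt."""
    user_input: str
    desired_output: str


def build_few_shot_prompt(instruction: str, examples: list[Example], user_input: str) -> str:
    """Assemble an in-context learning prompt: instruction, demonstrations, new input.

    The model's weights stay untouched; the demonstrations only steer its
    next-token predictions toward the target task, tone, and format.
    """
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Input: {ex.user_input}")
        parts.append(f"Output: {ex.desired_output}")
        parts.append("")
    # End with an open "Output:" slot so the model continues with the answer.
    parts.append(f"Input: {user_input}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Translate the German text into Italian.",
        [Example("Guten Morgen", "Buongiorno"), Example("Danke schön", "Grazie mille")],
        "Wie geht es dir?",
    )
    print(prompt)
```

Swapping the demonstrations is cheap, which makes this approach convenient during early discovery; the trade-off is that every request carries the extra prompt tokens.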

The figure at the beginning of this section summarizes the process.

In real life, the three stages will overlap, and there can be back-and-forth between the stages. In general, model selection is more of a “one big decision.” Of course, you can shift from one model to another further down the road, and you should even do so when new, more suitable models appear on the market. However, these changes are expensive since they affect everything downstream. Past the discovery phase, you will not want to make them on a regular basis. By contrast, LLM adaptation and evaluation are highly iterative. They should be accompanied by continuous discovery activities where you learn more about the behavior of your model and your users. Finally, all three activities should be embedded into a solid LLMOps pipeline, which will allow you to integrate new insights and data with minimal engineering friction.

Now, let’s move to the second column of the chart, scoping the functionality of an LLM and learning how it can be shaped during the three stages of this process.

Functionality: responding to user intents

You might be wondering why we talk about the “functionality” of LLMs. After all, aren’t LLMs those versatile all-rounders that can magically perform any linguistic task we can think of? In fact, they are, as famously described in the paper Language Models Are Few-Shot Learners [2]. LLMs can learn new capabilities from just a couple of examples. Sometimes, their capabilities will even “emerge” out of the blue during normal training and, hopefully, be discovered by chance. This is because the task of language modeling is just as versatile as it is challenging; as a side effect, it equips an LLM with the ability to perform many other related tasks.

Still, the pre-training objective of LLMs is to generate the next word given the context of past words (OK, that’s a simplification: in auto-encoding, the LLM can work in both directions [3]). This is what a pre-trained LLM, motivated by an imaginary “reward,” will insist on doing once it is prompted. In most cases, there is quite a gap between this objective and a user who comes to your product to chat, get answers to questions, or translate a text from German to Italian. The landmark paper Climbing Towards NLU: On Meaning, Form, and Understanding in the Age of Data by Emily Bender and Alexander Koller [4] even argues that language models are generally unable to recover communicative intents and thus are doomed to work with incomplete meaning representations.
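The following toy sketch illustrates how the same sentence yields different training signals under autoregressive, auto-encoding, and sequence-to-sequence objectives. It is a deliberate simplification under stated assumptions: tokens are whitespace-split words, masking is purely random (BERT’s actual masking recipe is more involved), and the sequence-to-sequence case is shown as a plain input/output pair such as a translation. All function names are hypothetical.

```python
import random


def autoregressive_pairs(tokens):
    """Autoregressive objective (GPT-style): predict each token from everything to its left."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def auto_encoding_example(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Auto-encoding objective (BERT-style): hide random tokens and reconstruct them
    using context from both the left and the right."""
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(mask_token)
            targets[position] = token  # the model must recover this token
        else:
            corrupted.append(token)
    return corrupted, targets


def seq2seq_example(source_tokens, target_tokens):
    """Sequence-to-sequence objective: encode a complete input, then generate a separate output."""
    return {"encoder_input": source_tokens, "decoder_target": target_tokens}


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    for context, next_token in autoregressive_pairs(sentence)[:2]:
        print(context, "->", next_token)
    print(auto_encoding_example(sentence, mask_prob=0.3))
    print(seq2seq_example("guten morgen".split(), "buongiorno".split()))
```

The autoregressive pairs make the gap to user intents visible: nothing in them says “answer the question” or “translate,” which is exactly what adaptation has to add later.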

Thus, it is one thing to brag about amazing LLM capabilities in scientific research and demonstrate them on highly controlled benchmarks and test scenarios. Rolling out an LLM to an anonymous crowd of users with different AI skills and intents, some of them harmful, is a different kind of game. This is especially true once you understand that your product inherits not only the capabilities of the LLM but also its weaknesses and risks, and you (not a third-party provider) hold the responsibility for its behavior.

In practice, we have learned that it is best to identify and isolate discrete islands of functionality when integrating LLMs into a product. These functions can largely correspond to the different intents with which your users come to your product. For example, it could be:

- Engaging in conversation

- Retrieving information

- Seeking recommendations for a specific situation

- Looking for inspiration

Oftentimes, these can be further decomposed into more granular, potentially even reusable, capabilities. “Engaging in conversation” could be decomposed into:

- Provide informative and relevant conversational turns

- Maintain a memory of past interactions (instead of starting from scratch at every turn)

- Display a consistent personality
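The “maintain a memory” capability can be illustrated with a minimal sketch: a fixed-size window of recent turns that is rendered into each new prompt. The class name, the `role: text` layout, and the window size are assumptions for illustration; production systems often summarize or retrieve older turns instead of simply dropping them.

```python
from collections import deque


class ConversationMemory:
    """Keep the most recent turns so that each prompt carries conversational context."""

    def __init__(self, max_turns: int = 6):
        # A deque with maxlen silently discards the oldest turn once the window is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        """Record one turn, e.g. role="user" or role="assistant"."""
        self.turns.append((role, text))

    def render(self, new_user_message: str) -> str:
        """Build the prompt: remembered turns, the new message, and an open assistant slot."""
        history = [f"{role}: {text}" for role, text in self.turns]
        return "\n".join(history + [f"user: {new_user_message}", "assistant:"])


if __name__ == "__main__":
    memory = ConversationMemory(max_turns=4)
    memory.add("user", "My name is Ana.")
    memory.add("assistant", "Nice to meet you, Ana!")
    print(memory.render("What is my name?"))
```

A window this simple is cheap and predictable, but it forgets abruptly; the window size is therefore itself a product decision about how much the assistant “remembers.”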

Taking this more discrete approach to LLM capabilities provides you with the following advantages:

- ML engineers and data scientists can better focus their engineering activities (Figure 2) on the target functionalities.

- Communication about your product becomes on-point and specific, helping you manage user expectations and preserve trust, integrity, and credibility.

- In the user interface, you can use a range of design patterns, such as prompt templates and placeholders, to increase the chances that user intents are aligned with the model’s functionality.
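As a minimal sketch of this discrete approach, the snippet below registers a few hypothetical capabilities, each with its own prompt template containing a `{query}` placeholder, and routes a free-text request to one of them, falling back to open conversation. Keyword matching is only a placeholder assumption; a real product would more likely use a trained intent classifier or the LLM itself for routing.

```python
from dataclasses import dataclass


@dataclass
class Capability:
    """A discrete 'island' of functionality that the product explicitly supports."""
    name: str
    trigger_keywords: list[str]
    prompt_template: str  # {query} is filled with the user's text


# Hypothetical registry; the first matching capability wins.
CAPABILITIES = [
    Capability("retrieve_information", ["what", "who", "when", "explain"],
               "Answer the question factually and concisely:\n{query}"),
    Capability("recommend", ["recommend", "suggest", "should i"],
               "Give up to three recommendations for this situation:\n{query}"),
    Capability("inspire", ["idea", "inspire", "brainstorm"],
               "Brainstorm creative ideas for:\n{query}"),
]

FALLBACK = Capability("converse", [], "Continue the conversation helpfully:\n{query}")


def route(query: str) -> tuple[str, str]:
    """Map a free-text query to one supported capability and its filled prompt."""
    lowered = query.lower()
    for capability in CAPABILITIES:
        if any(keyword in lowered for keyword in capability.trigger_keywords):
            return capability.name, capability.prompt_template.format(query=query)
    return FALLBACK.name, FALLBACK.prompt_template.format(query=query)


if __name__ == "__main__":
    print(route("Can you recommend a laptop for travel?"))
```

Because the first match wins, the order of the registry is a design decision in its own right, and each capability’s template can be evaluated and tuned separately.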

Guidelines for ensuring the right functionality

Let’s summarize some practical guidelines to make sure that the LLM does the right thing in your product:

- During LLM selection, make sure you understand the basic pre-training objective of the model. There are three basic pre-training objectives (auto-encoding, autoregression, sequence-to-sequence), and each of them influences the behavior of the model.

- Many LLMs are additionally trained for a more advanced objective, such as conversation or executing explicit instructions (instruction fine-tuning). Selecting a model that is already prepared for your task will grant you an efficient head start, reducing the amount of downstream adaptation and fine-tuning you need to do to achieve satisfactory quality.

- LLM adaptation via in-context learning or fine-tuning gives you the opportunity to close the gap between the original pre-training objective and the user intents you want to serve.

- During the initial discovery, you can use in-context learning to collect initial usage data and sharpen your understanding of relevant user intents and their distribution.

- In most scenarios, in-context learning (i.e., prompting with instructions and examples) is not sustainable in the long term: every request has to carry the extra prompt tokens, which adds cost and latency. Over time, you can use your new data and learnings as a basis to fine-tune the weights of the model.

- During model evaluation, make sure to apply task-specific metrics. For example, Text2SQL LLMs (cf. this article) can be evaluated using metrics like execution accuracy and test-suite accuracy, while summarization can be evaluated using similarity-based metrics such as ROUGE.
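To illustrate a task-specific metric, here is a minimal sketch of execution accuracy for Text2SQL using Python’s built-in `sqlite3` module: a predicted query counts as correct if it executes and returns the same rows as the reference query. Ignoring row order and treating non-executing SQL as a miss are simplifying assumptions; test-suite accuracy goes further by checking the queries against several database instances rather than a single one.

```python
import sqlite3
from collections import Counter


def execution_match(conn, predicted_sql, gold_sql):
    """True if the predicted query runs and returns the same multiset of rows as the gold query.

    Row order is ignored, which is reasonable unless the question requires ORDER BY.
    """
    try:
        predicted_rows = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # SQL that does not execute counts as a miss
    gold_rows = conn.execute(gold_sql).fetchall()
    return Counter(predicted_rows) == Counter(gold_rows)


def execution_accuracy(conn, pairs):
    """Fraction of (predicted, gold) query pairs whose execution results match."""
    if not pairs:
        return 0.0
    hits = sum(execution_match(conn, predicted, gold) for predicted, gold in pairs)
    return hits / len(pairs)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                     [(1, "ana", 10.0), (2, "ben", 25.0), (3, "ana", 5.0)])
    pairs = [
        ("SELECT SUM(amount) FROM orders WHERE customer='ana'",
         "SELECT SUM(amount) FROM orders WHERE customer = 'ana'"),
        ("SELECT customer FROM orders", "SELECT DISTINCT customer FROM orders"),
    ]
    print(execution_accuracy(conn, pairs))
```

Comparing results rather than query strings means that differently written but equivalent SQL still counts as correct, which is closer to what users actually care about.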

These are just short snapshots of the lessons we learned when integrating LLMs. My upcoming book The Art of AI Product Development [5] contains deep dives into each of the guidelines along with numerous examples. For the technical details behind pre-training objectives and procedures, you can refer to this article.

OK, so you have gained an understanding of the intents with which your users come to your product and “motivated” your model to respond to these intents. You might even have put the LLM out into the world in the hope that it will kick off the data flywheel. Now, if you want to keep your well-disposed early users and acquire new ones, you need to quickly ramp up on our second ingredient, namely quality.

Achieving a high quality

In the context of LLMs, quality can be decomposed into an objective and a subjective component. The objective component tells you when and why things go wrong (i.e., the LLM makes explicit mistakes). The subjective component is more subtle and emotional, reflecting the alignment with your specific user crowd.

Objective quality criteria

Using language to communicate comes naturally to humans. Language is ingrained in our minds from the beginning of our lives, and we have a hard time imagining how much effort it takes to learn it from scratch. Even the challenges we experience when learning a foreign language can’t compare to the training of an LLM. The LLM starts from a blank slate, while our learning process builds on an incredibly rich basis of existing knowledge about the world and about how language works in general.

When working with an LLM, we should constantly remain aware of the many ways in which things can go wrong:

- The LLM might make linguistic mistakes.

- The LLM might slack on coherence, logic, and consistency.

- The LLM might have insufficient world knowledge, leading to wrong statements and hallucinations.

These shortcomings can quickly turn into showstoppers for your product, since output quality is a central determinant of the user experience of an LLM product. For example, one of the major determinants of the “public” success of ChatGPT was that it was indeed able to generate correct, fluent, and relatively coherent text across a large variety of domains. Earlier generations of LLMs were not able to achieve this objective quality. Most pre-trained LLMs that are used in production today do have the capability to generate language. However, their performance on criteria like coherence, consistency, and world knowledge can vary considerably. To achieve the experience you are aiming for, it is important to clearly prioritize these requirements and to select and adapt LLMs accordingly.
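One way to make these priorities explicit when comparing candidate models is a weighted rubric over the objective criteria listed above. The sketch below is hypothetical throughout: the criterion names, the 1 to 5 rating scale, and the weights are placeholders you would set for your own product, and the ratings would come from your own reviewers.

```python
from statistics import mean

# Hypothetical priorities for a product where factual correctness matters most.
WEIGHTS = {"linguistic": 0.2, "coherence": 0.3, "world_knowledge": 0.5}


def weighted_quality(ratings, weights=WEIGHTS):
    """Average each criterion over reviewers, then combine the averages by priority weight.

    `ratings` maps each criterion name to a list of reviewer scores (e.g. 1 to 5).
    """
    total_weight = sum(weights.values())
    return sum(weights[c] * mean(ratings[c]) for c in weights) / total_weight


def rank_models(candidates, weights=WEIGHTS):
    """Return (model name, weighted score) pairs sorted from best to worst."""
    scored = [(name, weighted_quality(r, weights)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "model_a": {"linguistic": [5, 5], "coherence": [4, 4], "world_knowledge": [2, 2]},
        "model_b": {"linguistic": [4, 4], "coherence": [4, 4], "world_knowledge": [4, 4]},
    }
    print(rank_models(candidates))
```

Changing the weights can flip the ranking, which is the point: the same candidates can be the right or the wrong choice depending on what your product prioritizes.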

Subjective quality criteria

Venturing into the more nuanced subjective domain, you want to understand and monitor how users feel about your product. Do they feel good and trusting, and do they get into a state of flow when they use it? Or do they go away with feelings of frustration, inefficiency, and misalignment? A lot of this hinges on individual nuances of culture, values, and style. If you are building a copilot for junior developers, you hardly want it to speak the language of senior executives and vice versa.

For the sake of example, imagine you are a product marketer. You have spent a lot of time iterating with a fellow engineer on an LLM that helps you with content generation. At some point, you find yourself chatting with the UX designer on your team and bragging about your new AI assistant. Your colleague doesn’t see the need for so much effort. He regularly uses ChatGPT to assist with the creation and evaluation of UX surveys and is very satisfied with the results. You counter that ChatGPT’s outputs are too generic and monotonous for your storytelling and writing tasks. In fact, you used it at the beginning and got quite embarrassed because, at some point, your readers started to recognize the characteristic ChatGPT flavor. That was a slippery episode in your career, after which you decided you needed something more sophisticated.

There is no right or wrong in this discussion. ChatGPT is good for straightforward factual tasks where style doesn’t matter that much. By contrast, you as a marketer need an assistant that helps you craft high-quality, persuasive communications that speak the language of your customers and reflect the unique DNA of your company.

These subjective nuances can ultimately define the difference between an LLM that is useless because its outputs need to be rewritten anyway and one that is “good enough” so users start using it and feed it with suitable fine-tuning data. The holy grail of LLM mastery is personalization — i.e., using efficient fine-tuning or prompt tuning to adapt the LLM to the individual preferences of any user who has spent a certain amount of time with the model. If you are just starting out on your LLM journey, these details might seem far off — but in the end, they can help you reach a level where your LLM delights users by responding in the exact manner and style that is desired, spurring user satisfaction and large-scale adoption and leaving your competition behind.

Guidelines

Here are our tips for managing the quality of your LLM:

- Be alert to different kinds of feedback. The quest for quality is continuous and iterative — you start with a few data points and a very rough understanding of what quality means for your product. Over time, you flesh out more and more details and learn which levers you can pull to improve your LLM.

- During model selection, you still have a lot of discovery to do — start with “eyeballing” and testing different LLMs with various inputs (ideally by multiple team members).

- Your engineers will also be evaluating academic benchmarks and evaluation results that are published together with the model. However, keep in mind that these are only rough indicators of how the model will perform in your specific product.

- At the beginning, perfectionism isn’t the answer. Your model should be just good enough to attract users who will start supplying it with relevant data for fine-tuning and evaluation.

- Bring your team and users together for qualitative discussions of LLM outputs. As they use language to judge and debate what is right and what is wrong, you can gradually uncover their objective and emotional expectations.

- Make sure to have a solid LLMOps pipeline in place so you can integrate new data smoothly, reducing engineering friction.

- Don’t stop — at later stages, you can shift your focus toward nuances and personalization, which will also help you sharpen your competitive differentiation.
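The data flywheel behind several of these guidelines can be sketched in a few lines: user feedback on LLM outputs is split into fine-tuning examples and evaluation cases, then serialized as JSON Lines. The record fields, the routing rules, and the `prompt`/`completion` schema are assumptions for illustration; check the format that your fine-tuning tooling actually expects.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackRecord:
    prompt: str
    output: str
    rating: int                          # hypothetical scale: +1 thumbs up, -1 thumbs down
    edited_output: Optional[str] = None  # the user's corrected version, if they rewrote it


def split_feedback(records):
    """Route feedback into fine-tuning examples and evaluation cases."""
    finetune, evaluation = [], []
    for record in records:
        if record.edited_output:
            # A user rewrite shows the desired content, tone, and style directly.
            finetune.append({"prompt": record.prompt, "completion": record.edited_output})
        elif record.rating > 0:
            finetune.append({"prompt": record.prompt, "completion": record.output})
        else:
            # Neutral or negative outputs without a correction become regression cases.
            evaluation.append({"prompt": record.prompt, "rejected_output": record.output})
    return finetune, evaluation


def to_jsonl(rows):
    """Serialize one JSON object per line, a layout many fine-tuning pipelines accept."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)


if __name__ == "__main__":
    records = [
        FeedbackRecord("Write a tagline for our app", "Work smarter.", 1),
        FeedbackRecord("Summarize the release notes", "Various fixes.", -1,
                       "Faster sync and a redesigned editor."),
    ]
    finetune, evaluation = split_feedback(records)
    print(to_jsonl(finetune))
```

Keeping the uncorrected failures as evaluation cases means that each new model version is checked against exactly the situations where the previous one disappointed users.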

To sum up: assuming responsibility

Pre-trained LLMs are highly convenient: they make AI accessible to everyone by sparing us the huge engineering, computation, and infrastructure investment needed to train a large initial model. Once published, they are ready to use, and we can plug their amazing capabilities into our product. However, when using a third-party model in your product, you inherit not only its power but also the many ways in which it can and will fail. When things go wrong, the last thing you want to do to maintain integrity is to blame an external model provider, your engineers, or, worse, your users.

Thus, when building with LLMs, you should not only look for transparency into the model’s origins (training data and process) but also build a causal understanding of how its technical makeup shapes the experience offered by your product. This will allow you to find the sensitive balance between kicking off a robust data flywheel at the beginning of your journey and continuously optimizing and differentiating the LLM as your product matures toward excellence.

References

[1] Janna Lipenkova (2022). Choosing the right language model for your NLP use case, Medium.

[2] Tom B. Brown et al. (2020). Language Models are Few-Shot Learners.

[3] Jacob Devlin et al. (2018). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

[4] Emily M. Bender and Alexander Koller (2020). Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data.

[5] Janna Lipenkova (upcoming). The Art of AI Product Development, Manning Publications.

Note: All images are by the author, except when noted otherwise.

Designing the relationship between LLMs and user experience was originally published in Towards Data Science on Medium.
