Pet Project for Data/Analytics Engineers: Explore Modern Data Stack Tools — dbt Core, Snowflake, Fivetran, GitHub Actions.

Here is a simple and fast pet project for Data/Analytics Engineers, who want to kick the tires on Modern Data Stack tools including dbt Core, Snowflake, Fivetran, and GitHub Actions. This hands-on experience will allow you to develop an end-to-end data lifecycle, from extracting data from your Google Calendar to presenting it in a Snowflake analytics dashboard. In this article, I’ll walk you through the project, sharing insights and tips along the way. See Github repo.

Technical Overview

The project architecture is depicted as follows:

Google Calendar -> Fivetran -> Snowflake -> dbt -> Snowflake Dashboard, with GitHub Actions orchestrating the deployment.

Referencing Joe Reis’s “Fundamentals of Data Engineering,” let’s review our project in alignment with the defined stages of the data lifecycle:

- Data Generation — Google Calendar, Fivetran

If you’re a Google Calendar user, chances are you’ve accumulated a wealth of data there. Now you can easily retrieve it from your account by leveraging “data movement platforms” like Fivetran. This tool automates ELT (Extract, Load, Transform) process, integrating your data from the source system of Google Calendar to our Snowflake data warehouse.

As of now, Fivetran offers 14-day free trial — link. Registration is very straightforward. - Storage — Snowflake

Snowflake, a cloud-based data warehouse tailored for analytical needs, will serve as our data storage solution. The data volume we will deal with is small, so we will not try to overkill with data partitioning, time travel, Snowpark, and other Snowflake advanced capabilities. However, we will pay particular attention to Access Control (this will be used for dbt access). You need to set up a trial account which provides you with 30 days of free usage and 400$ limit for the Enterprise edition. - Ingestion — Fivetran

Data ingestion can be configured from both Fivetran and Snowflake using the Partner Connect feature. Choose your preferred method and set up the Google Calendar connector. After the initial sync, you can access your data from the Snowflake UI. You can visit the connector webpage to see the schema diagram here.

A new database will be created specifically for Fivetran sync, and the corresponding warehouse to run SQL workloads. As you should know, Snowflake is built with decoupled Storage and Compute, hence the costs are separated as well. As a best practice, you should use different warehouses for different workloads (ad-hoc, sync, BI analytics) or different environments (dev, prod).

- Transformation — dbt Core

With the data residing in Snowflake (and automatically syncing every 6 hours by default), we move to the Transformation stage using dbt Core. dbt (data build tool) facilitates modularization of SQL queries, enabling the reuse and version control of SQL workflows, just like software code is typically managed. There are two ways to access dbt: dbt Cloud and dbt Core. dbt Cloud is a paid cloud-based version of the service and dbt Core is a python package providing all functionality you can use for free.

Install dbt Core on your machine, initialize the project using “dbt init” command in CLI, and set up the Snowflake connection. See the example of my profiles.yml file.

To be able to connect to Snowflake, we will also need to run a bunch of DCL (Data Control Language) SQL commands, find them here. Following the least privilege principle, we will create a separate user for dbt and give it access only to the source database (where Fivetran syncs data) and development, and production databases (where we will sink transformed data). - Following the best practice structuring approach, you need to create three folders representing the staging, intermediate, and marts layers of your data transformations. Here you can experiment with your models, but also you can copy my examples for Google Calendar data.

In the repo, you will find the “sources.yml” file listing all tables in the Google Calendar schema. There are 3 staging models created (event.sql,

attendee.sql, recurrence.sql), 1 transform model (utc_event.sql), and 1 mart model (event_attendee_summary.sql).

The important features of dbt are Jinja and macros that you can weave into SQL, enhancing its impact and reusability.

- Set data expectations using generic or singular tests in dbt to ensure data quality. Some data quality rules are placed in the “source.yml” file,

as well as in the “/tests” folder. During the “dbt build” command these data quality checks will be run along with models build to prevent data corruption.

- Explore dbt’s Snapshot feature for change data capture and type-2 Slowly Changing Dimensions. In our example, we capture changes in the “recurrence” table.

- Take a while to generate dbt documentation using the “dbt docs generate” command. You will see the data lineage graph and metadata which is automatically created from your project. You can further enhance it by adding descriptions to data entities in your .yml files.

Good documentation provides better data discoverability and governance.

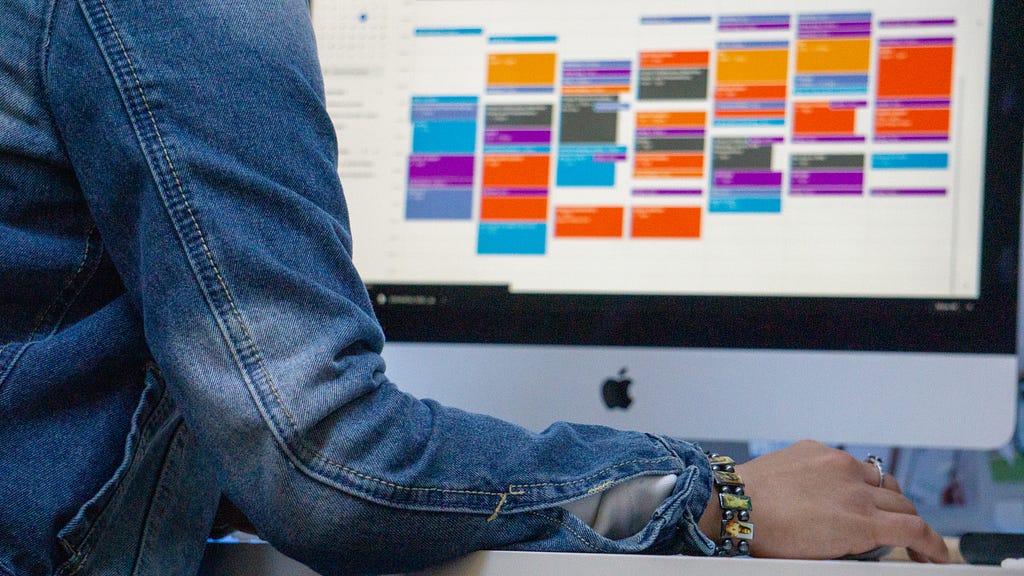

- Serving — Snowflake Dashboard

Finally, visualize your transformed data using Snowflake Dashboards. Create a dashboard in the Snowflake UI and experiment with tiles (plots) based on your SQL queries.

- Deploying — GitHub Actions

While dbt Cloud offers an easy deployment option, we’ll use GitHub Actions workflows for our dbt Core project. You need to create a workflow .yml file which will be triggered whenever changes are pushed to the GitHub dbt repo, running specified actions. In my example workflow, you can see a two-step deployment process: dbt build for the development environment and in case of success, also dbt

build for the production environment.

Note: replace secrets like Snowflake account and password with GitHub secrets. For that, on your repo webpage, go to Settings -> Secrets and Variables -> Actions.

Every time “master” branch gets updated, you can see the workflow started in your Actions tab on the repo:

In this project, we’ve just scratched the surface of various technologies in the modern data engineering stack. It’s not only a practical accomplishment but also an excellent starting point for deeper exploration. Thank you for reading, and happy coding!

If you reached the end of the article, maybe you found it valuable and may want to connect with me on LinkedIn. I am open to opportunities!

Unless otherwise noted, all images are by the author.

References:

[1] Reis, J. (2022). Fundamentals of Data Engineering: Plan and Build Robust Data Systems. O’Reilly Media.

dbt Core, Snowflake, and GitHub Actions: pet project for Data Engineers was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

dbt Core, Snowflake, and GitHub Actions: pet project for Data Engineers

Go Here to Read this Fast! dbt Core, Snowflake, and GitHub Actions: pet project for Data Engineers