Just because it was minimized doesn’t mean it’s secure!

Based on our paper “The Data Minimization Principle in Machine Learning” by Prakhar Ganesh, Cuong Tran, Reza Shokri, and Ferdinando Fioretto

The Data Minimization Principle

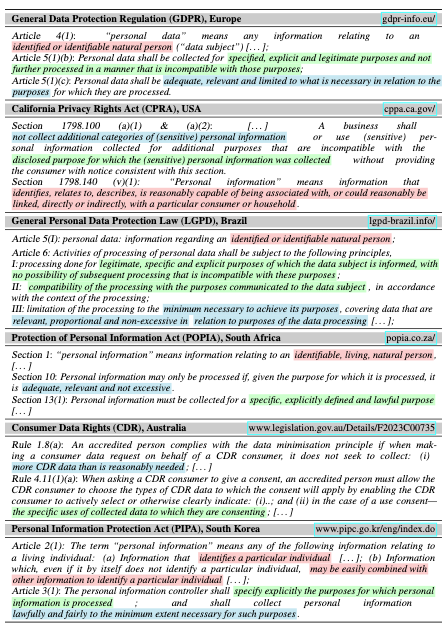

The proliferation of data-driven systems and ML applications escalates a number of privacy risks, including those related to unauthorized access to sensitive information. In response, data protection frameworks around the world, such as the European Union’s General Data Protection Regulation (GDPR), the California Privacy Rights Act (CPRA), and Brazil’s General Data Protection Law (LGPD), have adopted data minimization as a key principle to mitigate these risks.

At its core, the data minimization principle requires organizations to collect, process, and retain only personal data that is adequate, relevant, and limited to what is necessary for specified objectives. It is grounded in the expectation that not all data is essential, and that holding non-essential data only heightens the risk of information leakage. The principle builds on two core pillars: purpose limitation and data relevance.

Purpose Limitation

Data protection regulations mandate that data be collected for a legitimate, specific, and explicit purpose (LGPD, Brazil) and prohibit using the collected data for any purpose incompatible with the one disclosed (CPRA, USA). Thus, data collectors must define a clear, legal objective before collecting data and must use the data solely for that objective. In an ML setting, this purpose can be seen as collecting data to train models that achieve optimal performance on a given task.

Data Relevance

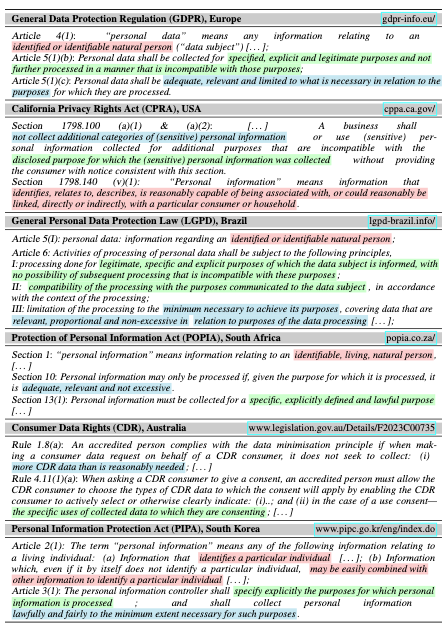

Regulations like the GDPR require that all collected data be adequate, relevant, and limited to what is necessary for the purposes it was collected for. In other words, data minimization aims to remove data that does not serve the purpose defined above. In ML contexts, this translates to retaining only data that contributes to the performance of the model.
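
As a rough illustration of “retaining only data that contributes to the performance of the model,” here is a minimal Python sketch that greedily drops whole features from a toy dataset for as long as a simple classifier’s accuracy stays within a tolerance of its accuracy on the full data. The nearest-centroid classifier, the `tolerance` parameter, the helper names, and the data are all hypothetical choices for illustration; this is a simple feature-level heuristic, not the minimization procedure used in the paper.

```python
from statistics import mean


def fit_centroids(X, y, features):
    """Per-class mean of each kept feature (a nearest-centroid 'model')."""
    return {
        label: {f: mean(x[f] for x, t in zip(X, y) if t == label) for f in features}
        for label in sorted(set(y))
    }


def accuracy(X, y, features):
    """Accuracy of a nearest-centroid classifier that only sees `features`."""
    cents = fit_centroids(X, y, features)

    def predict(x):
        # Closest class centroid by squared Euclidean distance on the kept features.
        return min(cents, key=lambda c: sum((x[f] - cents[c][f]) ** 2 for f in features))

    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def minimize_features(X, y, tolerance=0.0):
    """Greedily drop features while accuracy stays within `tolerance` of the baseline."""
    kept = list(range(len(X[0])))
    baseline = accuracy(X, y, kept)
    changed = True
    while changed and len(kept) > 1:
        changed = False
        for f in kept:
            trial = [g for g in kept if g != f]
            if accuracy(X, y, trial) >= baseline - tolerance:
                kept, changed = trial, True
                break  # rescan the smaller feature set from the start
    return kept


# Toy data: feature 0 separates the classes, feature 1 is noise, feature 2 is constant.
X = [[0.0, 0.5, 0.3], [0.1, 0.9, 0.3], [0.2, 0.1, 0.3],
     [1.0, 0.4, 0.3], [0.9, 0.8, 0.3], [0.8, 0.2, 0.3]]
y = [0, 0, 0, 1, 1, 1]
print(minimize_features(X, y))  # [0]
```

The noisy and constant features are dropped while the informative one survives. For simplicity the sketch scores accuracy on the training data itself; a more careful version would measure it on held-out data.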

Privacy expectations through minimization

As you might have already noticed, there is an implicit expectation of privacy through minimization in data protection regulations. The data minimization principle has even been hailed by many in the public discourse (EDPS, Kiteworks, The Record, Skadden, k2view) as a principle to protect privacy.

The EU AI Act states in Recital 69, “The right to privacy and to protection of personal data must be guaranteed throughout the entire lifecycle of the AI system. In this regard, the principles of data minimisation and data protection by design and by default, as set out in Union data protection law, are applicable when personal data are processed”.

However, this expectation of privacy from minimization overlooks a crucial aspect of real-world data: the inherent correlations among features! Information about individuals is rarely isolated, so merely minimizing data may still allow what was removed to be reconstructed with high confidence. This creates a gap: individuals and organizations relying on current attempts to operationalize data minimization might expect improved privacy, even though the framework they use is concerned with minimization alone.
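
To see why correlations matter, here is a minimal sketch, assuming an adversary holds some auxiliary reference data (made up below) in which both a retained feature and a removed feature are observed. Fitting an ordinary least-squares line from one to the other is enough to estimate the removed value for a minimized record. The feature names and numbers are hypothetical.

```python
from statistics import mean

# Hypothetical auxiliary data where both features are observed:
# (retained feature, removed feature) = (height_cm, weight_kg).
reference = [(150, 50), (160, 56), (170, 63), (180, 69), (190, 76)]


def fit_line(pairs):
    """Ordinary least-squares fit of removed = slope * retained + intercept."""
    a_mean = mean(a for a, _ in pairs)
    b_mean = mean(b for _, b in pairs)
    sxy = sum((a - a_mean) * (b - b_mean) for a, b in pairs)
    sxx = sum((a - a_mean) ** 2 for a, _ in pairs)
    slope = sxy / sxx
    return slope, b_mean - slope * a_mean


slope, intercept = fit_line(reference)

# A minimized record: weight_kg was removed, height_cm was kept.
minimized_record = {"height_cm": 175}
estimate = slope * minimized_record["height_cm"] + intercept
print(f"estimated weight_kg: {estimate:.2f}")
```

With this toy data the estimate comes out at about 66 kg: the attribute was removed from the record, but its correlation with what was kept still leaks it.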

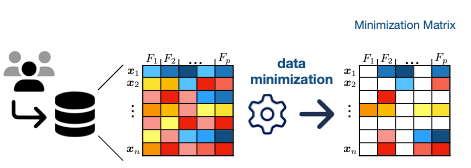

The Correct Way to Talk about Privacy

Privacy auditing often involves performing attacks to assess real-world information leakage. These attacks are powerful tools for exposing potential vulnerabilities: by simulating realistic scenarios, auditors can evaluate the effectiveness of privacy protection mechanisms and identify where sensitive information may be revealed.

Adversarial attacks that might be relevant in this situation include reconstruction and re-identification attacks. Reconstruction attacks aim to recover missing information in a target dataset, while re-identification attacks aim to link partial or anonymized records back to the individuals they describe.
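
Re-identification can be as simple as a classic linkage attack. The sketch below, in which every record, name, and field is hypothetical, joins a minimized release against auxiliary data on the quasi-identifiers that minimization kept, and flags any released record that matches exactly one known person.

```python
from collections import defaultdict

# Hypothetical minimized release: names removed, but quasi-identifiers
# kept (e.g., because they helped the model).
released = [
    {"zip": "02139", "birth_year": 1985, "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1990, "diagnosis": "diabetes"},
    {"zip": "94110", "birth_year": 1985, "diagnosis": "flu"},
]

# Hypothetical auxiliary data (e.g., a public roster) that includes names.
auxiliary = [
    {"name": "Alice", "zip": "02139", "birth_year": 1985},
    {"name": "Bob", "zip": "02139", "birth_year": 1990},
    {"name": "Carol", "zip": "94110", "birth_year": 1985},
    {"name": "Dave", "zip": "94110", "birth_year": 1985},
]

QUASI_IDENTIFIERS = ("zip", "birth_year")


def link(released, auxiliary, keys=QUASI_IDENTIFIERS):
    """Map names to sensitive values for released records with a unique auxiliary match."""
    index = defaultdict(list)
    for person in auxiliary:
        index[tuple(person[k] for k in keys)].append(person["name"])
    matches = {}
    for record in released:
        candidates = index[tuple(record[k] for k in keys)]
        if len(candidates) == 1:  # exactly one candidate: re-identified
            matches[candidates[0]] = record["diagnosis"]
    return matches


print(link(released, auxiliary))  # {'Alice': 'asthma', 'Bob': 'diabetes'}
```

Alice and Bob are re-identified; the third record matches both Carol and Dave, so it stays ambiguous in this toy example.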

The gap between Data Minimization and Privacy

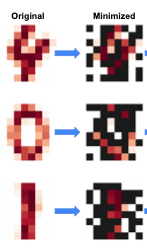

Consider the example of minimizing image data by removing pixels that do not contribute to the model’s performance. Solving that optimization yields minimized data that looks something like the figure above.

The trends in this example are interesting. Notice that the central vertical line is preserved in the images of the digit ‘1’, while the outer curves are retained for ‘0’. In other words, even though 50% of the pixels are removed, hardly any information seems to be lost. One can even demonstrate this by applying a very simple reconstruction attack based on data imputation.
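
A minimal sketch of such an imputation attack, on toy 3×3 binary “digits” rather than real images: each removed pixel is filled with the thresholded mean of that pixel across records of the same class where it was kept. That the labels survive minimization (they are the training target) is an assumption of this sketch, and the masks are arbitrary rather than the output of an actual minimization; per-class mean imputation is just one simple choice of attack.

```python
from statistics import mean

# Toy 3x3 binary "digits", flattened row by row (hypothetical data).
ONE = [0, 1, 0, 0, 1, 0, 0, 1, 0]
ZERO = [1, 1, 1, 1, 0, 1, 1, 1, 1]

# Minimized records: None marks a removed pixel. The masks are arbitrary,
# and labels are assumed to be retained because they are the training target.
minimized = [
    ("1", [None, 1, None, 0, 1, None, None, 1, 0]),
    ("1", [0, None, 0, None, 1, 0, 0, None, None]),
    ("0", [1, None, 1, None, 0, None, 1, None, 1]),
    ("0", [None, 1, None, 1, None, 1, None, 1, None]),
]


def pixel_means(rows):
    """Mean of each pixel over the rows where it was kept (0.0 if never kept)."""
    means = []
    for i in range(len(rows[0])):
        observed = [r[i] for r in rows if r[i] is not None]
        means.append(mean(observed) if observed else 0.0)
    return means


def impute(records):
    """Fill removed pixels with the thresholded per-class mean of that pixel."""
    stats = {
        label: pixel_means([px for lab, px in records if lab == label])
        for label in {lab for lab, _ in records}
    }
    return [
        (label, [p if p is not None else int(stats[label][i] >= 0.5) for i, p in enumerate(px)])
        for label, px in records
    ]


truth = {"1": ONE, "0": ZERO}
reconstructed = impute(minimized)
exact = sum(px == truth[label] for label, px in reconstructed)
print(f"{exact}/{len(reconstructed)} images recovered exactly")  # 4/4 images recovered exactly
```

Because the toy images within a class are identical, every image is recovered exactly; on real data the recovery would be approximate, but the mechanism is the same.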

Despite minimizing the dataset by 50%, the images can still be reconstructed using overall statistics. This provides a strong indication of privacy risks and suggests that a minimized dataset does not equate to enhanced privacy!

So What Can We Do?

While data protection regulations aim to limit data collection with an expectation of privacy, current operationalizations of minimization fall short of providing robust privacy safeguards. Notice, however, that this is not to say that minimization is incompatible with privacy; instead, the emphasis is on the need for approaches that incorporate privacy into their objectives rather than treating it as an afterthought.

We provide a deeper empirical exploration of data minimization and its misalignment with privacy, along with potential solutions, in our paper. We seek to answer a critical question: “Do current data minimization requirements in various regulations genuinely meet privacy expectations in legal frameworks?” Our evaluations reveal that the answer is, unfortunately, no.

Data Minimization Does Not Guarantee Privacy was originally published in Towards Data Science on Medium.
