The dos and don’ts of processing data in the age of AI

Beyond profits: Giving vs gaining in the digital age

The digital economy was built on the wonderful promise of equal, fast, and free access to knowledge and information. A long time has passed since that promise was made. Instead of the promised equality, we got power imbalances amplified by network effects that lock users in to the providers of the most popular services. At first glance, it might still appear that users are not paying anything. But this is where a second look is worth it, because they are paying. We all are. We are giving away our data (and a lot of it) simply to access the services in question, while their providers make astronomical profits on the other side of this unbalanced equation. This applies not only to well-established social media networks but also to the ever-growing number of AI tools and services out there.

In this article, we will take a full ride on this wild slide, considering both the perspective of the users and that of the providers. The current reality, where most service providers rely on dark patterns to get their hands on as much data as possible, is but one alternative. Unfortunately, it is the one we are all living in. To see what some of the others might look like, we’ll start off by considering the so-called technology acceptance model. This will help us determine whether the users are actually accepting the rules of the game or just riding the AI hype no matter the consequences. Once we’ve cleared that up, we will turn to what happens afterwards with all the (so generously given away) data. Finally, we will consider some practical steps and best-practice solutions for those AI developers who want to do better.

a. Technology acceptance or sleazing your way to consent?

The technology acceptance model is by no means a new concept. Quite the contrary: the theory has been under discussion since 1989, when Fred D. Davis introduced it in his paper Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology.[1] As the title hints, the gist of the idea is that the users’ perception of how useful a technology is, together with their experience when interacting with it, are the two crucial components determining how likely it is that they will agree to just about anything to be able to actually use it.

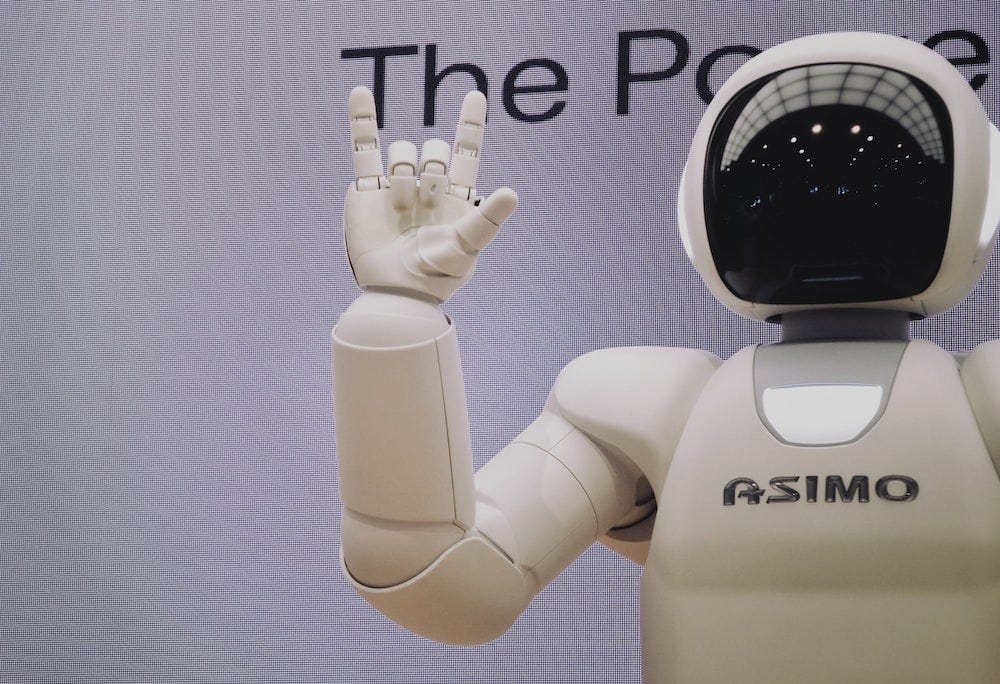

When it comes to many AI technologies, one does not need to think for too long to see that this is the case. The very fact that we call many of these AI systems ‘tools’ is enough to suggest that we do perceive them as useful. If anything, then at least to pass the time. Furthermore, the law of the market basically mandates that only the most user-friendly and aesthetically pleasing apps will make their way to a large-scale audience.

Nowadays, we can add two more things to Davis’s equation: network effects and the ‘AI hype’. So now, not only are you a caveman if you have never let ChatGPT correct your spelling or draft you a polite email, but you are also unable to participate in many of the conversations happening all around you, you cannot understand half of the news hitting the headlines, and you appear to be losing time while everybody else is helping themselves out with these tools. How is that for motivation to accept just about anything presented to you, even more so when it is nicely packaged in a pretty graphical user interface?

b. Default settings — forcefully altruistic

As already hinted, it appears that we are rather open to giving all our data away to the developers of many AI systems. We have left our breadcrumbs all over the internet, have neither an overview of them nor control over them, and apparently have to tolerate commercial actors collecting those breadcrumbs and using them to make fried chicken. The metaphor may be a little far-fetched, but its implications apply nonetheless. It appears we simply must tolerate the fact that some systems might have been trained on our data, because if we cannot even tell where all our data is, how can the providers be expected to figure out where all the data comes from and inform all data subjects accordingly?

There is, however, one kind of data we are currently giving away altruistically by default where privacy and the GDPR still have a fighting chance: data collected when we interact with a given system and then used by the same provider to improve that system or develop new models. The reason we appear to be giving this data away altruistically is rather different from the one described in the previous paragraph. Here, the altruism stems much more from the unclear legal situation we find ourselves in and the abuse of its many gaps and ambiguities. (Aside from the fact that users in most cases value their money more than their privacy, but that is beside the point for now.)[2]

For example, while OpenAI can hardly be expected to track down every single person whose personal data is contained in the data sets used to train its models, it could certainly inform its active users that their chats will be used to improve the current models and train new ones. And here the disclaimer

“As noted above, we may use Content you provide us to improve our Services, for example to train the models that power ChatGPT. See here for instructions on how you can opt out of our use of your Content to train our models.”

does not make the cut, for several reasons.[3] Firstly, the users should be able to actively decide whether they want their data to be used for improving the provider’s services, not merely be able to opt out of such processing after the fact. Secondly, using words such as ‘may’ can give the average user a false impression. It may insinuate that this is something done only sporadically and in special circumstances, whereas it is in fact common practice and the golden rule of the trade. Thirdly, ‘models that power ChatGPT’ is ambiguous and unclear even for someone very well informed about OpenAI’s practices. The company has neither provided sufficient information on the models it uses and how they are trained, nor explained how these models ‘power ChatGPT’.

Finally, when reading their policy, one is left with the belief that they only use Content (with a capital C) to train these unknown models, meaning that they only use

“Personal Information that is included in the input, file uploads, or feedback that [the users] provide to [OpenAI’s] Services”.

However, this clearly cannot be correct when we consider the scandal from March 2023, in which some users’ payment details were shared with other users.[4] And if these payment details have ended up in the models, we can safely assume that the accompanying names, email addresses and other account information are not excluded either.

Of course, in the context just described, the term data altruism can only be used with a significant amount of sarcasm and irony. However, even with providers that aren’t blatantly lying about which data they use and aren’t intentionally elusive about the purposes they use it for, we again run into problems. One of them is the complexity of the processing operations, which leads either to oversimplified privacy policies, similar to that of OpenAI, or to incomprehensible policies that no one wants to look at, let alone read. Both end with the same result: users agreeing to whatever is necessary just to be able to access the service.

Now, one very popular response to such observations is that most of the data we give away is not that important to us, so why should it be important to anyone else? And who are we to be so interesting to the large conglomerates running the world? However, when this data is used to build nothing less than a business model that relies precisely on those small, seemingly irrelevant data points collected from millions of people across the globe, the question appears in a completely different light.

c. Stealing data as a business model?

To examine the business model built on these millions of unimportant consents thrown around every day, we need to examine just how altruistic the users are in giving away their data. Of course, when the users access the service and give away their data in the process, they also get that service in exchange for the data. But that is not the only thing they get. They also get advertisements, or maybe a second-grade service, as the first grade is reserved for subscription users. Which is not to say that these subscription users aren’t still giving away their Content (with a capital C), as well as (at least in the case of OpenAI) their account information.

And so, while the users are agreeing to just about anything being done with their data in order to use the tool or service, the data they give away is being monetized multiple times to serve them personalized ads and develop new models, which may again follow a freemium model of access. Leaving aside the more philosophical questions, such as why numbers on a bank account are so much more valuable than our life choices and personal preferences, it seems far from logical that the users would be giving away so much to get so little. Especially as the data we are discussing is essential for the service providers, at least if they want to remain competitive.

However, this does not have to be the case. We do not have to wait for new and specific AI regulations to tell us what to do and how to behave. At least when it comes to personal data, the GDPR is pretty clear on how it can be used and for which purposes, no matter the context.

What does the law have to say about it?

As opposed to copyright issues, where the regulations might need to be reinterpreted in light of the new technologies, the same cannot be said for data protection. Data protection law has for the most part developed in the digital age, in the effort to govern the practices of online service providers. Hence, applying the existing regulations and adhering to existing standards cannot be avoided. Whether and how this can be done is another question.

Here, a couple of things ought to be considered:

1. Consent is an obligation, not a choice.

Not informing the users (before they actually start using the tool) that their personal data and model inputs will be used for developing new models and improving existing ones is a major red flag. Basically as red as they get. Consent pop-ups, similar to those used for collecting cookie consents, are a must, and an easily programmable one.
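To give a rough idea of how little code such an opt-in actually requires, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of how any real provider handles consent: `ConsentRecord`, `select_training_chats`, and the shape of the chat dictionaries are all hypothetical names invented for this example. The point it shows is that use for training defaults to "no" and only flips when the user actively says yes.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical per-user consent state (not any provider's real schema)."""
    user_id: str
    training_use: bool = False              # opt-in: False until the user agrees
    decided_at: Optional[datetime] = None   # when the user last made a choice

    def grant(self) -> None:
        """Record an active 'yes' from the consent pop-up."""
        self.training_use = True
        self.decided_at = datetime.now(timezone.utc)

    def withdraw(self) -> None:
        """Record a withdrawal; this must be as easy as granting."""
        self.training_use = False
        self.decided_at = datetime.now(timezone.utc)


def select_training_chats(chats, consents):
    """Keep only chats whose author has actively opted in.

    `chats` is assumed to be a list of dicts with a "user_id" key;
    `consents` maps user ids to ConsentRecord objects. Users with no
    record at all are treated as not having consented.
    """
    selected = []
    for chat in chats:
        record = consents.get(chat["user_id"])
        if record is not None and record.training_use:
            selected.append(chat)
    return selected


# Usage: only alice clicked "yes", so only her chat is eligible.
consents = {"alice": ConsentRecord("alice"), "bob": ConsentRecord("bob")}
consents["alice"].grant()
chats = [
    {"user_id": "alice", "text": "draft a polite email"},
    {"user_id": "bob", "text": "fix my spelling"},
    {"user_id": "carol", "text": "no consent record at all"},
]
print(select_training_chats(chats, consents))
```

The design choice worth noting is the default: a missing or untouched record means no training use, which is the opposite of the opt-out approach criticized above.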

On the other hand, the idea of pay-or-track (or, in the context of AI models, pay-or-collect), where users either pay for the service or agree to have their data used by the AI developers, is heavily disputed and can hardly be implemented lawfully. This is primarily because the users still have to have a free choice between accepting and declining tracking, meaning that the price has to be proportionally low (read: the service has to be quite cheap) for anyone to credibly claim that the choice is free. Not to mention that you have to stick to this promise and not collect any subscription users’ data. As Meta has recently switched to this model, and the data protection authorities have already received the first complaints because of it,[5] it will be interesting to see what the Court of Justice of the EU decides on the matter. However, for the time being, relying on lawful consent is the safest way to go.

2. Privacy policies need an update

Information provided to the data subjects needs to be updated to cover the data processing going on throughout the lifecycle of an AI system: from development, through testing, and all the way to deployment. For this, all the complex processing operations need to be translated into plain English. This is by no means an easy task, but there is no way around it. And while consent pop-ups are not the appropriate place to do this, the privacy policy might be. As long as this privacy policy is linked directly from the consent pop-ups, you are good to go.
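One way to keep yourself honest here is to treat the pop-up as data and check it before shipping. The Python sketch below is only an illustration: `ConsentPrompt`, `missing_pieces`, the stage names, and the example URL are assumptions made up for this article, and passing the check is in no way a guarantee of GDPR compliance. It simply flags a pop-up that lacks a policy link or a plain-language explanation for one of the lifecycle stages.

```python
from dataclasses import dataclass, field

# Lifecycle stages named in the text; the identifiers are our own choice.
LIFECYCLE_STAGES = ("development", "testing", "deployment")


@dataclass
class ConsentPrompt:
    """Hypothetical description of what a consent pop-up shows the user."""
    summary: str                                   # short plain-language text
    policy_url: str                                # direct link to the policy
    purposes: dict = field(default_factory=dict)   # stage -> explanation


def missing_pieces(prompt):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    if not prompt.policy_url.startswith("https://"):
        problems.append("privacy policy link is missing or not https")
    for stage in LIFECYCLE_STAGES:
        if not prompt.purposes.get(stage, "").strip():
            problems.append(f"no plain-language explanation for stage: {stage}")
    return problems


# Usage: the testing stage was forgotten, so the check reports it.
prompt = ConsentPrompt(
    summary="We would like to use your chats to improve our models.",
    policy_url="https://example.com/privacy",  # placeholder URL
    purposes={
        "development": "Your chats may be added to the data new models learn from.",
        "deployment": "Your chats are processed to generate the answers you see.",
    },
)
print(missing_pieces(prompt))
```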

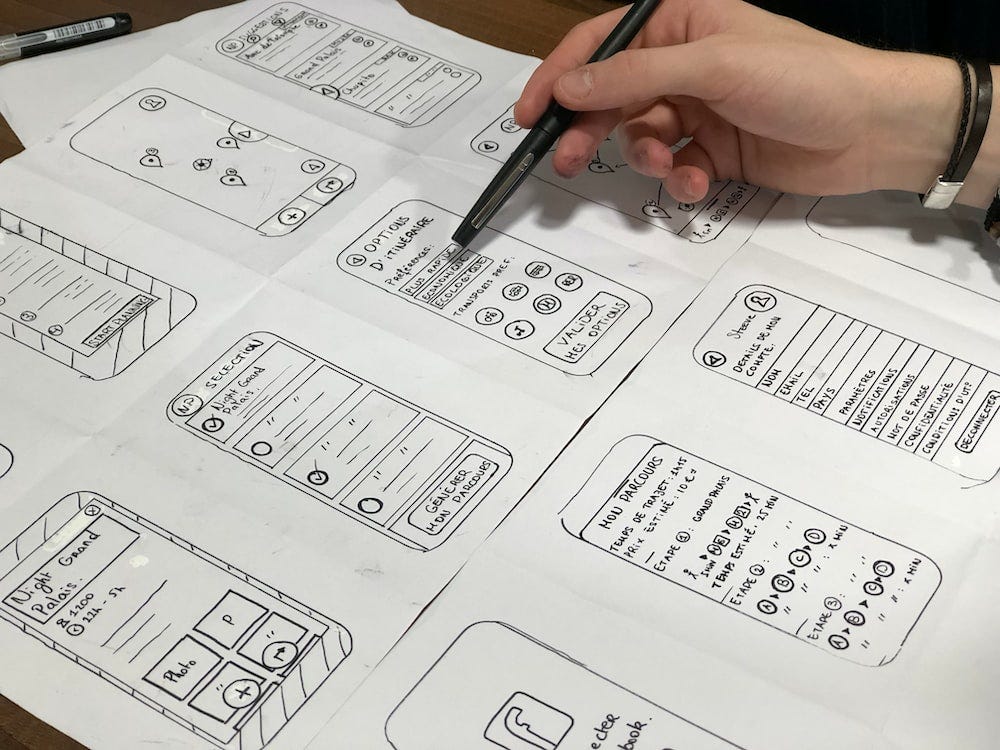

3. Get creative

Translating complex processing operations is a complex task in and of itself, but still an absolutely essential one for meeting the GDPR standards of transparency. Whether you use graphics, pictures, quizzes or videos, you need to find a way to explain to average users what in the world is going on with their data. Otherwise, their consent can never be considered informed and lawful. So, now is the time to put your green thinking hat on, roll up your sleeves, and head for the drawing board.[6]

[1] Fred D. Davis, Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology, MIS Quarterly, Vol. 13, No. 3 (1989), pp. 319–340, https://www.jstor.org/stable/249008?typeAccessWorkflow=login

[2] Christophe Carugati, The ‘pay-or-consent’ challenge for platform regulators, 06 November 2023, https://www.bruegel.org/analysis/pay-or-consent-challenge-platform-regulators.

[3] OpenAI, Privacy Policy, https://openai.com/policies/privacy-policy

[4] OpenAI, March 20 ChatGPT outage: Here’s what happened, https://openai.com/blog/march-20-chatgpt-outage

[5] noyb, noyb files GDPR complaint against Meta over “Pay or Okay”, https://noyb.eu/en/noyb-files-gdpr-complaint-against-meta-over-pay-or-okay

[6] untools, Six Thinking Hats, https://untools.co/six-thinking-hats

Data Altruism: The Digital Fuel for Corporate Engines was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
