Go Here to Read this Fast! The Last of Us Part 3: everything we know so far

Originally appeared here:

The Last of Us Part 3: everything we know so far

Originally appeared here:

Take control of your digital footprint with DeleteMe and regain your privacy

Noble Audio just announced pending availability of its most advanced earbuds yet. The FoKus Rex5 earbuds manage to cram a whole lot of tech into a small package, with a $450 price tag to prove it.

First of all, Noble has installed five drivers into each earbud. This is likely the first time that’s ever been done, as the idea of cramming multiple drivers into a tiny earbud is a relatively new concept. Noble’s own FoKus Prestige earbuds include three drivers, but certainly not five.

The FoKus Rex5 earbuds include a dynamic driver, a planar driver and three balanced armature drivers to extend the frequency range. The company says this particular combination creates “an impressive soundstage that effortlessly delivers rich, full bass, detailed mid-tones, and crystal-clear highs across an extended frequency range of 20Hz to 40kHz.”

For the uninitiated, planar drivers provide a more accurate signal through the treble and mid ranges. Dynamic drivers have the power to move a whole lot of air, resulting in an improved bass response. The company’s recently-released FoKus Apollo headphones also combine these two types of drivers.

These earbuds integrate with a proprietary app and Audiodo’s personalization software. This lets people create a custom EQ setting based on their hearing, which is then actually uploaded to the earbuds. Of course, the Rex5 buds offer active noise cancellation and multiple transparency modes.

As for connectivity, the Rex5 earbuds use Bluetooth 5.4 with Multipoint. They’re also equipped with both aptX Adaptive and LDAC hi-res codecs, along with AAC and SBC. The company says customers should expect five hours of use per charge with ANC on and seven hours when it’s off. The earbuds come with a charging case that can power an additional 40 hours of use, with a quick charge feature. The case is also green to match the buds.

Noble Audio’s FoKus Rex5 earbuds are available for preorder right now, with shipments beginning on November 29. As previously mentioned, they cost $450, which is $200 more than Apple’s top-of-the-line AirPods Pro 2.

This article originally appeared on Engadget at https://www.engadget.com/audio/headphones/noble-audio-announces-its-most-advanced-earbuds-yet-with-five-drivers-per-ear-193352556.html?src=rss

Originally appeared here:

Noble Audio announces its most advanced earbuds yet, with five drivers per ear

When it comes to new tech, $50 doesn’t get you a lot, except perhaps during Black Friday sales. Surprisingly, quite a few of the smaller electronics and accessories we recommend are currently on sale for less than $50. These deals include picks from our guides to accessories, portable batteries, budget earbuds and smart speakers. There are also quite a few streaming subscription deals that fall within that price range.

Everything on this list has earned the Engadget seal of approval — be it from official reviews, buying guides, personal use or devices from brands we know to be reputable — so you don’t have to guess whether these Black Friday tech deals are worth your (less than) $50.

Halo: The Master Chief Collection for $10 ($30 off): As part of the Xbox Black Friday sale, you can save up to 55 percent on titles (some titles are going as low as $5). A number of our top picks for the best Xbox games are included in the sale, including this Halo collection, Death Stranding: Director’s Cut, Street Fighter 6 and Diablo IV: Vessel of Hatred. And if you’re looking for more deals on game titles, check out Jeff Dunn’s Black Friday gaming roundup.

Amazon Echo Pop (2023) for $18 ($22 off): Amazon’s smallest Echo will fit in any room in your home, so Alexa can add things to your shopping list, set a timer, or answer questions (like “What’s a bomb cyclone?” or “Who is Penelope Cruz married to?”) from anywhere.

Anker Nano Charger 30W USB-C for $13 ($7 off): This compact 30-watt wall charger is smaller than others of its wattage and can speedily juice up an iPhone or Android handset. Anker is one of Engadget’s most recommended accessory brands and this is the model we picked for our fast charger guide. Get the same deal at Anker with an auto-applied code.

Anker Nano power bank with built-in USB-C connector for $16 ($4 off): It’s the size of an old-timey lipstick case but packs enough juice (and its own USB-C plug) to get a dying smartphone back in service with at least a half charge. It’s one of the winners in our guide to power banks. Also direct from Anker with an auto-applied code.

Glocusent Tri-head clip-on book light for $16 ($4 off): Glocusent’s book light can stand on a desk or clip to a book and casts a wide swath of light across the widest of pages. It’s a pick in our book lovers gift guide.

Beshon European travel plug adapter (two-pack) for $13 ($6 off with Prime): If you’re planning any trips abroad, take one of these, as Engadget’s Valentina Palladino recommends in our gifts for travelers guide. They come in versions made for Ireland, China and Japan, too.

Elden Ring (PS4, PS5, Xbox) for $20 ($40 off): One of our favorite games is down to the best price we’ve tracked. It feels impressively handmade despite its epic scale, which is big but never superfluous. Also at Best Buy.

Amazon Smart Plug for $13 ($12 off): If you rely on Alexa as your smart home assistant, this is an affordable and reliable way to control your lamps, fans and Christmas lights. It was one of the more reliable and fuss-free plugs I tested.

Anker Nano II 713 Charger (45W) for $20 ($20 off): This 45-watt charger has a single USB-C port and will let you take advantage of the faster charging speeds newer devices offer (just make sure you have an equally robust cable). It’s one of the picks in our iPad accessories guide. Also at Anker with an auto-applied code.

Chipolo ONE for $20 ($5 off): Our favorite Bluetooth tracker overall is loud, compact and readily tells you when you’ve left your keys (or whatever else you attach it to) behind. If you’re looking for a finding network to locate things you’ve lost out in the wild, this isn’t the one to get, but for everyday use locating misplaced keys in the house, this is great.

Belkin Apple AirTag secure holder for $15 ($5 off): AirTags are great, and we recommend them for iPhone users, but they have no built-in method for attaching them. This is one of the gizmos we recommend in our guide to secure the tag to your luggage and more. Also at Amazon.

Elevation Lab TagVault (2 Pack) for $16 ($4 off with Prime): Another recommendation from our guide, Elevation Lab’s fabric mount is ideal for sticking an AirTag inside your coat, backpack or anything else you want to track. Also at Elevation Lab without Prime.

PopSockets Phone Grip for $15 ($15 off): You can save 50 percent on the PopSockets grip we recommend in our guide to iPhone accessories. Many other PopSockets products are on sale directly from PopSockets for Black Friday.

J-Tech Digital Ergonomic Mouse for $18 ($13 off): The best budget ergo mouse has a vertical grip that’s a little wider than others of its ilk, which we found to be more comfortable. There are RGB lights, which can be fun but can also be turned off.

WAVLINK USB-C hub for $13 ($13 off): The budget pick in our guide to USB-C hubs has an HDMI port, three USB connections (two type-A and one type-C) plus a generous 10-inch cable to give you more options when plugging into your laptop or tablet.

Anker USB-C 240W Bio-Braided cable for $12 ($5 off): A fast charger won’t do much if the cable isn’t rated to handle the wattage. This 240W Anker cable is pulled from our list of the best iPhone accessories and will charge those devices (or any other rechargeable item with a USB-C port) as quickly as the brick and device will allow.

Peak Design Packable Tote for $16 ($4 off): We recommend this handy bag in our gift guide for travelers. We like that it zips shut, is water resistant and has a padded shoulder/hand strap. Plus it packs into itself and takes up just a little more room than a deck of cards.

Max subscription for $18 (6-month) ($42 off): You can get six months of Max with Ads for $2.99 monthly instead of the usual $9.99. The subscription will automatically renew at that rate each month until the end of the promo period, when it’ll automatically renew for the full $10. New and returning subscribers are eligible through Max.com, Roku, Apple and other streaming ecosystems, but the offer is only open to new subscribers via Amazon Fire TV.

Paramount+ Showtime (two months) for $6 ($20 off): New and former subscribers can get two months of Paramount+ Essential (with ads, usually $8 monthly) or Paramount+ with Showtime (ad-free, usually $13 monthly) for just $3 per month. It’s one of our favorite streaming services and the best place to watch as much Star Trek as you want. As with all subscriptions, remember the standard pricing will auto-renew after two months.

Peacock (one year) for $20 ($75 off): New and returning subscribers can get a full year of Peacock for just $20. It’s also one of our favorite streaming services and has some excellent shows like Mrs. Davis, Poker Face and Killing It. Note that this is the ad-supported tier, that it is only available through Peacock’s website and that it will auto-renew after the year is up.

Audible Premium Plus (3-month) for $1 ($29 off): If you don’t currently subscribe to Audible you can get three months of the audiobook service’s Premium Plus plan for $1. The service is usually $15 per month after a 30-day free trial. Premium Plus gives you access to the Audible Plus library, and lets you keep one title from a curated selection of audiobooks each month.

Amazon Kindle Unlimited for $0 for one month ($12 off): Amazon’s ebook subscription service gives you access to a selected catalog of thousands of titles for unlimited reading as well as some Audible audiobooks and magazines. Prime members can get two months for only $5.

Samsung Galaxy SmartTag2 for $21 ($9 off): If you have a Samsung smartphone, this is the tracker we recommend. The finding network isn’t as vast as Apple’s, but in our tests, the accuracy was good and the physical design is one of the best of its kind.

Roku Streaming Stick 4K for $29 ($21 off): On our list of the best streaming devices we named the Roku Streaming Stick 4K the best pick for those wanting an ocean of free and live content. By combining Roku’s own free channels with content from other FAST apps this simple stick turns any screen into a portal to a near-infinite amount of movies and shows that you won’t pay a dime for. Also at Target and direct from Roku for $1 more.

Anker Soundcore 2 Portable Bluetooth Speaker for $28 ($12 off): Anker’s Soundcore brand proves the accessory brand can make some excellent electronics and we named a number of Soundcore audio devices to our buying guides. This is one of the smaller and more affordable models from Anker and it’s currently back to one of its lowest prices yet.

Anker Nano 3-in-1 10K portable charger for $30 ($15 off): A top pick in our best power banks guide, this 10K brick has a built-in USB-C cable so you don’t need to remember to bring one with you, plus it has an extra USB-C port for charging other devices. Also at Anker with an auto-applied code.

HyperX Cloud Stinger 2 gaming headset for $30 ($20 off): Though we ultimately recommend getting an external mic along with your wired headphones if you need to chat while playing, the Cloud Stinger 2 is our pick for a budget gaming headset. Also at Best Buy and direct from HyperX.

Kasa Smart Plug Mini 15A (4-pack) for $30 ($20 off): Our favorite smart plug overall connects to all the major smart home platforms, including from Apple, Google and Amazon. It’s perfectly simple to set up, stays connected and makes it easy to make your lights do what you want them to. Also at Amazon.

EarFun Free 2S wireless earbuds for $25 ($15 off): These don’t sound as sharp as other budget earbuds we recommend and there’s no noise cancellation or transparency mode, but if you need a pair of earbuds under $50 (or under $30 now) these are decently comfortable with a sound that’s a bit richer than others in its price range.

Amazon Echo Buds for $25 ($25 off): Our favorite budget earbuds with an open ear design are made by Amazon. They don’t go all the way in your ear so you’ll hear more of what’s going on around you. The sound is decently separated, though we recommend tweaking the EQ in the Alexa app to bring down the treble a touch.

Anker USB-C Hub 341 for $25 ($10 off): Anker’s seven-port hub lets you use a range of extras with your tablet, which is why we named it one of the best accessories for an iPad. In addition to extra USB connections, you also get a microSD and standard SD card slots.

Logitech Signature M650 wireless mouse for $30 ($5 off): This portable mouse is great for anyone who changes locations when they work because it connects quickly and easily slips in a pack. It’s one of the gifts we recommend in our stocking stuffer guide. Also at Staples and direct from Logitech.

Baseus 30W Magnetic Power Bank for $25 ($20 off): An honorable mention in our battery guide, this small Baseus bank delivers a fast charge either wirelessly for MagSafe compatible iPhones or via the attached USB-C cable.

Ransom Notes board game for $28 ($7 off): Engadget’s Karissa Bell recommends this party board game in our gift guide thanks to its hilarity-inspiring appeal. It forces players to communicate complex concepts using a given number of word magnet tiles.

Mysterium Board Game for $30 ($25 off): Our own Valentina Palladino recommends this game to anyone who loves a good mystery. It takes Clue to the next level and is best played with friends and family on a dark and stormy night.

Settlers of Catan board game for $26 ($18 off): It’s hard to find anyone into board games who hasn’t yet played Catan, but this trading and settling game is a classic for a reason. Get it and prepare for some lively sheep bartering.

Anker 525 charging station for $36 ($30 off): This is one of the handy items that makes working from home easier, as we recommend in our WFH gift guide. It offers four USB ports up front (both Type-A and Type-C) and three extra AC plugs in the back.

Blink Mini 2 (two-pack) for $35 ($35 off): The newest Blink Mini wired security camera came out earlier this year and it supports 1080p video recordings, a wider field of view than the previous model and improved low-light performance. It may be wired, but you can use it outside with the $10 weather-resistant adapter.

JBL Go 4 for $40 ($10 off): JBL’s smallest portable speaker has up to seven hours of battery life on a charge, has an IP67 waterproof rating and has a tiny built-in carry strap so you can bring it wherever you go. Also at JBL and Best Buy.

Headspace annual plan for $35 ($35 off): Our top pick for the best meditation app has tons of courses that address specific anxieties and worries, a good in-app search engine that makes it easy to find the right meditation you need, and additional yoga routines, podcasts and music sessions to try out.

Amazon Fire TV Stick 4K Max for $33 ($27 off): Amazon’s most powerful dongle supports 4K streaming with Dolby Vision, Wi-Fi 6E and live picture-in-picture mode so you can see security camera feeds directly on your TV as you’re watching a show or movie. In addition to being a solid streamer, it also makes a good retro gaming device.

Anker 633 Magnetic Battery for $40 ($15 off): Choose from a MagSafe option or the 20W Power Delivery port via a USB-C cable (which charges things faster). The handy kickstand means you can look at your phone while it charges and that port lets you charge non-MagSafe devices too.

Blink Outdoor 4 (2023) for $38 ($52 off): Amazon’s latest outdoor Blink camera works well (and only) with Alexa, letting you check on your surroundings using the app or a compatible display (like an Echo Show or a Fire TV).

OtterBox Performance Fast Charge Power Bank 20,000 mAh for $32 ($23 off): This is the larger-capacity model of the mid-range battery we recommend in our guide to power banks. Not only does it look cool, it’s durable and charges up a phone quickly through either the USB-C or USB-A port.

Final Fantasy VII Rebirth for $40 ($30 off): Engadget’s Mat Smith gave this title a favorable review earlier this year. It helps if you’ve played its predecessor and it’s absolutely stuffed with things to do. This is a new low for the PS5 exclusive.

Govee Smart LED Light Bars for $35 ($15 off): We like Govee’s playful smart lights and recommend the brand in our guide to smart bulbs. These light bars made the list in our stocking stuffer gift guide thanks to their versatility (they can stand up, lay flat or be mounted) and there’s no end to the multiple colors and sequences you can program.

Razer Basilisk V3 ergonomic gaming mouse for $40 ($30 off): This is the gaming option in our guide to the best ergonomic mice. It’s super light and glides across multiple surfaces. The buttons are customizable and the thumb rest is comfortable. Also at Amazon.

UGREEN Revodok Pro 109 USB-C hub for $38 ($16 off): The top pick in our buying guide to USB-C hubs has a good array of ports, the ability to support two 4K monitors, and a nice long host cable so you can easily arrange it on your desk.

Moft Tripod iPhone Wallet for $40 ($10 off with Prime): Moft’s origami-inspired accessories tend to be clever and surprisingly useful. This one is no different, combining a single-card wallet with a dual-height stand. I was impressed with the sturdiness of the stand in such a thin package. If you don’t need to carry a single card, the wallet-less version is $32 right now.

The Legend of Zelda: Tears of the Kingdom for $40 ($30 off): Nintendo announced its Black Friday deals early, but they didn’t go live until November 25. The big callout here is Tears of the Kingdom. We found the game to be an absolute delight as it builds on all of the concepts and story introduced in Breath of the Wild.

JBL Clip 5 for $49.95 ($30 off): JBL makes a good number of the winners in our guide to the best Bluetooth speakers. We didn’t review this one formally for our guide, but it’s one of the more affordable models the brand makes and the clip plus dunkable water resistance makes it easy to bring JBL’s signature dynamic range just about anywhere. Also at Walmart and direct from JBL.

Amazon Echo Show 5 (2023) for $45 ($45 off): The newest Echo Show 5 made our list of the best smart displays because it doubles as a “stellar alarm clock” with the auto-dimming screen, tap-to-snooze feature and a sunrise alarm. Plus the tiny, five-inch screen is perfect for a nightstand. Also, oddly, at Best Buy.

Amazon Echo Spot (2024) for $45 ($35 off): The mini display just shows simple data like the time, weather or song that’s playing while the other half of the circle plays music. It’s an updated version of a model Amazon discontinued a couple of years ago.

Elecom Nestout power bank 15,000mAh for $48 ($12 off): For outdoor charging, this is one of the few portable batteries that can handle a dunk in water (as long as you’ve remembered to screw on the port covers). We recommend it in our guide and particularly like the handy accessories like a tripod stand and light that you can buy to go on it. Also at Nestout for $1 more.

8BitDo Ultimate Bluetooth Controller for $48 at Amazon ($12 off, Prime only): Engadget’s Jeff Dunn raved about this wireless gamepad for Switch and PC, calling it comfortable with durable Hall effect joysticks that should avoid the “drift” sensation that plagues many modern controllers. Also at Best Buy.

Soundcore by Anker Space A40 wireless earbuds for $45 ($35 off): Our top budget wireless earbuds are just $5 shy of their all time low. They have outstanding active noise cancellation for the price and offer a warm and pleasant default sound.

Ultimate Ears Mini Roll for $50 ($30 off): This less-than-a-pound sound maker came out at the same time as the Everboom and is the smallest speaker in UE’s lineup. It’s IP67 rated to be dust- and waterproof and can crank out 85 decibels of volume — impressive for something so small. Also at Amazon and B&H Photo.

Tribit StormBox Micro 2 for $42 ($38 off with coupon): This is the smallest speaker in our guide and it can go with you anywhere with the built-in strap. It pumps out impressive volume for its size and can go for 12 hours on a charge. The audio isn’t the highest fidelity, but this is more about bringing the vibes than emitting flawless musical clarity. Also directly from Tribit (see price in cart).

Anker PowerConf C200 2K webcam for $48 ($12 off): The budget pick in our buying guide to webcams is back down to a low it’s hit a few times before. We like the excellent video clarity and easy set up and customization. Also at Anker with a coupon code.

Thermacell Mosquito Repeller for $43 ($8 off): The mosquitos may be gone for the winter, but we all know they’ll be back next year. This is one of the few mosquito-repelling products we recommend, so grab one now for a less irritating summer next year.

Lego Star Wars: A New Hope Boarding The Tantive IV for $44 (20 percent off): This set recreates the scene in which Darth Vader and his Stormtroopers battled the Rebels, and it includes seven Star Wars minifigures. Also at Target.

Check out all of the latest Black Friday and Cyber Monday deals here.

This article originally appeared on Engadget at https://www.engadget.com/deals/the-62-best-black-friday-tech-deals-under-50-164632307.html?src=rss

Originally appeared here:

The 62 best Black Friday tech deals under $50

Japan’s Fair Trade Commission has conducted a raid on Amazon over antitrust concerns. “There is a suspicion that Amazon Japan is forcing sellers to cut prices in an irrational way,” an unnamed source told Reuters.

Amazon Japan received an on-site inspection by the regulator today to explore whether the retailer gives better product placement in search results to sellers who offer lower prices. Additional reporting in The Japan Times suggested that this inquiry is focused on Amazon’s Buy Box program, which puts recommended items more prominently in front of online shoppers. The publication said that in addition to demanding “competitive pricing,” sellers were allegedly required to use Amazon’s in-house services, such as those for logistics and payment collection, to qualify for Buy Box placement.

The Japanese FTC has not released an official statement about the inquiry. We’ve reached out to Amazon for a comment.

Amazon has also been questioned about anti-competitive behavior around the world. Stateside, both the Federal Trade Commission and the Attorney General of Washington DC have raised similar concerns about Amazon’s practices. The company is also expected to face an antitrust investigation in the European Union next year.

This article originally appeared on Engadget at https://www.engadget.com/big-tech/amazon-japan-hit-with-a-raid-over-antitrust-concerns-191558080.html?src=rss

Originally appeared here:

Amazon Japan hit with a raid over antitrust concerns

Whether you want a new streaming device to upgrade an aging TV or you want to outfit a new projector with one, Black Friday deals can make it so you spend less on that new set-top box or dongle. One of the best deals we’ve seen on streaming gear is on the latest Roku Ultra, which is on sale for $79, or $21 off its usual rate. That’s an all-time-low price on the 2024 version.

Roku unveiled the 2024 Ultra in September. It claims that the device is at least 30 percent faster than any of its other players. As such, apps should load quickly and moving around the user interface should feel zippy.

The previous version is our pick for the best set-top streaming box (we’re currently testing the 2024 model). The Roku Ultra offers 4K streaming with HDR10+ and Dolby Vision, as well as Dolby Atmos audio. It supports Wi-Fi 6 connectivity and you can plug in an Ethernet cable as well.

This model comes with a second-gen Voice Remote Pro, which boasts backlit buttons and USB-C charging, though Roku says it should run for up to three months on a single charge. Other features include hands-free voice control and a lost remote finder function. Roku ditched the headphone jack for wired listening this time around, unfortunately, but you can still connect wireless headphones to the Roku Ultra via Bluetooth.

The Roku Channel offers more than 400 free, ad-supported streaming channels, along with on-demand shows and movies. The Roku Ultra is also compatible with Alexa, Google Assistant, Apple HomeKit and AirPlay.

In addition to the latest Ultra, you can save on a number of other Roku devices for Black Friday. The most affordable of the bunch is the Roku Express HD, which is down to only $18, and the Roku Streambar SE is on sale for $69 — only $10 more than its record low.

Check out all of the latest Black Friday and Cyber Monday deals here.

This article originally appeared on Engadget at https://www.engadget.com/deals/black-friday-deals-bring-the-2024-roku-ultra-down-to-79-181528064.html?src=rss

Originally appeared here:

Black Friday deals bring the 2024 Roku Ultra down to $79

Are you eagerly anticipating the next crop of games from Devolver Digital? Well, you’re going to have to wait a little longer. The indie game studio will unveil the nominees and winners of its annual Devolver Delayed Awards at 1 PM Eastern Wednesday on its official YouTube page. Devolver’s tongue-in-cheek awards show aims to honor “the brightest, best indie games you can’t play yet” and yes, Skate Story is still in that category.

It’s all part of Devolver’s satirical marketing strategy — like calling the event the “15th annual” despite the fact that last year’s Delayed Awards was the “first-ever showcase celebrating brands that are courageously moving into 2024,” according to a press release.

Devolver will at least tide us over with more footage from some of these unplayable games. Titles might include the minimalist brawler Stick It to the Stickmen, the story driven walking sim Baby Steps and the long-awaited ragdoll puzzler Human Fall Flat 2. The studio also hinted that there may be a glimpse of “something new” for 2025.

This article originally appeared on Engadget at https://www.engadget.com/gaming/devolver-digitals-delayed-awards-returns-wednesday-185754203.html?src=rss

Originally appeared here:

Devolver Digital’s Delayed Awards returns Wednesday

Originally appeared here:

QNAP firmware update leaves NAS owners locked out of their boxes

Understand missing data patterns (MCAR, MNAR, MAR) for better model performance with Missingno

Originally appeared here:

Addressing Missing Data

As generative AI (genAI) models grow in both popularity and scale, so do the computational demands and costs associated with their training and deployment. Optimizing these models is crucial for enhancing their runtime performance and reducing their operational expenses. At the heart of modern genAI systems is the Transformer architecture and its attention mechanism, which is notably compute-intensive.

In a previous post, we demonstrated how using optimized attention kernels can significantly accelerate the performance of Transformer models. In this post, we continue our exploration by addressing the challenge of variable-length input sequences — an inherent property of real-world data, including documents, code, time-series, and more.

In a typical deep learning workload, individual samples are grouped into batches before being copied to the GPU and fed to the AI model. Batching improves computational efficiency and often aids model convergence during training. Usually, batching involves stacking all of the sample tensors along a new dimension, the batch dimension. However, torch.stack requires all tensors to have the same shape, which is not the case with variable-length sequences.
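To make the shape constraint concrete, here is a minimal sketch. The toy sequences and the try_stack helper are illustrative assumptions, not part of the original post; the sketch only shows that torch.stack rejects tensors of different lengths and accepts them once they share a shape.

```python
import torch

# three hypothetical token sequences of lengths 5, 3 and 8
seqs = [torch.arange(n) for n in (5, 3, 8)]

def try_stack(tensors):
    """Return the stacked batch, or None if the shapes do not match."""
    try:
        return torch.stack(tensors)
    except RuntimeError:
        # torch.stack raises when the tensors are not all the same size
        return None

ragged = try_stack(seqs)                     # lengths differ, so stacking fails
uniform = try_stack([s[:3] for s in seqs])   # truncated to a common length of 3
print(ragged is None, None if uniform is None else tuple(uniform.shape))
```

Truncation is used here only to produce equal shapes for the demonstration; it discards data and is not a recommended batching strategy.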

The traditional way to address this challenge is to pad the input sequences to a fixed length and then perform stacking. This solution requires appropriate masking within the model so that the output is not affected by the irrelevant tensor elements. In the case of attention layers, a padding mask indicates which tokens are padding and should not be attended to (e.g., see PyTorch MultiheadAttention). However, padding can waste considerable GPU resources, increasing costs and slowing development. This is especially true for large-scale AI models.
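As a rough illustration of padding and its cost, the sketch below pads three toy sequences with torch.nn.utils.rnn.pad_sequence, derives a padding mask from the sequence lengths, and measures the share of batch elements that are padding. The sequence lengths and variable names are assumptions chosen for the example.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

PAD_ID = 0
lengths = torch.tensor([5, 3, 8])
# token ids start at 1 so that real tokens never collide with PAD_ID
seqs = [torch.arange(1, n + 1) for n in lengths.tolist()]

# pad every sequence to the longest one and stack: shape (batch, max_len)
batch = pad_sequence(seqs, batch_first=True, padding_value=PAD_ID)

# padding mask: True marks padded positions that must not be attended to
positions = torch.arange(batch.size(1))
padding_mask = positions[None, :] >= lengths[:, None]

# fraction of the batch that is spent on padding
wasted = padding_mask.float().mean().item()
print(tuple(batch.shape), f"{wasted:.1%} of the batch is padding")
```

In this toy batch, 8 of the 24 elements are padding. The wasted share grows as the spread between the shortest and longest sequence in a batch widens.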

One way to avoid padding is to concatenate sequences along an existing dimension instead of stacking them along a new dimension. Unlike torch.stack, torch.cat allows inputs of different shapes. The output of concatenation is a single sequence whose length equals the sum of the lengths of the individual sequences. For this solution to work, our single sequence would need to be supplemented by an attention mask that ensures that each token only attends to other tokens in the same original sequence, in a process sometimes referred to as document masking. Denoting the sum of the lengths of all of the individual sequences by N and adopting "big O" notation, the size of this mask would need to be O(N²), as would the compute complexity of a standard attention layer, making this solution highly inefficient.
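The following sketch shows one possible way to build such a document mask for a concatenated sequence. The names doc_ids and doc_mask are illustrative, and here True marks query/key pairs that are allowed to attend to each other. The counts at the end show how few of the N² mask entries are actually needed.

```python
import torch

lengths = torch.tensor([5, 3, 8])
seqs = [torch.arange(1, n + 1) for n in lengths.tolist()]

# concatenate along the existing sequence dimension: shape (N,), here N = 16
packed = torch.cat(seqs)

# doc_ids[i] is the index of the original sequence that token i came from
doc_ids = torch.repeat_interleave(torch.arange(len(seqs)), lengths)

# document mask: True where query i and key j belong to the same sequence
doc_mask = doc_ids[:, None] == doc_ids[None, :]

total_entries = doc_mask.numel()        # N^2 entries stored and computed
useful_entries = int(doc_mask.sum())    # sum of the squared sequence lengths
print(total_entries, useful_entries)
```

For these lengths the mask holds 256 entries, of which only 98 (25 + 9 + 64) correspond to scores that matter. The rest are computed by a standard attention layer and then discarded.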

The solution to this problem comes in the form of specialized attention layers. Unlike the standard attention layer, which computes the full set of O(N²) attention scores only to mask out the irrelevant ones, these optimized attention kernels are designed to calculate only the scores that matter. In this post we will explore several solutions, each with its own distinct characteristics. These include:

For teams working with pre-trained models, transitioning to these optimizations might seem challenging. We will demonstrate how HuggingFace’s APIs simplify this process, enabling developers to integrate these techniques with minimal code changes and effort.

Special thanks to Yitzhak Levi and Peleg Nahaliel for their contributions to this post.

To facilitate our discussion we will define a simple generative model (partially inspired by the GPT model defined here). For a more comprehensive guide on building language models, please see one of the many excellent tutorials available online (e.g., here).

We begin by constructing a basic Transformer block, specifically designed to facilitate experimentation with different attention mechanisms and optimizations. While our block performs the same computation as standard Transformer blocks, we make slight modifications to the usual choice of operators in order to support the possibility of PyTorch NestedTensor inputs (as described here).

# general imports
import time, functools

# torch imports
import torch
from torch.utils.data import Dataset, DataLoader
import torch.nn as nn

# Define Transformer settings
BATCH_SIZE = 32
NUM_HEADS = 16
HEAD_DIM = 64
DIM = NUM_HEADS * HEAD_DIM
DEPTH = 24
NUM_TOKENS = 1024
MAX_SEQ_LEN = 1024
PAD_ID = 0
DEVICE = 'cuda'

class MyAttentionBlock(nn.Module):
    def __init__(
            self,
            attn_fn,
            dim,
            num_heads,
            format=None,
            **kwargs
    ):
        super().__init__()
        self.attn_fn = attn_fn
        self.num_heads = num_heads
        self.dim = dim
        self.head_dim = dim // num_heads
        self.norm1 = nn.LayerNorm(dim, bias=False)
        self.norm2 = nn.LayerNorm(dim, bias=False)
        self.qkv = nn.Linear(dim, dim * 3)
        self.proj = nn.Linear(dim, dim)

        # mlp layers
        self.fc1 = nn.Linear(dim, dim * 4)
        self.act = nn.GELU()
        self.fc2 = nn.Linear(dim * 4, dim)

        self.permute = functools.partial(torch.transpose, dim0=1, dim1=2)
        if format == 'bshd':
            self.permute = nn.Identity()

    def mlp(self, x):
        x = self.fc1(x)
        x = self.act(x)
        x = self.fc2(x)
        return x

    def reshape_and_permute(self, x, batch_size):
        x = x.view(batch_size, -1, self.num_heads, self.head_dim)
        return self.permute(x)

    def forward(self, x_in, attn_mask=None):
        batch_size = x_in.size(0)
        x = self.norm1(x_in)
        qkv = self.qkv(x)

        # rather than first reformatting and then splitting the input
        # state, we first split and then reformat q, k, v in order to
        # support PyTorch Nested Tensors
        q, k, v = qkv.chunk(3, -1)
        q = self.reshape_and_permute(q, batch_size)
        k = self.reshape_and_permute(k, batch_size)
        v = self.reshape_and_permute(v, batch_size)

        # call the attn_fn with the input attn_mask
        x = self.attn_fn(q, k, v, attn_mask=attn_mask)

        # reformat output
        x = self.permute(x).reshape(batch_size, -1, self.dim)
        x = self.proj(x)
        x = x + x_in
        x = x + self.mlp(self.norm2(x))
        return x

Building on our programmable Transformer block, we construct a typical Transformer decoder model.

class MyDecoder(nn.Module):
    def __init__(
            self,
            block_fn,
            num_tokens,
            dim,
            num_heads,
            num_layers,
            max_seq_len,
            pad_idx=None
    ):
        super().__init__()
        self.num_heads = num_heads
        self.pad_idx = pad_idx
        self.embedding = nn.Embedding(num_tokens, dim, padding_idx=pad_idx)
        self.positional_embedding = nn.Embedding(max_seq_len, dim)
        self.blocks = nn.ModuleList([
            block_fn(
                dim=dim,
                num_heads=num_heads
            )
            for _ in range(num_layers)])
        self.output = nn.Linear(dim, num_tokens)

    def embed_tokens(self, input_ids, position_ids=None):
        x = self.embedding(input_ids)
        if position_ids is None:
            position_ids = torch.arange(input_ids.shape[1],
                                        device=x.device)
        x = x + self.positional_embedding(position_ids)
        return x

    def forward(self, input_ids, position_ids=None, attn_mask=None):
        # Embed tokens and add positional encoding
        x = self.embed_tokens(input_ids, position_ids)
        if self.pad_idx is not None:
            assert attn_mask is None
            # create a padding mask - we assume boolean masking
            attn_mask = (input_ids != self.pad_idx)
            # parentheses allow the chained call to span two lines
            attn_mask = (attn_mask.view(BATCH_SIZE, 1, 1, -1)
                         .expand(-1, self.num_heads, -1, -1))

        for b in self.blocks:
            x = b(x, attn_mask)

        logits = self.output(x)
        return logits

Next, we create a dataset containing sequences of variable lengths, where each sequence is made up of randomly generated tokens. For simplicity, we (arbitrarily) select a fixed distribution for the sequence lengths. In real-world scenarios, the distribution of sequence lengths typically reflects the nature of the data, such as the length of documents or audio segments. Note that the distribution of lengths directly affects the computational inefficiencies caused by padding.

# Use random data
class FakeDataset(Dataset):
    def __len__(self):
        return 1000000

    def __getitem__(self, index):
        length = torch.randint(1, MAX_SEQ_LEN, (1,))
        sequence = torch.randint(1, NUM_TOKENS, (length + 1,))
        input = sequence[:-1]
        target = sequence[1:]
        return input, target

def pad_sequence(sequence, length, pad_val):
    return torch.nn.functional.pad(
        sequence,
        (0, length - sequence.shape[0]),
        value=pad_val
    )

def collate_with_padding(batch):
    padded_inputs = []
    padded_targets = []
    for b in batch:
        padded_inputs.append(pad_sequence(b[0], MAX_SEQ_LEN, PAD_ID))
        padded_targets.append(pad_sequence(b[1], MAX_SEQ_LEN, PAD_ID))
    padded_inputs = torch.stack(padded_inputs, dim=0)
    padded_targets = torch.stack(padded_targets, dim=0)
    return {
        'inputs': padded_inputs,
        'targets': padded_targets
    }

def data_to_device(data, device):
    if isinstance(data, dict):
        return {
            key: data_to_device(val, device)
            for key, val in data.items()
        }
    elif isinstance(data, (list, tuple)):
        return type(data)(
            data_to_device(val, device) for val in data
        )
    elif isinstance(data, torch.Tensor):
        return data.to(device=device, non_blocking=True)
    else:
        return data.to(device=device)

Lastly, we implement a main function that performs training/evaluation on input sequences of varying length.

def main(
    block_fn,
    data_collate_fn=collate_with_padding,
    pad_idx=None,
    train=True,
    compile=False
):
    torch.random.manual_seed(0)
    device = torch.device(DEVICE)
    torch.set_float32_matmul_precision("high")

    # Create dataset and dataloader
    data_set = FakeDataset()
    data_loader = DataLoader(
        data_set,
        batch_size=BATCH_SIZE,
        collate_fn=data_collate_fn,
        num_workers=12,
        pin_memory=True,
        drop_last=True
    )

    model = MyDecoder(
        block_fn=block_fn,
        num_tokens=NUM_TOKENS,
        dim=DIM,
        num_heads=NUM_HEADS,
        num_layers=DEPTH,
        max_seq_len=MAX_SEQ_LEN,
        pad_idx=pad_idx
    ).to(device)

    if compile:
        model = torch.compile(model)

    # Define loss and optimizer
    criterion = torch.nn.CrossEntropyLoss(ignore_index=PAD_ID)
    optimizer = torch.optim.SGD(model.parameters())

    def train_step(model, inputs, targets,
                   position_ids=None, attn_mask=None):
        with torch.amp.autocast(DEVICE, dtype=torch.bfloat16):
            outputs = model(inputs, position_ids, attn_mask)
            outputs = outputs.view(-1, NUM_TOKENS)
            targets = targets.flatten()
            loss = criterion(outputs, targets)
        optimizer.zero_grad(set_to_none=True)
        loss.backward()
        optimizer.step()

    @torch.no_grad()
    def eval_step(model, inputs, targets,
                  position_ids=None, attn_mask=None):
        with torch.amp.autocast(DEVICE, dtype=torch.bfloat16):
            outputs = model(inputs, position_ids, attn_mask)
            if outputs.is_nested:
                outputs = outputs.data._values
                targets = targets.data._values
            else:
                outputs = outputs.view(-1, NUM_TOKENS)
                targets = targets.flatten()
            loss = criterion(outputs, targets)
        return loss

    if train:
        model.train()
        step_fn = train_step
    else:
        model.eval()
        step_fn = eval_step

    t0 = time.perf_counter()
    summ = 0
    count = 0

    for step, data in enumerate(data_loader):
        # Copy data to GPU
        data = data_to_device(data, device=device)
        step_fn(model, data['inputs'], data['targets'],
                position_ids=data.get('indices'),
                attn_mask=data.get('attn_mask'))

        # Capture step time
        batch_time = time.perf_counter() - t0
        if step > 20:  # Skip first steps
            summ += batch_time
            count += 1
        t0 = time.perf_counter()
        if step >= 100:
            break
    print(f'average step time: {summ / count}')

For our baseline experiments, we configure our Transformer block to utilize PyTorch’s SDPA mechanism. In our experiments, we run both training and evaluation, both with and without torch.compile. These were run on an NVIDIA H100 with CUDA 12.4 and PyTorch 2.5.1.

from torch.nn.functional import scaled_dot_product_attention as sdpa

block_fn = functools.partial(MyAttentionBlock, attn_fn=sdpa)
causal_block_fn = functools.partial(
    MyAttentionBlock,
    attn_fn=functools.partial(sdpa, is_causal=True)
)

for mode in ['eval', 'train']:
    for compile in [False, True]:
        # training uses the causal variant of the attention block
        block_func = causal_block_fn if mode == 'train' else block_fn
        print(f'{mode} with padding, '
              f'{"compiled" if compile else "uncompiled"}')
        main(block_fn=block_func,
             pad_idx=PAD_ID,
             train=mode=='train',
             compile=compile)

Performance Results:

In this section, we will explore several optimization techniques for handling variable-length input sequences in Transformer models.

Our first optimization relates not to the attention kernel but to our padding mechanism. Rather than padding the sequences in each batch to a constant length, we pad to the length of the longest sequence in the batch. The following block of code consists of our revised collation function and updated experiments.

def collate_pad_to_longest(batch):
    padded_inputs = []
    padded_targets = []
    max_length = max([b[0].shape[0] for b in batch])
    for b in batch:
        padded_inputs.append(pad_sequence(b[0], max_length, PAD_ID))
        padded_targets.append(pad_sequence(b[1], max_length, PAD_ID))
    padded_inputs = torch.stack(padded_inputs, dim=0)
    padded_targets = torch.stack(padded_targets, dim=0)
    return {
        'inputs': padded_inputs,
        'targets': padded_targets
    }

for mode in ['eval', 'train']:
    for compile in [False, True]:
        # training uses the causal variant of the attention block
        block_func = causal_block_fn if mode == 'train' else block_fn
        print(f'{mode} with padding to longest, '
              f'{"compiled" if compile else "uncompiled"}')
        main(block_fn=block_func,
             data_collate_fn=collate_pad_to_longest,
             pad_idx=PAD_ID,
             train=mode=='train',
             compile=compile)
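To get a feel for how much padding each strategy carries, the sketch below counts real and padded tokens for one batch of sequence lengths, drawn uniformly as in FakeDataset. It uses only the standard library. The seed and the variable names are illustrative assumptions, and the printed shares describe this one simulated batch, not the measured experiments.

```python
import random

MAX_SEQ_LEN = 1024   # same settings as in this post
BATCH_SIZE = 32

random.seed(0)
# one hypothetical batch of lengths in the range [1, MAX_SEQ_LEN - 1]
lengths = [random.randint(1, MAX_SEQ_LEN - 1) for _ in range(BATCH_SIZE)]

real_tokens = sum(lengths)
fixed_tokens = BATCH_SIZE * MAX_SEQ_LEN       # collate_with_padding
longest_tokens = BATCH_SIZE * max(lengths)    # collate_pad_to_longest

print(f"padding share, fixed length:     {1 - real_tokens / fixed_tokens:.1%}")
print(f"padding share, longest in batch: {1 - real_tokens / longest_tokens:.1%}")
```

With 32 lengths drawn uniformly, the longest sequence in a batch tends to be close to MAX_SEQ_LEN, so the two strategies process a similar number of tokens. Distributions with mostly short sequences would likely benefit more.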

Padding to the longest sequence in each batch results in a slight performance acceleration:

Next, we take advantage of the built-in support for PyTorch NestedTensors in SDPA in evaluation mode. Currently a prototype feature, PyTorch NestedTensors allow for grouping together tensors of varying length. These are sometimes referred to as jagged or ragged tensors. In the code block below, we define a collation function for grouping our sequences into NestedTensors. We also define an indices entry so that we can properly calculate the positional embeddings.

PyTorch NestedTensors are supported by a limited number of PyTorch ops. Working around these limitations can require some creativity. For example, addition between NestedTensors is only supported when they share precisely the same “jagged” shape. In the code below we use a workaround to ensure that the indices entry shares the same shape as the model inputs.

def nested_tensor_collate(batch):
    inputs = torch.nested.as_nested_tensor([b[0] for b in batch],
                                           layout=torch.jagged)
    targets = torch.nested.as_nested_tensor([b[1] for b in batch],
                                            layout=torch.jagged)
    indices = torch.concat([torch.arange(b[0].shape[0]) for b in batch])

    # workaround for creating a NestedTensor with identical "jagged" shape
    xx = torch.empty_like(inputs)
    xx.data._values[:] = indices

    return {
        'inputs': inputs,
        'targets': targets,
        'indices': xx
    }

for compile in [False, True]:
    print(f'eval with nested tensors, '
          f'{"compiled" if compile else "uncompiled"}')
    main(
        block_fn=block_fn,
        data_collate_fn=nested_tensor_collate,
        train=False,
        compile=compile
    )

Although, without torch.compile, the NestedTensor optimization results in a step time of 131 ms, similar to our baseline result, in compiled mode the step time drops to 42 ms for an impressive ~3x improvement.

In our previous post we demonstrated the use of FlashAttention and its impact on the performance of a transformer model. In this post we demonstrate the use of flash_attn_varlen_func from flash-attn (2.7.0), an API designed for use with variable-sized inputs. To use this function, we concatenate all of the sequences in the batch into a single sequence. We also create a cu_seqlens tensor that points to the indices within the concatenated tensor where each of the individual sequences starts. The code block below includes our collation function followed by evaluation and training experiments. Note that flash_attn_varlen_func does not support torch.compile (at the time of this writing).

def collate_concat(batch):
    inputs = torch.concat([b[0] for b in batch]).unsqueeze(0)
    targets = torch.concat([b[1] for b in batch]).unsqueeze(0)
    indices = torch.concat([torch.arange(b[0].shape[0]) for b in batch])
    seqlens = torch.tensor([b[0].shape[0] for b in batch])
    seqlens = torch.cumsum(seqlens, dim=0, dtype=torch.int32)
    cu_seqlens = torch.nn.functional.pad(seqlens, (1, 0))

    return {
        'inputs': inputs,
        'targets': targets,
        'indices': indices,
        'attn_mask': cu_seqlens
    }

from flash_attn import flash_attn_varlen_func

fa_varlen = lambda q, k, v, attn_mask: flash_attn_varlen_func(
    q.squeeze(0),
    k.squeeze(0),
    v.squeeze(0),
    cu_seqlens_q=attn_mask,
    cu_seqlens_k=attn_mask,
    max_seqlen_q=MAX_SEQ_LEN,
    max_seqlen_k=MAX_SEQ_LEN
).unsqueeze(0)

fa_varlen_causal = lambda q, k, v, attn_mask: flash_attn_varlen_func(
    q.squeeze(0),
    k.squeeze(0),
    v.squeeze(0),
    cu_seqlens_q=attn_mask,
    cu_seqlens_k=attn_mask,
    max_seqlen_q=MAX_SEQ_LEN,
    max_seqlen_k=MAX_SEQ_LEN,
    causal=True
).unsqueeze(0)

block_fn = functools.partial(MyAttentionBlock,
                             attn_fn=fa_varlen,
                             format='bshd')

causal_block_fn = functools.partial(MyAttentionBlock,
                                    attn_fn=fa_varlen_causal,
                                    format='bshd')

print('flash-attn eval')
main(
    block_fn=block_fn,
    data_collate_fn=collate_concat,
    train=False
)

print('flash-attn train')
main(
    block_fn=causal_block_fn,
    data_collate_fn=collate_concat,
    train=True,
)
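The bookkeeping performed by collate_concat can be illustrated on a tiny batch without a GPU. The sketch below, in plain Python with made-up token ids, builds the concatenated sequence, the cumulative sequence lengths, and the per-token position indices, and then shows that consecutive entries of cu_seqlens delimit the original sequences. The variable names `flat`, `positions`, and `recovered` are illustrative assumptions.

```python
from itertools import accumulate

batch = [[11, 12, 13], [21], [31, 32, 33, 34]]   # hypothetical token ids

flat = [tok for seq in batch for tok in seq]      # concatenated sequence
cu_seqlens = [0, *accumulate(len(seq) for seq in batch)]
positions = [i for seq in batch for i in range(len(seq))]

# each original sequence is the slice between consecutive offsets
recovered = [flat[s:e] for s, e in zip(cu_seqlens, cu_seqlens[1:])]

print(cu_seqlens)   # [0, 3, 4, 8]
print(positions)    # [0, 1, 2, 0, 0, 1, 2, 3]
```

Note that cu_seqlens has one more entry than the batch has sequences: the leading zero marks the start of the first sequence and the last entry equals the total token count.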

The impact of this optimization is dramatic: 51 ms for evaluation and 160 ms for training, amounting to 2.6x and 2.1x performance boosts compared to our baseline experiment.

In our previous post we demonstrated the use of the memory_efficient_attention operator from xFormers (0.0.28). Here we demonstrate the use of BlockDiagonalMask, specifically designed for input sequences of arbitrary length. The required collation function appears in the code block below followed by the evaluation and training experiments. Note that torch.compile failed in training mode.

from xformers.ops import fmha
from xformers.ops import memory_efficient_attention as mea

def collate_xformer(batch):
    inputs = torch.concat([b[0] for b in batch]).unsqueeze(0)
    targets = torch.concat([b[1] for b in batch]).unsqueeze(0)
    indices = torch.concat([torch.arange(b[0].shape[0]) for b in batch])
    seqlens = [b[0].shape[0] for b in batch]
    batch_sizes = [1 for b in batch]
    block_diag = fmha.BlockDiagonalMask.from_seqlens(seqlens, device='cpu')
    block_diag._batch_sizes = batch_sizes

    return {
        'inputs': inputs,
        'targets': targets,
        'indices': indices,
        'attn_mask': block_diag
    }

mea_eval = lambda q, k, v, attn_mask: mea(
    q, k, v, attn_bias=attn_mask)

mea_train = lambda q, k, v, attn_mask: mea(
    q, k, v, attn_bias=attn_mask.make_causal())

block_fn = functools.partial(MyAttentionBlock,
                             attn_fn=mea_eval,
                             format='bshd')

causal_block_fn = functools.partial(MyAttentionBlock,
                                    attn_fn=mea_train,
                                    format='bshd')

print(f'xFormer Attention ')
for compile in [False, True]:
    print(f'eval with xFormer Attention, '
          f'{"compiled" if compile else "uncompiled"}')
    main(block_fn=block_fn,
         train=False,
         data_collate_fn=collate_xformer,
         compile=compile)

print(f'train with xFormer Attention')
main(block_fn=causal_block_fn,
     train=True,
     data_collate_fn=collate_xformer)
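For training, the block-diagonal mask is made causal, so that each token attends only to earlier tokens of its own sequence. The sketch below draws that pattern for two short sequences in plain Python. It only illustrates the masking rule: the function name `block_diagonal_causal` is an assumption, and xFormers does not materialize a dense mask like this one.

```python
def block_diagonal_causal(seq_lens):
    """mask[i][j] is True when token i may attend to token j: both tokens
    come from the same sequence and j is not later than i."""
    doc_id = [d for d, n in enumerate(seq_lens) for _ in range(n)]
    n_tokens = len(doc_id)
    return [
        [doc_id[i] == doc_id[j] and j <= i for j in range(n_tokens)]
        for i in range(n_tokens)
    ]


rows = [
    "".join("x" if allowed else "." for allowed in row)
    for row in block_diagonal_causal([2, 3])   # hypothetical lengths
]
print("\n".join(rows))
# x....
# xx...
# ..x..
# ..xx.
# ..xxx
```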

The resultant step times were 50 ms and 159 ms for evaluation and training without torch.compile. Evaluation with torch.compile resulted in a step time of 42 ms.

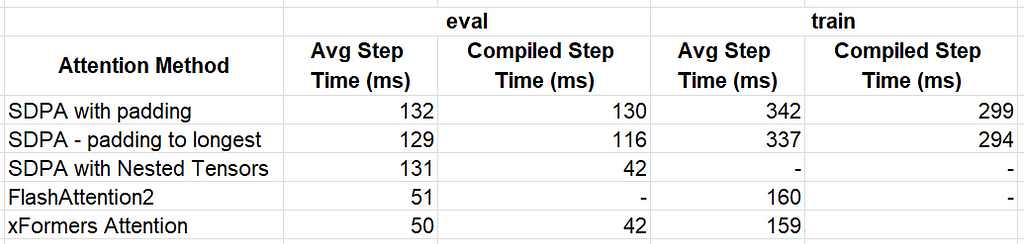

The table below summarizes the results of our optimization methods.

The best performer for our toy model is the xFormers memory_efficient_attention operator, which delivered a ~3x performance boost for evaluation and a ~2x boost for training. We caution against deriving any conclusions from these results as the performance impact of different attention functions can vary significantly depending on the specific model and use case.

The tools and techniques described above are easy to implement when creating a model from scratch. However, these days it is not uncommon for ML developers to adopt existing (pretrained) models and finetune them for their use case. While the optimizations we have described can be integrated without changing the set of model weights and without altering the model behavior, it is not entirely clear what the best way to do this is. In an ideal world, our ML framework would allow us to program the use of an attention mechanism that is optimized for variable-length inputs. In this section we demonstrate how to optimize HuggingFace models for variable-length inputs.

To facilitate the discussion, we create a toy example in which we train a HuggingFace GPT2LMHead model on variable-length sequences. This requires adapting our random dataset and data-padding collation function according to HuggingFace’s input specifications.

from transformers import GPT2Config, GPT2LMHeadModel

# Use random data
class HuggingFaceFakeDataset(Dataset):
    def __len__(self):
        return 1000000

    def __getitem__(self, index):
        length = torch.randint(1, MAX_SEQ_LEN, (1,))
        input_ids = torch.randint(1, NUM_TOKENS, (length,))
        labels = input_ids.clone()
        labels[0] = PAD_ID  # ignore first token
        return {
            'input_ids': input_ids,
            'labels': labels
        }

def hf_collate_with_padding(batch):
    padded_inputs = []
    padded_labels = []
    for b in batch:
        input_ids = b['input_ids']
        labels = b['labels']
        padded_inputs.append(pad_sequence(input_ids, MAX_SEQ_LEN, PAD_ID))
        padded_labels.append(pad_sequence(labels, MAX_SEQ_LEN, PAD_ID))
    padded_inputs = torch.stack(padded_inputs, dim=0)
    padded_labels = torch.stack(padded_labels, dim=0)
    return {
        'input_ids': padded_inputs,
        'labels': padded_labels,
        'attention_mask': (padded_inputs != PAD_ID)
    }

Our training function instantiates a GPT2LMHeadModel based on the requested GPT2Config and proceeds to train it on our variable-length sequences.

def hf_main(
    config,
    collate_fn=hf_collate_with_padding,
    compile=False
):
    torch.random.manual_seed(0)
    device = torch.device(DEVICE)
    torch.set_float32_matmul_precision("high")

    # Create dataset and dataloader
    data_set = HuggingFaceFakeDataset()
    data_loader = DataLoader(
        data_set,
        batch_size=BATCH_SIZE,
        collate_fn=collate_fn,
        # DEVICE is lowercase ('cuda'), so compare against 'cuda'
        num_workers=12 if DEVICE == "cuda" else 0,
        pin_memory=True,
        drop_last=True
    )

    model = GPT2LMHeadModel(config).to(device)

    if compile:
        model = torch.compile(model)

    # Define loss and optimizer
    criterion = torch.nn.CrossEntropyLoss(ignore_index=PAD_ID)
    optimizer = torch.optim.SGD(model.parameters())

    model.train()

    t0 = time.perf_counter()
    summ = 0
    count = 0

    for step, data in enumerate(data_loader):
        # Copy data to GPU
        data = data_to_device(data, device=device)
        input_ids = data['input_ids']
        labels = data['labels']
        position_ids = data.get('position_ids')
        attn_mask = data.get('attention_mask')
        with torch.amp.autocast(DEVICE, dtype=torch.bfloat16):
            outputs = model(input_ids=input_ids,
                            position_ids=position_ids,
                            attention_mask=attn_mask)
            # shift so that each position predicts the next token
            logits = outputs.logits[..., :-1, :].contiguous()
            labels = labels[..., 1:].contiguous()
            loss = criterion(logits.view(-1, NUM_TOKENS), labels.flatten())

        optimizer.zero_grad(set_to_none=True)
        loss.backward()
        optimizer.step()

        # Capture step time
        batch_time = time.perf_counter() - t0
        if step > 20:  # Skip first steps
            summ += batch_time
            count += 1
        t0 = time.perf_counter()
        if step >= 100:
            break
    print(f'average step time: {summ / count}')

In the code block below we call our training function with the default sequence-padding collator.

config = GPT2Config(
    n_layer=DEPTH,
    n_embd=DIM,
    n_head=NUM_HEADS,
    vocab_size=NUM_TOKENS,
)

for compile in [False, True]:
    print(f"HF GPT2 train with SDPA, compile={compile}")
    hf_main(config=config, compile=compile)

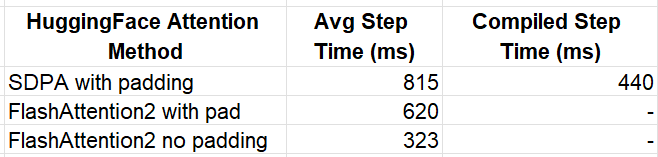

The resultant step times are 815 ms without torch.compile and 440 ms with torch.compile.

We now take advantage of HuggingFace’s built-in support for FlashAttention2, by setting the attn_implementation parameter to “flash_attention_2”. Behind the scenes, HuggingFace will unpad the padded data input and then pass them to the optimized flash_attn_varlen_func function we saw above:

flash_config = GPT2Config(
    n_layer=DEPTH,
    n_embd=DIM,
    n_head=NUM_HEADS,
    vocab_size=NUM_TOKENS,
    attn_implementation='flash_attention_2'
)

print(f"HF GPT2 train with flash")
hf_main(config=flash_config)

The resultant step time is 620 ms, amounting to a 30% boost (in uncompiled mode) with just a simple flick of a switch.

Of course, padding the sequences in the collation function only to have them unpadded hardly seems sensible. In a recent update to HuggingFace, support was added for passing in concatenated (unpadded) sequences to a select number of models. Unfortunately, (as of the time of this writing) our GPT2 model did not make the cut. However, adding support requires just five small line additions to modeling_gpt2.py in order to propagate the sequence position_ids to the flash-attention kernel. The full patch appears in the block below:

@@ -370,0 +371 @@
+ position_ids = None
@@ -444,0 +446 @@
+ position_ids=position_ids
@@ -611,0 +614 @@
+ position_ids=None
@@ -621,0 +625 @@
+ position_ids=position_ids
@@ -1140,0 +1145 @@
+ position_ids=position_ids

We define a collate function that concatenates our sequences and train our HuggingFace model on unpadded sequences. (Also see the built-in DataCollatorWithFlattening utility.)

def collate_flatten(batch):
    input_ids = torch.concat([b['input_ids'] for b in batch]).unsqueeze(0)
    labels = torch.concat([b['labels'] for b in batch]).unsqueeze(0)
    position_ids = [torch.arange(b['input_ids'].shape[0]) for b in batch]
    position_ids = torch.concat(position_ids)

    return {
        'input_ids': input_ids,
        'labels': labels,
        'position_ids': position_ids
    }

print(f"HF GPT2 train with flash, no padding")
hf_main(config=flash_config, collate_fn=collate_flatten)
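The flattened batch carries no attention mask, so the boundaries between sequences have to be inferred from position_ids. The sketch below shows one way this can be done in plain Python, assuming that every sequence restarts its positions at 0. The function name `cu_seqlens_from_position_ids` is hypothetical. This illustrates the idea only and is not HuggingFace's actual implementation.

```python
def cu_seqlens_from_position_ids(position_ids):
    """Offsets of the sequence starts in a flattened batch, followed by
    the total length. Assumes each sequence restarts its positions at 0."""
    starts = [i for i, p in enumerate(position_ids) if p == 0]
    return starts + [len(position_ids)]


position_ids = [0, 1, 2, 0, 0, 1, 2, 3]   # sequences of length 3, 1 and 4
cu_seqlens = cu_seqlens_from_position_ids(position_ids)
seq_lens = [e - s for s, e in zip(cu_seqlens, cu_seqlens[1:])]
print(cu_seqlens, seq_lens)   # [0, 3, 4, 8] [3, 1, 4]
```

This is why the collate function must emit positions that restart for every sequence. A single running range over the concatenated batch would look like one long sequence.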

The resulting step time is 323 ms, 90% faster than running flash-attention on the padded input.

The results of our HuggingFace experiments are summarized below.

With little effort, we were able to boost our runtime performance by 2.5x when compared to the uncompiled baseline experiment, and by 36% when compared to the compiled version.
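The ratios quoted in this section follow directly from the reported step times. The short sketch below recomputes them. The dictionary keys and the `speedup` helper are illustrative names, while the millisecond values are the ones reported above.

```python
# step times in ms, as reported in this section
step_ms = {
    "sdpa_padded": 815,
    "sdpa_padded_compiled": 440,
    "flash_padded": 620,
    "flash_unpadded": 323,
}


def speedup(baseline, optimized):
    """Ratio of the baseline step time to the optimized step time."""
    return step_ms[baseline] / step_ms[optimized]


print(f"{speedup('sdpa_padded', 'flash_padded'):.2f}x")             # 1.31x
print(f"{speedup('flash_padded', 'flash_unpadded'):.2f}x")          # 1.92x
print(f"{speedup('sdpa_padded', 'flash_unpadded'):.2f}x")           # 2.52x
print(f"{speedup('sdpa_padded_compiled', 'flash_unpadded'):.2f}x")  # 1.36x
```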

In this section, we demonstrated how the HuggingFace APIs allow us to leverage the optimized kernels in FlashAttention2, significantly boosting the training performance of existing models on sequences of varying length.

As AI models continue to grow in both popularity and complexity, optimizing their performance has become essential for reducing runtime and costs. This is especially true for compute-intensive components like attention layers. In this post, we have continued our exploration of attention layer optimization, and demonstrated new tools and techniques for enhancing Transformer model performance. For more insights on AI model optimization, be sure to check out the first post in this series as well as our many other posts on this topic.

Optimizing Transformer Models for Variable-Length Input Sequences was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
