Originally appeared here:

The best tablets in 2024: top 11 tablets you can buy now

Originally appeared here:

Everything coming to PBS in February 2024

Earlier this week, the team behind the Arc browser for Mac (and recently Windows) released a brand-new iPhone app called Arc Search. As you might expect, it’s infused with AI to power an experience where the app “browses for you,” pulling together a variety of sources of info across the internet to make a custom webpage to answer whatever questions you throw at it. That’s just one part of what The Browser Company is calling Act 2 of Arc, and the company gave details on three other major new features it’s bringing to the browser over the coming weeks and months.

The connective tissue of all these updates is that Arc is trying to blur the lines between a browser, search engine and website — the company wants to combine them all to make the internet a bit more useful to end users. In a promo video released today, various people from The Browser Company excitedly discuss a browser that can browse for you (an admittedly handy idea).

The Arc Search app showed off one implementation of that idea, and the next is a feature that arrives today called Instant Links. When you search for something, pressing shift and enter will tell Arc to search and automatically open the top result. This won’t have a 100 percent success rate, but there are definitely times when it comes in handy. One example Arc showed off was searching for “True Detective season 4 trailer” — pressing shift + enter automatically opened the trailer from YouTube in a new tab and started playing it.

You can easily get multiple results with this tool, too. I told it to “show me a folder of five different soup recipes” and Arc created a folder with five different tabs in it for me to review. I also asked for the forecasts in Rome, Paris and Athens and got three pages with the details for each city. It’s handy, but I’m looking forward to Arc infusing it with more smarts than simply pulling the “top” search result. (Side note: after testing this feature, my browser sidebar is awash with all kinds of nonsense. I’m glad Arc auto-closes things every day so I don’t have to sort it out.)

In a similar vein, the upcoming Live Folders feature will collect updates from sites you want to follow, like a sort of RSS feed. The idea is to anticipate which sites someone is going to browse to and bring updated results into that folder. One example involved getting tagged in things on GitHub: each time that happened, a tab would be added to the folder with the new item. The demo on this feature was brief, but it should be available in beta on February 15th for further testing.

I got the sense from the video that developers would need to enable their sites to be updated via Live Folders, so it doesn’t seem like you can just add anything you want and expect it to work. In that way, it reminds me of some other Arc features like the one that lets you hover over a Gmail or Google Calendar tab to get a preview of your most recent messages or next appointment. Hopefully it’ll have the smarts to do things like drop new posts from your favorite site into the folder or open a new video from a YouTube channel you subscribe to, but we’ll have to wait to find out. (I also reached out to Arc for more details on how this might work and will update this story if I hear back.)

Finally, the last new feature here is also the most ambitious, and the one that most embodies that “browser that browses for you” vibe. Arc Explore, which the company says should be ready for testing in the next couple of months, uses LLMs to try and collapse the browser, search engine and site into a singular experience. In practice, this feels similar to what Arc is already doing with its new browser, but more advanced. One example the company gave involved making a restaurant reservation — starting with a query of wanting to make a reservation at one of a couple different restaurants, the Arc Explore interface brought back a bunch of details on each location alongside direct links to the Resy pages to book a table for two at exactly the time specified.

Another demo showed off how using Arc Explore can be better than just searching and clicking on results. It centered around soup, as all good demos do. Having Arc Explore bring up details on a certain kind of soup immediately provided details like ingredient lists, direct recipe steps and of course related videos. Compared to the pain of browsing a lot of sites that get loaded down with autoplaying ads, videos, unrelated text and more distractions, the Arc Explore experience does feel pretty serene. Of course, that’s only when it brings back the results relevant to you. But because it uses an LLM, you can converse with Arc to get closer to what you’re looking for.

After using Arc Search on my iPhone, I can appreciate what The Browser Company is going for here — at the same time, though, breaking my old habits on how I browse the internet is no small thing. That means these tools are going to need to work pretty well when they launch if they’re going to supplant the years I’ve spent putting things into a Google box and finding the results I want. But that sums up the whole philosophy and the point behind Arc: to shake up these habits in an effort to make a better browsing experience. Not all these experiments will stick, and others will probably mutate a lot from these initial ideas, but I’m definitely interested in seeing how things evolve from here.

This article originally appeared on Engadget at https://www.engadget.com/the-arc-browser-is-getting-new-ai-powered-features-that-try-to-browse-the-web-for-you-211739679.html?src=rss

Originally appeared here:

The Arc Browser is getting new AI-powered features that try to browse the web for you

YouTube has hit a new milestone with its Music and Premium offerings. The paid services have more than 100 million users between them as of January, including those who were on a free trial. That’s an increase of 20 million members in just over a year, and the figure has doubled since September 2021. YouTube has successfully grown the figures despite a $2 per month increase for Premium that came into force last summer.

It’s unclear how many people are actually using YouTube Music (Premium includes access to that service). However you slice it, the music streaming service has significantly fewer paid users than Spotify, which had 220 million Premium members as of September 30. Spotify will reveal its latest membership numbers in an earnings report next week. Apple no longer breaks out its number of Apple Music subscribers. The last firm number the company gave for the service was 60 million subscribers back in 2019.

Regardless, the comparison between YouTube’s paid service and Apple Music and Spotify Premium is hardly like-for-like. YouTube Premium is its own thing with its own benefits. It can be tough to go back to the lousier ad-strewn free version of the service after having Premium. The option to download videos for offline viewing without having to resort to workarounds and the background playback feature are both very useful. YouTube Music is just an extra perk on top of that for many members.

This article originally appeared on Engadget at https://www.engadget.com/youtubes-paid-music-and-premium-services-now-have-more-than-100-million-subscribers-210008040.html?src=rss

Amazon launched a new generative AI shopping assistant, Rufus, on Thursday. The chatbot is trained on Amazon’s product catalog, customer reviews, community Q&As and “information from across the web.” It’s only available to a limited set of Amazon customers for now but will expand in the coming weeks.

The company views the assistant as customers’ one-stop shop for all their shopping needs. Rufus can answer questions like, “What to consider when buying running shoes?” and display comparisons for things such as, “What are the differences between trail and road running shoes?” It can also respond to follow-up questions like, “Are these durable?”

Amazon suggests asking Rufus for general advice about product categories, such as things to look for when shopping for headphones. It can provide contextual advice as well, lending insight into products based on specific activities (like hiking) or events (holidays or celebrations). Other examples include asking it to compare product categories (“What’s the difference between lip gloss and lip oil?” or “Compare drip to pour-over coffee makers”). In addition, it can recommend gifts for people with particular tastes or shopping recommendations for holidays.

Rufus can also answer more fine-tuned questions about a specific product page you’re viewing. Amazon provides the examples, “Is this pickleball paddle good for beginners?” or “Is this jacket machine-washable?”

Amazon said in 2023 every division in its company was working on generative AI. It’s since launched AI-powered review summaries, and it began encouraging sellers to make AI listings and image backgrounds for their products. Rival Walmart teased a similar feature for its shoppers at CES 2024.

“It’s still early days for generative AI, and the technology won’t always get it exactly right,” wrote Amazon executive Rajiv Mehta. “We will keep improving our AI models and fine-tune responses to continuously make Rufus more helpful over time. Customers are encouraged to leave feedback by rating their answers with a thumbs up or thumbs down, and they have the option to provide freeform feedback as well.”

Rufus is launching in beta today to only “a small subset of customers,” and it will appear (for those in the beta) after updating the Amazon mobile app. The assistant will continue rolling out to US customers “in the coming weeks.” Once you’re allowed into the beta, you can summon Rufus by typing or speaking your questions into the search bar. A Rufus chat box will appear at the bottom of the screen.

This article originally appeared on Engadget at https://www.engadget.com/amazon-launches-rufus-an-ai-powered-shopping-assistant-204811837.html?src=rss

Originally appeared here:

Amazon launches Rufus, an AI-powered shopping assistant

If you’ve considered splurging on a premium monitor, Samsung has some deals worth investigating. The company’s offerings, including the 55-inch Odyssey Ark (available for a record-low $2,000), 57-inch Odyssey Neo G9 ($500 off) and 49-inch Odyssey OLED G9 ($400 off) are among the models discounted in a wide-ranging monitor sale on Amazon and Samsung’s website.

The 55-inch Odyssey Ark is a 4K behemoth with a 1000R curvature to ensure all sides of the screen face you at a roughly equal distance. It supports 165Hz refresh rates, making for fairly smooth gaming and other tasks, and it has a 1ms response time. You can even rotate its screen into portrait orientation, although that’s more of a niche bonus than an essential feature for most people.

Samsung fixed one of our biggest gripes about the first-generation version, adding the DisplayPort compatibility and multi-input split view that were perplexingly missing from that inaugural model. The one on sale is the latest variant, launched in 2023.

Usually $3,000, the 55-inch Odyssey Ark is a third off on Amazon and Samsung, bringing it down to $2,000.

The 57-inch Odyssey Neo G9 has a more elongated (32:9) aspect ratio. This lets you squeeze more apps onto your desktop multitasking setup while supplying a wider field of view for gaming. Despite its different size and shape, it has the same tight 1000R curve as the Ark.

The monitor has 8K resolution, a 240Hz refresh rate and 1ms response time. Its mini LED technology uses 2,392 local dimming zones and “the highest 12-bit black levels.” Ports include DisplayPort 2.1, HDMI 2.1 and a USB hub.

Usually $2,500, you can shave $500 off the 57-inch Neo G9 monitor, taking it home for $2,000. That pricing is available on Amazon and Samsung.

The 49-inch Odyssey OLED G9 also has a 32:9 aspect ratio. Its OLED / Quantum Dot screen produces rich colors and deep blacks to make your games pop more, and its solid-black text can help your workspace lettering jump out more.

This model’s curve (1800R) is less pronounced than that of the 55-inch Ark and 57-inch Neo G9, but it still supplies a gentle curve inward. It includes built-in speakers, and it has a 240Hz refresh rate with a “near-instant” (0.03ms) response time. It includes connections for HDMI 2.1 and DisplayPort.

The 49-inch OLED G9 retails for $1,600, but you can get it for $1,200 on Amazon. (It’s $1,300 on Samsung’s site.)

We only highlighted some of the standout monitors, but you can browse through the full sale on Amazon and Samsung.

Follow @EngadgetDeals on Twitter and subscribe to the Engadget Deals newsletter for the latest tech deals and buying advice.

This article originally appeared on Engadget at https://www.engadget.com/samsung-odyssey-monitors-are-up-to-1000-off-right-now-200039299.html?src=rss

Originally appeared here:

Samsung Odyssey monitors are up to $1,000 off right now

Apple has made spatial video capture and playback a key selling point of its headset, but it won’t be the only device in town that can handle stereoscopic videos. Meta Quest virtual reality headsets are getting spatial video playback capabilities, perfectly timed to coincide with tomorrow’s Apple Vision Pro launch.

You can upload spatial videos via the Meta Quest mobile app directly from your iPhone, but you’ll need an iPhone 15 Pro or iPhone 15 Pro Max to make the videos. The content will be stored in the cloud, and not the headset, to preserve all-important hard drive space. Once uploaded, you’ll be able to relive precious memories over and over again, as the increased depth that spatial videos provide is pretty engrossing.

Meta has made several demo videos available for users so you can see what all the fuss is about. This feature is not exclusive to the recently-released Meta Quest 3. You’ll be able to view spatial videos via the Meta Quest 2 and Meta Quest Pro. As usual, the OG Meta Quest is left out in the cold. It’s worth noting that the Viture One and One Lite XR glasses can also play spatial videos.

The video viewer is part of a larger system update that brings several other upgrades to Quest users. The headset’s web browser will now be able to play web-based games, with support for external gamepads. Additionally, Facebook live streaming is now available for everyone, after a limited rollout. Finally, there are some new single-gesture quick actions. You can, for instance, mute the microphone or take a photo just by looking down at your wrist and performing a short pinch. Hey, wait a minute. That also sounds suspiciously like Apple Vision Pro’s control scheme. Shots fired.

This article originally appeared on Engadget at https://www.engadget.com/meta-quest-headsets-get-spatial-video-playback-just-in-time-for-the-apple-vision-pro-launch-193821840.html?src=rss

Originally appeared here:

Meta Quest headsets get spatial video playback, just in time for the Apple Vision Pro launch

Generative artificial intelligence (AI) has become astonishingly popular, especially after the release of diffusion models like DALL-E and large language models (LLMs) like ChatGPT. In general, AI models are classified as “generative” when the model produces something as an output. For DALL-E the output is a high-quality image, while for ChatGPT the output is highly structured, meaningful text. Generative models differ from classification models, which output a prediction for one side of a decision boundary (such as cancer or no cancer), and from regression models, which output numerical predictions (such as a blood glucose level). Medical imaging and healthcare have benefited from AI in general, with several compelling use cases, and generative models are constantly being developed. A major barrier to clinical use of generative AI models is a lack of validation of model outputs beyond image quality assessments. In our work, we evaluate our generative model both qualitatively and quantitatively as a step towards more clinically relevant AI models.

In medical imaging, image quality is crucial; it’s all about how well the image represents the internal structures of the body. The majority of use cases for medical imaging are predicated on having high-quality images. For instance, X-ray scans use ionizing radiation to produce images of many internal structures of the body, and quality is important for distinguishing bone from soft tissue or organs as well as for identifying anomalies like tumors. High-quality X-ray images make structures easier to identify, which can translate to more accurate diagnoses. Computer vision research has led to the development of metrics meant to objectively measure image quality. These metrics, which we use in our work, include peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM). Ultimately, a high-quality image can be defined as having sharp, well-defined borders, with good contrast between different anatomical structures.
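To make one of these metrics concrete, here is a minimal sketch of PSNR using its textbook definition. The function name, the nested-list image format, and the toy pixel values are assumptions for illustration; they are not taken from our evaluation code.

```python
import math

def psnr(reference, test, max_value):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are nested lists (rows of pixel intensities). max_value is the
    largest intensity the format can hold, e.g. 255 for 8-bit data.
    """
    squared_errors = [
        (r - t) ** 2
        for ref_row, test_row in zip(reference, test)
        for r, t in zip(ref_row, test_row)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images have no noise to measure
    return 10 * math.log10(max_value ** 2 / mse)

# Toy 2x3 "images" with made-up values: a reference and a slightly noisy copy.
reference = [[0, 50, 100], [150, 200, 250]]
noisy = [[2, 48, 101], [149, 203, 250]]
print(round(psnr(reference, noisy, max_value=255), 2))
```

Higher values mean the test image is closer to the reference. Note that PSNR depends on the chosen `max_value`, so scores are only comparable between images of the same bit depth.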

Images are highly structured data: a matrix of pixels of varying intensities. Unlike natural images such as those in the ImageNet dataset (cars, planes, boats, etc.), which have three color channels (red, green, and blue), medical images are mostly grayscale, or single channel. Simply put, sharp edges are achieved when pixels near the borders of structures are uniform, and good contrast is achieved when neighboring pixels depicting different structures differ noticeably in value. It is important to note that the absolute pixel values are not the most important thing for high-quality images; quality depends more on the pixel intensities relative to one another. This, however, is not the case for achieving images with high quantitative accuracy.
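A toy example may help separate the two notions. In the sketch below (all values are hypothetical), a constant offset is added to every pixel of one image row: the step across the border between two structures is unchanged, so contrast is preserved, but the total signal in the row is not.

```python
def step_at(row, i):
    """Intensity difference between pixel i and its right-hand neighbour."""
    return row[i + 1] - row[i]

# One image row crossing a border between two structures (hypothetical values).
row = [100, 100, 100, 180, 180, 180]
# The same row with every pixel shifted by a constant offset.
shifted = [p + 40 for p in row]

print(step_at(row, 2), step_at(shifted, 2))  # contrast across the border
print(sum(row), sum(shifted))                # total signal in the row
```

A quality metric based on relative intensities would treat the two rows alike, while any measurement derived from the absolute values would differ.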

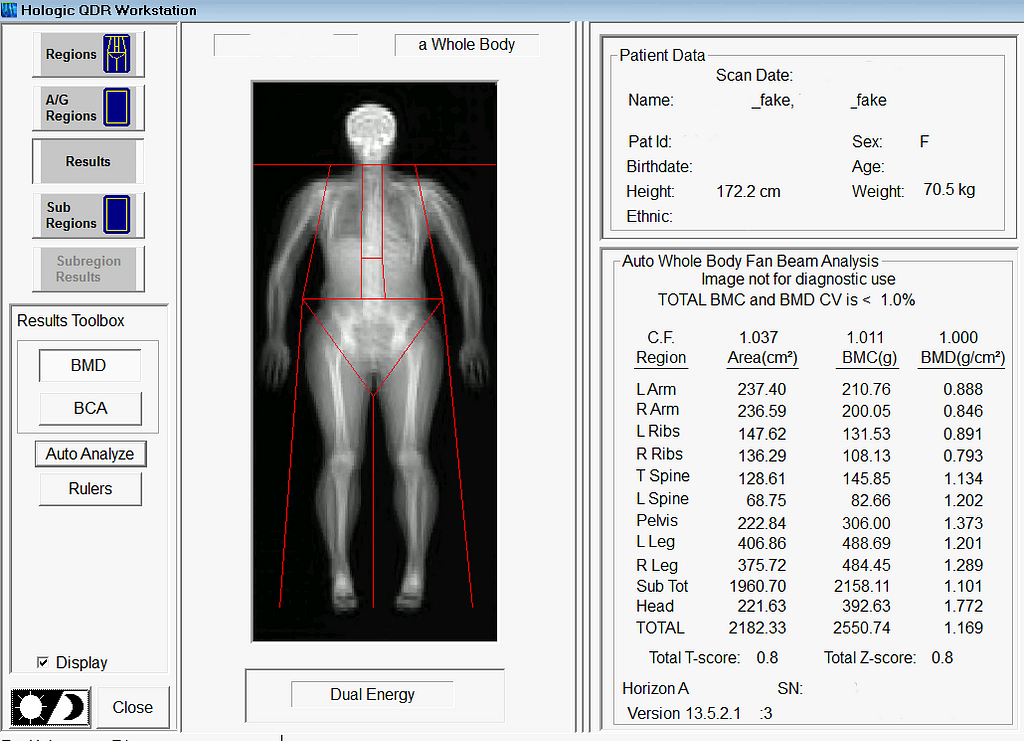

A subset of medical imaging modalities is quantitative, meaning the pixel values represent a known quantity of some material or tissue. Dual-energy X-ray absorptiometry (DXA) is a well-known and common quantitative imaging modality used for measuring body composition. DXA images are acquired using high- and low-energy X-rays. Then a set of equations, sometimes referred to as DXA math, is used to compute the contrast and ratios between the high- and low-energy X-ray images to yield quantities of fat, muscle, and bone. Hence the word quantitative. The absolute value of each pixel is important because it ultimately corresponds to a known quantity of some material. Any small change in the pixel values, even if the image still looks of the same or similar quality, will result in noticeably different tissue quantities.
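The idea behind DXA math can be illustrated with a simplified two-material model, in which the log attenuation at each energy is a weighted sum of soft-tissue and bone areal densities and the two measurements are solved as a 2x2 linear system. This is a sketch under stated assumptions, not the equations used by any scanner or by our model: the function name and the attenuation coefficients below are made up for illustration.

```python
def decompose(att_low, att_high, mu_soft, mu_bone):
    """Solve a 2x2 system for soft-tissue and bone areal density (g/cm^2).

    att_low / att_high: log attenuation, ln(I0 / I), at each energy.
    mu_soft / mu_bone: (low, high) mass attenuation coefficients in cm^2/g.
    """
    det = mu_soft[0] * mu_bone[1] - mu_soft[1] * mu_bone[0]
    if det == 0:
        raise ValueError("materials are indistinguishable at these energies")
    soft = (att_low * mu_bone[1] - att_high * mu_bone[0]) / det
    bone = (att_high * mu_soft[0] - att_low * mu_soft[1]) / det
    return soft, bone

# Hypothetical coefficients, chosen only to make the arithmetic easy to follow.
MU_SOFT = (0.25, 0.18)
MU_BONE = (0.60, 0.30)

# Forward-simulate one pixel with 20 g/cm^2 of soft tissue and 1 g/cm^2 of bone.
att_low = MU_SOFT[0] * 20 + MU_BONE[0] * 1
att_high = MU_SOFT[1] * 20 + MU_BONE[1] * 1

exact = decompose(att_low, att_high, MU_SOFT, MU_BONE)
# Perturb the low-energy value by 1% and decompose again.
perturbed = decompose(att_low * 1.01, att_high, MU_SOFT, MU_BONE)
print(exact, perturbed)
```

With these made-up numbers, a 1% change in a single pixel value shifts the recovered bone quantity by far more than 1%, which is the sensitivity the paragraph above describes.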

As previously mentioned, generative AI models for medical imaging are at the forefront of development. Known examples include models for artifact removal from CT images and models that produce higher-quality CT images from low-dose modalities, where image quality is known to be lower. However, prior to our study, generative models creating quantitatively accurate medical images were largely unexplored. Quantitative accuracy is arguably more difficult for generative models to achieve than high image quality. Anatomical structures not only have to be in the right place, but the pixel values representing them need to be near perfect as well. When considering the difficulty of achieving quantitative accuracy, one must also consider the bit depth of raw medical images. The raw formats of some medical imaging modalities, DXA included, encode information in 12 or 14 bits, which gives 4,096 or 16,384 possible values per pixel, 16 to 64 times as many as the 256 of standard 8-bit images. Higher bit depths mean a bigger search space, which could make it more difficult to get the exact pixel value. We are able to achieve quantitative accuracy through self-supervised learning methods with a custom physics- or DXA-informed loss function described in this work here. Stay tuned for a deep dive into that work to come in the near future.

We developed a model that can predict your insides from your outsides. In other words, our model innovatively predicts internal body composition from external body scans, specifically transforming three-dimensional (3D) body surface scans into fully analyzable DXA scans. Utilizing increasingly common 3D body scanning technologies, which employ optical cameras or lasers, our model bypasses the need for ionizing radiation. 3D scanning enables accurate capture of one’s exterior body shape and the technology has several health relevant use cases. Our model outputs a fully analyzable DXA scan which means that existing commercial software can be used to derive body composition or measures of adipose tissue (fat), lean tissue (muscle), and bone. To ensure accurate body composition measurements, our model was designed to achieve both qualitative and quantitative precision, a capability we have successfully demonstrated.

The genesis of this project was the hypothesis that your body shape, or exterior phenotype, is determined by the underlying distribution of fat, muscle, and bone. We had previously conducted several studies demonstrating the associations of body shape with measured quantities of muscle, fat, and bone as well as with health outcomes such as metabolic syndrome. Using principal components analysis (PCA), through shape and appearance modeling, and linear regression, a student in our lab showed the ability to predict body composition images from 3D body scans. While this was impressive and further strengthened the notion of a relationship between shape and composition, these predicted images excluded the forelimbs (elbow to hand and knee to feet), and the images were not in a format (raw DXA format) that enabled analysis with clinical software. Our work extends that study and overcomes these limitations. The Pseudo-DXA model, as we call it, is able to generate the full whole-body DXA image from 3D body scan inputs, and that image can be analyzed using clinical and commercial software.

The cornerstone of the Pseudo-DXA model’s development was a unique dataset comprising paired 3D body and DXA scans, obtained simultaneously. Such paired datasets are uncommon, due to the logistical and financial challenges in scanning large patient groups with both modalities. We worked with a modest but significant sample size: several hundred paired scans. To overcome the data scarcity issue, we utilized an additional, extensive DXA dataset with over 20,000 scans for model pretraining.

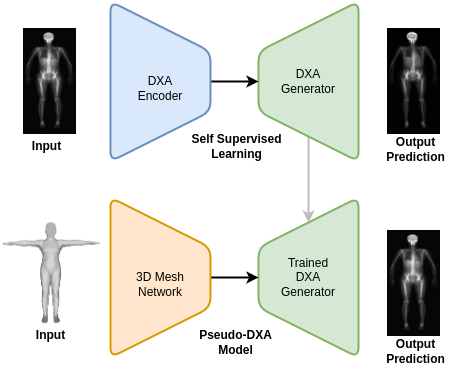

The Pseudo-DXA model was built in two steps. The first, a self-supervised learning (SSL) or pretraining step, involved training a variational autoencoder (VAE) to encode and decode, or regenerate, raw DXA scans. A large DXA dataset, independent of the dataset used for the final model and its evaluation, was used for SSL pretraining, and it was divided to include a separate holdout test set. Once the VAE was able to accurately regenerate the original raw DXA image, as validated with the holdout test set, we moved to the second phase of training.

In brief, VAE models consist of two main subnetwork components which include the encoder and the decoder, also known as a generator. The encoder is tasked with taking the high dimensional raw DXA image data and learning a meaningful compressed representation which is encoded into what is known as a latent space. The decoder or generator takes the latent space representation and learns to regenerate the original image from the compressed representation. We used the trained generator from our SSL DXA training as the base of our final Pseudo-DXA model.
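For readers new to VAEs, the two pieces that sit between the encoder and the decoder can be sketched in a few lines. This is the textbook formulation, not our training code: it assumes the encoder outputs a mean and a log-variance for each latent dimension, and it leaves out the reconstruction loss and the networks themselves.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return -0.5 * sum(1 + lv - m ** 2 - math.exp(lv)
                      for m, lv in zip(mu, log_var))

rng = random.Random(0)            # fixed seed so the sketch is repeatable
mu, log_var = [0.5, -1.0], [0.0, 0.0]
z = sample_latent(mu, log_var, rng)   # a latent code the decoder would receive
print(len(z), kl_to_standard_normal(mu, log_var))
```

The KL term keeps the latent space close to a standard normal, while the decoder is trained to regenerate the input from `z`.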

The 3D body scan data consisted of a series of vertices, or points, and faces, which indicate which points are connected to one another. We used a model architecture resembling the PointNet++ model, which has demonstrated the ability to handle point cloud data well. The PointNet++ model was then attached to the generator we had previously trained. We then fed the model the 3D data, and it was tasked with learning to generate the corresponding DXA scan.
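PointNet++ builds its hierarchy by repeatedly sampling a well-spread subset of points, using farthest point sampling. Below is a minimal pure-Python sketch of that sampling step, assuming the mesh vertices are treated as a point cloud. The function name and the toy vertices are illustrative and do not come from our pipeline.

```python
import math

def farthest_point_sampling(points, k):
    """Return indices of k well-spread points.

    Starts from the first point (an arbitrary choice) and repeatedly adds
    the point that is farthest from everything chosen so far.
    """
    if k > len(points):
        raise ValueError("cannot sample more points than are available")
    chosen = [0]
    min_dist = [math.dist(p, points[0]) for p in points]
    while len(chosen) < k:
        idx = max(range(len(points)), key=min_dist.__getitem__)
        chosen.append(idx)
        # Each point keeps its distance to the nearest chosen point.
        min_dist = [min(d, math.dist(p, points[idx]))
                    for d, p in zip(min_dist, points)]
    return chosen

# Toy vertices (x, y, z); a real body scan has many thousands of them.
vertices = [(0, 0, 0), (1, 0, 0), (0.1, 0, 0), (0, 5, 0), (0, 0, 2)]
print(farthest_point_sampling(vertices, 3))
```

This version is quadratic in the number of points, which is fine for a sketch; practical implementations run the same procedure on a GPU.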

In alignment with machine learning best practices, we divided our data so that we had an unseen holdout test set, on which we report all of our results.
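A holdout split of this kind can be sketched as follows. The helper name, the 20% fraction, and the choice to split by participant ID (so that scans from one person never land on both sides) are assumptions for illustration, not a description of our exact procedure.

```python
import random

def split_holdout(participant_ids, test_fraction, seed):
    """Shuffle IDs reproducibly and reserve a fraction as the holdout test set."""
    shuffled = sorted(participant_ids)         # fixed starting order
    random.Random(seed).shuffle(shuffled)      # seeded, so the split is repeatable
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

ids = [f"P{i:03d}" for i in range(10)]         # hypothetical participant IDs
train_ids, test_ids = split_holdout(ids, test_fraction=0.2, seed=42)
print(len(train_ids), len(test_ids))
```

The test IDs are set aside before any training and touched only once, for the final evaluation.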

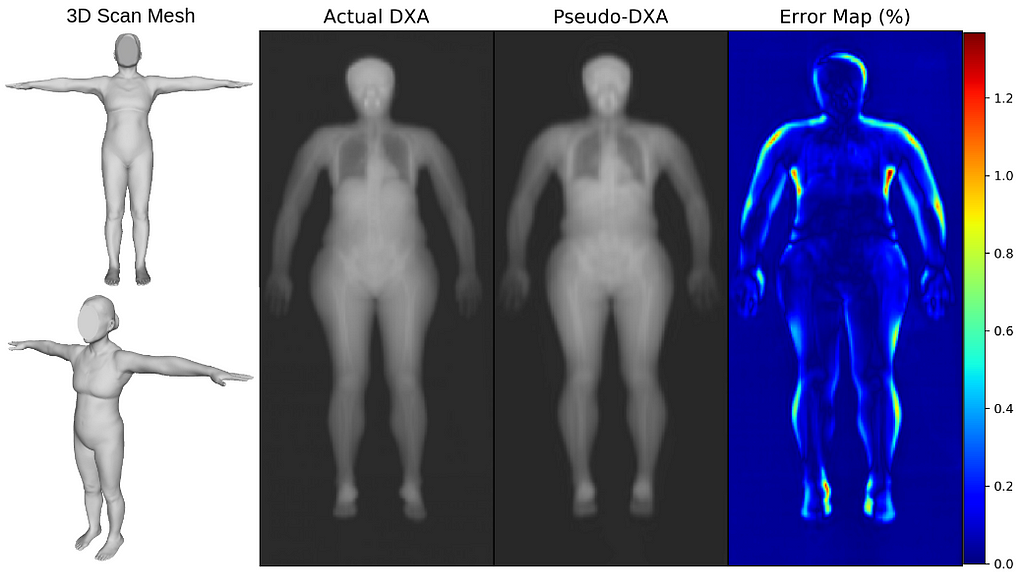

We first evaluated our Pseudo-DXA images using image quality metrics, which include normalized mean absolute error (NMAE), peak signal-to-noise ratio (PSNR), and structural similarity index (SSIM). Our model-generated images had mean NMAE, PSNR, and SSIM of 0.15, 38.15, and 0.97, respectively, which is considered good with respect to quality. Shown below is an example of a 3D scan, the actual DXA low-energy scan, the Pseudo-DXA low-energy scan, and the percent error map of the two DXA scans. As mentioned, DXA images have two channels, for high and low energies, yet these examples show only the low-energy image. Long story short, the Pseudo-DXA model can generate high-quality images on par with other medical imaging models with respect to the image quality metrics used.

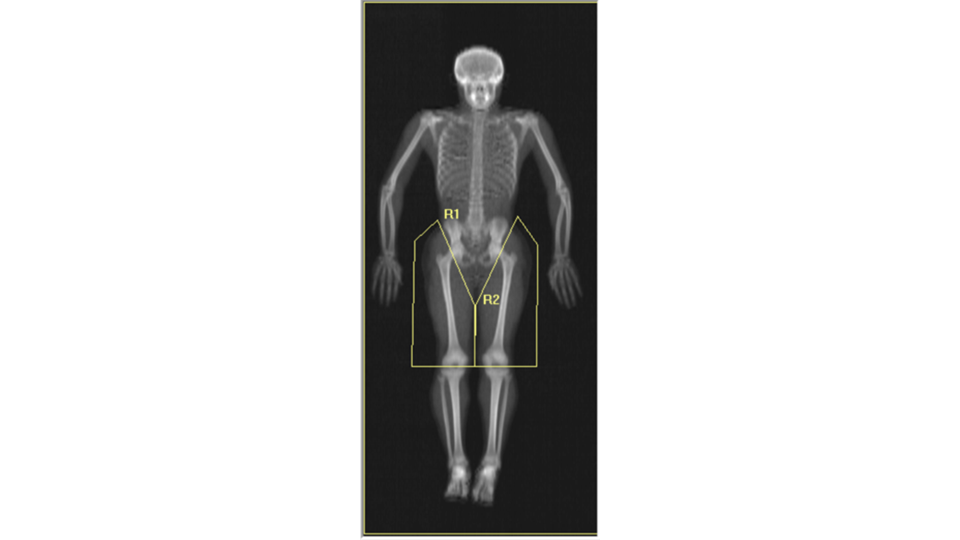

When we analyzed our Pseudo-DXA images for composition and compared the quantities to the actual quantities, we achieved coefficients of determination (R²) of 0.72, 0.90, 0.74, and 0.99 for fat, lean, bone, and total mass, respectively. An R² of 1 is desired, and our values were reasonably close considering the difficulty of the task. A comment we encountered when presenting our preliminary findings at conferences was “wouldn’t it be easier to simply train a model to predict each measured composition value from the 3D scan, so the model would, for example, output a quantity of fat, bone, etc., rather than a whole image?” The short answer is yes; however, that model would not be as powerful and useful as the Pseudo-DXA model that we are presenting here. Predicting a whole image demonstrates the strong relationship between shape and composition. Additionally, having a whole image allows for secondary analysis without having to retrain a model. We demonstrate the power of this by performing ad-hoc body composition analysis on two user-defined leg subregions. If we had trained a model to output only scalar composition values and not an image, we would only be able to analyze these ad-hoc user-defined regions by training a whole new model for these measures.
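For reference, a coefficient of determination can be computed as one minus the ratio of residual to total sum of squares. The sketch below uses that definition with made-up fat-mass values. It is an assumption that this matches the variant used in our analysis, since R² is sometimes reported instead as the squared correlation of a fitted regression line.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

# Hypothetical fat mass (kg) from actual DXA scans and from generated scans.
actual_fat = [20.0, 25.0, 30.0, 35.0]
pseudo_fat = [21.0, 24.0, 31.0, 34.0]
print(r_squared(actual_fat, pseudo_fat))
```

A value of 1 means the quantities measured on generated images match the actual ones exactly; the value drops as the residuals grow relative to the spread of the actual values.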

Long story short, the Pseudo-DXA model produced high quality images that were quantitatively accurate, from which software could measure real amounts of fat, muscle, and bone.

The Pseudo-DXA model marks a pivotal step towards a new standard of striving for quantitative accuracy when necessary. The bar for good generative medical imaging models has been high image quality, yet as we discussed, good quality may simply not be enough for the task at hand. If the clinical task or outcome requires something to be measured from the image beyond morphology or anthropometry, then quantitative accuracy should be assessed.

Our Pseudo-DXA model is also a step in the direction of making health assessment more accessible. 3D scanning is now in phones and does not expose individuals to harmful ionizing radiation. In theory, one could get a 3D scan of oneself, run it through our model, and receive a DXA image from which to obtain quantities of body composition. We acknowledge that our model generates statistically likely images and is not able to predict pathologies such as tumors, fractures, or implants, which are statistically unlikely in the healthy population from which this model was built. Our model also demonstrated great test-retest precision, which means it has the ability to monitor change over time. So individuals can scan themselves every day without the risk of radiation, and the model is robust enough to show changes in composition, if any.
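Test-retest precision is often summarized as a root-mean-square coefficient of variation across people who were each scanned more than once. The sketch below shows one common way to compute it. The formula choice and the repeat measurements are assumptions for illustration, and the numbers are not results from our study.

```python
import math
from statistics import mean, stdev

def rms_cv_percent(repeat_measurements):
    """Root-mean-square coefficient of variation (%) across subjects.

    repeat_measurements: one list of repeated values per subject.
    """
    cvs_squared = [(stdev(r) / mean(r)) ** 2 for r in repeat_measurements]
    return 100 * math.sqrt(mean(cvs_squared))

# Hypothetical fat mass (kg) from two scans of each of two people.
repeats = [[20.0, 20.4], [30.0, 29.4]]
print(round(rms_cv_percent(repeats), 2))
```

A smaller value means repeat scans of the same person agree more closely, so smaller real changes in composition can be detected over time.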

We invite you to engage with this technology, which we offer as an example of a quantitatively accurate generative medical imaging model. Share your thoughts, ask questions, or discuss potential applications in the comments. Your insights are valuable to us as we continue to innovate in the field of medical imaging and AI. Join the conversation and be part of this exciting journey!

AI Predicts Your Insides From Your Outsides With Pseudo-DXA was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Originally appeared here:

AI Predicts Your Insides From Your Outsides With Pseudo-DXA

Learn how your Text-to-SQL LLM App may be vulnerable to Prompt Injections, and mitigation measures you could adopt to protect your data

Originally appeared here:

Text-to-SQL LLM Applications: Prompt Injections

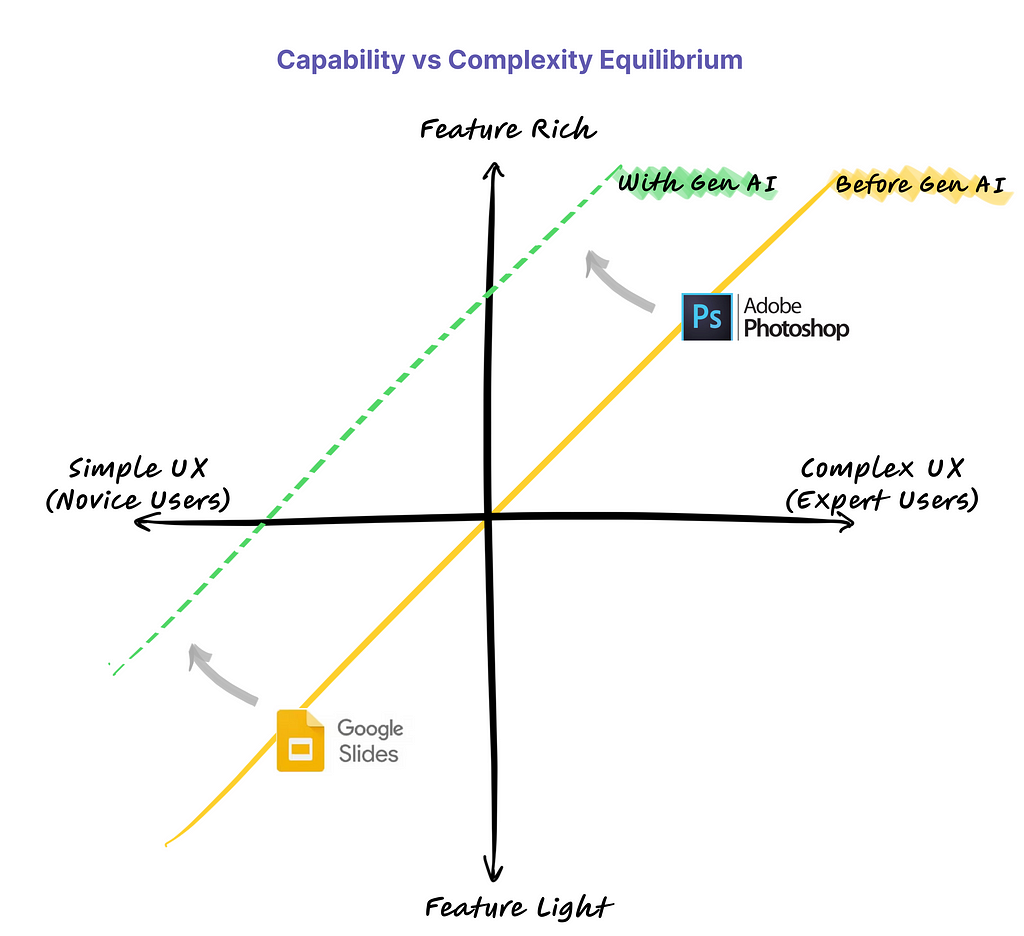

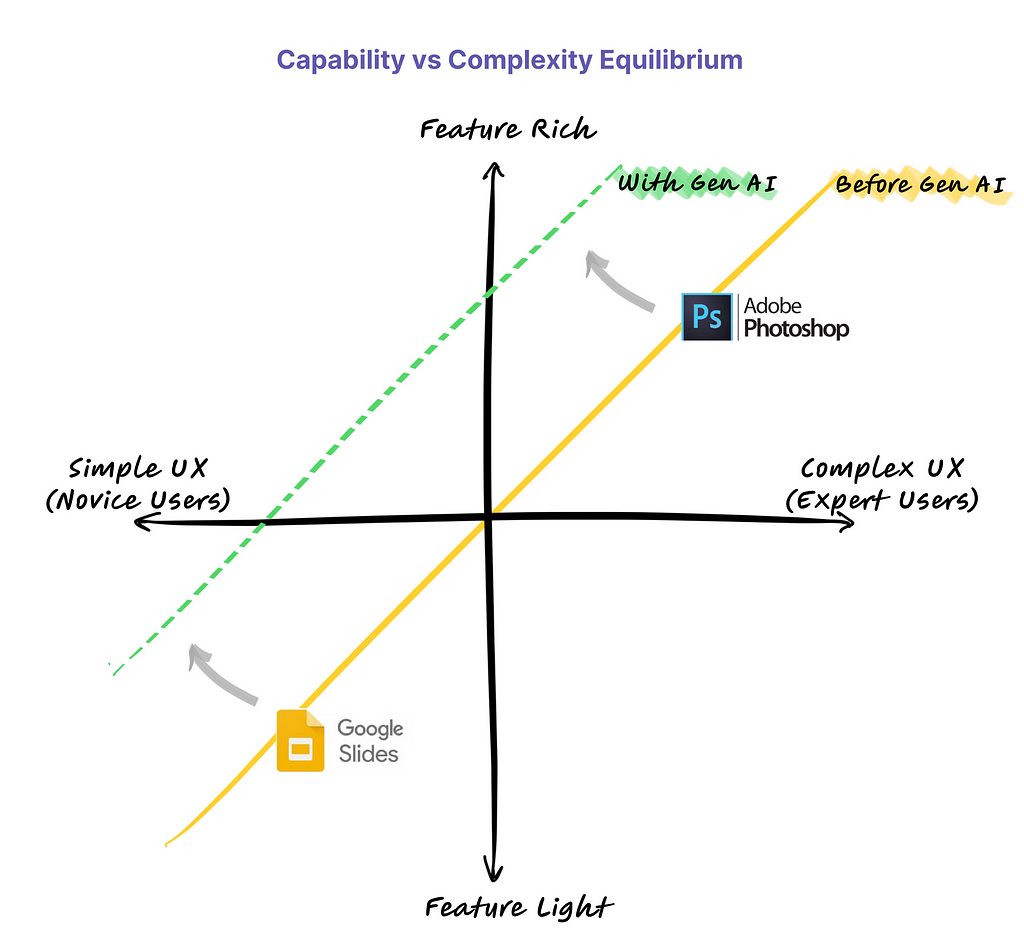

For decades, product builders accepted a seemingly unbreakable rule: as the capabilities of a product increase, so does its complexity. For users, this has often meant choosing between simplicity and power. Anyone who has grappled with advanced software knows the frustration of navigating through countless menus and options to find that one feature they need! It’s the classic trade-off that has, until now, dictated the user experience.

But the rise of generative AI promises to disrupt this trade-off.

Imagine the Adobe Photoshop of yesteryear: while it boasted a staggering array of rich design features, it became so complex that only experts could use it. Today, with generative AI, Photoshop can enable users to make requests in plain speech like “remove background” or “make this portrait pop with sharper contrast.” This is a glimpse into how AI is making powerful tools more accessible for everyone.

By interpreting natural language commands, advanced feature-rich products can now make their user experience more accessible and intuitive without sacrificing their sophisticated capabilities. Conversely, existing feature-light products aimed at novice users, which typically favor a simple user experience, can now offer a newfound depth of generative AI capabilities without complicating the user interface.

This is the essence of the paradigm shift — where complexity is no longer the cost of capability.

As generative AI redefines the product design landscape, it’s clear that established companies with strong user bases and domain expertise have a head-start. However, success is far from guaranteed.

You can integrate a generative AI API today, but where is the moat?

I have talked with half a dozen product leaders and builders just this month. Every one of them believes that the paradigm shift of generative AI has kick-started a race. In the end, there will be winners and losers. This article brings out some of the key strategies that product leaders are using to deliver differentiated generative AI offerings to their customers.

In generative AI, a “one size fits all” approach doesn’t make the cut for specialized use cases. Generic foundation models are trained on internet data, which lacks nuanced, industry-specific knowledge.

Take large vision models (LVMs) as an example. LVMs are typically trained on internet images, which include pictures of pets, people, landmarks and everyday objects. However, many practical vision applications (manufacturing, aerial imagery, life sciences, etc.) use images that look nothing like most internet images.

Adapting foundation models with proprietary data can vastly improve performance.

“A large vision model trained with domain-specific data performed 36–52% better than generic models for industry specific use cases.” — Andrew Ng, Founder, DeepLearning.AI

The narrative is similar for text-based large language models (LLMs). For instance, Bloomberg trained an LLM with proprietary financial data to build BloombergGPT, which outperforms other generic models of similar size on most finance NLP tasks. By augmenting foundation models with proprietary, in-domain data, companies can develop tailored generative AI that understands the nuances of their industry and delivers a differentiated experience that meets users’ specialized needs.

For all their impressive abilities, generative AI models are far from reliable enough for most real-world applications. This gap between “wow!” demos and dependable deployments is what technologists refer to as the “last mile” problem. Generative AI produces probabilistic output and has a tendency to hallucinate. This is a cause for concern in business, finance, medicine, and other high-stakes use cases. As generative models become more capable, implementing practices to ensure fairness, transparency, privacy, and security grows increasingly important.

The framework below lists various initiatives that leading companies are prioritizing to manage the “last mile” risks pertinent to their industry.

By solving the difficult responsible AI challenges unique to their industry, companies can successfully integrate these powerful technologies into critical real-world applications. Companies that lead in AI ethics will earn user trust and gain a competitive advantage.

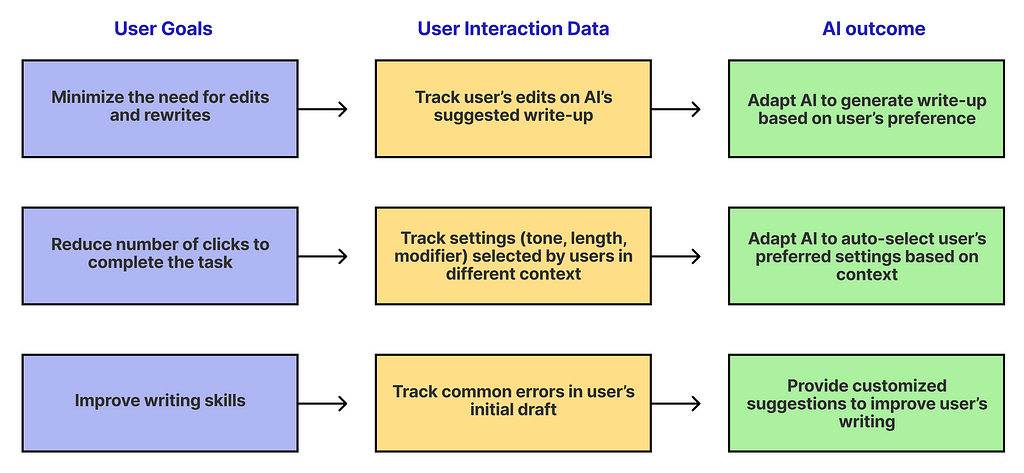

The cornerstone of crafting an exceptional, human-centered user experience is to design products that don’t just respond to users but grow and adapt with them. Leading generative AI products will implement tight feedback loops between users and AI to enable continuous learning and deliver a personalized experience.

“To build an ever-improving AI service, begin with the end-user goals in mind. Build a data-flywheel that continuously captures actionable data points which helps you assess and improve AI to better meet those goals.” — AWS, Director

Consider Grammarly, a tool designed to refine and improve users’ writing. It has recently launched Generative AI features to provide users with personalized writing suggestions.

Here’s a conceptual breakdown of how Grammarly can implement a feedback loop to enhance its product in line with different user goals:

A successful implementation requires:

The result is an AI that becomes increasingly customized to individual needs — without compromising privacy.

Prioritizing these human-centered feedback loops creates living products that continuously improve through real user engagement. This cycle of learning will become a core competitive advantage.
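As a rough illustration of such a feedback loop, the sketch below records whether a user accepts or dismisses each kind of suggestion and ranks future suggestion types accordingly. It is a minimal sketch under stated assumptions, not a description of how Grammarly or any other product works: the `FeedbackLoop` class, its methods, and the category names (`tone`, `clarity`, `conciseness`) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class FeedbackLoop:
    """Hypothetical per-user tracker of accepted vs. shown suggestions."""

    # counts[category] = [accepted, shown]; only counters are kept, no user text.
    counts: dict = field(default_factory=lambda: defaultdict(lambda: [0, 0]))

    def record(self, category: str, accepted: bool) -> None:
        """Log one suggestion that was shown and whether the user accepted it."""
        stats = self.counts[category]
        stats[1] += 1
        if accepted:
            stats[0] += 1

    def acceptance_rate(self, category: str) -> float:
        """Smoothed acceptance rate; a category with no data scores 0.5."""
        accepted, shown = self.counts[category]
        return (accepted + 1) / (shown + 2)

    def rank(self, categories: list[str]) -> list[str]:
        """Order suggestion categories from most to least accepted."""
        return sorted(categories, key=self.acceptance_rate, reverse=True)


# Illustrative usage with made-up category names.
loop = FeedbackLoop()
loop.record("tone", accepted=True)
loop.record("tone", accepted=True)
loop.record("conciseness", accepted=False)
ranking = loop.rank(["conciseness", "tone", "clarity"])
print(ranking)
```

Storing only aggregate counters rather than the text a user wrote is one possible way to personalize without retaining content; a real system would need a far more careful privacy design than this sketch suggests.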

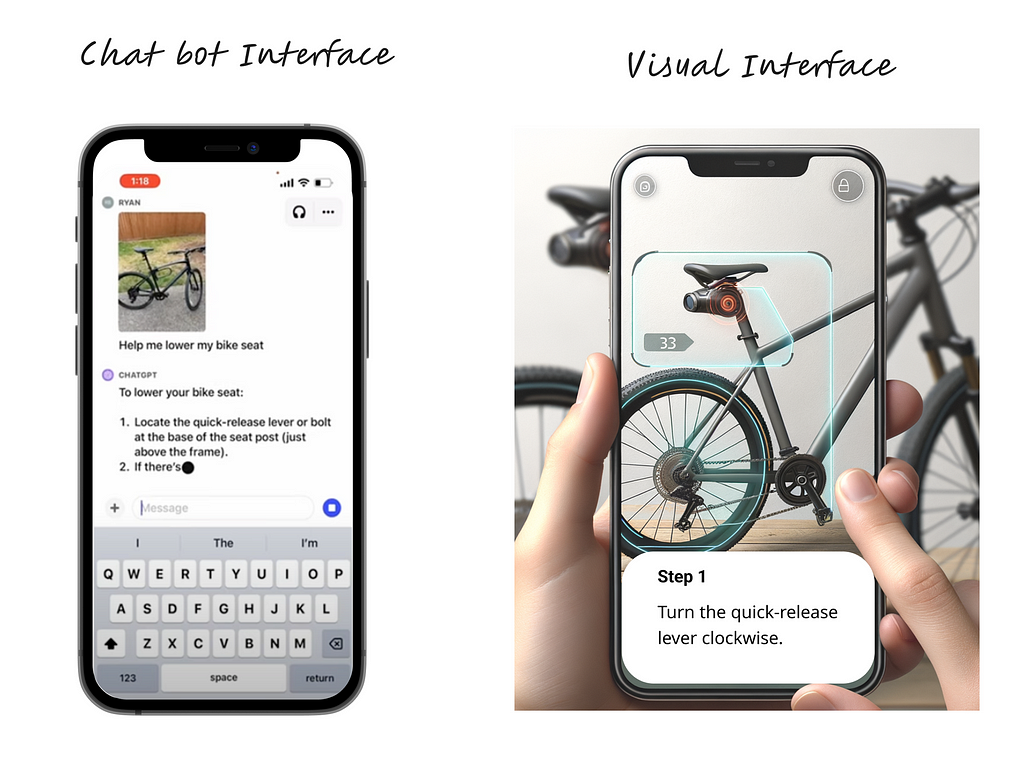

Realizing the full potential of generative AI requires rethinking user experience from the ground up. Bolting an AI chatbot onto the application as an afterthought is unlikely to provide a cohesive experience. A cohesive experience requires cross-functional collaboration and a rethinking of interactions across the entire product stack, right from the beginning.

“Think from first principles. If you had these generative AI capabilities from the start, how would you design the ideal experience?” — Ideo, Design Lead

Consider a bike repair app. A chatbot that allows users to upload pictures of their bike and receive text instructions can be a good MVP. But the ideal UX will likely be a visual interface where:

Delivering this experience requires collaboration across teams:

By bringing these perspectives together from the outset, products can deliver a fluid, human-centered user experience. Companies leveraging “AI-first” design thinking and full-stack product optimization will be best placed to provide differentiated value to their customers.

As generative AI becomes ubiquitous, product leaders have an enormous opportunity, and a responsibility, to shape its impact. Companies that take a human-centered, ethical approach will earn users’ trust. The key is embracing AI not just for its functionality, but for its potential to augment human creativity and positively transform user experiences. With thoughtful implementation, generative AI can expand access to sophisticated tools, unlock new levels of personalization, and enable products to continuously learn from real-world use.

By keeping the human at the heart of generative product design, forward-thinking companies can form authentic connections with users and deliver truly differentiated value. This human-AI symbiosis is the hallmark of transformative product experiences yet to come.

Thanks for reading! If these insights resonate with you or spark new thoughts, let’s continue the conversation.

Share your perspectives in the comments below or connect with me on LinkedIn.

The Generative AI Advantage: Product Strategies to Differentiate was originally published in Towards Data Science on Medium.
